
Building, Monitoring and Maintaining a Grid
Jorge Luis Rodriguez, University of Florida, jorge@phys.ufl.edu
July 11-15, 2005

Introduction
• What we’ve already learned
– What grids are, why we want them and who is using them: GSW intro & L1…
– Grid Authentication and Authorization: L2
– Harnessing CPU cycles with Condor: L3
– Data Management and the Grid: L4
• In this lecture
– Fabric level infrastructure: Grid building blocks
– The Open Science Grid
Grid Summer Workshop 2005, July 11-15, Jorge Luis Rodriguez

Grid Building Blocks
• Computational Clusters
• Storage Devices
• Networks
• Grid Resources and Layout:
– User Interfaces
– Computing Elements
– Storage Elements
– Monitoring Infrastructure…

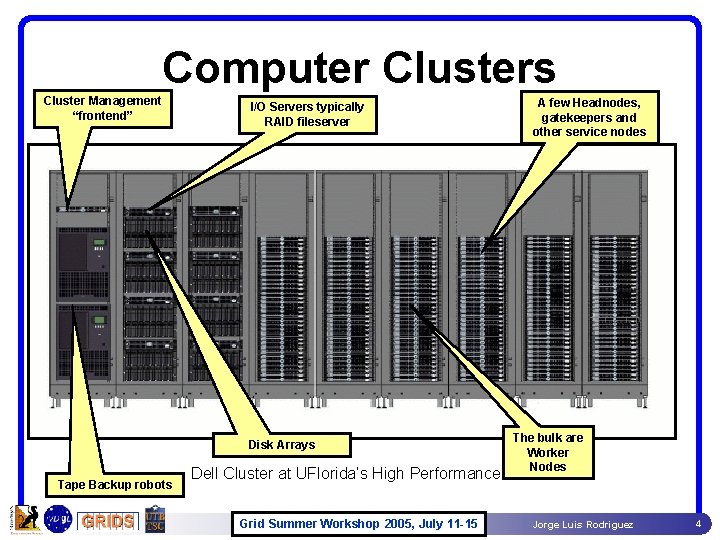

Computer Clusters
• Cluster Management “frontend”
• I/O Servers, typically RAID fileservers
• Disk Arrays
• Tape Backup robots
• A few Headnodes, gatekeepers and other service nodes
• The bulk are Worker Nodes
Figure: Dell Cluster at UFlorida’s High Performance Center

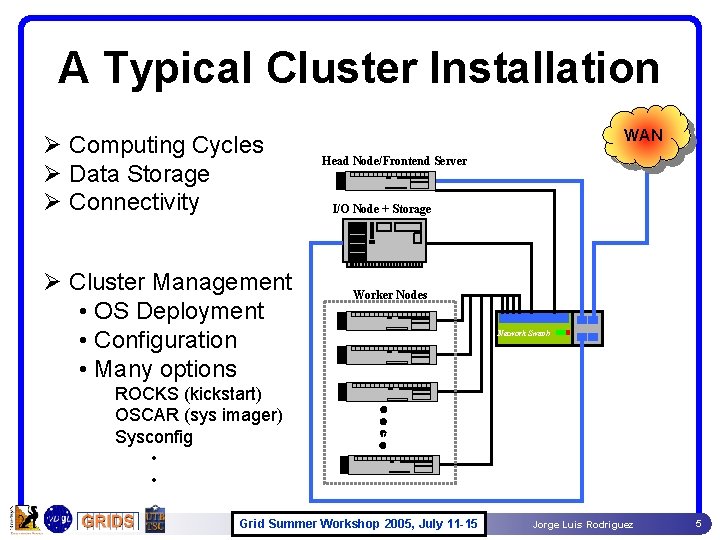

A Typical Cluster Installation
• Computing Cycles
• Data Storage
• Connectivity
• Cluster Management
– OS Deployment
– Configuration
– Many options: ROCKS (kickstart), OSCAR (SystemImager), Sysconfig
Diagram: WAN, Head Node/Frontend Server, I/O Node + Storage, Network Switch, Worker Nodes

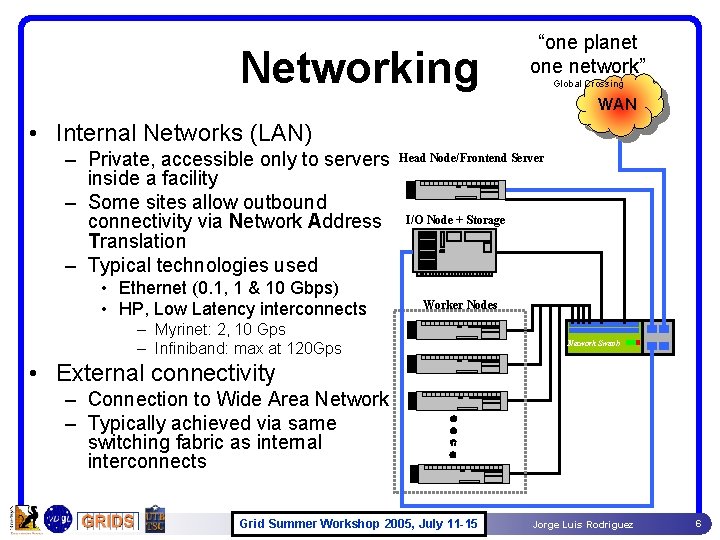

Networking
• Internal Networks (LAN)
– Private, accessible only to servers inside a facility
– Some sites allow outbound connectivity via Network Address Translation
– Typical technologies used
  • Ethernet (0.1, 1 & 10 Gbps)
  • High-performance, low-latency interconnects: Myrinet (2, 10 Gbps), Infiniband (max at 120 Gbps)
• External connectivity
– Connection to Wide Area Network
– Typically achieved via same switching fabric as internal interconnects
Figure: Global Crossing WAN, “one planet one network”

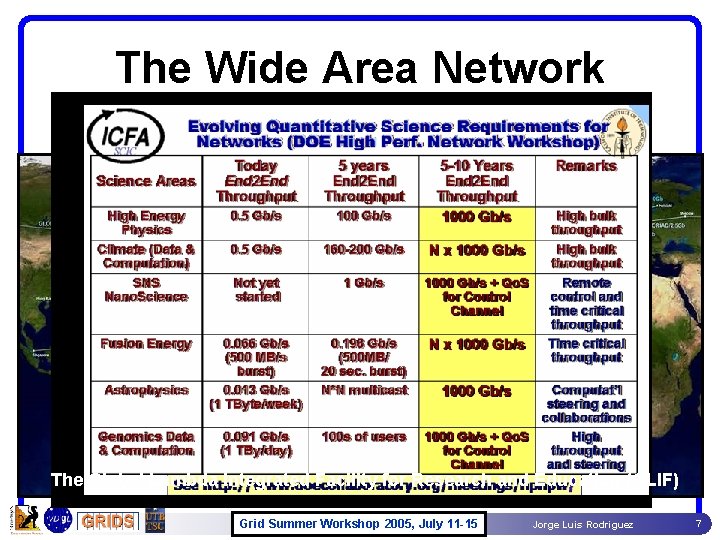

The Wide Area Network
Ever increasing network capacities are what make grid computing possible, if not inevitable.
Figure: The Global Lambda Integrated Facility for Research and Education (GLIF)

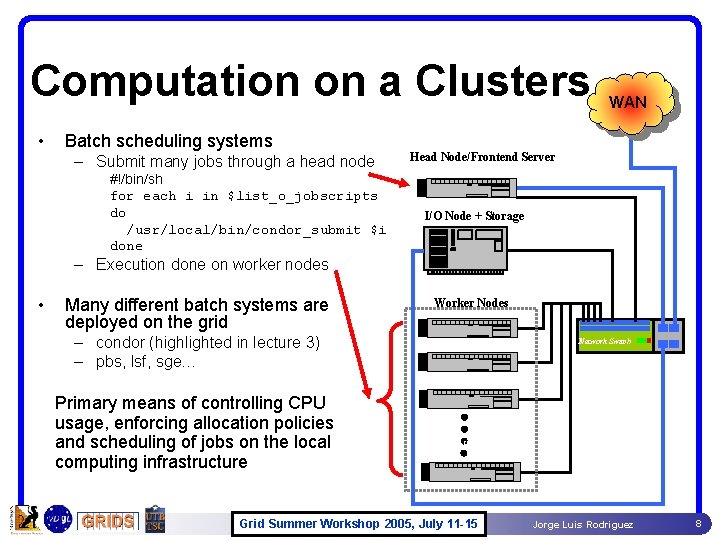

Computation on a Cluster
• Batch scheduling systems
– Submit many jobs through a head node
    #!/bin/sh
    for i in $list_o_jobscripts
    do
        /usr/local/bin/condor_submit $i
    done
– Execution done on worker nodes
• Many different batch systems are deployed on the grid
– Condor (highlighted in lecture 3)
– PBS, LSF, SGE…
• Batch systems are the primary means of controlling CPU usage, enforcing allocation policies and scheduling jobs on the local computing infrastructure
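
The submit loop on this slide can be sketched as a small shell function. This is a minimal sketch, not the workshop's actual script: the function name `submit_all` and the `SUBMIT_CMD` variable are hypothetical, introduced so the loop can be dry-run with `echo` on a machine without Condor. It assumes only that `condor_submit` takes a submit description file as its argument.

```shell
#!/bin/sh
# Hypothetical helper: submit every job description file given as an argument.
# SUBMIT_CMD defaults to condor_submit; set SUBMIT_CMD=echo for a dry run.
SUBMIT_CMD=${SUBMIT_CMD:-condor_submit}

submit_all() {
    failed=0
    for jobscript in "$@"; do
        # Skip names that do not point at a readable file.
        if [ ! -r "$jobscript" ]; then
            echo "skipping unreadable file: $jobscript" >&2
            failed=$((failed + 1))
            continue
        fi
        "$SUBMIT_CMD" "$jobscript" || failed=$((failed + 1))
    done
    # Succeed only if every file was submitted.
    [ "$failed" -eq 0 ]
}
```

On a head node this would be called as `submit_all job1.sub job2.sub`, where the file names are placeholders. Quoting each file name and counting failures are the only changes relative to the loop on the slide.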

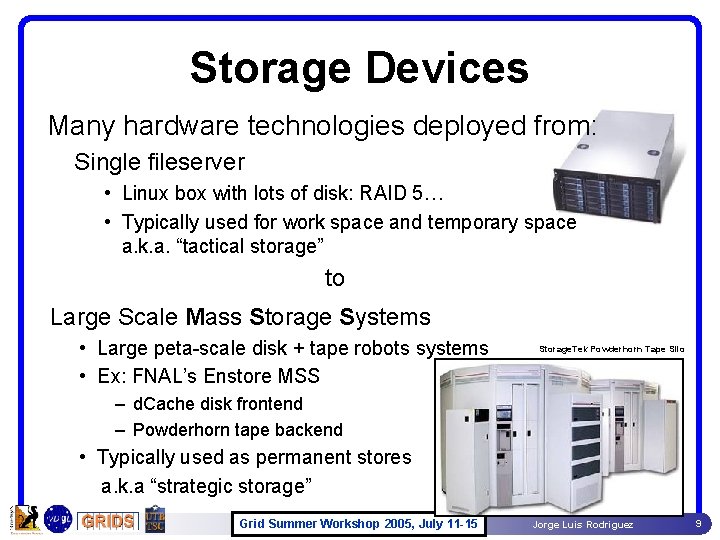

Storage Devices
Many hardware technologies are deployed, ranging from:
• Single fileserver
– Linux box with lots of disk: RAID 5…
– Typically used for work space and temporary space, a.k.a. “tactical storage”
to
• Large Scale Mass Storage Systems
– Large peta-scale disk + tape robot systems
– Ex: FNAL’s Enstore MSS: dCache disk frontend, Powderhorn tape backend
– Typically used as permanent stores, a.k.a. “strategic storage”
Figure: StorageTek Powderhorn Tape Silo

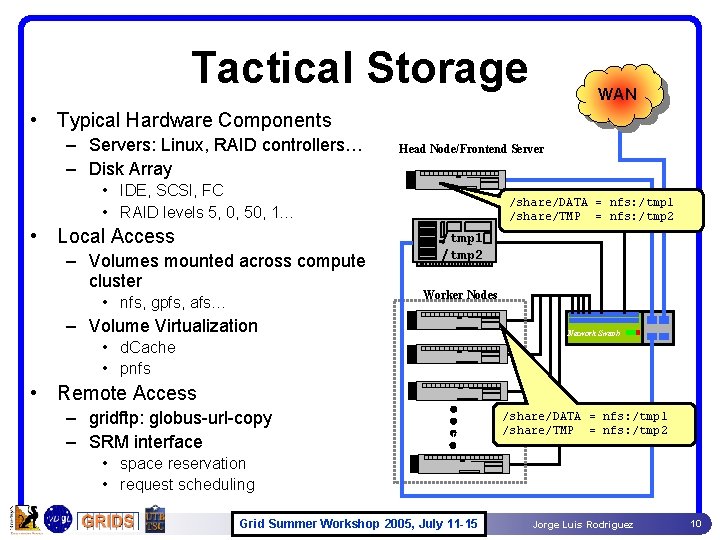

Tactical Storage
• Typical Hardware Components
– Servers: Linux, RAID controllers…
– Disk Array: IDE, SCSI, FC; RAID levels 5, 0, 50, 1…
• Local Access
– Volumes mounted across compute cluster: nfs, gpfs, afs…
– Volume Virtualization: dCache, pnfs
• Remote Access
– gridftp: globus-url-copy
– SRM interface: space reservation, request scheduling
Diagram labels: /share/DATA = nfs:/tmp1, /share/TMP = nfs:/tmp2

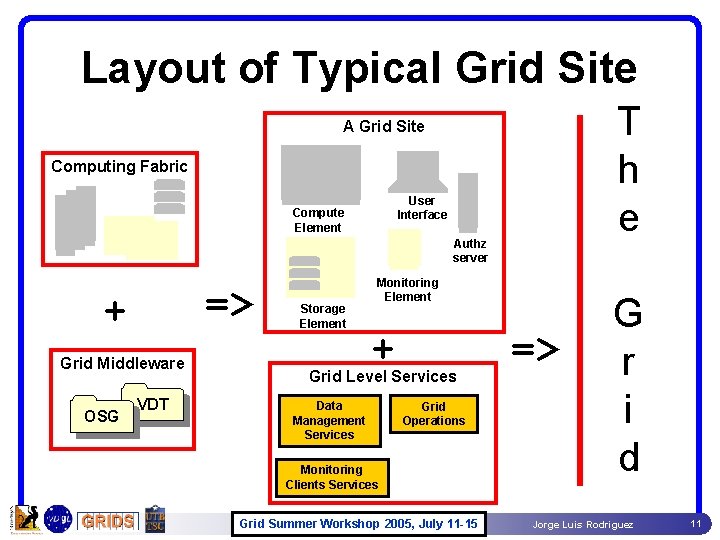

Layout of Typical Grid Site
Computing Fabric + Grid Middleware (VDT, OSG) + Grid Level Services => A Grid Site => The Grid
• Site elements: User Interface, Compute Element, Storage Element, Authz server, Monitoring Element
• Grid level services: Data Management Services, Grid Operations, Monitoring Clients, Services

Grid3 and Open Science Grid

The Grid3 Project

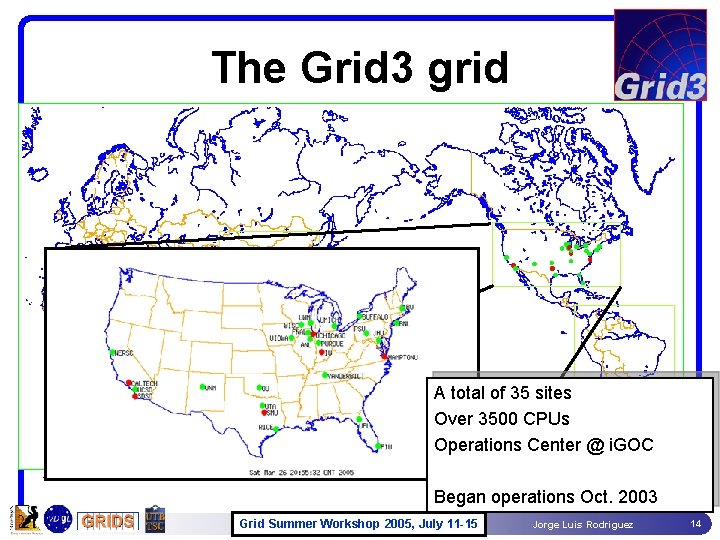

The Grid3 grid
• A total of 35 sites
• Over 3500 CPUs
• Operations Center @ iGOC
• Began operations Oct. 2003

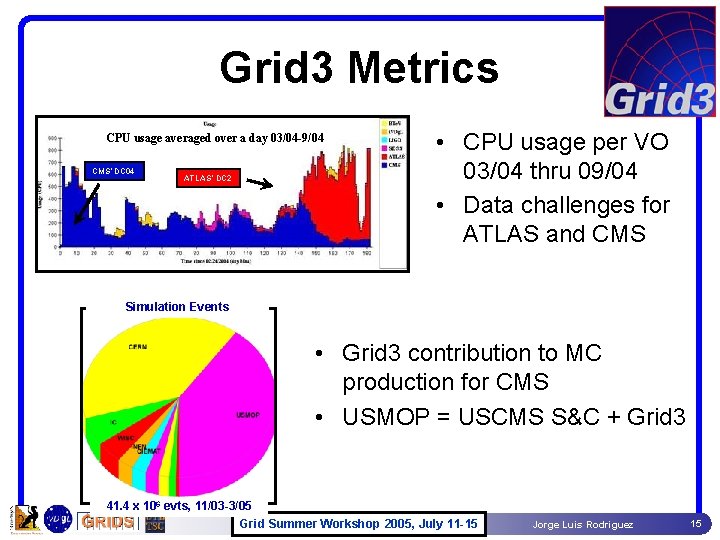

Grid3 Metrics
• CPU usage per VO, averaged over a day, 03/04 thru 09/04
• Data challenges for ATLAS (DC2) and CMS (DC04)
• Simulation events: Grid3 contribution to MC production for CMS
– USMOP = USCMS S&C + Grid3
– 41.4 x 10^6 events, 11/03-3/05

The Open Science Grid
A consortium of Universities and National Laboratories building a sustainable grid infrastructure for science in the U.S.…

Grid3 => Open Science Grid
• Begin with iterative extension to Grid3
– Shared resources, benefiting broad set of disciplines
– Realization of the critical need for operations
– More formal organization needed because of scale
• Build OSG from laboratories, universities, campus grids
– Argonne, Fermilab, SLAC, Brookhaven, Berkeley Lab, Jefferson Lab
– UW Madison, U Florida, Purdue, Chicago, Caltech, Harvard, etc.
• Further develop OSG
– Partnerships and contributions from other sciences, universities
– Incorporation of advanced networking
– Focus on general services, operations, end-to-end performance

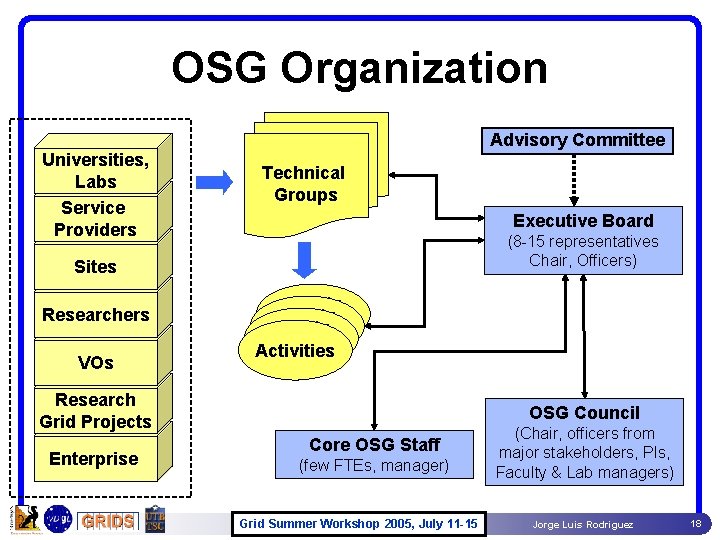

OSG Organization
• OSG Council (Chair, officers from major stakeholders, PIs, Faculty & Lab managers)
• Executive Board (8-15 representatives; Chair, Officers)
• Advisory Committee
• Core OSG Staff (few FTEs, manager)
• Technical Groups
• Activities
• Participants: Universities, Labs, Service Providers, Sites, Researchers, VOs, Research Grid Projects, Enterprise

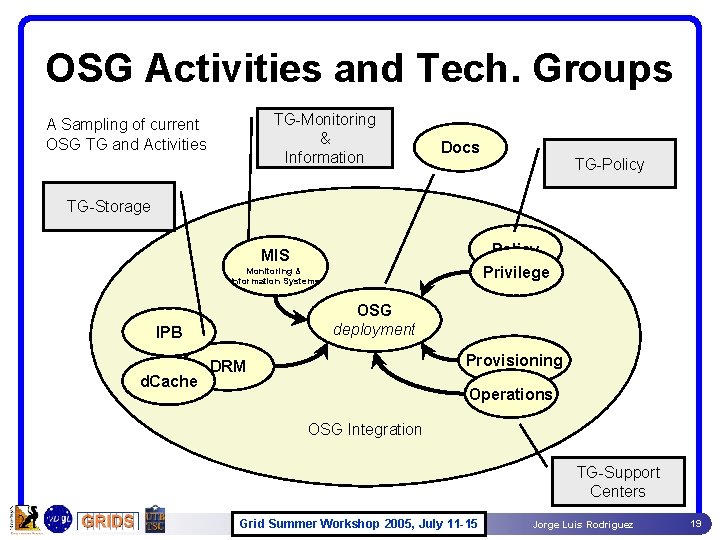

OSG Activities and Tech. Groups
A sampling of current OSG Technical Groups (TG) and Activities
• Technical Groups: TG-Monitoring & Information, TG-Policy, TG-Storage, TG-Support Centers
• Activities: Docs, Policy, Privilege, MIS (Monitoring & Information Systems), OSG Deployment, IPB, dCache, Provisioning, DRM, Operations, OSG Integration

OSG Technical Groups & Activities
• Technical Groups address and coordinate their given areas
– Propose and carry out activities related to their given areas
– Liaise & collaborate with other peer projects (U.S. & international)
– Participate in relevant standards organizations
– Chairs participate in Blueprint, Grid Integration and Deployment activities
• Activities are well-defined, scoped sets of tasks contributing to the OSG
– Each Activity has deliverables and a plan
– … is self-organized and operated
– … is overseen & sponsored by one or more Technical Groups

OSG Authentication & Authorization “Authz” (Privilege)

Authentication & Authorization
• Authentication: Verify that you are who you say you are
– OSG users typically use the DOEGrids CA
– OSG sites also accept CAs from LCG and other organizations
• Authorization: Allow a particular user to use a particular resource
– Legacy Grid3 method: gridmap-files
– New Privilege method, employed primarily at USLHC sites

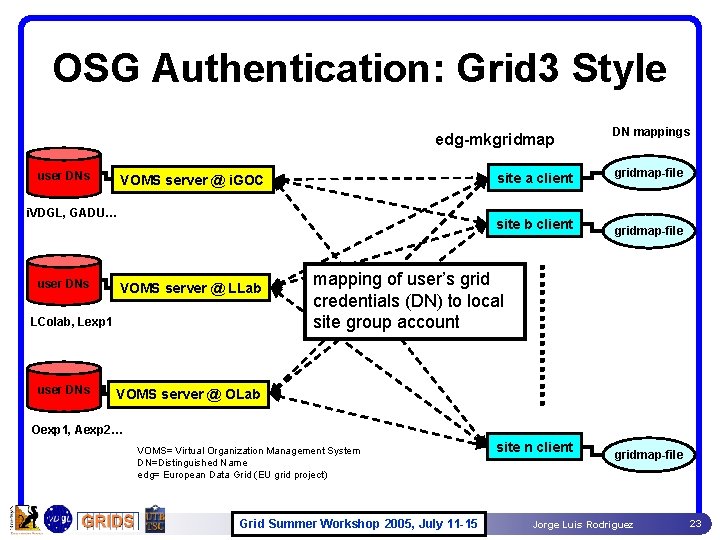

OSG Authentication: Grid3 Style
• Each site (site a, site b, … site n) runs the edg-mkgridmap client
• The client pulls user DNs from the VOMS servers
– VOMS server @ iGOC: iVDGL, GADU…
– VOMS server @ LLab: LColab, Lexp1
– VOMS server @ OLab: Oexp1, Aexp2…
• The resulting DN mappings are written to the site's gridmap-file: a mapping of the user’s grid credentials (DN) to a local site group account
VOMS = Virtual Organization Management System; DN = Distinguished Name; edg = European Data Grid (EU grid project)
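
The static mapping described on this slide can be illustrated with a lookup against a gridmap-file. This is a minimal sketch under stated assumptions: it assumes the common grid-mapfile layout of one quoted DN followed by a local account name per line, and the function name `lookup_dn` is hypothetical, not part of edg-mkgridmap or Globus.

```shell
#!/bin/sh
# Hypothetical helper: print the local account mapped to a DN in a gridmap-file.
# Each line is assumed to look like:  "<DN>" <local account>
lookup_dn() {
    dn=$1
    mapfile_path=$2
    prefix="\"$dn\" "
    while IFS= read -r line; do
        case $line in
            "$prefix"*)
                # Strip the quoted DN and the space; the rest is the account.
                echo "${line#"$prefix"}"
                return 0
                ;;
        esac
    done < "$mapfile_path"
    # DN not listed: the user has no mapping at this site.
    return 1
}
```

A call such as `lookup_dn "/DC=org/DC=example/OU=People/CN=Alice Example" /etc/grid-security/grid-mapfile` would print the mapped account if the DN is listed; the DN here is invented and the file location varies by installation. Because the file is static, a user added to a VO is only mapped after the next edg-mkgridmap run regenerates it.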

The Privilege Project
• Application of a Role Based Access Control model for OSG
• An advanced authorization mechanism

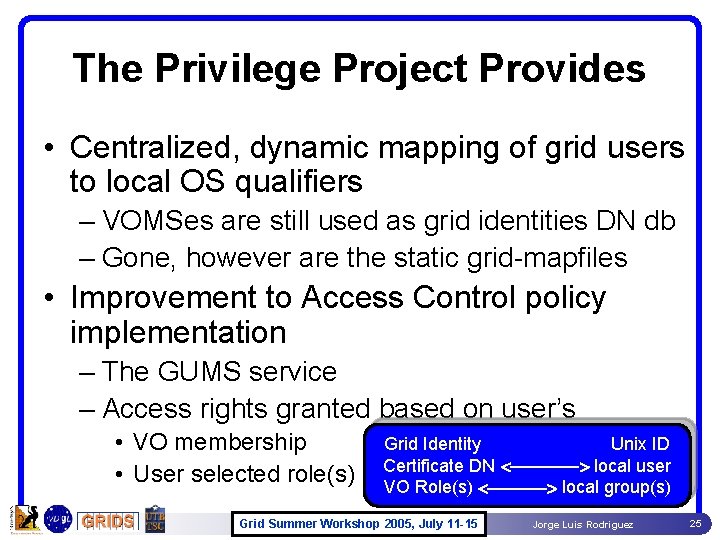

The Privilege Project Provides
• Centralized, dynamic mapping of grid users to local OS qualifiers
– VOMSes are still used as the grid identities DN database
– Gone, however, are the static grid-mapfiles
• Improvement to Access Control policy implementation
– The GUMS service
– Access rights granted based on user’s VO membership and user-selected role(s)
• Mapping of Grid Identity to Unix ID
– Certificate DN <-------> local user
– VO Role(s) <------> local group(s)
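
The difference from a static gridmap-file is that the mapping is decided from the VO and role presented with the request. The sketch below illustrates that idea only; it is not GUMS configuration or GUMS code, and the function name `map_vo_role`, the policy entries and the local account names are all hypothetical.

```shell
#!/bin/sh
# Hypothetical policy table: (VO, role) -> local account.
# A missing role is treated as the plain VO member.
map_vo_role() {
    vo=$1
    role=${2:-none}
    case "$vo/$role" in
        uscms/prod) echo "cmsprod" ;;   # production role gets its own account
        uscms/*)    echo "uscms" ;;     # any other USCMS member
        ivdgl/*)    echo "ivdgl" ;;
        *)          return 1 ;;         # unknown VO: no mapping, access denied
    esac
}
```

With this table, `map_vo_role uscms prod` and `map_vo_role uscms` yield different local accounts for the same certificate holder, which is the role-based behaviour a static DN-to-account file cannot express.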

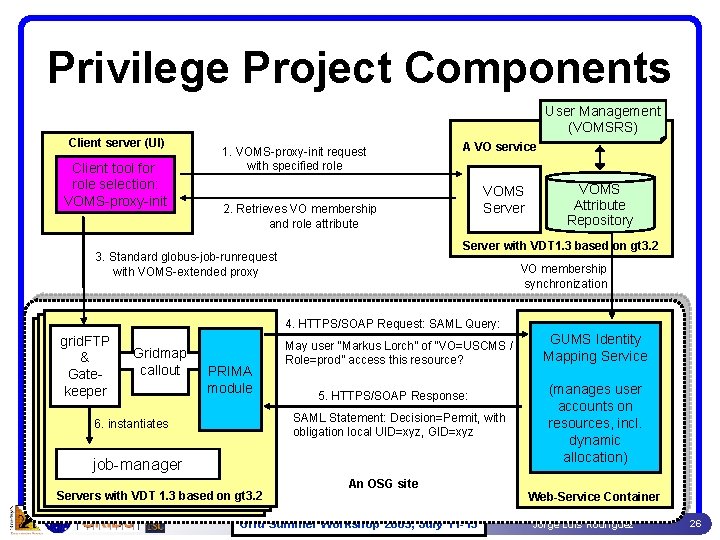

Privilege Project Components
• Client server (UI): client tool for role selection, voms-proxy-init
• A VO service: VOMS Server with VOMS Attribute Repository; User Management (VOMSRS); VO membership synchronization with GUMS
• An OSG site: GridFTP & Gatekeeper with gridmap callout (PRIMA module); GUMS Identity Mapping Service in a Web-Service Container (manages user accounts on resources, incl. dynamic allocation)
• Servers run VDT 1.3 based on GT 3.2
Sequence:
1. voms-proxy-init request with specified role
2. Retrieves VO membership and role attribute
3. Standard globus-job-run request with VOMS-extended proxy
4. HTTPS/SOAP Request, SAML Query: May user “Markus Lorch” of “VO=USCMS / Role=prod” access this resource?
5. HTTPS/SOAP Response, SAML Statement: Decision=Permit, with obligation local UID=xyz, GID=xyz
6. Gatekeeper instantiates job-manager
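
Steps 1 and 3 of the sequence are what the user types on the User Interface. The sketch below only builds the argument that requests a role; the helper name `voms_arg` is hypothetical, and the `<vo>:/<vo>/Role=<role>` layout is an assumption about how the VO names its groups and roles. The commented commands assume the VOMS and Globus clients are installed, and `gatekeeper.example.edu` is a placeholder host.

```shell
#!/bin/sh
# Hypothetical helper: build the value passed to "voms-proxy-init -voms".
# With a role:    <vo>:/<vo>/Role=<role>
# Without a role: <vo>
voms_arg() {
    vo=$1
    role=$2
    if [ -n "$role" ]; then
        echo "$vo:/$vo/Role=$role"
    else
        echo "$vo"
    fi
}

# Example session (needs a valid user certificate and the client tools):
#   voms-proxy-init -voms "$(voms_arg uscms prod)"          # steps 1 and 2
#   globus-job-run gatekeeper.example.edu /bin/hostname     # step 3
```

Steps 4 to 6 happen on the site side and need no user action: the gatekeeper's callout asks GUMS for a decision and starts the job-manager under the returned local UID and GID.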

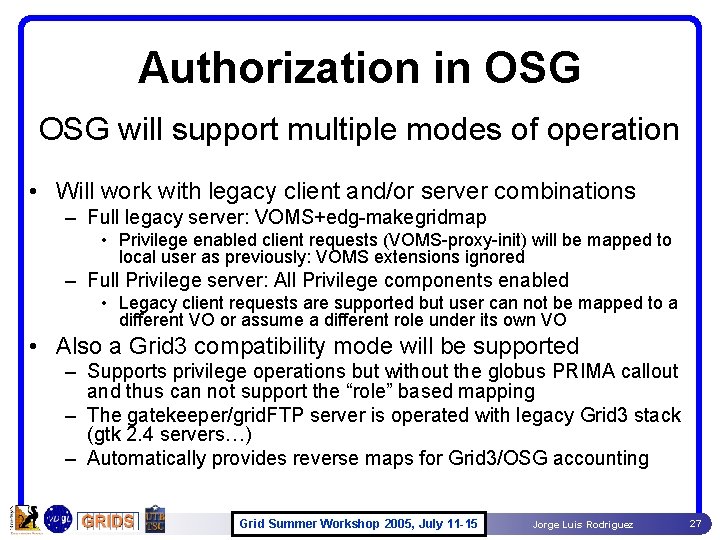

Authorization in OSG will support multiple modes of operation
• Will work with legacy client and/or server combinations
– Full legacy server: VOMS + edg-mkgridmap
  • Privilege-enabled client requests (voms-proxy-init) will be mapped to a local user as previously; VOMS extensions are ignored
– Full Privilege server: all Privilege components enabled
  • Legacy client requests are supported, but the user cannot be mapped to a different VO or assume a different role under its own VO
• A Grid3 compatibility mode will also be supported
– Supports Privilege operations but without the Globus PRIMA callout, and thus cannot support the “role” based mapping
– The gatekeeper/GridFTP server is operated with the legacy Grid3 stack (GT 2.4 servers…)
– Automatically provides reverse maps for Grid3/OSG accounting

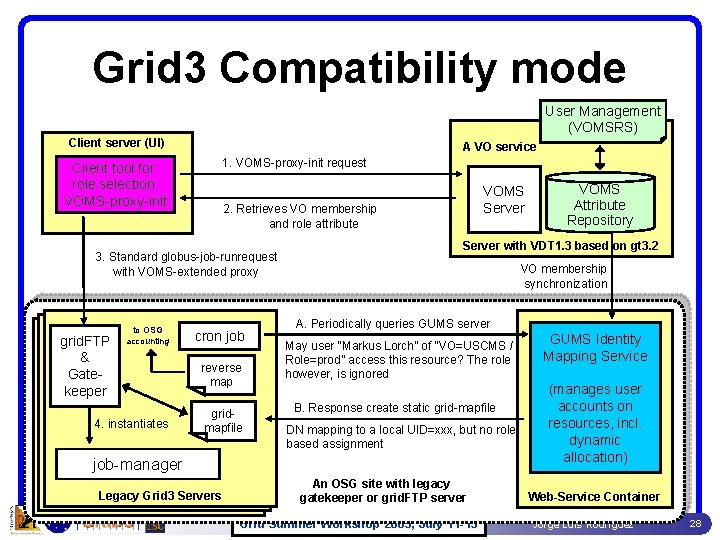

Grid3 Compatibility mode
• Client server (UI): client tool for role selection, voms-proxy-init
• A VO service: VOMS Server with VOMS Attribute Repository; User Management (VOMSRS); VO membership synchronization with GUMS
• An OSG site with legacy gatekeeper or GridFTP server: legacy Grid3 servers; GUMS Identity Mapping Service in a Web-Service Container (manages user accounts on resources, incl. dynamic allocation); server with VDT 1.3 based on GT 3.2
Job path:
1. voms-proxy-init request
2. Retrieves VO membership and role attribute
3. Standard globus-job-run request with VOMS-extended proxy; the role, however, is ignored
4. Gatekeeper instantiates job-manager
Gridmap path (cron job):
A. Periodically queries GUMS server
B. Response creates static grid-mapfile: DN mapping to a local UID=xxx, but no role based assignment
• The reverse map gridmapfile goes to OSG accounting

OSG Grid Monitoring (MIS: Monitoring & Information Systems)

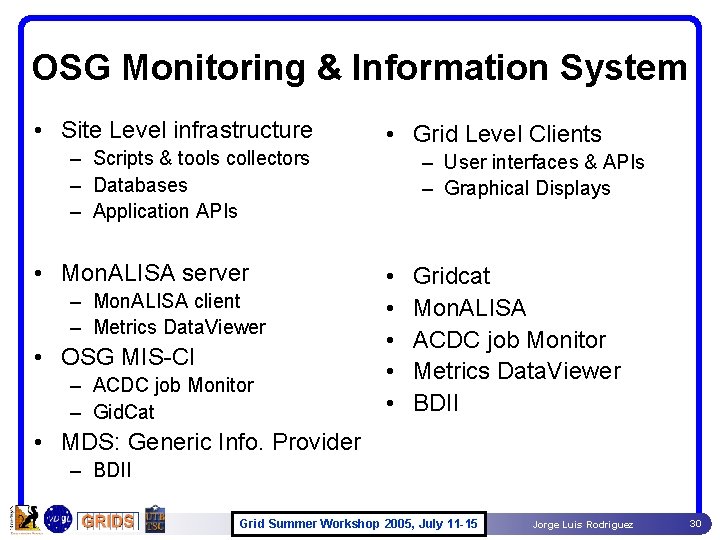

OSG Monitoring & Information System
• Site Level infrastructure
– Scripts & tools collectors
– Databases
– Application APIs
• Grid Level Clients
– User interfaces & APIs
– Graphical displays: GridCat, MonALISA, ACDC Job Monitor, Metrics DataViewer, BDII
• Site-level services and their grid-level clients
– MonALISA server: MonALISA client, Metrics DataViewer
– OSG MIS-CI: ACDC Job Monitor, GridCat
– MDS Generic Info. Provider: BDII

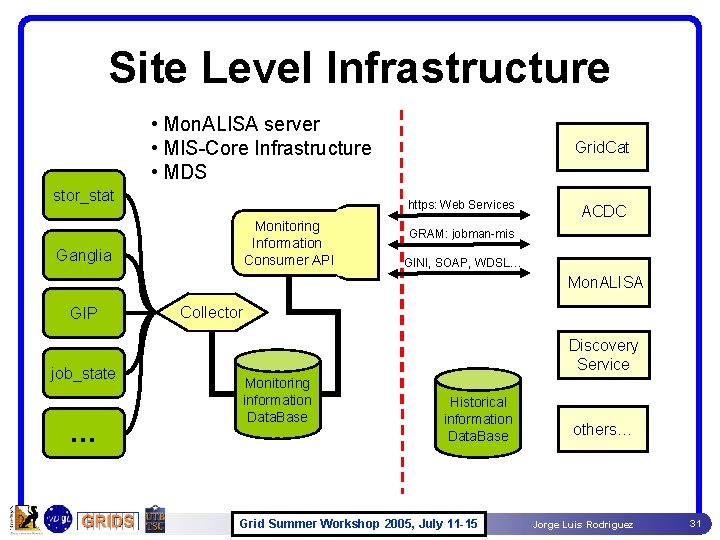

Site Level Infrastructure
• Information sources: stor_stat, Ganglia, GIP, job_state, others…
• Collector: feeds the Monitoring information DataBase and the Historical information DataBase
• Consumers: MonALISA server, MIS-Core Infrastructure, MDS, GridCat, ACDC
• Access: Monitoring Information Consumer API, Discovery Service, https, Web Services, GRAM: jobman-mis, GINI, SOAP, WSDL…

OSG Grid Level Clients
• Tools provide basic information about OSG resources
– Resource catalog: official tally of OSG sites
– Resource discovery: what services are available, where they are and how to access them
– Metrics information: usage of resources over time
• Used to assess scheduling priorities
– Where and when should I send my jobs?
– Where can I put my output?
• Used to monitor health and status of the Grid

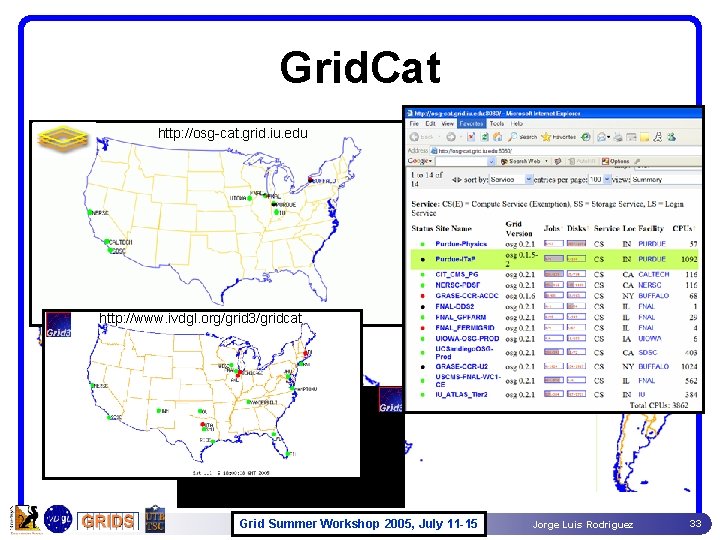

GridCat
http://osg-cat.grid.iu.edu
http://www.ivdgl.org/grid3/gridcat

MonALISA

OSG Provisioning
• OSG Software Cache
• OSG Meta Packager
• Grid Level Services

The OSG Software Cache
• Most software comes from the VDT
• OSG components include
– VDT configuration scripts
– Some OSG specific packages too
• Pacman is the OSG Meta-packager
– This is how we deliver the entire cache to resources

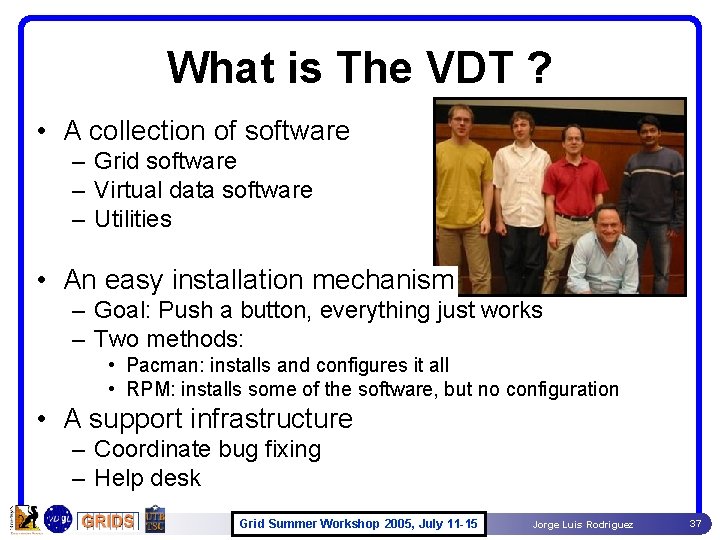

What is the VDT?
• A collection of software
– Grid software
– Virtual data software
– Utilities
• An easy installation mechanism
– Goal: Push a button, everything just works
– Two methods:
  • Pacman: installs and configures it all
  • RPM: installs some of the software, but no configuration
• A support infrastructure
– Coordinate bug fixing
– Help desk

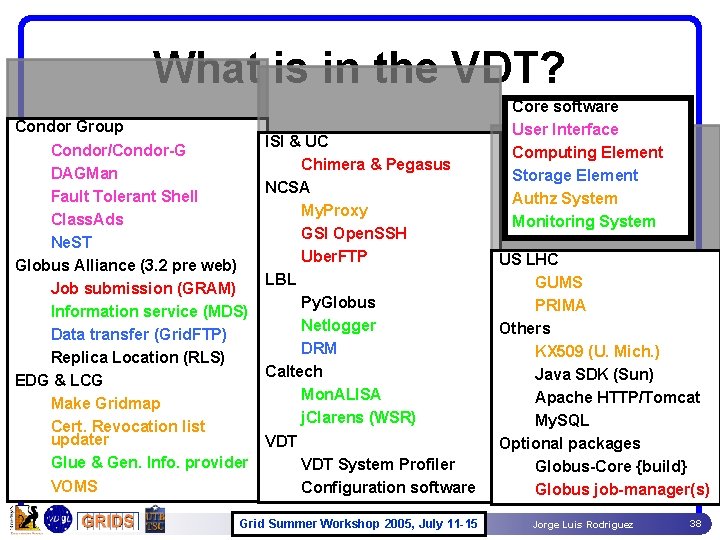

What is in the VDT?
• Condor Group: Condor/Condor-G, DAGMan, Fault Tolerant Shell, ClassAds, NeST
• Globus Alliance (3.2 pre web): Job submission (GRAM), Information service (MDS), Data transfer (GridFTP), Replica Location (RLS)
• EDG & LCG: Make Gridmap, Cert. Revocation list updater, Glue & Gen. Info. provider, VOMS
• ISI & UC: Chimera & Pegasus
• NCSA: MyProxy, GSI OpenSSH, UberFTP
• LBL: PyGlobus, Netlogger, DRM
• Caltech: MonALISA, jClarens (WSR)
• VDT: VDT System Profiler, Configuration software
• US LHC: GUMS, PRIMA
• Others: KX509 (U. Mich.), Java SDK (Sun), Apache HTTP/Tomcat, MySQL
• Optional packages: Globus-Core {build}, Globus job-manager(s)
Legend: Core software, User Interface, Computing Element, Storage Element, Authz System, Monitoring System

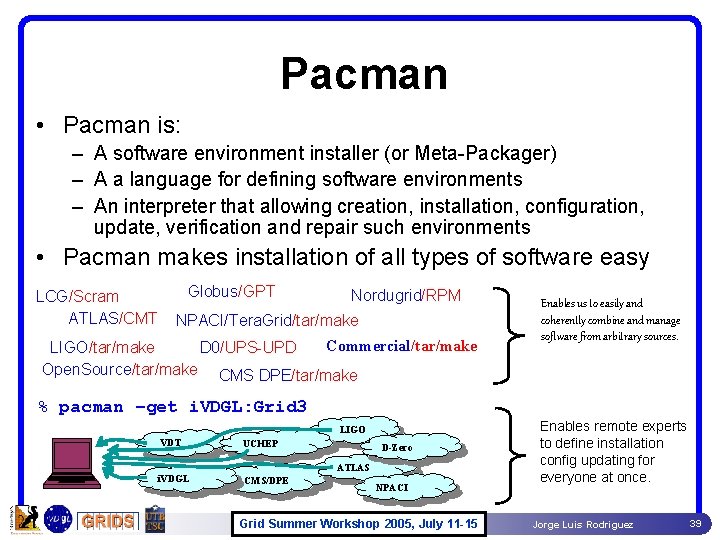

Pacman
• Pacman is:
– A software environment installer (or Meta-Packager)
– A language for defining software environments
– An interpreter that allows creation, installation, configuration, update, verification and repair of such environments
• Pacman makes installation of all types of software easy
– Sources: LCG/Scram, ATLAS/CMT, Globus/GPT, Nordugrid/RPM, NPACI/TeraGrid/tar/make, Commercial/tar/make, D0/UPS-UPD, LIGO/tar/make, OpenSource/tar/make, CMS DPE/tar/make
– Caches: LIGO, VDT, UCHEP, iVDGL, CMS/DPE, D-Zero, ATLAS, NPACI
• Enables us to easily and coherently combine and manage software from arbitrary sources
• Enables remote experts to define installation, configuration and updating for everyone at once
    % pacman -get iVDGL:Grid3

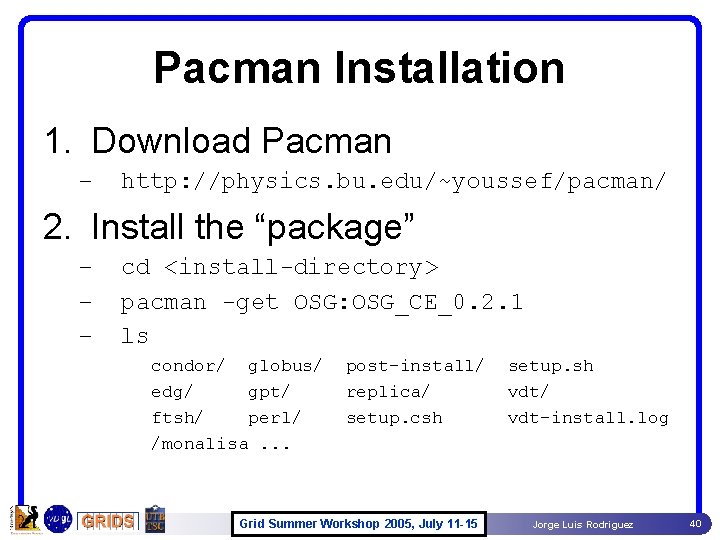

Pacman Installation
1. Download Pacman
– http://physics.bu.edu/~youssef/pacman/
2. Install the “package”
    cd <install-directory>
    pacman -get OSG:OSG_CE_0.2.1
    ls
    condor/  globus/  edg/  gpt/  ftsh/  perl/  monalisa/  ...  post-install/  replica/  setup.csh  setup.sh  vdt/  vdt-install.log
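
After the install it can be useful to confirm that the directory listing resembles the one on the slide. The following is a minimal sketch of such a check and is not part of Pacman or the VDT: the function name `check_install` is hypothetical, and the four entries it looks for are just a sample taken from the listing above.

```shell
#!/bin/sh
# Hypothetical check: report expected entries missing from an install directory.
check_install() {
    dir=$1
    missing=0
    for entry in condor globus vdt setup.sh; do
        if [ ! -e "$dir/$entry" ]; then
            echo "missing: $entry"
            missing=$((missing + 1))
        fi
    done
    # Succeed only if nothing was missing.
    [ "$missing" -eq 0 ]
}
```

Running `check_install <install-directory>` prints one line per missing entry and returns non-zero if any are absent. A complete listing says nothing about whether the services are configured correctly, so vdt-install.log remains the place to look for errors.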

Grid Level Services
• OSG Grid level Monitoring infrastructure
– Mon. & Info. System(s) top level database
– Monitoring Authz: mis user
• OSG Operations infrastructure
– Websites, Ops page, web servers…
– Trouble ticket system
• OSG top level Replica Location Service

OSG Operations

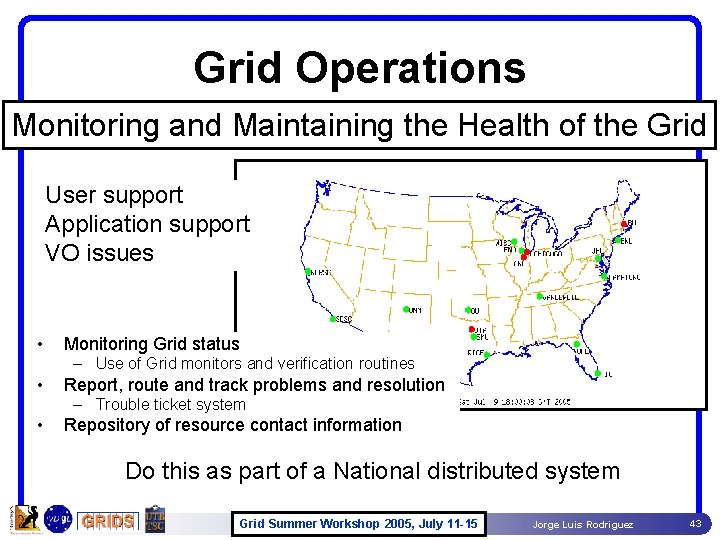

Grid Operations
Monitoring and maintaining the health of the Grid
• User support, application support, VO issues
• Monitoring Grid status
– Use of Grid monitors and verification routines
• Report, route and track problems and resolution
– Trouble ticket system
• Repository of resource contact information
Do this as part of a national distributed system

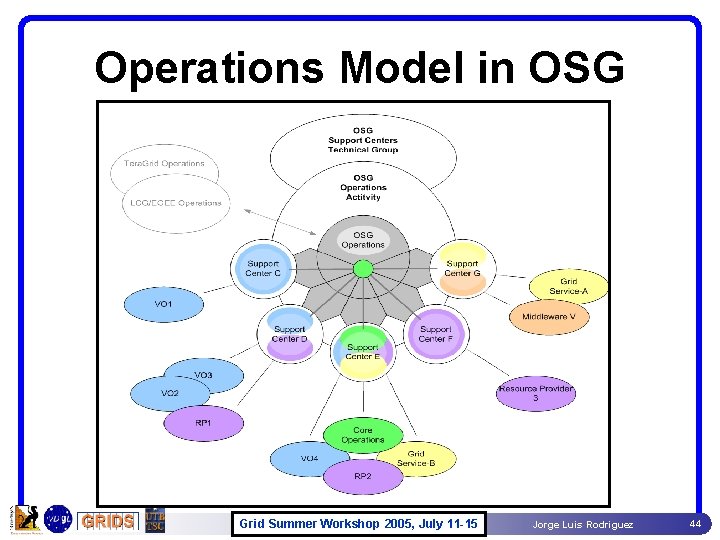

Operations Model in OSG

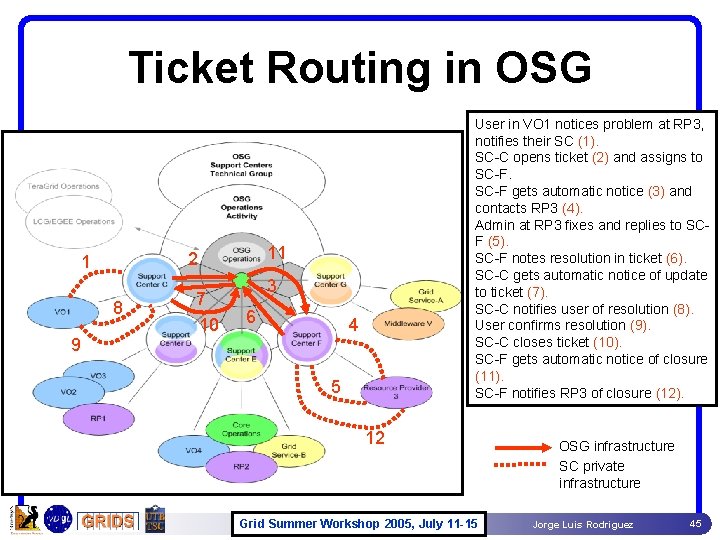

Ticket Routing in OSG
1. User in VO1 notices problem at RP3 and notifies their SC.
2. SC-C opens ticket and assigns it to SC-F.
3. SC-F gets automatic notice.
4. SC-F contacts RP3.
5. Admin at RP3 fixes the problem and replies to SC-F.
6. SC-F notes resolution in ticket.
7. SC-C gets automatic notice of update to ticket.
8. SC-C notifies user of resolution.
9. User confirms resolution.
10. SC-C closes ticket.
11. SC-F gets automatic notice of closure.
12. SC-F notifies RP3 of closure.
Diagram legend: OSG infrastructure; SC private infrastructure

OSG Integration and Development

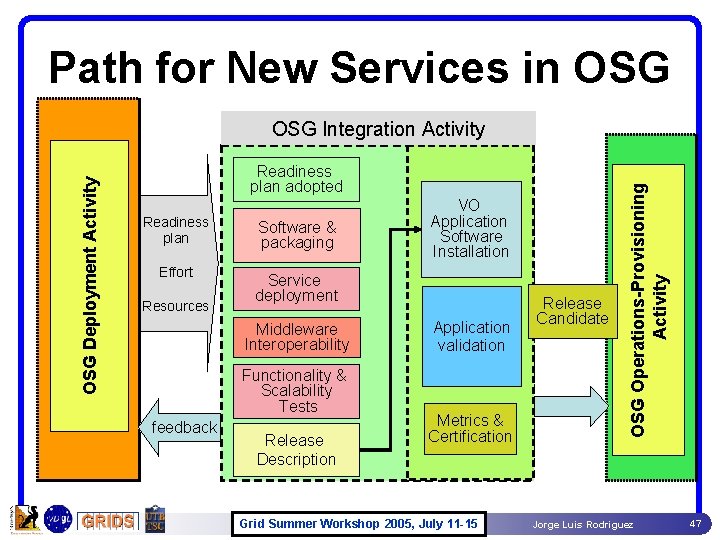

Path for New Services in OSG
Diagram of the path a new service follows:
• Readiness plan (effort, resources) is adopted
• Software & packaging
• Service deployment
• Middleware interoperability; functionality & scalability tests; application validation (VO application software installation), with feedback
• Metrics & certification
• Release candidate and release description
Activities involved: OSG Integration Activity, OSG Deployment Activity, OSG Operations-Provisioning Activity

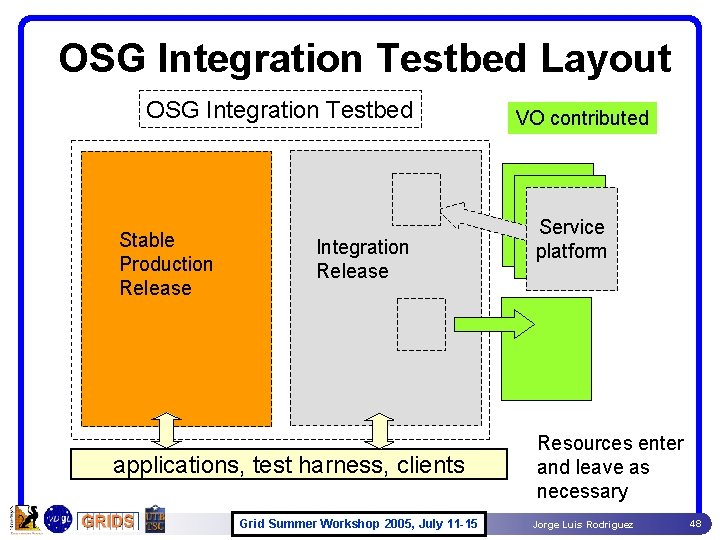

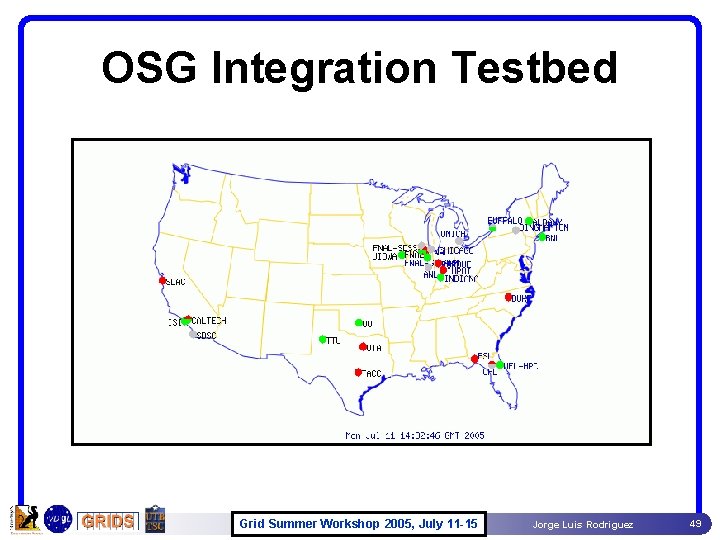

OSG Integration Testbed Layout
• OSG Integration Testbed: Integration Release
• Stable Production Release
• VO contributed applications, test harness, clients
• Service platform
• Resources enter and leave as necessary

OSG Integration Testbed

OSG Further Work
• Managed Storage
• Grid Scheduling
• More

Managing Storage
• Problems:
– No real way to control the movement of files into and out of a site
  • Data is staged by fork processes!
  • Anyone with access to the site can submit such a request
– There is also no space allocation control
  • A grid user can dump files of any size on a resource
  • If users do not clean up, sys admins have to intervene
• These can easily overwhelm a resource

Managing Storage
• A Solution: SRM (Storage Resource Manager)
• Grid enabled interface to put data on a site
– Provides scheduling of data transfer requests
– Provides reservation of storage space
    $> globus-url-copy srm://ufdcache.phys.ufl.edu/cms/foo.rfz gsiftp://cit.caltech.edu/data/bar.rfz
• Technologies in the OSG pipeline (TG-Storage)
– dCache/SRM (disk cache with SRM)
  • Provided by DESY & FNAL
  • SE(s) available to OSG as a service from the USCMS VO
– DRM (Disk Resource Manager)
  • Provided by LBL
  • Can be added on top of a normal UNIX file system

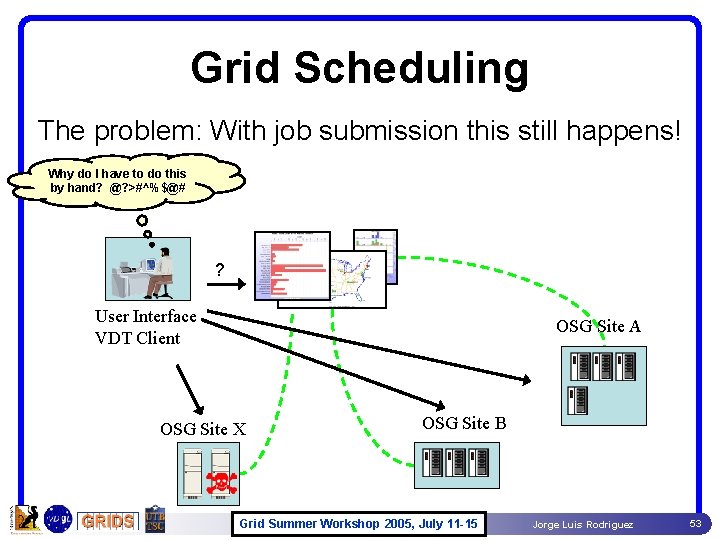

Grid Scheduling
The problem: with job submission this still happens!
• The user at a User Interface (VDT Client) must choose by hand among OSG Site A, OSG Site B, … OSG Site X
• “Why do I have to do this by hand? @?>#^%$@#”

Grid Scheduling
• Possible Solutions
– Sphinx (GriPhyN, UF)
  • Work flow based dynamic planning (late binding)
  • Policy based scheduling
  • For more details, ask Laukik
– Pegasus (GriPhyN, ISI/UC)
  • DAGMan based planner and Grid scheduling (early binding)
  • More details in Work Flow lecture 6
– Resource Broker (LCG)
  • Match maker based Grid scheduling
  • Employed by applications running on LCG Grid resources

Much More is Needed
• Continue the hardening of middleware and other software components
• Continue the process of federating with other Grids (TG-Interoperability)
– TeraGrid
– LHC/EGEE
• Continue to synchronize the Monitoring and Information Service Infrastructure
• Improve documentation

Summary and Conclusions

Conclude with a simple example
1. Log on to a User Interface
2. Get your grid proxy (“log on to the grid”): grid-proxy-init
3. Check OSG MIS clients
  • To get the list of available sites: depends on your VO affiliation
  • To discover site specific information needed by your job, i.e.
    – Available services: hostname, port numbers
    – Tactical storage location: $app, $data, $tmp, $wntmp
4. Install your application bins at selected sites
5. Submit your jobs to selected sites via Condor-G
6. Check OSG MIS clients to see if jobs have completed
7. Do something like this:
    if [ 0 ]; then
        echo "Have a coffee (beer, margarita…)"
    else
        echo "it's going to be a long night"
    fi
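
Steps 2, 5 and 7 of this example can be strung together in a small wrapper. This is a minimal sketch, not a recommended production script: the function name `run_jobs` and the `PROXY_CHECK` and `SUBMIT_CMD` variables are hypothetical, and it assumes that `grid-proxy-info -exists` returns success when a valid proxy is present. Note that it reports on submission only; checking that jobs have completed (step 6) still goes through the OSG MIS clients.

```shell
#!/bin/sh
# Hypothetical wrapper around steps 2, 5 and 7 of the example.
# PROXY_CHECK and SUBMIT_CMD are overridable so the flow can be dry-run.
PROXY_CHECK=${PROXY_CHECK:-"grid-proxy-info -exists"}
SUBMIT_CMD=${SUBMIT_CMD:-condor_submit}

run_jobs() {
    # Step 2: refuse to continue without a valid grid proxy.
    if ! $PROXY_CHECK >/dev/null 2>&1; then
        echo "no valid proxy: run grid-proxy-init first"
        return 1
    fi
    # Step 5: submit each Condor-G submit description file.
    for submit_file in "$@"; do
        if ! $SUBMIT_CMD "$submit_file"; then
            # Step 7, unhappy branch.
            echo "it's going to be a long night"
            return 1
        fi
    done
    # Step 7, happy branch.
    echo "Have a coffee"
}
```

Unlike the `[ 0 ]` test on the slide, which is always true, the branch taken here depends on whether every submission succeeded.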

OSG Ribbon Cutting
OSG opens for business on July 20th, 2005
• Grandfathered in: all of Grid3, some of the OSG-Integration Testbed

Conclusion
Lots of progress since 1999
But a lot of work still remains!
http://www.opensciencegrid.org
http://www.griphyn.org
http://www.ivdgl.org
http://www.ppdg.org

The End

Outline
• Introduction
• Grid3 and the Open Science Grid
– Grid3: A Shared Grid Infrastructure for Scientific Applications
  • The Grid3 Grid
  • Metrics
– The Open Science Grid
  • The OSG Consortium
  • OSG organization
  • OSG Technical Groups and Activities
– OSG Provisioning (Provision Activity)
  • Packaging
  • Software Components: The VDT
  • Grid level services
– OSG Grid Level Monitoring (MIS Technical Group)
  • Monitoring infrastructure
  • GridCat
  • MonALISA
  • MDS: Glue and the GIP
  • ACDC
– OSG Operations (Support Centers Technical Group)
  • Operations Model
  • Operations
  • Operation in Grid3
– OSG Development and Integration (Integration Activity)
  • The OSG integration testbed
  • The OSG development cycle
– OSG Current State
  • OSG Grid Schedule
• What is still missing
– Grid scheduling
– Managed Storage
– Refinement of the middleware stack
– …
• Other Large Scale Grids
– The LCG
– TeraGrid
• Summary and Conclusion
