
Building Fast & Accurate Deep Learning Models with Multi-objective Hyperparameter Optimization
Dr. Kevin Duh, JHU HLTCOE

Motivation
- We’re often focused on model accuracy
- But practical considerations are important for deployment: Model Size, Inference Speed, Training Cost
- CO2 emissions (Strubell, 2019):
  - GPU training, 1 Transformer: 192 lbs
  - Air travel, 1 passenger, NY-SF: 1984 lbs

(Photo from Andreas Weith)

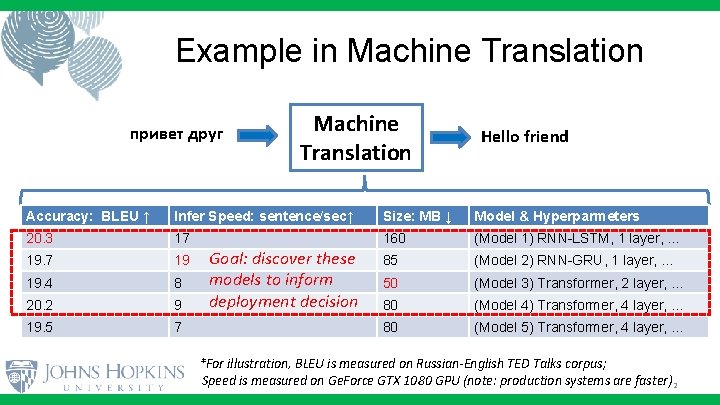

Example in Machine Translation

Input: привет друг → Machine Translation → Output: Hello friend

| Model | Model & Hyperparameters | Accuracy: BLEU ↑ | Inference Speed: sentences/sec ↑ | Size: MB ↓ |
|---|---|---|---|---|
| Model 1 | RNN-LSTM, 1 layer, … | 20.3 | 17 | 160 |
| Model 2 | RNN-GRU, 1 layer, … | 19.7 | 19 | 85 |
| Model 3 | Transformer, 2 layer, … | 19.4 | 8 | 50 |
| Model 4 | Transformer, 4 layer, … | 20.2 | 9 | 80 |
| Model 5 | Transformer, 4 layer, … | 19.5 | 7 | 80 |

Goal: discover these models to inform deployment decisions.

*For illustration, BLEU is measured on the Russian-English TED Talks corpus; speed is measured on a GeForce GTX 1080 GPU (note: production systems are faster).

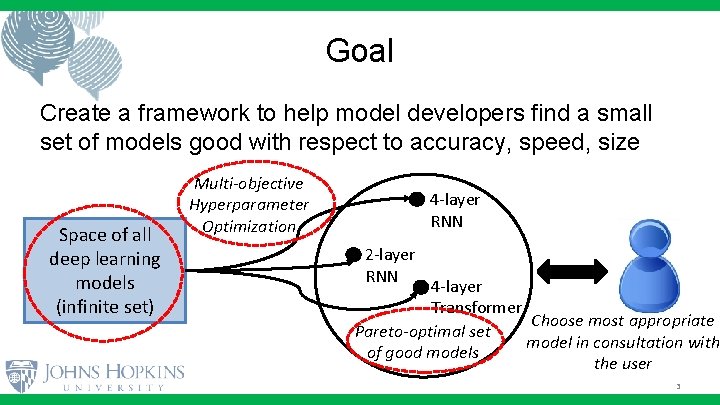

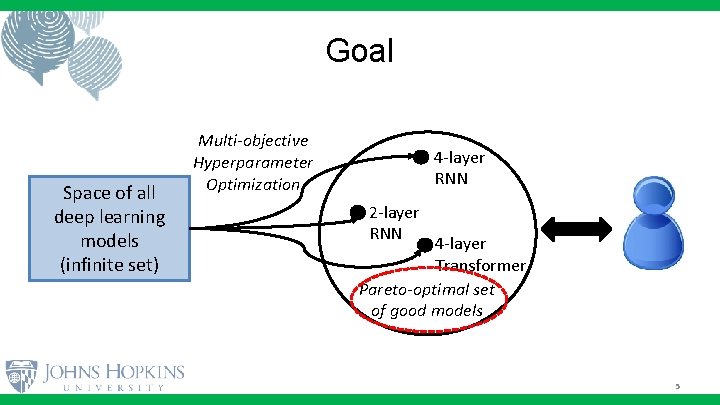

Goal
Create a framework to help model developers find a small set of models that are good with respect to accuracy, speed, and size.

[Diagram: Space of all deep learning models (infinite set), e.g., 4-layer RNN, 2-layer RNN, 4-layer Transformer → Multi-objective Hyperparameter Optimization → Pareto-optimal set of good models → choose the most appropriate model in consultation with the user]

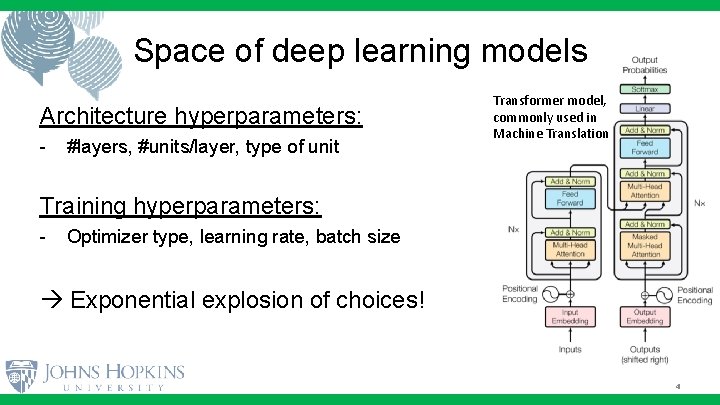

Space of deep learning models
- Architecture hyperparameters: #layers, #units/layer, type of unit
- Training hyperparameters: optimizer type, learning rate, batch size
- Exponential explosion of choices!

[Figure: the Transformer model, commonly used in Machine Translation]

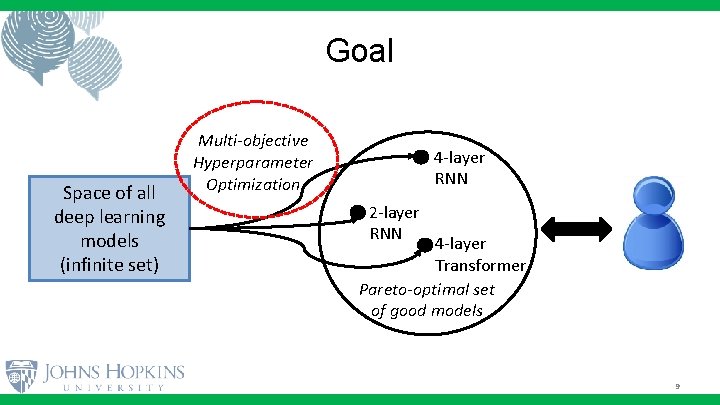
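To make the "exponential explosion" concrete, here is a minimal sketch that counts and enumerates a grid over the hyperparameters named on the slide. The value lists in `SEARCH_SPACE` and the helper names `grid_size` and `enumerate_configs` are hypothetical choices for illustration; the slide names the hyperparameters but not their ranges.

```python
import itertools
import math

# HYPOTHETICAL value lists: the slide names these hyperparameters, not their ranges.
SEARCH_SPACE = {
    "num_layers": [1, 2, 4, 6],
    "units_per_layer": [256, 512, 1024],
    "unit_type": ["lstm", "gru", "transformer"],
    "optimizer": ["adam", "sgd"],
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "batch_size": [32, 64, 128],
}


def grid_size(space):
    """Number of distinct configurations: the product of per-hyperparameter choices."""
    return math.prod(len(values) for values in space.values())


def enumerate_configs(space):
    """Yield every configuration as a dict, lazily, since grids grow very fast."""
    names = list(space)
    for combo in itertools.product(*(space[name] for name in names)):
        yield dict(zip(names, combo))


print(grid_size(SEARCH_SPACE))  # 648
print(next(enumerate_configs(SEARCH_SPACE)))
```

Each additional hyperparameter multiplies the count: adding a seventh one with four values would raise this grid from 648 to 2,592 configurations.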

Goal

[Diagram: Space of all deep learning models (infinite set), e.g., 4-layer RNN, 2-layer RNN, 4-layer Transformer → Multi-objective Hyperparameter Optimization → Pareto-optimal set of good models]

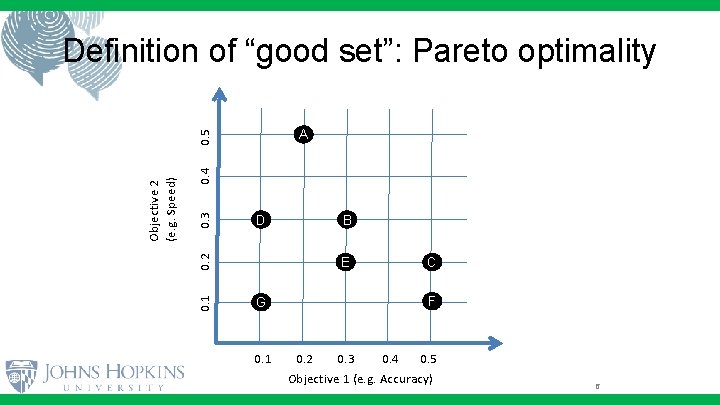

Definition of “good set”: Pareto optimality

[Figure: points A through G plotted by Objective 1 (e.g., Accuracy) on the x-axis and Objective 2 (e.g., Speed) on the y-axis]

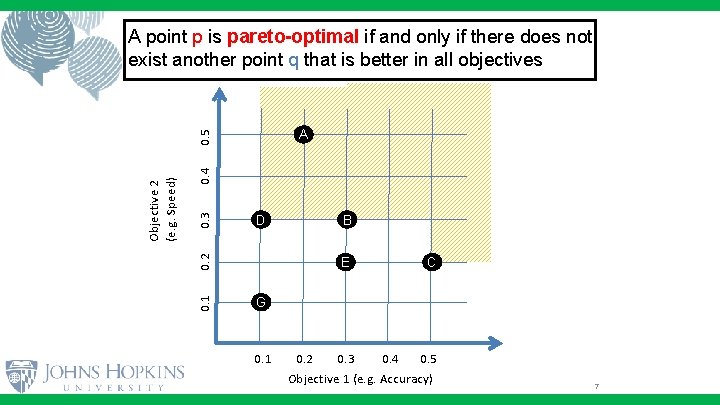

A point p is Pareto-optimal if and only if there does not exist another point q that is better in all objectives.

[Figure: points A, B, C, D, E, G plotted by Objective 1 (e.g., Accuracy) on the x-axis and Objective 2 (e.g., Speed) on the y-axis]

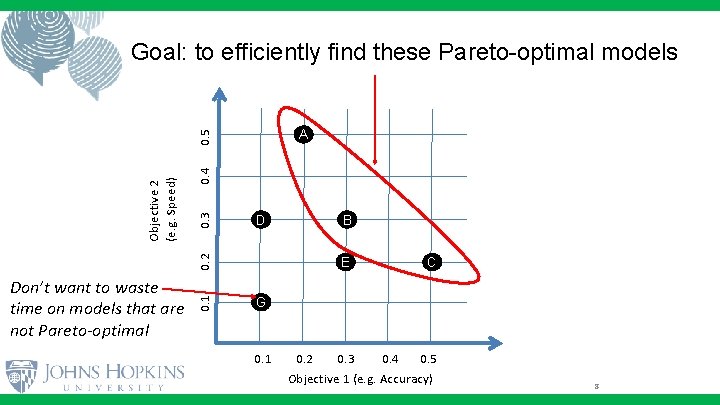

Goal: efficiently find these Pareto-optimal models. We don’t want to waste time on models that are not Pareto-optimal.

[Figure: points A, B, C, D, E, G plotted by Objective 1 (e.g., Accuracy) on the x-axis and Objective 2 (e.g., Speed) on the y-axis]


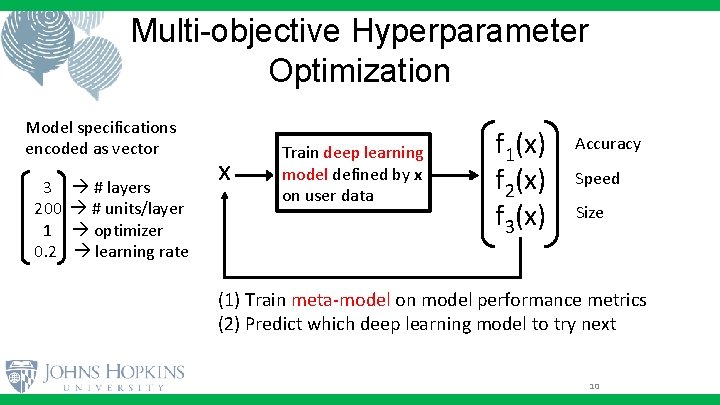

Multi-objective Hyperparameter Optimization
- Model specifications are encoded as a vector $x$, e.g., $x = [3, 200, 1, 0.2]$ for (# layers, # units/layer, optimizer, learning rate)
- Train the deep learning model defined by $x$ on user data and measure $f_1(x)$ = Accuracy, $f_2(x)$ = Speed, $f_3(x)$ = Size
- (1) Train a meta-model on the model performance metrics
- (2) Predict which deep learning model to try next

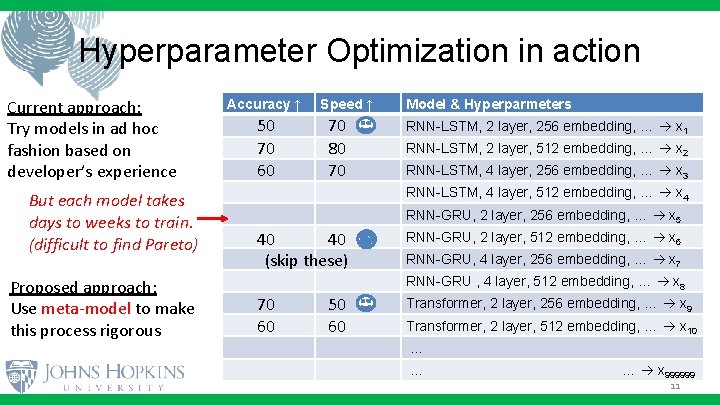
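The loop above (train, fit a meta-model, predict the next model) can be sketched as follows. The slides do not specify the meta-model, so this toy version swaps in a 1-nearest-neighbour regressor as the meta-model and a simple selection rule: try an untried model whose predicted metrics would sit on the current Pareto front. `toy_train`, its formulas, and the candidate grid are hypothetical stand-ins for actually training a model on user data; this is a sketch of sequential model-based search, not the method from the talk.

```python
import itertools
import math
import random


def dominates(a, b):
    """a is at least as good as b in every objective and strictly better in one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(vectors):
    """Objective vectors not dominated by any other vector in the list."""
    return [v for v in vectors if not any(dominates(w, v) for w in vectors)]


def toy_train(x):
    """HYPOTHETICAL stand-in for training the model defined by x and measuring it.

    x = (num_layers, units_per_layer, optimizer_id, learning_rate).
    Returns (accuracy, speed, -size) so that every objective is maximized.
    The formulas are synthetic, for illustration only.
    """
    layers, units, optimizer_id, lr = x
    accuracy = 15 + layers + units / 256 - 10 * abs(lr - 0.1) + optimizer_id
    speed = 40 / layers - units / 256
    size_mb = layers * units / 16
    return (accuracy, speed, -size_mb)


def make_scaler(candidates):
    """Min-max scale each hyperparameter so #units does not dominate distances."""
    dims = range(len(candidates[0]))
    lo = [min(c[i] for c in candidates) for i in dims]
    hi = [max(c[i] for c in candidates) for i in dims]
    return lambda x: [(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(x, lo, hi)]


def predict(x, observed, scale):
    """Toy meta-model: return the metrics of the nearest already-trained model."""
    _, objectives = min(observed, key=lambda pair: math.dist(scale(x), scale(pair[0])))
    return objectives


def optimize(candidates, train, budget, n_init=2, seed=0):
    """Train a few random models, then let the meta-model choose each next one."""
    scale = make_scaler(candidates)
    pool = list(candidates)
    random.Random(seed).shuffle(pool)
    observed = [(x, train(x)) for x in pool[:n_init]]  # random warm start
    untried = pool[n_init:]
    while untried and len(observed) < budget:
        front = pareto_front([f for _, f in observed])
        # Prefer untried models predicted to land on the current Pareto front.
        promising = [x for x in untried if predict(x, observed, scale) in front]
        choice = (promising or untried)[0]
        untried.remove(choice)
        observed.append((choice, train(choice)))
    return observed


grid = list(itertools.product((1, 2, 4), (256, 512), (0, 1), (0.1, 0.2)))
history = optimize(grid, toy_train, budget=8)
for x, f in history:
    print(x, [round(v, 2) for v in f])
```

A real system would replace the nearest-neighbour lookup with a stronger predictor and a more careful selection criterion; the structure (observe, fit, choose, repeat within a budget) is the part this sketch is meant to show.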

Hyperparameter Optimization in action
- Current approach: try models in an ad hoc fashion based on the developer’s experience. But each model takes days to weeks to train, which makes it difficult to find the Pareto-optimal models.
- Proposed approach: use a meta-model to make this process rigorous.

[Figure: table of candidate models x1, x2, …, x999999 (x1 to x4: RNN-LSTM; x5 to x8: RNN-GRU; x9 and x10: Transformer; each with 2 or 4 layers and 256 or 512 embedding, …), with Accuracy ↑ and Speed ↑ filled in only for the models that were trained and other candidates marked “skip these”]

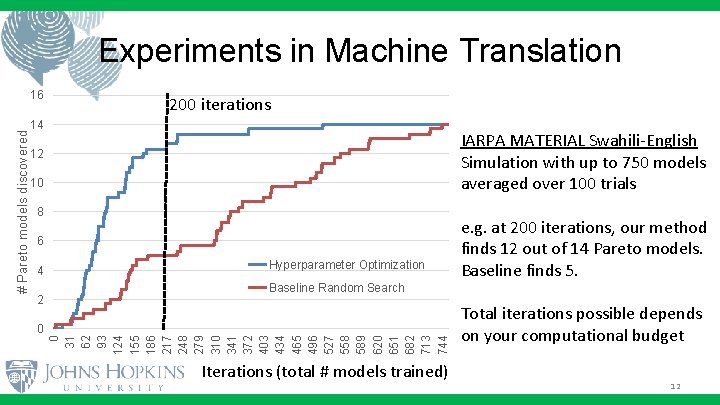

Experiments in Machine Translation

[Figure: IARPA MATERIAL Swahili-English, simulation with up to 750 models averaged over 100 trials. x-axis: iterations (total # models trained); y-axis: # Pareto models discovered; curves: Hyperparameter Optimization vs. Baseline Random Search]

E.g., at 200 iterations, our method finds 12 of the 14 Pareto-optimal models; the baseline finds 5.
The total number of iterations possible depends on your computational budget.

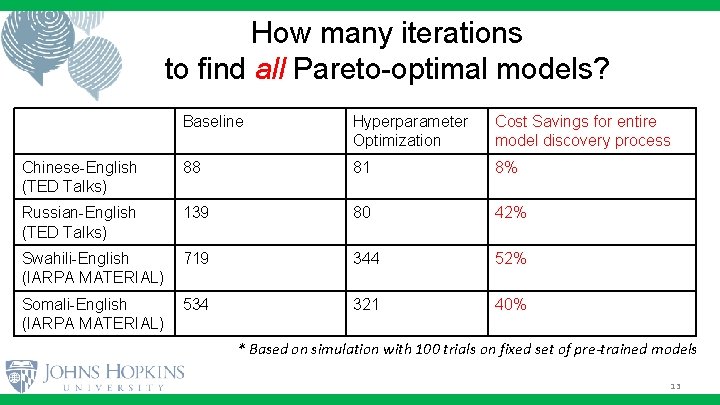
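The y-axis metric in this plot, "# Pareto models discovered", can be computed from the order in which models are trained. The sketch below assumes each model is identified by a hashable ID and that the true Pareto-optimal set is known in advance (as in a simulation over a fixed pool of models); the helper names are hypothetical, and the random-search baseline simply averages curves over shuffled orderings.

```python
import random


def discovery_curve(order, pareto_set):
    """Number of distinct Pareto-optimal models found after each iteration."""
    found = set()
    curve = []
    for model in order:
        if model in pareto_set:
            found.add(model)
        curve.append(len(found))
    return curve


def iterations_to_find_all(order, pareto_set):
    """1-based iteration at which the last Pareto-optimal model is trained (None if never)."""
    if not pareto_set:
        return 0
    found = set()
    for i, model in enumerate(order, start=1):
        if model in pareto_set:
            found.add(model)
            if found == pareto_set:
                return i
    return None


def random_search_curve(models, pareto_set, trials=100, seed=0):
    """Baseline: discovery curve of random orderings, averaged over several trials."""
    rng = random.Random(seed)
    total = [0] * len(models)
    for _ in range(trials):
        order = list(models)
        rng.shuffle(order)
        for i, count in enumerate(discovery_curve(order, pareto_set)):
            total[i] += count
    return [t / trials for t in total]


print(discovery_curve(["a", "b", "c", "d"], {"b", "d"}))         # [0, 1, 1, 2]
print(iterations_to_find_all(["a", "b", "c", "d"], {"b", "d"}))  # 4
```

`iterations_to_find_all` is the quantity compared in the next table: how long each strategy takes before every Pareto-optimal model has been trained.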

How many iterations to find all Pareto-optimal models?

| Language pair | Baseline | Hyperparameter Optimization | Cost savings for entire model discovery process |
|---|---|---|---|
| Chinese-English (TED Talks) | 88 | 81 | 8% |
| Russian-English (TED Talks) | 139 | 80 | 42% |
| Swahili-English (IARPA MATERIAL) | 719 | 344 | 52% |
| Somali-English (IARPA MATERIAL) | 534 | 321 | 40% |

* Based on simulation with 100 trials on a fixed set of pre-trained models
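The savings column is consistent with measuring cost as the number of models trained, i.e., savings = (baseline − HPO) / baseline. That interpretation is an assumption (it treats every training run as equally expensive); the short check below, using only the iteration counts from the table, reproduces the reported percentages.

```python
# (baseline, hyperparameter optimization) iterations, copied from the table.
ITERATIONS = {
    "Chinese-English (TED Talks)": (88, 81),
    "Russian-English (TED Talks)": (139, 80),
    "Swahili-English (IARPA MATERIAL)": (719, 344),
    "Somali-English (IARPA MATERIAL)": (534, 321),
}


def cost_savings(baseline, hpo):
    """Fraction of training runs saved relative to the baseline (equal cost per run assumed)."""
    return (baseline - hpo) / baseline


for pair, (baseline, hpo) in ITERATIONS.items():
    print(f"{pair}: {cost_savings(baseline, hpo):.0%}")
# Chinese-English (TED Talks): 8%
# Russian-English (TED Talks): 42%
# Swahili-English (IARPA MATERIAL): 52%
# Somali-English (IARPA MATERIAL): 40%
```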

Growing research area: Hyperparameter optimization & AutoML
- Evolutionary Algorithms
- Bayesian Optimization
- Neural Architecture Search

Our research: improve robustness & usability
- Building benchmarks for rigorous evaluation (Zhang & Duh, TACL 2020)
- Exploring more tasks (Shinozaki, Watanabe, & Duh, Neuro-Evolution 2020)
- Alternative frameworks: model pruning & distillation (Gordon & Duh, WNGT 2020)

Note: hardware/software improvements also affect speed and size, and are orthogonal to this research.

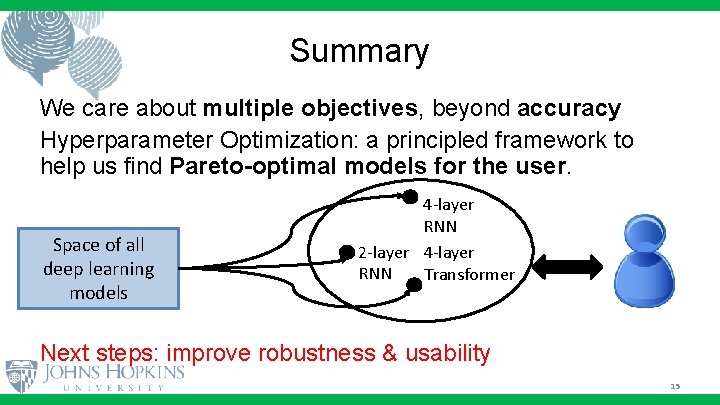

Summary
- We care about multiple objectives, beyond accuracy
- Hyperparameter Optimization: a principled framework to help us find Pareto-optimal models for the user
- Next steps: improve robustness & usability

[Diagram: Space of all deep learning models, e.g., 4-layer RNN, 2-layer RNN, 4-layer Transformer]

Questions? Comments?

Additional Figures and Examples

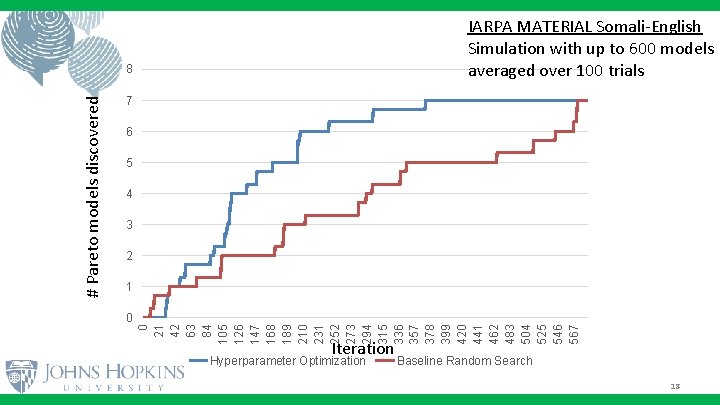

[Figure: IARPA MATERIAL Somali-English, simulation with up to 600 models averaged over 100 trials. x-axis: iteration; y-axis: # Pareto models discovered; curves: Hyperparameter Optimization vs. Baseline Random Search]

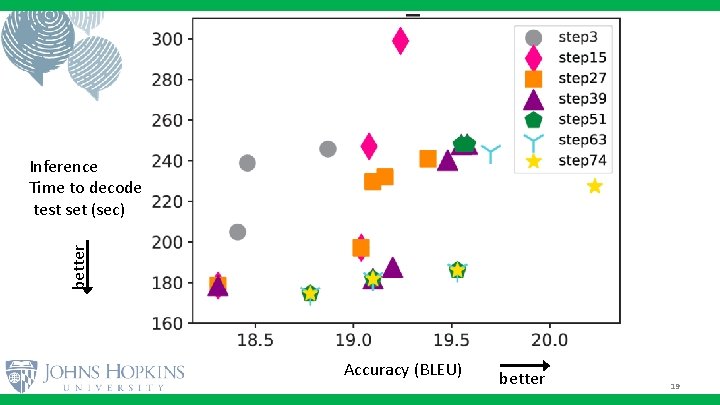

[Figure: Accuracy (BLEU) vs. inference time to decode the test set (sec), with the "better" direction marked on each axis]

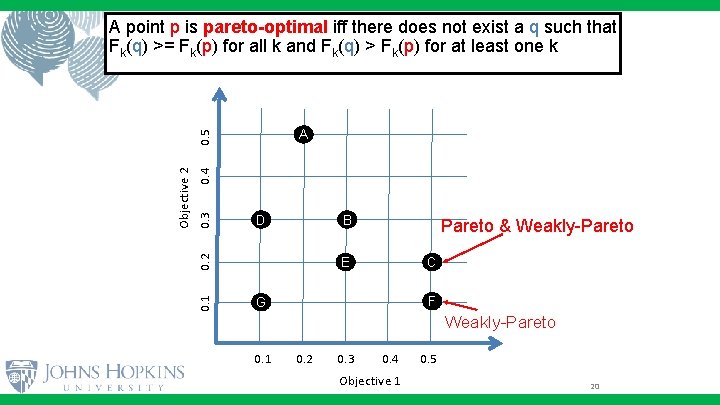

A point $p$ is Pareto-optimal iff there does not exist a $q$ such that $F_k(q) \ge F_k(p)$ for all $k$ and $F_k(q) > F_k(p)$ for at least one $k$.

[Figure: points A through G plotted by Objective 1 (x-axis) vs. Objective 2 (y-axis), with points labeled "Pareto & Weakly-Pareto" and "Weakly-Pareto"]
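The definition above can be checked directly on the five machine translation models from the earlier example table. This sketch negates size so that every objective $F_k$ is maximized, and contrasts it with the standard weak notion (no $q$ is strictly better in every objective). The function names are hypothetical; the numbers are copied from the table.

```python
# (BLEU, sentences/sec, -MB) from the machine translation example table.
# Size is negated so that every objective F_k is maximized.
MODELS = {
    "Model 1": (20.3, 17, -160),
    "Model 2": (19.7, 19, -85),
    "Model 3": (19.4, 8, -50),
    "Model 4": (20.2, 9, -80),
    "Model 5": (19.5, 7, -80),
}


def dominates(q, p):
    """F_k(q) >= F_k(p) for all k, and F_k(q) > F_k(p) for at least one k."""
    return all(a >= b for a, b in zip(q, p)) and any(a > b for a, b in zip(q, p))


def strictly_better_everywhere(q, p):
    """F_k(q) > F_k(p) for all k."""
    return all(a > b for a, b in zip(q, p))


def pareto_optimal(models):
    return sorted(n for n, p in models.items()
                  if not any(dominates(q, p) for q in models.values()))


def weakly_pareto_optimal(models):
    return sorted(n for n, p in models.items()
                  if not any(strictly_better_everywhere(q, p) for q in models.values()))


print(pareto_optimal(MODELS))         # ['Model 1', 'Model 2', 'Model 3', 'Model 4']
print(weakly_pareto_optimal(MODELS))  # ['Model 1', 'Model 2', 'Model 3', 'Model 4', 'Model 5']
```

Model 4 dominates Model 5 (higher BLEU, higher speed, same size), so Model 5 is not Pareto-optimal. No model is strictly better than Model 5 in all three objectives, however, so it is only weakly Pareto-optimal. Comparing a point with itself is harmless here, since neither test can hold for $q = p$.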
