Brief to Quality of Service and Experience DWG

Development of a Data and Services Quality Model

I Burnell

June 2021 © Crown copyright 2013 Dstl OFFICIAL


Introduction

• The quality of services (and intrinsically of the data provided by those services) needs to be described and monitored so that the Spatial Data Infrastructure (SDI) can optimise its delivery of services.
• ‘Quality’ is an emerging subject with which Defence is relatively unfamiliar. There is a lack of consensus on the factors that contribute to spatial data and service quality, and therefore no common definition:
  – Web service quality is inconsistently defined and implementation specific.
  – Data quality definitions focus on the production process and have limited value in the context of use.
• There is no overarching policy covering quality guidance, metrics and implementation in the context of the SDI across the range of functions that affect quality, i.e. from the supply of data at one end through to search and discovery via web services at the other.

Approach

• Define quality use cases and requirements:
  – Create use cases and requirements based on stakeholder input.
  – Investigate prioritised use cases using current examples of best practice, identifying suitable methodologies and approaches from stakeholder and academic input.
• Create quality models:
  – Review current definitions of web service and data quality, and create a draft data quality model (DQM) and web service quality model (WSQM).
• Assess COTS tools and methodologies:
  – Assess the tool implementation planned under current work for service monitoring and optimisation (i.e. LogRhythm).
  – Identify and assess a relevant methodology for providing quality summaries to users (i.e. the Content Maturity Model).
  – Identify and assess COTS data management tools against key features derived from the DQM and requirements stages.

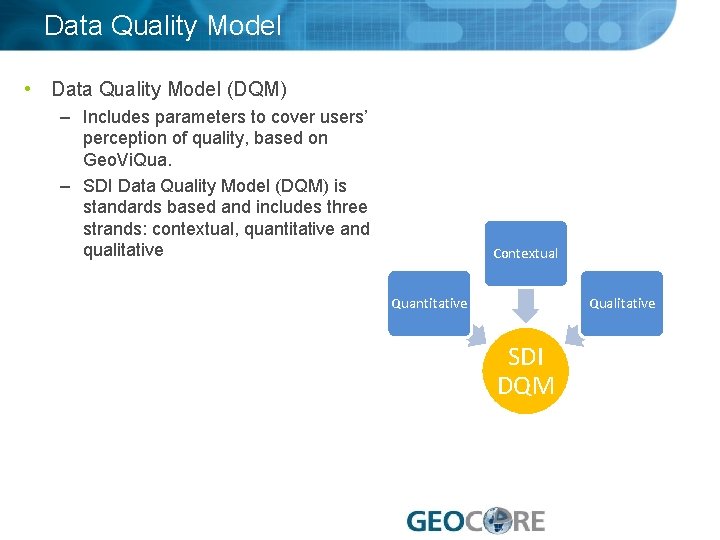

Data Quality Model

• The SDI Data Quality Model (DQM) is standards based and includes three strands: contextual, quantitative and qualitative.
• It includes parameters that cover users’ perception of quality, based on GeoViQua.

[Figure: SDI DQM, showing its contextual, quantitative and qualitative strands]

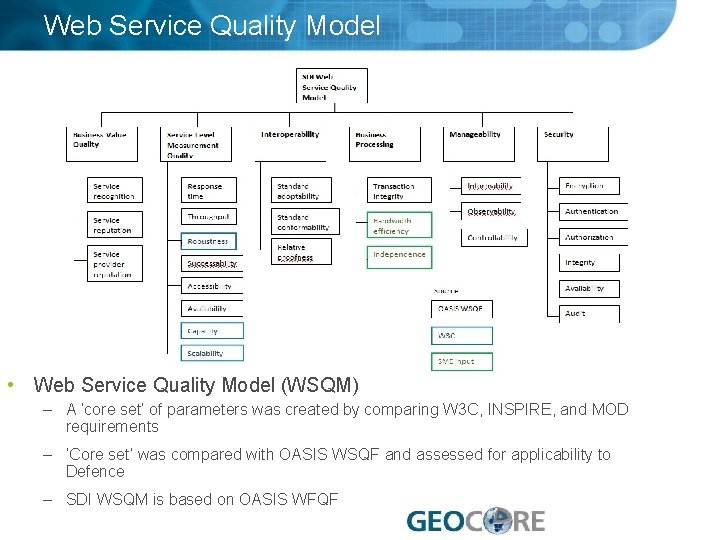
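The three strands of the DQM can be illustrated with a minimal Python sketch of a per-dataset quality record. The class name `DatasetQualityRecord`, its fields and the 1 to 5 rating scale are hypothetical and are not taken from the SDI DQM; the sketch only shows contextual, quantitative and qualitative information held side by side for one dataset.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DatasetQualityRecord:
    """Hypothetical quality record for one dataset, grouped by DQM strand."""

    dataset_id: str
    # Contextual strand: information about origin and intended use
    # (illustrative keys only, e.g. "lineage", "purpose").
    contextual: Dict[str, str] = field(default_factory=dict)
    # Quantitative strand: measured values, e.g. ISO 19157-style rates in percent.
    quantitative: Dict[str, float] = field(default_factory=dict)
    # Qualitative strand: user feedback, here assumed to be ratings from 1 to 5.
    user_ratings: List[int] = field(default_factory=list)

    def add_rating(self, rating: int) -> None:
        """Record one user rating, rejecting values outside the 1-5 scale."""
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.user_ratings.append(rating)

    def mean_rating(self) -> Optional[float]:
        """Average user rating, or None if no feedback has been given yet."""
        if not self.user_ratings:
            return None
        return sum(self.user_ratings) / len(self.user_ratings)

    def strands_populated(self) -> Dict[str, bool]:
        """Report which of the three strands currently hold any information."""
        return {
            "contextual": bool(self.contextual),
            "quantitative": bool(self.quantitative),
            "qualitative": bool(self.user_ratings),
        }
```

For example, a record created with a lineage note and a measured rate of missing items reports the qualitative strand as empty until the first user rating is added. Keeping the strands as separate fields makes gaps in the qualitative strand visible, which matters because that strand depends on feedback mechanisms being in place.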

Web Service Quality Model

• A ‘core set’ of web service quality parameters was created by comparing W3C, INSPIRE and MOD requirements.
• The core set was compared with OASIS WSQF and assessed for applicability to Defence.
• The SDI Web Service Quality Model (WSQM) is based on OASIS WSQF.

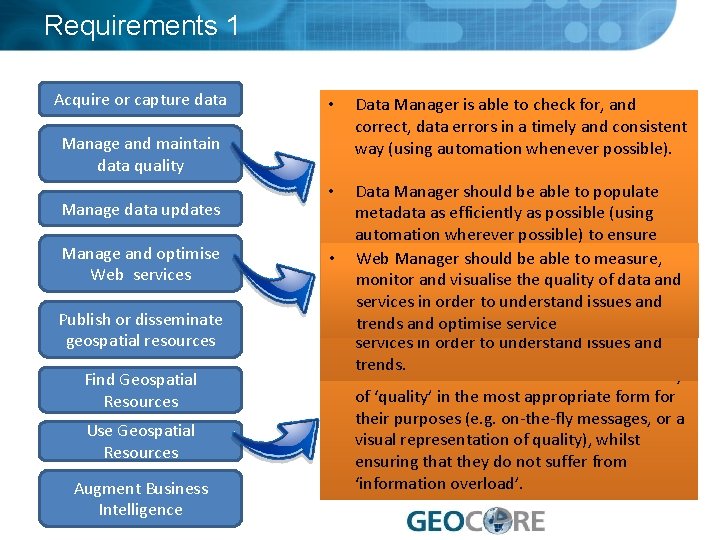

Requirements 1

Use cases: Acquire or capture data; Manage and maintain data quality; Manage data updates; Manage and optimise web services; Publish or disseminate geospatial resources; Find geospatial resources; Use geospatial resources; Augment business intelligence.

• Data Manager should be able to check for, and correct, data errors in a timely and consistent way (using automation whenever possible).
• Data Manager should be able to populate metadata as efficiently as possible (using automation wherever possible) to ensure completeness and quality of metadata.
• Data Manager should be able to measure, monitor and assess the quality of data and services in order to understand issues and trends.
• Web Manager should be able to measure, monitor and visualise the quality of data and services in order to understand issues and trends, and to optimise the service.
• Consumer should be able to give qualitative dataset feedback (e.g. ratings on suitability) in order to augment subsequent user assessments of ‘quality’ during search and discovery.
• Consumer should be able to view a summary of ‘quality’ in the form most appropriate for their purposes (e.g. on-the-fly messages or a visual representation of quality), without suffering from ‘information overload’.

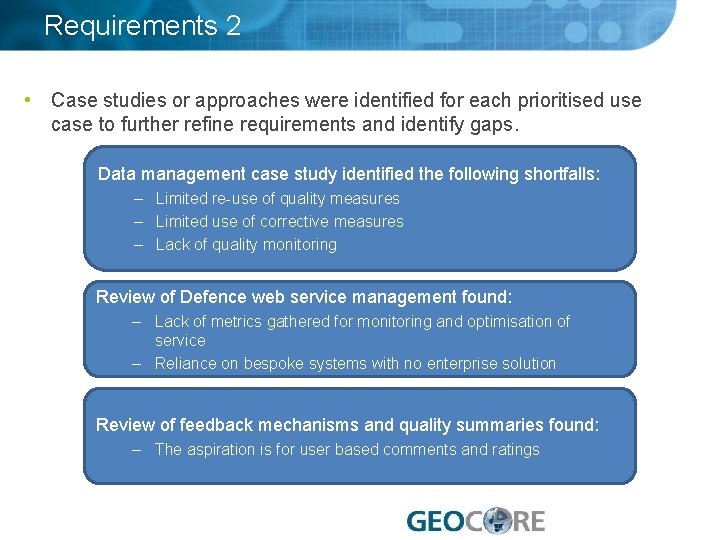

Requirements 2

• Case studies or approaches were identified for each prioritised use case to further refine requirements and identify gaps.
• The data management case study identified the following shortfalls:
  – Limited re-use of quality measures
  – Limited use of corrective measures
  – Lack of quality monitoring
• Review of Defence web service management found:
  – Lack of metrics gathered for monitoring and optimisation of services
  – Reliance on bespoke systems, with no enterprise solution
• Review of feedback mechanisms and quality summaries found:
  – The aspiration is for user-based comments and ratings

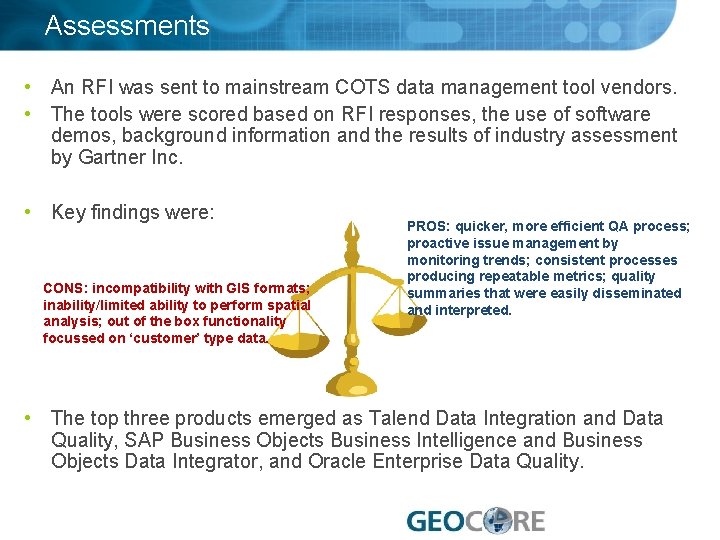

Assessments

• An RFI was sent to mainstream COTS data management tool vendors.
• The tools were scored based on RFI responses, software demos, background information and the results of industry assessment by Gartner Inc.
• Key findings were:
  – Cons: incompatibility with GIS formats; inability, or limited ability, to perform spatial analysis; out-of-the-box functionality focussed on ‘customer’ type data.
  – Pros: a quicker, more efficient QA process; proactive issue management by monitoring trends; consistent processes producing repeatable metrics; quality summaries that are easily disseminated and interpreted.
• The top three products were:
  – Talend Data Integration and Data Quality
  – SAP Business Objects Business Intelligence and Business Objects Data Integrator
  – Oracle Enterprise Data Quality
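As an illustration of how per-criterion scores from RFI responses might be combined, the sketch below computes a normalised weighted total for each tool and ranks the tools. The function names, criteria names (loosely based on the pros and cons above), weights and scoring scale are all hypothetical; the scoring scheme actually used in the assessment is not described in this brief.

```python
from typing import Dict, List, Tuple


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Combine per-criterion scores into one weighted total.

    Weights are normalised by their sum, so they need not add up to 1.
    Every weighted criterion must have a score.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"no score for criteria: {sorted(missing)}")
    return sum(scores[c] * w for c, w in weights.items()) / total_weight


def rank_tools(
    tool_scores: Dict[str, Dict[str, float]], weights: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Return (tool, weighted score) pairs, highest score first."""
    ranked = [(tool, weighted_score(s, weights)) for tool, s in tool_scores.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)
```

With illustrative weights of 2 for `gis_format_support` and `spatial_analysis` and 1 for `qa_workflow` and `reporting`, a tool strong on QA workflow but weak on GIS support can rank below a more balanced tool. Making the weights explicit documents how much the GIS-related shortfalls were allowed to count against otherwise capable products.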

Conclusions

User feedback:
• Qualitative parameters, such as ‘user feedback’, are seen as highly important but are not included in the quality specifications published by standards bodies.
• The Content Maturity Model (CMM) describes an ideal methodology for communicating data quality to decision makers, as it combines producer-centric and user-centric input. However, it relies on user feedback mechanisms being in place.

Data quality management:
• ISO 19157 describes metrics for quantitative parameters, but a common or minimum set still needs to be defined, and that choice will affect which tool is required to capture them.
• The COTS data quality management tools assessed all provide advanced quality assurance techniques and can provide additional metrics to support the SDI DQM.
• They all support quicker, more efficient quality management workflows and the ability to communicate and monitor results effectively. However, ‘quick fixes’ could be provided using existing or free tools.
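ISO 19157 treats completeness in terms of omission and commission. The sketch below computes two measures of that kind, a rate of missing items and a rate of excess items, each relative to the number of items that should be present. It assumes every feature carries a unique identifier and compares sets, so duplicated features (another form of commission) are not counted. The function name is hypothetical, and the exact measure definitions should be checked against the standard before use.

```python
from typing import Iterable, Tuple


def completeness_rates(
    expected_ids: Iterable[str], dataset_ids: Iterable[str]
) -> Tuple[float, float]:
    """Return (rate of missing items, rate of excess items) as percentages.

    Both rates are relative to the number of items that should be present
    (the universe of discourse). Identifiers are compared as sets, so
    duplicate features in the dataset are ignored.
    """
    expected = set(expected_ids)
    present = set(dataset_ids)
    if not expected:
        raise ValueError("the universe of discourse must contain at least one item")
    missing = expected - present  # omission: should be present but is not
    excess = present - expected   # commission: present but should not be
    n = len(expected)
    return 100.0 * len(missing) / n, 100.0 * len(excess) / n
```

For instance, if features a, b, c and d should be present and the dataset holds a, b, c, x and y, the rate of missing items is 25% and the rate of excess items is 50%. Agreeing a minimum set of measures like these, with fixed definitions, is what would let different tools produce comparable numbers.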

Conclusions

Web service optimisation:
• Definitions of quality parameters for web services are highly variable, as are the methods used to capture quantitative metrics (e.g. response time). These need to be defined in common implementation guidance.
• The WSQM parameters cover the functional and non-functional requirements of a service; their definitions and example metrics should form the basis for future web service requirement documents.
• Implementing LogRhythm software as part of the SDI supports the provision of SDI WSQM metrics, with the exception of the ‘business value quality’ parameter and configuration management within the ‘manageability quality’ parameter.
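The need for a common definition of metrics such as response time can be made concrete with a small sketch. Here response time is assumed, hypothetically, to be measured in milliseconds from request sent to last byte received, and the 95th percentile uses the nearest-rank method. Other percentile methods (for example linear interpolation) give different values on the same samples, which is exactly the kind of inconsistency that common implementation guidance should remove. The function names are illustrative only.

```python
import math
from statistics import mean, median
from typing import Dict, Sequence


def percentile_nearest_rank(samples: Sequence[float], p: float) -> float:
    """p-th percentile using the nearest-rank method (0 < p <= 100)."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def response_time_summary(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Summarise response times in milliseconds.

    Assumption (hypothetical): each sample is measured from the moment the
    request is sent to the moment the last byte of the response is received.
    """
    return {
        "count": len(samples_ms),
        "mean_ms": mean(samples_ms),
        "median_ms": median(samples_ms),
        "p95_ms": percentile_nearest_rank(samples_ms, 95),
        "max_ms": max(samples_ms),
    }
```

For twenty samples of 1 to 20 ms, this summary reports a mean and median of 10.5 ms and a 95th percentile of 19 ms. Publishing the measurement boundaries and percentile method alongside the number is what makes values from different services comparable.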

Questions
