Bridging the Semantic Gap with SQL Query Logs

Bridging the Semantic Gap with SQL Query Logs in Natural Language Interfaces to Databases Christopher Baik, H. V. Jagadish, Yunyao Li 2019 IEEE 35 TH INTERNATIONAL CONFERENCE ON DATA ENGINEERING (ICDE) 2019/10/11 yama

Author Christopher Baik H. V. Jagadish Yunyao Li University of Michigan, Ann Arbor, MI, USA IBM Research - Almaden, San Jose, CA, USA 1

1. INTRODUCTION Today’s technology-rich world is overwhelmingly dependent on database systems. While relational databases have been around for decades, query languages to access databases, such as SQL, are unlikely to ever become common knowledge for the average end-user. A major goal in this area is to provide a means for users to query databases using everyday language via a natural language interface to database (NLIDB). 2

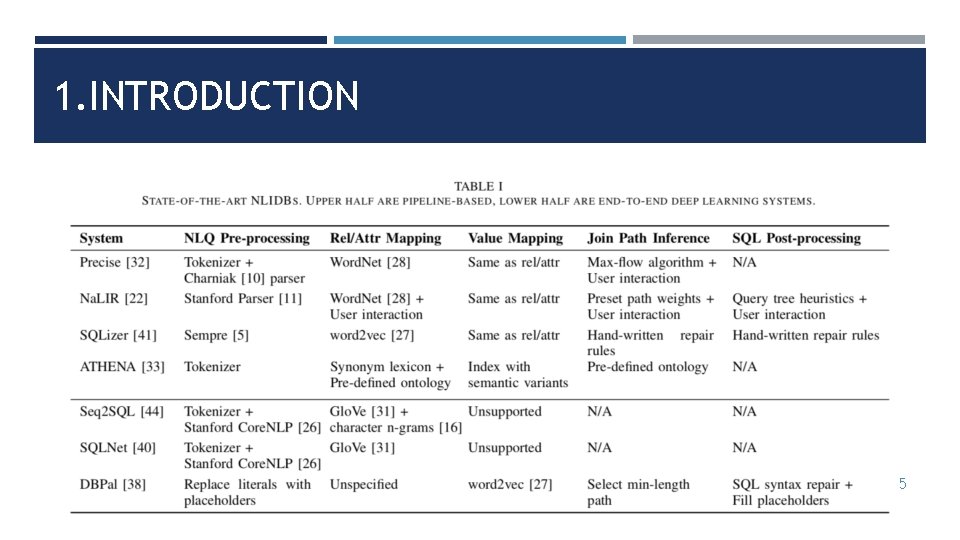

1. INTRODUCTION The task of an NLIDB has been primarily modeled as the problem of translating a natural language query (NLQ) into a SQL query. Two architectural approaches to solve the task: 1. the pipeline approach of converting an NLQ into intermediate representations then mapping these representations to SQL 2. the deep learning approach of using an end-to-end neural network to perform the translation 3

1. INTRODUCTION Two specific problems when translating NLQ to SQL: 1. keyword mapping individual keywords in the original NLQ to database elements 2. join path inference the process of selecting the relations and join conditions in the FROM clause of the final SQL query 4

1. INTRODUCTION 5

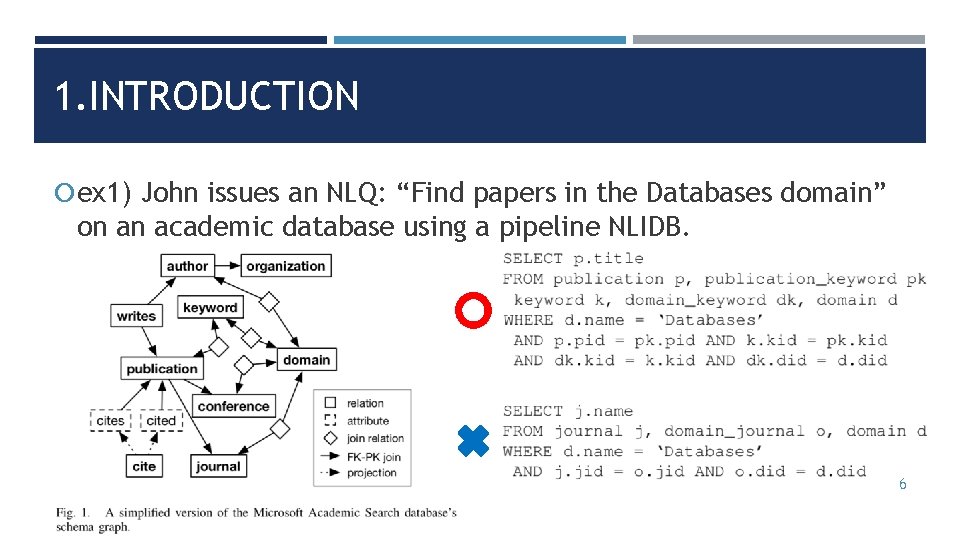

1. INTRODUCTION ex 1) John issues an NLQ: “Find papers in the Databases domain” on an academic database using a pipeline NLIDB. 6

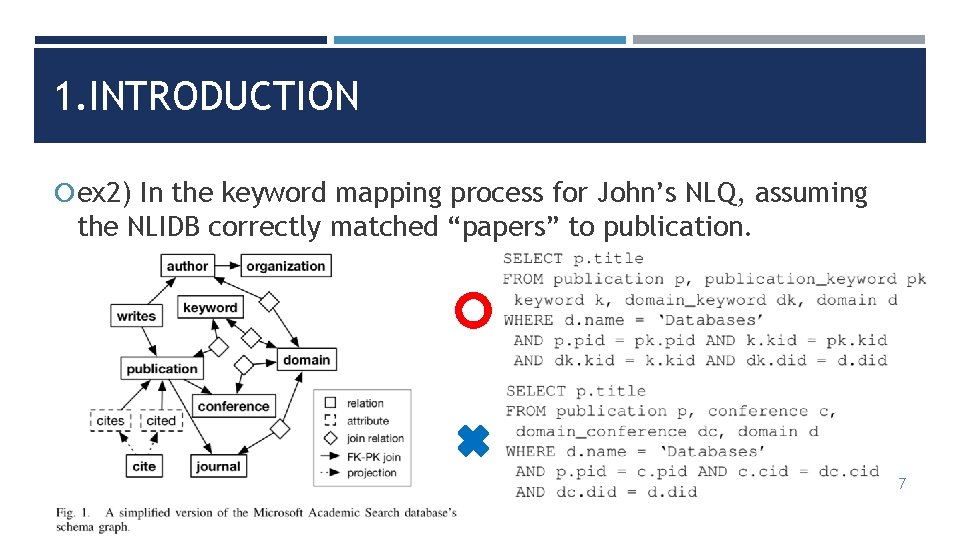

1. INTRODUCTION ex 2) In the keyword mapping process for John’s NLQ, assuming the NLIDB correctly matched “papers” to publication. 7

1. INTRODUCTION TEMPLAR: a system which augments existing pipeline-based NLIDBs with SQL query log information Three technical challenges: 1. selectively activate information in the SQL log for NLQ-SQL translation 2. allow the generation of new SQL queries not in the log 3. gracefully integrate log information with existing techniques 8

2. RELATED WORK natural language interfaces to databases (NLIDB) the pipeline-based approach the deep learning approach the need for a large set of NLQ to SQL pairs for each schema keyword search keyword mapping and join path inference problems using query logs 9

3. OVERVIEW 1. Preliminaries a schema graph: Gs = (V, E, w) a join path: (Vj, Ej, Vt) 10

3. OVERVIEW 2. Definitions a query fragment: c = (χ, τ) ex) SELECT t. a FROM table 1 t, table 2 u WHERE t. b = 15 AND t. id = u. id (t. a, SELECT), (table 1, FROM), (table 2, FROM), (t. b = 15, WHERE) a query fragment mapping: m = (s, c, σ) a configuration φ(S): a selection of exactly one query fragment mapping (sk, ck, σk) 11

3. OVERVIEW 3. Problem Definitions keyword mapping: Φ = MAPKEYWORDS(D, S, M) The goal of the problem is to return a list of configurations Φ ordered by likelihood. join path inference: J = INFERJOINS(Gs, BD) The goal is to return a list of join paths J on Gs ranked from most to least likely. 12

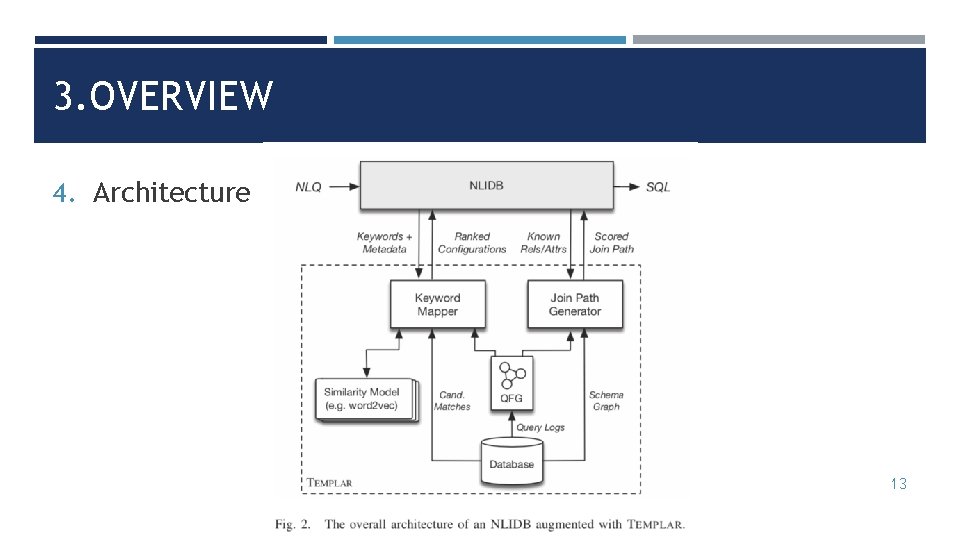

3. OVERVIEW 4. Architecture 13

3. OVERVIEW 5. NLIDB Prerequisites It must be able to parse the NLQ into keywords. The categories of metadata we expect as input in MAPKEYWORDS must be all obtainable using existing parser technology. 14

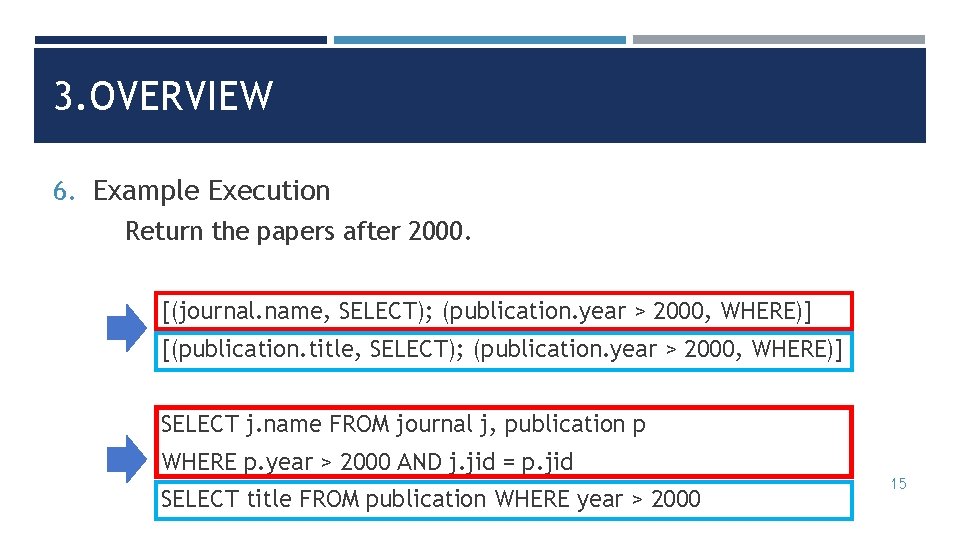

3. OVERVIEW 6. Example Execution Return the papers after 2000. [(journal. name, SELECT); (publication. year > 2000, WHERE)] [(publication. title, SELECT); (publication. year > 2000, WHERE)] SELECT j. name FROM journal j, publication p WHERE p. year > 2000 AND j. jid = p. jid SELECT title FROM publication WHERE year > 2000 15

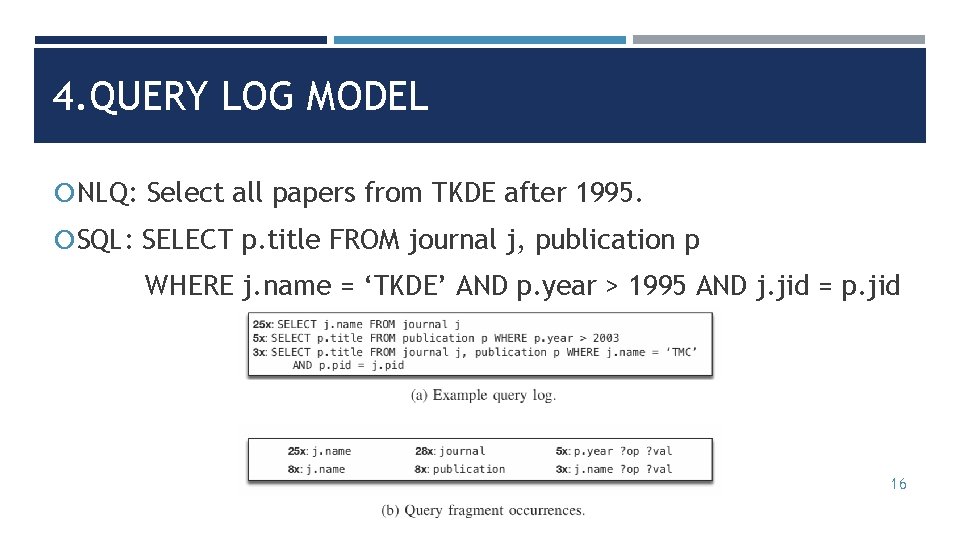

4. QUERY LOG MODEL NLQ: Select all papers from TKDE after 1995. SQL: SELECT p. title FROM journal j, publication p WHERE j. name = ‘TKDE’ AND p. year > 1995 AND j. jid = p. jid 16

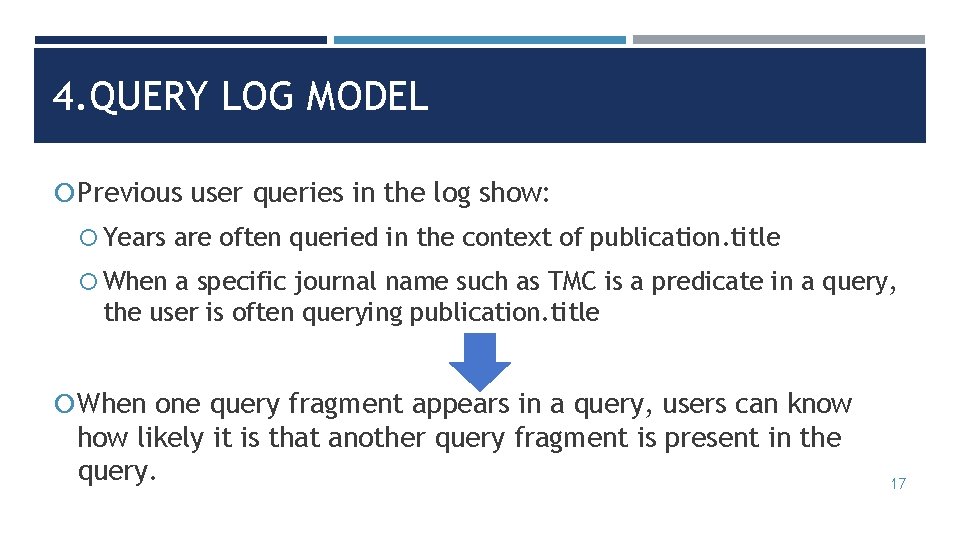

4. QUERY LOG MODEL Previous user queries in the log show: Years are often queried in the context of publication. title When a specific journal name such as TMC is a predicate in a query, the user is often querying publication. title When one query fragment appears in a query, users can know how likely it is that another query fragment is present in the query. 17

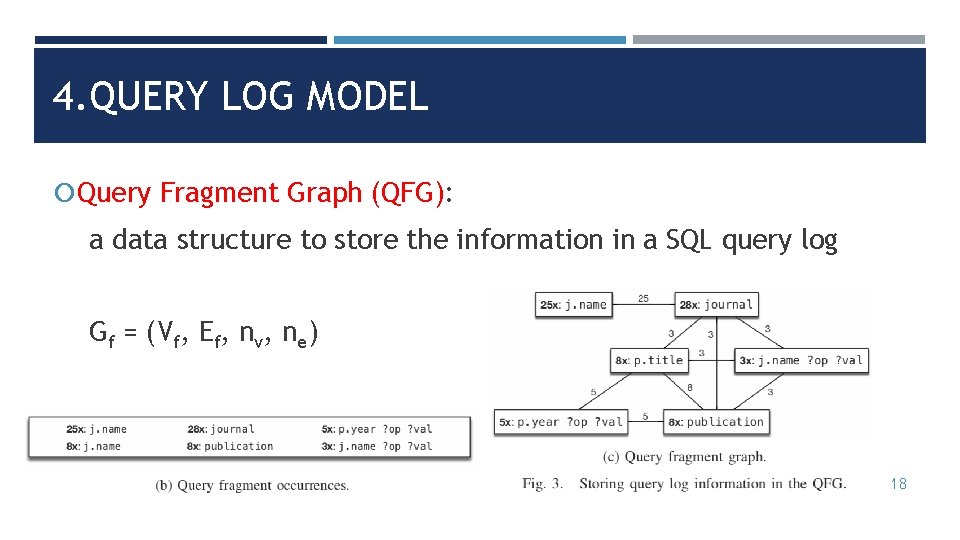

4. QUERY LOG MODEL Query Fragment Graph (QFG): a data structure to store the information in a SQL query log Gf = (Vf, Ef, nv, ne) 18

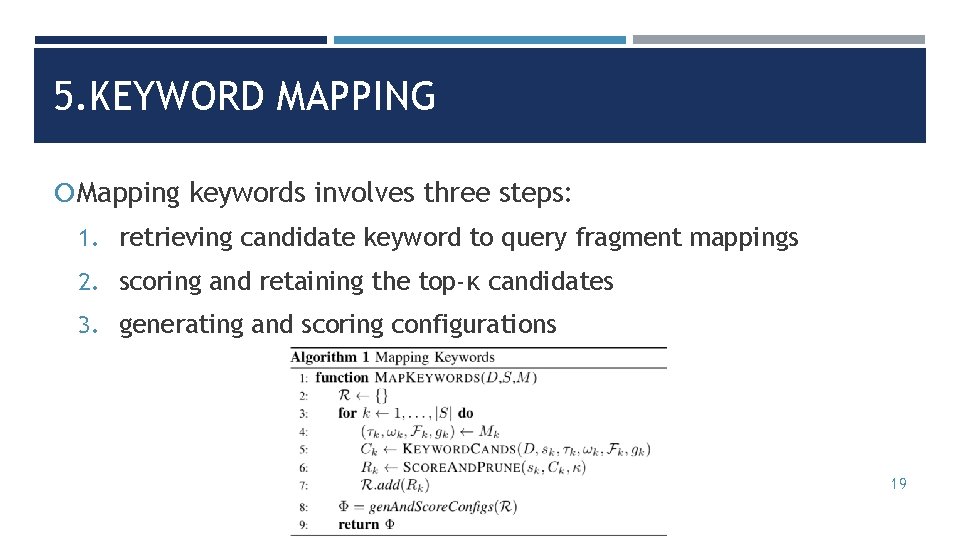

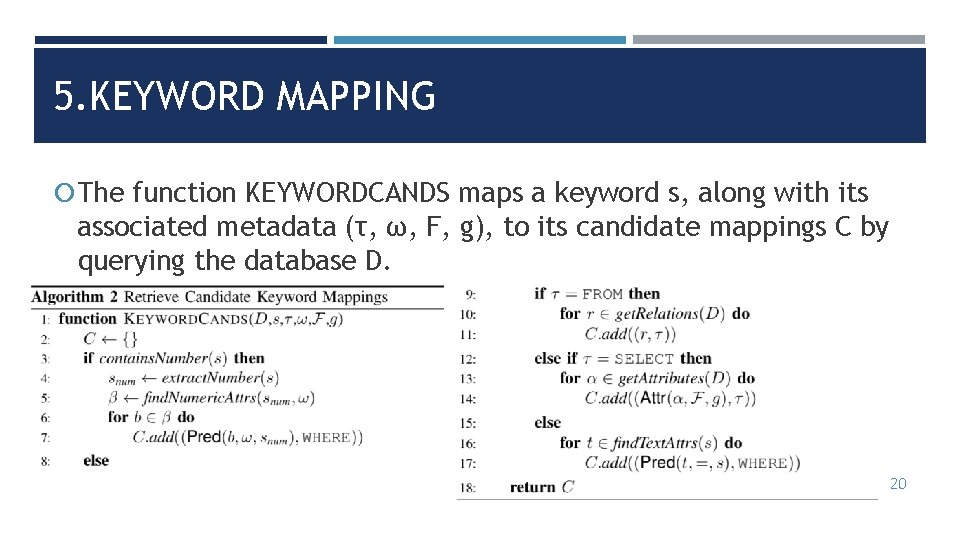

5. KEYWORD MAPPING Mapping keywords involves three steps: 1. retrieving candidate keyword to query fragment mappings 2. scoring and retaining the top-κ candidates 3. generating and scoring configurations 19

5. KEYWORD MAPPING The function KEYWORDCANDS maps a keyword s, along with its associated metadata (τ, ω, F, g), to its candidate mappings C by querying the database D. 20

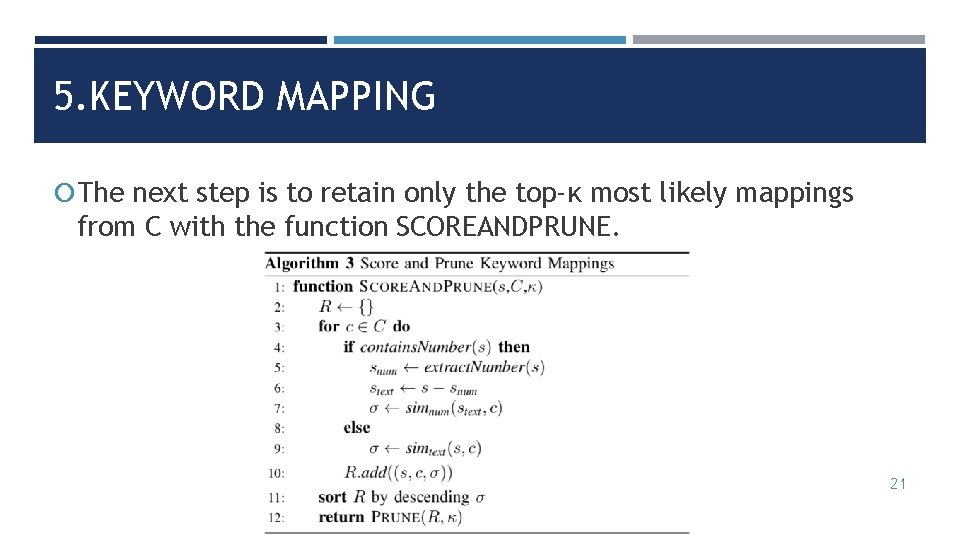

5. KEYWORD MAPPING The next step is to retain only the top-κ most likely mappings from C with the function SCOREANDPRUNE. 21

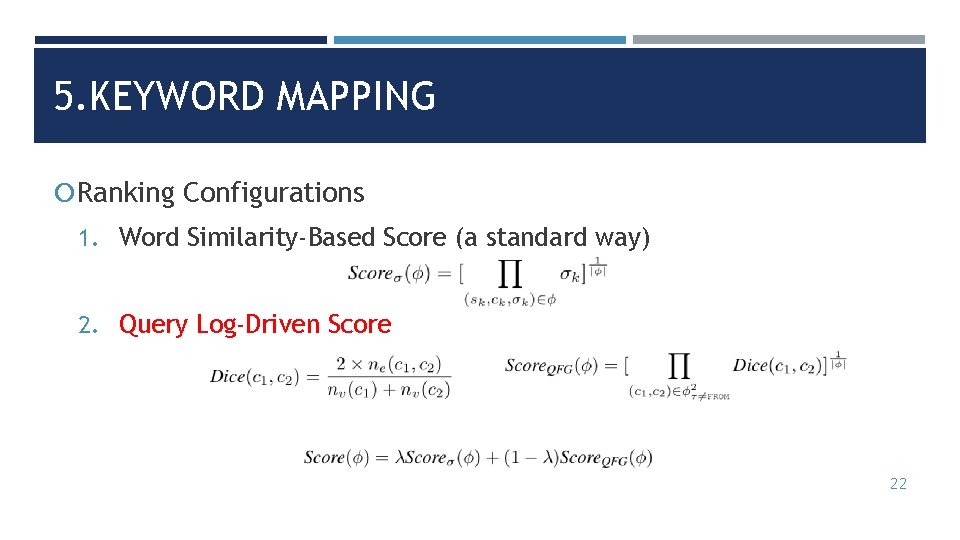

5. KEYWORD MAPPING Ranking Configurations 1. Word Similarity-Based Score (a standard way) 2. Query Log-Driven Score 22

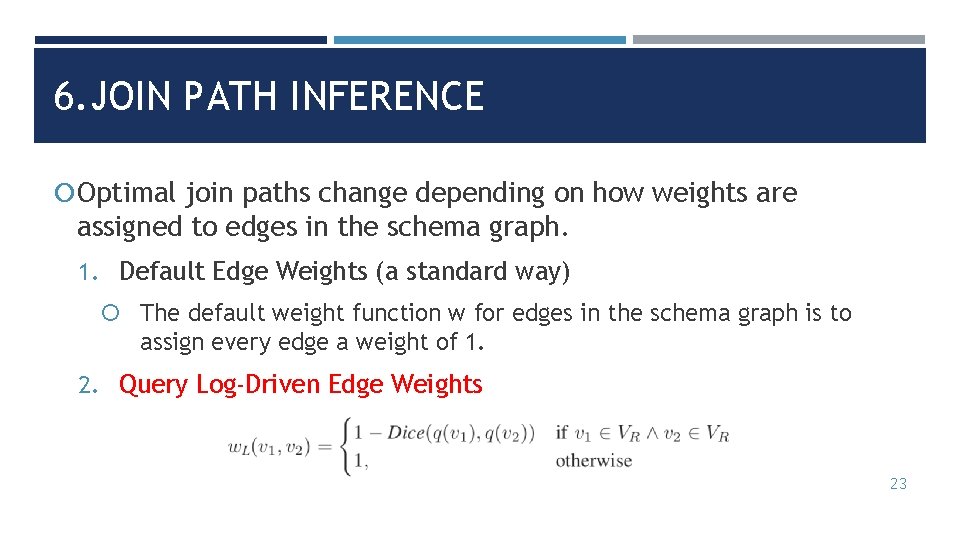

6. JOIN PATH INFERENCE Optimal join paths change depending on how weights are assigned to edges in the schema graph. 1. Default Edge Weights (a standard way) The default weight function w for edges in the schema graph is to assign every edge a weight of 1. 2. Query Log-Driven Edge Weights 23

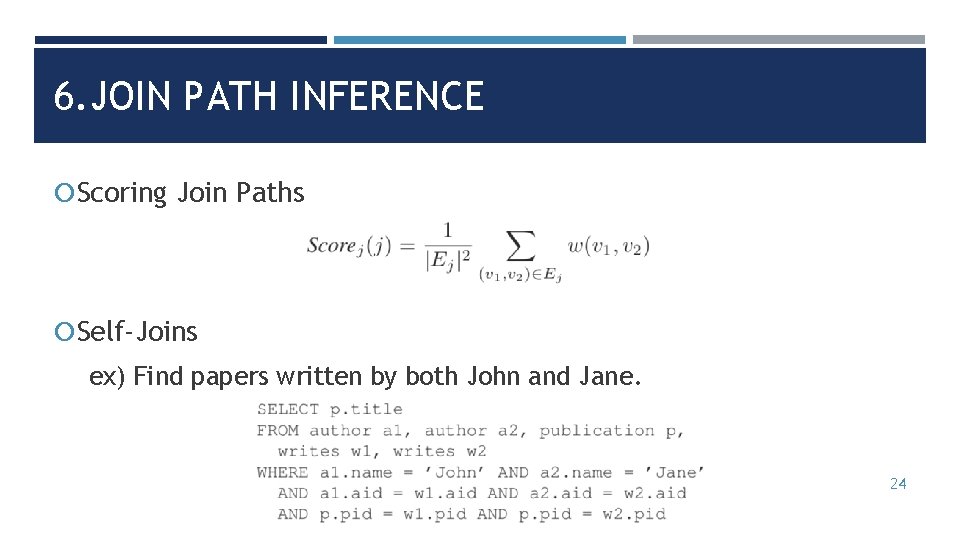

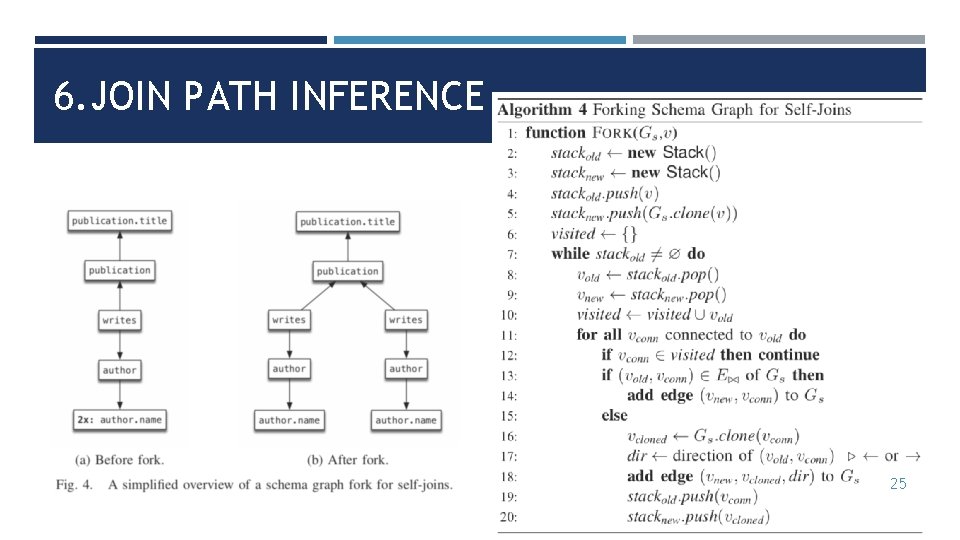

6. JOIN PATH INFERENCE Scoring Join Paths Self-Joins ex) Find papers written by both John and Jane. 24

6. JOIN PATH INFERENCE 25

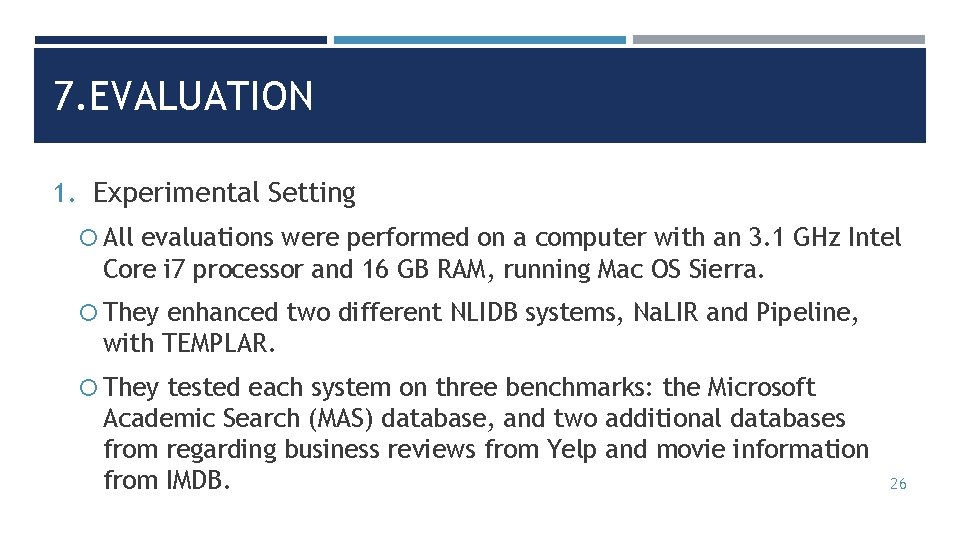

7. EVALUATION 1. Experimental Setting All evaluations were performed on a computer with an 3. 1 GHz Intel Core i 7 processor and 16 GB RAM, running Mac OS Sierra. They enhanced two different NLIDB systems, Na. LIR and Pipeline, with TEMPLAR. They tested each system on three benchmarks: the Microsoft Academic Search (MAS) database, and two additional databases from regarding business reviews from Yelp and movie information from IMDB. 26

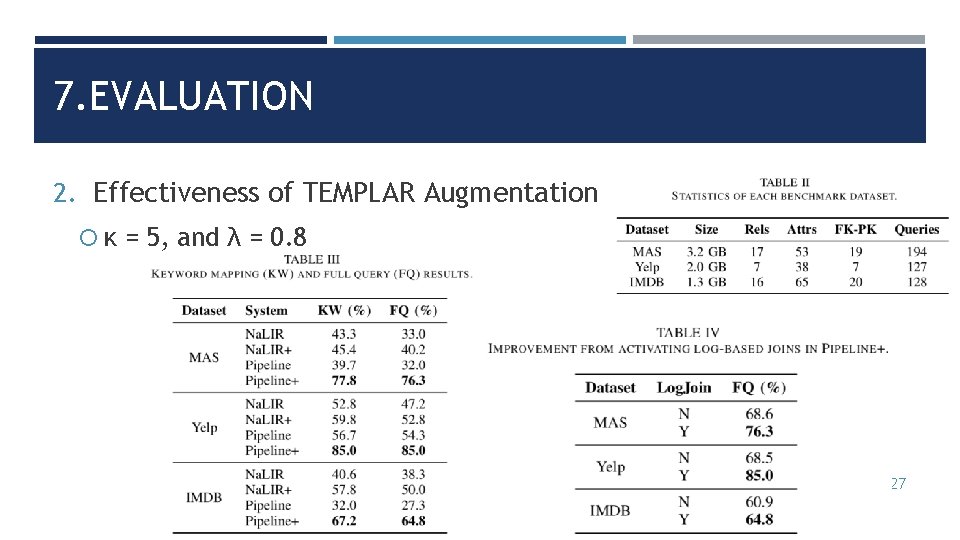

7. EVALUATION 2. Effectiveness of TEMPLAR Augmentation κ = 5, and λ = 0. 8 27

7. EVALUATION 3. Error Analysis Augmenting Pipeline with TEMPLAR had a more dramatic effect than with Na. LIR because it was given perfectly parsed keywords and metadata as input. While Na. LIR is designed to be able to return the relevant metadata, in practice, the system’s parser had trouble digesting the correct metadata from NLQs with explicit relation references. 28

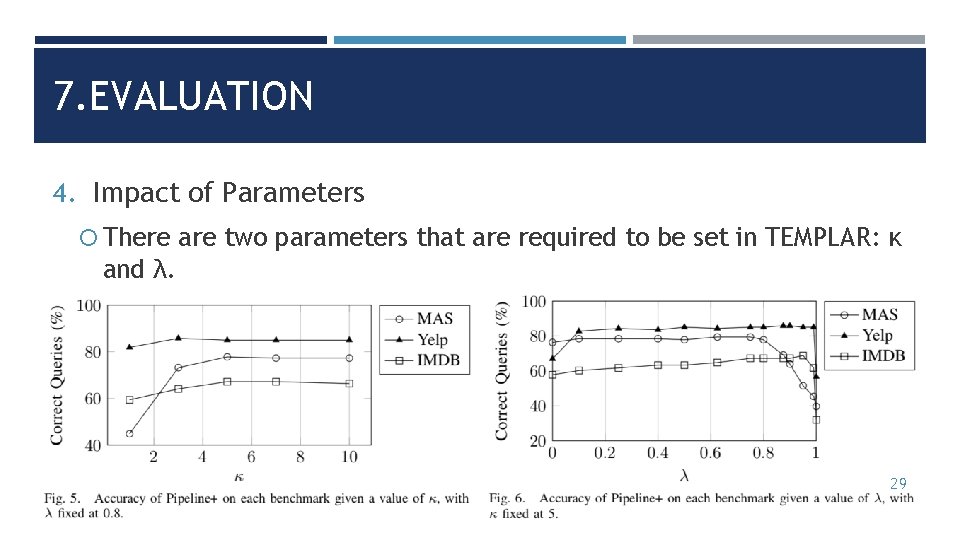

7. EVALUATION 4. Impact of Parameters There are two parameters that are required to be set in TEMPLAR: κ and λ. 29

8. CONCLUSION TEMPLAR: a system that enhances the performance of existing NLIDBs using SQL query logs Query Fragment Graph keyword mapping and join path inference 30

- Slides: 31