BOHB: Robust and Efficient Hyperparameter Optimization at Scale

Frank Hutter
Machine Learning Lab, Department of Computer Science, University of Freiburg, Germany
fh@cs.uni-freiburg.de

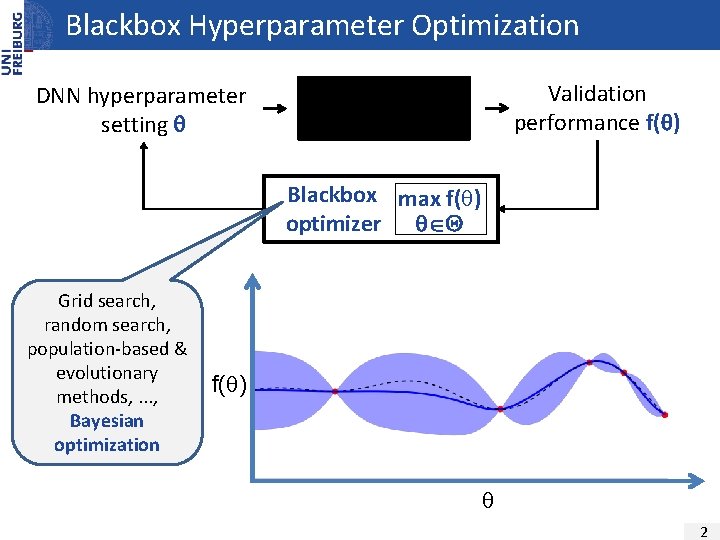

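Blackbox Hyperparameter Optimization
A blackbox optimizer searches for $\max_{\lambda} f(\lambda)$. It proposes a DNN hyperparameter setting $\lambda$, the DNN is trained and validated with that setting, and the resulting validation performance $f(\lambda)$ is returned to the optimizer.
• Blackbox optimizers: grid search, random search, population-based and evolutionary methods, ..., Bayesian optimization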
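As a point of reference for the blackbox setting, here is a minimal random-search sketch. The objective `toy_validation_accuracy` and the sampler `sample_config` are hypothetical stand-ins; in practice each call to `f` would be a full "train DNN and validate it" run, which is what makes this loop expensive.

```python
import random

def random_search(f, sample_config, n_evals, seed=0):
    """Blackbox maximization by random search: sample, evaluate, keep the best."""
    rng = random.Random(seed)
    best_lam, best_val = None, float("-inf")
    for _ in range(n_evals):
        lam = sample_config(rng)   # propose a hyperparameter setting
        val = f(lam)               # in practice: train a DNN and validate it
        if val > best_val:
            best_lam, best_val = lam, val
    return best_lam, best_val

# Hypothetical stand-in for the blackbox: a smooth function of a log10
# learning rate that peaks at log_lr = -3.
def toy_validation_accuracy(lam):
    return 1.0 - 0.05 * (lam["log_lr"] + 3.0) ** 2

def sample_config(rng):
    return {"log_lr": rng.uniform(-6.0, 0.0)}

best, acc = random_search(toy_validation_accuracy, sample_config, n_evals=200)
print(best, acc)
```

The optimizer only ever sees `(lam, f(lam))` pairs, so any of the listed methods can be swapped in behind the same interface.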

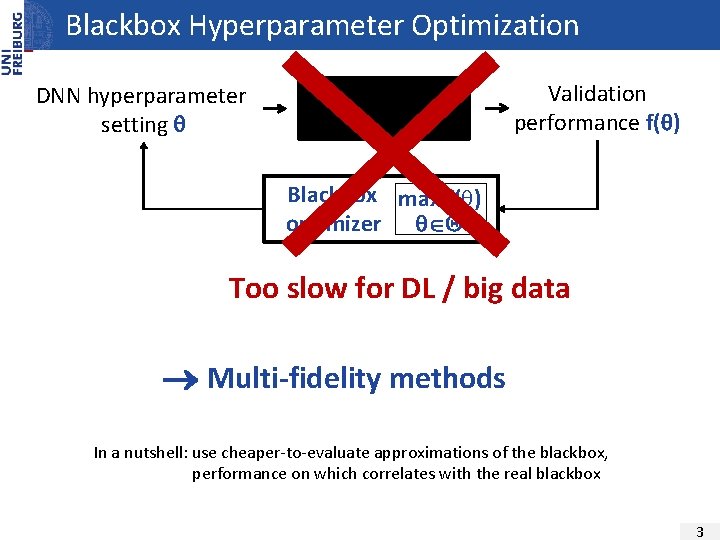

Blackbox Hyperparameter Optimization (continued)
• Each evaluation of $f(\lambda)$ requires training and validating a DNN, which is too slow for deep learning and big data
• Multi-fidelity methods, in a nutshell: use cheaper-to-evaluate approximations of the blackbox whose performance correlates with that of the real blackbox
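The correlation requirement can be checked directly. The sketch below assumes a hypothetical toy objective `toy_loss(lam, b, rng)` whose noise shrinks as the budget `b` (e.g. epochs or data fraction) grows. It scores the same configurations on a cheap and on a large budget and computes the Spearman rank correlation between the two; a value close to 1 means the cheap approximation ranks configurations much like the real blackbox does.

```python
import math
import random

def ranks(values):
    """Rank positions (0 = smallest); assumes no ties, as for continuous scores."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for pos, i in enumerate(order):
        r[i] = pos
    return r

def spearman(x, y):
    """Spearman rank correlation for tie-free data: 1 - 6*sum(d^2) / (n(n^2 - 1))."""
    n = len(x)
    rx, ry = ranks(x), ranks(y)
    d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))

# Hypothetical multi-fidelity toy: the true loss plus noise that shrinks with budget b.
def toy_loss(lam, b, rng):
    return (lam - 0.3) ** 2 + rng.gauss(0.0, 0.05 / math.sqrt(b))

rng = random.Random(1)
configs = [rng.uniform(0.0, 1.0) for _ in range(50)]
cheap = [toy_loss(lam, b=1, rng=rng) for lam in configs]
full = [toy_loss(lam, b=100, rng=rng) for lam in configs]
rho = spearman(cheap, full)
print(round(rho, 2))
```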

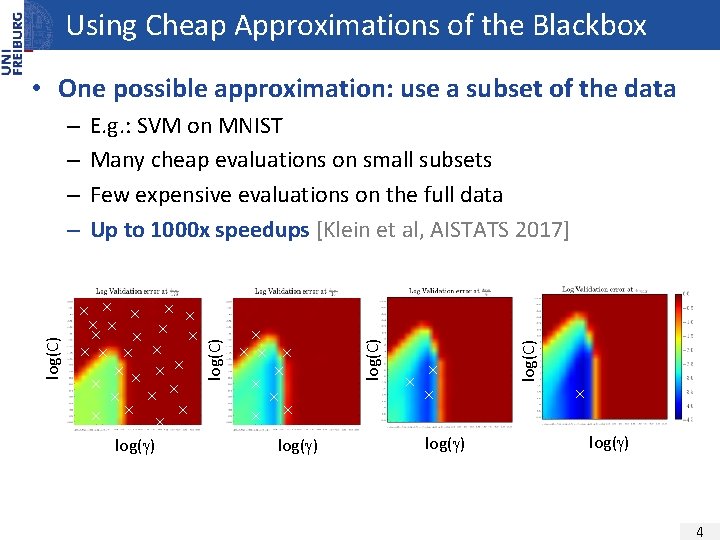

Using Cheap Approximations of the Blackbox
• One possible approximation: use a subset of the data
– E.g., SVM on MNIST [Figure axes: log(C), log(γ)]
– Many cheap evaluations on small subsets
– Few expensive evaluations on the full data
– Up to 1000x speedups [Klein et al, AISTATS 2017]

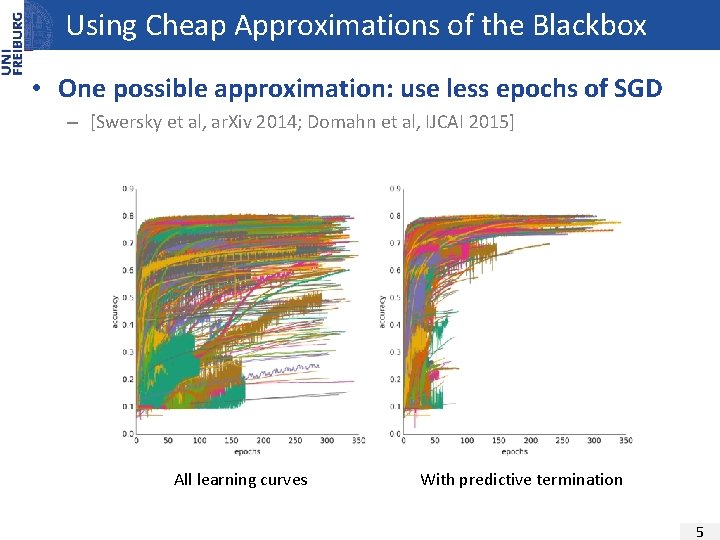

Using Cheap Approximations of the Blackbox
• One possible approximation: use fewer epochs of SGD
– [Swersky et al, arXiv 2014; Domhan et al, IJCAI 2015]
[Figure panels: all learning curves; with predictive termination]
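Predictive termination can be sketched as follows. This is not the method of Domhan et al., which combines several parametric learning-curve models; it swaps in a single power law, `loss(t) ≈ a * t**(-c)`, fitted by least squares in log-log space, and stops a run whose extrapolated final loss is worse than the best final loss seen so far. `fit_power_law` and `should_terminate` are hypothetical names, and a pure power law (which decays towards zero loss) is a deliberately crude assumption.

```python
import math

def fit_power_law(epochs, losses):
    """Least-squares fit of log(loss) = log(a) - c * log(t); returns (a, c)."""
    xs = [math.log(t) for t in epochs]
    ys = [math.log(v) for v in losses]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return math.exp(my - slope * mx), -slope

def should_terminate(epochs, losses, max_epochs, best_final_loss):
    """Stop if the extrapolated loss at max_epochs is worse than the best final loss so far."""
    a, c = fit_power_law(epochs, losses)
    return a * max_epochs ** (-c) > best_final_loss

# Hypothetical partial learning curve: validation loss after epochs 1..5,
# generated from an exact power law with a = 0.9, c = 0.5.
epochs = [1, 2, 3, 4, 5]
losses = [0.9 * t ** -0.5 for t in epochs]
print(should_terminate(epochs, losses, max_epochs=100, best_final_loss=0.05))  # → True
```

Here the extrapolated loss at epoch 100 is 0.9 / 10 = 0.09, which is worse than 0.05, so the run would be stopped early.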

Using Cheap Approximations of the Blackbox
• Cheap approximations exist in many applications
– Subset of data
– Fewer epochs of iterative training algorithms (e.g., SGD)
– Downsampled images in object recognition
– Shorter MCMC chains in Bayesian deep learning
– Fewer trials in deep reinforcement learning
– Also applicable in other domains, e.g., fluid simulations:
• Fewer particles
• Shorter simulations

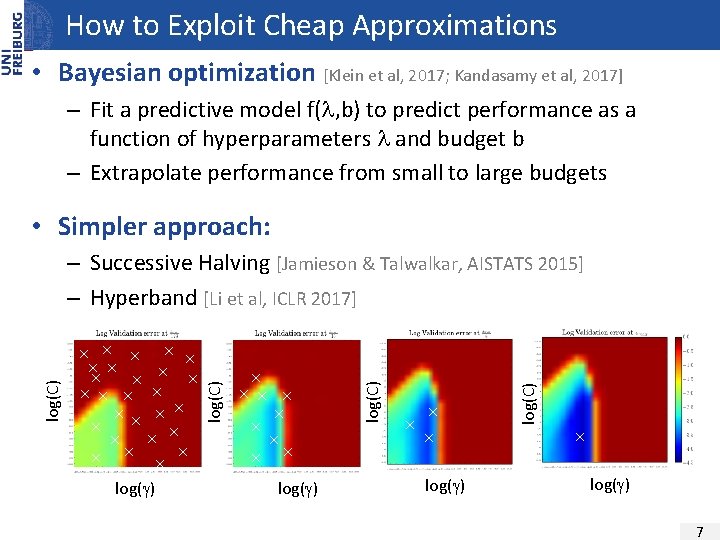

How to Exploit Cheap Approximations
• Bayesian optimization [Klein et al, 2017; Kandasamy et al, 2017]
– Fit a predictive model $f(\lambda, b)$ to predict performance as a function of hyperparameters $\lambda$ and budget $b$
– Extrapolate performance from small to large budgets
• Simpler approach:
– Successive Halving [Jamieson & Talwalkar, AISTATS 2016]
– Hyperband [Li et al, ICLR 2017]
[Figure axes: log(C), log(γ)]
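Successive Halving and Hyperband fit in a few lines. The sketch below follows the bracket schedule described by Li et al.: bracket `s` starts `n = ceil((s_max + 1) / (s + 1) * eta**s)` configurations at budget `max_budget * eta**-s`, and Successive Halving repeatedly keeps the best `1/eta` of them while multiplying the budget by `eta`. Rounding details are assumptions and may differ from reference implementations; `toy_eval` is a hypothetical objective.

```python
import math
import random

def successive_halving(configs, evaluate, budget, eta, rounds):
    """Evaluate all survivors at the current budget, keep the best len // eta,
    multiply the budget by eta; repeat for rounds + 1 rungs in total."""
    for i in range(rounds + 1):
        scored = sorted(((evaluate(c, budget), c) for c in configs), key=lambda p: p[0])
        if i == rounds:
            return scored[0]  # (loss, config) of the winner on the largest budget
        configs = [c for _, c in scored[: max(1, len(scored) // eta)]]
        budget *= eta

def hyperband(sample_config, evaluate, min_budget, max_budget, eta=3, seed=0):
    """Run one Successive Halving bracket per s, trading off the number of
    configurations against the budget each one starts with."""
    rng = random.Random(seed)
    s_max = int(math.log(max_budget / min_budget, eta) + 1e-9)
    best = None
    for s in range(s_max, -1, -1):
        n = math.ceil((s_max + 1) / (s + 1) * eta ** s)
        configs = [sample_config(rng) for _ in range(n)]
        result = successive_halving(configs, evaluate, max_budget * eta ** -s, eta, s)
        if best is None or result[0] < best[0]:
            best = result
    return best

# Hypothetical toy objective: a 1-D config whose loss carries a penalty that shrinks with budget.
def toy_eval(lam, budget):
    return (lam - 0.3) ** 2 + 1.0 / budget

loss, config = hyperband(lambda rng: rng.uniform(0.0, 1.0), toy_eval, min_budget=1, max_budget=81)
print(config, loss)
```

With `min_budget=1`, `max_budget=81` and `eta=3` there are five brackets, from 81 configurations starting at budget 1 down to 5 configurations evaluated only at budget 81. Every bracket ends on the largest budget, so their winners are directly comparable.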


BOHB: Bayesian Optimization & Hyperband [Falkner, Klein & Hutter, ICML 2018]
• Bayesian optimization
– for choosing the configuration to evaluate
• Hyperband
– for deciding how to allocate budgets
• Advantages
– Strong performance
– General-purpose
• Low-dimensional continuous spaces
• High-dimensional spaces with conditionality, categorical dimensions, etc.
– Easy to implement
– Scalable
– Easily parallelizable
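The "Bayesian optimization" half of BOHB uses a TPE-like model: observations are split into good and bad configurations, a kernel density estimate is fitted to each, and the candidate with the highest density ratio l(x)/g(x) is proposed, with some configurations still drawn at random. The BOHB paper fits multivariate KDEs over the full configuration space, using the largest budget with enough observations. This sketch simplifies to one dimension on [0, 1] with a fixed bandwidth; `propose`, `top_fraction`, `n_candidates` and `random_fraction` are hypothetical names with values chosen for illustration, not HpBandSter's implementation.

```python
import math
import random

def gaussian_kde(points, bandwidth):
    """1-D Gaussian kernel density estimate with a fixed bandwidth."""
    norm = 1.0 / (len(points) * bandwidth * math.sqrt(2.0 * math.pi))
    def density(x):
        return norm * sum(math.exp(-0.5 * ((x - p) / bandwidth) ** 2) for p in points)
    return density

def propose(observations, rng, top_fraction=0.15, n_candidates=64,
            bandwidth=0.1, random_fraction=1 / 3):
    """Propose a config in [0, 1] from (config, loss) observations, TPE-style."""
    # Interleave random configurations, and fall back to them while data is scarce.
    if len(observations) < 2 or rng.random() < random_fraction:
        return rng.uniform(0.0, 1.0)
    ranked = sorted(observations, key=lambda o: o[1])
    n_good = math.ceil(top_fraction * len(ranked))  # with top_fraction < 1, >= 1 point stays "bad"
    good = [x for x, _ in ranked[:n_good]]
    bad = [x for x, _ in ranked[n_good:]]
    l, g = gaussian_kde(good, bandwidth), gaussian_kde(bad, bandwidth)
    # Sample candidates around good points, clipped to [0, 1] ...
    candidates = [min(1.0, max(0.0, rng.gauss(rng.choice(good), bandwidth)))
                  for _ in range(n_candidates)]
    # ... and keep the one with the highest density ratio l(x) / g(x).
    return max(candidates, key=lambda x: l(x) / max(g(x), 1e-300))

rng = random.Random(0)
obs = [(x, (x - 0.3) ** 2) for x in (rng.uniform(0.0, 1.0) for _ in range(30))]
x_next = propose(obs, rng, random_fraction=0.0)
print(x_next)
```

In BOHB, proposals like `x_next` fill the Hyperband brackets in place of purely random samples.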

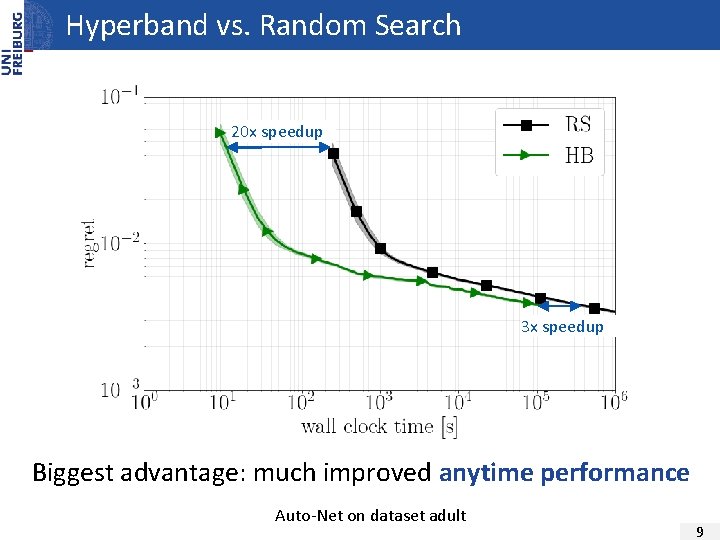

Hyperband vs. Random Search
[Figure: Auto-Net on dataset adult; annotated speedups: 20x and 3x]
Biggest advantage: much improved anytime performance

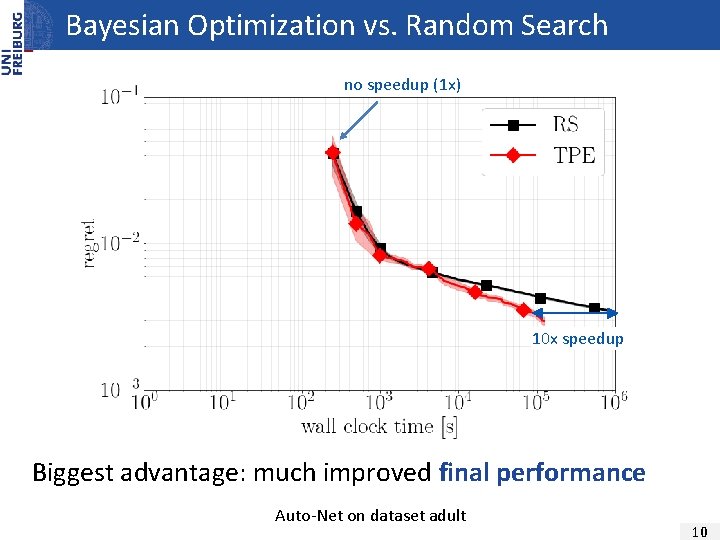

Bayesian Optimization vs. Random Search
[Figure: Auto-Net on dataset adult; annotated speedups: no speedup (1x) and 10x]
Biggest advantage: much improved final performance

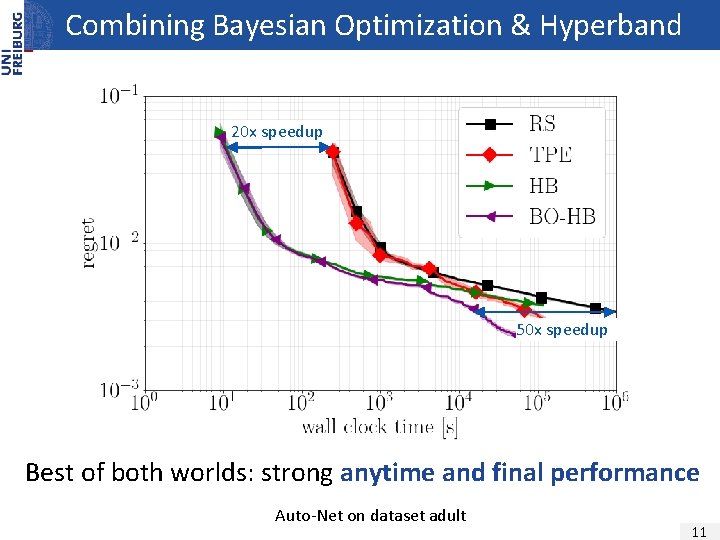

Combining Bayesian Optimization & Hyperband
[Figure: Auto-Net on dataset adult; annotated speedups: 20x and 50x]
Best of both worlds: strong anytime and final performance

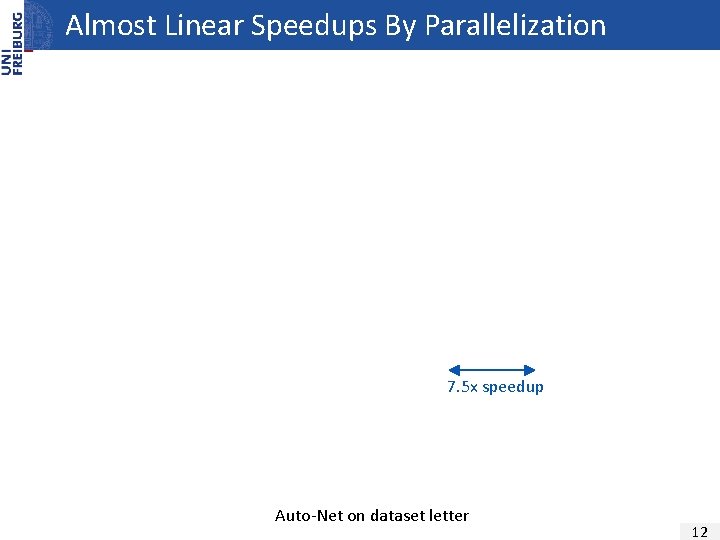

Almost Linear Speedups By Parallelization
[Figure: Auto-Net on dataset letter; annotated speedup: 7.5x]
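One reason parallelization works well is that the configurations within a Successive Halving rung can be evaluated independently. The minimal sketch below shows only that idea, using a thread pool from the standard library; HpBandSter distributes work to separate worker processes and does not wait for whole rungs in this way, so treat `evaluate_rung` and `slow_eval` as hypothetical illustrations. Threads suffice here because `time.sleep` releases the GIL; CPU-bound evaluations would need processes or separate machines.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def evaluate_rung(configs, evaluate, budget, n_workers):
    """Evaluate all configurations of one rung concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return list(pool.map(lambda c: evaluate(c, budget), configs))

# Hypothetical evaluation: sleeping stands in for training on `budget` units.
def slow_eval(config, budget):
    time.sleep(0.01 * budget)
    return (config - 0.3) ** 2

configs = [0.1, 0.3, 0.5, 0.7]
losses = evaluate_rung(configs, slow_eval, budget=1, n_workers=4)
print(losses)
```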

Application to Bayesian Deep Learning
• Stochastic Gradient Hamiltonian Monte Carlo
• Budget: MCMC steps

Application to Deep Reinforcement Learning
• Proximal policy optimization on the cartpole benchmark
• Budget: trials (to find a robust policy)

Application to Second AutoML Challenge
• Auto-sklearn 2.0
– Uses base algorithms from scikit-learn and XGBoost
– Optimized using BOHB
– Budgets: dataset size; number of training epochs
– More efficient for large datasets than Auto-sklearn 1.0
• Use meta-learning across datasets to warmstart BOHB
– 16 complementary configurations for the first phase of successive halving, pre-selected with SMAC
• Won the second international AutoML challenge (2017–2018)
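Warmstarting can be sketched as seeding the first Successive Halving rung with the pre-selected configurations. The function `first_rung`, the learning-rate search space, and the top-up with random samples when the rung has more slots than the portfolio are all hypothetical assumptions for illustration; only the count of 16 pre-selected configurations comes from the slide.

```python
import random

def first_rung(portfolio, sample_config, n_slots, rng):
    """Seed the first successive-halving rung with portfolio configurations and
    top it up with random samples if there are more slots than portfolio entries."""
    configs = list(portfolio[:n_slots])
    while len(configs) < n_slots:
        configs.append(sample_config(rng))
    return configs

# Hypothetical portfolio of 16 configurations (pre-selected offline, e.g. with SMAC).
portfolio = [{"learning_rate": 10.0 ** -k} for k in range(1, 17)]
sampler = lambda rng: {"learning_rate": 10.0 ** rng.uniform(-6, 0)}
rung = first_rung(portfolio, sampler, 27, random.Random(0))
print(len(rung))
```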

Application to Tuning CNNs on a Budget
• Four design decisions for a CIFAR-10 network
– Learning rate, batch size, weight decay, momentum
• Budgets: 22, 66, 200, and 600 epochs of SGD
– Ran BOHB for 22 hours on 38 GPUs
• Result:
– 2.78% test error (in 33 GPU days)
– RL [Zoph et al, 2017]: 2.4% test error (in 2000 GPU days)
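The four budgets appear consistent with geometric spacing by a factor of 3 below the 600-epoch maximum, truncated to whole epochs. A short check, where `budget_ladder` is a hypothetical helper:

```python
def budget_ladder(max_budget, eta, n_levels):
    """Budgets max_budget // eta**k for k = n_levels - 1, ..., 0 (integer floor division)."""
    return [max_budget // eta ** k for k in range(n_levels - 1, -1, -1)]

print(budget_ladder(600, 3, 4))  # → [22, 66, 200, 600]
```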

Conclusion
• Large speedups by going beyond blackbox optimization
• Multi-fidelity methods
– Do most of the work on cheap approximations of the blackbox
– Can be directly combined with Bayesian optimization
• Available as an off-the-shelf tool
– GitHub: https://github.com/automl/HpBandSter
• More details in our book: http://automl.org/book

Thanks!
• Funding sources
• My fantastic team
• EU project RobDREAM
• I'm looking for additional great postdocs
