Binary Classification by Logistic Regression

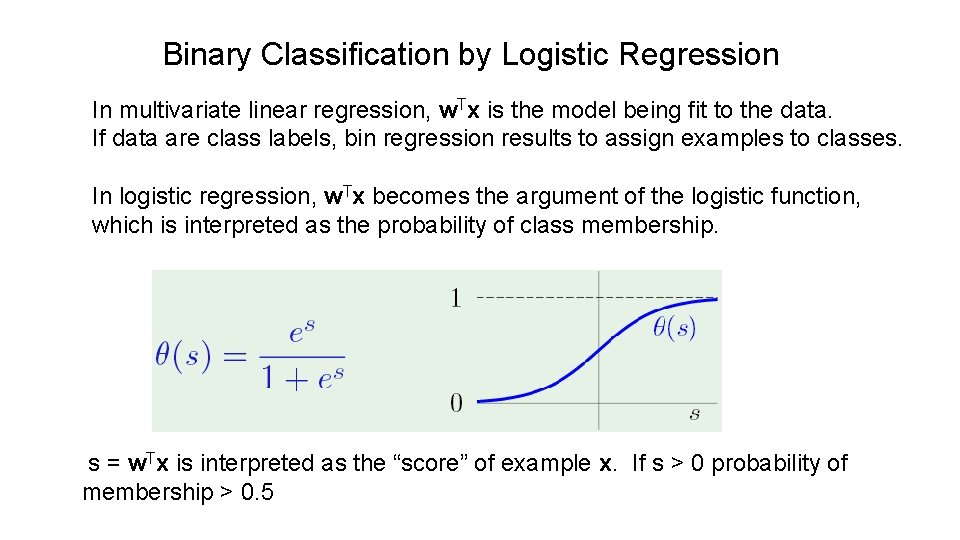

In multivariate linear regression, $w^T x$ is the model being fit to the data. If the data are class labels, the regression results are binned to assign examples to classes. In logistic regression, $w^T x$ becomes the argument of the logistic function, whose value is interpreted as the probability of class membership. The quantity $s = w^T x$ is interpreted as the “score” of example $x$. If $s > 0$, the probability of membership is greater than 0.5.
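A minimal sketch of the score-to-probability mapping, assuming NumPy. The function names `logistic` and `score` and the numeric values of `w` and `x` are illustrative assumptions, not taken from the slides.

```python
import numpy as np

def logistic(s):
    """Logistic function: maps a real-valued score to a value in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-s))

def score(w, x):
    """Score s = w^T x of example x under weights w."""
    return np.dot(w, x)

# Illustrative values only (assumed, not from the slides).
w = np.array([0.5, -1.0, 2.0])
x = np.array([1.0, 0.2, 0.4])   # x[0] = 1 plays the role of a bias term

s = score(w, x)      # 0.5 - 0.2 + 0.8 = 1.1
p = logistic(s)      # interpreted as the probability of class membership
print(s, p)
```

Because the score here is positive, the resulting probability is above 0.5; a score of exactly 0 gives 0.5.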

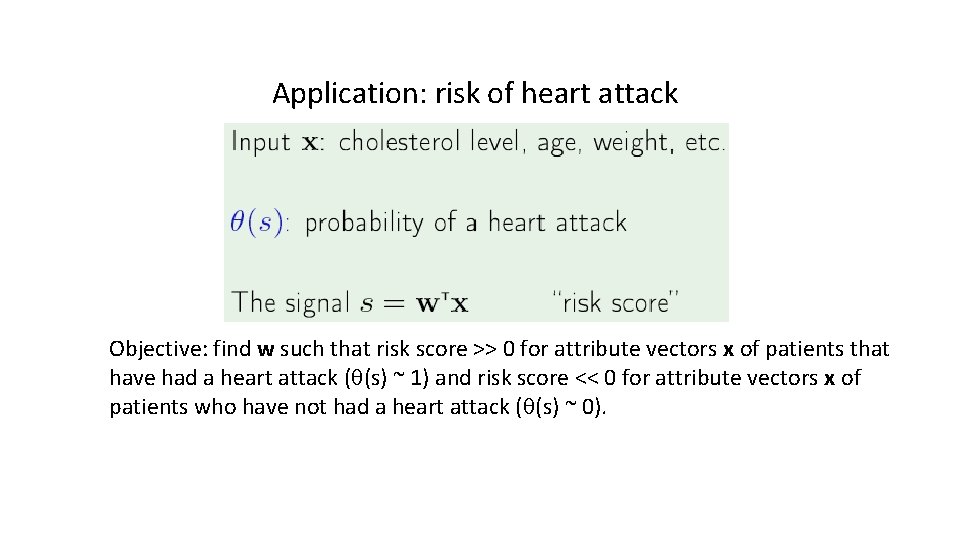

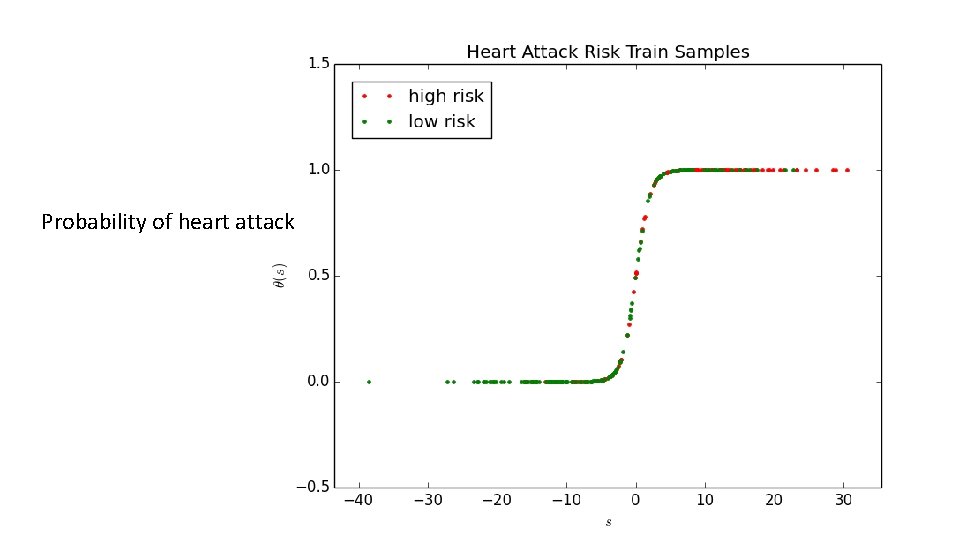

Application: risk of heart attack. Objective: find $w$ such that the risk score is $\gg 0$ for attribute vectors $x$ of patients who have had a heart attack ($\theta(s) \approx 1$) and $\ll 0$ for attribute vectors $x$ of patients who have not had a heart attack ($\theta(s) \approx 0$), where $\theta$ is the logistic function.

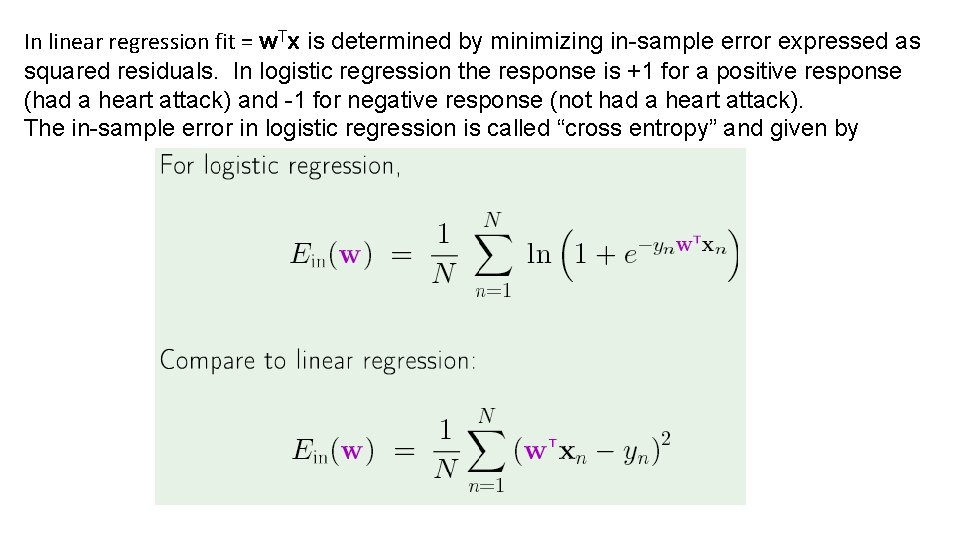

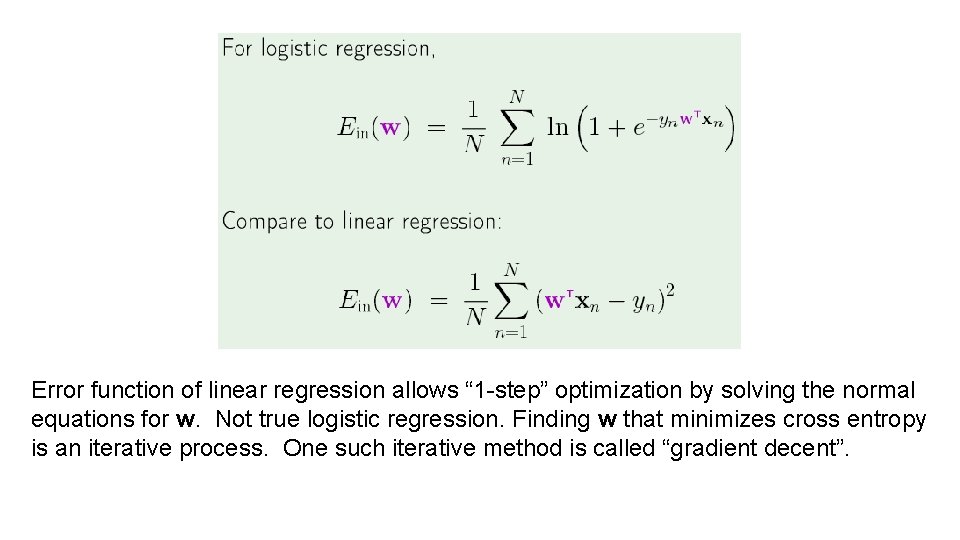

In linear regression, the fit $w^T x$ is determined by minimizing the in-sample error, expressed as squared residuals. In logistic regression the response is +1 for a positive response (had a heart attack) and -1 for a negative response (has not had a heart attack). The in-sample error in logistic regression is called “cross entropy” and is given by

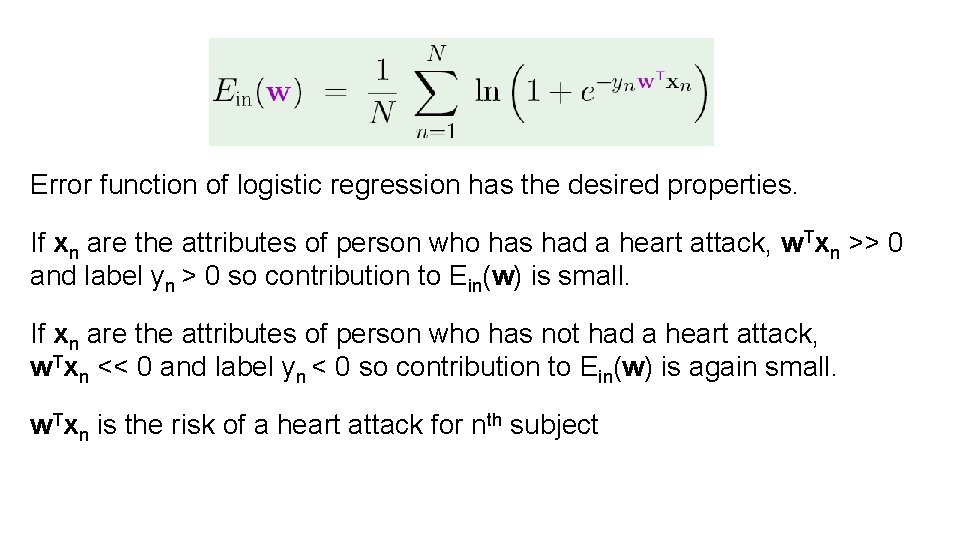

The error function of logistic regression has the desired properties. If $x_n$ are the attributes of a person who has had a heart attack, then $w^T x_n \gg 0$ and the label $y_n > 0$, so the contribution to $E_{in}(w)$ is small. If $x_n$ are the attributes of a person who has not had a heart attack, then $w^T x_n \ll 0$ and the label $y_n < 0$, so the contribution to $E_{in}(w)$ is again small. Here $w^T x_n$ is the heart-attack risk score of the $n$th subject.
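The property described here can be checked numerically. This sketch assumes the standard cross-entropy form for ±1 labels, in which example $n$ contributes $\ln(1 + e^{-y_n w^T x_n})$ and $E_{in}$ is the mean over all examples; the slide's own formula is in the image and is not reproduced in the text. The function names are hypothetical.

```python
import numpy as np

def pointwise_error(y, s):
    """Contribution ln(1 + exp(-y*s)) of one example with label y (+1 or -1) and score s."""
    return np.log1p(np.exp(-y * s))

def cross_entropy(w, X, y):
    """Mean of the pointwise errors over all N examples (rows of X)."""
    return np.mean(pointwise_error(y, X @ w))

# Label and score agree in sign: small contribution.
agree_pos = pointwise_error(+1, 5.0)    # had a heart attack, high score
agree_neg = pointwise_error(-1, -5.0)   # no heart attack, low score
# Label and score disagree in sign: large contribution.
disagree = pointwise_error(+1, -5.0)

print(agree_pos, agree_neg, disagree)
```

The two agreeing cases give the same small value, while the disagreeing case grows roughly linearly with the size of the score.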

The error function of linear regression allows “one-step” optimization by solving the normal equations for $w$. This is not true of logistic regression: finding the $w$ that minimizes the cross entropy is an iterative process. One such iterative method is called “gradient descent”.

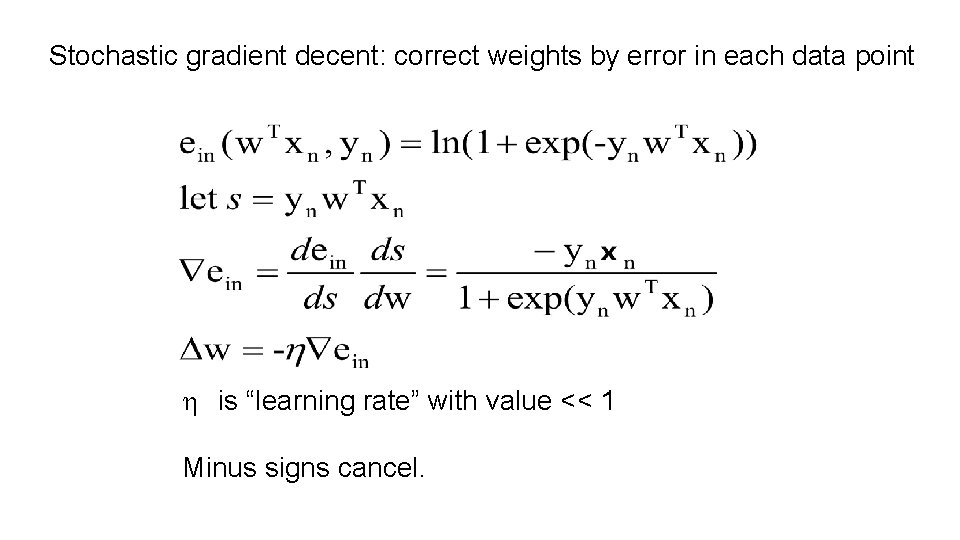

Stochastic gradient descent: correct the weights using the error at each data point. $\eta$ is the “learning rate”, with value $\ll 1$. The minus signs cancel.
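A sketch of a single stochastic update, assuming the per-example error $\ln(1 + e^{-y_n w^T x_n})$, whose gradient with respect to $w$ is $-y_n x_n / (1 + e^{y_n w^T x_n})$. The function name `sgd_step` and the sample values are hypothetical.

```python
import numpy as np

def sgd_step(w, x_n, y_n, eta=0.001):
    """One stochastic update from a single example (x_n, y_n), with y_n in {+1, -1}."""
    # Gradient of ln(1 + exp(-y_n w^T x_n)) with respect to w
    grad = -y_n * x_n / (1.0 + np.exp(y_n * np.dot(w, x_n)))
    # Step against the gradient: the minus sign here and the one in grad cancel
    return w - eta * grad

w = np.zeros(2)
x_n = np.array([1.0, 2.0])
w_new = sgd_step(w, x_n, +1, eta=0.1)   # large eta only to make the change visible
print(w_new)
```

After the update, the score of this positive example has moved in the positive direction, which is the intended correction.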

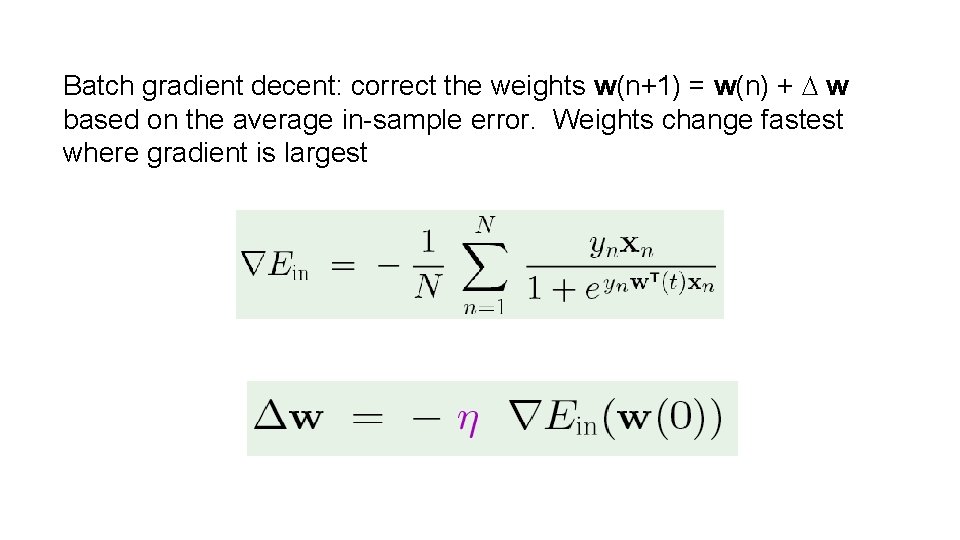

Batch gradient descent: correct the weights, $w(n+1) = w(n) + \Delta w$, based on the average in-sample error. The weights change fastest where the gradient is largest.
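A sketch of one batch update with $\Delta w = -\eta \nabla E_{in}(w)$, assuming the mean cross-entropy gradient $-\frac{1}{N}\sum_n y_n x_n / (1 + e^{y_n w^T x_n})$. The names `gradient` and `batch_step` and the toy data are assumptions for illustration.

```python
import numpy as np

def gradient(w, X, y):
    """Gradient of the mean cross entropy over all N examples (rows of X)."""
    margins = y * (X @ w)                     # y_n * w^T x_n for each example
    coeff = -y / (1.0 + np.exp(margins))      # shape (N,)
    return (coeff[:, None] * X).mean(axis=0)  # average over examples

def batch_step(w, X, y, eta=0.001):
    """Return w(n+1) = w(n) + delta_w with delta_w = -eta * gradient."""
    delta_w = -eta * gradient(w, X, y)
    return w + delta_w

# Toy data (assumed): two examples, one per class.
X = np.array([[1.0, 2.0], [1.0, -2.0]])
y = np.array([1.0, -1.0])
w = np.zeros(2)
w_next = batch_step(w, X, y, eta=0.1)
print(gradient(w, X, y), w_next)
```

The size of the step is proportional to the gradient, so the weights move most where the gradient is largest.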

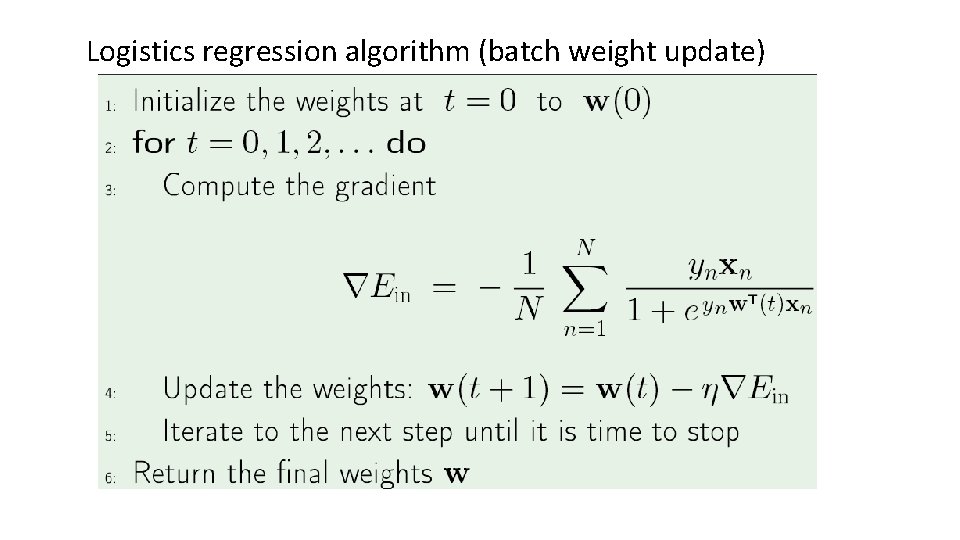

Logistic regression algorithm (batch weight update)

Choose $w(0)$ randomly on [0, 1]. Choose a learning rate of 0.001 or less. How do you avoid overflow in the calculation of the gradient of $E_{in}$?
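One common way to avoid overflow, offered as a sketch and not necessarily the answer given on the slide: never call `exp` on a large positive argument. The gradient factor $1/(1 + e^{m})$ equals the logistic function evaluated at $-m$, which can be computed in a sign-aware way. The function names and the `[0, 1]` initialization via NumPy's random generator are assumptions.

```python
import numpy as np

def stable_logistic(z):
    """Logistic function 1 / (1 + exp(-z)) evaluated without overflow."""
    z = np.atleast_1d(np.asarray(z, dtype=float))
    out = np.empty_like(z)
    pos = z >= 0
    # For z >= 0, exp(-z) <= 1, so it cannot overflow.
    out[pos] = 1.0 / (1.0 + np.exp(-z[pos]))
    # For z < 0, exp(z) < 1; use the equivalent form exp(z) / (1 + exp(z)).
    ez = np.exp(z[~pos])
    out[~pos] = ez / (1.0 + ez)
    return out

def gradient(w, X, y):
    """Gradient of the mean cross entropy, using the overflow-safe logistic."""
    margins = y * (X @ w)
    # 1 / (1 + exp(margins)) equals the logistic function at -margins
    coeff = -y * stable_logistic(-margins)
    return (coeff[:, None] * X).mean(axis=0)

# Initial weights drawn uniformly on [0, 1]
rng = np.random.default_rng(0)
w0 = rng.uniform(0.0, 1.0, size=2)
print(stable_logistic([-1000.0, 0.0, 1000.0]))
```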

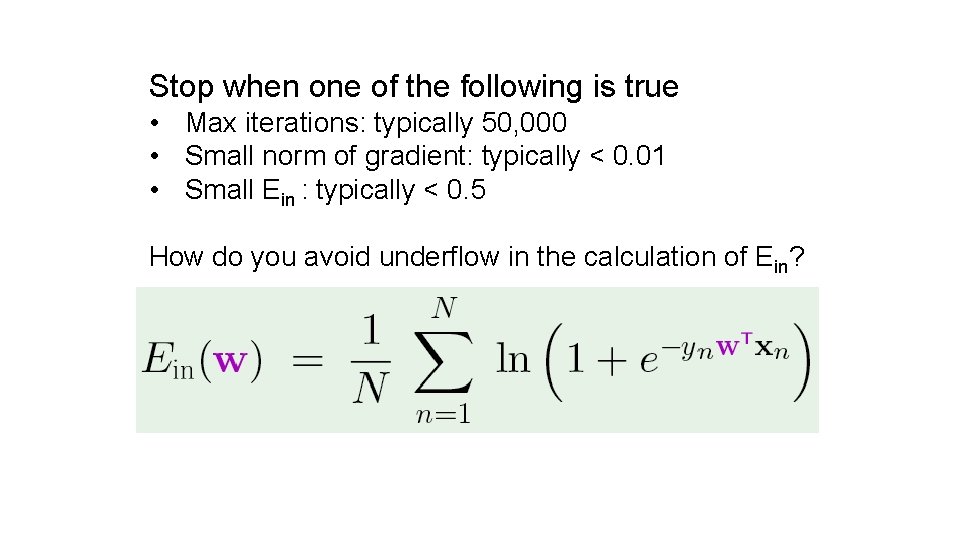

Stop when one of the following is true:

- Max iterations: typically 50,000
- Small norm of gradient: typically < 0.01
- Small $E_{in}$: typically < 0.5

How do you avoid underflow in the calculation of $E_{in}$?
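The three stopping rules can be sketched as a batch training loop. This is an illustration under assumptions, not the slide's implementation: `train` and its parameter names are hypothetical, and `np.logaddexp(0, -m)` is used as one numerically safe way to evaluate $\ln(1 + e^{-m})$, which may differ from the remedy the slide has in mind.

```python
import numpy as np

def mean_cross_entropy(w, X, y):
    """E_in = mean of ln(1 + exp(-y_n w^T x_n)), evaluated with logaddexp."""
    return np.mean(np.logaddexp(0.0, -y * (X @ w)))

def gradient(w, X, y):
    """Gradient of E_in; 1 / (1 + exp(m)) is written as exp(-logaddexp(0, m))."""
    m = y * (X @ w)
    coeff = -y * np.exp(-np.logaddexp(0.0, m))
    return (coeff[:, None] * X).mean(axis=0)

def train(X, y, eta=0.001, max_iter=50_000, grad_tol=0.01, ein_tol=0.5, seed=0):
    """Batch gradient descent; returns (weights, iterations used, stop reason)."""
    rng = np.random.default_rng(seed)
    w = rng.uniform(0.0, 1.0, size=X.shape[1])   # w(0) random on [0, 1]
    for it in range(1, max_iter + 1):
        g = gradient(w, X, y)
        if np.linalg.norm(g) < grad_tol:
            return w, it, "small gradient"
        w = w - eta * g                           # batch weight update
        if mean_cross_entropy(w, X, y) < ein_tol:
            return w, it, "small Ein"
    return w, max_iter, "max iterations"

# Toy data (assumed): first column is a constant bias attribute.
X = np.array([[1.0, 2.0], [1.0, -2.0], [1.0, 3.0], [1.0, -3.0]])
y = np.array([1.0, -1.0, 1.0, -1.0])
w, iterations, reason = train(X, y, eta=0.1, max_iter=200)
print(iterations, reason, mean_cross_entropy(w, X, y))
```

Whichever rule fires first ends the loop, and the returned reason makes it easy to tell a converged run from one that simply ran out of iterations.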

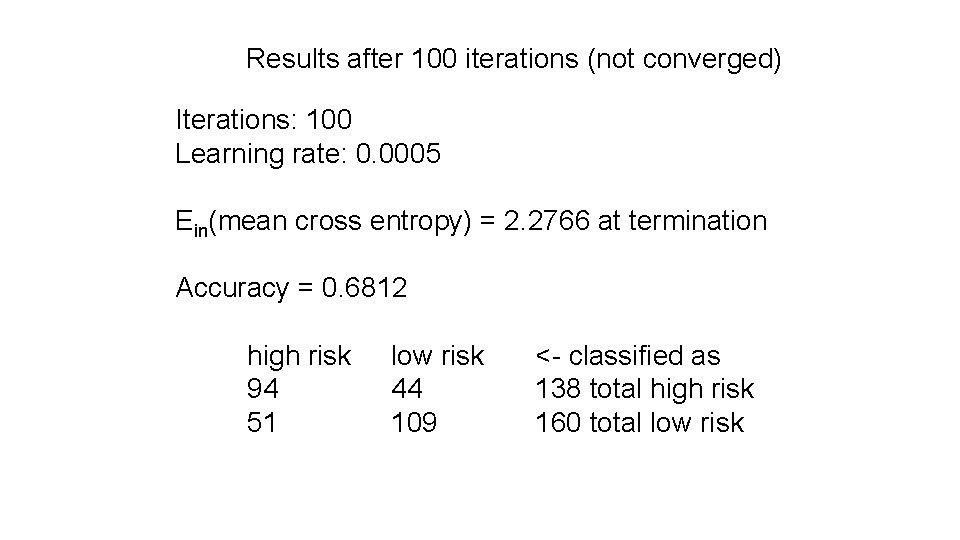

Results after 100 iterations (not converged)

- Iterations: 100
- Learning rate: 0.0005
- $E_{in}$ (mean cross entropy) = 2.2766 at termination
- Accuracy = 0.6812

Confusion matrix (<- classified as): high risk 94, 51; low risk 44, 109. Totals: 138 high risk, 160 low risk.

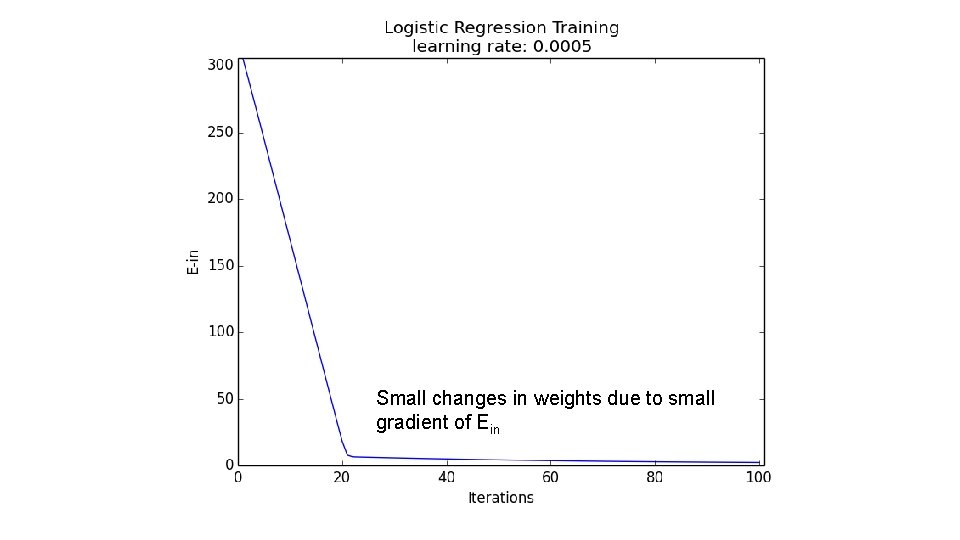

Small changes in the weights are due to the small gradient of $E_{in}$.

Probability of heart attack

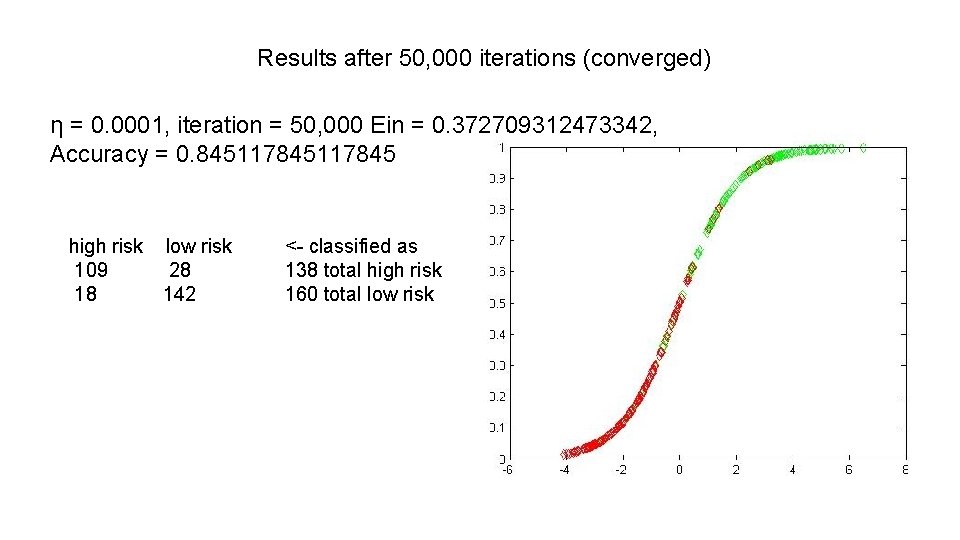

Results after 50,000 iterations (converged)

- $\eta$ = 0.0001, iterations = 50,000
- $E_{in}$ = 0.372709312473342
- Accuracy = 0.845117845

Confusion matrix (high risk, low risk <- classified as): 109, 28; 18, 142. Totals: 138 high risk, 160 low risk.
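The reported accuracies can be reproduced from the confusion-matrix counts as the fraction of examples on the diagonal. This is a small arithmetic check, assuming NumPy; the helper name `accuracy` is hypothetical. The result does not depend on whether rows or columns hold the predicted class.

```python
import numpy as np

def accuracy(confusion):
    """Fraction of correctly classified examples: diagonal sum over total count."""
    cm = np.asarray(confusion)
    return np.trace(cm) / cm.sum()

after_100 = [[94, 51], [44, 109]]       # counts reported after 100 iterations
after_50000 = [[109, 28], [18, 142]]    # counts reported after 50,000 iterations

print(round(accuracy(after_100), 4))    # 0.6812
print(round(accuracy(after_50000), 9))  # 0.845117845
```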

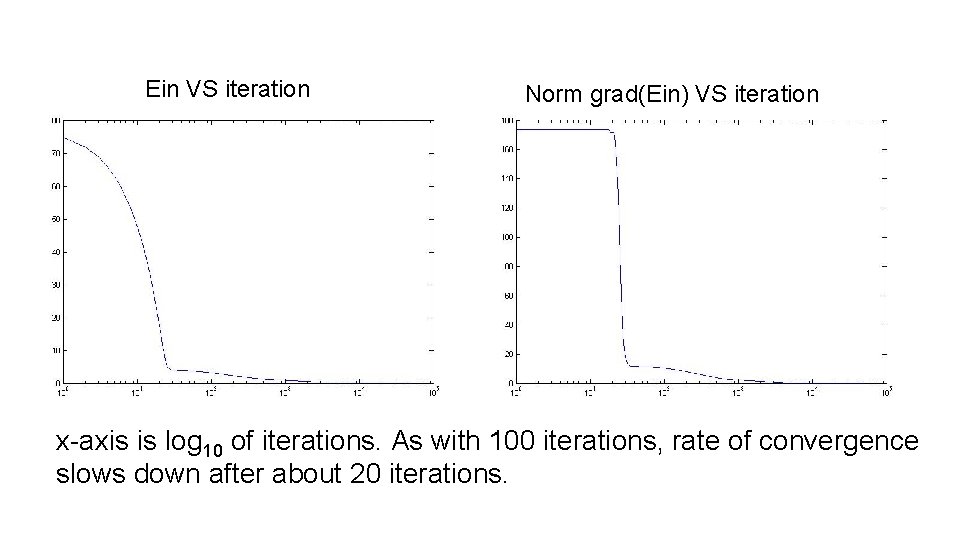

$E_{in}$ vs. iteration, and norm of grad($E_{in}$) vs. iteration. The x-axis is $\log_{10}$ of iterations. As with 100 iterations, the rate of convergence slows down after about 20 iterations.

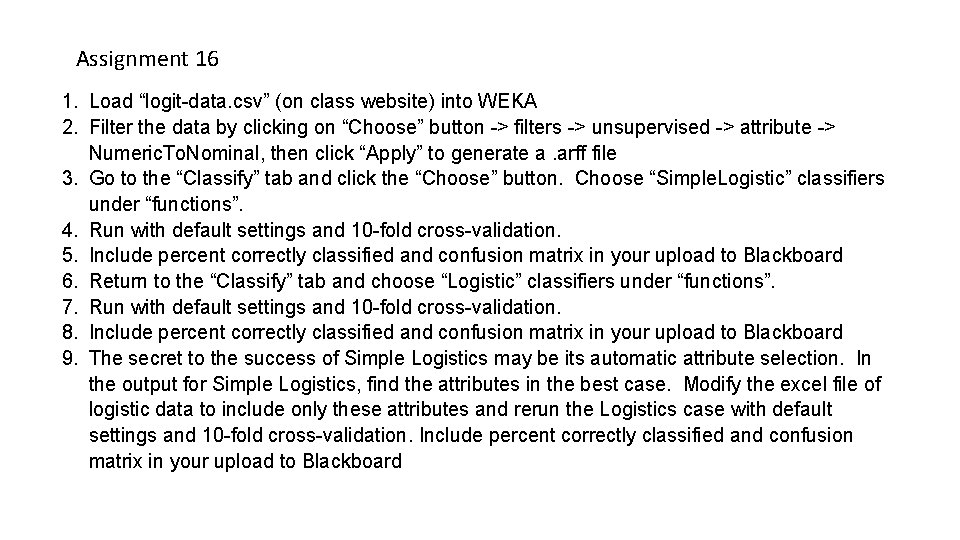

Assignment 16

1. Load “logit-data.csv” (on class website) into WEKA.
2. Filter the data by clicking on the “Choose” button -> filters -> unsupervised -> attribute -> NumericToNominal, then click “Apply” to generate an .arff file.
3. Go to the “Classify” tab and click the “Choose” button. Choose the “SimpleLogistic” classifier under “functions”.
4. Run with default settings and 10-fold cross-validation.
5. Include percent correctly classified and confusion matrix in your upload to Blackboard.
6. Return to the “Classify” tab and choose the “Logistic” classifier under “functions”.
7. Run with default settings and 10-fold cross-validation.
8. Include percent correctly classified and confusion matrix in your upload to Blackboard.
9. The secret to the success of SimpleLogistic may be its automatic attribute selection. In the output for SimpleLogistic, find the attributes in the best case. Modify the Excel file of logistic data to include only these attributes and rerun the Logistic case with default settings and 10-fold cross-validation. Include percent correctly classified and confusion matrix in your upload to Blackboard.
