BigSim: A Parallel Simulator for Performance Prediction of Extremely Large Parallel Machines
Gengbin Zheng, Gunavardhan Kakulapati, Laxmikant V. Kalé
University of Illinois at Urbana-Champaign
IPDPS 4/29/2004

Motivations
- Extremely large parallel machines are around the corner
  - Examples:
    - ASCI Purple (12 K, 100 TF)
    - Blue Gene/L (64 K, 360 TF)
    - Blue Gene/C (8 M, 1 PF)
  - PF machines likely to have 100 k+ processors (1 M?)
- Would existing parallel applications scale?
  - Machines are not there
  - Parallel performance is hard to model without actually running the program

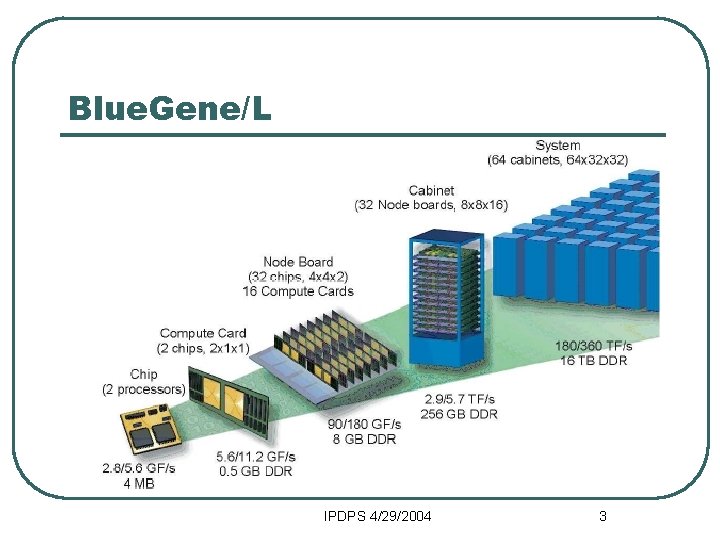

Blue Gene/L

Roadmap
- Explore suitable programming models
  - Charm++ (message-driven)
  - MPI and its extension, AMPI (adaptive version of MPI)
- Use a parallel emulator to run applications
- Coarse-grained simulator for performance prediction (not hardware simulation)

Charm++ Object-based Programming Model
- Processor virtualization
  - Divide computation into a large number of pieces
  - Let system map objects to processors
  - Independent of number of processors
  - Typically larger than number of processors
  - Empowers an adaptive, intelligent runtime system
(Figure labels: user view, system implementation)

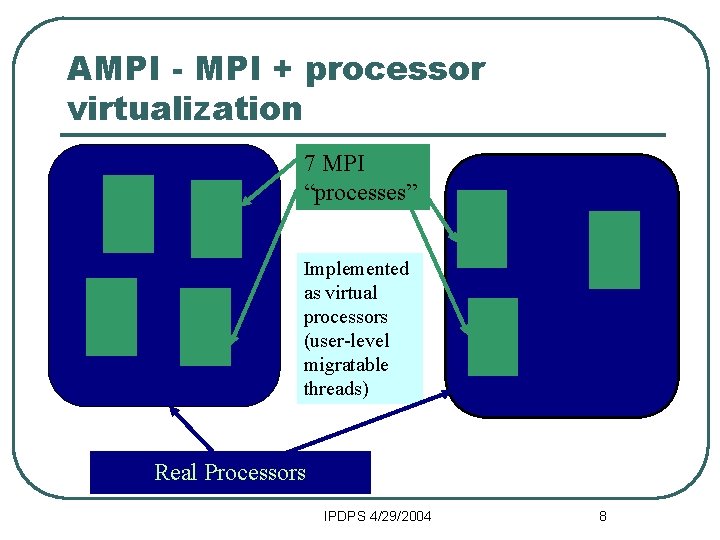

AMPI: MPI + processor virtualization
(Figure labels: 7 MPI “processes”, implemented as virtual processors (user-level migratable threads); real processors)

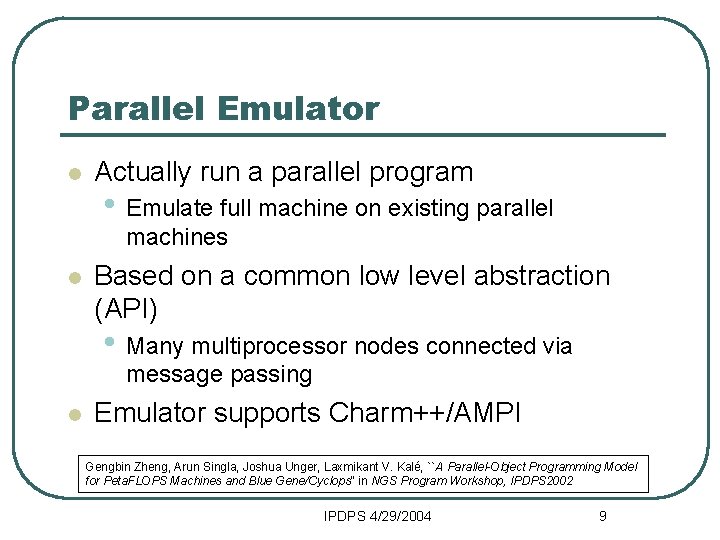

Parallel Emulator
- Actually run a parallel program
  - Emulate full machine on existing parallel machines
- Based on a common low-level abstraction (API)
  - Many multiprocessor nodes connected via message passing
- Emulator supports Charm++/AMPI
Reference: Gengbin Zheng, Arun Singla, Joshua Unger, Laxmikant V. Kalé, "A Parallel-Object Programming Model for PetaFLOPS Machines and Blue Gene/Cyclops," in NGS Program Workshop, IPDPS 2002.

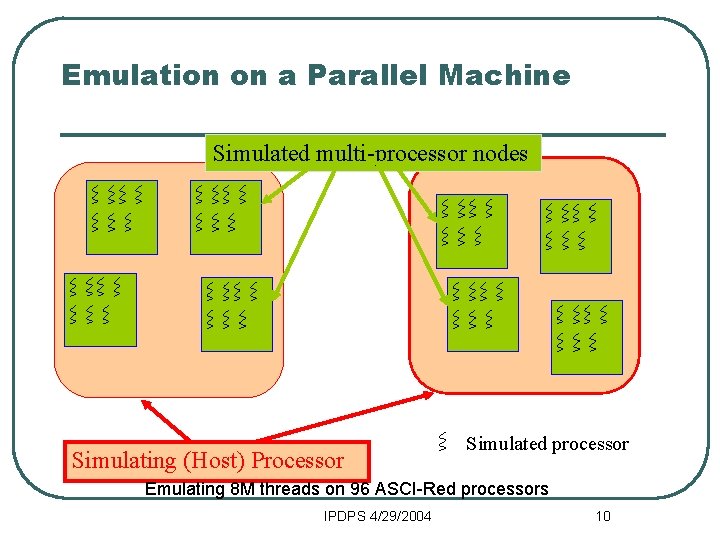

Emulation on a Parallel Machine
(Figure labels: simulating (host) processor, simulated multi-processor nodes, simulated processor)
Emulating 8 M threads on 96 ASCI-Red processors

Emulator to Simulator
- Predicting parallel performance
- Modeling parallel performance accurately is challenging
  - Communication subsystem
  - Behavior of runtime system
  - Size of the machine is big

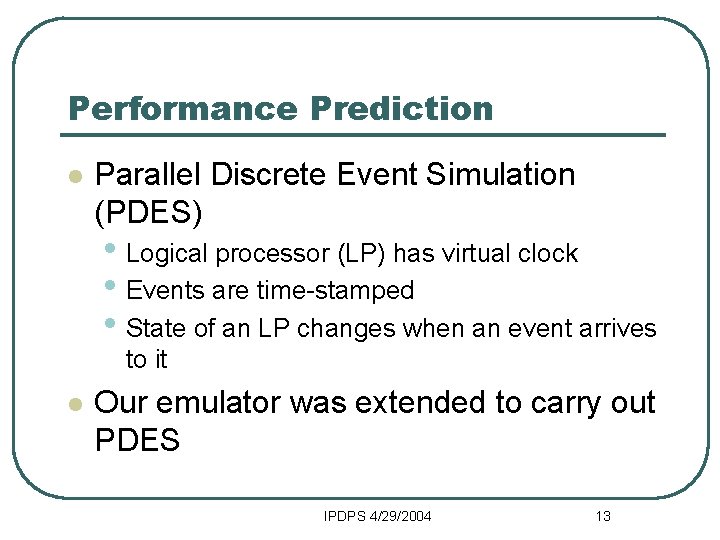

Performance Prediction
- Parallel Discrete Event Simulation (PDES)
  - Each logical processor (LP) has a virtual clock
  - Events are time-stamped
  - State of an LP changes when an event arrives at it
- Our emulator was extended to carry out PDES

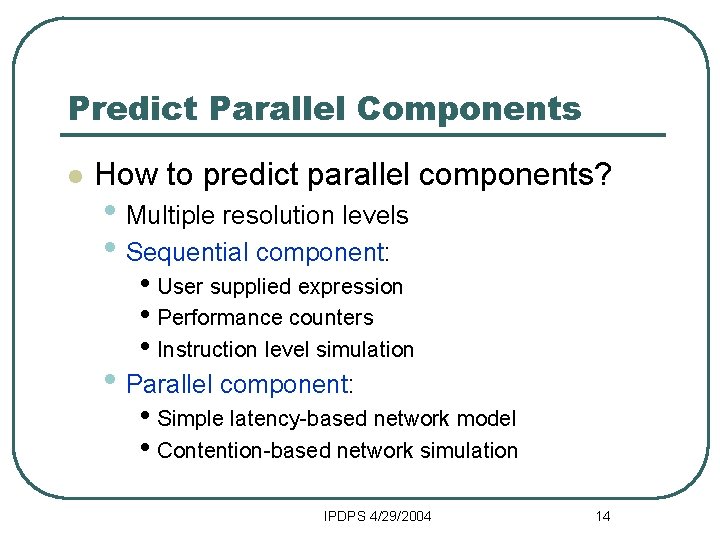

Predict Parallel Components
- How to predict parallel components?
- Multiple resolution levels
  - Sequential component:
    - User-supplied expression
    - Performance counters
    - Instruction-level simulation
  - Parallel component:
    - Simple latency-based network model
    - Contention-based network simulation
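
One common way to realize a simple latency-based model is a fixed startup cost plus a per-byte transfer term. The slides do not give the formula BigSim uses, so the function name, parameters, and numbers below are illustrative assumptions only.

```python
def message_latency(num_bytes, startup_s, bandwidth_bytes_per_s):
    """Hypothetical latency model: startup cost plus size / bandwidth.

    This is an assumed form for illustration, not BigSim's actual model,
    and it ignores network contention entirely.
    """
    return startup_s + num_bytes / bandwidth_bytes_per_s


# Example with made-up parameters: 1 microsecond startup, 1 GB/s links.
latency = message_latency(1000, startup_s=1e-6, bandwidth_bytes_per_s=1e9)
print(latency)
```

A contention-based simulation would replace this closed-form estimate with per-link modeling, which is the higher resolution level listed on the slide.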

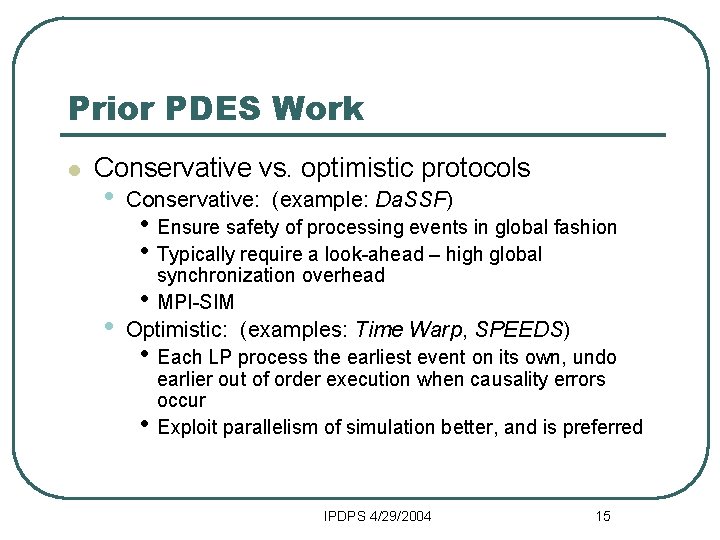

Prior PDES Work
- Conservative vs. optimistic protocols
  - Conservative (examples: DaSSF, MPI-SIM)
    - Ensure safety of processing events in a global fashion
    - Typically require a look-ahead; high global synchronization overhead
  - Optimistic (examples: Time Warp, SPEEDES)
    - Each LP processes the earliest event on its own, and undoes earlier out-of-order execution when causality errors occur
    - Exploits parallelism of the simulation better, and is preferred

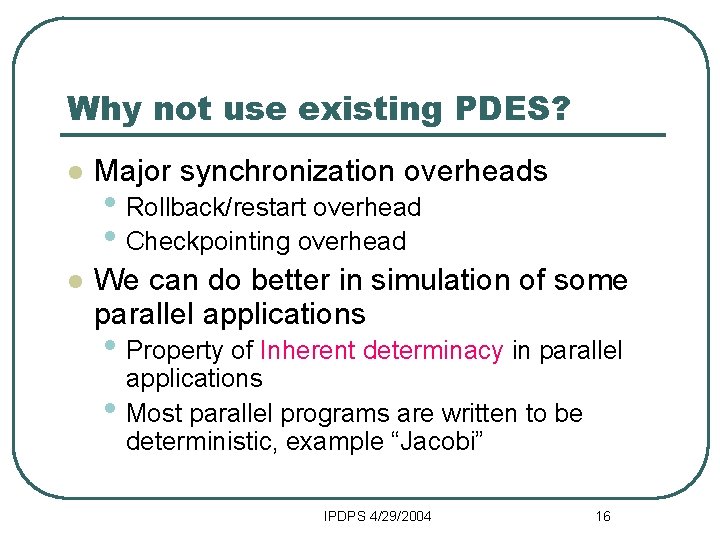

Why not use existing PDES?
- Major synchronization overheads
  - Rollback/restart overhead
  - Checkpointing overhead
- We can do better in simulation of some parallel applications
  - Property of inherent determinacy in parallel applications
  - Most parallel programs are written to be deterministic, for example "Jacobi"

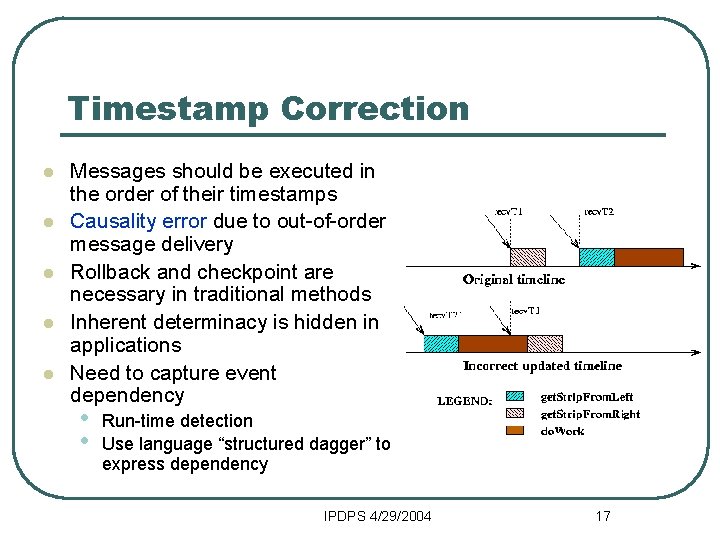

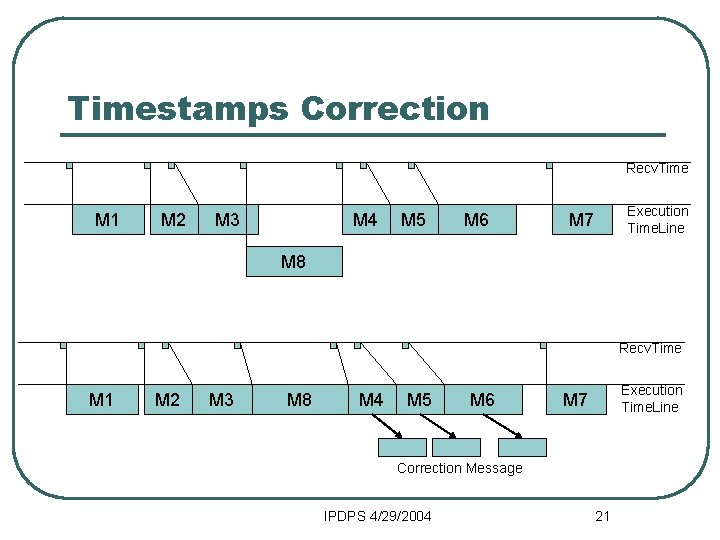

Timestamp Correction
- Messages should be executed in the order of their timestamps
- Causality error due to out-of-order message delivery
- Rollback and checkpoint are necessary in traditional methods
- Inherent determinacy is hidden in applications
- Need to capture event dependency
  - Run-time detection
  - Use the language "structured dagger" to express dependency

Simulation of Different Applications
- Linear-order applications
  - No wildcard MPI receives
  - Strong determinacy, no timestamp correction necessary
- Reactive applications (atomic)
  - Message-driven objects
  - Methods execute as corresponding messages arrive
- Multi-dependent applications
  - Irecvs with WaitAll (MPI)
  - Uses of structured dagger to capture dependency (Charm++)

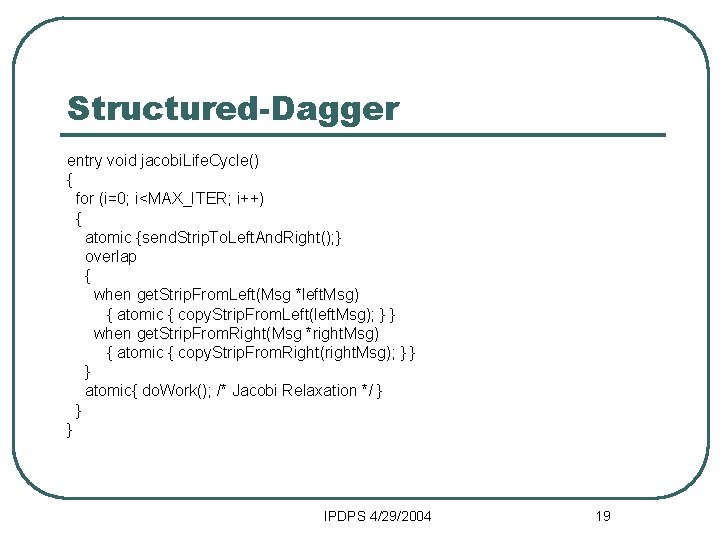

Structured-Dagger

    entry void jacobiLifeCycle() {
      for (i=0; i<MAX_ITER; i++) {
        atomic { sendStripToLeftAndRight(); }
        overlap {
          when getStripFromLeft(Msg *leftMsg) {
            atomic { copyStripFromLeft(leftMsg); }
          }
          when getStripFromRight(Msg *rightMsg) {
            atomic { copyStripFromRight(rightMsg); }
          }
        }
        atomic { doWork(); /* Jacobi Relaxation */ }
      }
    }
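
The dependency this code expresses (doWork needs both strips, in either order) is what makes the predicted time independent of delivery order. The Python sketch below illustrates that idea under simplifying assumptions: the function name, the fixed copy and work costs, and the message tuples are all hypothetical and are not part of Charm++ or BigSim.

```python
def iteration_end(cur_t, delivered, copy_cost, work_cost):
    """Predicted virtual time at which one Jacobi iteration finishes.

    `delivered` lists (name, recv_time) pairs in the order the emulator
    happened to deliver them; both strips must be present.
    """
    assert {name for name, _ in delivered} == {"left", "right"}
    t = cur_t
    # Each `when` body runs once its message has arrived and the LP is free.
    # Processing by timestamp removes any effect of delivery order.
    for _, recv_t in sorted(delivered, key=lambda m: m[1]):
        t = max(t, recv_t) + copy_cost
    # doWork() follows the overlap block, so it starts after both copies.
    return t + work_cost


in_order = iteration_end(0.0, [("right", 2.0), ("left", 4.0)], 1.0, 5.0)
out_of_order = iteration_end(0.0, [("left", 4.0), ("right", 2.0)], 1.0, 5.0)
print(in_order, out_of_order)
```

Both calls yield the same finish time, which is the sense in which a deterministic application needs no rollback: only timestamps have to be fixed up, not program state.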

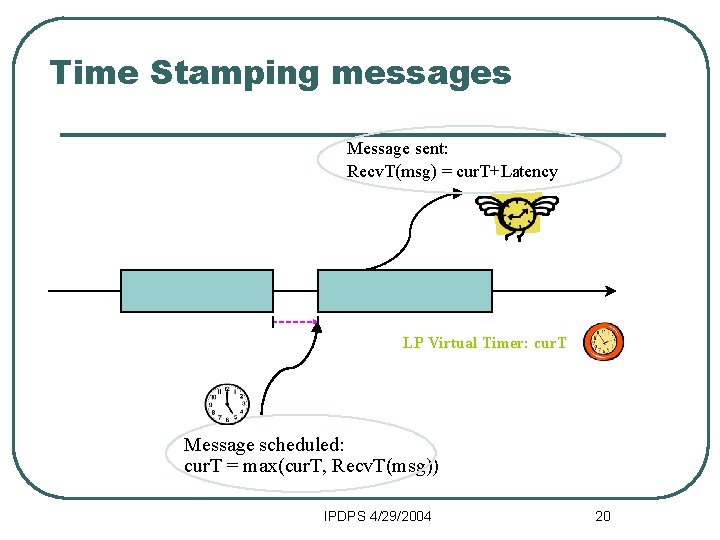

Time Stamping Messages
- LP virtual timer: curT
- Message sent: RecvT(msg) = curT + Latency
- Message scheduled: curT = max(curT, RecvT(msg))
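
The two rules can be sketched in a few lines of Python. The class and method names are hypothetical, and advancing the clock by an execution duration after scheduling is an added assumption that the slide does not state.

```python
from dataclasses import dataclass


@dataclass
class Message:
    name: str
    recv_time: float  # RecvT(msg), stamped by the sender


class LogicalProcessor:
    """Minimal LP with a virtual timer curT (illustrative only)."""

    def __init__(self):
        self.cur_t = 0.0

    def send(self, name, latency):
        # Message sent: RecvT(msg) = curT + Latency
        return Message(name, self.cur_t + latency)

    def execute(self, msg, duration):
        # Message scheduled: curT = max(curT, RecvT(msg))
        self.cur_t = max(self.cur_t, msg.recv_time)
        start = self.cur_t
        # Assumption: the handler then occupies the LP for `duration`.
        self.cur_t += duration
        return start


sender, receiver = LogicalProcessor(), LogicalProcessor()
sender.cur_t = 5.0
msg = sender.send("strip", latency=2.0)
start = receiver.execute(msg, duration=3.0)
print(msg.recv_time, start, receiver.cur_t)  # 7.0 7.0 10.0
```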

Timestamps Correction
(Figure: two execution timelines ordered by RecvTime. Before correction: M1 M2 M3 M4 M5 M6 M7 M8. After correction: M1 M2 M3 M8 M4 M5 M6 M7. Figure labels: RecvTime, Execution TimeLine, Correction Message.)
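
A minimal sketch of the correction step, assuming each message is a (name, receive time, duration) tuple and that an LP executes messages in receive-time order: when a message with an earlier timestamp shows up late, the timeline is rebuilt and every message whose start time changed would need a correction message for anything it sent. The function names and sample values are hypothetical; this is not BigSim's implementation.

```python
def build_timeline(messages):
    """Return {name: start_time} for messages executed in timestamp order."""
    clock = 0.0
    starts = {}
    for name, recv_time, duration in sorted(messages, key=lambda m: m[1]):
        clock = max(clock, recv_time)  # wait for the message to arrive
        starts[name] = clock
        clock += duration              # LP is busy while the handler runs
    return starts


def corrections(before, after):
    """Messages whose start time moved: {name: (old_start, new_start)}."""
    return {n: (before[n], after[n]) for n in before if before[n] != after[n]}


executed = [("M1", 0.0, 2.0), ("M2", 1.0, 2.0), ("M3", 3.0, 2.0),
            ("M4", 6.0, 2.0), ("M5", 7.0, 1.0)]
before = build_timeline(executed)

# M8 is delivered last but carries an earlier receive time than M4.
after = build_timeline(executed + [("M8", 5.0, 2.0)])
print(corrections(before, after))
```

Here M8 slots in after M3, and only M4 and M5 shift; M1 through M3 keep their start times, so nothing they sent needs correcting.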

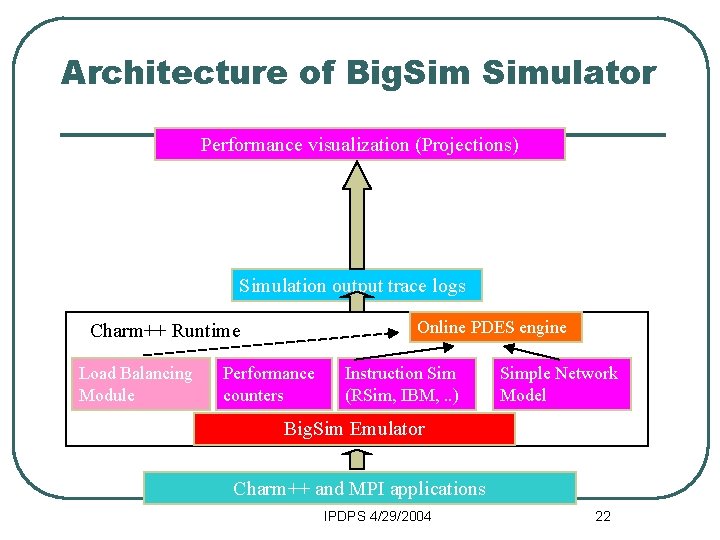

Architecture of BigSimulator
Components shown in the diagram:
- Performance visualization (Projections)
- Simulation output trace logs
- Online PDES engine
- Charm++ Runtime
- Load Balancing Module
- Performance counters
- Instruction Sim (RSim, IBM, ...)
- Simple Network Model
- BigSim Emulator
- Charm++ and MPI applications

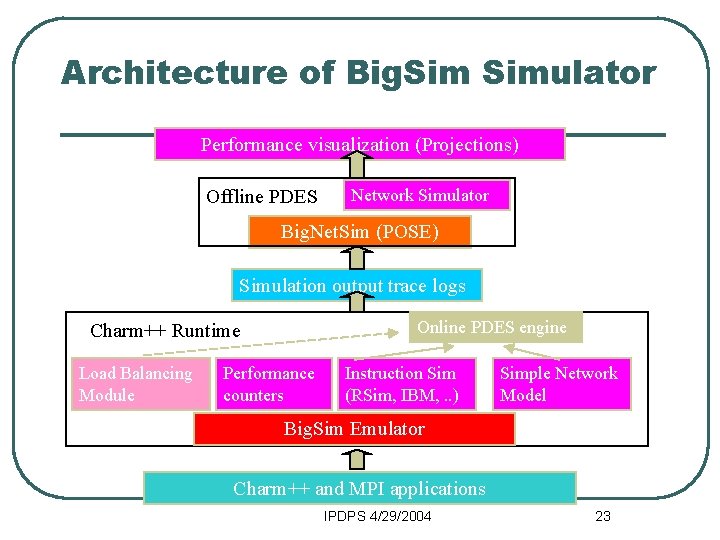

Architecture of BigSimulator
Components shown in the diagram:
- Performance visualization (Projections)
- Offline PDES Network Simulator BigNetSim (POSE)
- Simulation output trace logs
- Online PDES engine
- Charm++ Runtime
- Load Balancing Module
- Performance counters
- Instruction Sim (RSim, IBM, ...)
- Simple Network Model
- BigSim Emulator
- Charm++ and MPI applications

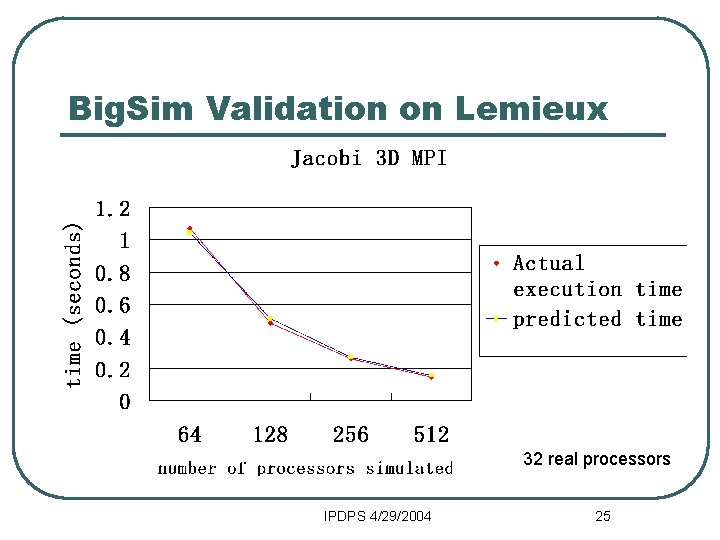

BigSim Validation on Lemieux (32 real processors)

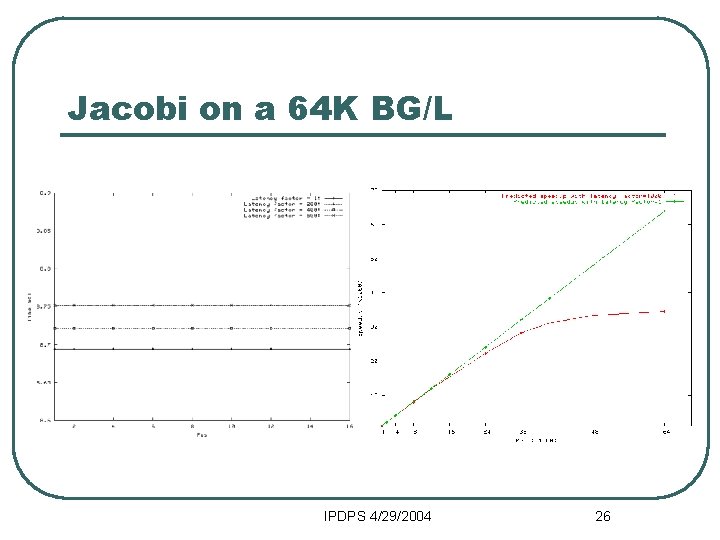

Jacobi on a 64 K BG/L

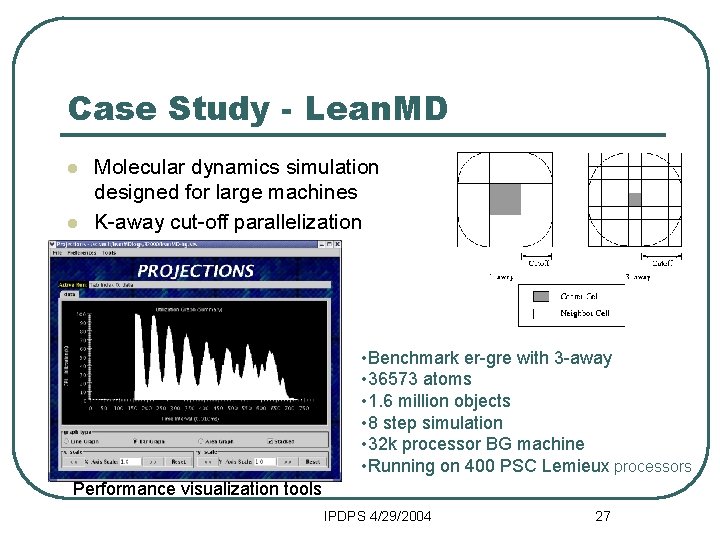

Case Study: LeanMD
- Molecular dynamics simulation designed for large machines
- K-away cut-off parallelization
  - Benchmark er-gre with 3-away
  - 36573 atoms
  - 1.6 million objects
  - 8 step simulation
  - 32 k processor BG machine
  - Running on 400 PSC Lemieux processors
- Performance visualization tools

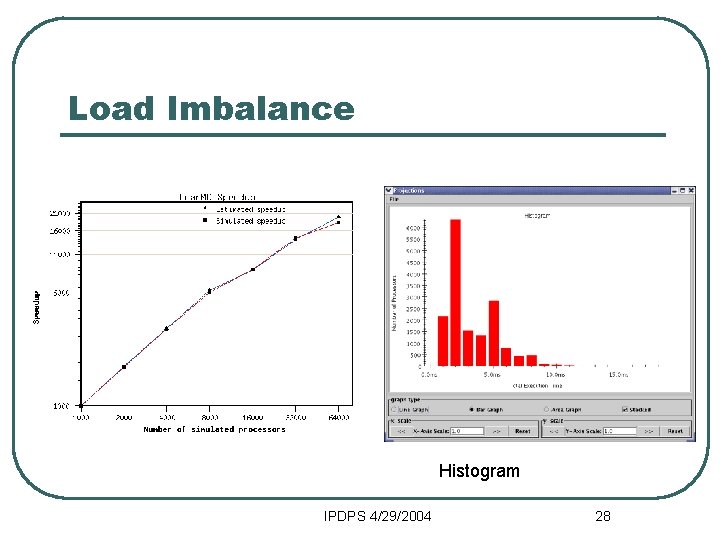

Load Imbalance Histogram

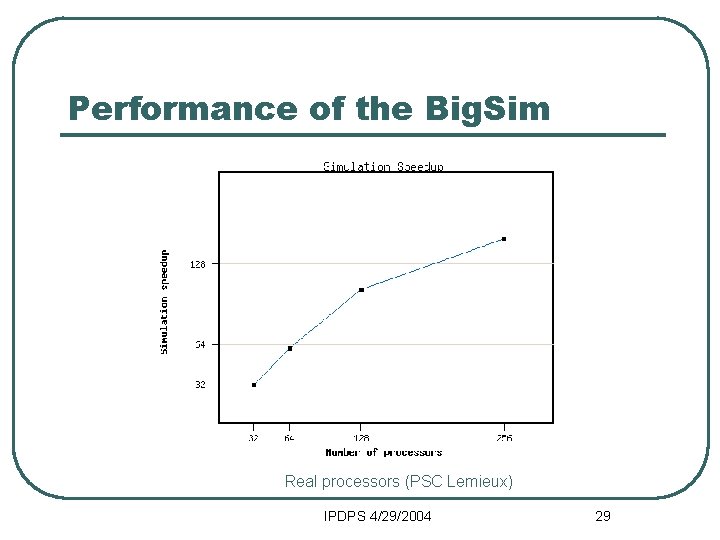

Performance of the BigSim
Real processors (PSC Lemieux)

Conclusions
- Improved the simulation efficiency by taking advantage of “inherent determinacy” of parallel applications
- Explored simulation techniques show good parallel scalability
- http://charm.cs.uiuc.edu

Future Work
- Improving simulation accuracy
  - Instruction level simulator
  - Network simulator
- Developing run-time techniques (load balancing) for very large machines using the simulator
