Big Data Tools Seyyed mohammad Razavi Outline Introduction

Big. Data Tools Seyyed mohammad Razavi

Outline Introduction Hbase Cassandra Spark Acumulo Blur Mongo. DB Hive Giraph Pig

Introduction Hadoop consists of three primary resources: The Hadoop Distributed File System (HDFS) The Map. Reduce Programming platform The Hadoop ecosystem, a collection of tools that use of sit beside Map. Reduce and HDFS

Hbase No. SQL database system included in the standard Hadoop distributions. It is a key-value store. Rows are defined by a key, and have associated with them a number of columns where the associated values are stored. The only data type is the byte string. FULLY INTEGERATED

Hbase is accessed via java code, but APIs exist for using Hbase with pig, Thrift, Jython, … Hbase is often used for applications that may require sparse rows. That is, each row may use only a few of the defined columns. Unlike traditional HDFS applications, it permits random access to rows, rather than sequential searches.

Cassandra Distributed key-value database designed with simplicity and scalability in mind. API COMPATIBLE

Cassandra VS Cassandra HBase is an all-inclusive system, which means it does not require Hadoop environment or any other big data tools. Cassandra is completely master less: it operates as a peer-to-peer system. This make it easier to configure and highly resilient.

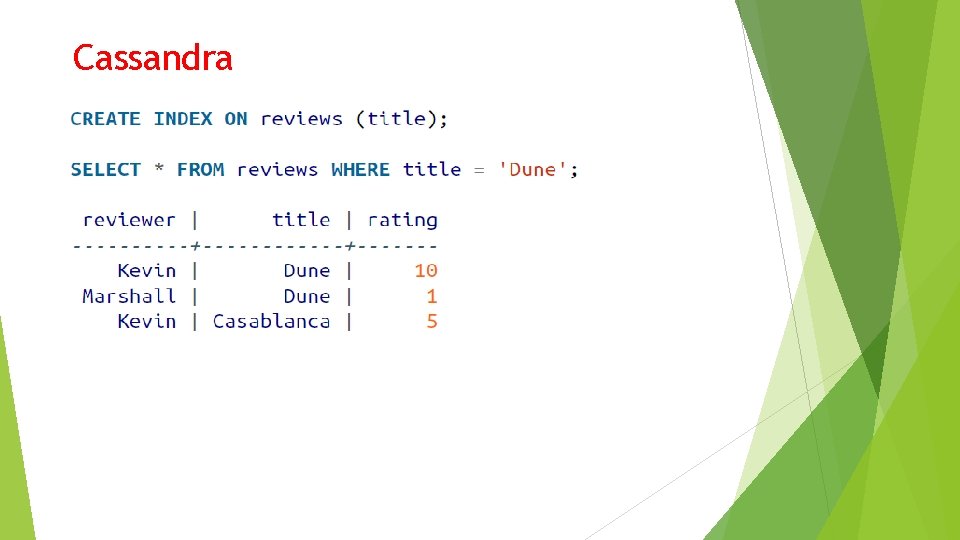

Cassandra Oftentimes you may need to simply organize some of your big data for easy retrieval.

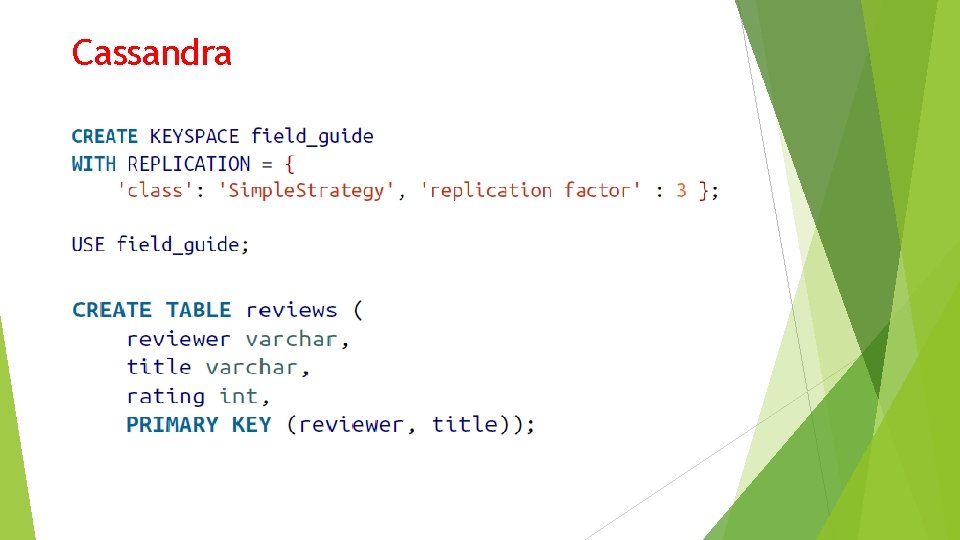

Cassandra You need to create a keyspace. Keyspaces are similar to schemas in traditional relational databases.

Cassandra

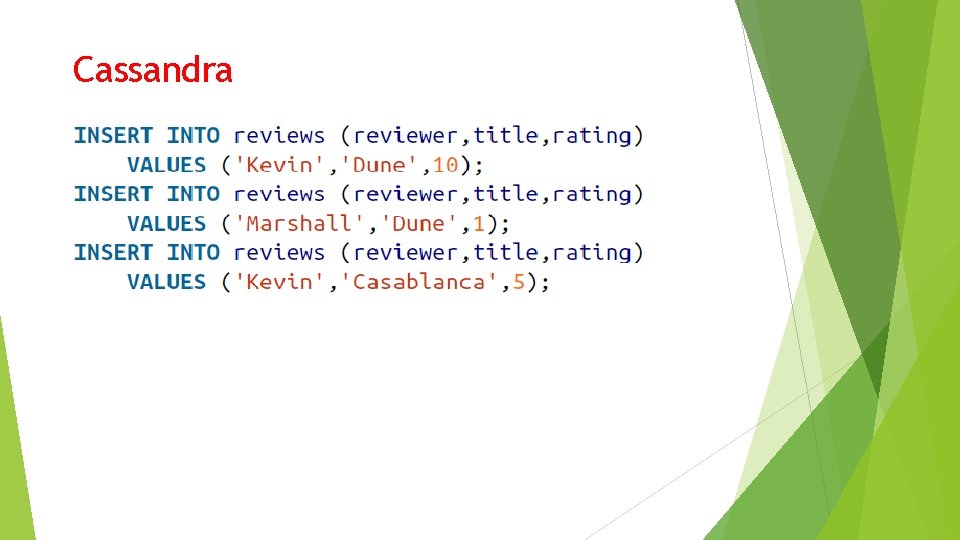

Cassandra

Cassandra

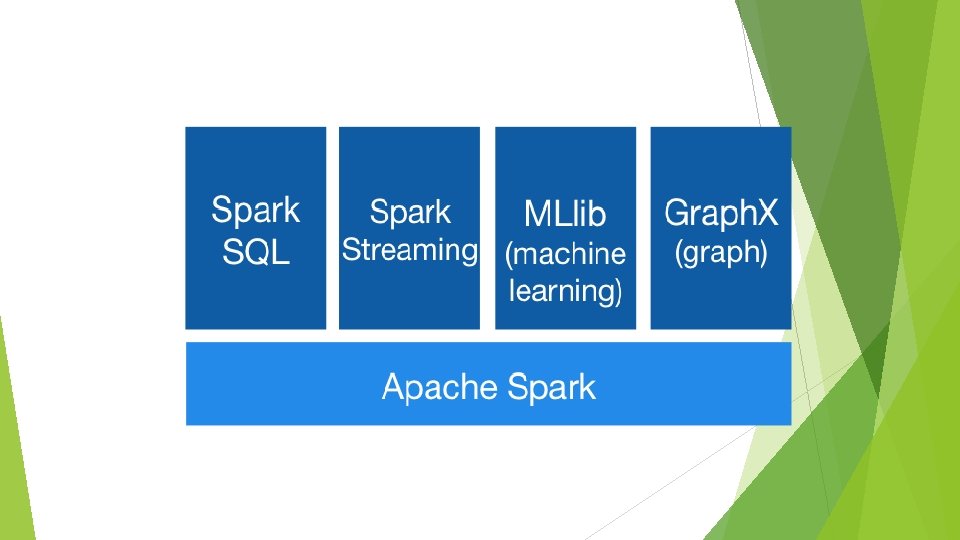

Spark Map. Reduce has proven to be suboptimal for applications like graph analysis that require iterative processing and data sharing. Spark is designed to provide a more flexible model that supports many of the multipass applications that falter in Map. Reduce. API COMPATIBLE

Spark is a fast and general engine for largescale data processing. Spark has an advanced DAG execution engine that supports cyclic data flow and in-memory computing

Spark Unlike Pig and Hive, Spark is not a tool for making Map. Reduce easier to use. It is a complete replacement for Map. Reduce that includes its own work execution engine.

Spark Operates with three core ideas: Resilient Distributed Dataset (RDD) Transformation Action

Spark VS Hadoop Spark is a fast and general processing engine compatible with Hadoop data. It is designed to perform both batch processing (similar to Map. Reduce) and new workloads like streaming, interactive queries, and machine learning.

Spark VS Hadoop It has been used to sort 100 TB of data 3 X faster than Hadoop Map. Reduce on 1/10 th of the machines.

Accumulo Control which users can see which cells in your data. U. S. National Security Agency (NSA) developed Accumulo and then donated the code to the Apache foundation. FULLY INTEGERATED

Accumulo VS HBase Accumulo improves on that model with its focus on security and cell-based access control. With security labels and Boolean expressions.

Blur Tool for indexing and searching text with Hadoop. Because it has Lucene at its core, it has many useful features, including fuzzy matching, wildcard searches, and paged results. It allows you to search through unstructured data in a way that would otherwise be very difficult. FULLY INTEGERATED

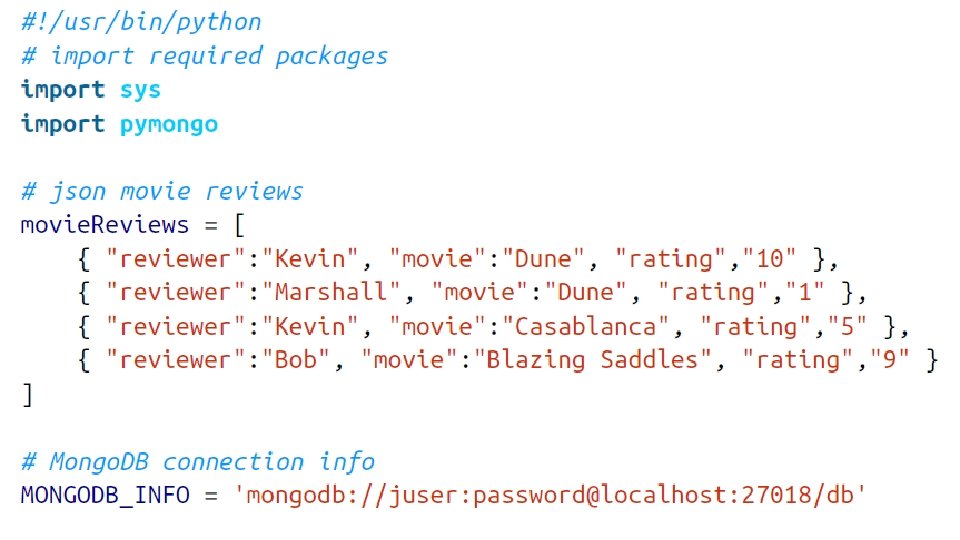

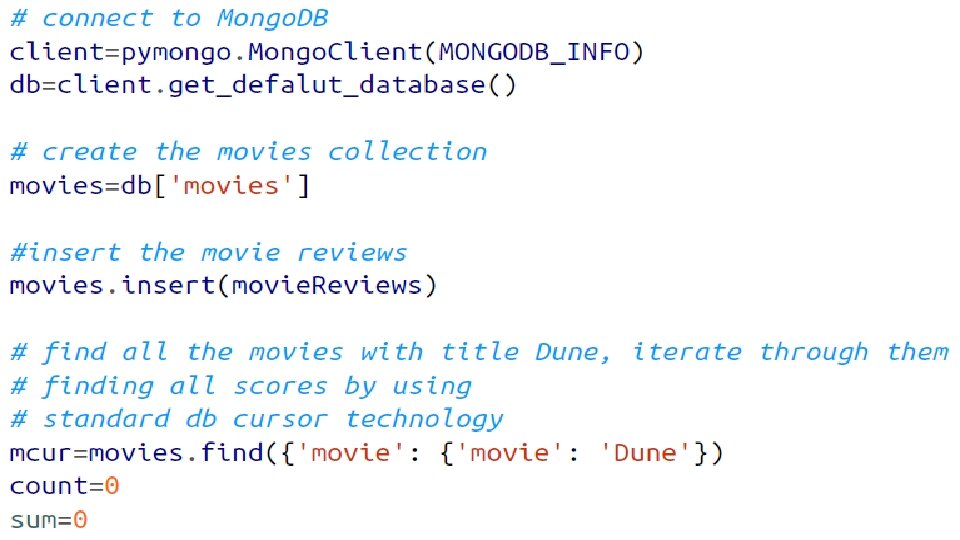

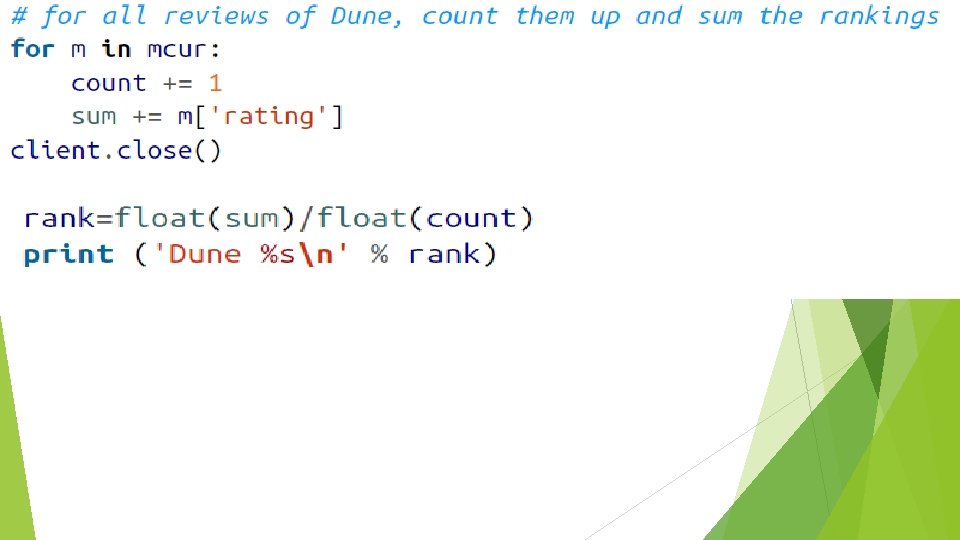

mongo. DB If you have large number of JSON documents in your Hadoop cluster and need some data management tool to effectively use them consider mongo. DB API COMPATIBLE

mongo. DB In relational databases, you have tables and rows. In Mongo. DB, the equivalent of a row is a JSON document, and the analog to a table is a collection, a set of JSON documents.

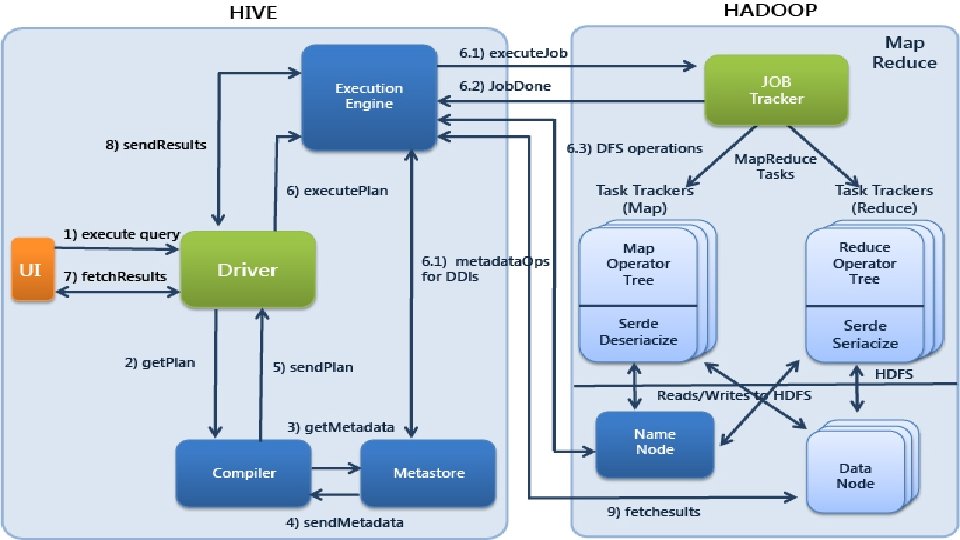

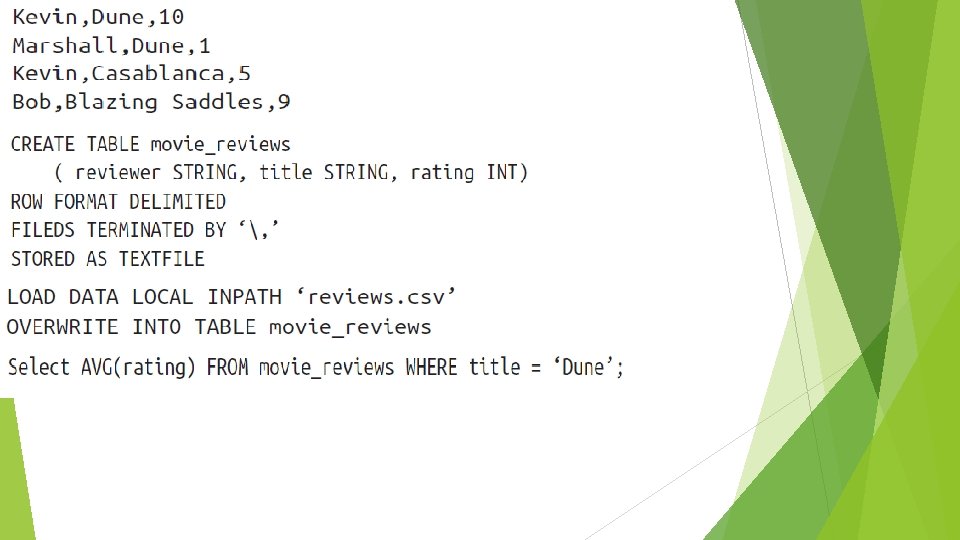

Hive The goal of Hive is to allow SQL access to data in the HDFS. The Apache Hive data-warehouse software facilitates querying and managing large datasets residing in HDFS. Hive defines a simple SQL-like query language, called HQL, that enables users familiar with SQL to query the data. FULLY INTEGERATED

Hive Queries written in HQL are converted into Map. Reduce code by Hive and executed by Hadoop.

Giraph Graph Database Graphs useful in describing relationships between entities FULLY INTEGERATED

Giraph Apache Giraph is derived from a Google project called Pregel and has been used by Facebook to build analyze a graph with a trillion nodes, admittedly on a very large Hadoop cluster. It is built using a technology called Bulk Synchronous Parallel (BSP).

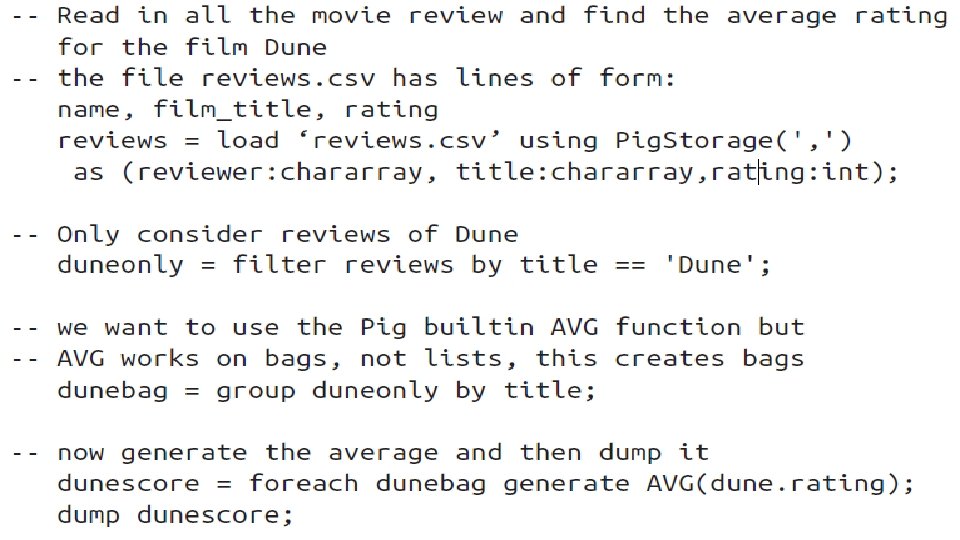

Pig A data flow language in which datasets are read in and transformed to other data sets. Pig is so called because “pigs eat everything, ” meaning that Pig can accommodate many different forms of input, but is frequently used for transforming text datasets. FULLY INTEGERATED

Pig is an admirable extract, transform, and load (ETL) tool. There is a library of shared Pig routines available in the Piggy Bank.

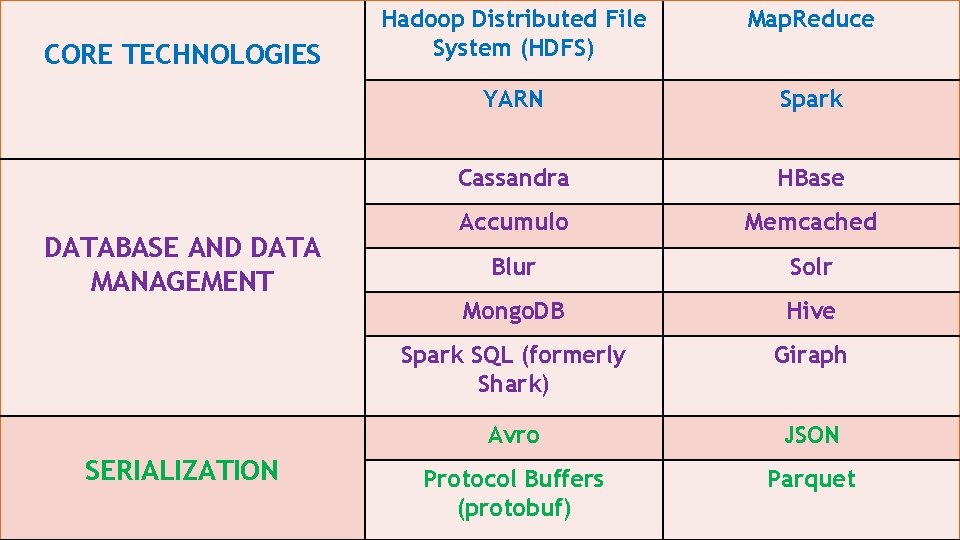

CORE TECHNOLOGIES DATABASE AND DATA MANAGEMENT SERIALIZATION Hadoop Distributed File System (HDFS) Map. Reduce YARN Spark Cassandra HBase Accumulo Memcached Blur Solr Mongo. DB Hive Spark SQL (formerly Shark) Giraph Avro JSON Protocol Buffers (protobuf) Parquet

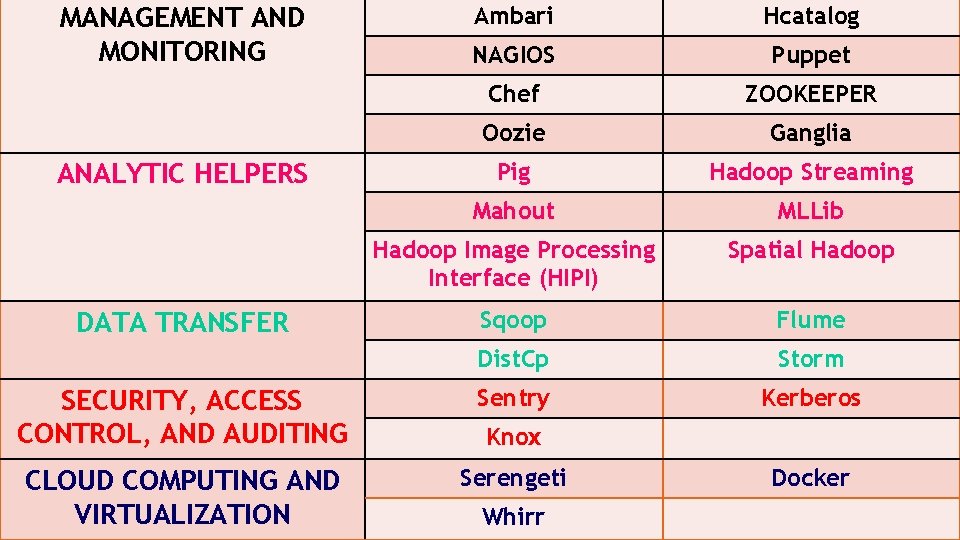

Ambari Hcatalog NAGIOS Puppet Chef ZOOKEEPER Oozie Ganglia Pig Hadoop Streaming Mahout MLLib Hadoop Image Processing Interface (HIPI) Spatial Hadoop Sqoop Flume Dist. Cp Storm SECURITY, ACCESS CONTROL, AND AUDITING Sentry Kerberos CLOUD COMPUTING AND VIRTUALIZATION Serengeti MANAGEMENT AND MONITORING ANALYTIC HELPERS DATA TRANSFER Knox Whirr Docker

- Slides: 40