Big data, data mining, and discrimination: a perspective

Big data, data mining, and discrimination – a perspective (not only) from Computer Science
Bettina Berendt, KU Leuven, DTAI Research group
Publications and materials on privacy, privacy education, discrimination, and ethics at https://people.cs.kuleuven.be/~bettina.berendt/
This presentation’s core: (Berendt & Preibusch, 2014, 2017)
See end of this slide set for references and URLs

Why this topic in PaBD?
• Why do people worry about the disclosure of certain “private” information?
• Can the processing of Big personal data, including “public” information, have consequences beyond privacy violations?
• See also Laurens Naudts’ lecture

Hard questions to start with
• What is your society’s worst discrimination problem at the moment?
• What has been your own worst discrimination experience?

Background: Data mining Discrimination

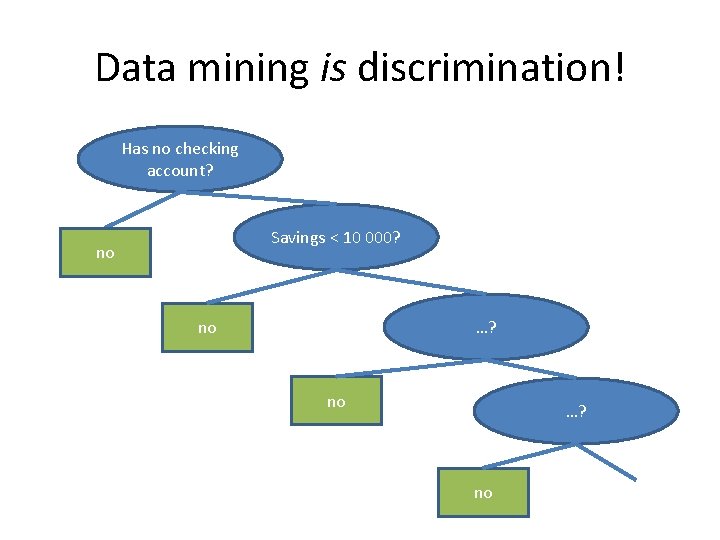

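Data mining is discrimination!
[Figure: decision tree with the tests “Has no checking account?”, “Savings < 10 000?”, “…?”, each with a “no” branch]

Legal view: Differentiation
[Figure: the same decision tree with the tests “Has no checking account?”, “Savings < 10 000?”, “…?”, each with a “no” branch]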

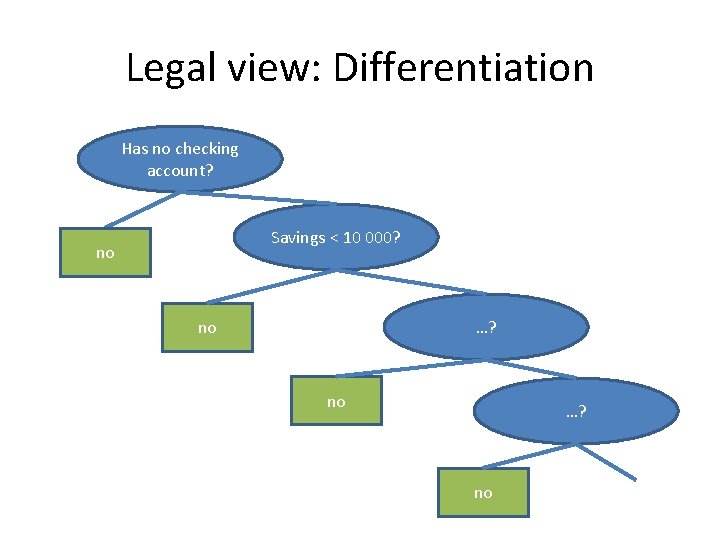

Unlawful direct discrimination
[Figure: decision tree with the tests “Has no checking account?”, “Savings < 10 000?”, “Male?”, “…?”, each with a “no” branch]

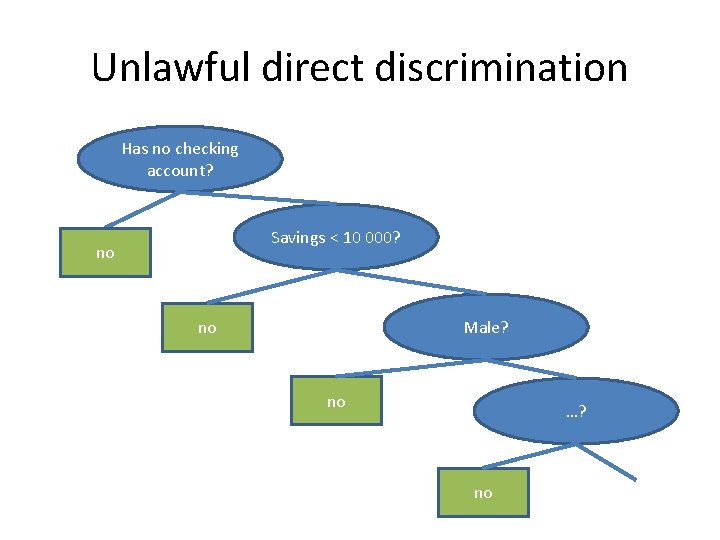

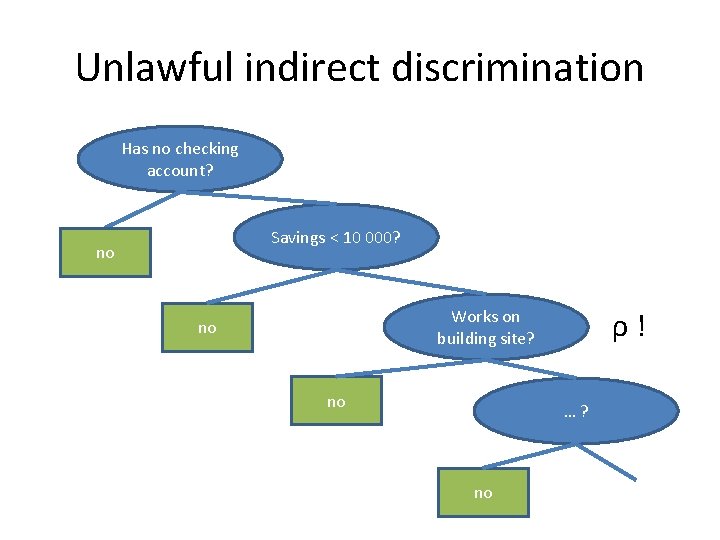

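Unlawful indirect discrimination
[Figure: decision tree with the tests “Has no checking account?”, “Savings < 10 000?”, “Works on building site?”, “…?”, each with a “no” branch; the test “Works on building site?” carries the annotation “ρ!”]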

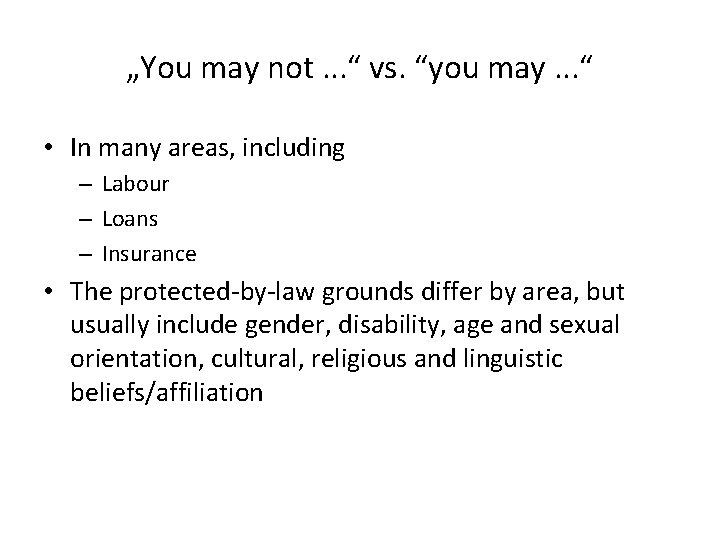

“You may not ...” vs. “you may ...”
• In many areas, including
– Labour
– Loans
– Insurance
• The protected-by-law grounds differ by area, but usually include gender, disability, age and sexual orientation, cultural, religious and linguistic beliefs/affiliation

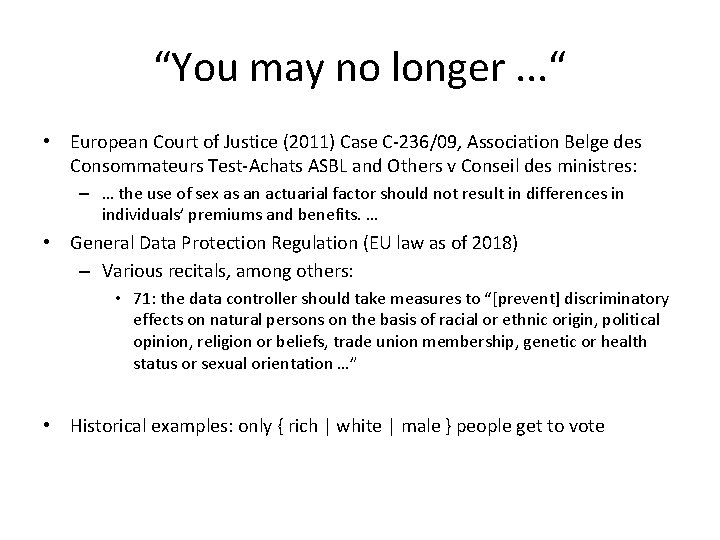

“You may no longer ...”
• European Court of Justice (2011) Case C-236/09, Association Belge des Consommateurs Test-Achats ASBL and Others v Conseil des ministres:
– … the use of sex as an actuarial factor should not result in differences in individuals’ premiums and benefits. …
• General Data Protection Regulation (EU law as of 2018)
– Various recitals, among others:
• 71: the data controller should take measures to “[prevent] discriminatory effects on natural persons on the basis of racial or ethnic origin, political opinion, religion or beliefs, trade union membership, genetic or health status or sexual orientation …”
• Historical examples: only { rich | white | male } people get to vote

Society is discrimination!

Approach: Discrimination-aware data mining (Pedreschi, Ruggieri, & Turini, 2008; & many others since)

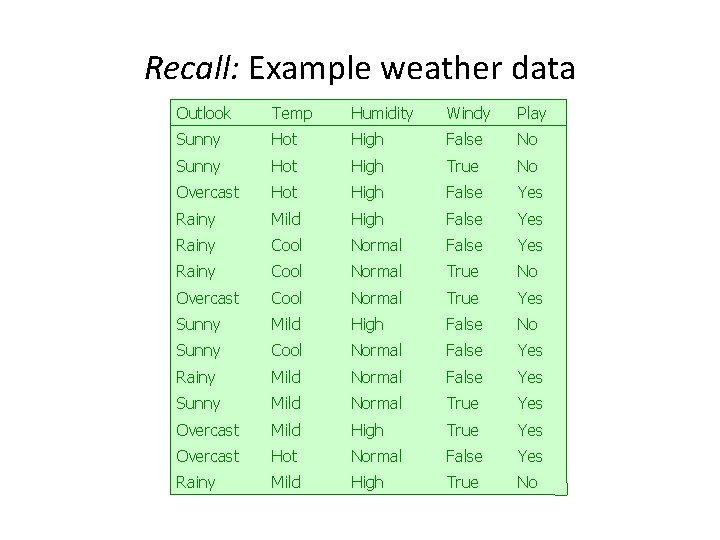

Recall: Example weather data

| Outlook | Temp | Humidity | Windy | Play |
|---|---|---|---|---|
| Sunny | Hot | High | False | No |
| Sunny | Hot | High | True | No |
| Overcast | Hot | High | False | Yes |
| Rainy | Mild | High | False | Yes |
| Rainy | Cool | Normal | True | No |
| Overcast | Cool | Normal | True | Yes |
| Sunny | Mild | High | False | No |
| Sunny | Cool | Normal | False | Yes |
| Rainy | Mild | Normal | False | Yes |
| Sunny | Mild | Normal | True | Yes |
| Overcast | Mild | High | True | Yes |
| Overcast | Hot | Normal | False | Yes |
| Rainy | Mild | High | True | No |

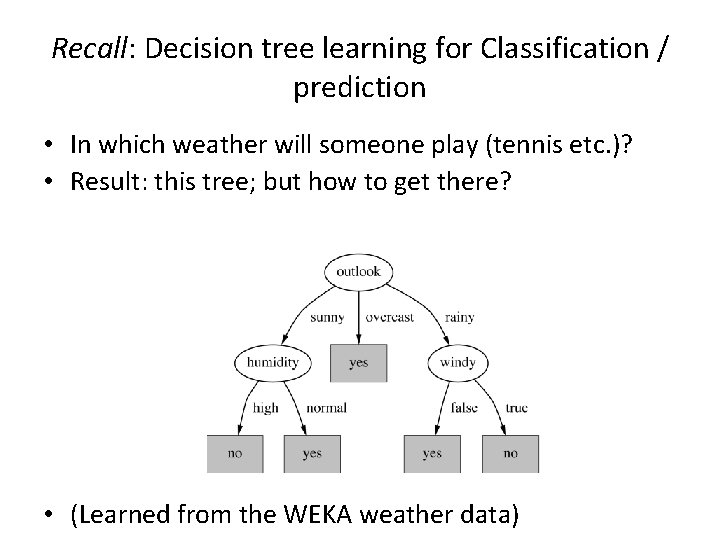

Recall: Decision tree learning for Classification / prediction
• In which weather will someone play (tennis etc.)?
• Result: this tree; but how to get there?
• (Learned from the WEKA weather data)

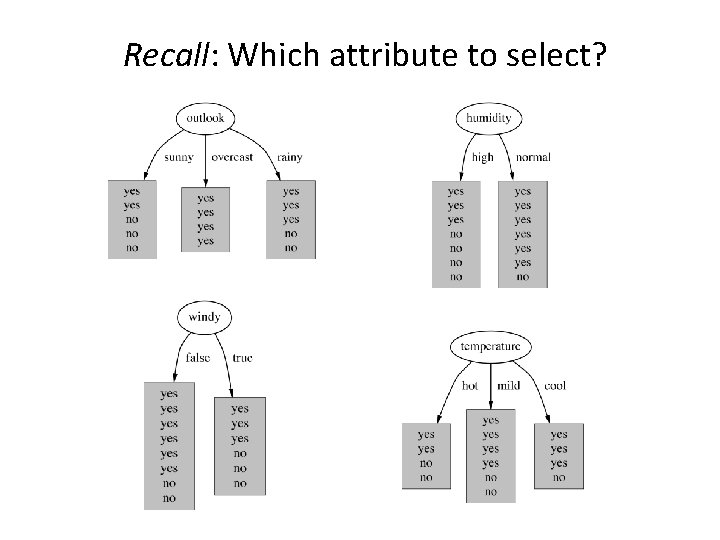

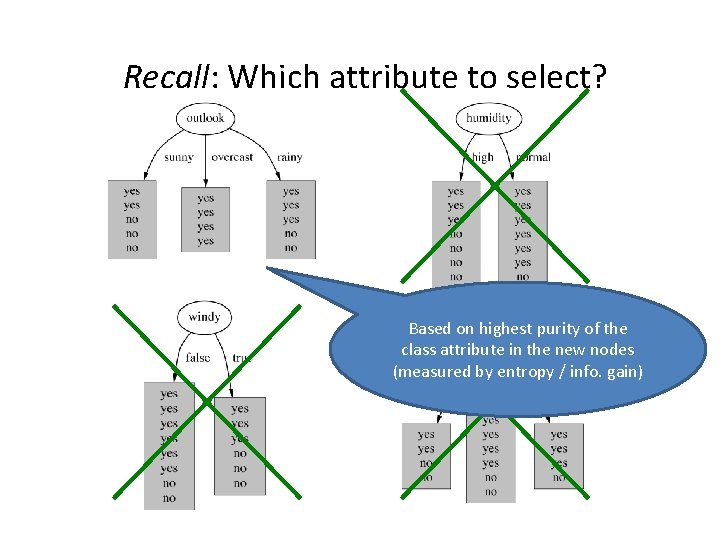

Recall: Which attribute to select?
Based on highest purity of the class attribute in the new nodes (measured by entropy / info. gain)
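The selection rule can be made concrete with a small calculation. The following is a minimal sketch, assuming the 14-row version of the weather data (the version that also appears with a Gender column in these slides) and the usual entropy-based information gain; the function names `entropy` and `info_gain` are illustrative, not taken from WEKA.

```python
from collections import Counter
from math import log2

# Weather data: (Outlook, Temp, Humidity, Windy, Play)
ROWS = [
    ("Sunny", "Hot", "High", "False", "No"),
    ("Sunny", "Hot", "High", "True", "No"),
    ("Overcast", "Hot", "High", "False", "Yes"),
    ("Rainy", "Mild", "High", "False", "Yes"),
    ("Rainy", "Cool", "Normal", "False", "Yes"),
    ("Rainy", "Cool", "Normal", "True", "No"),
    ("Overcast", "Cool", "Normal", "True", "Yes"),
    ("Sunny", "Mild", "High", "False", "No"),
    ("Sunny", "Cool", "Normal", "False", "Yes"),
    ("Rainy", "Mild", "Normal", "False", "Yes"),
    ("Sunny", "Mild", "Normal", "True", "Yes"),
    ("Overcast", "Mild", "High", "True", "Yes"),
    ("Overcast", "Hot", "Normal", "False", "Yes"),
    ("Rainy", "Mild", "High", "True", "No"),
]
ATTRS = ["Outlook", "Temp", "Humidity", "Windy"]


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum(c / n * log2(c / n) for c in Counter(labels).values())


def info_gain(rows, col, target=-1):
    """Entropy of the class minus the weighted entropy after splitting on col."""
    base = entropy([r[target] for r in rows])
    remainder = 0.0
    for value in set(r[col] for r in rows):
        subset = [r[target] for r in rows if r[col] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return base - remainder


gains = {name: info_gain(ROWS, i) for i, name in enumerate(ATTRS)}
best = max(gains, key=gains.get)  # attribute chosen for the root node
print(gains, best)
```

On this data the split on Outlook yields the purest child nodes, so it is selected as the root test.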

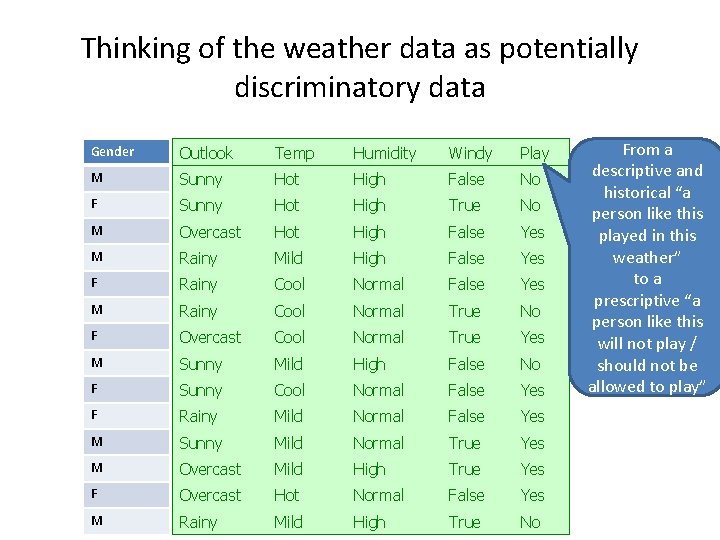

Thinking of the weather data as potentially discriminatory data

| Gender | Outlook | Temp | Humidity | Windy | Play |
|---|---|---|---|---|---|
| M | Sunny | Hot | High | False | No |
| F | Sunny | Hot | High | True | No |
| M | Overcast | Hot | High | False | Yes |
| M | Rainy | Mild | High | False | Yes |
| F | Rainy | Cool | Normal | False | Yes |
| M | Rainy | Cool | Normal | True | No |
| F | Overcast | Cool | Normal | True | Yes |
| M | Sunny | Mild | High | False | No |
| F | Sunny | Cool | Normal | False | Yes |
| F | Rainy | Mild | Normal | False | Yes |
| M | Sunny | Mild | Normal | True | Yes |
| M | Overcast | Mild | High | True | Yes |
| F | Overcast | Hot | Normal | False | Yes |
| M | Rainy | Mild | High | True | No |

From a descriptive and historical “a person like this played in this weather” to a prescriptive “a person like this will not play / should not be allowed to play”
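To see what the Gender column adds, one can compare how often each group “played”. This is a minimal sketch using only the Gender and Play columns of the table; the difference in positive rates is one simple descriptive measure, chosen here for illustration, and the slides do not prescribe it.

```python
# (Gender, Play) pairs, in the order of the table rows
PAIRS = [
    ("M", "No"), ("F", "No"), ("M", "Yes"), ("M", "Yes"), ("F", "Yes"),
    ("M", "No"), ("F", "Yes"), ("M", "No"), ("F", "Yes"), ("F", "Yes"),
    ("M", "Yes"), ("M", "Yes"), ("F", "Yes"), ("M", "No"),
]


def positive_rate(pairs, group):
    """Fraction of records in the given group with Play == 'Yes'."""
    outcomes = [play for gender, play in pairs if gender == group]
    return sum(o == "Yes" for o in outcomes) / len(outcomes)


rate_m = positive_rate(PAIRS, "M")
rate_f = positive_rate(PAIRS, "F")
rate_difference = rate_f - rate_m  # > 0: women played more often than men
print(rate_m, rate_f, rate_difference)
```

A classifier trained on such data can turn this historical difference into a rule about who “will” or “should” play.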

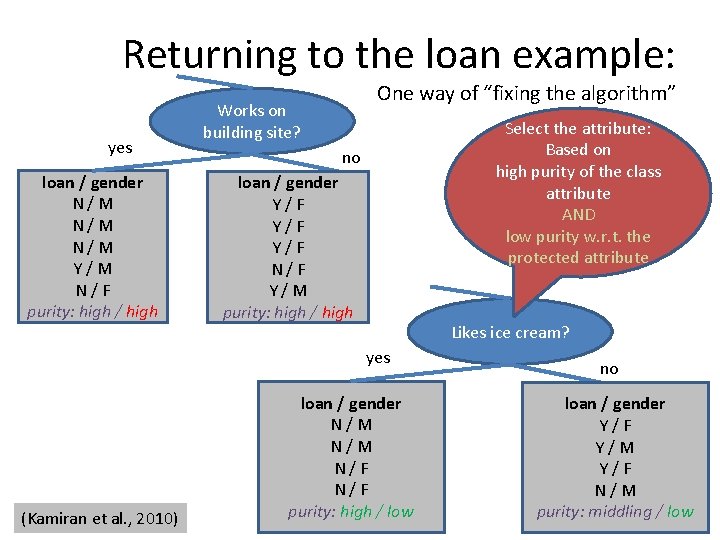

Returning to the loan example: One way of “fixing the algorithm”
Select the attribute: Based on high purity of the class attribute AND low purity w.r.t. the protected attribute (Kamiran et al., 2010)
Works on building site?
– yes: loan / gender N/M N/M Y/M N/F; purity: high / high
– no: loan / gender Y/F Y/F N/F Y/M; purity: high / high
Likes ice cream?
– yes: loan / gender N/M N/F; purity: high / low
– no: loan / gender Y/F Y/M Y/F N/M; purity: middling / low
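The idea of preferring splits that are informative about the class but uninformative about the protected attribute can be sketched as follows. This is a hedged illustration, not the implementation of Kamiran et al. (2010): the score used here is the difference between the information gain on the class and the information gain on gender, the building-site values follow the slide, and the ice-cream values for the eight records are hypothetical.

```python
from collections import Counter
from math import log2

# (loan, gender, works_on_building_site, likes_ice_cream)
# The likes_ice_cream column is a hypothetical assignment for illustration.
RECORDS = [
    ("N", "M", True, True),
    ("N", "M", True, False),
    ("Y", "M", True, True),
    ("N", "F", True, True),
    ("Y", "F", False, False),
    ("Y", "F", False, False),
    ("N", "F", False, True),
    ("Y", "M", False, False),
]
LOAN, GENDER = 0, 1
SPLITS = {"works_on_building_site": 2, "likes_ice_cream": 3}


def entropy(labels):
    n = len(labels)
    return -sum(c / n * log2(c / n) for c in Counter(labels).values())


def gain(records, split_col, target_col):
    """Information gain of split_col with respect to target_col."""
    base = entropy([r[target_col] for r in records])
    remainder = 0.0
    for value in set(r[split_col] for r in records):
        subset = [r[target_col] for r in records if r[split_col] == value]
        remainder += len(subset) / len(records) * entropy(subset)
    return base - remainder


# Reward purity w.r.t. the class (loan), penalise purity w.r.t. gender.
scores = {
    name: gain(RECORDS, col, LOAN) - gain(RECORDS, col, GENDER)
    for name, col in SPLITS.items()
}
best = max(scores, key=scores.get)
print(scores, best)
```

In this toy data both splits say equally much about the loan decision, but only the building-site split also separates the genders, so the combined score prefers the ice-cream split.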

Problem solved?

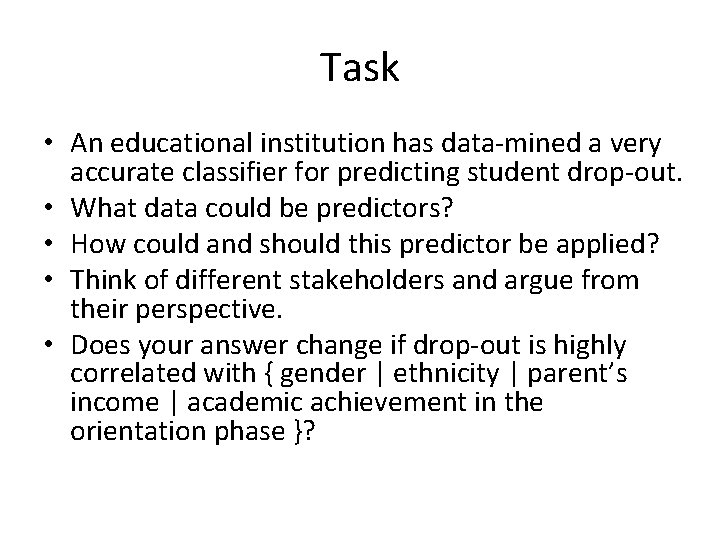

Task
• An educational institution has data-mined a very accurate classifier for predicting student drop-out.
• What data could be predictors?
• How could and should this predictor be applied?
• Think of different stakeholders and argue from their perspective.
• Does your answer change if drop-out is highly correlated with { gender | ethnicity | parent’s income | academic achievement in the orientation phase }?

More thoughts from my side

Conceptual challenges The notion of discrimination

Challenge 1: Intersectionality

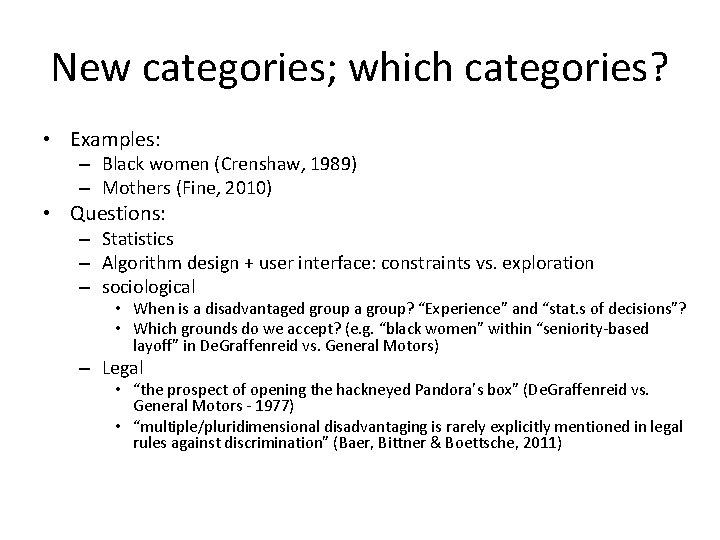

New categories; which categories?
• Examples:
– Black women (Crenshaw, 1989)
– Mothers (Fine, 2010)
• Questions:
– Statistics
– Algorithm design + user interface: constraints vs. exploration
– Sociological
• When is a disadvantaged group a group? “Experience” and “statistics of decisions”?
• Which grounds do we accept? (e.g. “black women” within “seniority-based layoff” in DeGraffenreid vs. General Motors)
– Legal
• “the prospect of opening the hackneyed Pandora’s box” (DeGraffenreid vs. General Motors, 1977)
• “multiple/pluridimensional disadvantaging is rarely explicitly mentioned in legal rules against discrimination” (Baer, Bittner & Göttsche, 2011)

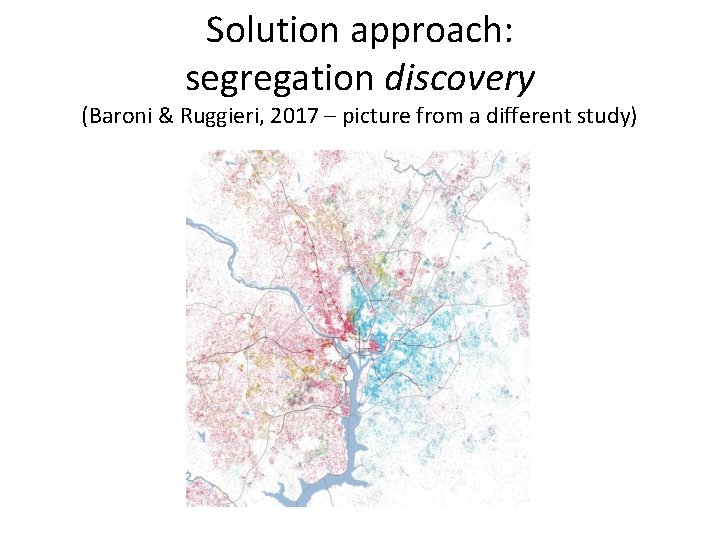

Solution approach: segregation discovery (Baroni & Ruggieri, 2017 – picture from a different study)

Challenge 2: Discrimination =?= not distinguishing by a given attribute (with some notes on possibly fundamental limits on formalization)

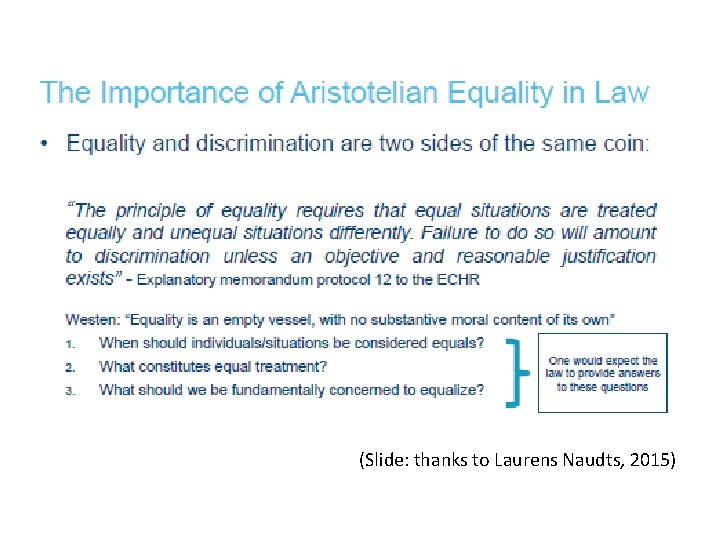

(Slide: thanks to Laurens Naudts, 2015)

Equal treatment = non-discrimination?
“I treat all my employees the same – They enter the office by the stairs.”

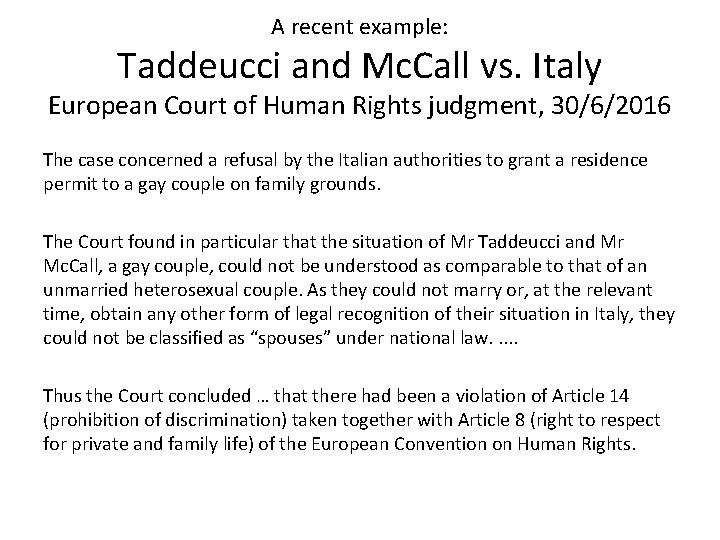

A recent example: Taddeucci and McCall vs. Italy
European Court of Human Rights judgment, 30/6/2016
The case concerned a refusal by the Italian authorities to grant a residence permit to a gay couple on family grounds. The Court found in particular that the situation of Mr Taddeucci and Mr McCall, a gay couple, could not be understood as comparable to that of an unmarried heterosexual couple. As they could not marry or, at the relevant time, obtain any other form of legal recognition of their situation in Italy, they could not be classified as “spouses” under national law. ... Thus the Court concluded … that there had been a violation of Article 14 (prohibition of discrimination) taken together with Article 8 (right to respect for private and family life) of the European Convention on Human Rights.

Solution approaches
• Similarity measures
– Dwork et al. (2012)
– Situation testing (Luong et al., 2011)
• Counterfactual reasoning
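The similarity-based idea can be illustrated with a k-nearest-neighbour form of situation testing: for one person from the protected group, compare how similar people inside and outside that group were treated. This is a rough sketch in the spirit of Luong et al. (2011), not their implementation; the applicants, the two features, the unscaled Euclidean distance and k = 2 are all assumptions made for illustration.

```python
from math import dist

# Hypothetical applicants: ((income in k$, years employed), group, decision)
PEOPLE = [
    ((30, 2), "F", "deny"),
    ((32, 3), "F", "deny"),
    ((31, 2), "F", "deny"),
    ((60, 9), "F", "grant"),
    ((30, 3), "M", "grant"),
    ((33, 2), "M", "grant"),
    ((29, 2), "M", "deny"),
    ((62, 8), "M", "grant"),
]


def denial_rate_among_neighbours(person, group, k):
    """Share of denials among the k members of `group` most similar to person."""
    features = person[0]
    candidates = [p for p in PEOPLE if p[1] == group and p is not person]
    # Unscaled Euclidean distance; a real study would normalise the features.
    nearest = sorted(candidates, key=lambda p: dist(features, p[0]))[:k]
    return sum(p[2] == "deny" for p in nearest) / len(nearest)


def situation_test(person, protected="F", unprotected="M", k=2):
    """Difference in denial rates between similar protected and unprotected people."""
    return (denial_rate_among_neighbours(person, protected, k)
            - denial_rate_among_neighbours(person, unprotected, k))


diff = situation_test(PEOPLE[0])
print(diff)  # a large positive value suggests the case deserves a closer look
```

The choice of distance measure encodes what counts as “similar”, which is where the conceptual difficulty of this challenge reappears.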

Challenge 3: Representation (1), or: Categories can serve to detect, but also to perpetuate discrimination

A computer scientist’s view of people

A fundamental question regarding categories
• Are categories of humans necessary to identify discrimination?
– See also Challenge 3 below, on Why not just remove category information in data
• Or does their use (also in positive senses, cf. identity politics) serve to perpetuate structures of inequality, segregation, discrimination …?
– Categories of humans can become dis-used and “disappear”, e.g. Estates of the realm, religion (in some countries)
– Or does their use create discrimination problems (and worse)? – see http://genocidewatch.org/genocide/tenstagesofgenocide.html and its criticisms
• This has been debated for a long time.
• There is no easy answer.

As computer scientists …
• … we (believe we can) define anything
• We then believe this is the truth
• We then believe we can “solve” it
Solutionism (Morozov)

Identity politics revisited – do the categories themselves induce biases?
• “Have you ever noticed that […] we talk incessantly about <Anerkennung> [recognition, appreciation, acknowledgement, acceptance, respect] and diversity, but hardly ever any more about social inequality?
• The obsession with which, in rich societies, for example the <Anerkennung> of even the most peculiar sexual orientation is struggled for, is symptomatic of a setting in which one should not any more talk about social, i.e. changeable inequality.
• This is not any more about the abolition of inequality, but only about the <Anerkennung> of diversity, and all of this in a morally hypercharged discourse.” (Welzer, 2016)
• What do you believe are the two most self-reported grounds of discrimination in Germany in 2016? (as reported in Berghahn et al., 2016)

Challenge 3: About vicious cycles (and virtuous ones)

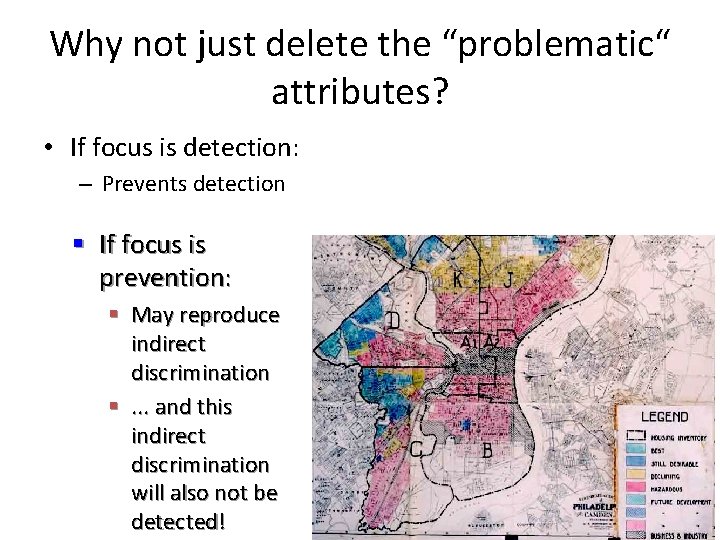

Why not just delete the “problematic” attributes?
• If focus is detection:
– Prevents detection
• If focus is prevention:
– May reproduce indirect discrimination
– ... and this indirect discrimination will also not be detected!
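Both points can be seen in a toy example. The sketch below assumes a hypothetical loan history in which “works on building site” is strongly correlated with gender; the “model” is a deliberately simple majority vote per attribute value, trained with the gender column removed.

```python
from collections import Counter, defaultdict

# Hypothetical history: (gender, works_on_building_site, loan_granted)
HISTORY = (
    [("M", True, False)] * 6 + [("M", True, True)] * 2 +
    [("M", False, True)] * 2 +
    [("F", False, True)] * 7 + [("F", False, False)] * 1 +
    [("F", True, False)] * 2
)


def train_without_gender(history):
    """Majority decision per building-site value; gender is never looked at."""
    votes = defaultdict(Counter)
    for _gender, site, granted in history:
        votes[site][granted] += 1
    return {site: c.most_common(1)[0][0] for site, c in votes.items()}


def grant_rate(history, model, gender):
    """Share of predicted grants for one gender (needs the gender column!)."""
    preds = [model[site] for g, site, _ in history if g == gender]
    return sum(preds) / len(preds)


model = train_without_gender(HISTORY)
rate_m = grant_rate(HISTORY, model, "M")
rate_f = grant_rate(HISTORY, model, "F")
print(model, rate_m, rate_f)
```

The model never sees gender, yet its decisions differ sharply by gender through the correlated attribute, and measuring that difference is only possible because the gender column was kept for the audit.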

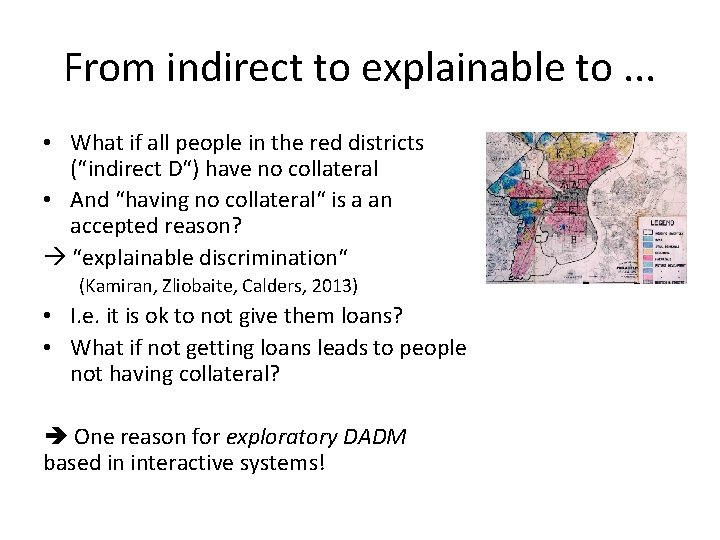

From indirect to explainable to ...
• What if all people in the red districts (“indirect D”) have no collateral
• And “having no collateral” is an accepted reason? “explainable discrimination” (Kamiran, Zliobaite, Calders, 2013)
• I.e. it is ok to not give them loans?
• What if not getting loans leads to people not having collateral?
One reason for exploratory DADM based on interactive systems!

Dynamics: Feedback loops

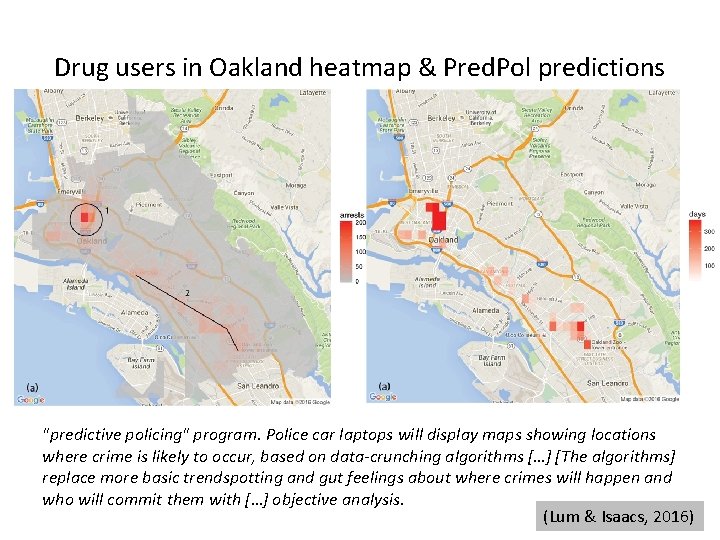

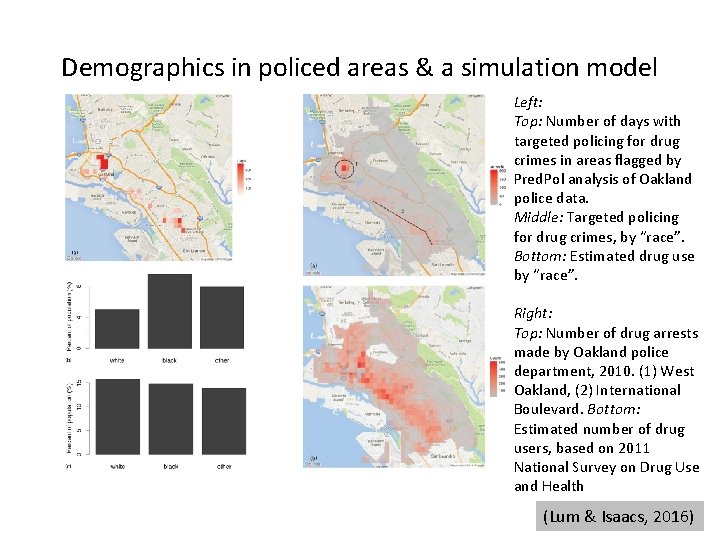

Drug users in Oakland heatmap & PredPol predictions
"predictive policing" program. Police car laptops will display maps showing locations where crime is likely to occur, based on data-crunching algorithms […] [The algorithms] replace more basic trendspotting and gut feelings about where crimes will happen and who will commit them with […] objective analysis.
(Lum & Isaac, 2016)

Demographics in policed areas & a simulation model
Left:
– Top: Number of days with targeted policing for drug crimes in areas flagged by PredPol analysis of Oakland police data.
– Middle: Targeted policing for drug crimes, by “race”.
– Bottom: Estimated drug use by “race”.
Right:
– Top: Number of drug arrests made by Oakland police department, 2010. (1) West Oakland, (2) International Boulevard.
– Bottom: Estimated number of drug users, based on 2011 National Survey on Drug Use and Health
(Lum & Isaac, 2016)
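How such a loop can run away is easy to reproduce in a toy model. The sketch below is not the simulation of Lum & Isaac or the model of Ensign et al.; it assumes two areas with the same true number of incidents per day, a patrol that always goes where more incidents have been recorded so far, and incidents that are only recorded where the patrol is.

```python
def simulate(days, true_rate=(5, 5), recorded=(11, 10)):
    """Greedy patrol allocation on recorded (not true) incident counts."""
    recorded = list(recorded)
    patrol_days = [0, 0]
    for _ in range(days):
        # Send the patrol to the area with more recorded incidents so far.
        target = 0 if recorded[0] >= recorded[1] else 1
        patrol_days[target] += 1
        # Incidents are only observed where the patrol is, although both
        # areas have the same true number of incidents per day.
        recorded[target] += true_rate[target]
    return patrol_days, recorded


patrol_days, recorded = simulate(days=30)
print(patrol_days, recorded)
```

A one-incident difference in the initial records is enough for the first area to receive every patrol, and the growing record then appears to confirm that choice.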

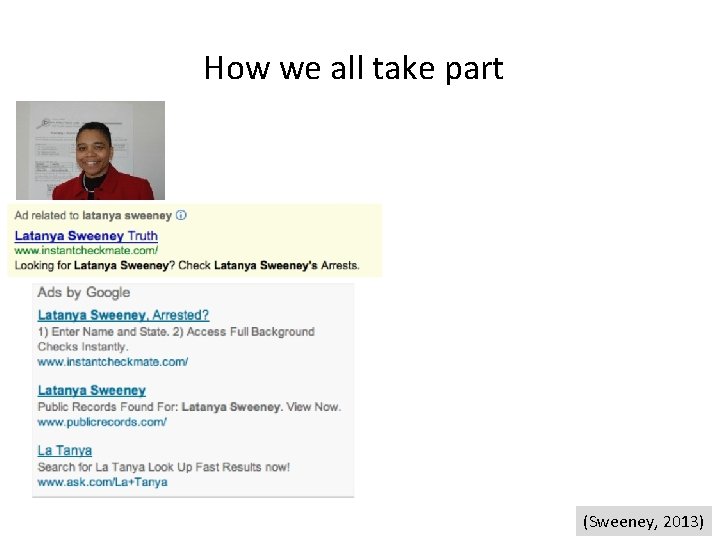

How we all take part (Sweeney, 2013)

A well-known phenomenon?!

Solution approach
• An approach based on reinforcement learning
• Ensign et al. 2017: Runaway feedback loops in predictive policing (currently on arxiv, to appear in FAT* 2018)
• “In order to act near optimally, the agent must reason about the long term consequences of its actions (i.e., maximize future income), although the immediate reward associated with this might be negative.” (https://en.wikipedia.org/wiki/Reinforcement_learning)

The human in the loops challenge(s)
Algorithms don’t discriminate, people do.

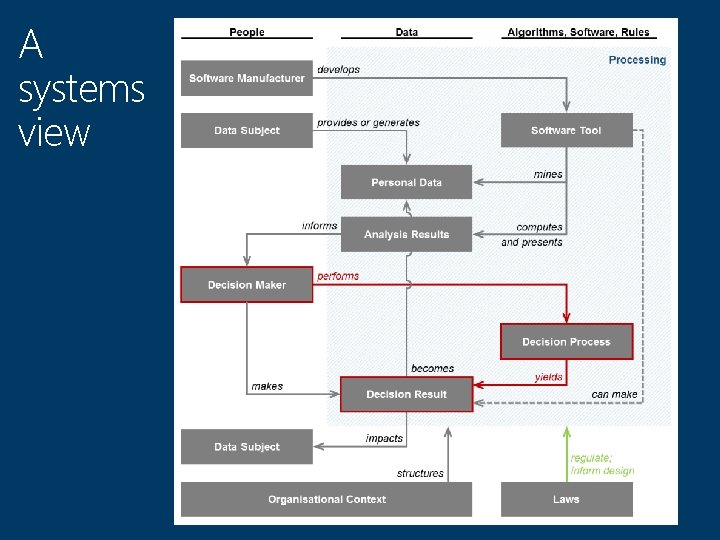

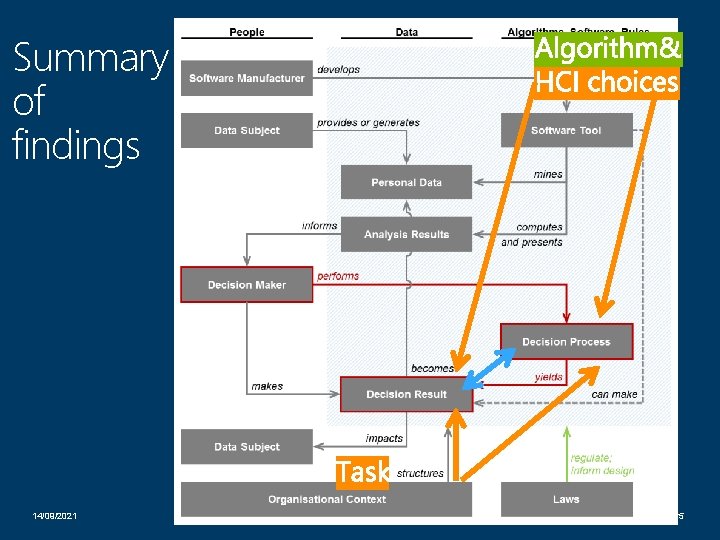

Decisions are generally made/helped by algorithms in a decision context
• Human
• Organisational
• Wider systems
• Decisions → data → decisions → …
• Legal notion of “safeguards”: human involvement

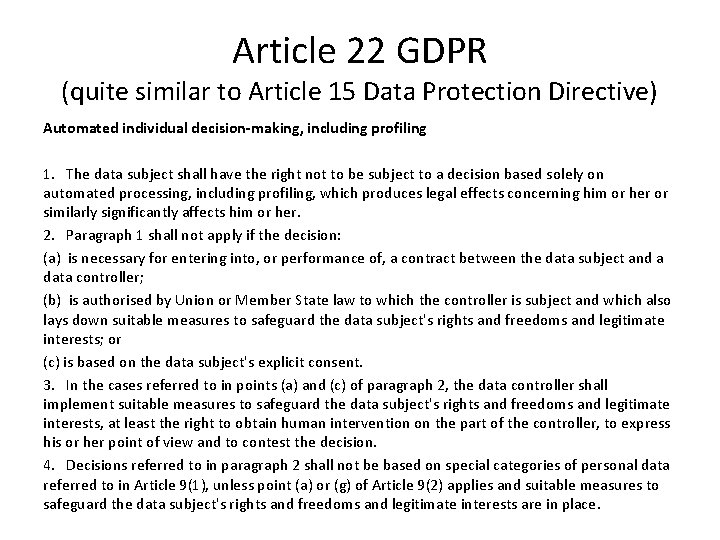

Article 22 GDPR (quite similar to Article 15 Data Protection Directive)
Automated individual decision-making, including profiling
1. The data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her.
2. Paragraph 1 shall not apply if the decision:
(a) is necessary for entering into, or performance of, a contract between the data subject and a data controller;
(b) is authorised by Union or Member State law to which the controller is subject and which also lays down suitable measures to safeguard the data subject's rights and freedoms and legitimate interests; or
(c) is based on the data subject's explicit consent.
3. In the cases referred to in points (a) and (c) of paragraph 2, the data controller shall implement suitable measures to safeguard the data subject's rights and freedoms and legitimate interests, at least the right to obtain human intervention on the part of the controller, to express his or her point of view and to contest the decision.
4. Decisions referred to in paragraph 2 shall not be based on special categories of personal data referred to in Article 9(1), unless point (a) or (g) of Article 9(2) applies and suitable measures to safeguard the data subject's rights and freedoms and legitimate interests are in place.

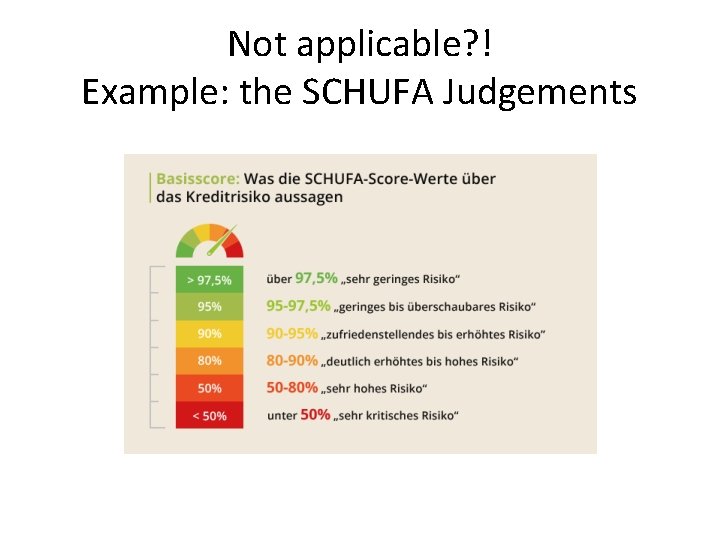

Not applicable?! Example: the SCHUFA Judgements

An experimental user study (Berendt & Preibusch, 2014, 2017)
14/09/2021

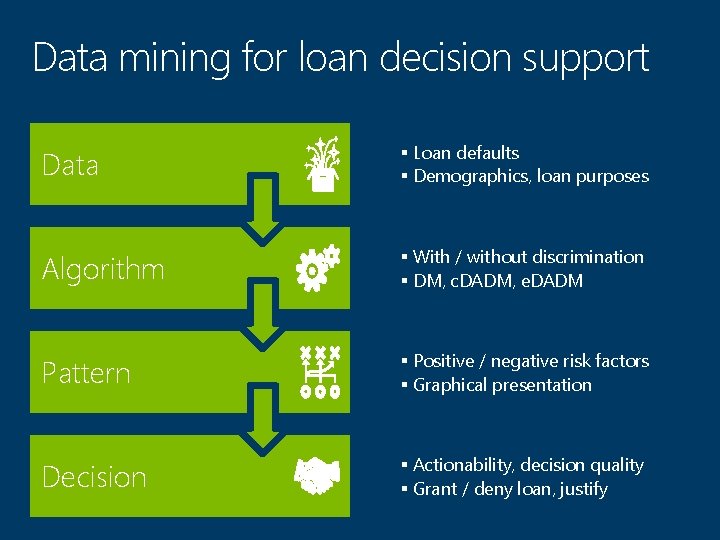

Data mining for loan decision support
Data
§ Loan defaults
§ Demographics, loan purposes
Algorithm
§ With / without discrimination
§ DM, cDADM, eDADM
Pattern
§ Positive / negative risk factors
§ Graphical presentation
Decision
§ Actionability, decision quality
§ Grant / deny loan, justify

A systems view

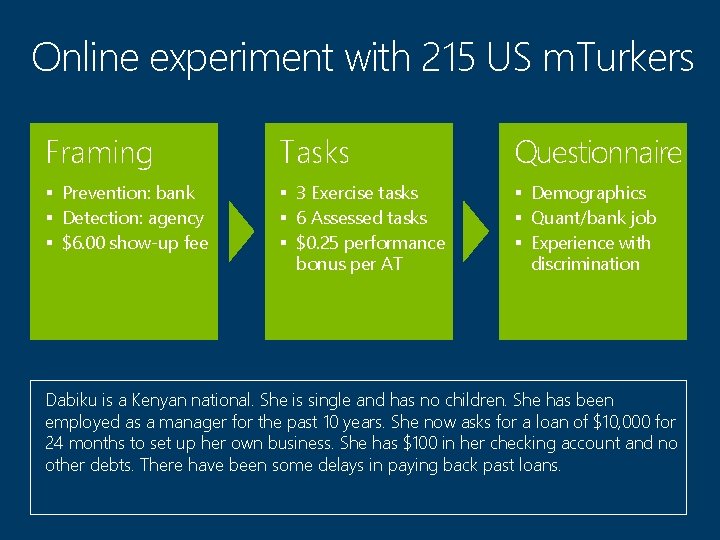

Online experiment with 215 US mTurkers
Framing
§ Prevention: bank
§ Detection: agency
Tasks
§ $6.00 show-up fee
§ 3 Exercise tasks
§ 6 Assessed tasks
§ $0.25 performance bonus per AT
Questionnaire
§ Demographics
§ Quant/bank job
§ Experience with discrimination
Example vignette: Dabiku is a Kenyan national. She is single and has no children. She has been employed as a manager for the past 10 years. She now asks for a loan of $10,000 for 24 months to set up her own business. She has $100 in her checking account and no other debts. There have been some delays in paying back past loans.

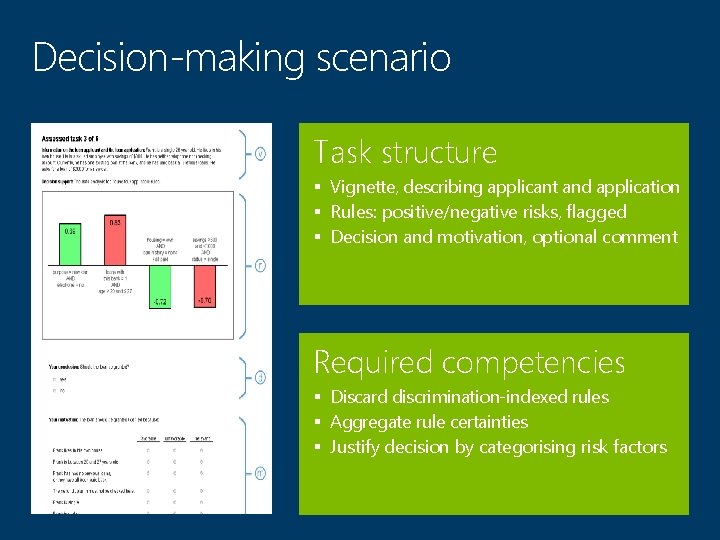

Decision-making scenario
Task structure
§ Vignette, describing applicant and application
§ Rules: positive/negative risks, flagged
§ Decision and motivation, optional comment
Required competencies
§ Discard discrimination-indexed rules
§ Aggregate rule certainties
§ Justify decision by categorising risk factors

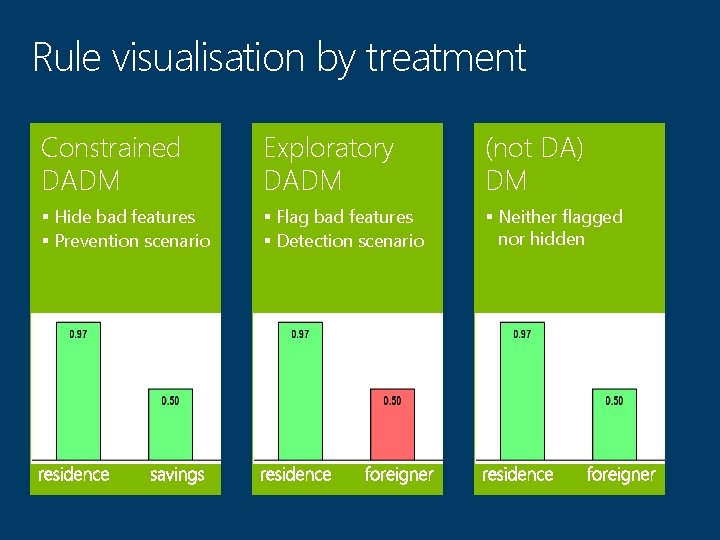

Rule visualisation by treatment
Constrained DADM
§ Hide bad features
§ Prevention scenario
Exploratory DADM
§ Flag bad features
§ Detection scenario
(not DA) DM
§ Neither flagged nor hidden

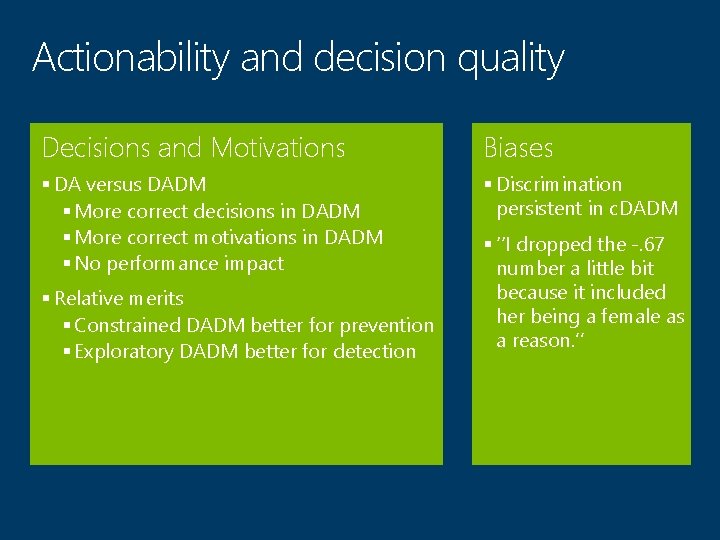

Actionability and decision quality
Decisions and Motivations; Biases
§ DA versus DADM
§ More correct decisions in DADM
§ More correct motivations in DADM
§ No performance impact
§ Discrimination persistent in cDADM
§ Relative merits
§ Constrained DADM better for prevention
§ Exploratory DADM better for detection
§ “I dropped the -.67 number a little bit because it included her being a female as a reason.”

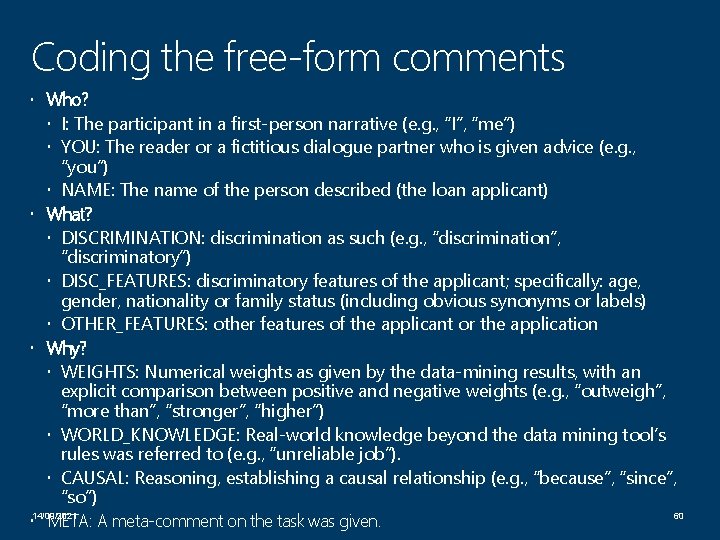

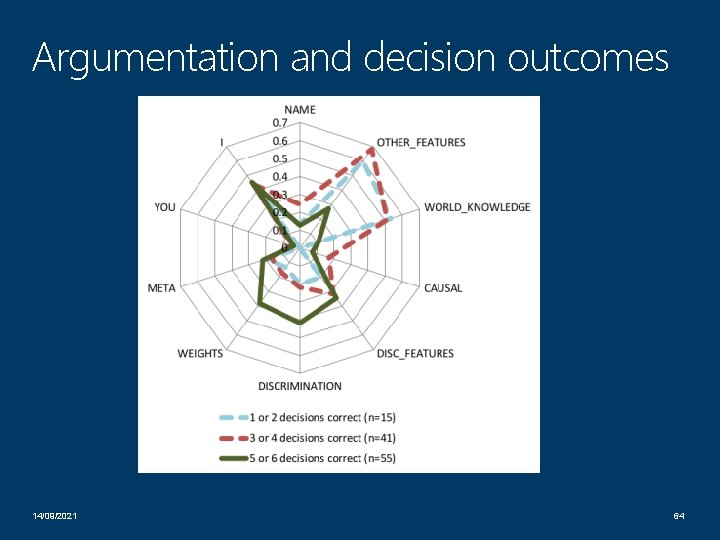

Coding the free-form comments
Who?
– I: The participant in a first-person narrative (e.g., “I”, “me”)
– YOU: The reader or a fictitious dialogue partner who is given advice (e.g., “you”)
– NAME: The name of the person described (the loan applicant)
What?
– DISCRIMINATION: discrimination as such (e.g., “discrimination”, “discriminatory”)
– DISC_FEATURES: discriminatory features of the applicant; specifically: age, gender, nationality or family status (including obvious synonyms or labels)
– OTHER_FEATURES: other features of the applicant or the application
Why?
– WEIGHTS: Numerical weights as given by the data-mining results, with an explicit comparison between positive and negative weights (e.g., “outweigh”, “more than”, “stronger”, “higher”)
– WORLD_KNOWLEDGE: Real-world knowledge beyond the data mining tool’s rules was referred to (e.g., “unreliable job”).
– CAUSAL: Reasoning, establishing a causal relationship (e.g., “because”, “since”, “so”)
– META: A meta-comment on the task was given.

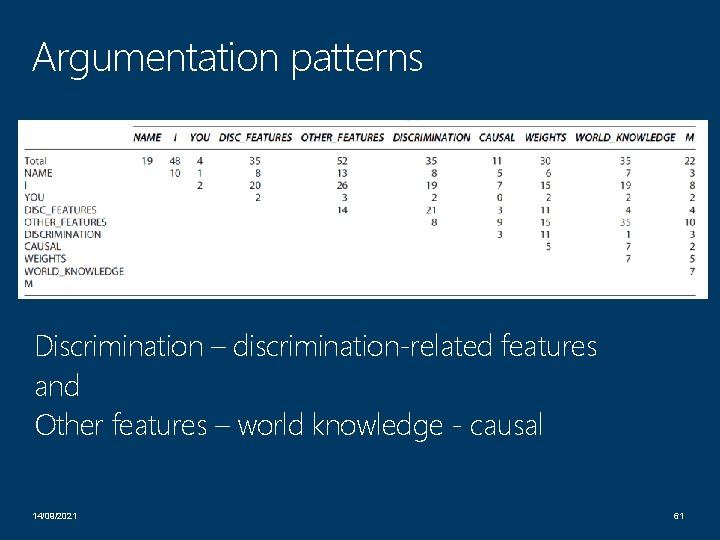

Argumentation patterns
Discrimination – discrimination-related features and Other features – world knowledge – causal

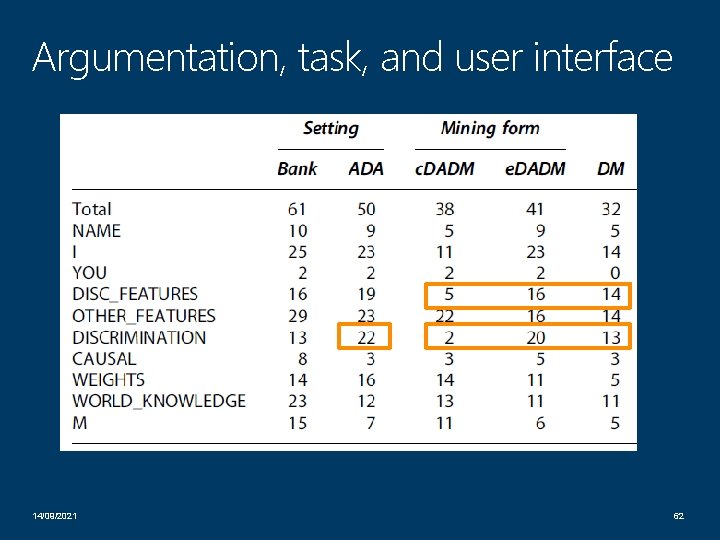

Argumentation, task, and user interface

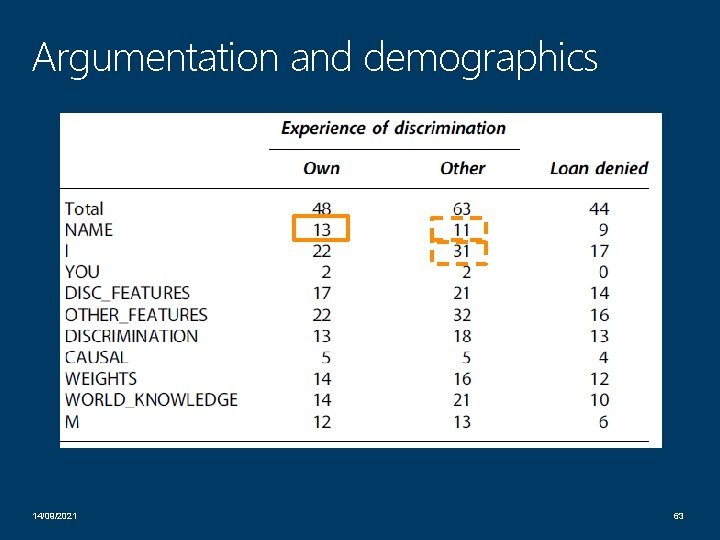

Argumentation and demographics

Argumentation and decision outcomes

Summary of findings

Conclusion: “Fixing the algorithm”?
“Sanitizing the algorithm”
• Is not sufficient to avoid discriminatory reasoning
• Cannot be considered independently of context.

Solution approach (our proposal)
• Consider the whole system
• Draw on value-based design
• Use tried-and-tested structures and procedures
– E.g. legal principles

Outlook: Discrimination and accountability

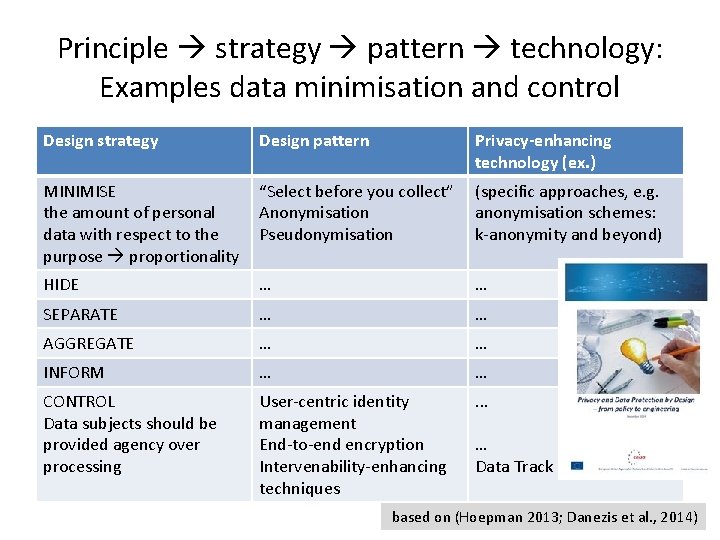

Principle, strategy, pattern, technology: Examples data minimisation and control
Columns: Design strategy | Design pattern | Privacy-enhancing technology (ex.)
• MINIMISE the amount of personal data with respect to the purpose; proportionality; “Select before you collect”; Anonymisation; Pseudonymisation (specific approaches, e.g. anonymisation schemes: k-anonymity and beyond)
• HIDE … …
• SEPARATE … …
• AGGREGATE … …
• INFORM … …
• CONTROL: Data subjects should be provided agency over processing; User-centric identity management; End-to-end encryption; Intervenability-enhancing techniques; ... …; Data Track
based on (Hoepman 2013; Danezis et al., 2014)

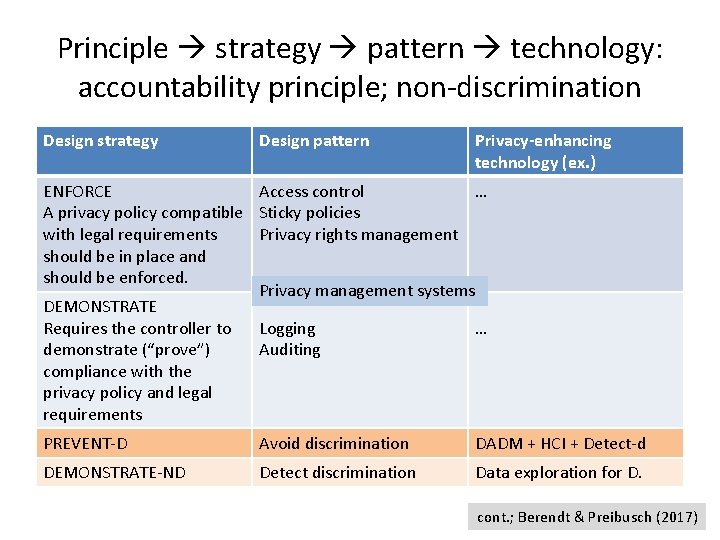

Principle, strategy, pattern, technology: accountability principle; non-discrimination
Columns: Design strategy | Design pattern | Privacy-enhancing technology (ex.)
• ENFORCE: A privacy policy compatible with legal requirements should be in place and should be enforced. Access control; …; Sticky policies; Privacy rights management; Privacy management systems
• DEMONSTRATE: Requires the controller to demonstrate (“prove”) compliance with the privacy policy and legal requirements. Logging; …; Auditing
• PREVENT-D: Avoid discrimination. DADM + HCI + Detect-d
• DEMONSTRATE-ND: Detect discrimination. Data exploration for D.
cont.; Berendt & Preibusch (2017)

Outlook: representation (2) – “collectivisation”

Next week

Image credits
p. 3: http://eyeingchicago.com/storage/northpo http://i.dailymail.co.uk/i/pix/2015/07/01/13/2 A 23 A 22200000578 -0 Google_has_issued_an_apology_after_computer_programmer_Jacky_Alc-a 28_1435752503903. jpgint. png? __SQUARESPACE_CACHEVERSION=1465952722226
p. 26: http://www.visionlearning.com/img/library/large_images/image_2555.png
pp. 34 f.: http://onlinelibrary.wiley.com/doi/10.1111/j.1740-9713.2016.00960.x/full http://www.sciencemag.org/news/2016/09/can-predictive-policing-prevent-crime-it-happens
p. 36: http://latanyasweeney.org/index.html
p. 37: https://en.wikipedia.org/wiki/File: An. American. Dilemma. jpg http://www.blackwestchester.com/wp-content/uploads/2017/03/13th-e1489199239580.png http://www.radio3.rai.it/dl/img/2017/05/1494496317916 LETTERA-A-UNA-PROFESSORESSA 640. jpg
p. 43: https://www.aktuelle-bauzinsen.info/images/desktop/schufa-score-bewertung.png
p. 62: http://www.rp-online.de/polopoly_fs/this-photo-provided-on-thursday-feb 1. 5424969. 1505916915!image/984164559. jpg_gen/derivatives/d 940 x 528/984164559. jpg

References
Berendt, B. & Preibusch, S. (2014). Better decision support through exploratory discrimination-aware data mining: foundations and empirical evidence. Artificial Intelligence and Law, 22(2), 175-209. https://people.cs.kuleuven.be/~bettina.berendt/Papers/berendt_preibusch_2014.pdf
Berendt, B. & Preibusch, S. (2017). Toward accountable discrimination-aware data mining: The importance of keeping the human in the loop – and under the looking-glass. Big Data, 5(2). DOI: 10.1089/big.2016.0055. https://people.cs.kuleuven.be/~bettina.berendt/Papers/berendt_preibusch_2017_last_author_version.pdf
Baer, S., Bittner, M., & Göttsche, A. L. (2011). Mehrdimensionale Diskriminierung - Begriffe, Theorien und juristische Analyse. Antidiskriminierungsstelle des Bundes. http://www.antidiskriminierungsstelle.de/SharedDocs/Downloads/DE/publikationen/Expertise_Mehrdimensionale_Diskriminierung_jur_Analyse.pdf?__blob=publicationFile
Berghahn, S., Egenberger, V., Klapp, M., Klose, A., Liebscher, D., Supik, L., & Tischbirek, A. (2016). Evaluation des Allgemeinen Gleichbehandlungsgesetzes [Evaluation of the German General Anti-discrimination Law], erstellt im Auftrag der Antidiskriminierungsstelle des Bundes. http://www.antidiskriminierungsstelle.de/SharedDocs/Downloads/DE/publikationen/AGG_Evaluation.pdf?__blob=publicationFile&v=14
Baroni, A. (2017). Segregation Aware Data Mining. PhD Thesis, Dipartimento di Informatica, Università di Pisa. (thesis supervisor: Salvatore Ruggieri)
Crenshaw, K. (1989). Demarginalizing the Intersection of Race and Sex: A Black Feminist Critique of Antidiscrimination Doctrine. In: The University of Chicago Legal Forum, pp. 139–167. http://chicagounbound.uchicago.edu/cgi/viewcontent.cgi?article=1052&context=uclf
Danezis, G., Domingo-Ferrer, J., Hansen, M., Hoepman, J.-H., Le Métayer, D., Tirtea, R., & Schiffner, S. (2014). Privacy and Data Protection by Design – from Policy to Engineering. ENISA Report. https://www.enisa.europa.eu/publications/privacy-and-data-protection-by-design
Dwork, C., Hardt, M., Pitassi, T., et al. (2012). Fairness through awareness. Proc. ITCS 2012, pp. 214–226.
Ensign, D., Friedler, S. A., Neville, S., Scheidegger, C., & Venkatasubramanian, S. (2017). Runaway feedback loops in predictive policing. To appear in Proc. FAT* 2018. https://arxiv.org/abs/1706.09847
European Court of Justice (2011). Case C-236/09, Association Belge des Consommateurs Test-Achats ASBL and Others v Conseil des ministres. http://curia.europa.eu/juris/liste.jsf?language=en&num=C-236/09
Fine, C. (2010). Delusions of gender. The real science behind sex differences. Icon Books, London.
Hoepman, J.-H. (2013/14). Privacy design strategies – (extended abstract). In ICT Systems Security and Privacy Protection - 29th IFIP TC 11 International Conference, SEC 2014, Marrakech, Morocco, June 2-4, 2014. Proceedings, pages 446–459, 2014. Cited from the 2013 preprint at https://arxiv.org/abs/1210.6621v2
Kamiran, F., Calders, T., & Pechenizkiy, M. (2010). Discrimination Aware Decision Tree Learning. ICDM 2010: 869-874. http://wwwis.win.tue.nl/~tcalders/pubs/TR10-13.pdf
Kamiran, F., Zliobaite, I., & Calders, T. (2013). Quantifying explainable discrimination and removing illegal discrimination in automated decision making. Knowl Inf Syst 35(3): 613–644. http://repository.tue.nl/737123
Luong, B. T., Ruggieri, S., & Turini, F. (2011). k-nn as an implementation of situation testing for discrimination discovery and prevention. In: KDD, pp. 502–510. ACM.
Naudts, L. (2015). Algorithms – Legal Framework. Presentation in the Privacy and Big Data course, KU Leuven.
Pedreschi, D., Ruggieri, S., & Turini, F. (2008). Discrimination-aware data mining. In: Proceedings of KDD’08, pp. 560–568. ACM. http://www.di.unipi.it/~ruggieri/Papers/kdd2008.pdf
Sweeney, L. (2013). Discrimination in Online Ad Delivery. Communications of the ACM, 56(5), 44-54. Cited from earlier version available at SSRN: http://ssrn.com/abstract=2208240 or http://dx.doi.org/10.2139/ssrn.2208240
Welzer, H. (2016). Die smarte Diktatur. Der Angriff auf unsere Freiheit. [The Smart Dictatorship. The Attack on our Freedom]. Frankfurt am Main: S. Fischer Verlag.
