BigDataBench: a Big Data Benchmark Suite

BigDataBench: a Big Data Benchmark Suite from Internet Services
HPCA 2014
Institute of Computing Technology
Lei Wang, Jianfeng Zhan, Chunjie Luo, Yuqing Zhu, Qiang Yang, Yongqiang He, Wanling Gao, Zhen Jia, Yingjie Shi, Shujie Zhang, Gang Lu, Kent Zhang, Xiaona Li, and Bizhu Qiu

Why Big Data Benchmarking?
Measuring big data systems and architectures quantitatively
HPCA 2014, Orlando, 2014.2.18

What is BigDataBench?
- An open source big data benchmarking project: http://prof.ict.ac.cn/BigDataBench/
  - 6 real-world data sets: generate (4V) big data
  - 19 workloads: OLTP, Cloud OLTP, OLAP, and offline analytics
  - Same workloads, different implementations

Executive summary
- Big Data benchmarks: do we know enough about big data benchmarking?
- Big Data workload characterization: what are the differences from traditional workloads?
- Exploring the best big data architectures: brawny-core, wimpy multi-core, or wimpy many-core?

Outline
- Benchmarking Methodology and Decision
- Big Data Workload Characterization
- Evaluating Hardware Systems with Big Data
- Conclusion

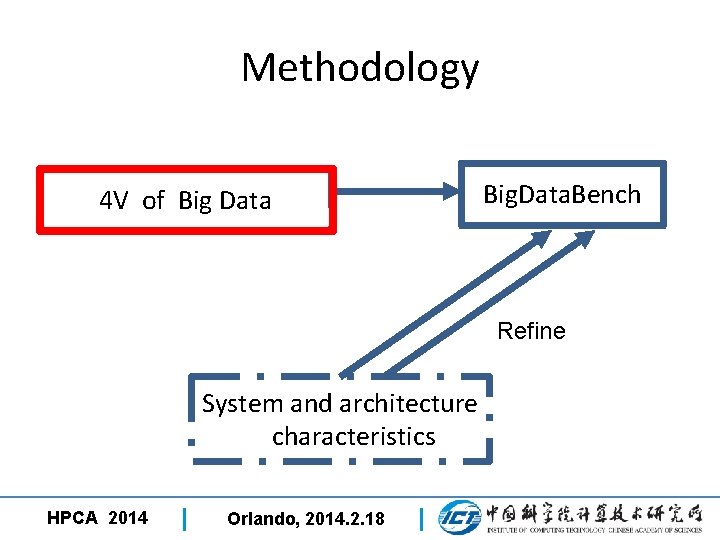

Methodology
[Diagram labels: 4V of Big Data; System and architecture characteristics; BigDataBench; Refine]

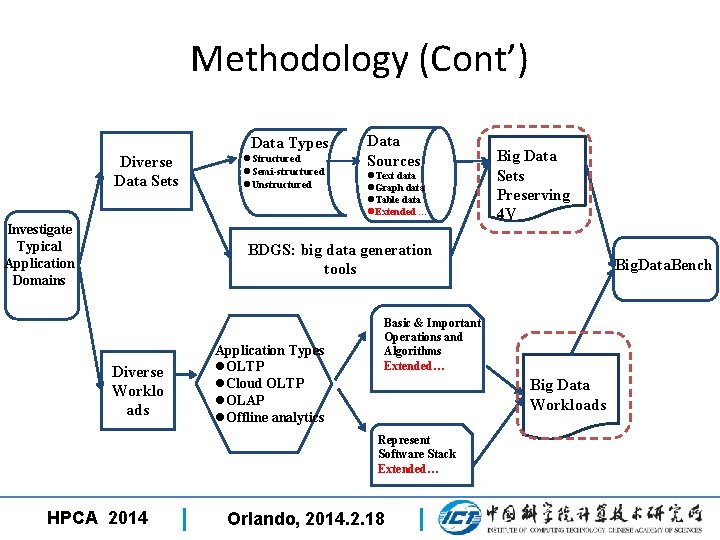

Methodology (Cont'd)
- Investigate typical application domains
- Diverse data sets
  - Data types: structured, semi-structured, unstructured
  - Data sources: text data, graph data, table data, extended...
  - Big data sets preserving 4V (BDGS: big data generation tools)
- Diverse workloads
  - Application types: OLTP, Cloud OLTP, OLAP, offline analytics
  - Basic & important operations and algorithms, extended...
  - Represent software stack, extended...
- Big data workloads: BigDataBench

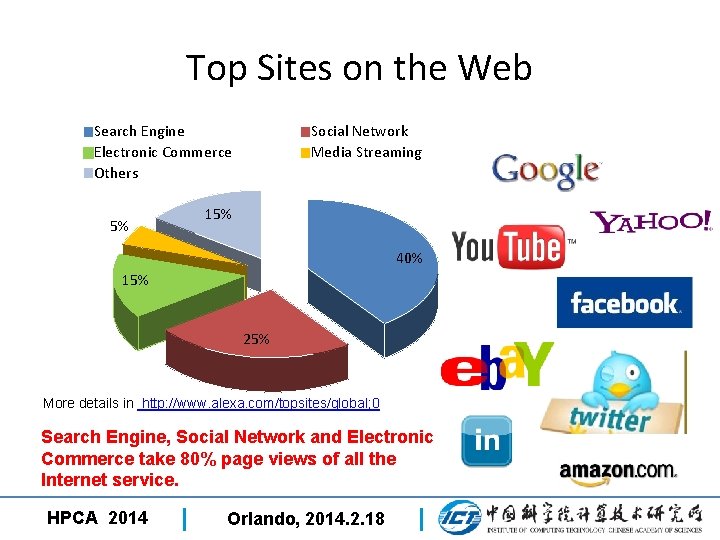

Top Sites on the Web
[Pie chart of page views by category: Search Engine, Social Network, Electronic Commerce, Media Streaming, Others (5%). Remaining slice labels: 40%, 25%, 15%, 15%.]
Search Engine, Social Network and Electronic Commerce take 80% of the page views of all Internet services.
More details at http://www.alexa.com/topsites/global

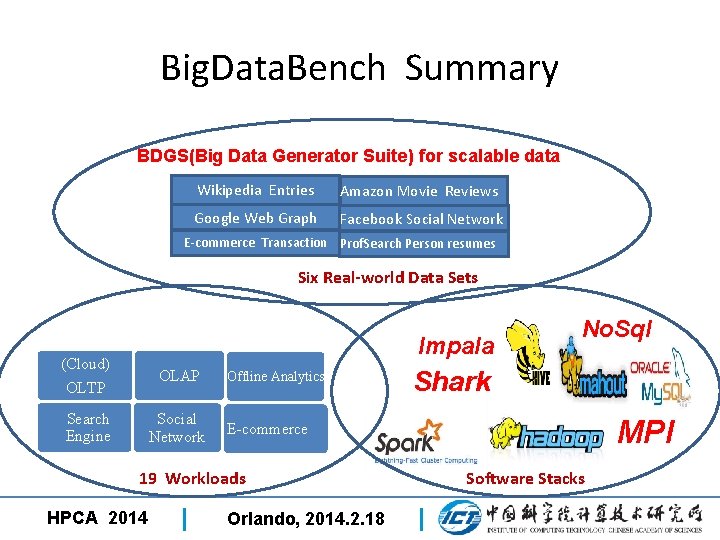

BigDataBench Summary
- BDGS (Big Data Generator Suite) for scalable data
- Six real-world data sets: Wikipedia Entries, Amazon Movie Reviews, Google Web Graph, Facebook Social Network, E-commerce Transaction, ProfSearch Person Resumes
- 19 workloads: (Cloud) OLTP, OLAP, Offline Analytics; application domains: Search Engine, Social Network, E-commerce
- Software stacks (labels shown): Impala, Shark, MPI, NoSQL

Outline
- Benchmarking Methodology and Decision
- Big Data Workload Characterization
- Evaluating Hardware Systems with Big Data
- Conclusion

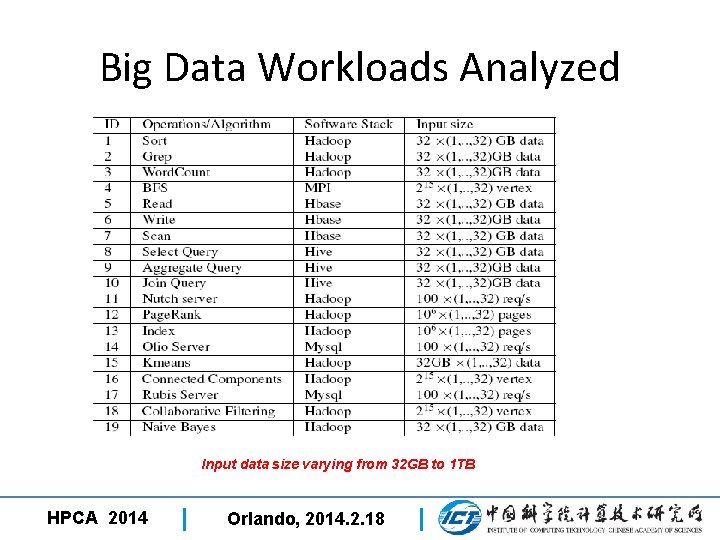

Big Data Workloads Analyzed
Input data size varies from 32 GB to 1 TB

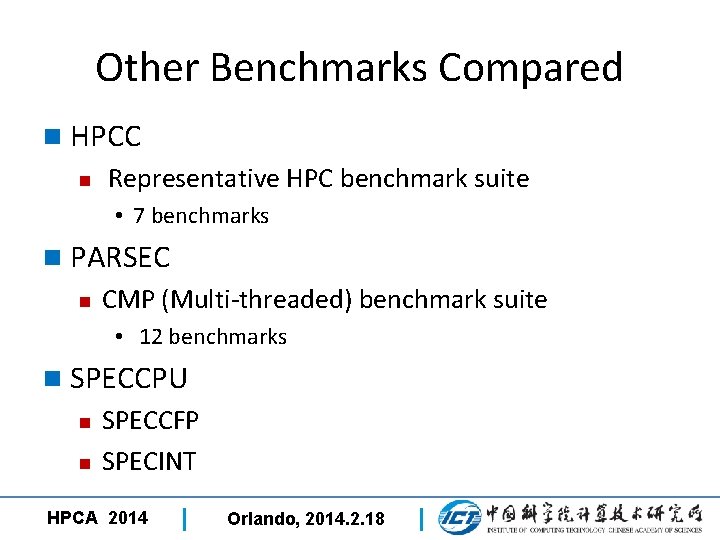

Other Benchmarks Compared
- HPCC: representative HPC benchmark suite
  - 7 benchmarks
- PARSEC: CMP (multi-threaded) benchmark suite
  - 12 benchmarks
- SPEC CPU
  - SPECFP
  - SPECINT

Metrics
- User-perceivable metrics
  - OLTP services: requests per second (RPS)
  - Cloud OLTP: operations per second (OPS)
  - OLAP and offline analytics: data processed per second (DPS)
- Micro-architecture characteristics
  - Hardware performance counters
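The three user-perceivable metrics share one shape: a count of completed work divided by elapsed time. A minimal sketch, assuming the counts and timings come from the benchmark driver; the function name `throughput` and all numbers below are hypothetical and not part of BigDataBench.

```python
def throughput(units_completed: float, elapsed_seconds: float) -> float:
    """Return units of work completed per second."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return units_completed / elapsed_seconds


# Hypothetical measurements, for illustration only.
rps = throughput(120_000, 60.0)     # OLTP service: requests per second
ops = throughput(45_000, 30.0)      # Cloud OLTP: operations per second
dps = throughput(32 * 1024, 512.0)  # analytics: MB of input processed per second
```

Only the unit of work changes between RPS, OPS, and DPS, which is why a single helper is enough here.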

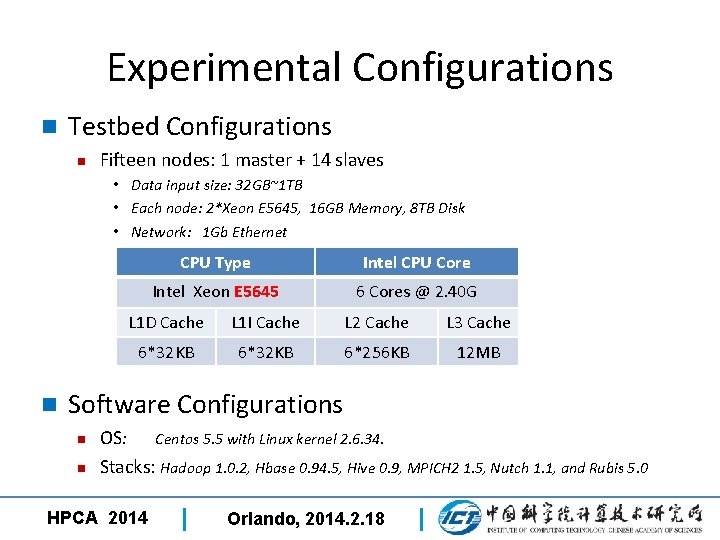

Experimental Configurations
- Testbed configurations
  - Fifteen nodes: 1 master + 14 slaves
  - Data input size: 32 GB~1 TB
  - Each node: 2*Xeon E5645, 16 GB memory, 8 TB disk
  - Network: 1 Gb Ethernet
- CPU: Intel Xeon E5645
  - Cores: 6 cores @ 2.40 GHz
  - L1 D cache / L1 I cache: 6*32 KB
  - L2 cache: 6*256 KB
  - L3 cache: 12 MB
- Software configurations
  - OS: CentOS 5.5 with Linux kernel 2.6.34
  - Stacks: Hadoop 1.0.2, HBase 0.94.5, Hive 0.9, MPICH2 1.5, Nutch 1.1, and RUBiS 5.0

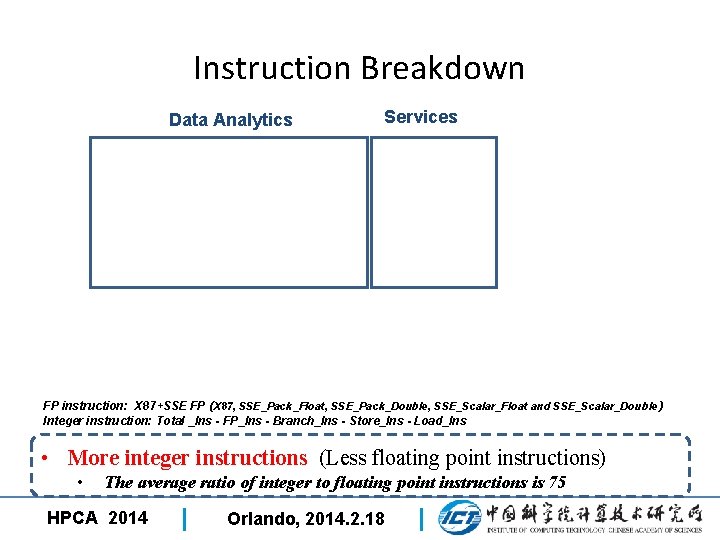

Instruction Breakdown
[Figure: instruction breakdown for the data analytics and service workloads]
- FP instructions: X87 + SSE FP (X87, SSE_Pack_Float, SSE_Pack_Double, SSE_Scalar_Float and SSE_Scalar_Double)
- Integer instructions: Total_Ins - FP_Ins - Branch_Ins - Store_Ins - Load_Ins
- More integer instructions (fewer floating point instructions)
- The average ratio of integer to floating point instructions is 75
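The breakdown formulas above can be sketched as follows. The counter names mirror the slide, but the dictionary layout and every count are assumptions made for illustration; the numbers were picked so that the ratio comes out at 75 and are not measured data.

```python
# Hypothetical raw counts read from hardware performance counters.
counts = {
    "Total_Ins": 1_000_000,
    "Branch_Ins": 180_000,
    "Store_Ins": 150_000,
    "Load_Ins": 366_000,
    "X87": 1_000,
    "SSE_Pack_Float": 500,
    "SSE_Pack_Double": 500,
    "SSE_Scalar_Float": 1_000,
    "SSE_Scalar_Double": 1_000,
}

FP_EVENTS = ("X87", "SSE_Pack_Float", "SSE_Pack_Double",
             "SSE_Scalar_Float", "SSE_Scalar_Double")


def fp_instructions(c: dict) -> int:
    """FP instructions = X87 + all SSE floating point events."""
    return sum(c[name] for name in FP_EVENTS)


def integer_instructions(c: dict) -> int:
    """Integer instructions = total minus FP, branch, store, and load."""
    return (c["Total_Ins"] - fp_instructions(c)
            - c["Branch_Ins"] - c["Store_Ins"] - c["Load_Ins"])


fp = fp_instructions(counts)
integer = integer_instructions(counts)
int_to_fp_ratio = integer / fp
```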

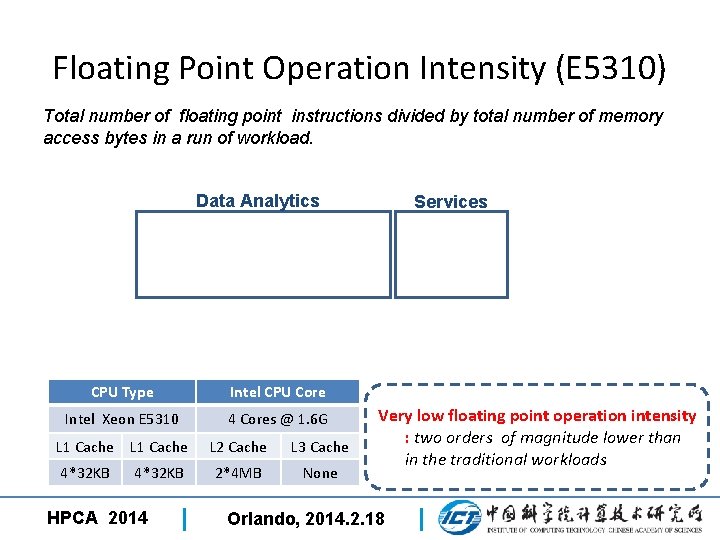

Floating Point Operation Intensity (E5310)
Definition: total number of floating point instructions divided by total number of memory access bytes in a run of a workload.
CPU: Intel Xeon E5310
- Cores: 4 cores @ 1.6 GHz
- L1 cache: 4*32 KB
- L2 cache: 2*4 MB
- L3 cache: none
[Figure: floating point operation intensity of the data analytics and service workloads]
Very low floating point operation intensity: two orders of magnitude lower than in the traditional workloads

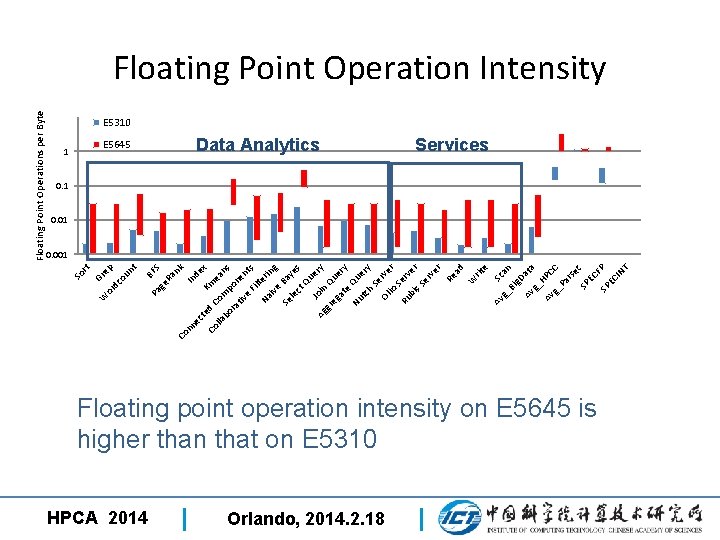

Floating Point Operation Intensity
[Figure: floating point operations per byte on E5310 and E5645 for the data analytics and service workloads (Sort, Grep, Wordcount, BFS, PageRank, Index, Kmeans, Connected Components, Collaborative Filtering, Naive Bayes, Select Query, Join Query, Aggregate Query, Nutch Server, Olio Server, Rubis Server, Read, Write, Scan), with Avg_BigData, Avg_HPCC, Avg_Parsec, SPECFP, and SPECINT for comparison]
Floating point operation intensity on E5645 is higher than that on E5310

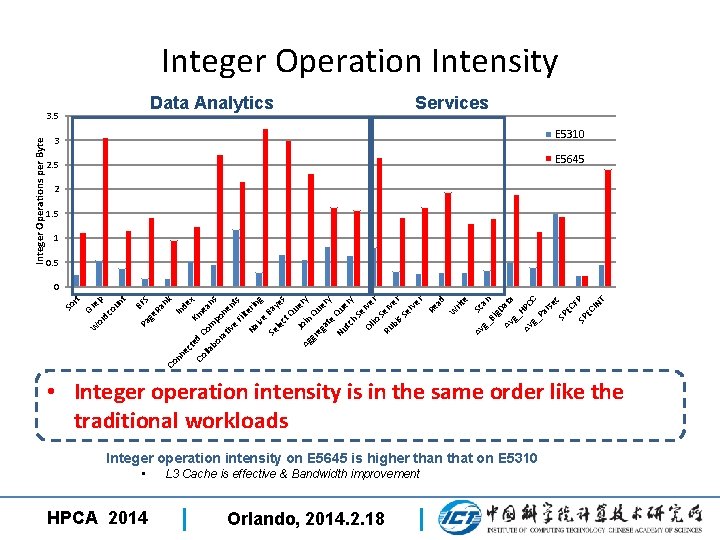

Integer Operation Intensity
[Figure: integer operations per byte on E5310 and E5645 for the data analytics and service workloads, with Avg_BigData, Avg_HPCC, Avg_Parsec, SPECFP, and SPECINT for comparison]
- Integer operation intensity is of the same order as in the traditional workloads
- Integer operation intensity on E5645 is higher than that on E5310: the L3 cache is effective, and bandwidth has improved

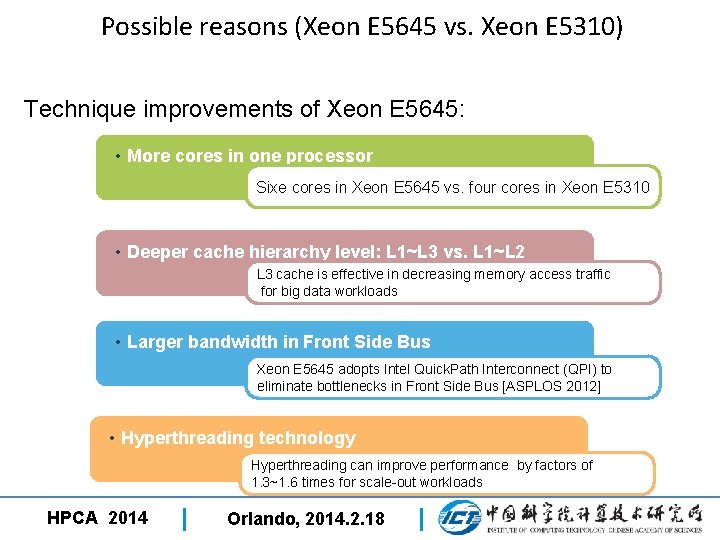

Possible reasons (Xeon E5645 vs. Xeon E5310)
Technical improvements of Xeon E5645:
- More cores in one processor: six cores in Xeon E5645 vs. four cores in Xeon E5310
- Deeper cache hierarchy: L1~L3 vs. L1~L2. The L3 cache is effective in decreasing memory access traffic for big data workloads
- Larger interconnect bandwidth: Xeon E5645 adopts Intel QuickPath Interconnect (QPI) to eliminate bottlenecks in the Front Side Bus [ASPLOS 2012]
- Hyperthreading technology: hyperthreading can improve performance by factors of 1.3~1.6 for scale-out workloads

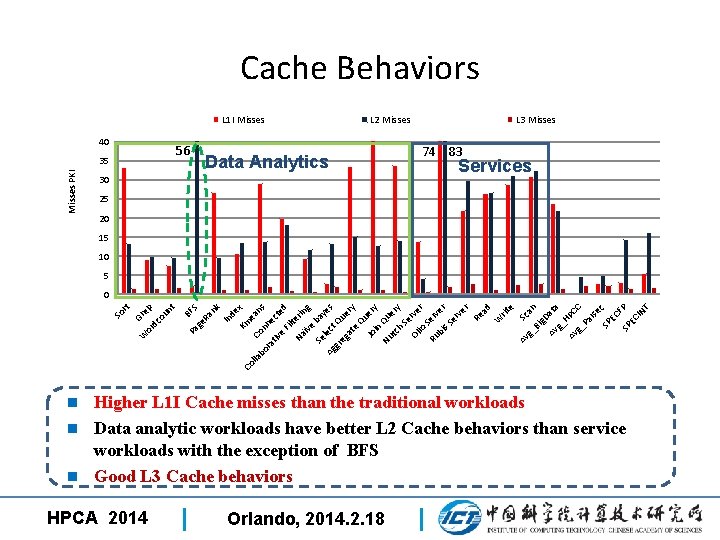

Cache Behaviors
[Figure: L1I, L2, and L3 cache misses per kilo-instruction (MPKI) for the data analytics and service workloads, with Avg_BigData, Avg_HPCC, Avg_Parsec, SPECFP, and SPECINT for comparison]
- Higher L1I cache misses than the traditional workloads
- Data analytics workloads have better L2 cache behaviors than service workloads, with the exception of BFS
- Good L3 cache behaviors
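The cache results are reported as misses per kilo-instruction (MPKI). A minimal sketch of that normalization, assuming the miss and retired-instruction counts come from hardware performance counters; the function name and the counts below are hypothetical.

```python
def mpki(misses: int, instructions: int) -> float:
    """Misses per kilo-instruction: misses per 1000 retired instructions."""
    if instructions <= 0:
        raise ValueError("instructions must be positive")
    return misses * 1000 / instructions


# Hypothetical counter readings for one run of one workload.
l1i_mpki = mpki(misses=2_300_000, instructions=100_000_000)
```

Normalizing by instruction count makes workloads with very different run lengths comparable on one axis.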

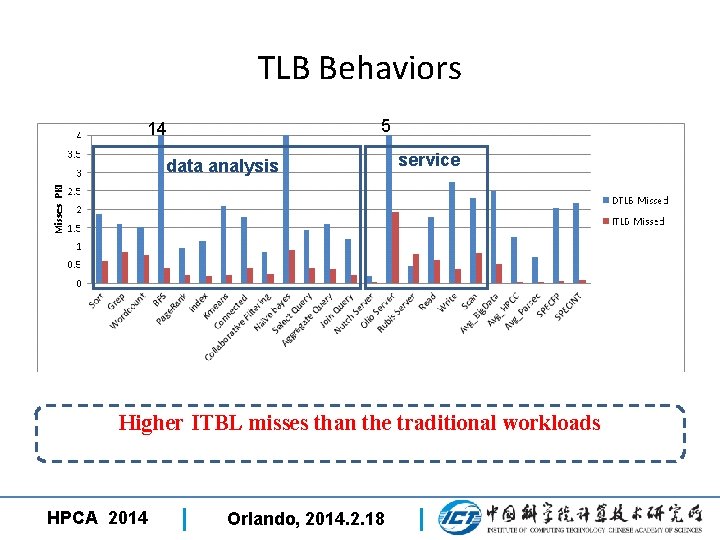

TLB Behaviors
[Figure: TLB misses for the data analysis and service workloads]
Higher ITLB misses than the traditional workloads

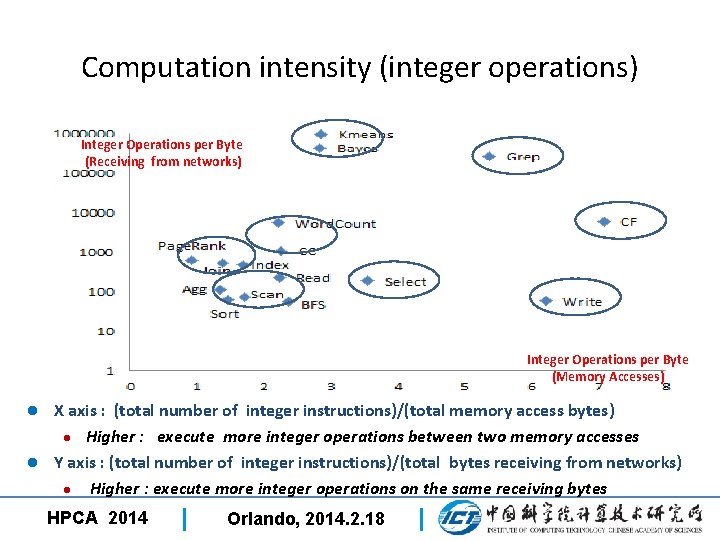

Computation intensity (integer operations)
[Figure: integer operations per byte received from networks (Y axis) against integer operations per byte of memory accesses (X axis)]
- X axis: (total number of integer instructions) / (total memory access bytes)
  - Higher: more integer operations are executed between two memory accesses
- Y axis: (total number of integer instructions) / (total bytes received from networks)
  - Higher: more integer operations are executed on the same number of received bytes
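Both axes are the same ratio with a different denominator, and the floating point operation intensity used earlier has the same form with floating point instructions in the numerator. A minimal sketch with hypothetical counts; `operation_intensity` is an illustrative name, not a BigDataBench function.

```python
def operation_intensity(instructions: int, bytes_moved: int) -> float:
    """Instructions executed per byte moved (memory or network)."""
    if bytes_moved <= 0:
        raise ValueError("bytes_moved must be positive")
    return instructions / bytes_moved


# Hypothetical totals for one run of one workload.
integer_instructions = 6_000_000_000
memory_access_bytes = 4_000_000_000
network_received_bytes = 500_000_000

x_value = operation_intensity(integer_instructions, memory_access_bytes)
y_value = operation_intensity(integer_instructions, network_received_bytes)
```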

Big Data Workloads Characterization Summary
- Data movement dominated computing
  - Low computation intensity
- Cache behaviors (Xeon E5645)
  - Very high L1I MPKI
  - L3 cache is effective
- Diverse workload behaviors
  - Computation/communication vs. computation/memory accesses

Outline
- Benchmarking Methodology and Decision
- Big Data Workload Characterization
- Evaluating Hardware Systems with Big Data
  - Y. Shi, S. A. McKee et al. Performance and Energy Efficiency Implications from Evaluating Four Big Data Systems, submitted to IEEE Micro.
- Conclusion

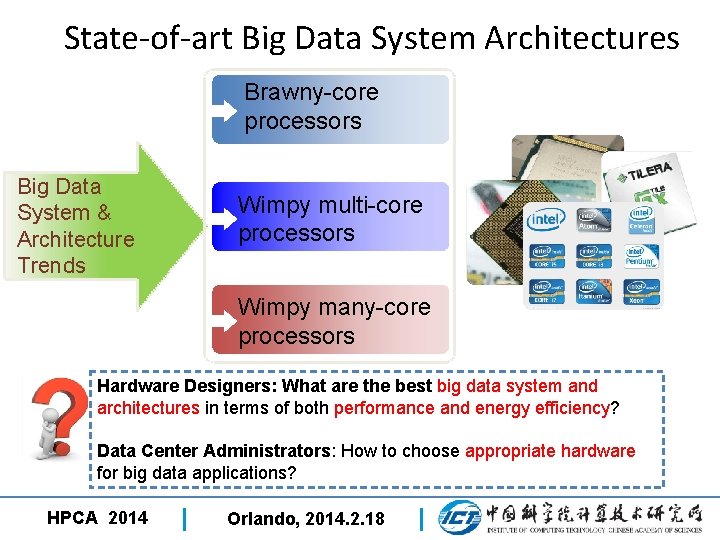

State-of-the-art Big Data System Architectures
Big data system and architecture trends:
- Brawny-core processors
- Wimpy multi-core processors
- Wimpy many-core processors
Hardware designers: what are the best big data systems and architectures in terms of both performance and energy efficiency?
Data center administrators: how to choose appropriate hardware for big data applications?

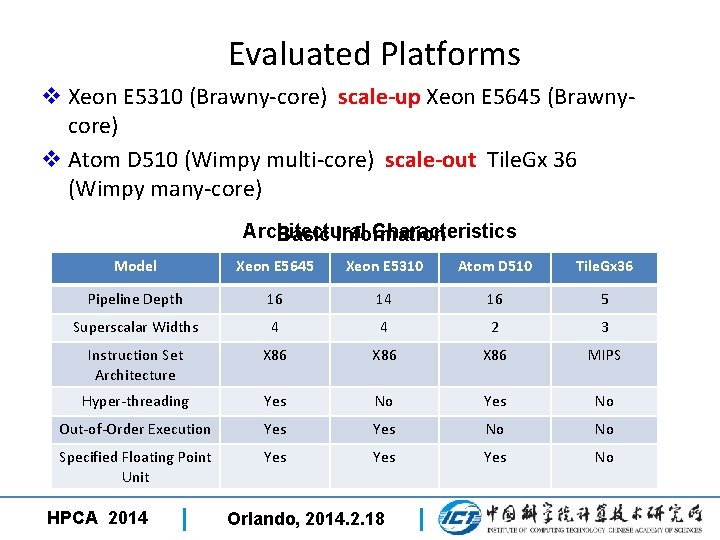

Evaluated Platforms
- Scale-up: Xeon E5310 (brawny-core) to Xeon E5645 (brawny-core)
- Scale-out: Atom D510 (wimpy multi-core) to TileGx36 (wimpy many-core)

| | Xeon E5645 | Xeon E5310 | Atom D510 | TileGx36 |
|---|---|---|---|---|
| **Basic information** | | | | |
| No. of Processors | 2 | 1 | 1 | 1 |
| Frequency | 2.4 GHz | 1.6 GHz | 1.66 GHz | 1.2 GHz |
| L1 Cache (I/D) | 32 KB/32 KB | 32 KB/32 KB | 32 KB/24 KB | 32 KB/32 KB |
| L2 Cache | 256 KB*6 | 4096 KB*2 | 512 KB*2 | 256 KB*36 |
| L3 Cache | 12 MB | None | None | None |
| TDP | 80 W | 80 W | 13 W | 45 W |
| **Architectural characteristics** | | | | |
| Pipeline Depth | 16 | 14 | 16 | 5 |
| Instruction Set Architecture | X86 | X86 | X86 | MIPS |
| Hyper-threading | Yes | No | Yes | No |
| Out-of-Order Execution | Yes | Yes | No | No |
| Specified Floating Point Unit | Yes | Yes | Yes | No |

No. of Cores/CPU and Superscalar Widths (per-platform columns not separable in the source): 6, 4, 4, 2, 36, 3

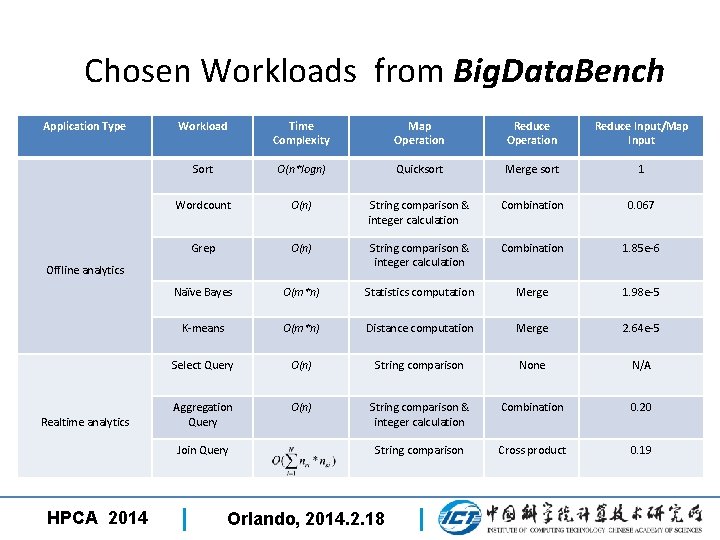

Chosen Workloads from BigDataBench

| Application Type | Workload | Time Complexity | Map Operation | Reduce Operation | Reduce Input/Map Input |
|---|---|---|---|---|---|
| Offline analytics | Sort | O(n*logn) | Quicksort | Merge sort | 1 |
| Offline analytics | Wordcount | O(n) | String comparison & integer calculation | Combination | 0.067 |
| Offline analytics | Grep | O(n) | String comparison & integer calculation | Combination | 1.85e-6 |
| Offline analytics | Naïve Bayes | O(m*n) | Statistics computation | Merge | 1.98e-5 |
| Offline analytics | K-means | O(m*n) | Distance computation | Merge | 2.64e-5 |
| Realtime analytics | Select Query | O(n) | String comparison | None | N/A |
| Realtime analytics | Aggregation Query | O(n) | String comparison & integer calculation | Combination | 0.20 |
| Realtime analytics | Join Query | | String comparison | Cross product | 0.19 |

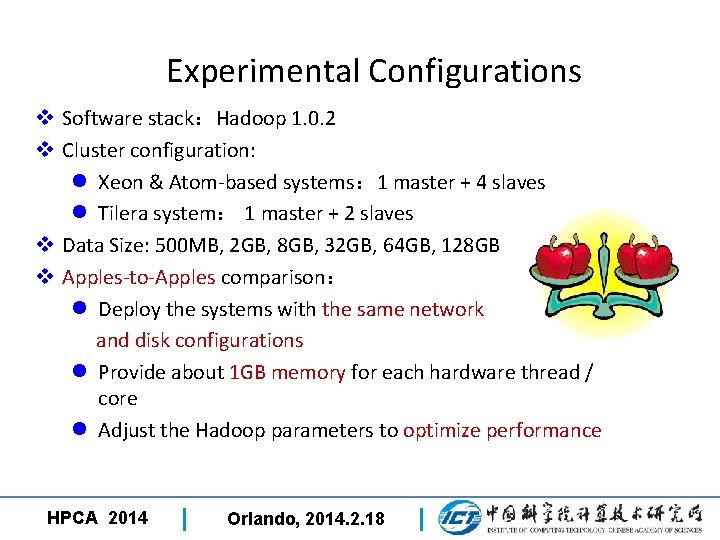

Experimental Configurations
- Software stack: Hadoop 1.0.2
- Cluster configuration:
  - Xeon & Atom-based systems: 1 master + 4 slaves
  - Tilera system: 1 master + 2 slaves
- Data size: 500 MB, 2 GB, 8 GB, 32 GB, 64 GB, 128 GB
- Apples-to-apples comparison:
  - Deploy the systems with the same network and disk configurations
  - Provide about 1 GB memory for each hardware thread / core
  - Adjust the Hadoop parameters to optimize performance

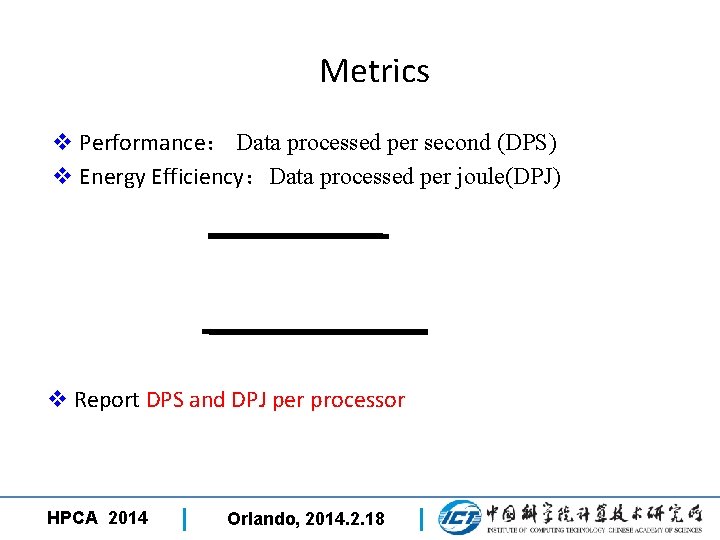

Metrics
- Performance: data processed per second (DPS)
- Energy efficiency: data processed per joule (DPJ)
- Report DPS and DPJ per processor
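A minimal sketch of how DPS and DPJ could be computed from one run. The slides do not say how energy was measured or how the per-processor figure was derived, so two assumptions are made here: energy is average power multiplied by run time, and per-processor DPS divides the total by the processor count. All names and numbers are hypothetical.

```python
def data_processed_per_second(data_bytes: float, elapsed_seconds: float,
                              processors: int = 1) -> float:
    """DPS, divided by processor count (assumed normalization)."""
    return data_bytes / elapsed_seconds / processors


def energy_joules(average_power_watts: float, elapsed_seconds: float) -> float:
    """Energy as average power times run time (assumed measurement model)."""
    return average_power_watts * elapsed_seconds


def data_processed_per_joule(data_bytes: float, joules: float) -> float:
    """DPJ: bytes of input processed per joule consumed."""
    return data_bytes / joules


# Hypothetical run: 8 GB of input, 400 s, 4 processors, 200 W average power.
dps = data_processed_per_second(8_000_000_000, 400.0, processors=4)
dpj = data_processed_per_joule(8_000_000_000, energy_joules(200.0, 400.0))
```

DPJ is a ratio of two totals, so it is not divided by the processor count in this sketch; splitting both data and energy evenly across processors would leave it unchanged.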

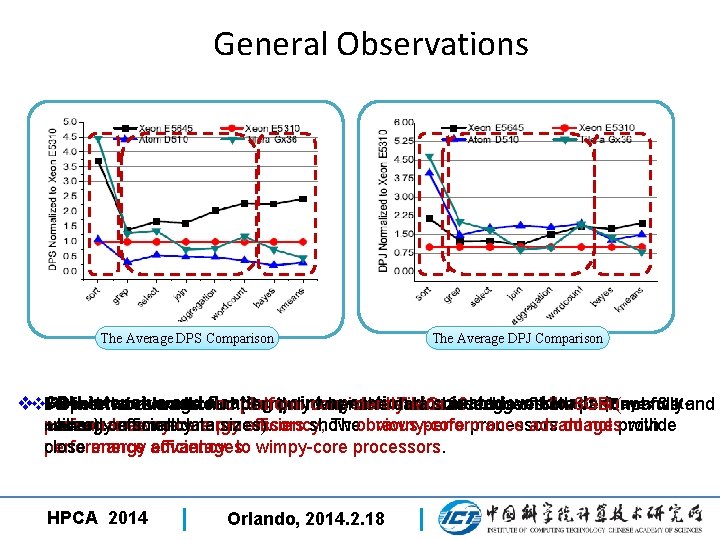

General Observations
[Figures: the average DPS comparison; the average DPJ comparison]
- I/O intensive workload (Sort): the many-core TileGx36 achieves the best performance and energy efficiency. The brawny-core processors do not provide performance advantages.
- CPU-intensive and floating point operation dominated workloads (Bayes & K-means): brawny-core processors show obvious performance advantages, with energy efficiency close to that of the wimpy-core processors.
- Other workloads: no platform consistently wins in terms of both performance and energy efficiency.
- The average is reported only for data sizes bigger than 8 GB (the platforms are not fully utilized on small data sizes).

Improvements from Scaling-out the Wimpy Core (TileGx36 vs. Atom D510)
- The core of TileGx36 is wimpier than that of Atom D510
  - Adopts a MIPS-derived VLIW instruction set
  - Does not support hyperthreading
  - Fewer pipeline stages
  - Does not have dedicated floating point units
- TileGx36 integrates more cores on the NoC (Network on Chip): 36 cores in TileGx36 vs. 4 cores in Atom D510

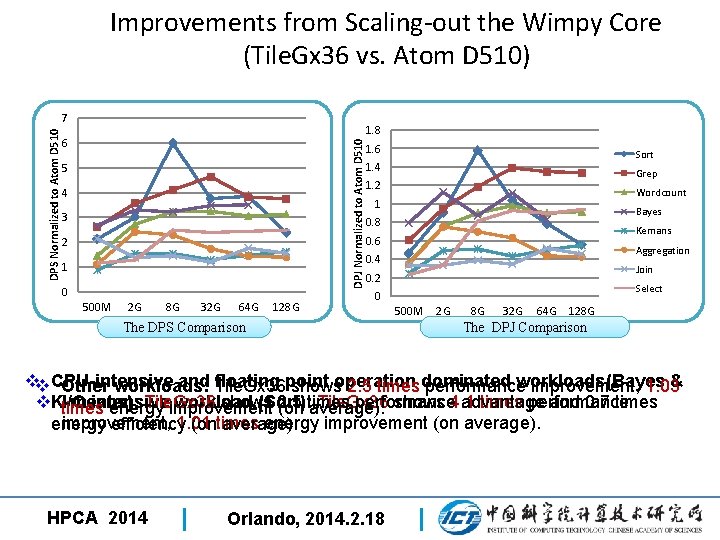

Improvements from Scaling-out the Wimpy Core (TileGx36 vs. Atom D510)
[Figures: the DPS comparison (DPS normalized to Atom D510) and the DPJ comparison (DPJ normalized to Atom D510) at data sizes of 500 MB, 2 GB, 8 GB, 32 GB, 64 GB, and 128 GB for Sort, Grep, Wordcount, Bayes, Kmeans, Aggregation, Join, and Select]
- I/O intensive workload (Sort): TileGx36 shows 4.1 times performance improvement, 1.01 times energy improvement (on average).
- CPU-intensive and floating point operation dominated workloads (Bayes & K-means): TileGx36 shows 2.5 times performance advantage and 0.7 times energy efficiency (on average).
- Other workloads: TileGx36 shows 2.5 times performance improvement, 1.03 times energy improvement (on average).
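The charts report each platform's DPS and DPJ normalized to a baseline platform. A minimal sketch of that normalization; the function name and the raw values are hypothetical and are not taken from the measurements.

```python
def normalize_to_baseline(results: dict, baseline: str) -> dict:
    """Divide every platform's value by the baseline platform's value."""
    base = results[baseline]
    if base == 0:
        raise ValueError("baseline value must be non-zero")
    return {platform: value / base for platform, value in results.items()}


# Hypothetical raw DPS values (MB/s) for one workload and one data size.
raw_dps = {"Atom D510": 20.0, "TileGx36": 50.0}
normalized_dps = normalize_to_baseline(raw_dps, "Atom D510")
```

A normalized value above 1 means the platform outperforms the baseline on that metric; the baseline itself is always 1.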

Improvements from Scaling-out the Wimpy Core (TileGx36 vs. Atom D510)
- Scaling out the wimpy core can bring a performance advantage by improving execution parallelism.
- Simplifying the wimpy cores and integrating more cores on the NoC is an option for Big Data workloads.

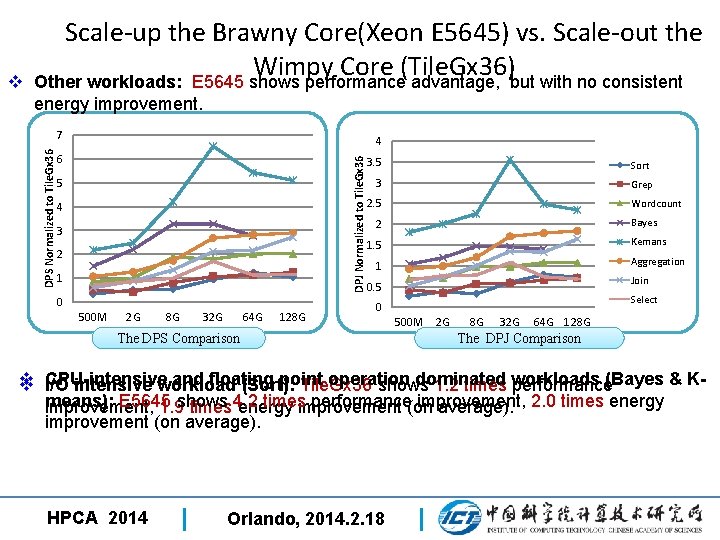

Scale-up the Brawny Core (Xeon E5645) vs. Scale-out the Wimpy Core (TileGx36)
[Figures: the DPS comparison (DPS normalized to TileGx36) and the DPJ comparison (DPJ normalized to TileGx36) at data sizes of 500 MB, 2 GB, 8 GB, 32 GB, 64 GB, and 128 GB for Sort, Grep, Wordcount, Bayes, Kmeans, Aggregation, Join, and Select]
- I/O intensive workload (Sort): TileGx36 shows 1.2 times performance improvement, 1.9 times energy improvement (on average).
- CPU-intensive and floating point operation dominated workloads (Bayes & K-means): E5645 shows 4.2 times performance improvement, 2.0 times energy improvement (on average).
- Other workloads: E5645 shows a performance advantage, but with no consistent energy improvement.

Hardware Evaluation Summary
- No one-size-fits-all solution
  - None of the microprocessors consistently wins in terms of both performance and energy efficiency for all of our Big Data workloads.
- One-size-fits-a-bunch solution
  - There are different classes of Big Data workloads, and each class of workload realizes better performance and energy efficiency on different architectures.

Outline
- Benchmarking Methodology and Decision
- Big Data Workload Characterization
- Evaluating Hardware Systems with Big Data
- Conclusion

Conclusion
- An open source big data benchmark suite
  - Data-centric benchmarking methodology
  - http://prof.ict.ac.cn/BigDataBench
- Big Data workload characterization
  - Data movement dominated computing
  - Diverse behaviors: benchmarks must include diversity of data and workloads
- Eschew one-size-fits-all solutions
  - Tailor system designs to specific workload requirements.

Thanks

- Slides: 38