Beyond map/reduce functions: partitioner, combiner and parameter configuration

Gang Luo, Sept. 9, 2010

Partitioner
• Determines which reducer/partition a record should go to
• Given the key, value and number of partitions, returns an integer
  – Partition: (K2, V2, #Partitions) → integer
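You can try out the contract above without a cluster. The sketch below is plain Java, not Hadoop code. `PartitionFunction` is a hypothetical stand-in for the partitioner interface. `route` is a hypothetical helper that plays the framework's part: it places each record in the partition whose index the function returns.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a partitioner: (K2, V2, #partitions) -> integer.
interface PartitionFunction<K, V> {
    int getPartition(K key, V value, int numPartitions);
}

class PartitionRouting {
    // Sends every record to the partition chosen by the function,
    // imitating what the framework does before the reduce phase.
    static <K, V> List<List<Map.Entry<K, V>>> route(
            List<Map.Entry<K, V>> records, PartitionFunction<K, V> partitioner, int numPartitions) {
        List<List<Map.Entry<K, V>>> partitions = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
        for (Map.Entry<K, V> record : records) {
            int p = partitioner.getPartition(record.getKey(), record.getValue(), numPartitions);
            if (p < 0 || p >= numPartitions) {
                throw new IllegalArgumentException("partition out of range: " + p);
            }
            partitions.get(p).add(record);
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> records = List.of(
                Map.entry("apple", 1), Map.entry("banana", 1), Map.entry("apple", 1));
        // A deliberately simple rule for the demo: route by key length.
        List<List<Map.Entry<String, Integer>>> parts =
                route(records, (k, v, n) -> k.length() % n, 2);
        System.out.println(parts);  // prints [[banana=1], [apple=1, apple=1]]
    }
}
```

The rule in `main` looks only at the key, so both `apple` records end up in the same partition. This is what lets a single reducer see every value for a given key.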

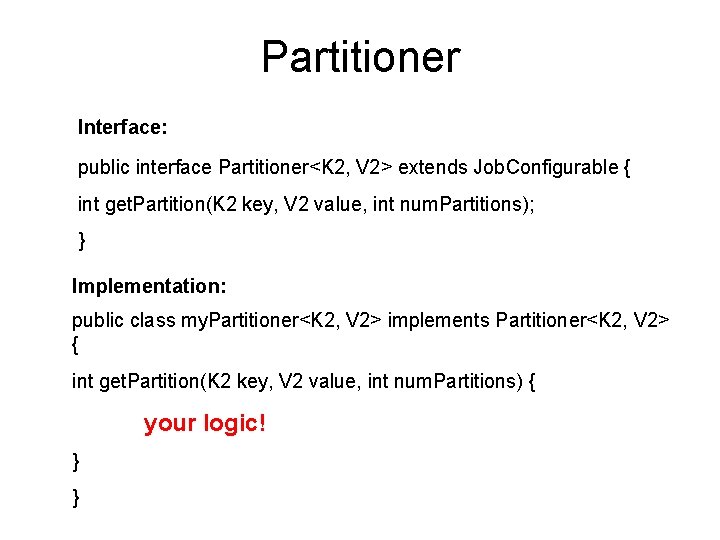

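Partitioner

Interface (old org.apache.hadoop.mapred API):

    public interface Partitioner<K2, V2> extends JobConfigurable {
        int getPartition(K2 key, V2 value, int numPartitions);
    }

Implementation:

    import org.apache.hadoop.mapred.JobConf;
    import org.apache.hadoop.mapred.Partitioner;

    public class MyPartitioner<K2, V2> implements Partitioner<K2, V2> {
        public void configure(JobConf job) { }  // required by JobConfigurable

        public int getPartition(K2 key, V2 value, int numPartitions) {
            // your logic! The result must be in [0, numPartitions).
            return 0;  // placeholder
        }
    }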
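Here is one example of what "your logic" could be: a range-style rule in plain Java, so it runs on its own. `FirstLetterPartitioner` is a hypothetical name. In a real job the body would sit inside `getPartition` of a class implementing `Partitioner`. The rule assigns keys to contiguous ranges by their first character, so a key with a smaller first character never lands in a higher-numbered partition.

```java
// Hypothetical custom partition logic with the same parameter shape as
// getPartition: keys starting with 'a'..'z' are spread over contiguous
// ranges; anything before 'a' goes to the first partition, anything after
// 'z' to the last, so the mapping never decreases with the first character.
class FirstLetterPartitioner {
    static int getPartition(String key, Object value, int numPartitions) {
        if (key.isEmpty() || key.charAt(0) < 'a') {
            return 0;                          // empty keys, digits, upper case, ...
        }
        char c = key.charAt(0);
        if (c > 'z') {
            return numPartitions - 1;
        }
        return (c - 'a') * numPartitions / 26;  // always in [0, numPartitions)
    }

    public static void main(String[] args) {
        for (String key : new String[] {"apple", "mango", "zebra", "42"}) {
            // apple -> 0, mango -> 0, zebra -> 1, 42 -> 0
            System.out.println(key + " -> " + getPartition(key, null, 2));
        }
    }
}
```

If keys are not spread evenly over the alphabet, a rule like this can leave some partitions much larger than others.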

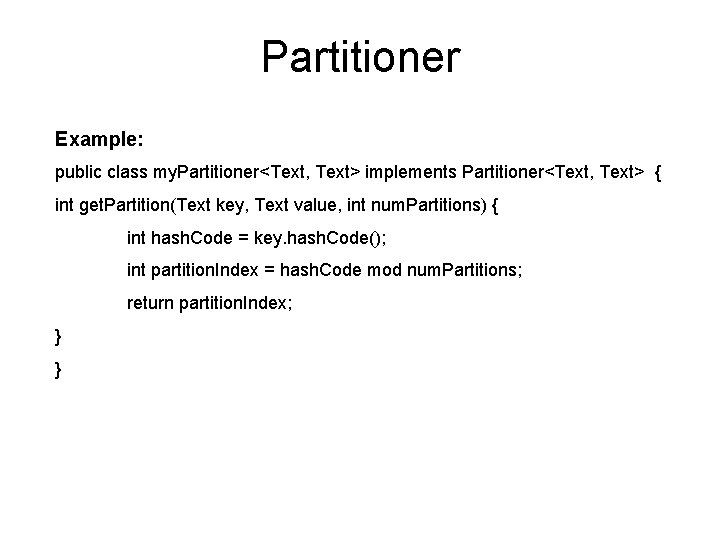

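Partitioner Example:

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapred.JobConf;
    import org.apache.hadoop.mapred.Partitioner;

    public class MyPartitioner implements Partitioner<Text, Text> {
        public void configure(JobConf job) { }

        public int getPartition(Text key, Text value, int numPartitions) {
            int hashCode = key.hashCode();
            // Clear the sign bit first: hashCode() may be negative, and Java's %
            // would then return a negative (invalid) partition index.
            int partitionIndex = (hashCode & Integer.MAX_VALUE) % numPartitions;
            return partitionIndex;
        }
    }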
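Masking the sign bit matters because `hashCode()` can be negative, and Java's `%` keeps the sign of the dividend. The plain-Java demo below uses `Integer` keys, whose hash code is the value itself, to show the difference. The class and method names are hypothetical. The masked expression is the same one used by Hadoop's default `HashPartitioner`.

```java
// Compares a naive hash partition with the sign-masked one.
class HashPartitionDemo {
    // Naive version: Java's % keeps the sign of the dividend,
    // so a negative hash code gives a negative result.
    static int naivePartition(Object key, int numPartitions) {
        return key.hashCode() % numPartitions;
    }

    // Masked version: clears the sign bit, so the result is in [0, numPartitions).
    static int maskedPartition(Object key, int numPartitions) {
        return (key.hashCode() & Integer.MAX_VALUE) % numPartitions;
    }

    public static void main(String[] args) {
        Integer key = -7;  // Integer.hashCode() returns the value itself
        System.out.println(naivePartition(key, 3));   // prints -1 (invalid partition)
        System.out.println(maskedPartition(key, 3));  // prints 1
    }
}
```

`Math.abs(hashCode)` is not a safe substitute, because `Math.abs(Integer.MIN_VALUE)` is still negative.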

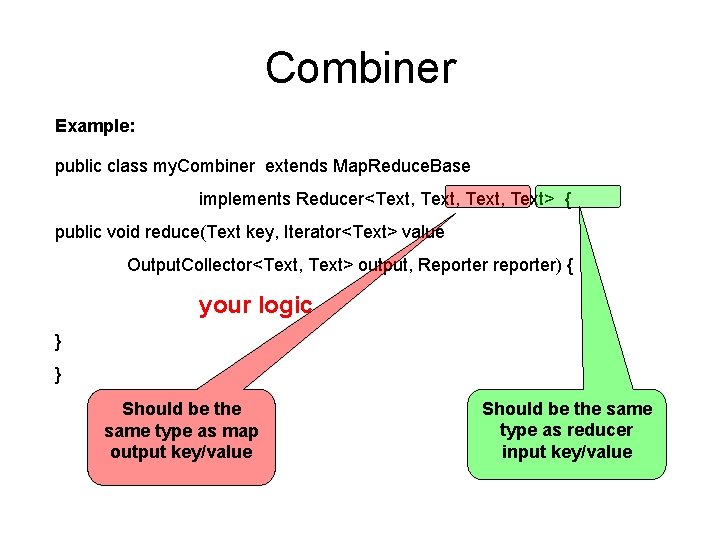

Combiner
• Reduces the amount of intermediate data before it is sent to the reducers
• Pre-aggregation
• The interface is exactly the same as the reducer's
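Here is a small simulation of pre-aggregation for a word count. It is plain Java rather than the Hadoop API, and `CombinerDemo`, `combine` and `reduce` are hypothetical names. Each mapper's `(word, 1)` pairs are represented by the list of words it emitted. Hadoop may run a combiner zero, one or several times, so the combine step must not change the final answer. Summing qualifies because a sum of partial sums equals the total sum.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java simulation of a word-count combiner followed by the reducer.
class CombinerDemo {
    // Combiner: pre-aggregates one mapper's (word, 1) pairs into (word, partialCount).
    static Map<String, Integer> combine(List<String> mapOutputKeys) {
        Map<String, Integer> partial = new LinkedHashMap<>();
        for (String word : mapOutputKeys) {
            partial.merge(word, 1, Integer::sum);
        }
        return partial;
    }

    // Reducer: sums the partial counts received from all mappers.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> totals = new LinkedHashMap<>();
        for (Map<String, Integer> partial : partials) {
            partial.forEach((word, count) -> totals.merge(word, count, Integer::sum));
        }
        return totals;
    }

    public static void main(String[] args) {
        Map<String, Integer> c1 = combine(List.of("a", "b", "a", "a"));  // 4 records -> 2
        Map<String, Integer> c2 = combine(List.of("b", "b"));            // 2 records -> 1
        System.out.println(c1 + " " + c2);            // prints {a=3, b=1} {b=2}
        System.out.println(reduce(List.of(c1, c2)));  // prints {a=3, b=3}
    }
}
```

In the demo, six map output records shrink to three before the simulated shuffle, and the reducer's totals are unchanged.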

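Combiner Example:

    import java.io.IOException;
    import java.util.Iterator;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapred.*;

    public class MyCombiner extends MapReduceBase
            implements Reducer<Text, Text, Text, Text> {
        public void reduce(Text key, Iterator<Text> values,
                           OutputCollector<Text, Text> output, Reporter reporter)
                throws IOException {
            // your logic
        }
    }

• Input key/value types (first two type parameters): should be the same as the map output key/value types
• Output key/value types (last two type parameters): should be the same as the reducer input key/value types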

Parameter
• Cluster-level parameters (e.g. HDFS block size)
• Job-specific parameters (e.g. number of reducers, map output buffer size)
  – Configurable; important for job performance
• User-defined parameters
  – Used to pass information from the driver to the mapper/reducer
  – Help make your mapper/reducer more generic

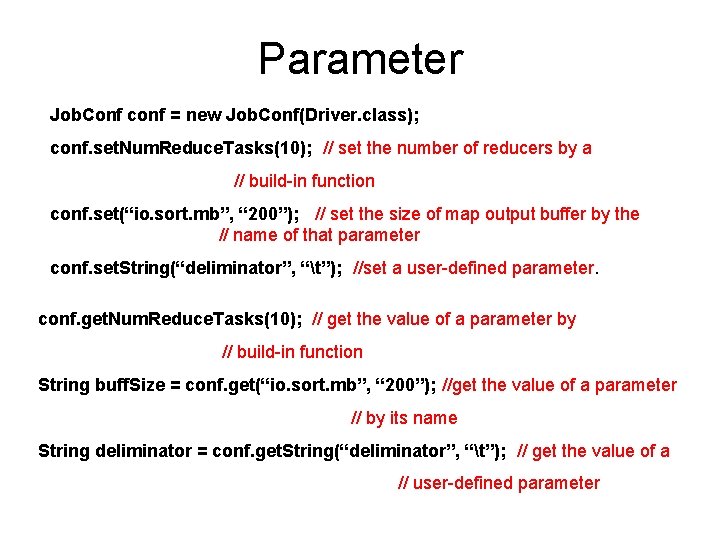

Parameter

    JobConf conf = new JobConf(Driver.class);
    conf.setNumReduceTasks(10);     // set the number of reducers by a built-in method
    conf.set("io.sort.mb", "200");  // set the size of the map output buffer (in MB)
                                    // by the name of that parameter
    conf.set("delimiter", "\t");    // set a user-defined parameter

    int numReducers = conf.getNumReduceTasks();       // get a parameter by a built-in method
    String buffSize = conf.get("io.sort.mb", "200");  // get a parameter by its name
                                                      // (second argument is the default)
    String delimiter = conf.get("delimiter", "\t");   // get a user-defined parameter
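On the task side, an old-API mapper typically reads a user-defined parameter in its `configure(JobConf job)` method, for example with `job.get("delimiter", "\t")`. The plain-Java sketch below imitates that pattern so it can run without Hadoop. A `Map<String, String>` stands in for the job configuration, and `LineSplitter` and the key name `delimiter` are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical "mapper" that reads its delimiter from the configuration once,
// in configure(), instead of hard-coding it.
class LineSplitter {
    private String delimiter = "\t";

    // A Map<String, String> stands in for the job configuration here.
    void configure(Map<String, String> conf) {
        delimiter = conf.getOrDefault("delimiter", "\t");
    }

    // Splits a line into fields. Pattern.quote makes the delimiter literal,
    // since String.split would otherwise treat it as a regular expression.
    List<String> map(String line) {
        return Arrays.asList(line.split(Pattern.quote(delimiter)));
    }

    public static void main(String[] args) {
        LineSplitter tabs = new LineSplitter();
        tabs.configure(Map.of());                  // parameter not set: default tab
        System.out.println(tabs.map("a\tb\tc"));  // prints [a, b, c]

        LineSplitter pipes = new LineSplitter();
        pipes.configure(Map.of("delimiter", "|"));
        System.out.println(pipes.map("x|y"));     // prints [x, y]
    }
}
```

Because the value is read once in `configure`, `map` stays free of configuration lookups. The same class can then handle tab-, comma- or pipe-separated input, depending on what the driver sets.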

Parameter
• There are some built-in parameters managed by Hadoop. We are not supposed to change them, but we can read them
  – String inputFile = jobConf.get("map.input.file");
  – Gets the path to the current input file
  – Used in joining datasets
*For the new API, use: FileSplit split = (FileSplit) context.getInputSplit(); then split.getPath() returns the path of the current input file
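In a join, the input path tells the mapper which dataset the current record belongs to. The plain-Java sketch below assumes the path string has already been obtained, from `map.input.file` in the old API or `split.getPath()` in the new one. The directory names `/users/` and `/orders/`, the `U`/`O` tags and the class `JoinTagger` are all hypothetical. Records are assumed to be tab-separated with the join key first.

```java
// Hypothetical reduce-side join helper: tags each record with its source
// dataset, based on the current input file path.
class JoinTagger {
    // Decides which dataset a record came from by looking at its file path.
    static String tagFor(String inputFile) {
        if (inputFile.contains("/users/")) {
            return "U";
        }
        if (inputFile.contains("/orders/")) {
            return "O";
        }
        throw new IllegalArgumentException("unknown input: " + inputFile);
    }

    // "Map" step: emits the join key (first field) and a tagged value, so the
    // reducer can tell the two datasets apart among one key's values.
    static String[] map(String inputFile, String line) {
        String[] fields = line.split("\t", 2);
        String rest = fields.length > 1 ? fields[1] : "";
        return new String[] {fields[0], tagFor(inputFile) + "\t" + rest};
    }

    public static void main(String[] args) {
        String[] kv = map("hdfs://nn/data/users/part-00000", "42\tAlice");
        System.out.println(kv[0] + " -> " + kv[1]);  // key "42", value "U<TAB>Alice"
    }
}
```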

More about Hadoop
• Identity Mapper/Reducer
  – Output == input; no modification
• Why do we need map/reduce functions without any logic in them?
  – Sorting!
  – More generally, when you only want to use the basic functionality provided by Hadoop (e.g. sorting/grouping)
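To see why an identity job sorts, the sketch below simulates one in plain Java with a single reducer. The class and method names are hypothetical. The map and reduce steps copy records unchanged. All the sorting and grouping come from the simulated shuffle, a `TreeMap` keyed by the record key. With several reducers, each reducer's output would be sorted on its own, but the output would not be sorted globally unless the partitioner assigns key ranges in order.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java simulation of a job whose mapper and reducer do nothing.
class IdentitySortDemo {
    static List<String> sortJob(List<Map.Entry<String, String>> input) {
        // Identity map + simulated shuffle: records are emitted unchanged,
        // then sorted by key with the values of equal keys grouped together.
        TreeMap<String, List<String>> shuffled = new TreeMap<>();
        for (Map.Entry<String, String> record : input) {
            shuffled.computeIfAbsent(record.getKey(), k -> new ArrayList<>())
                    .add(record.getValue());
        }
        // Identity reduce: every (key, value) pair is written out unchanged.
        List<String> output = new ArrayList<>();
        for (Map.Entry<String, List<String>> group : shuffled.entrySet()) {
            for (String value : group.getValue()) {
                output.add(group.getKey() + "\t" + value);
            }
        }
        return output;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, String>> input = List.of(
                Map.entry("pear", "3"), Map.entry("apple", "1"), Map.entry("fig", "2"));
        // prints the apple, fig and pear records, in that order
        sortJob(input).forEach(System.out::println);
    }
}
```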

More about Hadoop
• How is the number of splits determined?
  – If a file is large enough and splittable, it is split into multiple pieces (by default, split size = block size)
  – If a file is non-splittable, there is only one split
  – If a file is small (smaller than a block), there is one split for the file, unless. . .
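The split rule above fits in a small function. This plain-Java sketch covers only the simplified rule: split size = block size, and file size greater than zero. `SplitCount` is a hypothetical name, and the sketch ignores the additional details of Hadoop's real `FileInputFormat` logic. The 64 MB block size in `main` is only an example value.

```java
// Simplified split-count rule: one split per block for splittable files,
// one split for non-splittable or small files.
class SplitCount {
    static long numSplits(long fileSize, long blockSize, boolean splittable) {
        if (!splittable || fileSize <= blockSize) {
            return 1;  // non-splittable or small file: one split for the whole file
        }
        // Ceiling division: a partial last block still needs its own split.
        return (fileSize + blockSize - 1) / blockSize;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long block = 64 * mb;  // example block size
        System.out.println(numSplits(200 * mb, block, true));   // prints 4
        System.out.println(numSplits(200 * mb, block, false));  // prints 1
        System.out.println(numSplits(10 * mb, block, true));    // prints 1
    }
}
```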

More about Hadoop
• CombineFileInputFormat
  – Merges multiple small files into one split, which is processed by one mapper
  – Saves mapper slots and reduces the overhead
• Other options for handling small files?
  – hadoop fs -getmerge src dest (concatenates the files in an HDFS directory into a single local file)
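The packing idea behind CombineFileInputFormat fits in a few lines of plain Java. `SmallFilePacker` and its greedy, in-order strategy are hypothetical simplifications. The real class also takes node and rack locality into account. Sizes are in arbitrary units, MB in the example.

```java
import java.util.ArrayList;
import java.util.List;

// Greedy sketch: packs file sizes, in order, into splits no larger than
// maxSplitSize. A file bigger than maxSplitSize gets a split of its own here.
class SmallFilePacker {
    static List<List<Long>> pack(List<Long> fileSizes, long maxSplitSize) {
        List<List<Long>> splits = new ArrayList<>();
        List<Long> current = new ArrayList<>();
        long currentSize = 0;
        for (long size : fileSizes) {
            if (!current.isEmpty() && currentSize + size > maxSplitSize) {
                splits.add(current);  // close the current split
                current = new ArrayList<>();
                currentSize = 0;
            }
            current.add(size);
            currentSize += size;
        }
        if (!current.isEmpty()) {
            splits.add(current);
        }
        return splits;
    }

    public static void main(String[] args) {
        List<Long> sizes = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            sizes.add(10L);  // ten 10 MB files
        }
        // prints [[10, 10, 10, 10, 10, 10], [10, 10, 10, 10]]
        System.out.println(pack(sizes, 64L));
    }
}
```

With one mapper per split, the ten small files in `main` need two map tasks instead of ten.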
