Beyond binary classification The slides are closely adapted

Beyond binary classification The slides are closely adapted from Subhransu Maji’s slides

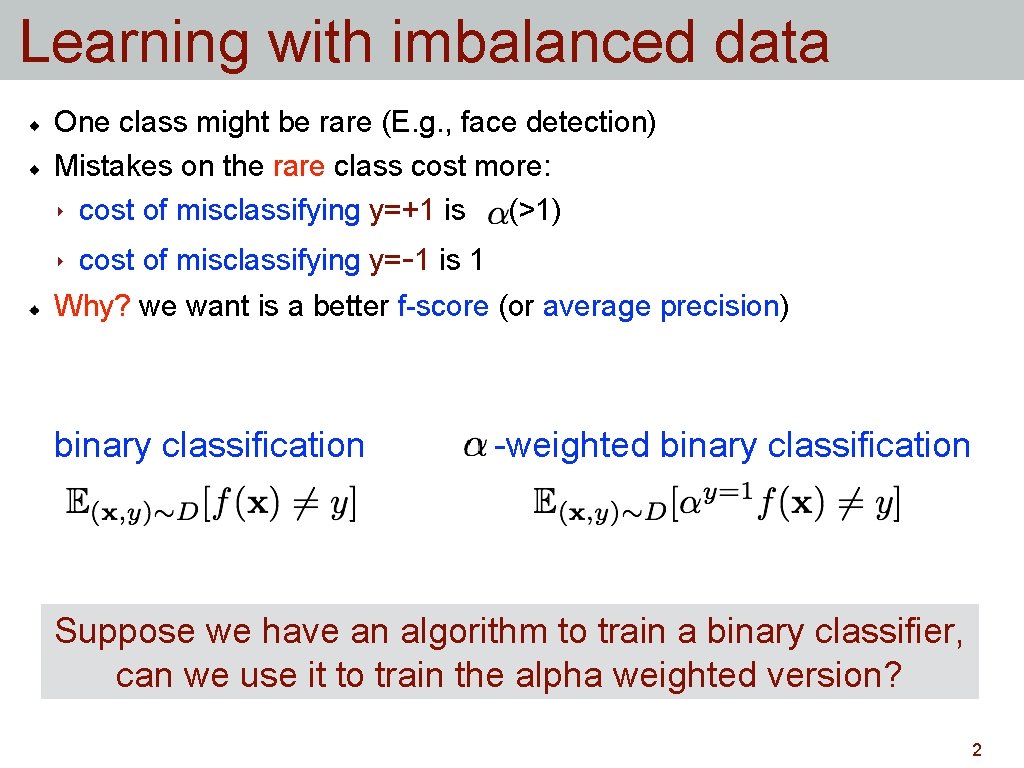

Learning with imbalanced data One class might be rare (E. g. , face detection) Mistakes on the rare class cost more: ‣ cost of misclassifying y=+1 is (>1) ‣ cost of misclassifying y=-1 is 1 Why? we want is a better f-score (or average precision) binary classification -weighted binary classification Suppose we have an algorithm to train a binary classifier, can we use it to train the alpha weighted version? 2

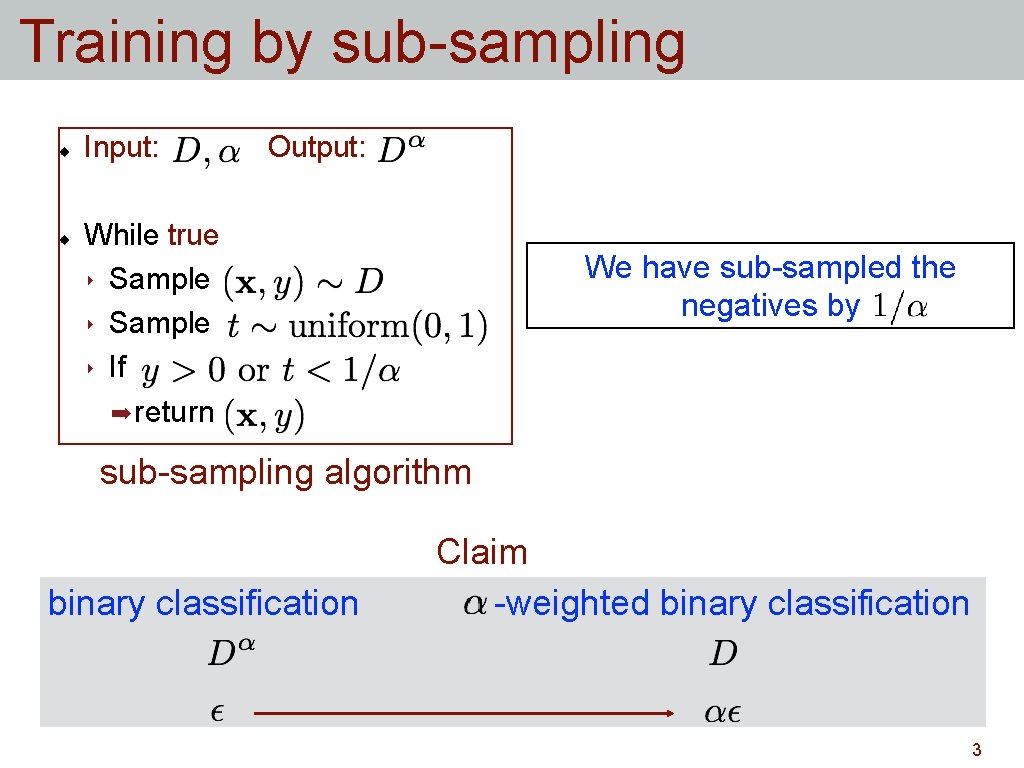

Training by sub-sampling Input: Output: While true ‣ Sample ‣ If ➡ return We have sub-sampled the negatives by sub-sampling algorithm binary classification Claim -weighted binary classification 3

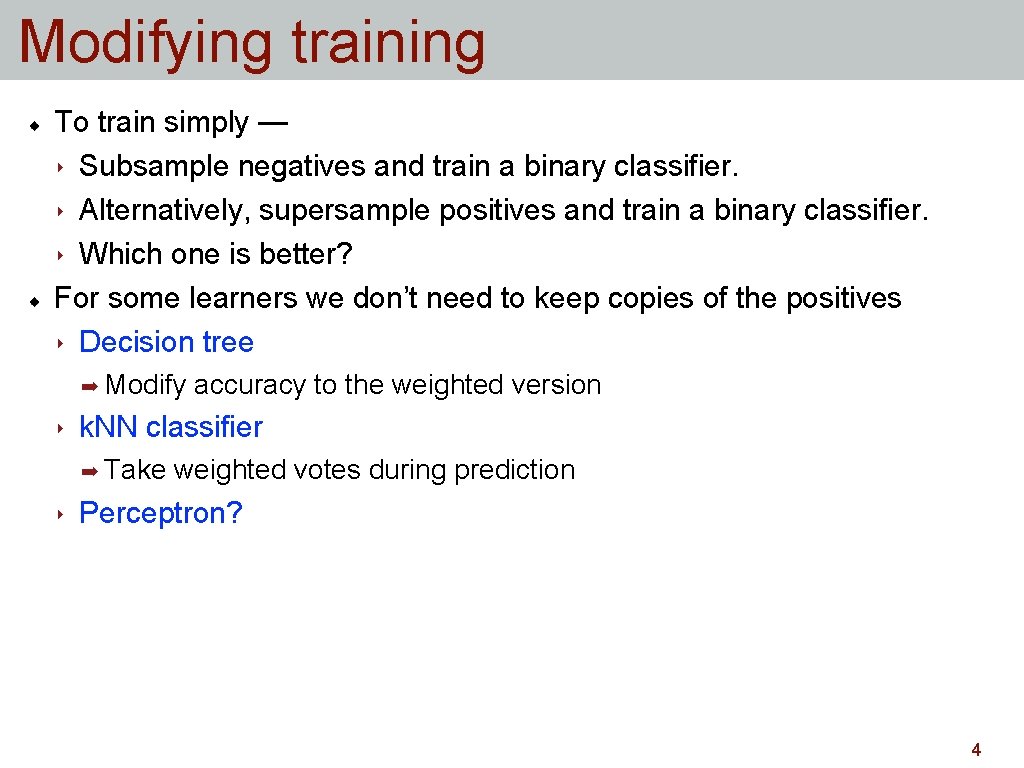

Modifying training To train simply — ‣ Subsample negatives and train a binary classifier. ‣ Alternatively, supersample positives and train a binary classifier. ‣ Which one is better? For some learners we don’t need to keep copies of the positives ‣ Decision tree ➡ Modify ‣ k. NN classifier ➡ Take ‣ accuracy to the weighted version weighted votes during prediction Perceptron? 4

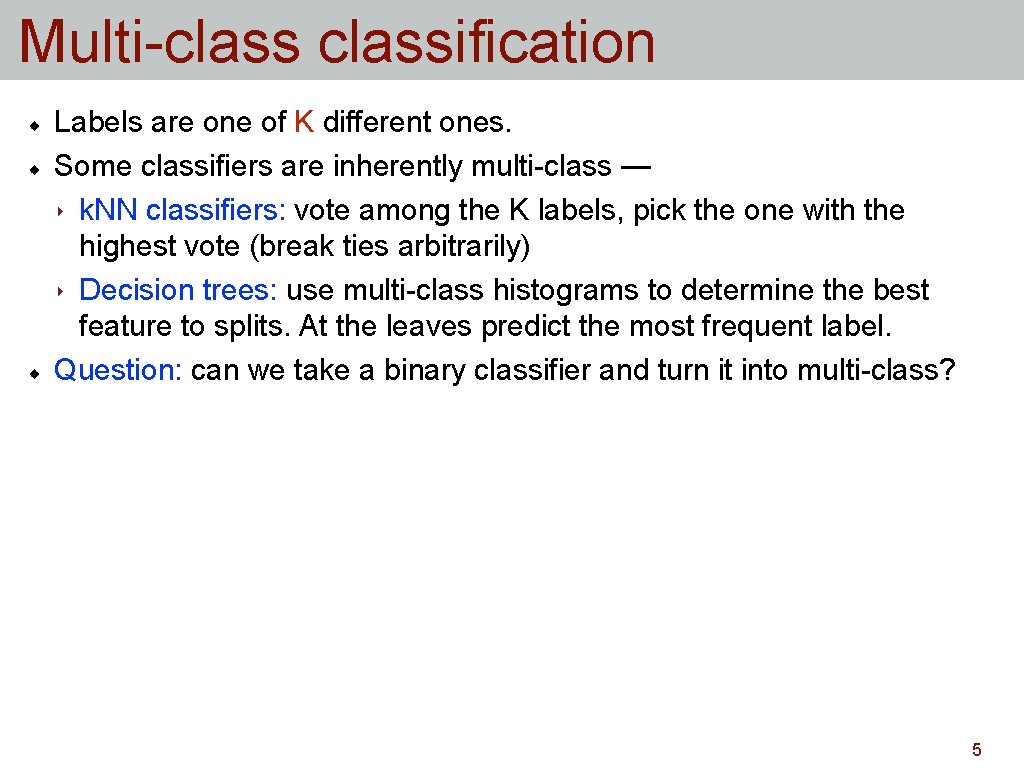

Multi-classification Labels are one of K different ones. Some classifiers are inherently multi-class — ‣ k. NN classifiers: vote among the K labels, pick the one with the highest vote (break ties arbitrarily) ‣ Decision trees: use multi-class histograms to determine the best feature to splits. At the leaves predict the most frequent label. Question: can we take a binary classifier and turn it into multi-class? 5

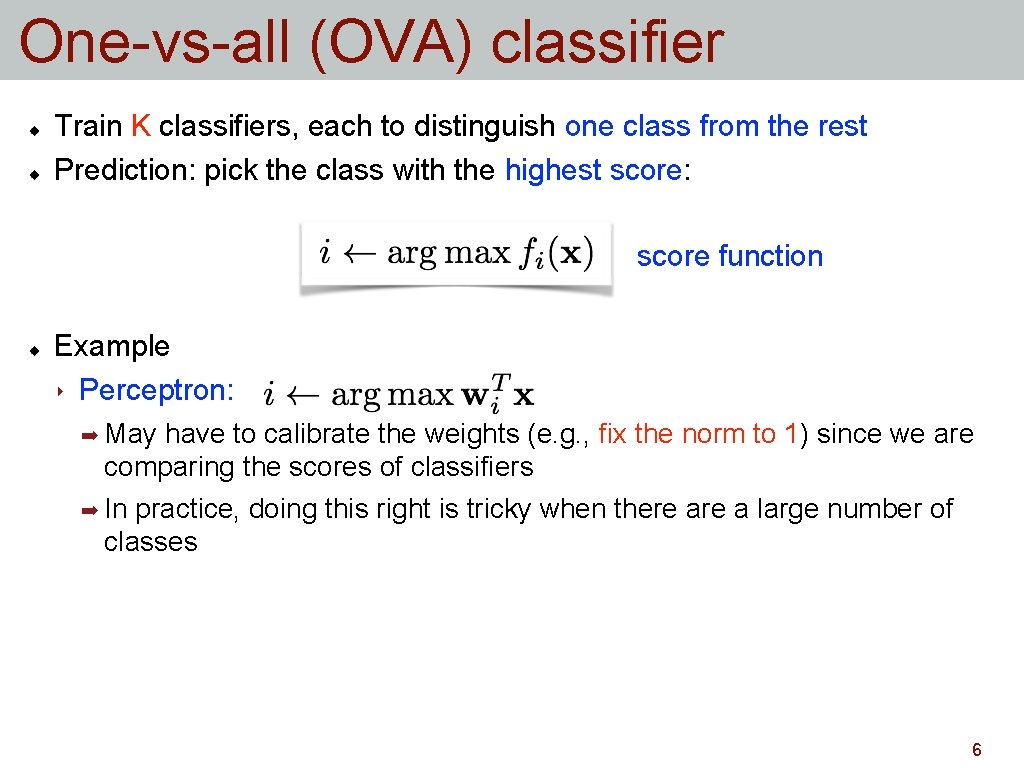

One-vs-all (OVA) classifier Train K classifiers, each to distinguish one class from the rest Prediction: pick the class with the highest score: score function Example ‣ Perceptron: ➡ May have to calibrate the weights (e. g. , fix the norm to 1) since we are comparing the scores of classifiers ➡ In practice, doing this right is tricky when there a large number of classes 6

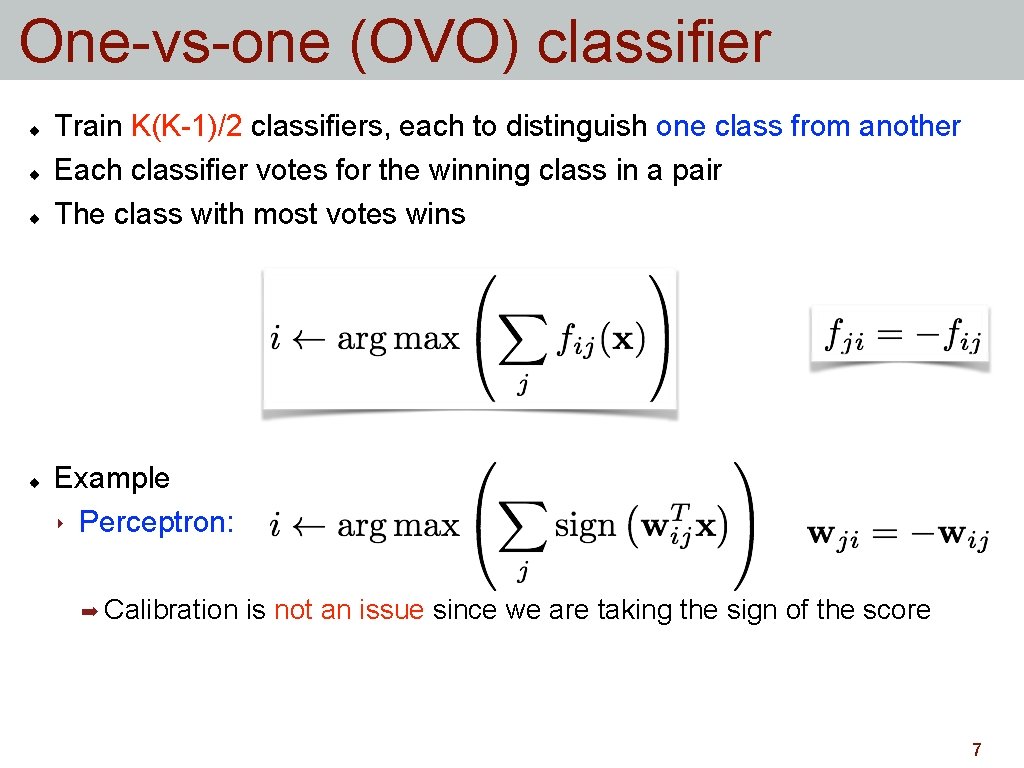

One-vs-one (OVO) classifier Train K(K-1)/2 classifiers, each to distinguish one class from another Each classifier votes for the winning class in a pair The class with most votes wins Example ‣ Perceptron: ➡ Calibration is not an issue since we are taking the sign of the score 7

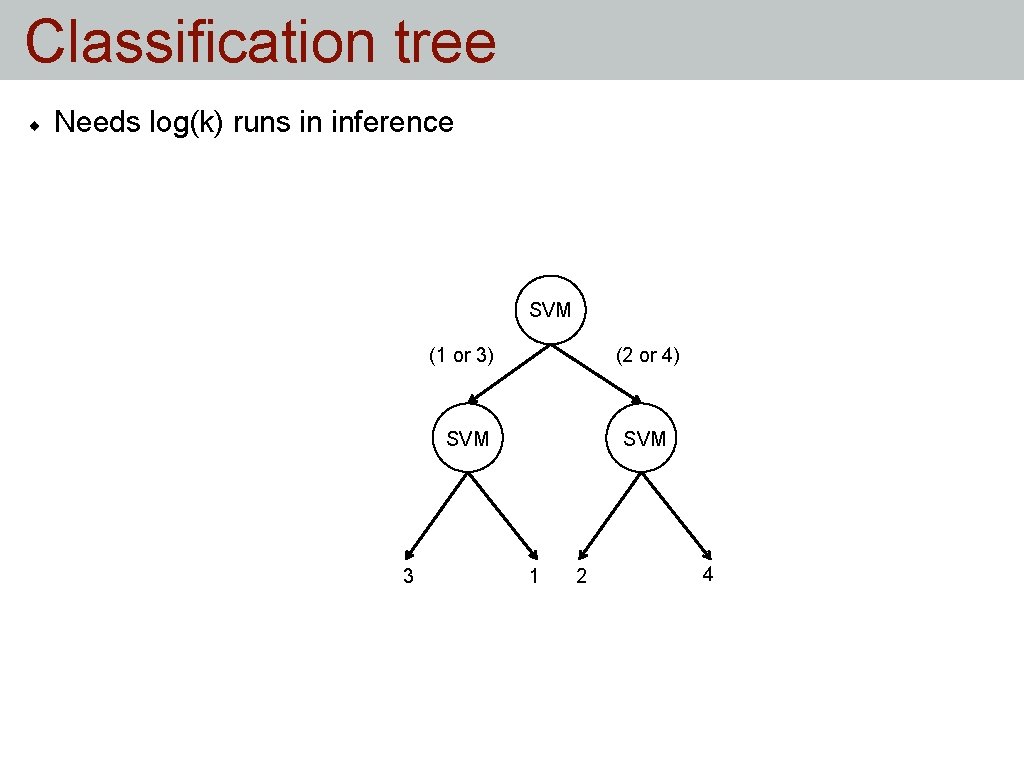

Classification tree Needs log(k) runs in inference SVM (1 or 3) (2 or 4) SVM 3 SVM 1 2 4

![Directed acyclic graph (DAG) classifier DAG SVM [Platt et al. , NIPS 2000] ‣ Directed acyclic graph (DAG) classifier DAG SVM [Platt et al. , NIPS 2000] ‣](http://slidetodoc.com/presentation_image_h2/e948e3c8c5f0e8fa9d365c8b14e7c639/image-9.jpg)

Directed acyclic graph (DAG) classifier DAG SVM [Platt et al. , NIPS 2000] ‣ Faster testing: O(K) instead of O(K(K-1)/2) ‣ Has some theoretical guarantees Figure from Platt et al. 9

Ranking 10

Ranking Input: query (e. g. “cats”) Output: a sorted list of items How should we measure performance? The loss function is trickier than in the binary classification case ‣ Example 1: All items in the first page should be relevant ‣ Example 2: All relevant items should be ahead of irrelevant items 11

Learning to rank For simplicity lets assume we are learning to rank for a given query. Learning to rank: ‣ Input: a list of items ‣ Output: a function that takes a set of items and returns a sorted list Approaches ‣ Pointwise approach: ➡ Assumes that each document has a numerical score. ➡ Learn a model to predict the score (e. g. linear regression). ‣ Pairwise approach: ➡ Ranking is approximated by a classification problem. ➡ Learn a binary classifier that can tell which item is better given a pair. 12

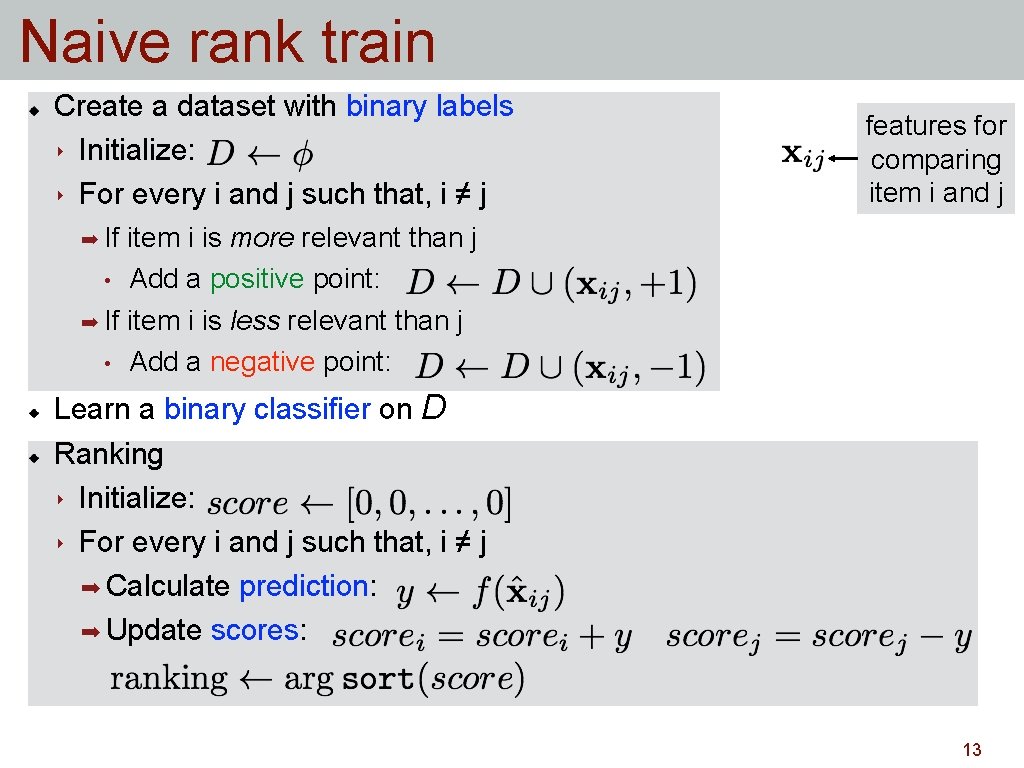

Naive rank train Create a dataset with binary labels ‣ Initialize: ‣ For every i and j such that, i ≠ j features for comparing item i and j ➡ If item i is more relevant than j • Add a positive point: ➡ If item i is less relevant than j • Add a negative point: Learn a binary classifier on D Ranking ‣ Initialize: ‣ For every i and j such that, i ≠ j ➡ Calculate prediction: ➡ Update scores: 13

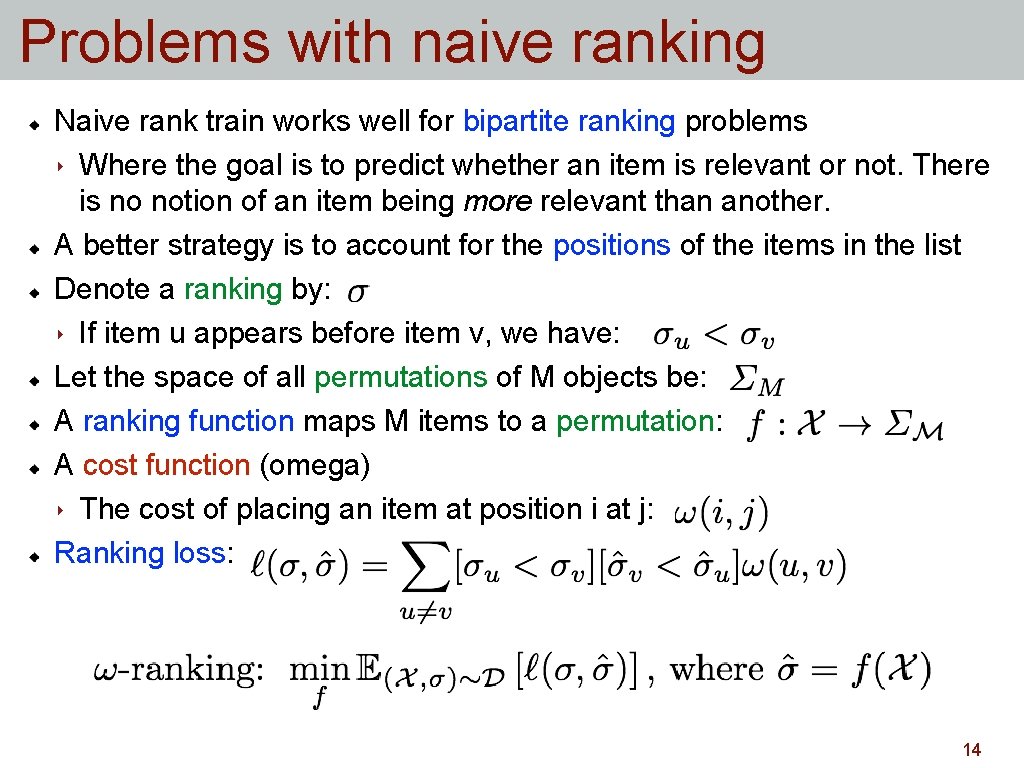

Problems with naive ranking Naive rank train works well for bipartite ranking problems ‣ Where the goal is to predict whether an item is relevant or not. There is no notion of an item being more relevant than another. A better strategy is to account for the positions of the items in the list Denote a ranking by: ‣ If item u appears before item v, we have: Let the space of all permutations of M objects be: A ranking function maps M items to a permutation: A cost function (omega) ‣ The cost of placing an item at position i at j: Ranking loss: 14

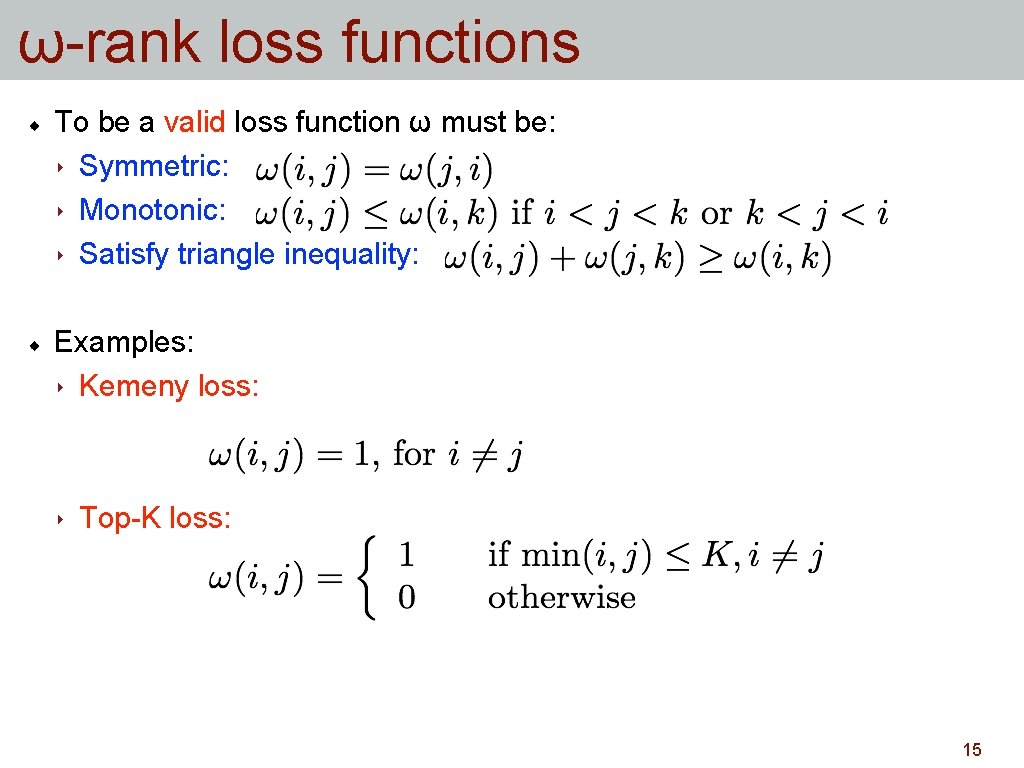

ω-rank loss functions To be a valid loss function ω must be: ‣ Symmetric: ‣ Monotonic: ‣ Satisfy triangle inequality: Examples: ‣ Kemeny loss: ‣ Top-K loss: 15

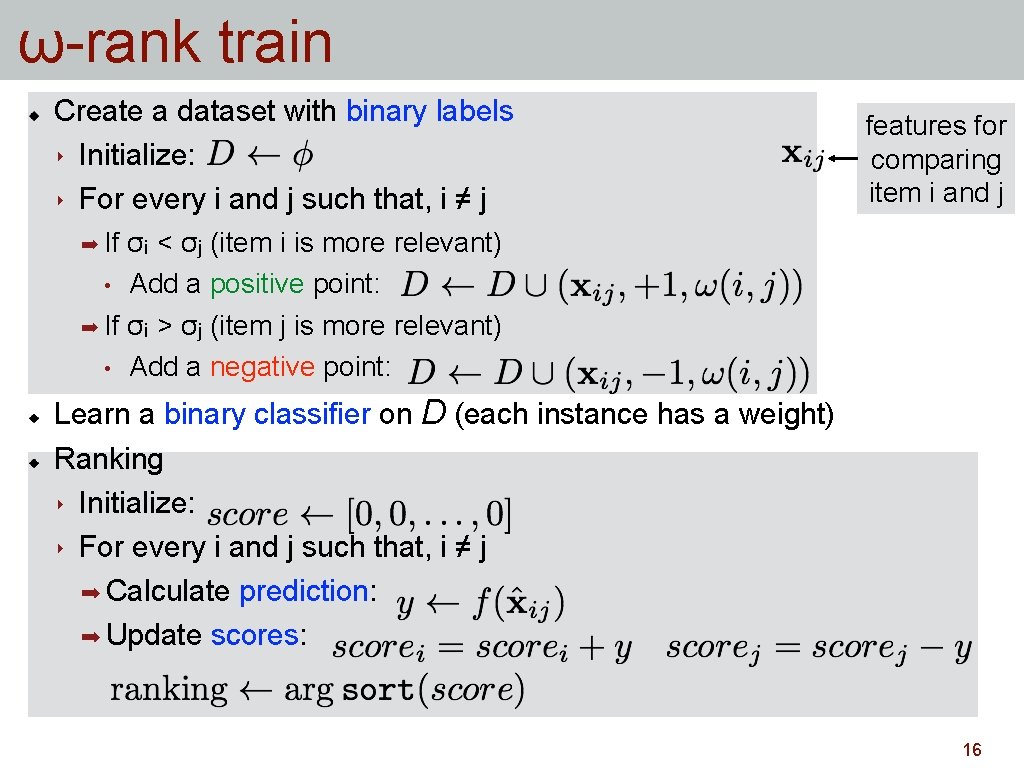

ω-rank train Create a dataset with binary labels ‣ Initialize: ‣ For every i and j such that, i ≠ j features for comparing item i and j ➡ If σᵢ < σⱼ (item i is more relevant) • Add a positive point: ➡ If σᵢ > σⱼ (item j is more relevant) • Add a negative point: Learn a binary classifier on D (each instance has a weight) Ranking ‣ Initialize: ‣ For every i and j such that, i ≠ j ➡ Calculate prediction: ➡ Update scores: 16

Structured SVM

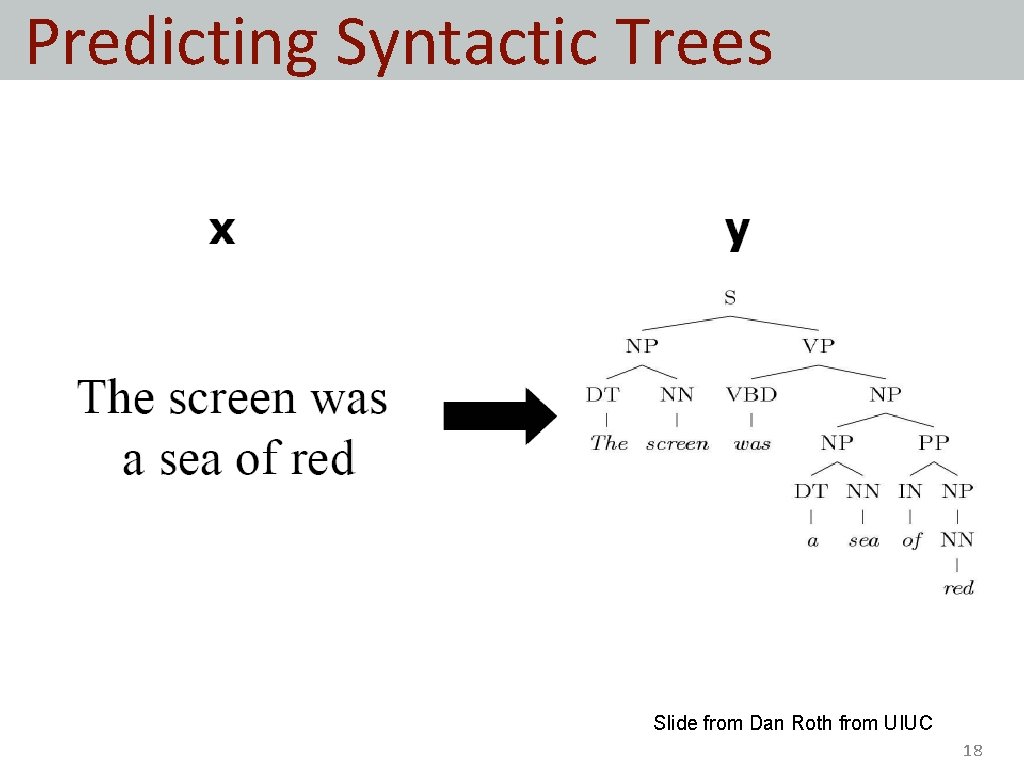

Predicting Syntactic Trees • Slide from Dan Roth from UIUC 18

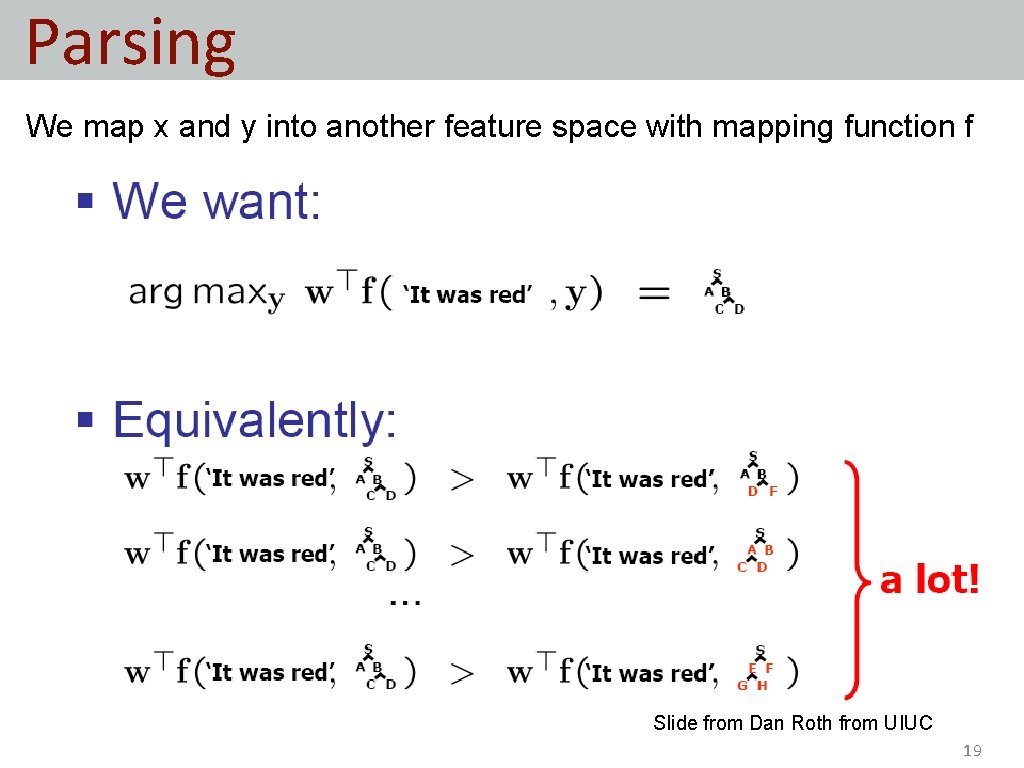

Parsing We map x and y into another feature space with mapping function f • Slide from Dan Roth from UIUC 19

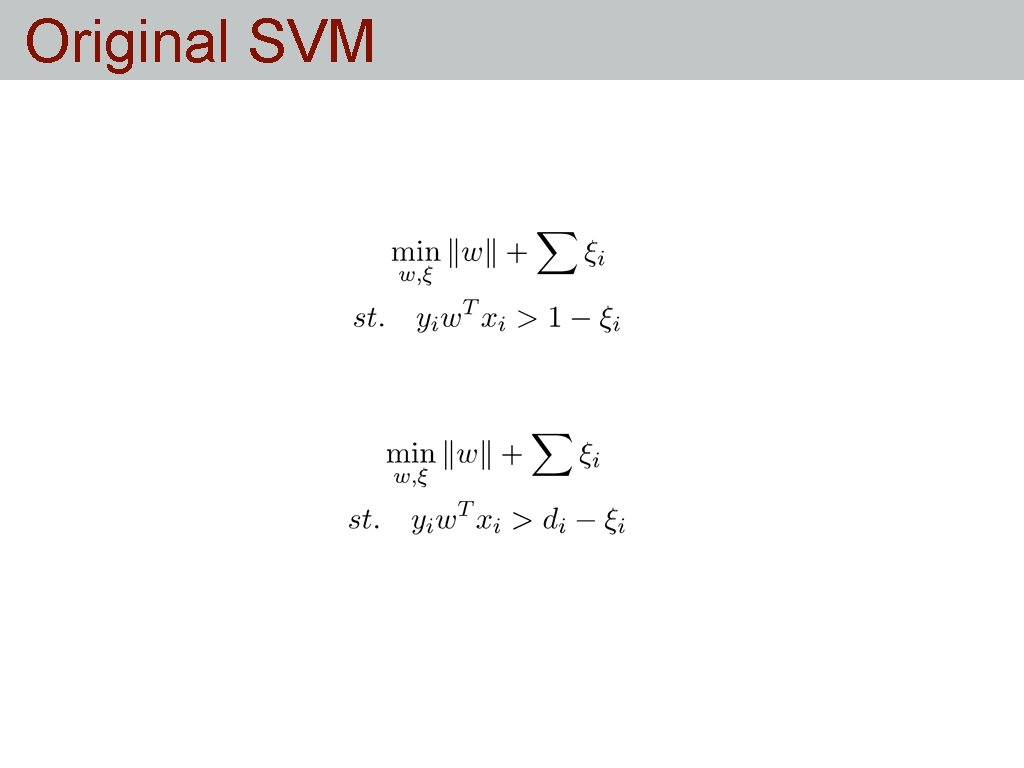

Original SVM

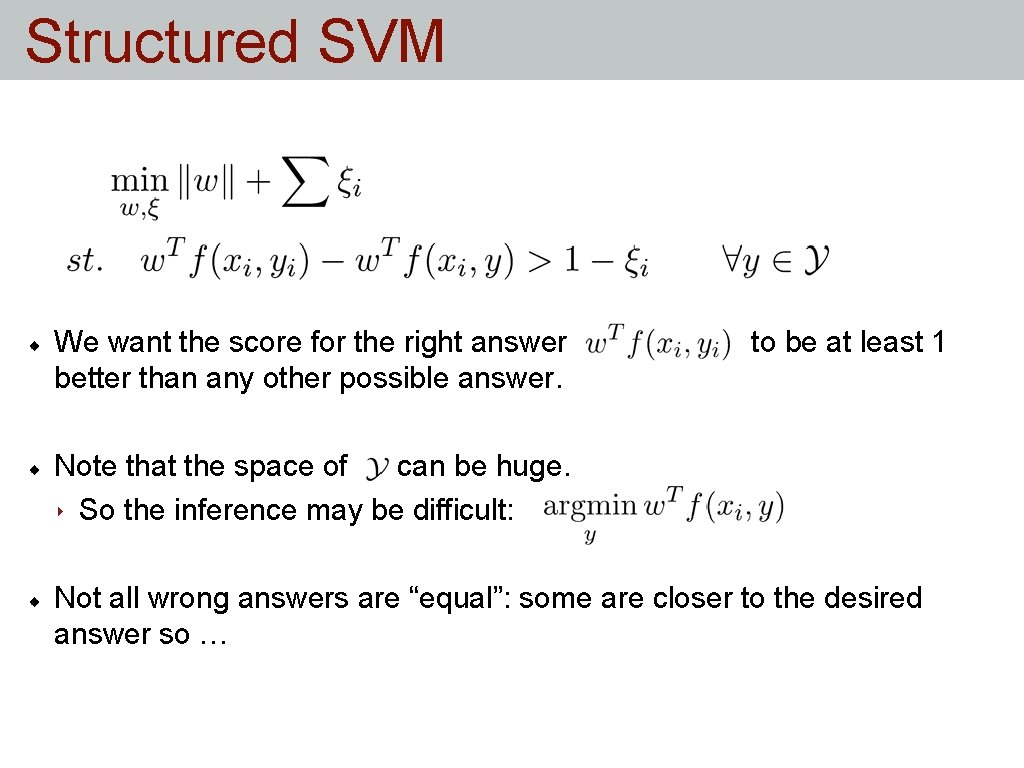

Structured SVM We want the score for the right answer better than any other possible answer. to be at least 1 Note that the space of can be huge. ‣ So the inference may be difficult: Not all wrong answers are “equal”: some are closer to the desired answer so …

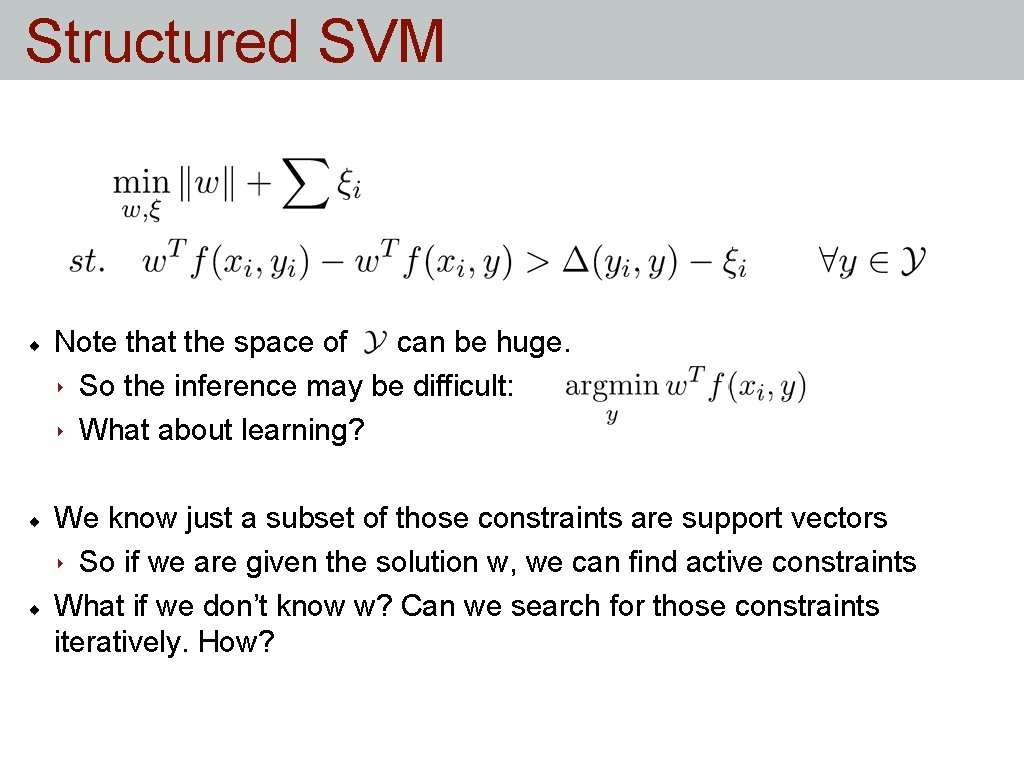

Structured SVM Note that the space of can be huge. ‣ So the inference may be difficult: ‣ What about learning? We know just a subset of those constraints are support vectors ‣ So if we are given the solution w, we can find active constraints What if we don’t know w? Can we search for those constraints iteratively. How?

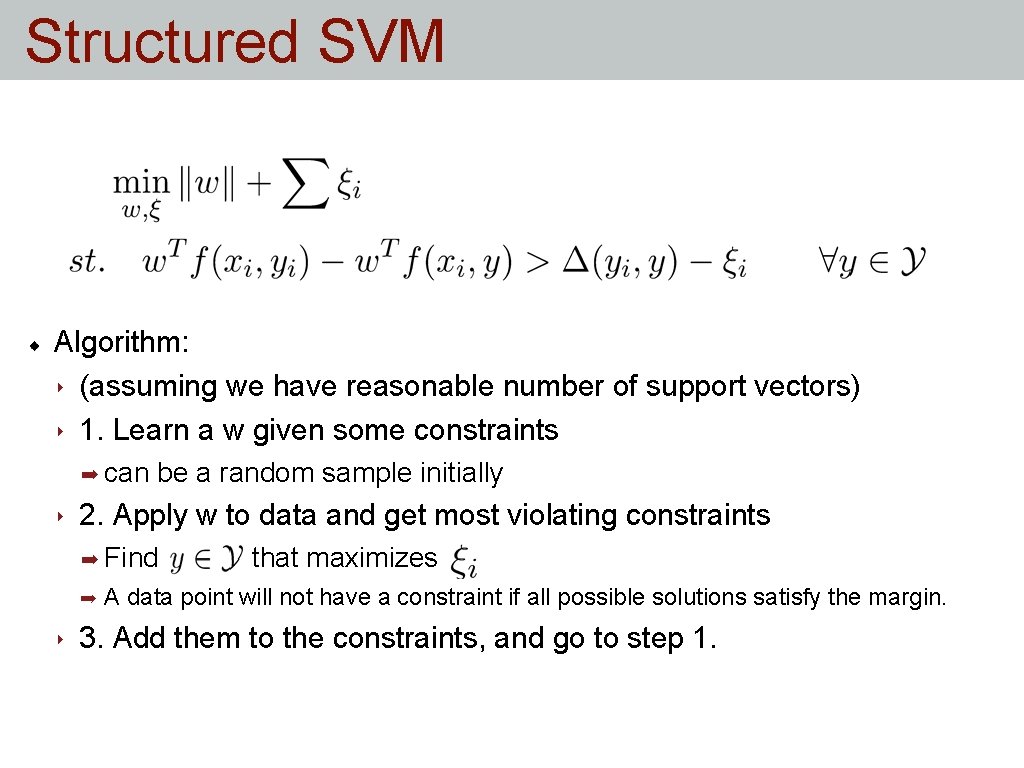

Structured SVM Algorithm: ‣ (assuming we have reasonable number of support vectors) ‣ 1. Learn a w given some constraints ➡ can ‣ be a random sample initially 2. Apply w to data and get most violating constraints ➡ Find ➡ ‣ that maximizes A data point will not have a constraint if all possible solutions satisfy the margin. 3. Add them to the constraints, and go to step 1.

Structured SVM What is a good mapping function for multi-class SVM?

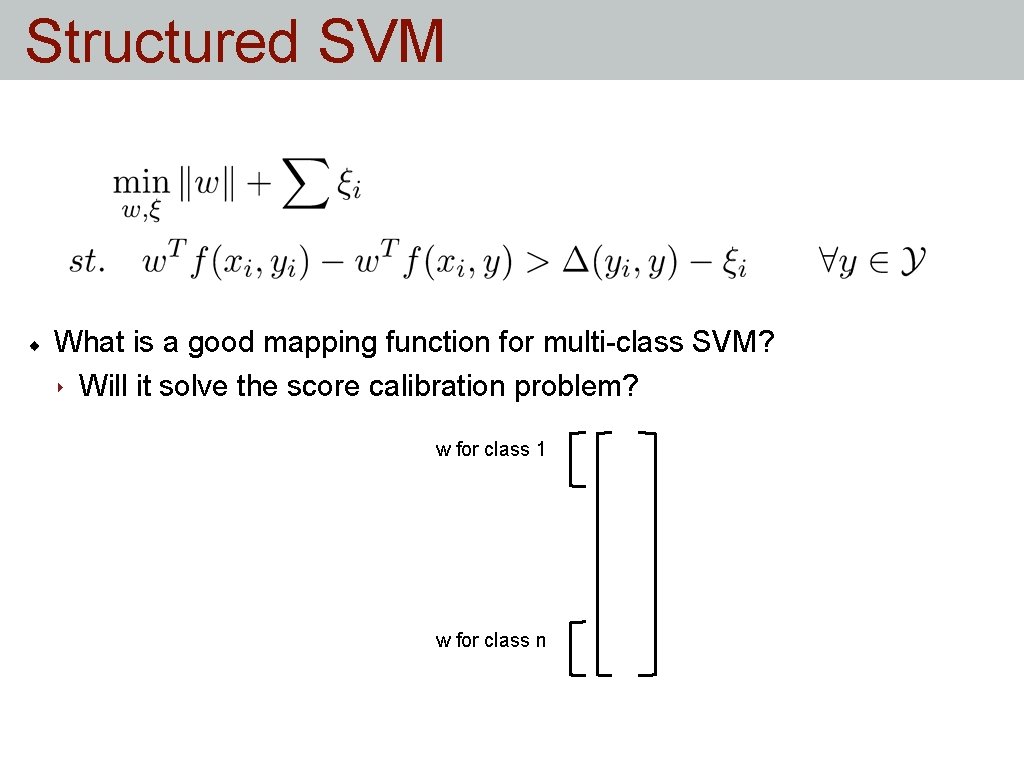

Structured SVM What is a good mapping function for multi-class SVM? ‣ Will it solve the score calibration problem? w for class 1 w for class n

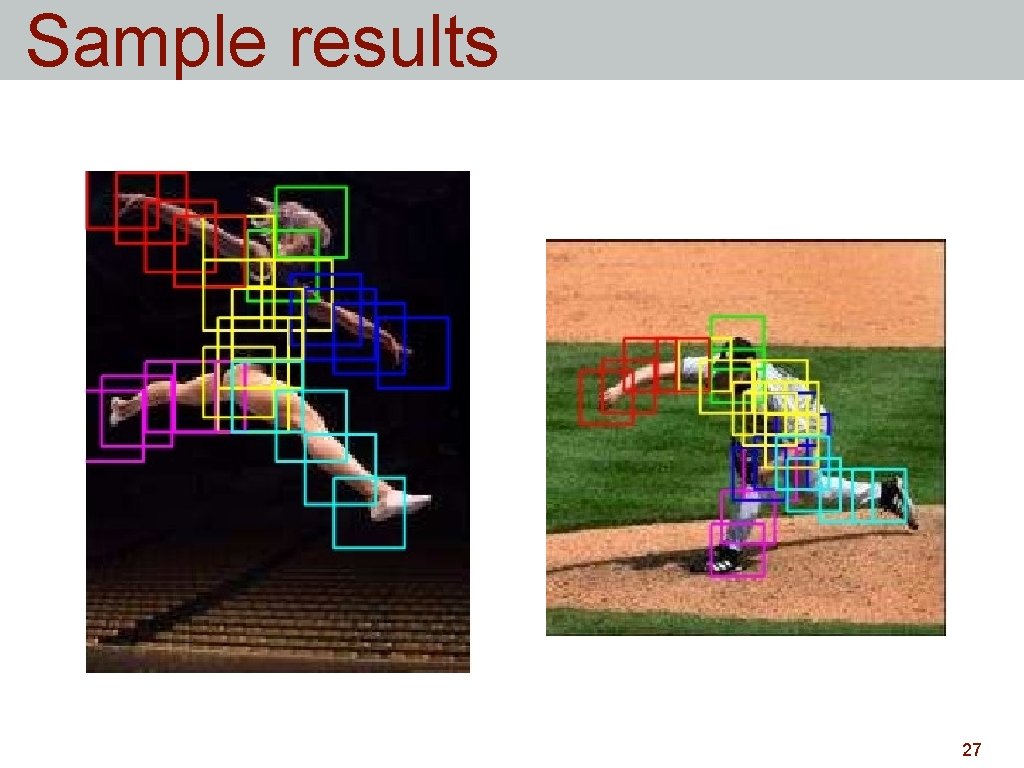

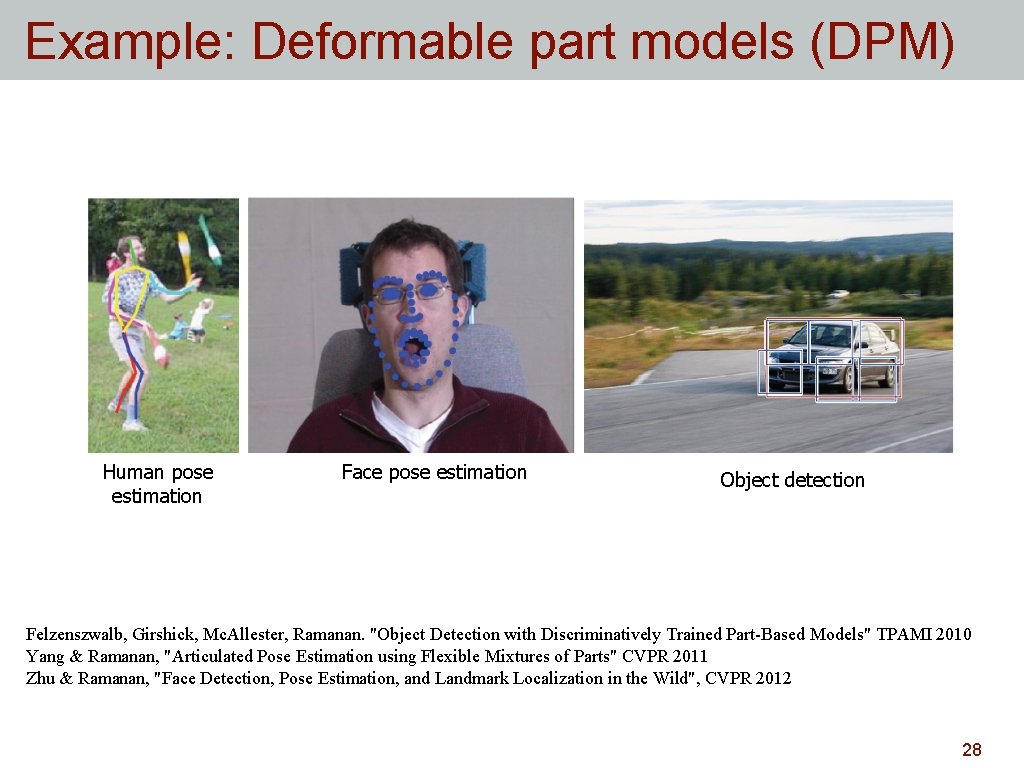

Example: Deformable part models (DPM) Human pose estimation 26

Sample results 27

Example: Deformable part models (DPM) Human pose estimation Face pose estimation Object detection Felzenszwalb, Girshick, Mc. Allester, Ramanan. "Object Detection with Discriminatively Trained Part-Based Models" TPAMI 2010 Yang & Ramanan, "Articulated Pose Estimation using Flexible Mixtures of Parts" CVPR 2011 Zhu & Ramanan, "Face Detection, Pose Estimation, and Landmark Localization in the Wild", CVPR 2012 28

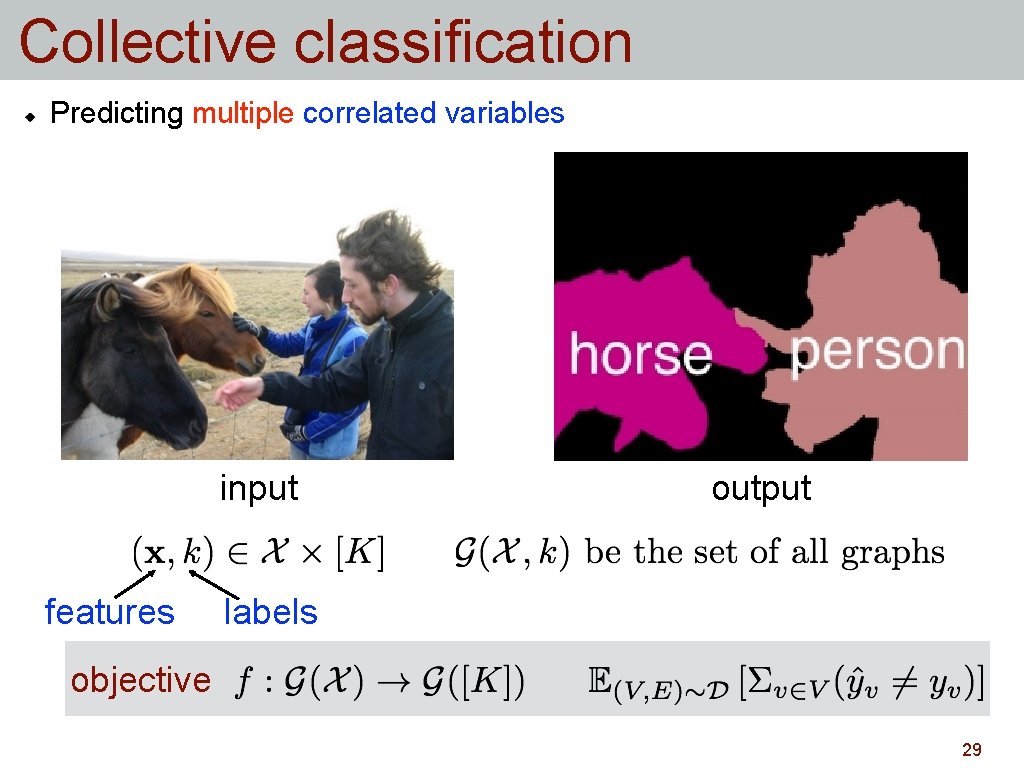

Collective classification Predicting multiple correlated variables input features output labels objective 29

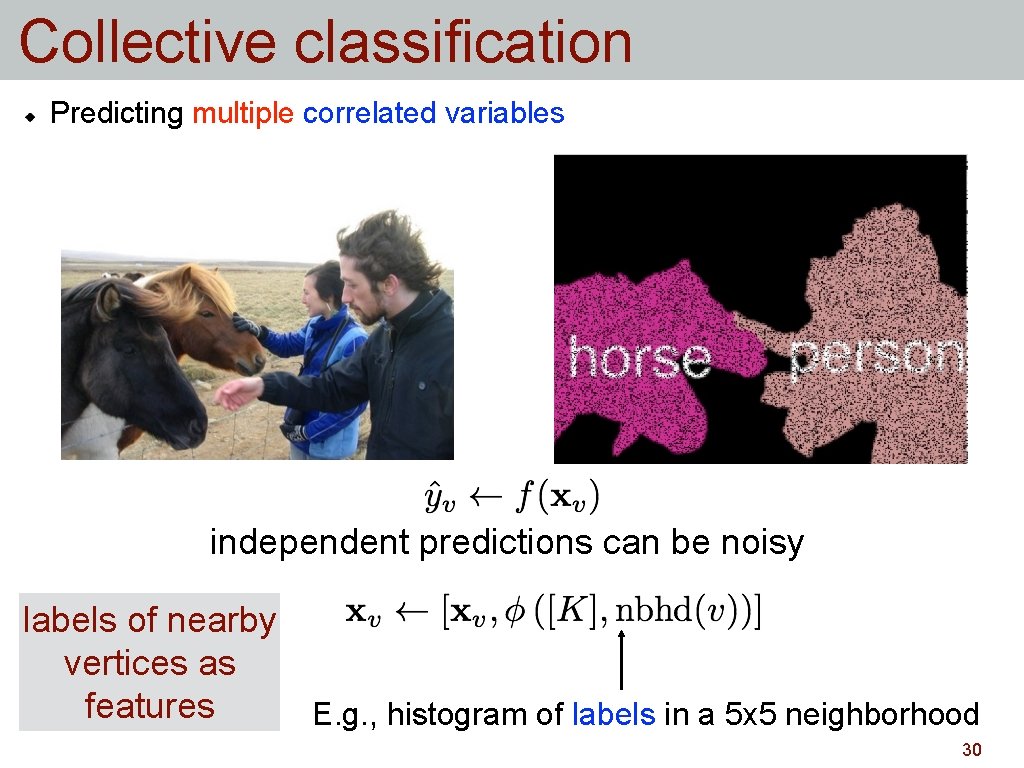

Collective classification Predicting multiple correlated variables independent predictions can be noisy labels of nearby vertices as features E. g. , histogram of labels in a 5 x 5 neighborhood 30

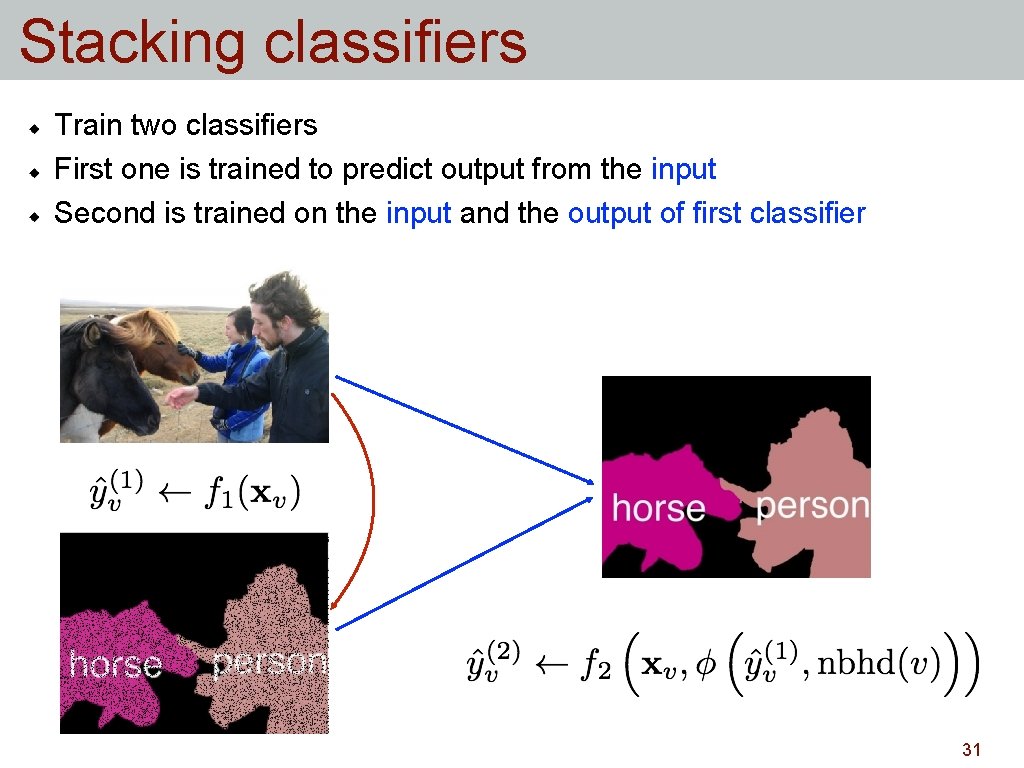

Stacking classifiers Train two classifiers First one is trained to predict output from the input Second is trained on the input and the output of first classifier 31

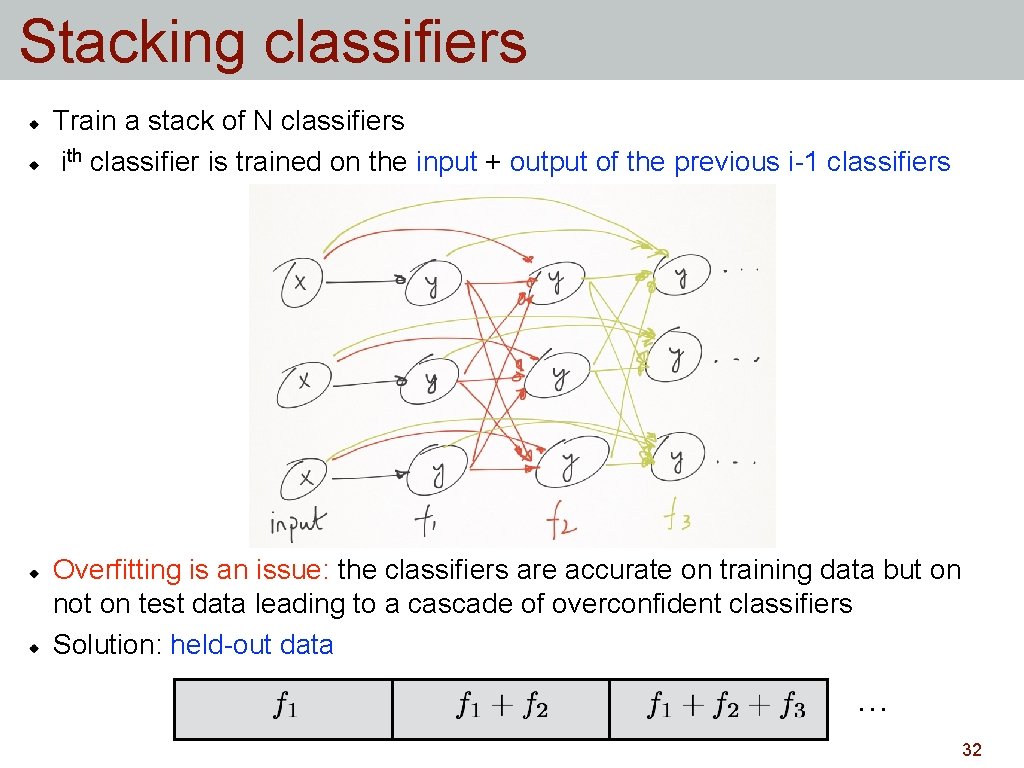

Stacking classifiers Train a stack of N classifiers ith classifier is trained on the input + output of the previous i-1 classifiers Overfitting is an issue: the classifiers are accurate on training data but on not on test data leading to a cascade of overconfident classifiers Solution: held-out data … 32

Summary Learning with imbalanced data ‣ Implicit and explicit sampling can be used to train binary classifiers for the weighted loss case Beyond binary classification ‣ Multi-classification ➡ Some classifiers are inherently multi-class ➡ Others can be combined using: one-vs-one, one-vs-all methods ‣ Ranking ➡ Ranking loss functions to capture distance between permutations ➡ Pointwise and pairwise methods ‣ Structured prediction ➡ Change SVM to predict structured output rather than binary ones. ‣ Collective classification ➡ Stacking classifiers trained with held-out data 33

Slides credit Slides are closely adapted from CIML book by Hal Daume and Subransu Maji’s course. Images for collective classification are from the PASCAL VOC dataset ‣ http: //pascallin. ecs. soton. ac. uk/challenges/VOC/ Some of the discussion is based on Wikipedia ‣ http: //en. wikipedia. org/wiki/Learning_to_rank 34

- Slides: 34