
Better Speedups for Parallel Max Flow

George C. Caragea, Uzi Vishkin

Dept. of Computer Science, University of Maryland, College Park, USA

June 4th, 2011

Experience with an Easy-to-Program Parallel Architecture

- XMT (eXplicit Multi-Threading) platform
  - Design goal: easy-to-program many-core architecture
  - PRAM-based design, PRAM-On-Chip programming
  - Ease of programming demonstrated by order-of-magnitude ease-of-teaching/learning
  - 64-processor hardware, compiler, 20+ papers, 9 grad degrees, 6 US patents
  - Only one previous single-application paper (Dascal et al., 1999)
- Parallel Max-Flow results
  - [IPDPS 2010] 2.5x speedup vs. serial using CUDA
  - [Caragea and Vishkin, SPAA 2011] up to 108.3x speedup vs. serial using XMT
    - 3-page paper

How to publish application papers on an easy-to-program platform?

- Reward game is skewed
- Easier to publish on “hard-to-program” platforms
  - Remember STI Cell?
- Application papers for easy-to-program architectures are considered “boring”
  - Even when they show good results
- Recipe for academic publication:
  - Take a simple application (e.g. Breadth-First Search in a graph)
  - Implement it on the latest (difficult-to-program) parallel architecture
  - Discuss challenges and work-arounds

![Parallel Programming Today](http://slidetodoc.com/presentation_image_h2/d0d4c3145924b10edc9bf5f89a939d1f/image-4.jpg)

Parallel Programming Today

- Current parallel programming
  - High-friction navigation: by implementation [walk/crawl]
  - Initial program (1 week) begins trial-and-error tuning (½ year; architecture dependent)
- PRAM-On-Chip programming
  - Low-friction navigation: mental design and analysis [fly]
  - No need to crawl
  - Identify most efficient algorithm
  - Advance to efficient implementation

PRAM-On-Chip Programming

- High-school student comparing parallel programming approaches
  - “I was motivated to solve all the XMT programming assignments we got, since I had to cope with solving the algorithmic problems themselves, which I enjoy doing. In contrast, I did not see the point of programming other parallel systems available to us at school, since too much of the programming was effort getting around the way the systems were engineered, and this was not fun”

Maximum Flow in Networks

- Extensively studied problem
  - Numerous algorithms and implementations (general graphs)
  - Application domains
    - Network analysis
    - Airline scheduling
    - Image processing
    - DNA sequence alignment
- Parallel Max-Flow algorithms and implementations
  - Paper has overview
  - SMPs and GPUs
  - Difficult to obtain good speedups vs. serial
    - e.g. 2.5x for hybrid CPU-GPU solution

![XMT Max-Flow Parallel Solution](http://slidetodoc.com/presentation_image_h2/d0d4c3145924b10edc9bf5f89a939d1f/image-7.jpg)

XMT Max-Flow Parallel Solution

- First stage: identify/design parallel algorithm
  - [Shiloach, Vishkin 1982] designed an O(n² log n) time, O(nm) space PRAM algorithm
  - [Goldberg, Tarjan 1988] introduced distance labels in S-V: Push-Relabel algorithm with O(m) space complexity
  - [Anderson, Setubal 1992] observed poor practical performance for G-T, augmented it with an S-V-inspired Global Relabeling heuristic
  - Solution: hybrid SV-GT PRAM algorithm
- Second stage: write PRAM-On-Chip implementation
  - Relax PRAM lock-step synchrony by grouping several PRAM steps in an XMT spawn block
    - Insert synchronization points (barriers) where needed for correctness
  - Maintain active node set instead of polling all graph nodes for work
  - Use hardware-supported atomic operations to simplify reductions
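To make the push-relabel idea and the "active node set" concrete, here is a minimal serial sketch in Python. It is not the hybrid SV-GT algorithm or the XMT implementation from the talk: there are no spawn blocks, no barriers, no atomic operations and no Global Relabeling heuristic. The function name `max_flow_push_relabel` and the `(u, v, capacity)` edge format are assumptions made for this illustration.

```python
from collections import deque


def max_flow_push_relabel(n, edges, s, t):
    """Serial push-relabel sketch with a FIFO queue of active nodes.

    n: number of nodes (0..n-1); edges: list of (u, v, capacity);
    s, t: source and sink. Illustrative only, not the XMT code.
    """
    # Residual graph: edge e and its reverse e ^ 1 are stored as a pair.
    to, cap, adj = [], [], [[] for _ in range(n)]
    for u, v, c in edges:
        adj[u].append(len(to)); to.append(v); cap.append(c)
        adj[v].append(len(to)); to.append(u); cap.append(0)

    height = [0] * n          # distance labels
    excess = [0] * n
    height[s] = n
    active = deque()          # nodes with positive excess, excluding s and t

    # Saturate every edge leaving the source.
    for e in adj[s]:
        v, c = to[e], cap[e]
        if c > 0:
            cap[e] -= c
            cap[e ^ 1] += c
            excess[s] -= c
            if excess[v] == 0 and v != s and v != t:
                active.append(v)
            excess[v] += c

    # Only active nodes are visited; the graph is never polled as a whole.
    while active:
        u = active.popleft()
        while excess[u] > 0:
            pushed = False
            for e in adj[u]:
                v = to[e]
                if cap[e] > 0 and height[u] == height[v] + 1:
                    d = min(excess[u], cap[e])      # push d units along e
                    cap[e] -= d
                    cap[e ^ 1] += d
                    excess[u] -= d
                    if excess[v] == 0 and v != s and v != t:
                        active.append(v)            # v just became active
                    excess[v] += d
                    pushed = True
                    if excess[u] == 0:
                        break
            if not pushed:
                # Relabel: lift u just above its lowest residual neighbor.
                height[u] = 1 + min(height[to[e]] for e in adj[u] if cap[e] > 0)
    return excess[t]
```

For example, calling `max_flow_push_relabel(2, [(0, 1, 5)], 0, 1)` saturates the single source edge and returns 5. In a parallel setting the nodes in the active set would be processed concurrently, which is where the barriers and atomic operations listed above come in.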

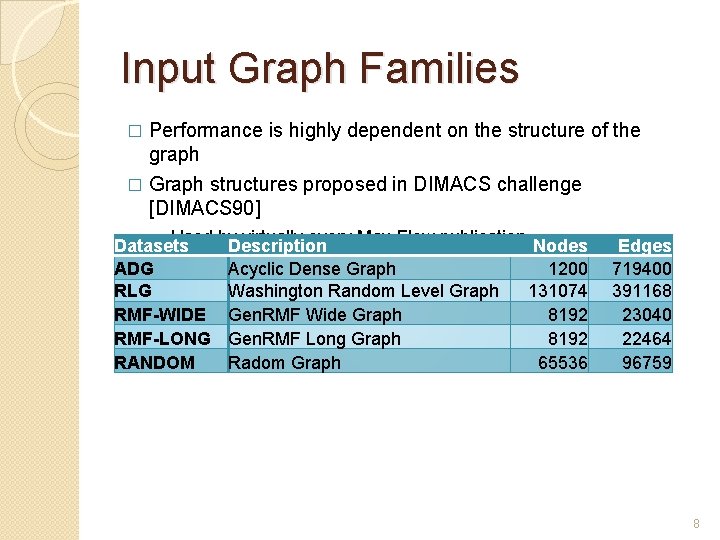

Input Graph Families

- Performance is highly dependent on the structure of the graph
- Graph structures proposed in DIMACS challenge [DIMACS 90]
  - Used by virtually every Max-Flow publication

| Dataset | Description | Nodes | Edges |
|---|---|---|---|
| ADG | Acyclic Dense Graph | 1200 | 719400 |
| RLG | Washington Random Level Graph | 131074 | 391168 |
| RMF-WIDE | GenRMF Wide Graph | 8192 | 23040 |
| RMF-LONG | GenRMF Long Graph | 8192 | 22464 |
| RANDOM | Random Graph | 65536 | 96759 |

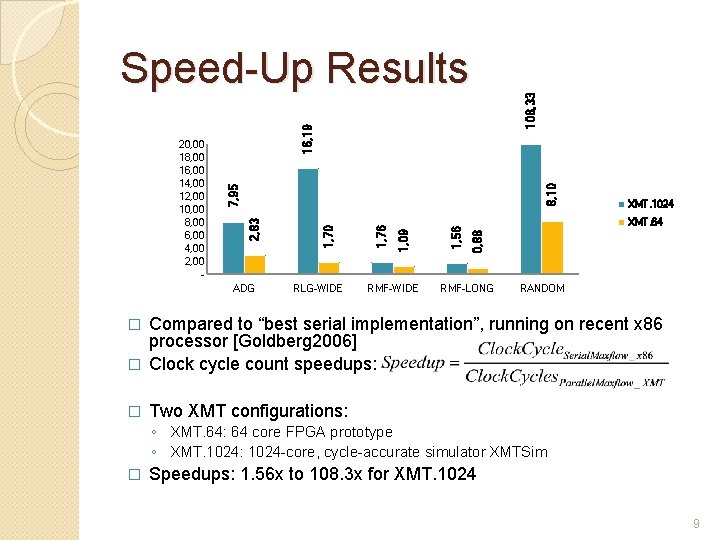

Speed-Up Results

[Figure: bar chart of speedups for ADG, RLG-WIDE, RMF-WIDE, RMF-LONG and RANDOM, one bar each for XMT.64 and XMT.1024; vertical axis from 0 to 20. Bar labels as extracted, without a recoverable assignment to datasets: 108.33, 8.10, 0.88, 1.56, 1.09, 1.76, 1.70, 2.83, 7.95, 16.19.]

- Clock-cycle-count speedups
  - Compared to the “best serial implementation” [Goldberg 2006], running on a recent x86 processor
- Two XMT configurations:
  - XMT.64: 64-core FPGA prototype
  - XMT.1024: 1024-core, cycle-accurate simulator XMTSim
- Speedups: 1.56x to 108.3x for XMT.1024
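For reference, a clock-cycle-count speedup is usually read as a ratio of cycle counts. The symbols below are notation introduced here for illustration and do not appear on the slide:

```latex
S = \frac{C_{\text{serial}}}{C_{\text{XMT}}}
```

Here $C_{\text{serial}}$ is the number of clock cycles taken by the serial implementation and $C_{\text{XMT}}$ the number taken on the XMT configuration. Under this reading, $S > 1$ means XMT needs fewer cycles, and a value below 1, such as the 0.88 label in the chart, means it needs more.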

Conclusion

- XMT aims at being an easy-to-program, general-purpose architecture
  - Performance improvements on hard-to-parallelize applications like Max-Flow
  - Ease of programming: shown by order-of-magnitude improvement in ease-of-teaching/learning
    - Achieved difficult speedups at a much earlier developmental stage (10th graders in HS versus graduate students). UCSB/UMD experiment, Middle-School, Magnet HS, Inner City HS, freshmen course, UIUC/UMD experiment: J. Sys. & SW 08, SIGCSE 10, EduPar 11.
- Current stage of XMT project: develop more complex applications beyond benchmarks
  - Max-Flow is a step in that direction
  - More needed
- Without an easy-to-program many-core architecture, rejection of parallelism by mainstream programmers is all but certain
  - Affirmative action: drive more researchers to work and seek publications on easy-to-program architectures
  - This work should not be dismissed as ‘too easy’

Thank you!
