Benchmarking Anomaly-Based Detection Systems
Written by: Roy A. Maxion, Kymie M. C. Tan
Presented by: Yi Hu

Agenda
- Introduction
- Benchmarking approach
- Structure in categorical data
- Constructing the benchmark datasets
- Experiment one
- Experiment two
- Conclusion and suggestion

Introduction
- Application: anomaly detection
- Problems:
  - Differences in data regularity
  - Environment variation

Benchmarking Approach
- A methodology that provides quantitative results from running an anomaly detector on various datasets containing different structure.
- Addresses environment variation by controlling the structure of the data.

Structure in Categorical Data
- Structure ranges from perfect regularity to perfect randomness (0 = perfect regularity; 1 = perfect randomness).
- Entropy is used to measure the randomness.
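To make the 0-to-1 scale concrete, here is a minimal sketch of an entropy-based regularity index. The slides say only that entropy measures randomness; this sketch assumes a first-order conditional entropy (the next symbol given the previous one), normalized by its maximum value log2(k) for an alphabet of k symbols. The function name `regularity_index` is hypothetical.

```python
import math
from collections import Counter


def regularity_index(seq: str, alphabet_size: int) -> float:
    """Normalized conditional entropy H(X_t | X_{t-1}) / log2(alphabet_size).

    Assumption: conditioning on the single previous symbol. The result lies
    in [0, 1]: 0 = perfectly regular, 1 = perfectly random.
    """
    if alphabet_size < 2 or len(seq) < 2:
        raise ValueError("need alphabet_size >= 2 and at least two symbols")
    pair_counts = Counter(zip(seq, seq[1:]))   # counts of (previous, next) pairs
    prev_counts = Counter(seq[:-1])            # counts of each "previous" symbol
    n_pairs = len(seq) - 1
    h = 0.0
    for (prev, _nxt), c in pair_counts.items():
        p_pair = c / n_pairs                   # P(previous, next)
        p_next_given_prev = c / prev_counts[prev]
        h -= p_pair * math.log2(p_next_given_prev)
    return h / math.log2(alphabet_size)


print(regularity_index("AB" * 1000, 2))  # 0.0: each symbol fully determines the next
```

A sequence such as "AABB" repeated, where every bigram is equally likely after each symbol, scores close to 1 on this measure.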

Benchmarking Datasets
- Training data (background)
- Test data (background plus anomalies)
- Anomaly data

Benchmarking Datasets (cont’d)
- Defining the sequences:
  - Alphabet symbols: English letters.
  - Alphabet size: 2, 4, 6, 8, 10 (five suites).
  - Regularity: 0 to 1 at 0.1 intervals.
  - Sequence length: 500,000 characters for all datasets.

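Defining the Anomalies
- Anomalies:
  - Foreign-symbol anomalies: a symbol outside the training alphabet (e.g., Q in data drawn from A, B, C, D, E).
  - Foreign n-gram anomalies: an n-gram whose symbols all belong to the alphabet but which never occurs in the training data (e.g., the bigram CC in data over A, B, C, D).
  - Rare n-gram anomalies: an n-gram that does occur in the training data, but rarely (usually with a relative frequency below 0.05).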
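The three anomaly types can be told apart by checking an n-gram against the training data. The sketch below is a minimal illustration, assuming "rare" means a relative frequency (among all overlapping n-grams of the same length) below 0.05, the figure quoted on the slide; the function names are hypothetical.

```python
from collections import Counter


def ngram_counts(seq: str, n: int) -> Counter:
    """Count every overlapping n-gram in seq."""
    return Counter(seq[i:i + n] for i in range(len(seq) - n + 1))


def classify_ngram(ngram: str, training: str, rare_threshold: float = 0.05) -> str:
    """Label an n-gram relative to the training data."""
    alphabet = set(training)
    if any(symbol not in alphabet for symbol in ngram):
        return "foreign-symbol"      # contains a symbol never seen in training
    counts = ngram_counts(training, len(ngram))
    total = sum(counts.values())
    frequency = counts[ngram] / total if total else 0.0
    if frequency == 0.0:
        return "foreign-ngram"       # known symbols, but an unseen combination
    if frequency < rare_threshold:
        return "rare-ngram"          # seen, but below the rarity threshold
    return "common"


training = "ABCD" * 100 + "AC"       # AC occurs exactly once; CC never occurs
for g in ["QA", "CC", "AC", "AB"]:
    print(g, classify_ngram(g, training))
```

With this training string, "QA" is a foreign-symbol anomaly, "CC" a foreign n-gram, "AC" a rare n-gram, and "AB" is common.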

Generating the Training and Test Data
- 500,000 random numbers taken from a table.
- 11 transition matrices used to produce the desired regularities.
- Regularity indices between 0 and 1, in 0.1 increments.
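The generation step can be sketched as a Markov chain driven by one random number per symbol. The slides do not give the 11 transition matrices, so this sketch uses a hypothetical family that blends a deterministic cycle with a uniform distribution through a weight `w`; Python's `random.Random` stands in for the table of random numbers. Note that `w` does not map linearly onto the regularity index, so hitting the targets 0.0, 0.1, ..., 1.0 would require tuning `w` for each alphabet size.

```python
import random
from typing import List, Sequence


def make_transition_matrix(k: int, w: float) -> List[List[float]]:
    """Hypothetical k x k transition matrix.

    Each row blends a deterministic cycle (symbol i is always followed by
    symbol i + 1 mod k) with a uniform distribution over all k symbols:
    w = 0 gives a perfectly regular chain, w = 1 a perfectly random one.
    """
    return [[(1 - w) * (1.0 if j == (i + 1) % k else 0.0) + w / k
             for j in range(k)]
            for i in range(k)]


def generate_sequence(matrix: Sequence[Sequence[float]], alphabet: str,
                      length: int, seed: int = 0) -> str:
    """Walk the Markov chain, consuming one uniform random number per step."""
    rng = random.Random(seed)
    state = 0
    out = [alphabet[state]]
    for _ in range(length - 1):
        r = rng.random()            # stand-in for the next number in the random table
        cumulative = 0.0
        nxt = len(matrix) - 1       # fallback in case rounding leaves the row sum < 1
        for j, p in enumerate(matrix[state]):
            cumulative += p
            if r < cumulative:
                nxt = j
                break
        state = nxt
        out.append(alphabet[state])
    return "".join(out)


print(generate_sequence(make_transition_matrix(4, 0.0), "ABCD", 8))  # ABCDABCD
```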

Generating the Anomalies
- Generated independently of the test data.
- Each anomaly type is generated in a different way.

Injecting the Anomalies into Test Data
- The system determines the maximum number of anomalies (injected data make up no more than 0.24% of the test data).
- The injection intervals are then selected.
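A minimal sketch of the two injection steps, under assumptions not stated on the slide: anomalies overwrite background symbols (so each dataset keeps its length), and the sequence is cut into equal slots so that consecutive anomalies are separated by at least `min_gap` background symbols. The number of slots is the maximum number of anomalies that fit; the injection intervals are a random choice among them. `inject_anomalies` and its parameters are hypothetical.

```python
import random


def inject_anomalies(background: str, anomaly: str, count: int,
                     min_gap: int, seed: int = 0) -> tuple[str, list[int]]:
    """Overwrite `count` non-overlapping windows of `background` with `anomaly`.

    Returns the test sequence and the start index of every injection,
    which serves as ground truth for scoring.
    """
    slot = len(anomaly) + min_gap
    max_count = len(background) // slot      # maximum number of anomalies that fit
    if count > max_count:
        raise ValueError(f"at most {max_count} anomalies fit")
    rng = random.Random(seed)
    chosen = sorted(rng.sample(range(max_count), count))  # injection intervals
    symbols = list(background)
    starts = []
    for s in chosen:
        start = s * slot                     # anomaly sits at the start of its slot
        symbols[start:start + len(anomaly)] = anomaly
        starts.append(start)
    return "".join(symbols), starts


test_data, truth = inject_anomalies("A" * 100, "QQ", count=5, min_gap=8)
print(truth)
```

Overwriting also creates new n-grams at each boundary between background and anomaly, so a careful generator would check that those boundary n-grams do not introduce unintended anomalies.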

Experiment One
- Datasets:
  - Training datasets in which rare 4-grams occur less than 5% of the time.
  - All variables were held constant except dataset regularity.
  - 275 benchmark datasets in total, 165 of which were anomaly-injected.

Experiment One
- Steps:
  - Training the detector: 11 training datasets per alphabet size, giving 55 training sessions in total.
  - Testing the detector: for each of the 5 alphabet sizes, the detector was run on 33 test datasets, 11 for each anomaly type.
  - Scoring the detection outcomes: event outcomes, ground truth, threshold, and the scope and presentation of results.
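The dataset counts follow from crossing the experimental factors. This sketch reproduces only the counts stated on the slides (55 training sessions, 165 anomaly-injected test datasets, 33 per alphabet size); the slides do not break down the remainder of the 275 total, so the sketch does not attempt to.

```python
from itertools import product

ALPHABET_SIZES = [2, 4, 6, 8, 10]
REGULARITIES = [round(0.1 * i, 1) for i in range(11)]   # 0.0, 0.1, ..., 1.0
ANOMALY_TYPES = ["foreign-symbol", "foreign-ngram", "rare-ngram"]

# One training session per (alphabet size, regularity) pair.
training_sessions = list(product(ALPHABET_SIZES, REGULARITIES))
# One anomaly-injected test dataset per (alphabet size, regularity, anomaly type).
injected_test_sets = list(product(ALPHABET_SIZES, REGULARITIES, ANOMALY_TYPES))

print(len(training_sessions))   # 55
print(len(injected_test_sets))  # 165
```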

Experiment One
- ROC analysis:
  - Relative operating characteristic (ROC) curve.
  - Compares two aspects of detector performance: hit rate (Y axis) against false-alarm rate (X axis).
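An ROC curve is traced by sweeping the detection threshold. The sketch assumes each scored event has an anomaly score and a ground-truth label, and that the detector raises an alarm when the score is at least the threshold; the slides list these scoring elements but not the exact procedure, and `roc_points` is a hypothetical name.

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float],
               labels: Sequence[bool]) -> List[Tuple[float, float]]:
    """Return (false-alarm rate, hit rate) pairs, one per candidate threshold.

    labels[i] is the ground truth (True = anomaly). Both classes must be present.
    """
    positives = sum(1 for y in labels if y)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one anomalous and one normal event")
    points = [(0.0, 0.0)]                       # threshold above every score
    for threshold in sorted(set(scores), reverse=True):
        hits = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
        false_alarms = sum(1 for s, y in zip(scores, labels)
                           if s >= threshold and not y)
        points.append((false_alarms / negatives, hits / positives))
    return points


print(roc_points([0.9, 0.8, 0.3, 0.1], [True, False, True, False]))
# [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
```

Each point is one operating threshold; plotting them for datasets of different regularity gives one curve per regularity level.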

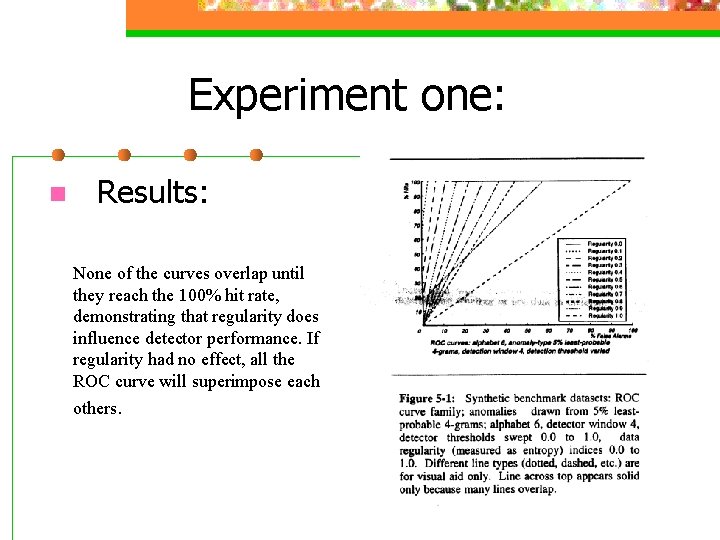

Experiment One
- Results: none of the curves overlap until they reach the 100% hit rate, which shows that regularity influences detector performance. If regularity had no effect, all the ROC curves would be superimposed on one another.

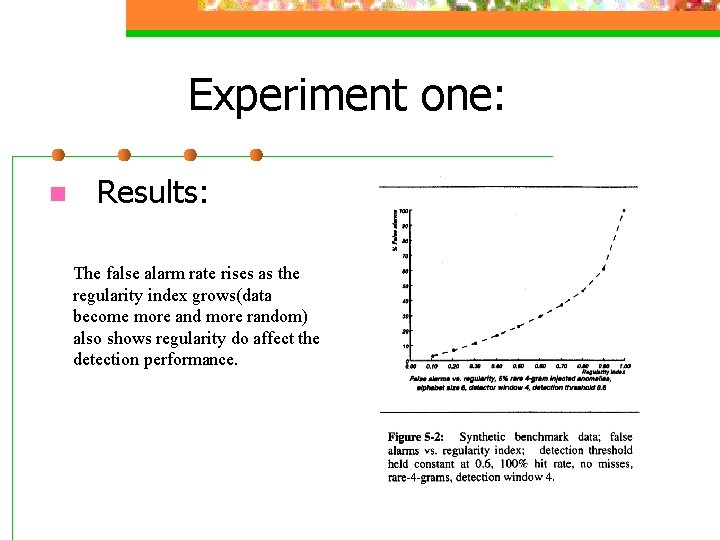

Experiment One
- Results: the false-alarm rate rises as the regularity index grows (i.e., as the data become more random), which further shows that regularity affects detection performance.

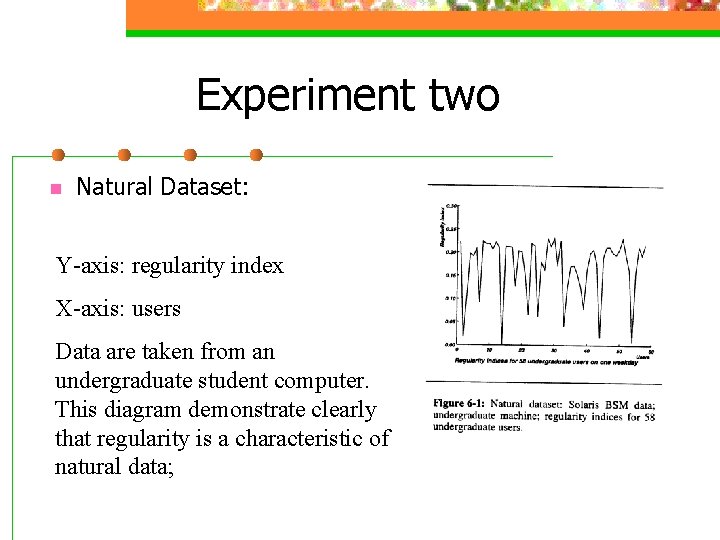

Experiment Two
- Natural dataset: the Y axis shows the regularity index; the X axis shows users.
- The data are taken from an undergraduate student computer.
- The figure shows that regularity differs from user to user, demonstrating that regularity is a characteristic of natural data.

Conclusion
- In the experiments conducted here, all variables were held constant except regularity, and a strong relationship was established between detector accuracy and regularity.
- An anomaly detector cannot be evaluated on the basis of its performance on a dataset of a single regularity.
- Different regularities occur not only between different users and environments, but also within user sessions.

Suggestion
- Overcoming this obstacle may require a mechanism that swaps anomaly detectors, or changes the parameters of the current anomaly detector, whenever regularity changes.
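One way the suggested mechanism might look: measure the regularity of each window of incoming data and pick the detector (or parameter set) tuned for that regularity band. This is a hypothetical sketch; the band edges, detector labels, and window size are illustrative. The regularity measure is passed in as a function (for example, an entropy-based regularity index); the usage below substitutes a crude stand-in that is not an entropy measure.

```python
from bisect import bisect_left
from typing import Callable, List, Sequence


def make_switcher(band_edges: Sequence[float], detectors: Sequence[str],
                  regularity: Callable[[str], float]) -> Callable[[str], str]:
    """Return a function that picks a detector for a window of data.

    band_edges are ascending upper bounds of the regularity bands: a window
    whose regularity is <= band_edges[i] (and above the previous edge) uses
    detectors[i]; anything above the last edge uses detectors[-1].
    """
    if len(detectors) != len(band_edges) + 1:
        raise ValueError("need exactly one more detector than band edges")

    def choose(window: str) -> str:
        return detectors[bisect_left(band_edges, regularity(window))]

    return choose


def detectors_per_window(stream: str, window_size: int,
                         choose: Callable[[str], str]) -> List[str]:
    """Re-evaluate the choice on consecutive, non-overlapping windows."""
    return [choose(stream[i:i + window_size])
            for i in range(0, len(stream), window_size)]


# Stand-in measure (NOT entropy): fraction of a 4-symbol alphabet used in the window.
choose = make_switcher([0.3, 0.7], ["regular-tuned", "mid-tuned", "random-tuned"],
                       lambda w: len(set(w)) / 4)
print(detectors_per_window("AAAA" + "AABB" + "ABCD", 4, choose))
# ['regular-tuned', 'mid-tuned', 'random-tuned']
```

Keeping the regularity measure as a parameter separates the monitoring policy from the choice of measure, so the same switcher could be driven by whichever regularity index is used for benchmarking.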
