Benchmark Screening: What, Why and How

A module for pre-service and in-service professional development

MN RTI Center
Author: Lisa H. Stewart, Ph.D., Minnesota State University Moorhead
www.scred.k12.mn.us (click on RTI Center)

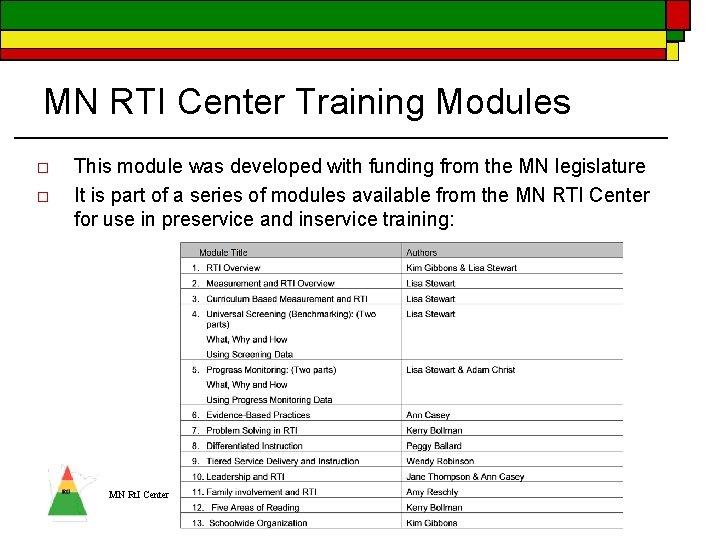

MN RTI Center Training Modules

- This module was developed with funding from the MN legislature.
- It is part of a series of modules available from the MN RTI Center for use in preservice and inservice training.

Overview

- This module is Part 1 of 2
  - Module 1: Benchmark Screening: What, Why and How
    - What is screening?
    - Why screen students?
    - Criteria for screeners/what tools?
    - Screening logistics
  - Module 2: Using Benchmark Screening Data

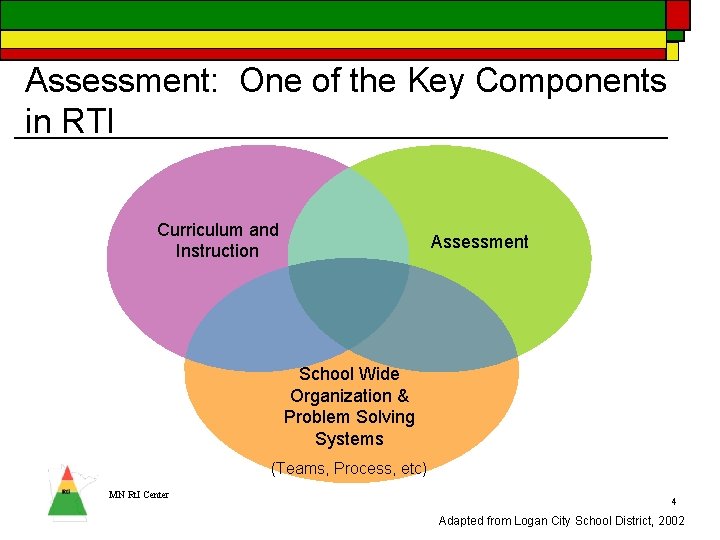

Assessment: One of the Key Components in RTI

- Curriculum and Instruction
- Assessment
- School Wide Organization & Problem Solving Systems (Teams, Process, etc.)

Adapted from Logan City School District, 2002

Assessment and Response to Intervention (RTI)

- A core feature of RTI is identifying a measurement system
  - Screen large numbers of students
    - Identify students in need of additional intervention
  - Monitor students of concern more frequently
    - 1 to 4x per month
    - Typically weekly
  - Diagnostic testing used for instructional planning to help target interventions as needed

Why Do Screening?

- Activity
  - What does it mean to “screen” students?
  - Why is screening so important in a Response to Intervention system? (e.g., what assumptions of RTI require a good screening system?)
  - What happens if you do NOT have an efficient, systematic screening system in place in the school?

Screening is part of a problem-solving system

- Helps identify students at-risk in a PROACTIVE way
- Gives feedback to the system about how students progress throughout the year at a gross (3x per year) level
  - If students are on track in the fall, are they still on track in the winter?
  - What is happening with students who started the year below target? Are they catching up?
- Gives feedback to the system about changes from year to year
  - Is our new reading curriculum having the impact we were expecting?

What Screening Looks Like in a Nutshell

- School decides on brief tests to be given at each grade level and trains staff in the administration, scoring and use of the data
- Students are given the tests 3x per year (Fall, Winter, Spring)
- Person or team assigned in each building to organize data collection
- All students are given the tests for their grade level within a short time frame (e.g., 1-2 weeks or less). Some tests may be group administered, others are individually administered.
- Benchmark testing: about 5 minutes per student, desk to test (individually administered)
- Administered by special ed, reading, or general ed teachers or paras
- Entered into a computer/web based reporting system by clerical staff
- Reports show the spread of student skills and list student scores, etc. to use in instructional and resource planning

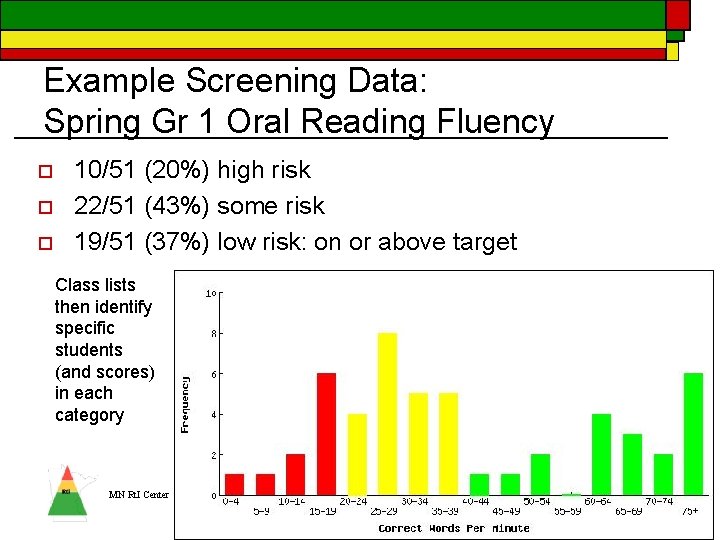

Example Screening Data: Spring Gr 1 Oral Reading Fluency

- 10/51 (20%) high risk
- 22/51 (43%) some risk
- 19/51 (37%) low risk: on or above target

Class lists then identify specific students (and scores) in each category.

DRAFT May 27, 2009
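
The percentages on this slide can be checked with a few lines of arithmetic. The sketch below is only an illustration: the counts are the ones shown above, while the function name `risk_percentages` and the rounding to whole percents are assumptions, not part of any screening tool.

```python
# Counts from the example slide: 51 first graders screened in spring.
counts = {"high risk": 10, "some risk": 22, "low risk": 19}


def risk_percentages(counts):
    """Return each category's share of all screened students, as a whole percent."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


percentages = risk_percentages(counts)
total_students = sum(counts.values())
for label, pct in percentages.items():
    print(f"{label}: {counts[label]}/{total_students} ({pct}%)")
```

A web-based reporting system would normally produce this summary for you; the point is simply that each percentage is the category count divided by the number of students screened.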

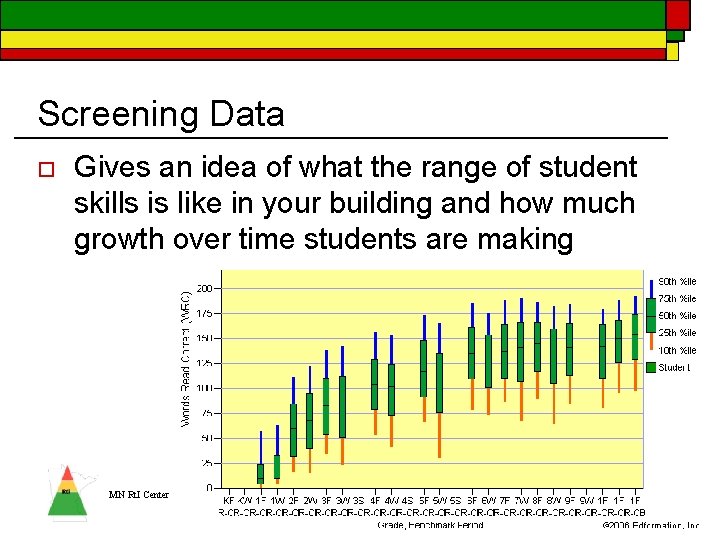

Screening Data

- Gives an idea of what the range of student skills is like in your building and how much growth over time students are making

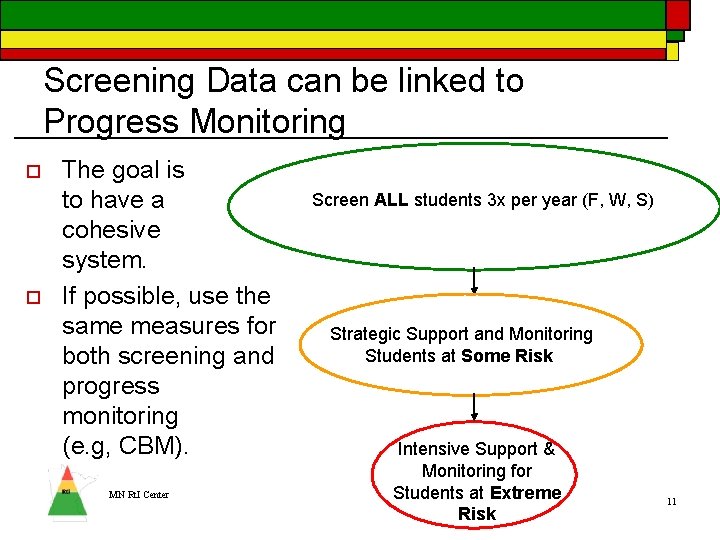

Screening Data can be linked to Progress Monitoring

- The goal is to have a cohesive system.
- If possible, use the same measures for both screening and progress monitoring (e.g., CBM).

Diagram labels:

- Screen ALL students 3x per year (F, W, S)
- Strategic Support and Monitoring for Students at Some Risk
- Intensive Support & Monitoring for Students at Extreme Risk

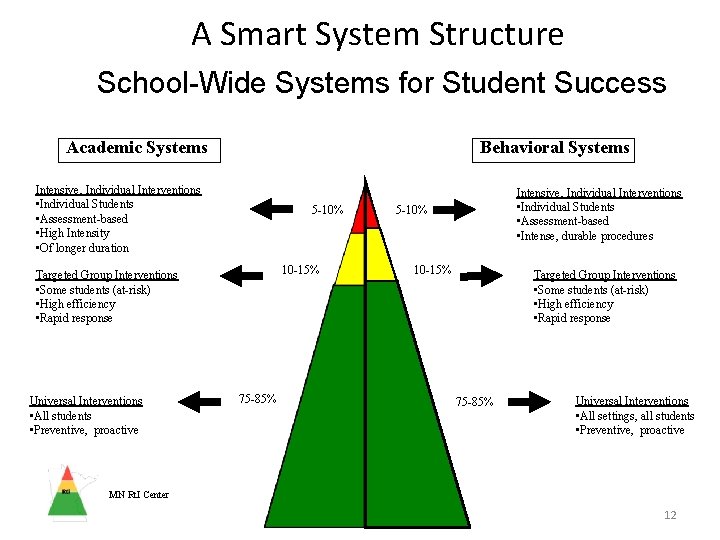

A Smart System Structure: School-Wide Systems for Student Success

Academic Systems

- Intensive, Individual Interventions (5-10%): individual students; assessment-based; high intensity; of longer duration
- Targeted Group Interventions (10-15%): some students (at-risk); high efficiency; rapid response
- Universal Interventions (75-85%): all students; preventive, proactive

Behavioral Systems

- Intensive, Individual Interventions (5-10%): individual students; assessment-based; intense, durable procedures
- Targeted Group Interventions (10-15%): some students (at-risk); high efficiency; rapid response
- Universal Interventions (75-85%): all settings, all students; preventive, proactive

Terminology Check

- Screening
  - Collecting data on all or a targeted group of students in a grade level or in the school
- Universal Screening
  - Same as above but implies that all students are screened
- Benchmarking
  - Often used synonymously with the terms above, but typically implies universal screening done 3x per year, with data interpreted using criterion target or “benchmark” scores

“Benchmark” Screening

- Schools typically use cut-off or criterion scores to decide whether a student is at risk. Those scores or targets are also referred to as “benchmarks”, thus the term “benchmarking”.
- Some states or published curricula also use the term benchmarking, but in a different way (e.g., to refer to the documentation of achieving a specific state standard) that has nothing to do with screening.
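
As a rough illustration of how cut scores turn a screening score into a risk category, consider the sketch below. The two cut scores, the student names and the scores are all made up for the example; real benchmark targets depend on the measure, the grade and the time of year, and come from the tool publisher or from local norms.

```python
# Hypothetical cut scores, for illustration only.
HIGH_RISK_BELOW = 20  # assumed: scores below this are "high risk"
TARGET = 40           # assumed: scores at or above this meet the benchmark


def risk_category(score):
    """Place one screening score into a risk category using the cut scores."""
    if score < HIGH_RISK_BELOW:
        return "high risk"
    if score < TARGET:
        return "some risk"
    return "low risk"


# Made-up scores for three students.
class_scores = {"Student A": 12, "Student B": 35, "Student C": 58}
class_list = {name: risk_category(score) for name, score in class_scores.items()}
print(class_list)
```

The resulting class list is the kind of grouping a screening report shows: each student's score next to the category the cut scores place it in.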

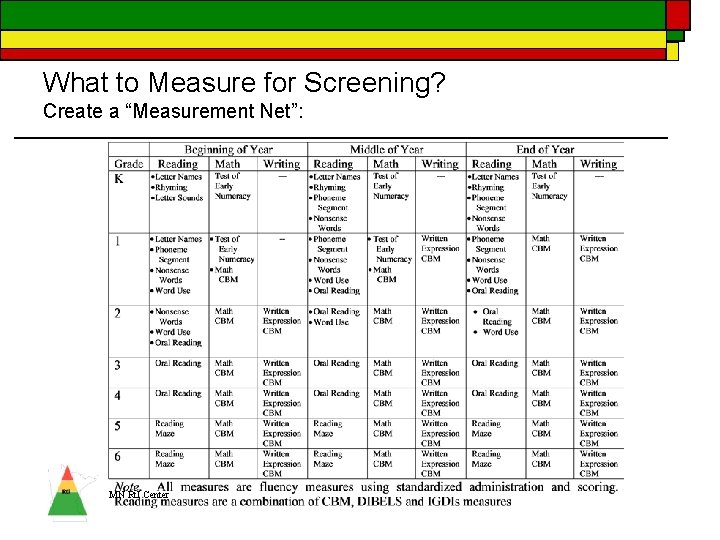

What to Measure for Screening?

Create a “Measurement Net”:

How do you decide what Measures to Use for Screening?

- Lots of ways to measure reading in the schools:
  - Measures of Academic Progress (MAP)
  - Guided Reading (Leveled Reading)
  - Statewide Accountability Tests
  - Published Curriculum Tests
  - Teacher Made Tests
  - General Outcome Measures (Curriculum-Based Measurement “family”)
  - STAR Reading
  - Etc.
- Not all of these are appropriate. Some are not reliable enough for screening; others are designed for another purpose and are not valid or practical for screening all students 3x per year.

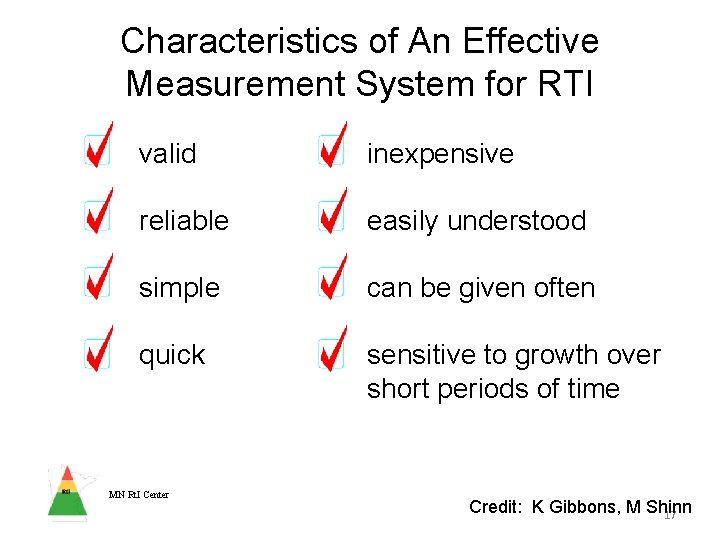

Characteristics of An Effective Measurement System for RTI

- valid
- reliable
- simple
- quick
- inexpensive
- easily understood
- can be given often
- sensitive to growth over short periods of time

Credit: K Gibbons, M Shinn

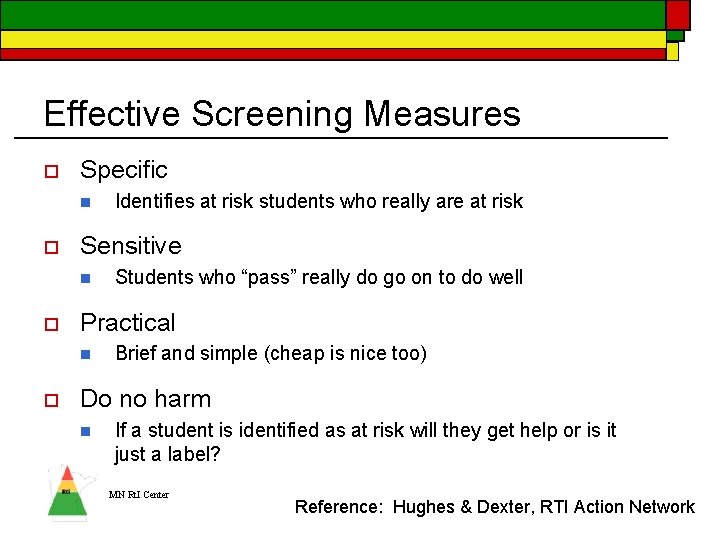

Effective Screening Measures

- Specific
  - Students who “pass” really do go on to do well
- Sensitive
  - Identifies at risk students who really are at risk
- Practical
  - Brief and simple (cheap is nice too)
- Do no harm
  - If a student is identified as at risk, will they get help or is it just a label?

Reference: Hughes & Dexter, RTI Action Network

Buyer Beware!

- Many tools may make claims about being a good “screener”

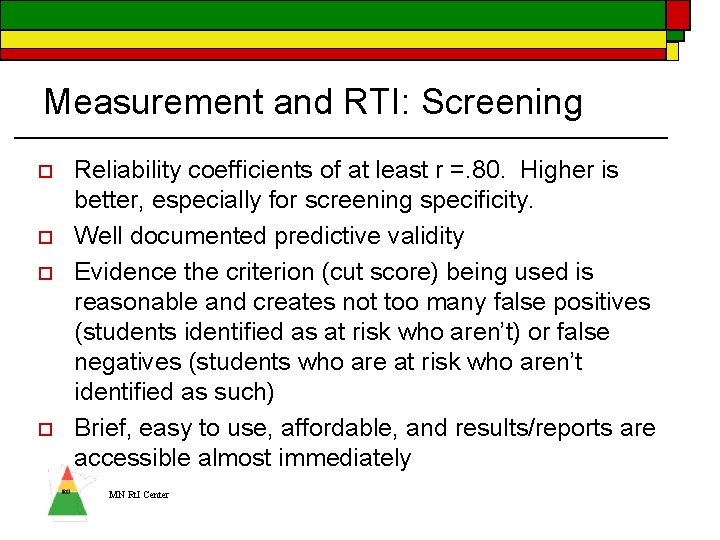

Measurement and RTI: Screening

- Reliability coefficients of at least r = .80. Higher is better, especially for screening specificity.
- Well documented predictive validity
- Evidence that the criterion (cut score) being used is reasonable and does not create too many false positives (students identified as at risk who aren’t) or false negatives (students who are at risk who aren’t identified as such)
- Brief, easy to use, affordable, and results/reports are accessible almost immediately
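
One way to look at false positives and false negatives is to compare fall screening decisions with how the same students actually did later. The sketch below uses the standard definitions of sensitivity (share of truly at-risk students the screener flagged) and specificity (share of not-at-risk students the screener passed). The function name and the eight-student data set are invented for illustration.

```python
def screening_accuracy(flagged, truly_at_risk):
    """Compare screening decisions with later outcomes for the same students.

    flagged[i] is True if the screener identified student i as at risk;
    truly_at_risk[i] is True if student i later turned out to struggle.
    Assumes at least one at-risk and one not-at-risk student in the data.
    """
    pairs = list(zip(flagged, truly_at_risk))
    true_pos = sum(f and t for f, t in pairs)
    false_pos = sum(f and not t for f, t in pairs)
    false_neg = sum(not f and t for f, t in pairs)
    true_neg = sum(not f and not t for f, t in pairs)
    return {
        "false_positives": false_pos,
        "false_negatives": false_neg,
        "sensitivity": true_pos / (true_pos + false_neg),
        "specificity": true_neg / (true_neg + false_pos),
    }


# Made-up data for eight students.
flagged = [True, True, True, False, False, False, False, False]
truly_at_risk = [True, True, False, True, False, False, False, False]
result = screening_accuracy(flagged, truly_at_risk)
print(result)
```

Moving a cut score up or down trades one kind of error for the other, which is why the evidence behind a published cut score matters.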

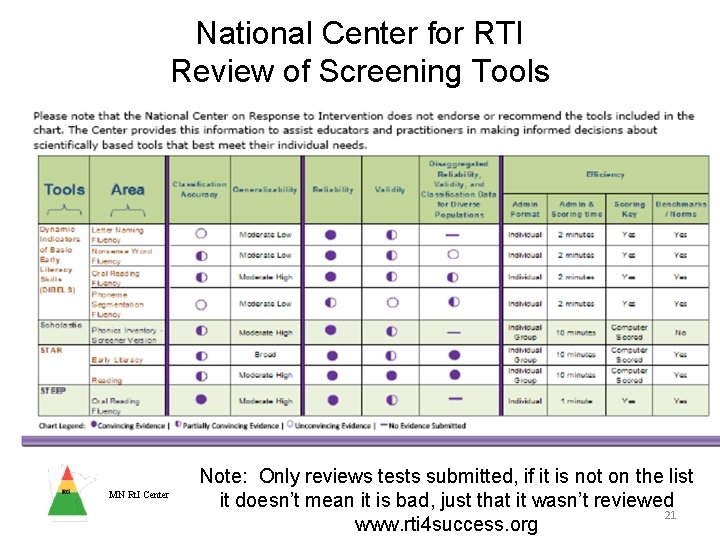

National Center for RTI Review of Screening Tools

www.rti4success.org

Note: Only reviews tests submitted; if a test is not on the list, it doesn’t mean it is bad, just that it wasn’t reviewed.

RTI, General Outcome Measures and Curriculum Based Measurement

- Many schools use Curriculum Based Measurement (CBM) general outcome measures for screening and progress monitoring
  - You don’t “have to” use CBM, but many schools do
- Most common CBM tool in Grades 1-8 is Oral Reading Fluency (ORF)
  - Measure of reading rate (# of words correct per minute on a grade level passage) and a strong indicator of overall reading skill, including comprehension
- Early Literacy Measures are also available, such as Nonsense Word Fluency (NWF), Phoneme Segmentation Fluency (PSF), Letter Name Fluency (LNF) and Letter Sound Fluency (LSF)

Why GOMs/CBM?

- Typically meet the criteria needed for RTI screening and progress monitoring
  - Reliable, valid, specific, sensitive, practical
  - Also, some utility for instructional planning (e.g., grouping)
- They are INDICATORS of whether there might be a problem, not diagnostic!
  - Like taking your temperature or sticking a toothpick into a cake
  - Oral reading fluency is a great INDICATOR of reading decoding, fluency and reading comprehension
  - Fluency based because automaticity helps discriminate between students at different points of learning a skill

GOM… CBM… DIBELS… AIMSweb…

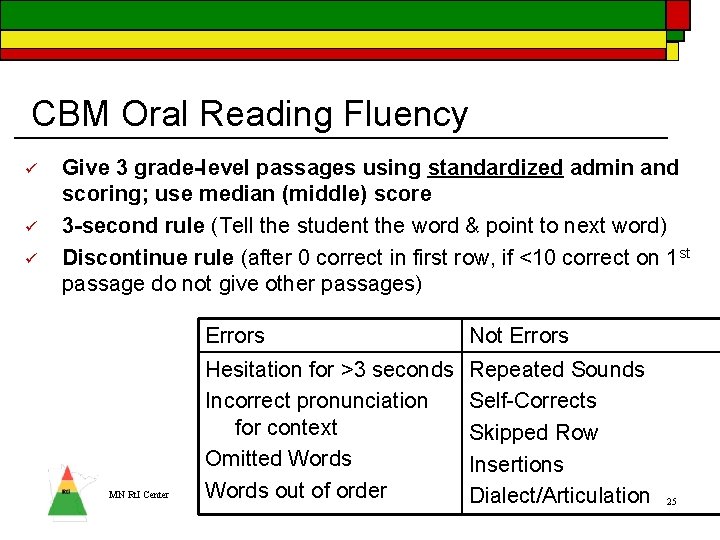

CBM Oral Reading Fluency

- Give 3 grade-level passages using standardized admin and scoring; use median (middle) score
- 3-second rule (tell the student the word & point to next word)
- Discontinue rule (after 0 correct in first row; if <10 correct on 1st passage, do not give other passages)

Errors:

- Hesitation for >3 seconds
- Incorrect pronunciation for context
- Omitted words
- Words out of order

Not Errors:

- Repeated sounds
- Self-corrects
- Skipped row
- Insertions
- Dialect/Articulation
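
Taking the median of the three passages can be sketched in a few lines. The helper name `orf_benchmark_score` and the three words-correct-per-minute values are hypothetical; the only rule taken from the slide is "give 3 passages, use the median (middle) score".

```python
from statistics import median


def orf_benchmark_score(words_correct_per_minute):
    """Return the median (middle) score from the three passage scores."""
    if len(words_correct_per_minute) != 3:
        raise ValueError("expected scores from exactly three passages")
    return median(words_correct_per_minute)


# Made-up words-correct-per-minute scores for one student.
passage_scores = [42, 57, 48]
score = orf_benchmark_score(passage_scores)
print(score)
```

Using the middle score rather than the average keeps one unusually easy or hard passage from pulling the student's score up or down.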

Fluency and Comprehension

The purpose of reading is comprehension. A good measure of overall reading proficiency is reading fluency because of its strong correlation with measures of comprehension.

Screening Logistics

- What materials?
- When to collect?
- Who collects it?
- How to enter and report the data?

What Materials?

- Use computer or PDA-based testing system

  -OR-

- Download reading passages, early literacy probes, etc. from the internet
  - Many sources of CBM materials available free or low cost: Aimsweb, DIBELS, edcheckup, etc.
  - Often organized as “booklets” for ease of use
  - Can use plastic cover and markers for scoring to save copy costs

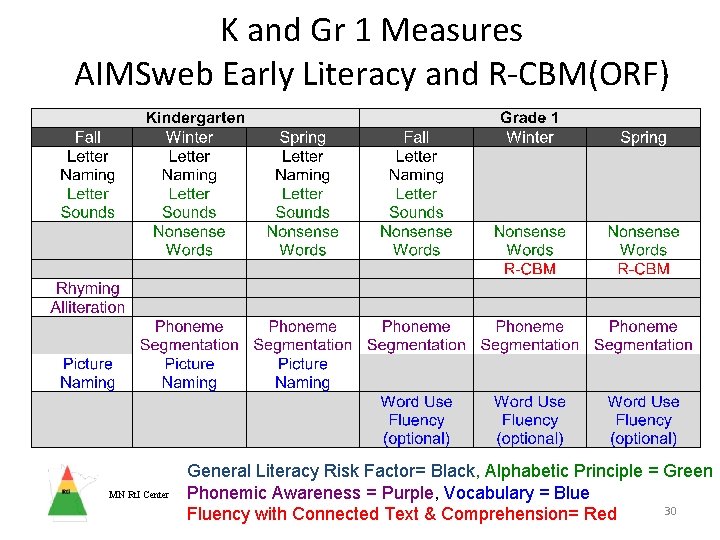

Screening Materials in K and Gr 1

- Screening measures will change slightly from Fall to Winter to Spring
- Early literacy “subskill” measurement is dropped as reading develops
- Downloaded materials and booklets

K and Gr 1 Measures: AIMSweb Early Literacy and R-CBM (ORF)

Color key: General Literacy Risk Factor = Black, Alphabetic Principle = Green, Phonemic Awareness = Purple, Vocabulary = Blue, Fluency with Connected Text & Comprehension = Red

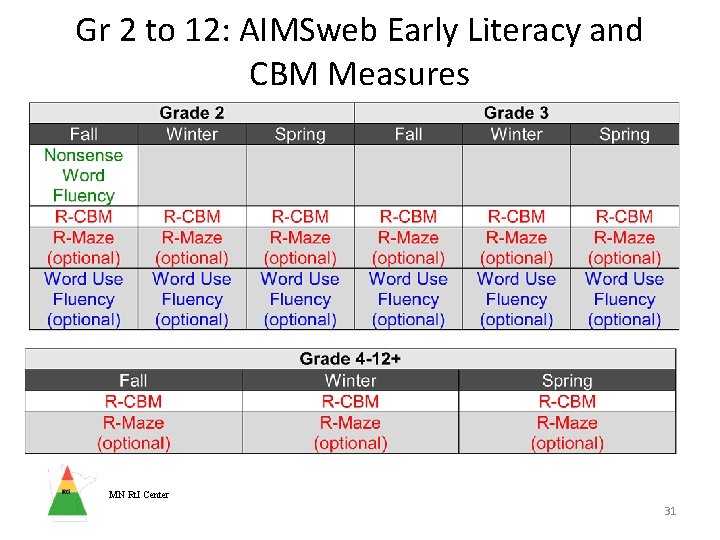

Gr 2 to 12: AIMSweb Early Literacy and CBM Measures

Screening Logistics: Timing

- Typically 3x per year: Fall, Winter, Spring
  - Have a district-wide testing window! (all grades and schools collect data within the same 2 week period)
  - In Fall K, sometimes either test right away and again a month later, or wait a little while to test
- Benchmark testing: about 5 minutes per student (individually administered)
  - In the classroom
  - In stations in a commons area, lunchroom, etc.

Screening Logistics: People

- Administered by trained staff
  - paras, special ed teachers, reading teachers, general ed teachers, school psychologists, speech language, etc.
  - Good training is essential!
- Measurement person assigned in each building to organize data collection
  - Either collected electronically or entered into a web-based data management tool by clerical staff

Screening Logistics Math Quiz

- If you have a classroom with 25 students and administering the screening measures takes approx. 5 min. per student (individual assessment time)…
- How long would it take 5 people to “screen” the entire classroom?
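
The quiz above can be worked through as simple arithmetic. The sketch below assumes the 5 testers work at the same time, split the class as evenly as possible, and lose no time moving between students; the function name is made up for the example.

```python
import math


def classroom_screening_minutes(students, minutes_per_student, testers):
    """Estimate wall-clock minutes to screen a class with testers working in parallel."""
    # The tester with the most students determines when the whole class is done.
    students_per_tester = math.ceil(students / testers)
    return students_per_tester * minutes_per_student


team_minutes = classroom_screening_minutes(students=25, minutes_per_student=5, testers=5)
solo_minutes = classroom_screening_minutes(students=25, minutes_per_student=5, testers=1)
print(team_minutes, solo_minutes)
```

Under these assumptions a team of 5 finishes the classroom in about 25 minutes, compared with a little over 2 hours for one person working alone. Real sessions will run somewhat longer once transitions are included.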

Remember: Garbage IN…. Garbage OUT….

- Make sure your data are reliable and valid indicators or they won’t be good for nuthin…
  - Training
  - Assessment integrity checks/refreshers
  - Well chosen tasks/indicators

Use Technology to Facilitate Screening

Using Technology to Capture Data

- Collect the data using technology such as a PDA
  - Example: http://www.wirelessgeneration.com/ http://www.aimsweb.com
- Students take the test on a computer
  - Example: STAR Reading http://www.renlearn.com/sr/

Using Technology to Organize and Report Data

- Enter data into web-based data management system
- Data gets back into the hands of the teachers and teams quickly and in meaningful reports for problem solving
- Examples
  - http://dibels.uoregon.edu
  - http://www.aimsweb.com
  - http://www.edcheckup.com

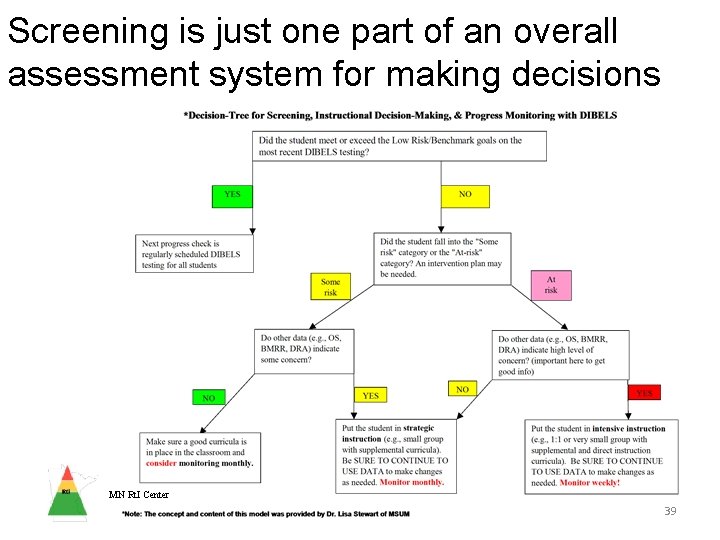

Screening is just one part of an overall assessment system for making decisions

Remember: Screening is part of a problem-solving system

- Helps identify students at-risk in a PROACTIVE way
- Gives feedback to the system about how students progress throughout the year at a gross (3x per year) level
  - If students are on track in the fall, are they still on track in the winter?
  - What is happening with students who started the year below target? Are they catching up?
- Gives feedback to the system about changes from year to year
  - Is our new reading curriculum having the impact we were expecting?

Build in Time to USE the Data!

Schedule data “retreats” or grade level meeting times immediately after screening so you can look at and USE the data for planning.

Common Mistakes

- Not enough professional development and communication about why these measures were picked, what the scores do and don’t mean, the rationale for screening, etc.
- Low or questionable quality of administration and scoring
- Too much reliance on a small group of people for data collection
- Teaching to the test
- Limited sample of students tested (e.g., only Title students!)
- Slow turnaround on reports
- Data are not used

Using Screening Data: See Module 2!

Articles available with this module

- Stewart & Silberglitt. (2008). Best practices in developing academic local norms. In A. Thomas & J. Grimes (Eds.) Best Practices in School Psychology, V, NASP Publications. (pp. 225-242).
- NRCLD RTI Manual (2006). Chapter 1: School-wide screening. Retrieved 6/26/09 from http://www.nrcld.org/rti_manual/pages/RTIManualSection1.pdf
- Jenkins & Johnson. Universal screening for reading problems: Why and how should we do this? Retrieved 6/23/09, from RTI Action Network site: http://www.rtinetwork.org/Essential/Assessment/Universal/ar/ReadingProblems
- Kovaleski & Pederson (2008). Best practices in data analysis teaming. In A. Thomas & J. Grimes (Eds.) Best Practices in School Psychology, V, NASP.
- Ikeda, Neessen, & Witt. (2008). Best practices in universal screening. In A. Thomas & J. Grimes (Eds.) Best Practices in School Psychology, V, NASP Publications. (pp. 103-114).
- Gibbons, K. (2008). Necessary Assessments in RTI. Retrieved 6/26/09 from http://www.tqsource.org/forum/documents/GibbonsPaper.doc

RTI Related Resources

- National Center on RTI
  - http://www.rti4success.org/
- RTI Action Network (links for Assessment and Universal Screening)
  - http://www.rtinetwork.org
- MN RTI Center
  - http://www.scred.k12.mn.us/ and click on link
- National Center on Student Progress Monitoring
  - http://www.studentprogress.org/
- Research Institute on Progress Monitoring
  - http://progressmonitoring.net/

RTI Related Resources (Cont’d)

- National Association of School Psychologists
  - www.nasponline.org
- National Association of State Directors of Special Education (NASDSE)
  - www.nasdse.org
- Council of Administrators of Special Education
  - www.casecec.org
- Office of Special Education Programs (OSEP) toolkit and RTI materials
  - http://www.osepideasthatwork.org/toolkit/ta_responsiveness_intervention.asp

Key Sources for Reading Research, Assessment and Intervention…

- University of Oregon IDEA (Institute for the Development of Educational Achievement) Big Ideas of Reading Site
  - http://reading.uoregon.edu/
- Florida Center for Reading Research
  - http://www.fcrr.org/
- Texas Vaughn Gross Center for Reading and Language Arts
  - http://www.texasreading.org/utcrla/
- American Federation of Teachers Reading resources (what works 1999 publications)
  - http://www.aft.org/teachers/pubs-reports/index.htm#reading
- National Reading Panel
  - http://www.nationalreadingpanel.org/

Recommended Sites with Multiple Resources

- Intervention Central, by Jim Wright (school psych from central NY)
  - http://www.interventioncentral.org
- Center on Instruction
  - http://www.centeroninstruction.org
- St. Croix River Education District
  - http://scred.k12.mn.us

Quiz

- 1.) A core feature of RTI is identifying a(n) _____ system.
- 2.) Collecting data on all or a targeted group of students in a grade level or in the school is called what?
  - A.) Curriculum
  - B.) Screening
  - C.) Intervention
  - D.) Review

Quiz (Cont’d)

- 3.) What is a characteristic of an efficient measurement system for RTI?
  - A.) Valid
  - B.) Reliable
  - C.) Simple
  - D.) Quick
  - E.) All of the above

Quiz (Cont’d)

- 4.) Why screen students?
- 5.) Why would general education teachers need to be trained on the measures used if they aren’t part of the data collection?

Quiz (Cont’d)

- 6.) True or False? If possible the same tools should be used for screening and progress monitoring.
- 7.) List at least 3 common mistakes when doing screening and how they can be avoided.

The End

- Note: The MN RTI Center does not endorse any particular product. Examples used are for instructional purposes only.
- Special Thanks:
  - Thank you to Dr. Ann Casey, director of the MN RTI Center, for her leadership
  - Thank you to Aimee Hochstein, Kristen Bouwman, and Nathan Rowe, Minnesota State University Moorhead graduate students, for editing work, writing quizzes, and enhancing the quality of these training materials
