
Behavioural priors: Learning to search efficiently in action planning
Aapo Hyvärinen
Depts of Computer Science and Psychology, University of Helsinki

Abstract
- Prior knowledge is important in perception, formalized as prior probabilities in Bayesian inference
- We propose that the same is needed in action planning
  - Perception: what kinds of objects/scenes are typical/frequent?
  - Action planning: what kinds of action sequences are typically good?
- The number of possible action sequences is large: it is computationally more efficient to constrain search to those which are typically good

Basic framework: Planning
- Thoroughly investigated in classic AI
- The agent is in state A and wants to reach state B: what sequence of actions is needed?
- The agent is assumed to have a world model
- Exponential explosion in computation: for $a$ actions and $t$ time steps, there are $a^t$ possible sequences
- Exhaustive search is infeasible except for very short horizons
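To make the $a^t$ explosion concrete, here is a minimal sketch of exhaustive search with a world model. It assumes a hypothetical open grid (no walls) with the four moves used in the simulations later in the slides; `step`, `exhaustive_plan` and `search_space_size` are illustrative names, not part of the original work.

```python
from itertools import product

# Hypothetical four-action grid world (same actions as in the simulations).
ACTIONS = ("up", "down", "left", "right")
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}

def step(state, action):
    """Assumed deterministic world model: move one cell, no walls."""
    dx, dy = MOVES[action]
    return (state[0] + dx, state[1] + dy)

def exhaustive_plan(start, goal, t):
    """Try every length-t action sequence; return the first one ending at goal
    together with how many sequences had to be examined."""
    examined = 0
    for seq in product(ACTIONS, repeat=t):
        examined += 1
        s = start
        for a in seq:
            s = step(s, a)
        if s == goal:
            return seq, examined
    return None, examined

def search_space_size(a, t):
    """Number of distinct action sequences: a choices at each of t steps."""
    return a ** t

for t in (5, 10, 20):
    print(t, search_space_size(len(ACTIONS), t))
```

With four actions, ten steps already give $4^{10} = 1{,}048{,}576$ sequences, and twenty steps give $4^{20} \approx 1.1 \times 10^{12}$, which is why exhaustive search stops being an option.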

Biological agents are different
- A biological agent faces the same planning problems many times:
  - moving the same limbs
  - navigating in the same environment
  - manipulating similar objects, etc.
- Good action sequences obey regularities, due to the physical structure of the world
- “Good” means action sequences selected by careful, computationally intensive planning

Learning regularities aids in planning
- Initially, the agent considers the whole search space
- It can learn from information on which action sequences were good / typically executed
- “Typical” and “good” are strongly correlated because only rather good sequences are executed
- Search can then be constrained
- Examples of regularities:
  - No point in moving a limb back and forth
  - Many sequences contain detours
- Skills: learning more regularities in a particular task
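As a rough illustration of how much a single regularity can shrink the search space, the sketch below hard-codes the "no back and forth" rule for the four grid actions. In the slides such rules are learned from experience rather than written by hand; `OPPOSITE`, `no_back_and_forth` and `count_constrained` are hypothetical names used only here.

```python
from itertools import product

ACTIONS = ("up", "down", "left", "right")
# Assumption: every action has exactly one opposite that undoes it.
OPPOSITE = {"up": "down", "down": "up", "left": "right", "right": "left"}

def no_back_and_forth(seq):
    """Hand-written regularity: never immediately undo the previous move."""
    return all(OPPOSITE[a] != b for a, b in zip(seq, seq[1:]))

def count_constrained(t):
    """Count length-t sequences that satisfy the regularity (by enumeration)."""
    return sum(1 for seq in product(ACTIONS, repeat=t) if no_back_and_forth(seq))

for t in (5, 8):
    print(t, len(ACTIONS) ** t, count_constrained(t))
```

After the first move only three of the four actions remain allowed, so the count is $4 \cdot 3^{t-1}$ instead of $4^t$; for $t = 10$ that is 78,732 out of 1,048,576 sequences, roughly 7.5% of the unconstrained space.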

A prior model on good sequences
- A probabilistic approach: build a model of the statistical structure of those sequences which were executed (= led to the goal, or close to it)
- Use a model which can generate candidate sequences in future planning
  - E.g., a Markov model
- After sufficient experience, search only over action sequences generated by the prior model
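A first-order Markov model is one simple instance of such a generative prior. The sketch below estimates $P(\text{first action})$ and $P(\text{next} \mid \text{previous})$ from executed sequences by counting, with add-one (Laplace) smoothing so that unseen transitions keep a small probability, and then samples new candidate sequences. The smoothing choice, the function names and the toy `executed` data are assumptions for illustration, not details from the slides.

```python
import random
from collections import defaultdict

ACTIONS = ("up", "down", "left", "right")

def fit_markov_prior(sequences, actions=ACTIONS, alpha=1.0):
    """Estimate P(first action) and P(next | previous) from executed sequences,
    using add-alpha smoothing so no transition gets probability zero."""
    first = defaultdict(float)
    trans = {a: defaultdict(float) for a in actions}
    for seq in sequences:
        if not seq:
            continue
        first[seq[0]] += 1
        for prev, nxt in zip(seq, seq[1:]):
            trans[prev][nxt] += 1

    def normalise(counts):
        total = sum(counts[a] + alpha for a in actions)
        return {a: (counts[a] + alpha) / total for a in actions}

    return normalise(first), {a: normalise(trans[a]) for a in actions}

def sample_sequence(prior, length, rng):
    """Generate one candidate action sequence from the learned prior."""
    p_first, p_trans = prior
    seq = [rng.choices(ACTIONS, weights=[p_first[a] for a in ACTIONS])[0]]
    while len(seq) < length:
        probs = p_trans[seq[-1]]
        seq.append(rng.choices(ACTIONS, weights=[probs[a] for a in ACTIONS])[0])
    return seq

# Toy "executed" sequences standing in for the agent's past good plans.
executed = [["right", "right", "right", "down", "down"],
            ["up", "up", "left", "left", "left"]]
prior = fit_markov_prior(executed)
rng = random.Random(0)
candidates = [sample_sequence(prior, 6, rng) for _ in range(5)]
print(candidates)
```

In the toy data "right" is followed twice by "right" and once by "down", so the smoothed estimate is $P(\text{right} \mid \text{right}) = 3/7$ and $P(\text{down} \mid \text{right}) = 2/7$. Candidates drawn from the prior therefore favour continuing in the same direction. A higher-order model could capture longer regularities at the cost of more parameters.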

Simulation 1
- Grid world; actions: “up”, “down”, “left”, “right”
- Food randomly scattered
- Initially, planning is random
- A Markov prior is learned, then used in a later test period
- Result 1: the Markov model learns the “rules”
  - Do not go back and forth
  - Do not change direction too often
- Result 2: performance improved
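The following is a minimal sketch of a Simulation 1 style experiment, not the original code. Assumptions (all hypothetical): a 15×15 grid whose edges wrap around, 40 food items placed anew for each plan, planning by sampling 20 candidate sequences of 8 steps and keeping the one the world model says eats the most food, and a smoothed first-order Markov prior fitted to the plans executed during the random training period. Whether and by how much the prior helps depends on these parameters, so no particular numbers are claimed here.

```python
import random
from collections import Counter

# Hypothetical setup: SIZE x SIZE grid with wrap-around edges.
SIZE = 15
ACTIONS = ("up", "down", "left", "right")
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}

def simulate(pos, food, seq):
    """World model: return (end position, number of distinct food cells visited)."""
    x, y = pos
    eaten = set()
    for a in seq:
        dx, dy = MOVES[a]
        x, y = (x + dx) % SIZE, (y + dy) % SIZE
        if (x, y) in food:
            eaten.add((x, y))
    return (x, y), len(eaten)

def uniform_sampler(length, rng):
    """Random planning: every action equally likely at every step."""
    return [rng.choice(ACTIONS) for _ in range(length)]

def markov_sampler(trans):
    """Candidate generator from a first-order Markov prior P(next | previous)."""
    def sample(length, rng):
        seq = [rng.choice(ACTIONS)]
        while len(seq) < length:
            weights = [trans[seq[-1]][a] for a in ACTIONS]
            seq.append(rng.choices(ACTIONS, weights=weights)[0])
        return seq
    return sample

def fit_transitions(sequences, alpha=1.0):
    """Smoothed transition probabilities estimated from executed sequences."""
    counts = Counter((p, n) for s in sequences for p, n in zip(s, s[1:]))
    trans = {}
    for p in ACTIONS:
        total = sum(counts[(p, n)] + alpha for n in ACTIONS)
        trans[p] = {n: (counts[(p, n)] + alpha) / total for n in ACTIONS}
    return trans

def plan(pos, food, sampler, rng, n_candidates=20, length=8):
    """Sample candidates, score each with the world model, return the best."""
    candidates = [sampler(length, rng) for _ in range(n_candidates)]
    return max(candidates, key=lambda s: simulate(pos, food, s)[1])

def run_period(sampler, rng, n_plans=200, n_food=40):
    """Plan in fresh random worlds; return executed plans and mean food eaten."""
    executed, total = [], 0
    for _ in range(n_plans):
        food = {(rng.randrange(SIZE), rng.randrange(SIZE)) for _ in range(n_food)}
        pos = (rng.randrange(SIZE), rng.randrange(SIZE))
        best = plan(pos, food, sampler, rng)
        executed.append(best)
        total += simulate(pos, food, best)[1]
    return executed, total / n_plans

rng = random.Random(1)
train_plans, random_score = run_period(uniform_sampler, rng)  # training period
prior = fit_transitions(train_plans)                          # learn the prior
_, prior_score = run_period(markov_sampler(prior), rng)       # test period
print(f"mean food, random planning: {random_score:.2f}")
print(f"mean food, Markov prior:    {prior_score:.2f}")
```

Because revisiting a cell yields no new food, the best of the random candidates tend to avoid reversals, which is the kind of rule the slides report the Markov model picking up. Inspecting `prior["up"]["down"]` after training is one way to check this.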

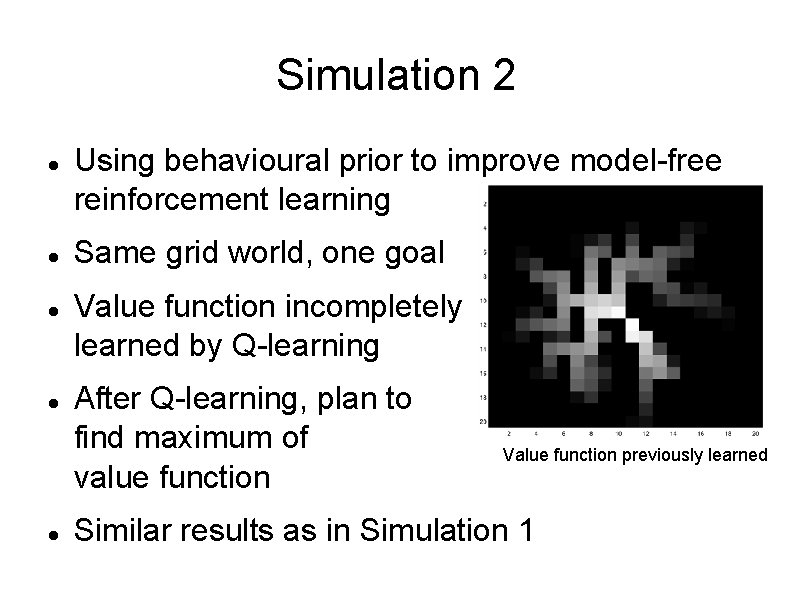

Simulation 2
- Using a behavioural prior to improve model-free reinforcement learning
- Same grid world, one goal
- Value function incompletely learned by Q-learning
- After Q-learning, plan to find the maximum of the value function
- Value function previously learned
- Similar results as in Simulation 1
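A minimal sketch of the Simulation 2 idea follows, under assumptions that are not from the slides: an 8×8 grid with walls, a single goal giving reward 1, deliberately short tabular ε-greedy Q-learning so the value function is only partly learned, and planning that samples candidate sequences and keeps the one whose visited states reach the highest $V(s) = \max_a Q(s, a)$. `prior_sampler` is a hypothetical hand-coded stand-in for a learned Markov prior (it often keeps direction and never reverses); assigning the goal a value of 1 is also just a convention of this sketch.

```python
import random

# Hypothetical setup: SIZE x SIZE grid, walls clip movement, one goal cell.
SIZE = 8
GOAL = (7, 7)
ACTIONS = ("up", "down", "left", "right")
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}
OPPOSITE = {"up": "down", "down": "up", "left": "right", "right": "left"}

def step(s, a):
    """Deterministic world model; moves off the grid leave the agent in place."""
    dx, dy = MOVES[a]
    return (min(max(s[0] + dx, 0), SIZE - 1), min(max(s[1] + dy, 0), SIZE - 1))

def q_learning(episodes, rng, alpha=0.5, gamma=0.9, eps=0.2, max_steps=50):
    """Tabular epsilon-greedy Q-learning; reward 1 on entering GOAL (terminal)."""
    Q = {}  # (state, action) -> value; missing entries are treated as 0
    for _ in range(episodes):
        s = (rng.randrange(SIZE), rng.randrange(SIZE))
        for _ in range(max_steps):
            if s == GOAL:
                break
            if rng.random() < eps:
                a = rng.choice(ACTIONS)
            else:  # greedy action, ties broken at random
                best_q = max(Q.get((s, b), 0.0) for b in ACTIONS)
                a = rng.choice([b for b in ACTIONS if Q.get((s, b), 0.0) == best_q])
            s2 = step(s, a)
            if s2 == GOAL:
                target = 1.0
            else:
                target = gamma * max(Q.get((s2, b), 0.0) for b in ACTIONS)
            old = Q.get((s, a), 0.0)
            Q[(s, a)] = old + alpha * (target - old)
            s = s2
    return Q

def state_value(Q, s):
    """V(s) = max_a Q(s, a); the goal itself is assigned its reward of 1."""
    if s == GOAL:
        return 1.0
    return max(Q.get((s, a), 0.0) for a in ACTIONS)

def prior_sampler(length, rng, p_repeat=0.6):
    """Stand-in for a learned Markov prior: often keep direction, never reverse."""
    seq = [rng.choice(ACTIONS)]
    while len(seq) < length:
        prev = seq[-1]
        if rng.random() < p_repeat:
            seq.append(prev)
        else:
            turns = [a for a in ACTIONS if a not in (prev, OPPOSITE[prev])]
            seq.append(rng.choice(turns))
    return seq

def plan_with_values(Q, start, rng, n_candidates=30, length=6):
    """Sample candidates from the prior; keep the one reaching the highest V."""
    best, best_score = None, float("-inf")
    for _ in range(n_candidates):
        seq = prior_sampler(length, rng)
        s, score = start, state_value(Q, start)
        for a in seq:
            s = step(s, a)
            score = max(score, state_value(Q, s))
        if score > best_score:
            best, best_score = seq, score
    return best, best_score

rng = random.Random(0)
Q = q_learning(episodes=30, rng=rng)  # few episodes: V is only partly learned
seq, score = plan_with_values(Q, (0, 0), rng)
print(seq, round(score, 3))
```

The point of the design is that planning uses the (incomplete) value function only as a scoring signal, while the prior decides which few sequences are worth scoring at all.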

Related and future work
- A lot of work on chunking actions (“macro-actions”): a special form of priors
- Options (Sutton et al.): is a probabilistic interpretation also possible?
- Case-based planning: different, because the new action considered also depends on the world state
- Behavioural priors in model-free reinforcement learning? In motor control (motor synergies)?

Conclusion
- Perceptual priors are widely considered important for perception to work
- We propose that action planning needs behavioural priors
- Priors tell which action sequences are typically useful
- They improve planning by constraining search
- More simulations are needed to verify their utility
