Bayesian Models
Honors 207, Intro to Cognitive Science
David Allbritton
An introduction to Bayes' Theorem and Bayesian models of human cognition

Bayes' Theorem: An Introduction
● What is Bayes' Theorem?
● What does it mean?
● An application: Calculating a probability
● What are distributions?
● Bayes' Theorem using distributions
● An application to cognitive modeling: perception

What is Bayes' Theorem?
● A theorem of probability theory
● Concerns "conditional probabilities"
● Example 1: Probability that class is cancelled if it snows
  ● P(A | B): "Probability of A given B," where A = "class is cancelled" and B = "it snows."
● Example 2: Probability that it snowed, if class was cancelled
  ● P(B | A)
● Bayes' Theorem tells how these two conditional probabilities are related

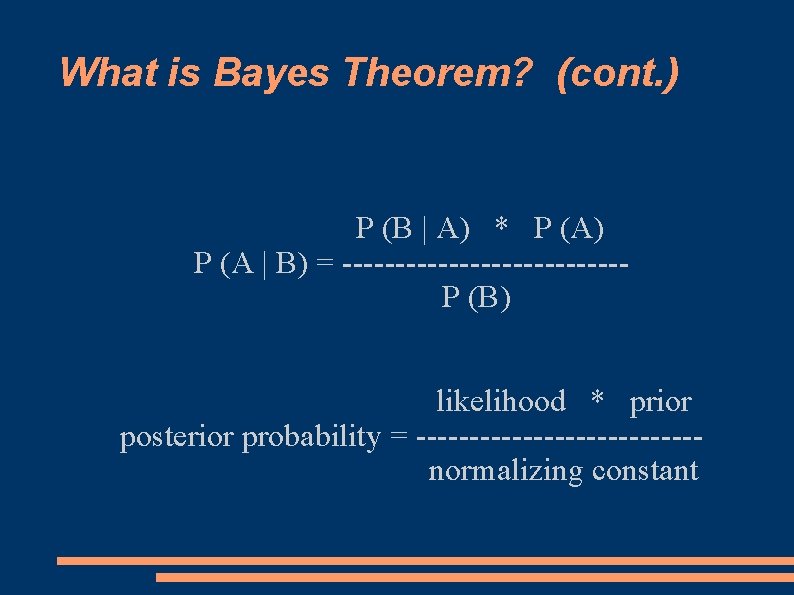

What is Bayes' Theorem? (cont.)

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}$$

$$\text{posterior probability} = \frac{\text{likelihood} \times \text{prior}}{\text{normalizing constant}}$$
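
The relationship between the two conditional probabilities follows from the definition of conditional probability. The derivation below is a standard one, not taken from the slides, and assumes P(A) > 0 and P(B) > 0:

$$
\begin{aligned}
P(A \mid B) &= \frac{P(A \cap B)}{P(B)}, \qquad P(B \mid A) = \frac{P(A \cap B)}{P(A)} \\
P(A \cap B) &= P(B \mid A)\, P(A) \\
P(A \mid B) &= \frac{P(B \mid A)\, P(A)}{P(B)}
\end{aligned}
$$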

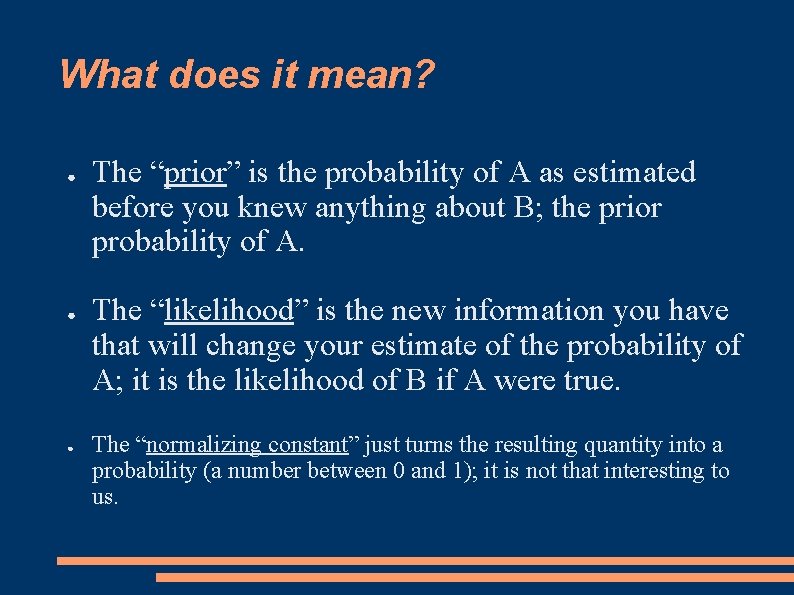

What does it mean?
● The "prior" is the probability of A as estimated before you knew anything about B; the prior probability of A.
● The "likelihood" is the new information you have that will change your estimate of the probability of A; it is the likelihood of B if A were true.
● The "normalizing constant" just turns the resulting quantity into a probability (a number between 0 and 1); it is not that interesting to us.

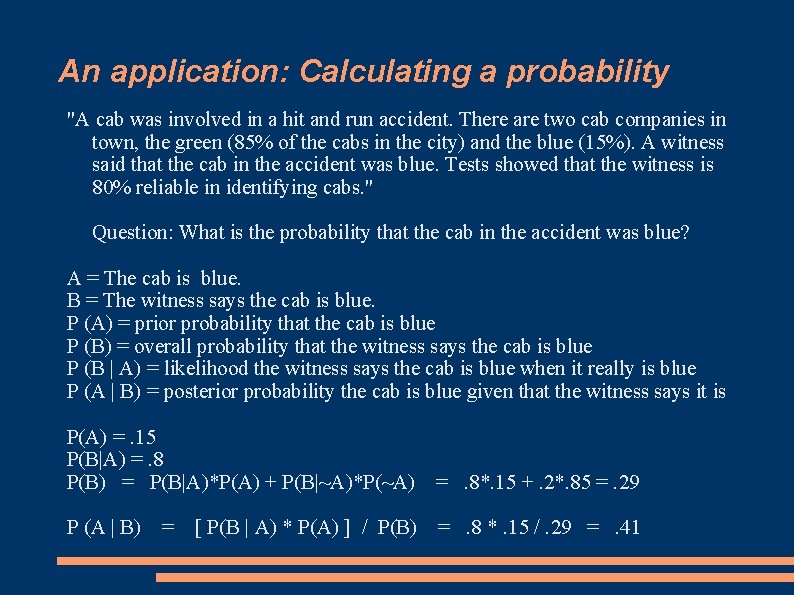

An application: Calculating a probability

"A cab was involved in a hit and run accident. There are two cab companies in town, the green (85% of the cabs in the city) and the blue (15%). A witness said that the cab in the accident was blue. Tests showed that the witness is 80% reliable in identifying cabs."

Question: What is the probability that the cab in the accident was blue?

● A = The cab is blue.
● B = The witness says the cab is blue.
● P(A) = prior probability that the cab is blue
● P(B) = overall probability that the witness says the cab is blue
● P(B | A) = likelihood the witness says the cab is blue when it really is blue
● P(A | B) = posterior probability the cab is blue given that the witness says it is

P(A) = .15
P(B | A) = .8
P(B | ~A) = 1 - .8 = .2 (the witness says "blue" when the cab is really green)
P(B) = P(B | A) * P(A) + P(B | ~A) * P(~A) = .8 * .15 + .2 * .85 = .29
P(A | B) = [ P(B | A) * P(A) ] / P(B) = .8 * .15 / .29 = .41
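
The arithmetic for the cab problem can be checked with a few lines of Python. This is only a sketch: the function name `posterior` and its parameter names are made up for illustration, and it assumes the witness is equally reliable for both cab colors, so that P(B | ~A) = 1 - .8 = .2.

```python
def posterior(prior, likelihood, false_alarm):
    """Return P(A | B) given P(A), P(B | A) and P(B | ~A)."""
    # P(B) = P(B | A) * P(A) + P(B | ~A) * P(~A)
    evidence = likelihood * prior + false_alarm * (1 - prior)
    # Bayes' Theorem: P(A | B) = P(B | A) * P(A) / P(B)
    return likelihood * prior / evidence


# Cab problem: 15% of cabs are blue, witness is 80% reliable.
p_blue = posterior(prior=0.15, likelihood=0.8, false_alarm=0.2)
print(round(p_blue, 2))
```

Even with a fairly reliable witness, the low prior for blue cabs keeps the posterior below one half.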

What are distributions?
● Demonstration: http://condor.depaul.edu/~dallbrit/extra/psy241/chi-square-demonstration3.xls
● Terms
  ● Probability density function: area under the curve = 1
  ● Normal distribution, Gaussian distribution
  ● Standard deviation
  ● Uniform distribution

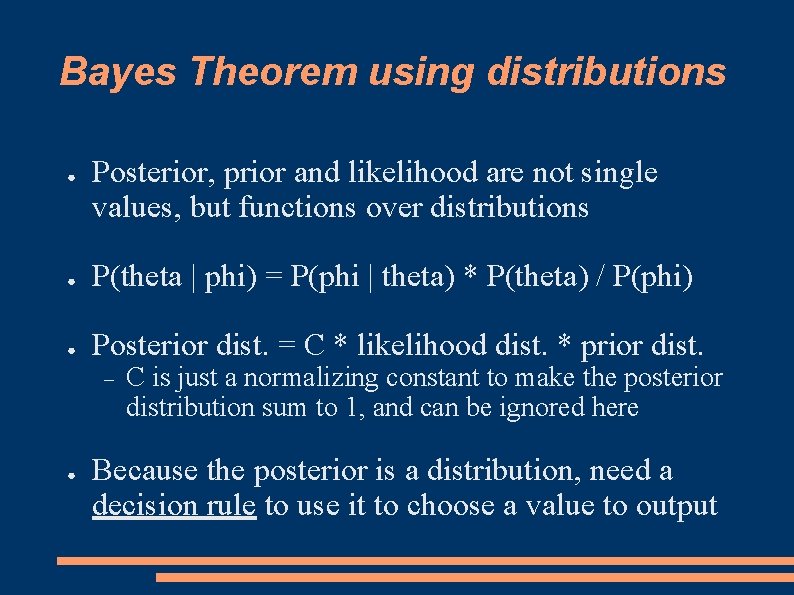

Bayes' Theorem using distributions
● Posterior, prior and likelihood are not single values, but functions over the possible values of theta
● P(theta | phi) = P(phi | theta) * P(theta) / P(phi)
● Posterior dist. = C * likelihood dist. * prior dist.
● C = 1 / P(phi) is just a normalizing constant that makes the posterior distribution sum (or integrate) to 1, and can be ignored here
● Because the posterior is a distribution, we need a decision rule to use it to choose a value to output
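
One way to see Bayes' Theorem working on whole distributions is to evaluate the prior and likelihood on a grid of theta values, multiply them point by point, and normalize. The sketch below does this with invented numbers: the Gaussian shapes, the prior centered on 90 degrees, the observed phi of 70 degrees and both standard deviations are assumptions chosen for illustration, not values from the lecture.

```python
import math


def gaussian(x, mean, sd):
    """Normal (Gaussian) probability density at x."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


# Grid of candidate theta values, in whole degrees.
thetas = list(range(0, 181))

# Hypothetical prior: theta is probably near 90 degrees.
prior = [gaussian(t, 90, 20) for t in thetas]

# Hypothetical likelihood: p(phi = 70 | theta), treating phi as a noisy copy of theta.
observed_phi = 70
likelihood = [gaussian(observed_phi, t, 10) for t in thetas]

# Posterior = C * likelihood * prior, where C makes the values sum to 1.
unnormalized = [l * p for l, p in zip(likelihood, prior)]
total = sum(unnormalized)
posterior = [u / total for u in unnormalized]

# The most probable theta on the grid (one possible decision rule).
map_theta = thetas[posterior.index(max(posterior))]
print(map_theta)
```

The posterior peak falls between the prior's center and the observed value, and closer to whichever of the two has the smaller standard deviation.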

An application to cognitive modeling: perception
● Task: guess the distal angle theta that produced the observed proximal angle phi
● The viewing angle is unknown, so theta is unknown
● p(theta | phi) = posterior; result of combining our prior knowledge and perceptual information
● p(phi | theta) = likelihood; probabilities for various values of theta to produce the observed value of phi
● p(theta) = prior; probabilities of various values of theta that were known before phi was observed
● Gain function = rewards for correct guesses, penalties for incorrect guesses
● Decision rule = function of posterior and gain function
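
A decision rule that combines the posterior with a gain function can be sketched as "pick the guess with the highest expected gain." The example below is an illustration only: the four-value posterior, the +1 / -1 payoffs and the 5-degree tolerance are invented, and maximizing expected gain is one common choice of decision rule rather than the only one.

```python
def expected_gain(guess, posterior, gain):
    """Average gain of a guess, weighted by the posterior probability of each theta."""
    return sum(p * gain(guess, theta) for theta, p in posterior.items())


def decide(posterior, gain):
    """Decision rule: choose the candidate theta with the highest expected gain."""
    return max(posterior, key=lambda g: expected_gain(g, posterior, gain))


# Hypothetical posterior over a few distal angles (degrees), summing to 1.
posterior = {60: 0.40, 80: 0.20, 85: 0.25, 90: 0.15}


def exact_gain(guess, theta):
    # Reward only an exactly correct guess; penalize everything else.
    return 1.0 if guess == theta else -1.0


def tolerant_gain(guess, theta):
    # Reward any guess within 5 degrees of the true angle.
    return 1.0 if abs(guess - theta) <= 5 else -1.0


exact_choice = decide(posterior, exact_gain)
tolerant_choice = decide(posterior, tolerant_gain)
print(exact_choice, tolerant_choice)
```

With the exact gain function the rule picks the single most probable angle (60), while the tolerant one picks 85, because guesses near 85 are "close enough" for more of the posterior's probability. The same posterior can therefore lead to different outputs under different gain functions.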

More information:
● http://en.wikipedia.org/wiki/Bayes'_theorem
● http://yudkowsky.net/bayes.html
