Basic Learning Processes
Robert C. Kennedy, Ph.D.
University of Central Florida
robert.kennedy@ucf.edu

Chapter 7: Schedules of Reinforcement

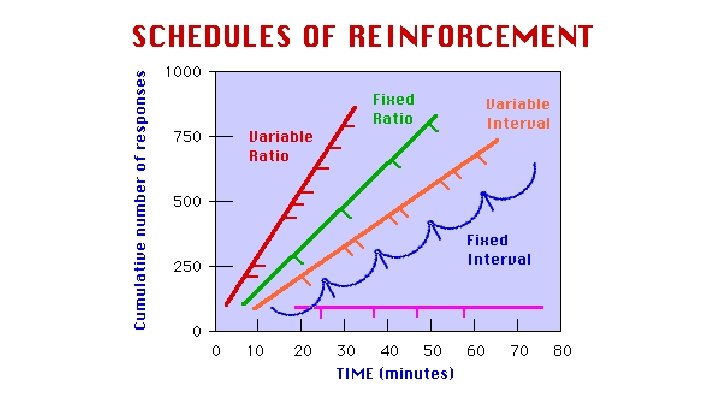

Reinforcement
• Not every occurrence of a behavior needs to be reinforced for learning to occur
• Schedules of reinforcement: rules describing the contingency between a behavior and reinforcement
• A particular reinforcement schedule can produce a particular rate and pattern of performance
Video: Operant Conditioning: Schedules of Reinforcement

Vocabulary
• Between-ratio pause: Another name for, and view of, post-reinforcement pause.
• Chain schedule: A complex reinforcement schedule that consists of a series of simple schedules, each of which is associated with a particular stimulus, with reinforcement delivered only on completion of the last schedule in the series.
• Concurrent schedule: A complex reinforcement schedule in which two or more simple schedules are available at the same time.
• Continuous reinforcement (CRF): A reinforcement schedule in which a behavior is reinforced each time it occurs.
• Cooperative schedule: A reinforcement schedule in which reinforcement is dependent on the behavior of two or more individuals.
• Discrimination hypothesis: The proposal that the PRE occurs because it is harder to discriminate between intermittent reinforcement and extinction than between continuous reinforcement and extinction.
• Fixed duration (FD) schedule: A reinforcement schedule in which reinforcement is contingent on the continuous performance of a behavior for a fixed period of time.
• Fixed interval (FI) schedule: A reinforcement schedule in which a behavior is reinforced the first time it occurs following a specified interval since the last reinforcement.
• Fixed ratio (FR) schedule: A reinforcement schedule in which every nth performance of a behavior is reinforced.
• Fixed time (FT) schedule: A reinforcement schedule in which reinforcement is delivered independently of behavior at fixed intervals.

Vocabulary
• Frustration hypothesis: The proposal that the PRE occurs because non-reinforcement is frustrating and during intermittent reinforcement frustration becomes an S+ for responding.
• Intermittent schedule: Any of several reinforcement schedules in which a behavior is sometimes reinforced.
• Matching law: The principle that, given the opportunity to respond on two or more reinforcement schedules, the relative rate of responding on each schedule will match the relative rate of reinforcement available on each schedule.
• Mixed schedule: A complex reinforcement schedule in which two or more simple schedules, none of which is associated with a particular stimulus, alternate.
• Multiple schedule: A complex reinforcement schedule in which two or more simple schedules alternate, with each schedule associated with a particular stimulus.
• Noncontingent reinforcement (NCR): Any schedule in which delivery of reinforcers is not contingent on behavior. Examples are fixed time and variable time.
• Partial reinforcement effect (PRE): The tendency for a behavior to be more resistant to extinction following partial reinforcement than following continuous reinforcement. (It’s probably worth noting that some people now refer to this as the PREE, the partial reinforcement extinction effect.)
• Post-reinforcement pause: A pause in responding following reinforcement.
• Pre-ratio pause: Another name for, and view of, post-reinforcement pause.
• Ratio strain: Disruption of the pattern of responding due to stretching the ratio too abruptly or too far.
• Response unit hypothesis: The idea that the PRE is due to differences in the definition of a behavior during intermittent and continuous reinforcement.

Vocabulary
• Run rate: The rate at which a behavior occurs once it has resumed following reinforcement.
• Schedule effects: The distinctive rate and pattern of responding associated with a particular schedule.
• Schedule of reinforcement: A rule describing the delivery of reinforcers for a behavior.
• Sequential hypothesis: The idea that the PRE occurs because the sequence of reinforced and nonreinforced behaviors during intermittent reinforcement becomes an S+ for responding during extinction.
• Stretching the ratio: The procedure of gradually increasing the number of responses required for reinforcement.
• Tandem schedule: A complex reinforcement schedule that consists of a series of simple schedules, with reinforcement delivered only on completion of the last schedule in the series. The simple schedules are not associated with different stimuli.
• Variable duration (VD) schedule: A reinforcement schedule in which reinforcement is contingent on the continuous performance of a behavior for a period of time, with the length of the time varying around an average.
• Variable interval (VI) schedule: A reinforcement schedule in which a behavior is reinforced the first time it occurs following an interval since the last reinforcement, with the interval varying around a specified average.
• Variable ratio (VR) schedule: A reinforcement schedule in which, on average, every nth performance of a behavior is reinforced.
• Variable time (VT) schedule: A reinforcement schedule in which reinforcement is delivered at varying intervals regardless of what the organism does.
• Progressive ratio schedule: A schedule in which the number of responses required for reinforcement increases systematically, typically after each reinforcer.

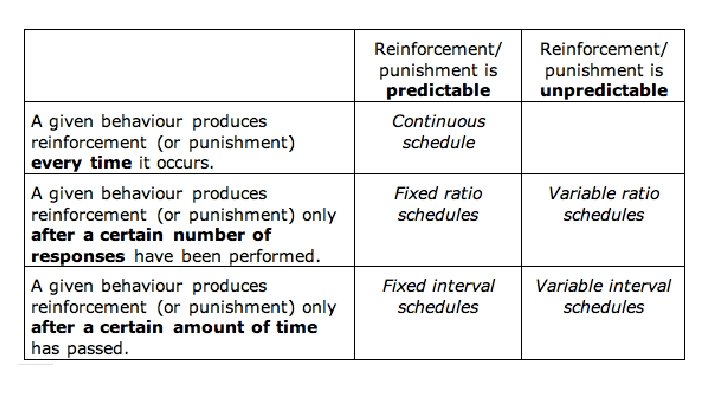

Simple Schedules
• Continuous reinforcement (CRF): a behavior is reinforced every time it occurs
  - Example: a rat receives food every time it presses a lever
• Intermittent schedule: reinforcement occurs on some occasions but not others

Simple Schedules
• Fixed ratio schedule: a behavior is reinforced when it has occurred a fixed number of times
  - Example: every third lever press by a rat is reinforced (a ratio of three lever presses to each reinforcement, also known as an FR 3 schedule)
  - Animals on fixed ratio schedules perform at a high rate
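The fixed ratio rule amounts to a counter that resets after each reinforcer. The Python sketch below assumes responses arrive one at a time; the class `FixedRatio` and its `respond` method are hypothetical names chosen for illustration, not part of any library. Continuous reinforcement is simply the special case FR 1.

```python
class FixedRatio:
    """Hypothetical sketch of an FR n schedule: every nth response is reinforced."""

    def __init__(self, n: int) -> None:
        if n < 1:
            raise ValueError("ratio must be at least 1")
        self.n = n
        self.count = 0  # responses made since the last reinforcer

    def respond(self) -> bool:
        """Register one response; return True if it earns a reinforcer."""
        self.count += 1
        if self.count == self.n:
            self.count = 0  # the count starts over after each reinforcer
            return True
        return False


# FR 3: every third lever press is reinforced.
fr3 = FixedRatio(3)
print([fr3.respond() for _ in range(9)])
# [False, False, True, False, False, True, False, False, True]

# CRF is FR 1: every press is reinforced.
crf = FixedRatio(1)
print([crf.respond() for _ in range(3)])  # [True, True, True]
```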

Simple Schedules
• Post-reinforcement pauses (also known as pre-ratio pauses or between-ratio pauses) are pauses in responding that follow reinforcement
• The more work required for each reinforcement, the longer the post-reinforcement pause

Variable Ratio
• Variable ratio schedule: the number of responses required for reinforcement varies around some average
  - Example: instead of reinforcing every fifth lever press, reinforce after eight presses, then after six, then after four, etc.
  - VR 5 schedule: reinforcement might occur after anywhere from one to ten lever presses, but overall the average is one reinforcement for every five presses

Variable Ratio
• Variable ratio schedules produce steady performance at a high rate
• Post-reinforcement pauses occur less often and are shorter than on fixed ratio schedules (their length is influenced by the size of the average ratio and by the lowest ratio)
• Variable ratio schedules are common in human society
  - Example: casino gambling
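A VR schedule can be sketched as a sequence of ratio requirements drawn around the average. This is a minimal Python illustration under a stated assumption: each requirement is drawn uniformly from 1 to 2n - 1, a range whose mean is exactly n. (A uniform draw from 1 to 10, as in the slide's rough VR 5 range, would average 5.5.) The function name `vr_requirements` is hypothetical; laboratory VR schedules are often built from a prepared list of values instead.

```python
import random


def vr_requirements(mean: int, count: int, rng: random.Random) -> list[int]:
    """Draw `count` ratio requirements for a hypothetical VR `mean` schedule.

    Assumption: each requirement is uniform on 1..(2 * mean - 1), so the
    requirements average `mean` responses per reinforcer.
    """
    if mean < 1:
        raise ValueError("mean ratio must be at least 1")
    return [rng.randint(1, 2 * mean - 1) for _ in range(count)]


rng = random.Random(42)  # fixed seed so the sequence is reproducible
reqs = vr_requirements(5, 10_000, rng)
print(reqs[:8])                # individual requirements vary unpredictably
print(min(reqs), max(reqs))    # every requirement lies between 1 and 9
print(sum(reqs) / len(reqs))   # close to 5 on average
```

Because the next requirement cannot be predicted from the last one, a long run of unreinforced responses tells the learner little, which fits the steady responding described above.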

Fixed Interval
• Fixed interval schedule: the behavior under study is reinforced the first time it occurs after a constant interval has elapsed
  - Example: an employee receives a paycheck every 14 days, which reinforces showing up to work (the employee is much more likely to show up as payday approaches)
• A fixed interval schedule does not produce performance as steady as a fixed ratio schedule: responding tends to pause after reinforcement and speed up as the end of the interval approaches

Variable Interval
• Variable interval schedule: the length of the interval during which performance is not reinforced varies around some average
• Produces high, steady run rates (higher than a fixed interval schedule, but not as high as fixed ratio and variable ratio schedules)
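Fixed and variable interval schedules share one rule: the first response after the current interval has elapsed is reinforced, and the next interval starts at that reinforcer. In this hypothetical Python sketch, `interval_schedule` takes a list of response times and a function that supplies each interval length; a constant gives FI, and a random draw gives VI. The uniform 0 to 10 distribution used for VI 5 is an assumption for illustration, not a standard.

```python
import random
from typing import Callable


def interval_schedule(response_times: list[float],
                      next_interval: Callable[[], float]) -> list[float]:
    """Return the times of reinforced responses on an interval schedule.

    Responses made before the current interval has elapsed go unreinforced;
    the first response at or after that point is reinforced, and a new
    interval is timed from that reinforcer.
    """
    reinforced = []
    available_at = next_interval()  # the first interval starts at time 0
    for t in sorted(response_times):
        if t >= available_at:
            reinforced.append(t)
            available_at = t + next_interval()  # the next interval starts now
    return reinforced


responses = [1, 3, 5, 10, 12, 20, 21]

# FI 5: the interval is always 5 time units.
print(interval_schedule(responses, lambda: 5))  # [5, 10, 20]

# VI 5: intervals vary around an average of 5 (assumed uniform on 0 to 10).
rng = random.Random(1)
print(interval_schedule(responses, lambda: rng.uniform(0, 10)))
```

Note that responding faster within an interval earns nothing extra, which is one way to see why interval schedules support lower run rates than ratio schedules.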

Extinction
• In operant learning, extinction means that a previously reinforced behavior is no longer followed by a reinforcer
• Extinction reduces the frequency of the behavior, but an extinction burst may occur, in which the frequency of the behavior temporarily increases
• Resurgence is also possible: during extinction, behaviors that were reinforced in the past may reappear

Other Simple Schedules
• Fixed duration schedule: reinforcement is contingent on the continuous performance of a behavior for a fixed period of time
  - Example: a child is required to practice piano for 30 minutes to receive cake
• Variable duration schedule: the required period of performance varies around some average
  - Example: on average, the child practices for half an hour before receiving cake, but there is no telling when the cake will appear
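Duration schedules count time spent performing rather than responses. The Python sketch below rests on illustrative assumptions: each reinforcer requires continuous performance for the next required duration, the clock restarts after each reinforcer, and stopping forfeits any partial progress. The function `reinforcers_in_bout` is hypothetical, and the uniform 15 to 45 minute spread for VD 30 is an assumed distribution.

```python
import itertools
import random
from typing import Iterator


def reinforcers_in_bout(bout_minutes: float, requirements: Iterator[float]) -> int:
    """Count reinforcers earned during one uninterrupted bout of behavior.

    `requirements` yields the continuous duration needed for each successive
    reinforcer; the end of the bout discards any partially completed duration.
    """
    earned = 0
    remaining = bout_minutes
    need = next(requirements)
    while remaining >= need:
        remaining -= need  # this stretch of practice earned a reinforcer
        earned += 1
        need = next(requirements)
    return earned


# FD 30: every reinforcer requires 30 minutes of continuous practice.
print(reinforcers_in_bout(45, itertools.repeat(30)))  # 1
print(reinforcers_in_bout(20, itertools.repeat(30)))  # 0

# VD 30: required durations vary around 30 minutes (assumed uniform on 15 to 45).
rng = random.Random(7)
print(reinforcers_in_bout(120, (rng.uniform(15, 45) for _ in itertools.count())))
```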

Other Simple Schedules
• It is possible to create schedules in which reinforcers are delivered independently of behavior (noncontingent reinforcement schedules)
• Fixed time schedule: a reinforcer is delivered after a given period of time regardless of what behavior occurs
  - Example: the child gets cake every 30 minutes whether or not he practiced piano
• Variable time schedule: a reinforcer is delivered periodically at irregular intervals regardless of what behavior occurs
  - Example: the child gets cake at irregular intervals whether or not he practiced piano
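What makes fixed time and variable time schedules noncontingent shows up directly in a sketch: neither function below takes any information about behavior as input. These are hypothetical Python helpers; the uniform 0 to 2 × mean gap used for VT is an assumption chosen only because it averages the stated mean.

```python
import random


def fixed_time_deliveries(session_minutes: float, period: float) -> list[float]:
    """FT schedule: a reinforcer arrives every `period` minutes.

    No argument describes the child's behavior: delivery is noncontingent.
    """
    if period <= 0:
        raise ValueError("period must be positive")
    times = []
    t = period
    while t <= session_minutes:
        times.append(t)
        t += period
    return times


def variable_time_deliveries(session_minutes: float, mean_period: float,
                             rng: random.Random) -> list[float]:
    """VT schedule: gaps between deliveries vary around `mean_period`.

    Assumption for illustration: each gap is uniform on 0 to 2 * mean_period.
    """
    if mean_period <= 0:
        raise ValueError("mean_period must be positive")
    times = []
    t = rng.uniform(0, 2 * mean_period)
    while t <= session_minutes:
        times.append(t)
        t += rng.uniform(0, 2 * mean_period)
    return times


print(fixed_time_deliveries(120, 30))  # [30, 60, 90, 120]
print(variable_time_deliveries(120, 30, random.Random(5)))
```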

Other Simple Schedules
• Progressive schedules: the rules describing the contingencies change systematically
• Progressive ratio schedule: the requirement for reinforcement typically increases in a predetermined way
  - Example: a rat receives food after pressing a lever twice, then must press four times to receive food, then six times, then eight times, and so on, until the behavior stops entirely (the break point)
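The break point can be illustrated with a short Python sketch of the 2, 4, 6, 8 progression. Here `pr_break_point` and its `max_effort` parameter are hypothetical: `max_effort` stands in for the animal's motivation, assuming it completes any ratio up to that size and quits at the first larger one. The sketch reports the last ratio completed, which is one common way of defining the break point; definitions vary across studies.

```python
def pr_break_point(start: int, step: int, max_effort: int) -> int:
    """Return the last ratio completed on a progressive ratio schedule.

    The requirement starts at `start` and rises by `step` after each
    reinforcer. Returns 0 if not even the first ratio is completed.
    """
    if start < 1 or step < 1:
        raise ValueError("start and step must be positive")
    last_completed = 0
    requirement = start
    while requirement <= max_effort:
        last_completed = requirement  # this ratio is completed and reinforced
        requirement += step           # the next ratio is larger
    return last_completed


# The slide's example: 2, 4, 6, 8, ... presses per food delivery.
print(pr_break_point(start=2, step=2, max_effort=7))  # 6
```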

Stretching the Ratio
• Start by giving cake on a fixed ratio of 3 (FR 3); once that schedule has been in place for some time, increase to FR 5, then FR 8, then FR 10, and so on
• Ratio strain: stretching the ratio too rapidly or too far may cause performance to break down
  - Example: overworked and underpaid employees
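One way to make "gradually" concrete is to cap how much the ratio may grow at each step. In this Python sketch the function `stretch_plan` and its `max_factor` of 1.7 are hypothetical choices; with those values it happens to reproduce the FR 3, 5, 8, 10 sequence from the slide. In practice the pace of stretching is guided by the learner's performance, not a fixed multiplier.

```python
def stretch_plan(start: int, target: int, max_factor: float = 1.7) -> list[int]:
    """Sketch a sequence of FR values that climbs gradually from start to target.

    `max_factor` is a hypothetical cap on growth per step; larger jumps risk
    ratio strain. Each step adds at least one response, so the plan always
    reaches the target.
    """
    if start < 1 or target < start:
        raise ValueError("need 1 <= start <= target")
    plan = [start]
    current = start
    while current < target:
        current = min(target, max(current + 1, int(current * max_factor)))
        plan.append(current)
    return plan


print(stretch_plan(3, 10))  # [3, 5, 8, 10]
```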

Compound Schedules
• Multiple schedule: a behavior is under the influence of two or more simple schedules that alternate, each associated with a particular stimulus
• Mixed schedule: the same as a multiple schedule, except that no stimuli are associated with the changes in reinforcement contingencies

Compound Schedules
• Chain schedule: reinforcement is delivered only on completion of the last in a series of schedules, each associated with its own stimulus
• Tandem schedule: identical to a chain schedule, except that no distinctive event signals the end of one schedule and the beginning of the next
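The difference between chain and tandem schedules is only whether each link is signaled. The Python sketch below simplifies every link to a fixed ratio; the `Link` class, `run_sequence` function, and the "red light"/"green light" stimuli are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Link:
    ratio: int               # responses needed to finish this link (an FR component)
    stimulus: Optional[str]  # signal present during the link; None in a tandem schedule


def run_sequence(links: list[Link], responses: int) -> list[str]:
    """Log stimulus changes and reinforcers as responses are made.

    Finishing an earlier link only moves the subject to the next link;
    the reinforcer comes only when the last link is finished.
    """
    log = []
    index, made = 0, 0
    if links[0].stimulus:
        log.append(f"stimulus: {links[0].stimulus}")
    for _ in range(responses):
        made += 1
        if made < links[index].ratio:
            continue
        made = 0  # this link is complete
        if index == len(links) - 1:
            log.append("reinforcer")
            index = 0  # the sequence starts over
        else:
            index += 1
        if links[index].stimulus:
            log.append(f"stimulus: {links[index].stimulus}")
    return log


chain = [Link(2, "red light"), Link(3, "green light")]
tandem = [Link(2, None), Link(3, None)]
print(run_sequence(chain, 5))
# ['stimulus: red light', 'stimulus: green light', 'reinforcer', 'stimulus: red light']
print(run_sequence(tandem, 5))  # ['reinforcer']
```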

Compound Schedules
• Cooperative schedules: reinforcement depends on the behavior of two or more individuals
• Concurrent schedules: two or more schedules are available at once, so the person or animal must choose among them
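Concurrent schedules are where the matching law from the vocabulary list applies. In its strict form, the proportion of responses on schedule i equals the proportion of reinforcers that schedule provides: B_i / (sum of all B) = R_i / (sum of all R). The Python helper below is a hypothetical illustration of that arithmetic; it ignores the bias and sensitivity parameters that the generalized matching law adds, and the 40 and 20 reinforcers per hour are made-up numbers.

```python
def matching_allocation(reinforcement_rates: list[float],
                        total_responses: float) -> list[float]:
    """Predict how responses divide across concurrent schedules under strict matching.

    Strict matching law: B_i / sum(B) = R_i / sum(R), where B_i is responding
    on schedule i and R_i is the reinforcement that schedule provides.
    """
    total_r = sum(reinforcement_rates)
    if total_r <= 0:
        raise ValueError("at least one schedule must provide reinforcement")
    return [total_responses * r / total_r for r in reinforcement_rates]


# Two concurrent schedules paying 40 and 20 reinforcers per hour (illustrative numbers).
print(matching_allocation([40, 20], 90))  # [60.0, 30.0]
```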

Partial Reinforcement
• Partial Reinforcement Effect (PRE): the tendency of behavior that has been maintained on an intermittent schedule to be more resistant to extinction than behavior that has been maintained on continuous reinforcement
• Possible explanations:
  - Discrimination hypothesis
  - Frustration hypothesis
  - Sequential hypothesis
  - Response unit hypothesis

Partial Reinforcement
• Discrimination hypothesis: extinction takes longer after intermittent reinforcement because it is harder to distinguish between an intermittent schedule and extinction than between continuous reinforcement and extinction

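Partial Reinforcement
• Frustration hypothesis: non-reinforcement of a previously reinforced behavior is frustrating, and anything that reduces frustration will be reinforcing. During intermittent reinforcement, frustration becomes a signal (S+) for performing the behavior, so responding persists when non-reinforcement continues during extinction.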

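Partial Reinforcement
• Sequential hypothesis: the PRE reflects differences in the sequence of cues during training. During intermittent reinforcement, sequences of reinforced and non-reinforced responses become signals (S+) for performing the behavior, and similar sequences occur during extinction.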

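Partial Reinforcement
• Response unit hypothesis: on an intermittent schedule, the behavior being reinforced is the whole set of responses required for reinforcement (e.g., three lever presses on FR 3), so persistence during extinction should be counted in response units rather than in single responses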
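The response unit idea is a matter of arithmetic: divide the responses made during extinction by the number of responses that made up one reinforced unit during training. The numbers below are invented for illustration, and `response_units` is a hypothetical helper; whether real extinction data line up this neatly is an empirical question.

```python
def response_units(responses: int, ratio: int) -> float:
    """Convert raw responses into response units.

    One unit is the number of responses that was required per reinforcer
    during training (1 for CRF, n for FR n).
    """
    if ratio < 1:
        raise ValueError("ratio must be at least 1")
    return responses / ratio


# Hypothetical extinction data: 10 presses after CRF training, 30 after FR 3 training.
print(response_units(10, 1))  # 10.0
print(response_units(30, 3))  # 10.0
```

Counted as raw presses, the FR 3 animal looks three times as persistent; counted in units, the two animals look the same.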

Next Class
• Next lecture: Monday, 11/9, Chapter 8
• Practice quizzes
• Exam #2: 11/14
