Basic CUDA Programming Computer Architecture 2015 Prof ChihWei

Basic CUDA Programming Computer Architecture 2015 (Prof. Chih-Wei Liu) Final Project – CUDA Tutorial TA Cheng-Yen Yang (chenyen. yang@gmail. com)

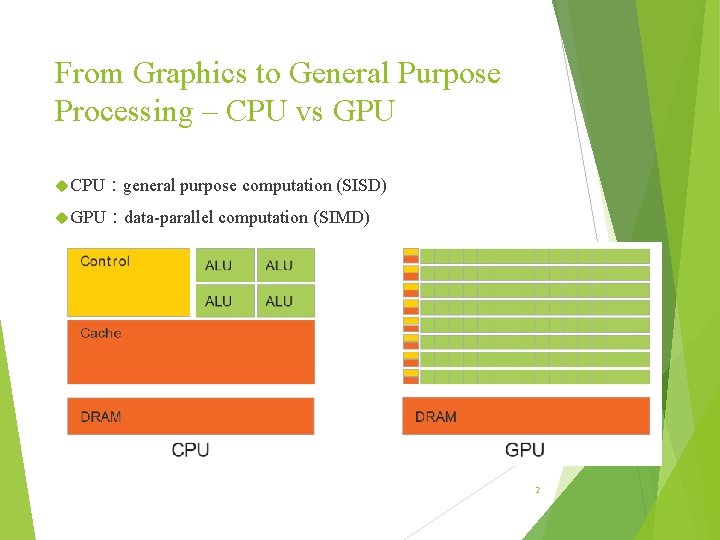

From Graphics to General Purpose Processing – CPU vs GPU CPU:general purpose computation (SISD) GPU:data-parallel computation (SIMD) 2

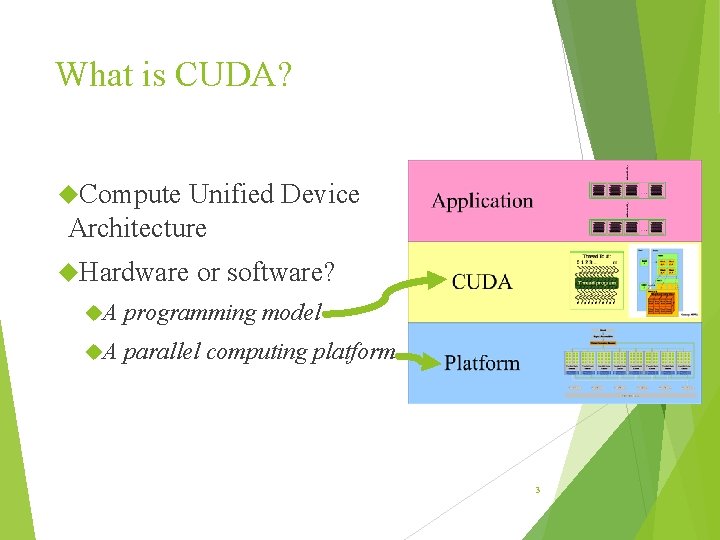

What is CUDA? Compute Unified Device Architecture Hardware or software? A programming model A parallel computing platform 3

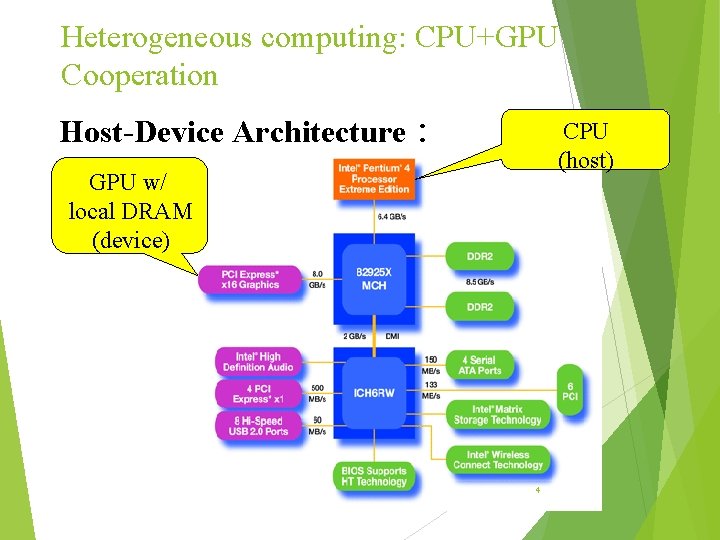

Heterogeneous computing: CPU+GPU Cooperation Host-Device Architecture: CPU (host) GPU w/ local DRAM (device) 4

Heterogeneous computing: CUDA Code Execution (1/2) 5

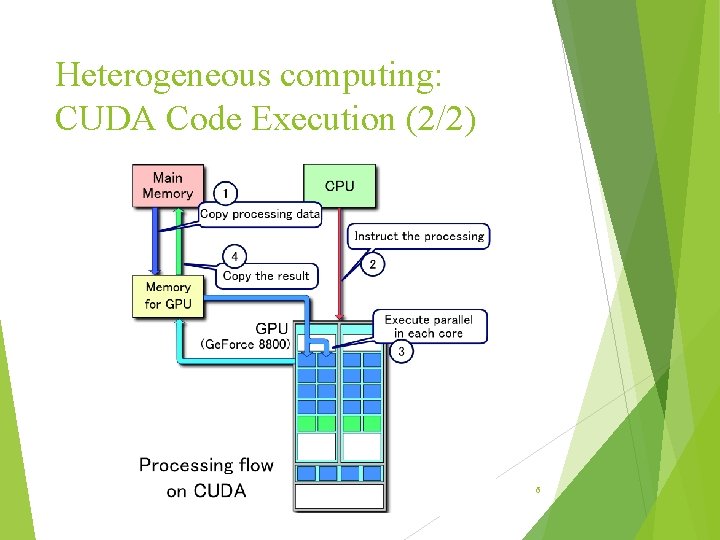

Heterogeneous computing: CUDA Code Execution (2/2) 6

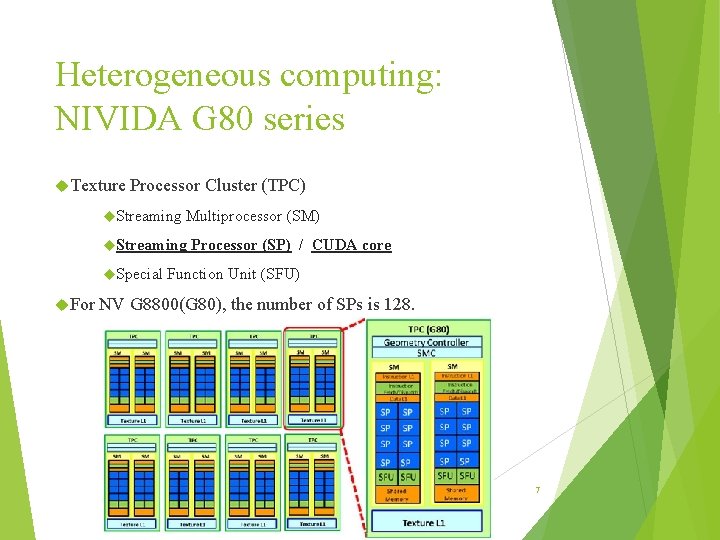

Heterogeneous computing: NIVIDA G 80 series Texture Processor Cluster (TPC) Streaming Multiprocessor (SM) Streaming Special For Processor (SP) / CUDA core Function Unit (SFU) NV G 8800(G 80), the number of SPs is 128. 7

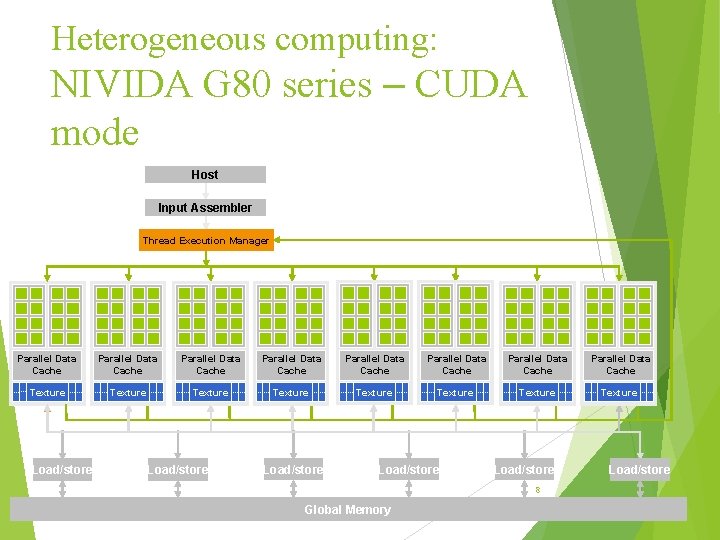

Heterogeneous computing: NIVIDA G 80 series – CUDA mode Host Input Assembler Thread Execution Manager Parallel Data Cache Parallel Data Cache Texture Texture Texture Load/store Load/store 8 Global Memory Load/store

Heterogeneous computing: NVIDIA CUDA Compiler (NVCC) NVCC separates CPU and GPU source code into two parts. For host codes, NVCC invokes typical C compiler like GCC, Intel C compiler, or MS C compiler. All the device codes are compiled by NVCC. The extension of device source files should be “. cu”. All executable with CUDA code requires: CUDA core library (cuda) CUDA runtime library (cudart) 9

CUDA Programming Model (1/7) Define Programming model Memory model Help developers map the current applications or algorithms onto CUDA devices more easily and clearly. NVIDIA GPUs have different architecture compared with common CPUs. It is important to follow CUDA’s programming model to obtain higher performance of program execution. 10

CUDA Programming Model (2/7) C/C++ for CUDA Subset of C with extensions C++ templates for GPU code CUDA goals: Scale code to 100 s of cores and 1000 s of parallel threads. Facilitate heterogeneous computing: CPU + GPU CUDA defines: Programming model Memory model 11

CUDA Programming Model (3/7) CUDA Kernels and Threads: Parallel portions of an application are executed on the device as kernels. And only one kernel is executed at a time. All the threads execute the same kernel at a time. Differences between CUDA and GPU threads CUDA threads are extremely lightweight Very little creation overhead Fast switching CUDA uses 1000 s of threads to achieve efficiency Multi-core CPUs can only use a few 12

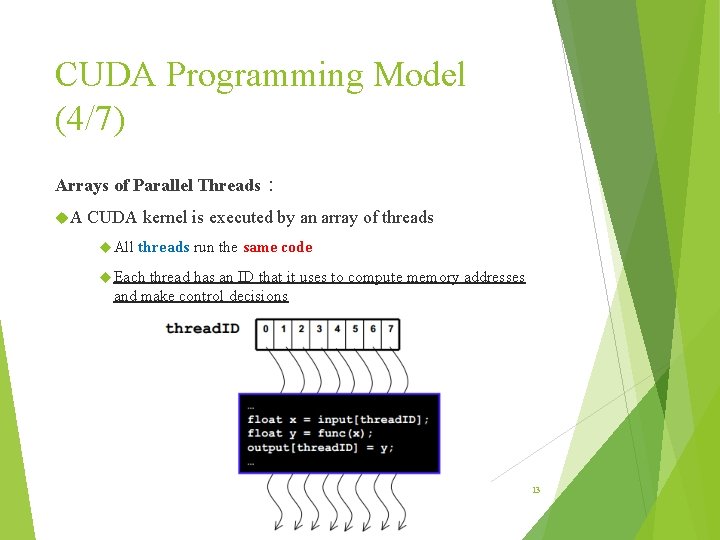

CUDA Programming Model (4/7) Arrays of Parallel Threads: A CUDA kernel is executed by an array of threads All threads run the same code Each thread has an ID that it uses to compute memory addresses and make control decisions 13

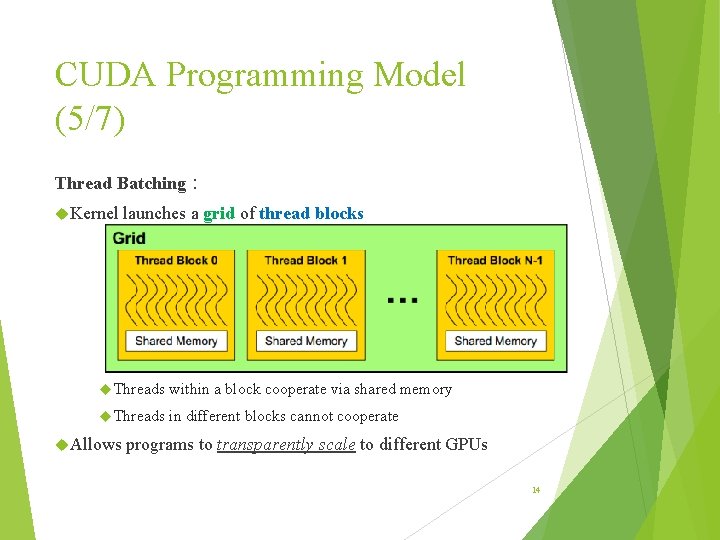

CUDA Programming Model (5/7) Thread Batching: Kernel launches a grid of thread blocks Threads within a block cooperate via shared memory Threads in different blocks cannot cooperate Allows programs to transparently scale to different GPUs 14

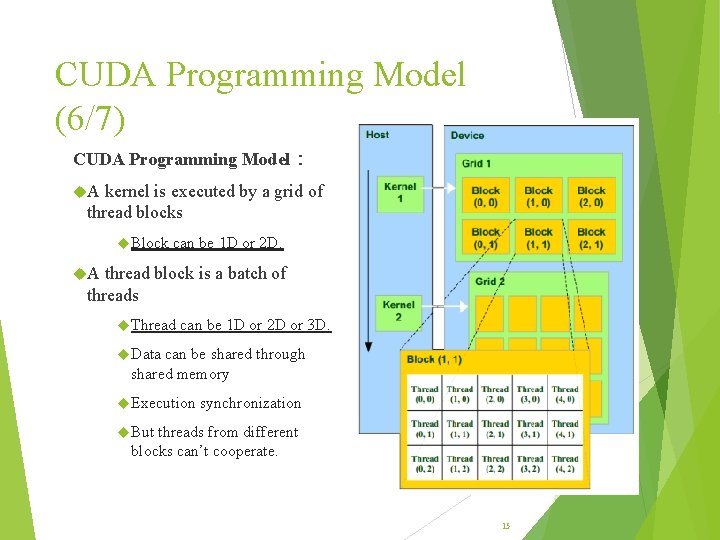

CUDA Programming Model (6/7) CUDA Programming Model: A kernel is executed by a grid of thread blocks Block can be 1 D or 2 D. A thread block is a batch of threads Thread can be 1 D or 2 D or 3 D. Data can be shared through shared memory Execution synchronization But threads from different blocks can’t cooperate. 15

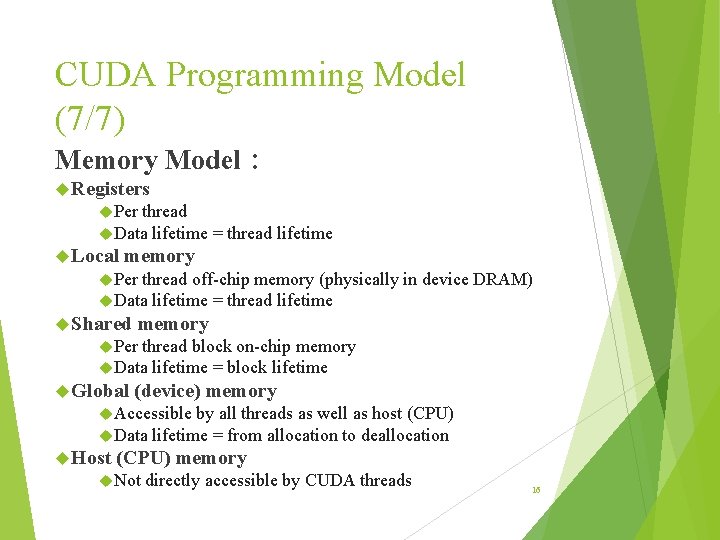

CUDA Programming Model (7/7) Memory Model: Registers Per thread Data lifetime Local = thread lifetime memory Per thread off-chip memory (physically Data lifetime = thread lifetime Shared in device DRAM) memory Per thread block on-chip memory Data lifetime = block lifetime Global (device) memory Accessible by all threads as well as host (CPU) Data lifetime = from allocation to deallocation Host (CPU) memory Not directly accessible by CUDA threads 16

CUDA C Basic 17

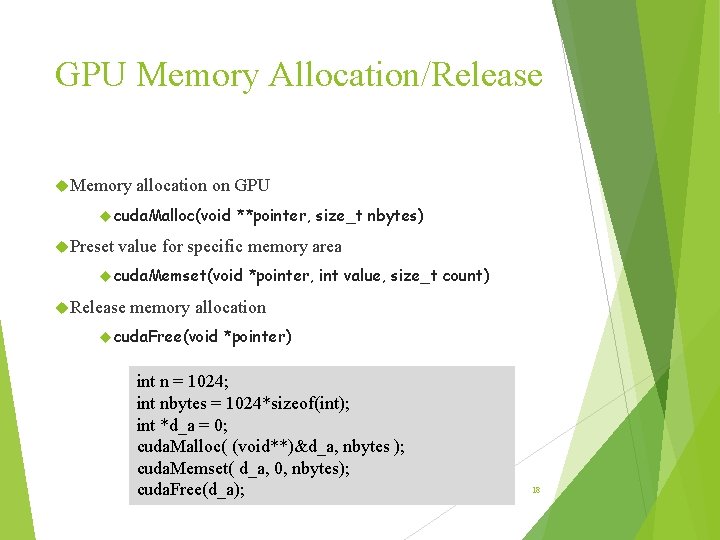

GPU Memory Allocation/Release Memory allocation on GPU cuda. Malloc(void Preset **pointer, size_t nbytes) value for specific memory area cuda. Memset(void Release *pointer, int value, size_t count) memory allocation cuda. Free(void *pointer) int n = 1024; int nbytes = 1024*sizeof(int); int *d_a = 0; cuda. Malloc( (void**)&d_a, nbytes ); cuda. Memset( d_a, 0, nbytes); cuda. Free(d_a); 18

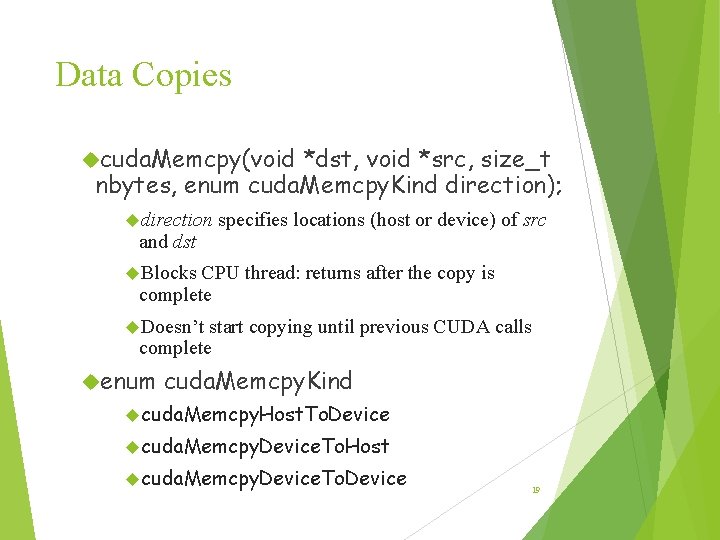

Data Copies cuda. Memcpy(void *dst, void *src, size_t nbytes, enum cuda. Memcpy. Kind direction); direction and dst specifies locations (host or device) of src Blocks CPU thread: returns after the copy is complete Doesn’t start copying until previous CUDA calls complete enum cuda. Memcpy. Kind cuda. Memcpy. Host. To. Device cuda. Memcpy. Device. To. Host cuda. Memcpy. Device. To. Device 19

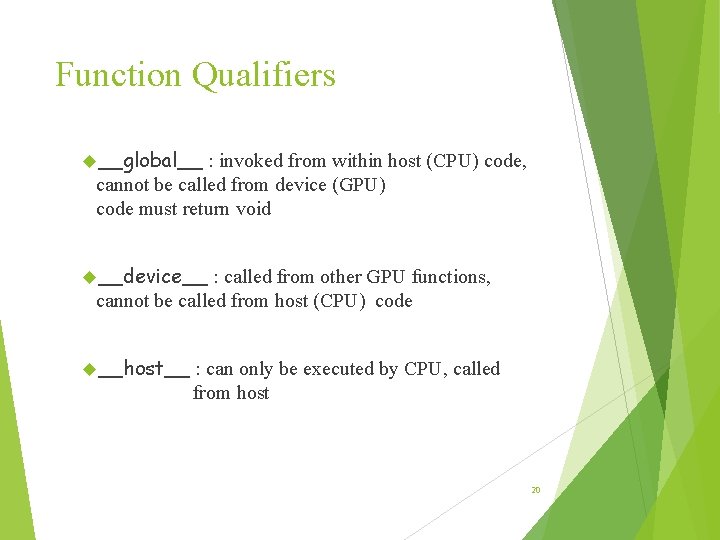

Function Qualifiers __global__ : invoked from within host (CPU) code, cannot be called from device (GPU) code must return void __device__ : called from other GPU functions, cannot be called from host (CPU) code __host__ : can only be executed by CPU, called from host 20

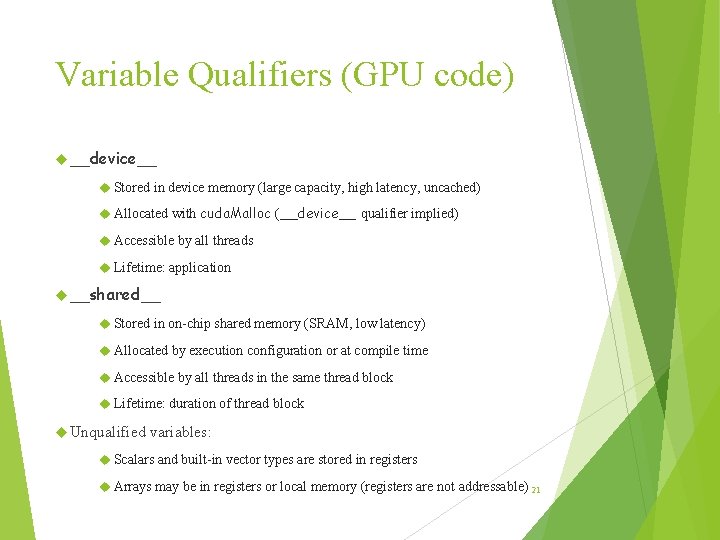

Variable Qualifiers (GPU code) __device__ Stored in device memory (large capacity, high latency, uncached) Allocated with cuda. Malloc (__device__ qualifier implied) Accessible by all threads Lifetime: application __shared__ Stored in on-chip shared memory (SRAM, low latency) Allocated by execution configuration or at compile time Accessible by all threads in the same thread block Lifetime: duration of thread block Unqualified variables: Scalars and built-in vector types are stored in registers Arrays may be in registers or local memory (registers are not addressable) 21

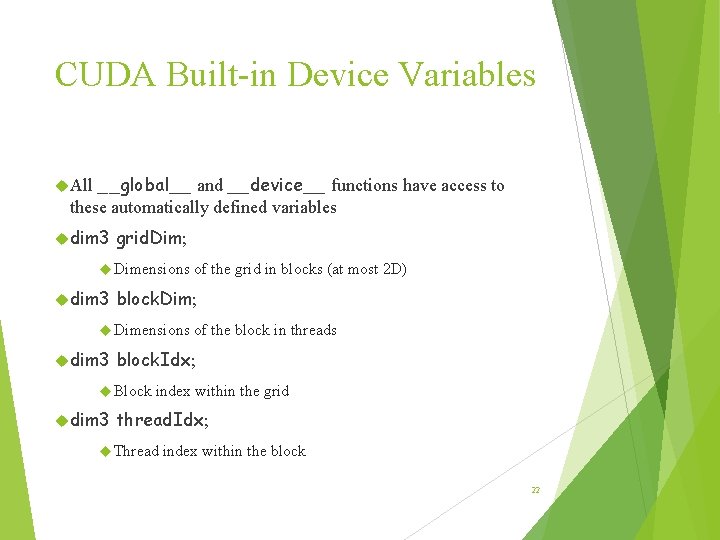

CUDA Built-in Device Variables __global__ and __device__ functions have access to these automatically defined variables All dim 3 grid. Dim; Dimensions dim 3 block. Dim; Dimensions dim 3 of the block in threads block. Idx; Block dim 3 of the grid in blocks (at most 2 D) index within the grid thread. Idx; Thread index within the block 22

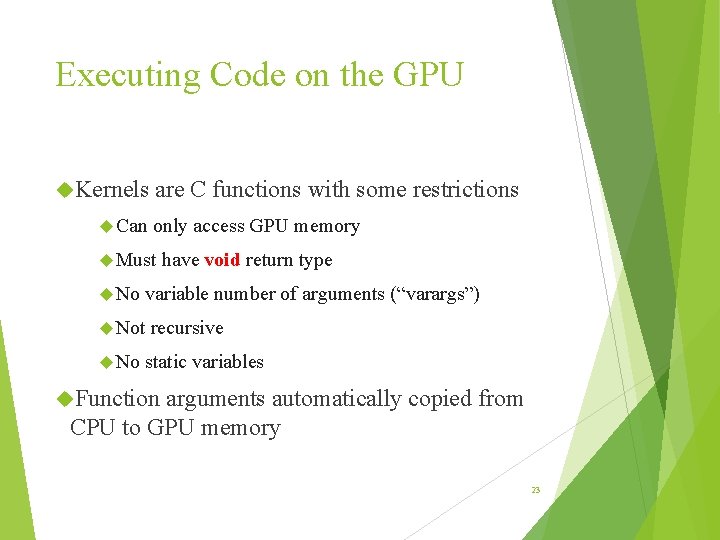

Executing Code on the GPU Kernels Can are C functions with some restrictions only access GPU memory Must No variable number of arguments (“varargs”) Not No have void return type recursive static variables Function arguments automatically copied from CPU to GPU memory 23

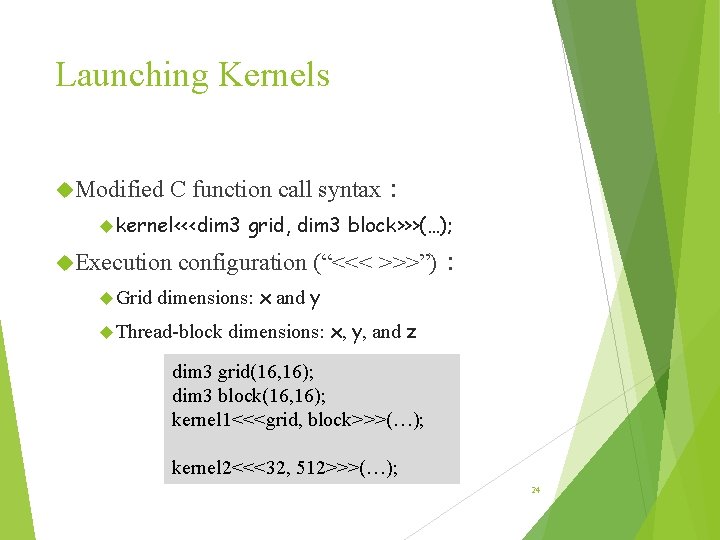

Launching Kernels Modified C function call syntax: kernel<<<dim 3 Execution Grid grid, dim 3 block>>>(…); configuration (“<<< >>>”): dimensions: x and y Thread-block dimensions: x, y, and z dim 3 grid(16, 16); dim 3 block(16, 16); kernel 1<<<grid, block>>>(…); kernel 2<<<32, 512>>>(…); 24

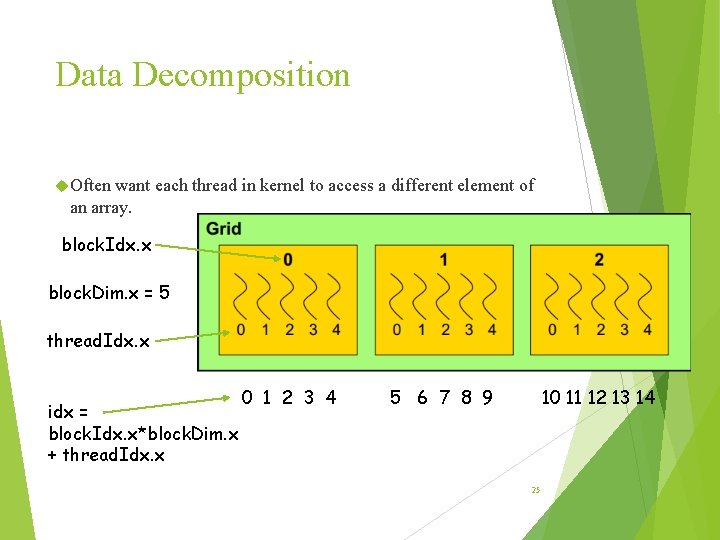

Data Decomposition Often want each thread in kernel to access a different element of an array. block. Idx. x block. Dim. x = 5 thread. Idx. x idx = block. Idx. x*block. Dim. x + thread. Idx. x 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 25

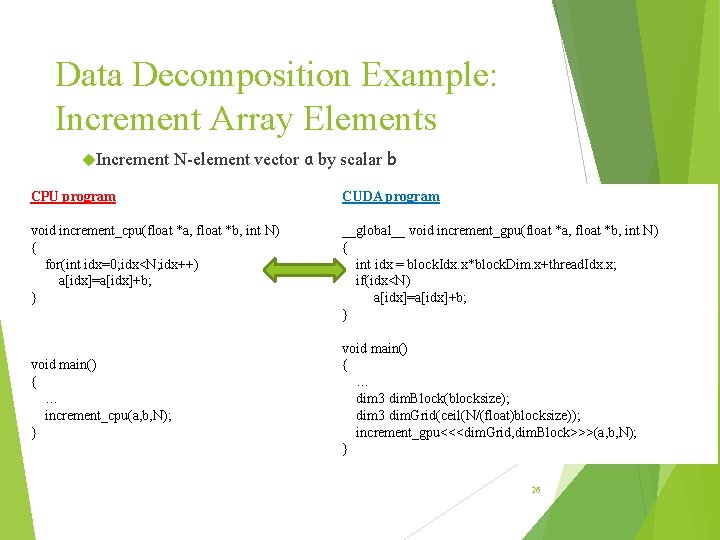

Data Decomposition Example: Increment Array Elements Increment N-element vector a by scalar b CPU program CUDA program void increment_cpu(float *a, float *b, int N) { for(int idx=0; idx<N; idx++) a[idx]=a[idx]+b; } __global__ void increment_gpu(float *a, float *b, int N) { int idx = block. Idx. x*block. Dim. x+thread. Idx. x; if(idx<N) a[idx]=a[idx]+b; } void main() { … increment_cpu(a, b, N); } void main() { … dim 3 dim. Block(blocksize); dim 3 dim. Grid(ceil(N/(float)blocksize)); increment_gpu<<<dim. Grid, dim. Block>>>(a, b, N); } 26

LAB 0: Setup CUDA Environment & Device Query 27

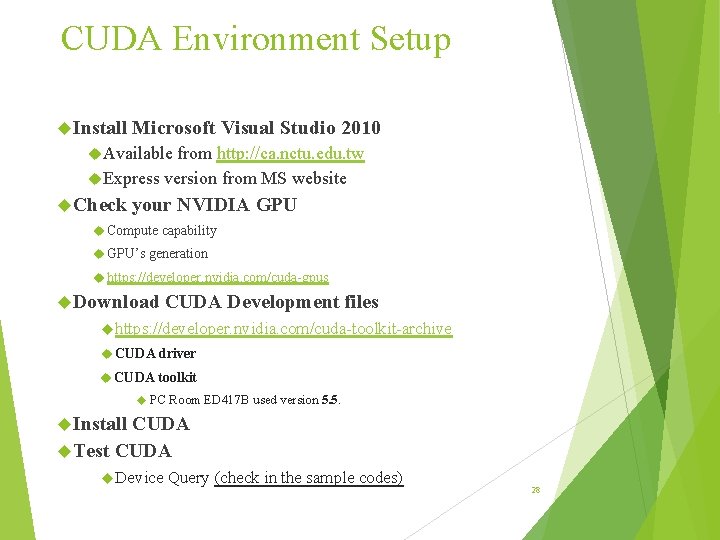

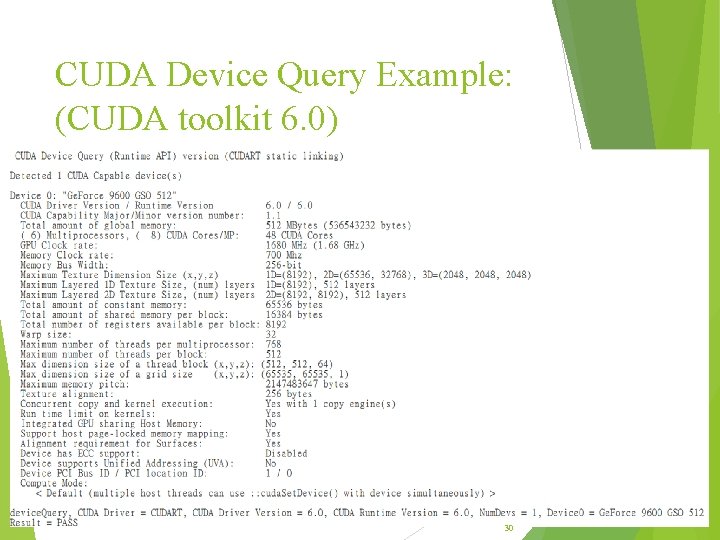

CUDA Environment Setup Install Microsoft Visual Studio 2010 Available from http: //ca. nctu. edu. tw Express version from MS website Check your NVIDIA GPU Compute capability GPU’s generation https: //developer. nvidia. com/cuda-gpus Download CUDA Development files https: //developer. nvidia. com/cuda-toolkit-archive CUDA driver CUDA toolkit PC Room ED 417 B used version 5. 5. Install CUDA Test CUDA Device Query (check in the sample codes) 28

Setup CUDA for MS Visual Studio (ED 417 B) In PC Room ED 417 B : CUDA device: NV 8400 GS CUDA toolkit version: 5. 5 Visual Studio 2010 Modified from existing project CUDA Please Sample codes refer to http: //twins. ee. nctu. edu. tw/courses/ca_15/Projects/create_cuda_project. pdf 29

CUDA Device Query Example: (CUDA toolkit 6. 0) 30

Lab 1: First CUDA Program Yet Another Random Number Sequence Generator 31

Yet Another Random Number Sequence Generator Implemented by CPU and GPU Functionality: Given an random integer array A holding 8192 elements Generated by rand() Re-generate random number by multiplying itself 256 times without regard to overflow occurred. B[i]new = B[i]old*A[i] (GPU) C[i]new = C[i]old*A[i] (CPU) Check the consistency between the execution results of CPU and GPU. 32

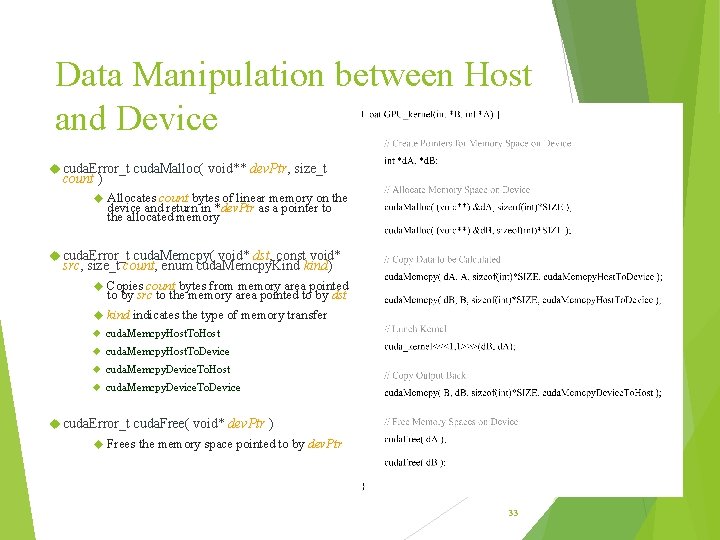

Data Manipulation between Host and Device cuda. Error_t cuda. Malloc( void** dev. Ptr, size_t count ) Allocates count bytes of linear memory on the device and return in *dev. Ptr as a pointer to the allocated memory cuda. Error_t cuda. Memcpy( void* dst, const void* src, size_t count, enum cuda. Memcpy. Kind kind) Copies count bytes from memory area pointed to by src to the memory area pointed to by dst kind indicates the type of memory transfer cuda. Memcpy. Host. To. Host cuda. Memcpy. Host. To. Device cuda. Memcpy. Device. To. Host cuda. Memcpy. Device. To. Device cuda. Error_t cuda. Free( void* dev. Ptr ) Frees the memory space pointed to by dev. Ptr 33

Now, go and finish your first CUDA program !!! 34

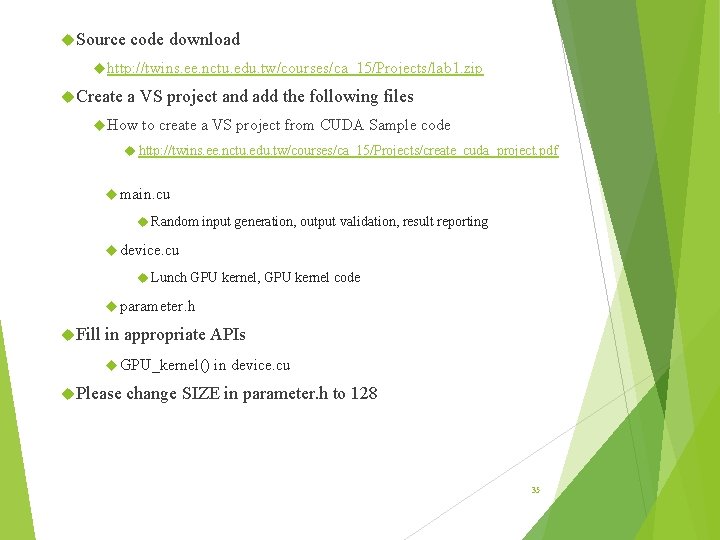

Source code download http: //twins. ee. nctu. edu. tw/courses/ca_15/Projects/lab 1. zip Create a VS project and add the following files How to create a VS project from CUDA Sample code http: //twins. ee. nctu. edu. tw/courses/ca_15/Projects/create_cuda_project. pdf main. cu Random input generation, output validation, result reporting device. cu Lunch GPU kernel, GPU kernel code parameter. h Fill in appropriate APIs GPU_kernel() Please in device. cu change SIZE in parameter. h to 128 35

Lab 2: Make the Parallel Code Faster Yet Another Random Number Sequence Generator 36

Parallel Processing in CUDA Parallel threads code can be partitioned into blocks and cuda_kernel<<<n. Blk, n. Tid>>>(…) Multiple tasks will be initialized, each with different block id and thread id The tasks are dynamically scheduled Tasks within the same block will be scheduled on the same stream multiprocessor Each task take care of single data partition according to its block id and thread id 37

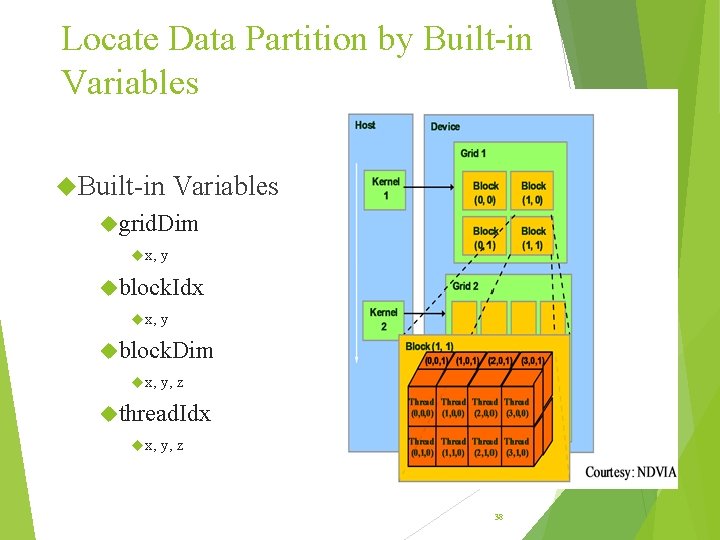

Locate Data Partition by Built-in Variables grid. Dim x, y block. Idx x, y block. Dim x, y, z thread. Idx x, y, z 38

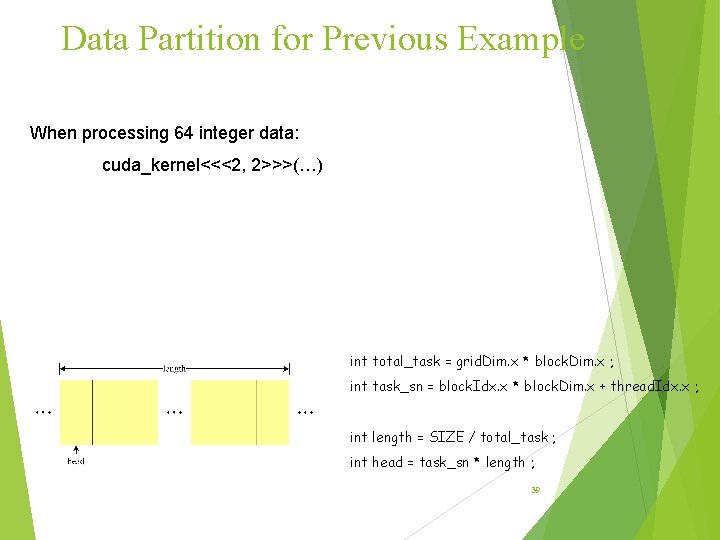

Data Partition for Previous Example When processing 64 integer data: cuda_kernel<<<2, 2>>>(…) int total_task = grid. Dim. x * block. Dim. x ; int task_sn = block. Idx. x * block. Dim. x + thread. Idx. x ; int length = SIZE / total_task ; int head = task_sn * length ; 39

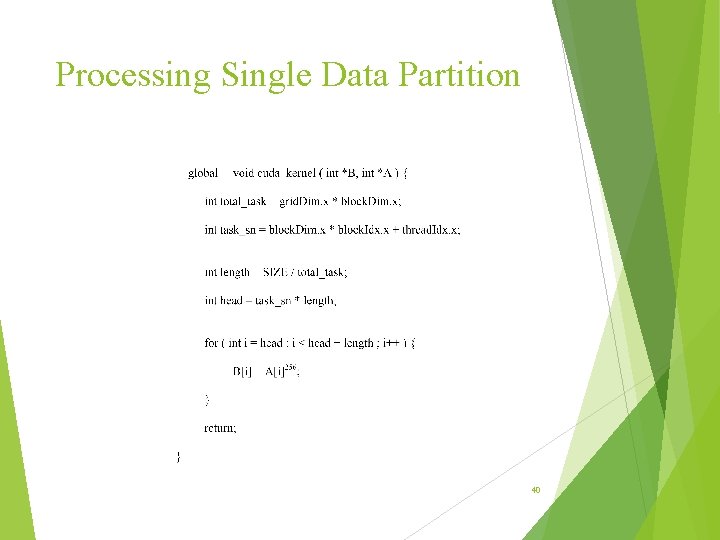

Processing Single Data Partition 40

Parallelize Your Program !!! 41

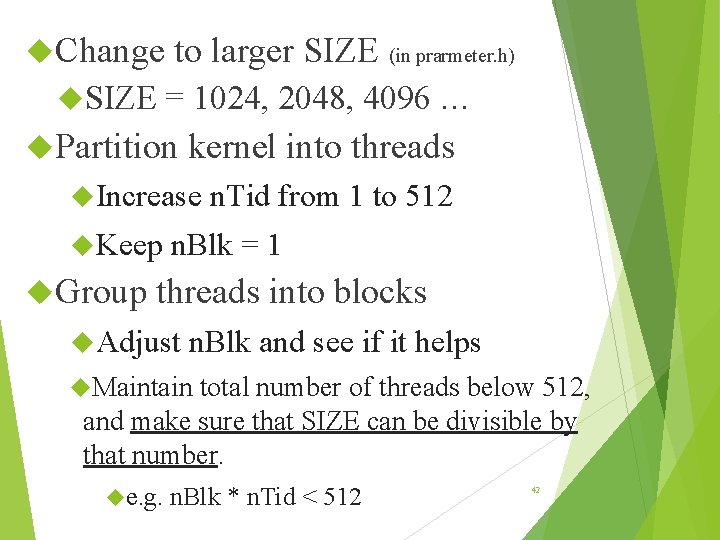

Change SIZE to larger SIZE (in prarmeter. h) = 1024, 2048, 4096 … Partition kernel into threads Increase Keep Group n. Tid from 1 to 512 n. Blk = 1 threads into blocks Adjust n. Blk and see if it helps Maintain total number of threads below 512, and make sure that SIZE can be divisible by that number. e. g. n. Blk * n. Tid < 512 42

Lab 3: Resolve Memory Contention Yet Another Random Number Sequence Generator 43

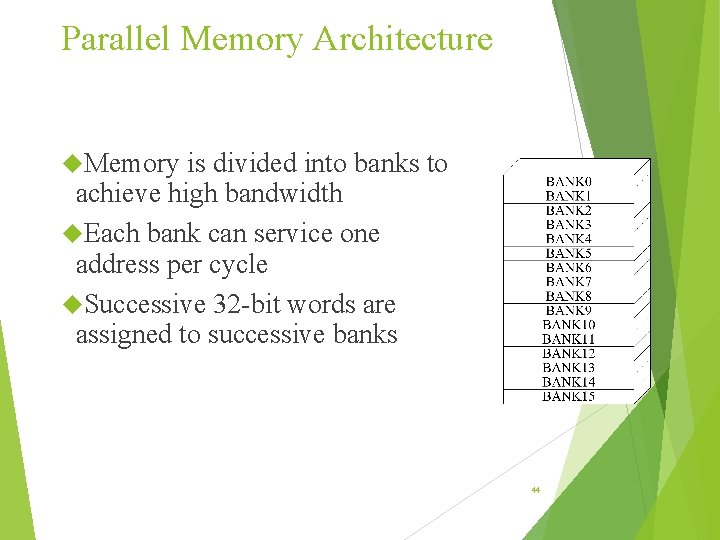

Parallel Memory Architecture Memory is divided into banks to achieve high bandwidth Each bank can service one address per cycle Successive 32 -bit words are assigned to successive banks 44

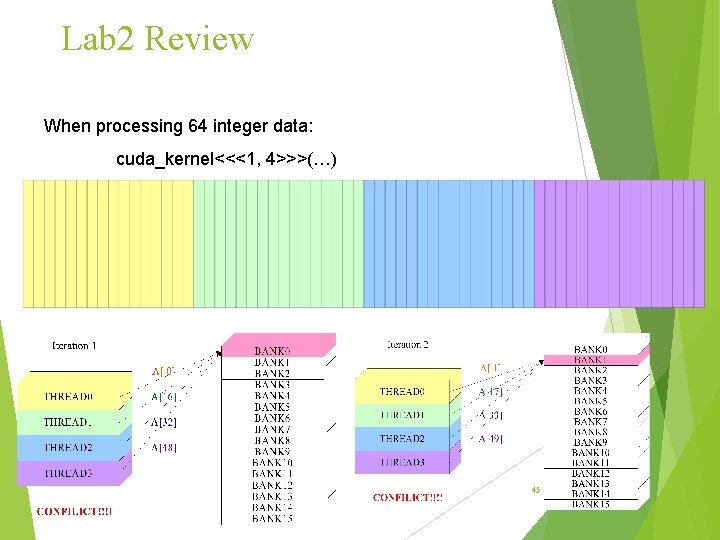

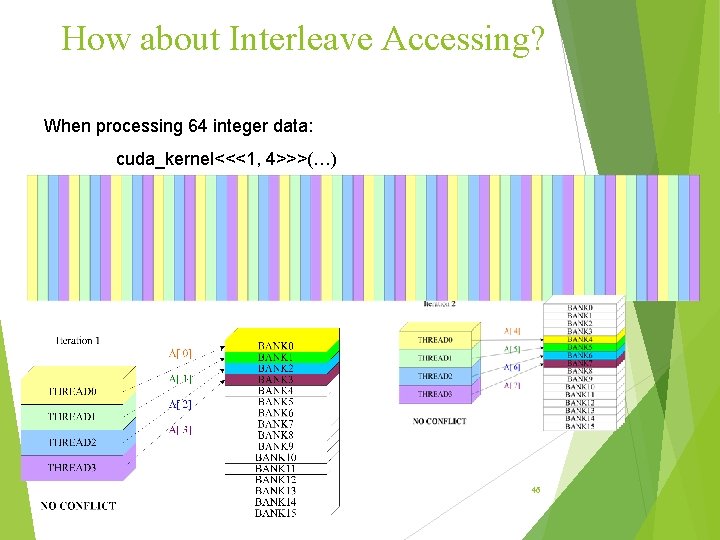

Lab 2 Review When processing 64 integer data: cuda_kernel<<<1, 4>>>(…) 45

How about Interleave Accessing? When processing 64 integer data: cuda_kernel<<<1, 4>>>(…) 46

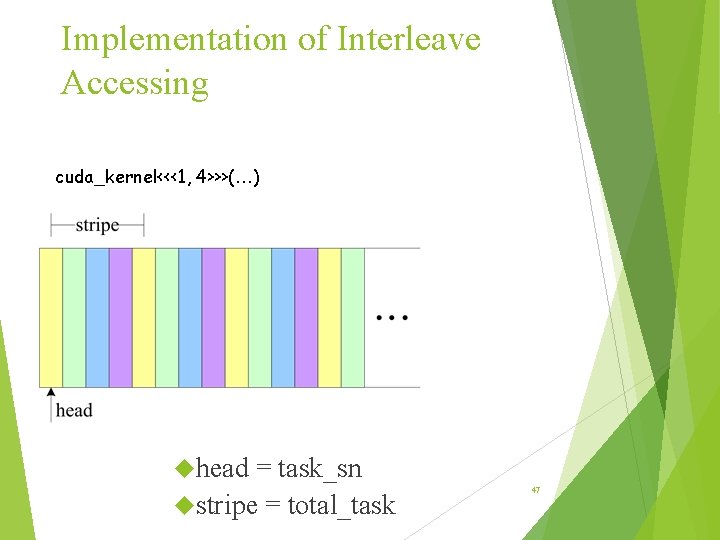

Implementation of Interleave Accessing cuda_kernel<<<1, 4>>>(…) head = task_sn stripe = total_task 47

Improve Your Program !!! 48

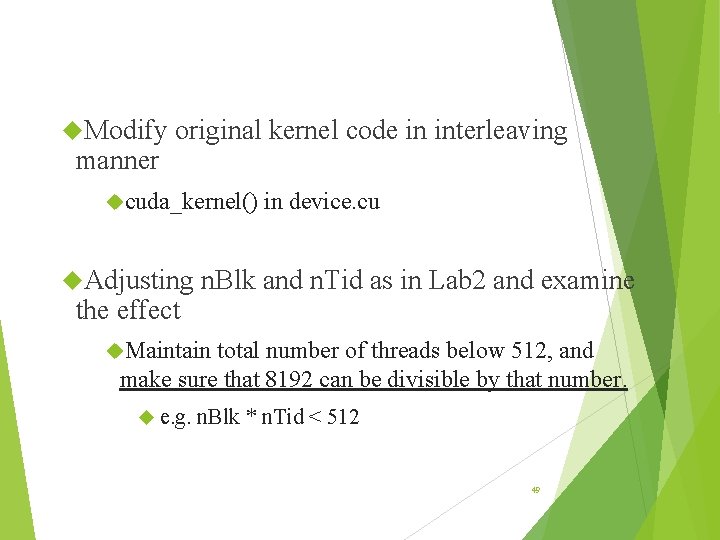

Modify manner original kernel code in interleaving cuda_kernel() Adjusting the effect in device. cu n. Blk and n. Tid as in Lab 2 and examine Maintain total number of threads below 512, and make sure that 8192 can be divisible by that number. e. g. n. Blk * n. Tid < 512 49

Thank You Lab 3 answer: http: //twins. ee. nctu. edu. tw/courses/ca_15/Projects/lab 3. zip * Group member & demo time should be registered after final exam @ ED 412 50

- Slides: 50