Chapter 4 Classification: Basic Concepts, Decision Trees & Model Evaluation

Chapter 4 Classification: Basic Concepts, Decision Trees & Model Evaluation. Part 1: Classification with Decision Trees. Chapter 4.1 - 1/44

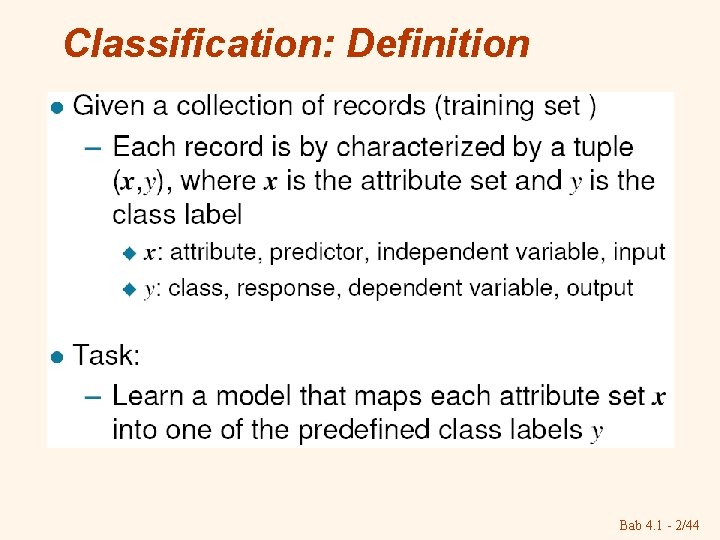

Classification: Definition

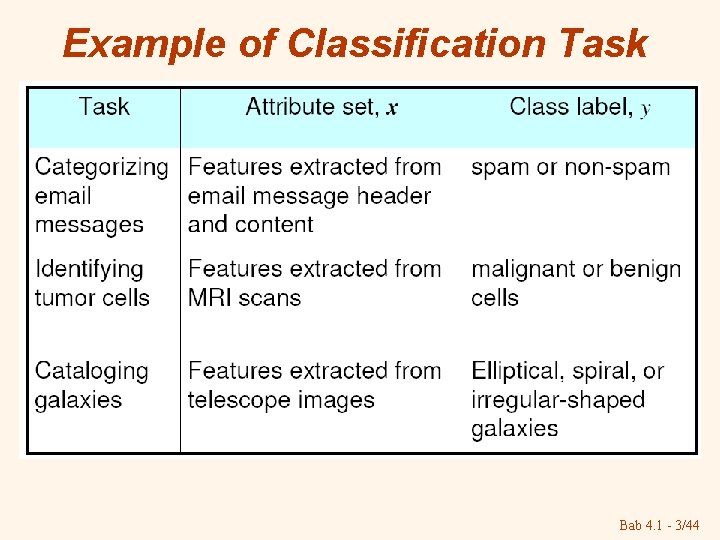

Example of Classification Task

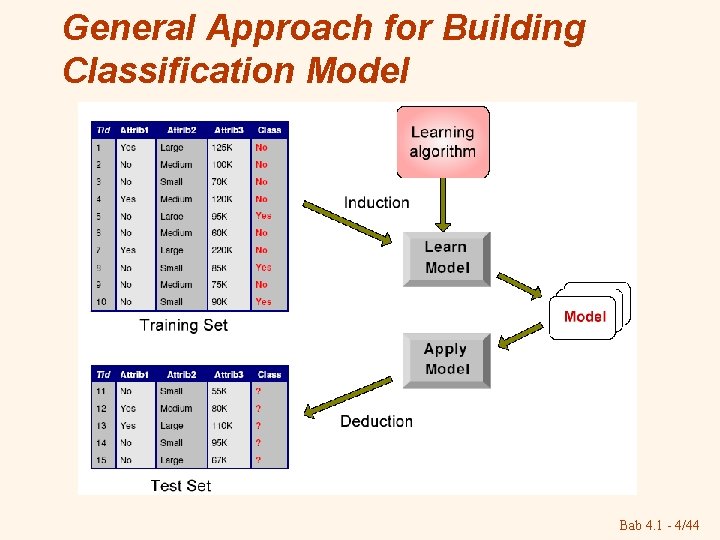

General Approach for Building Classification Model

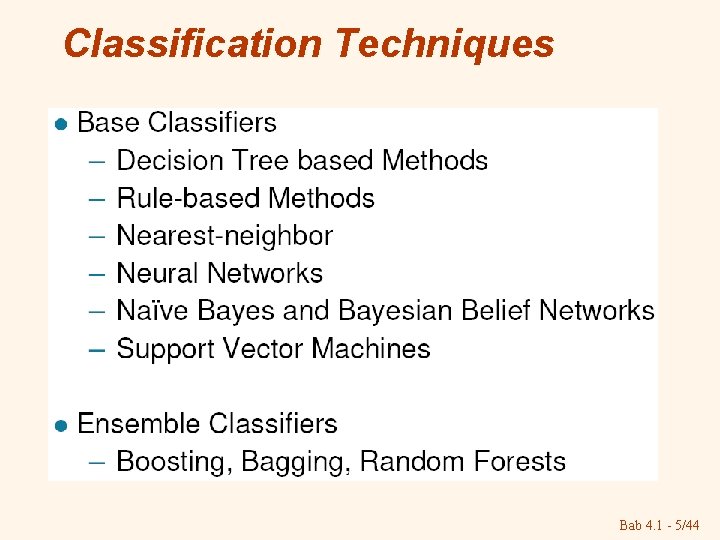

Classification Techniques

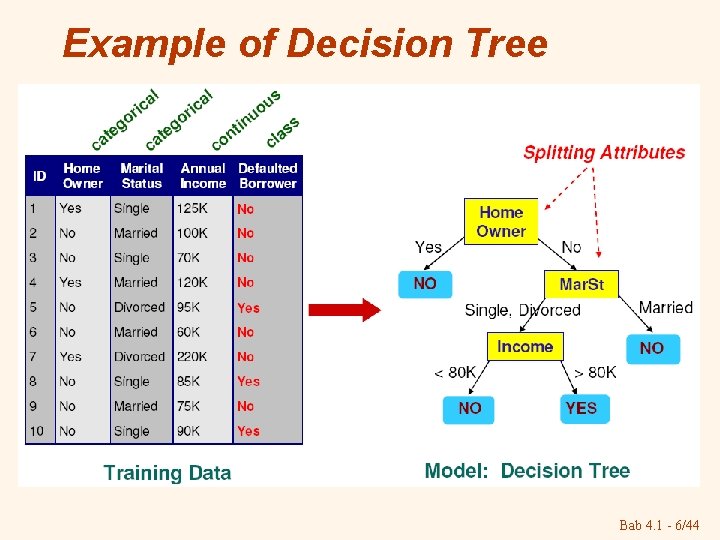

Example of Decision Tree

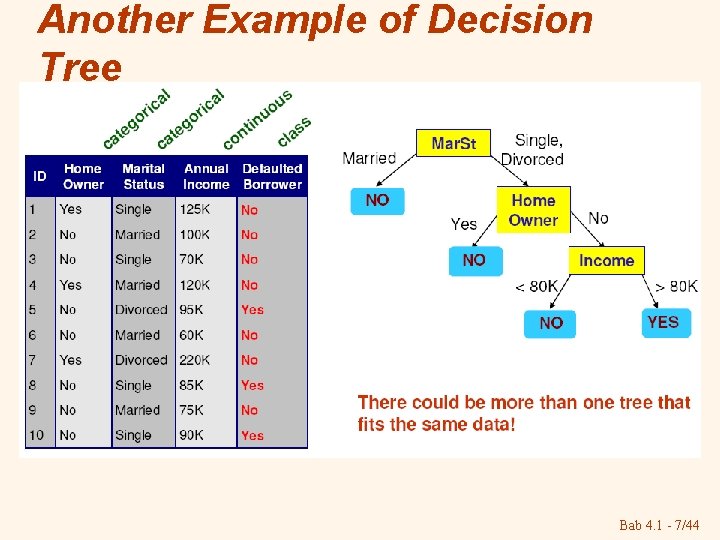

Another Example of Decision Tree

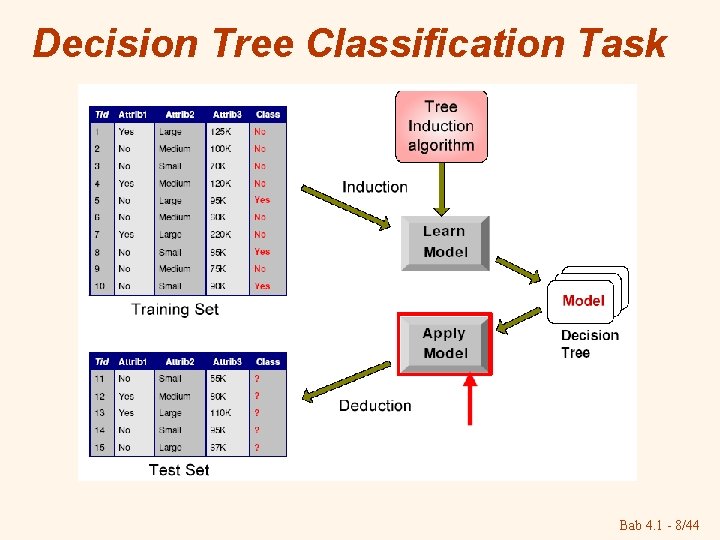

Decision Tree Classification Task

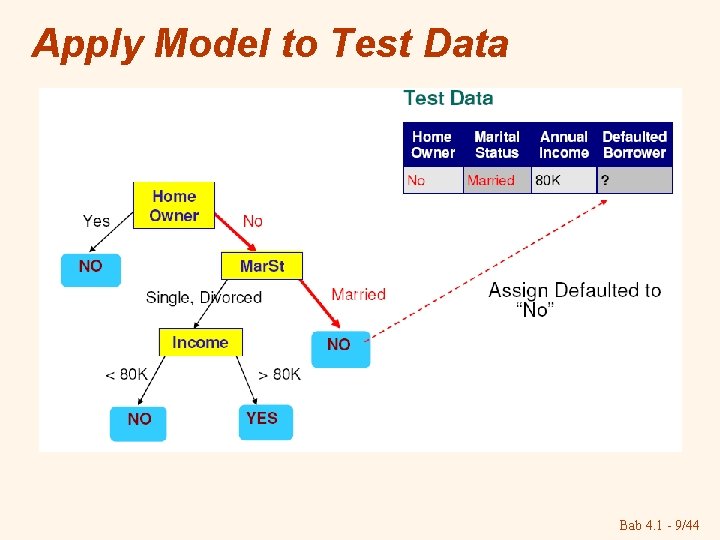

Apply Model to Test Data

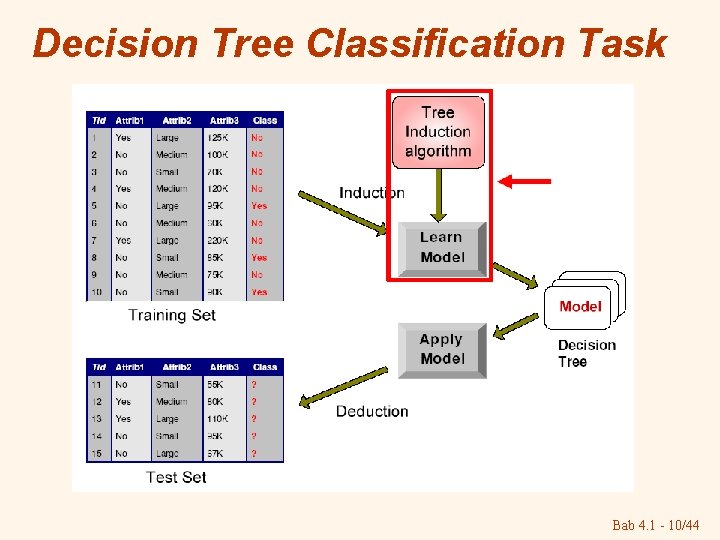

Decision Tree Classification Task

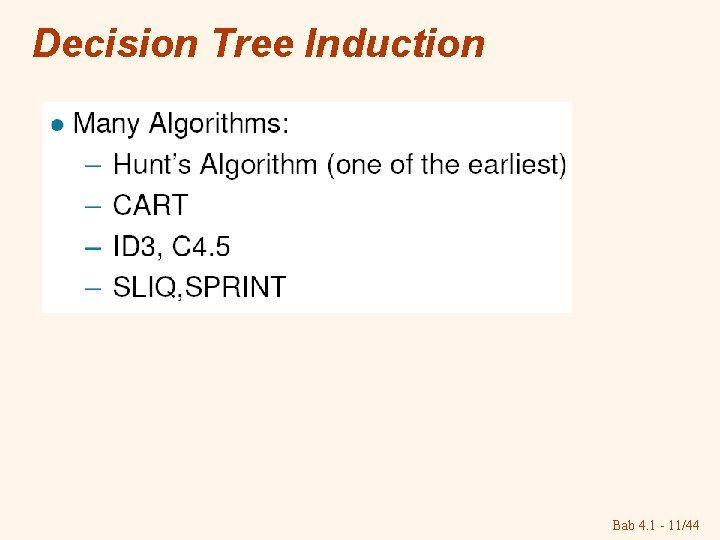

Decision Tree Induction

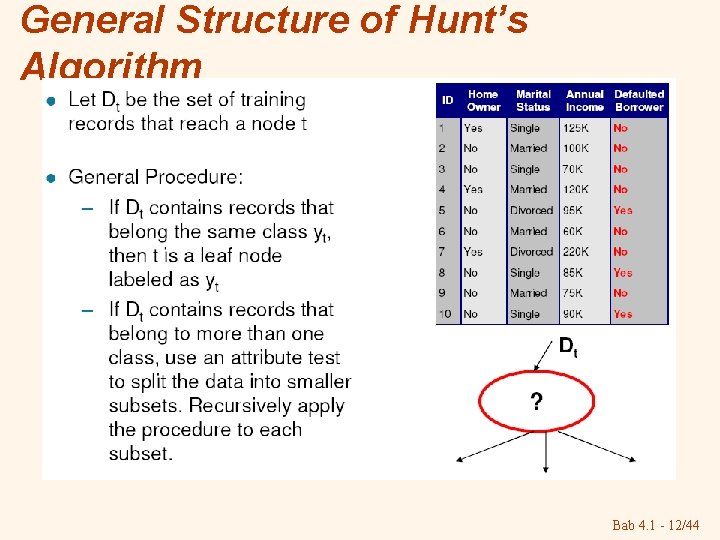

General Structure of Hunt’s Algorithm

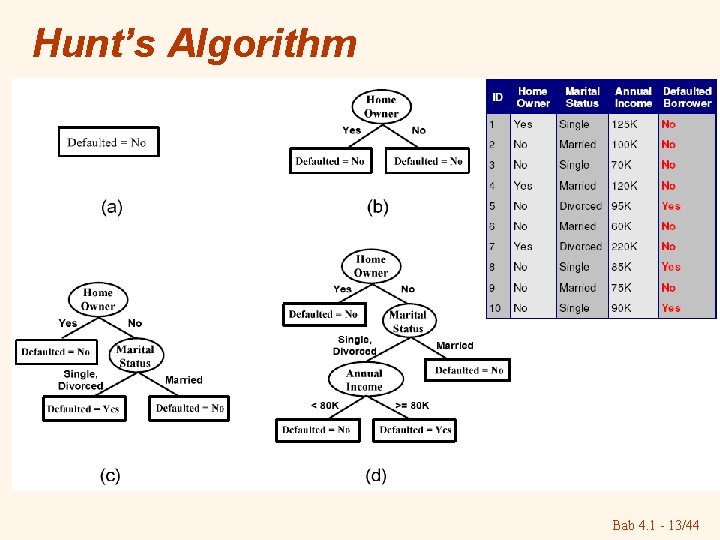

Hunt’s Algorithm

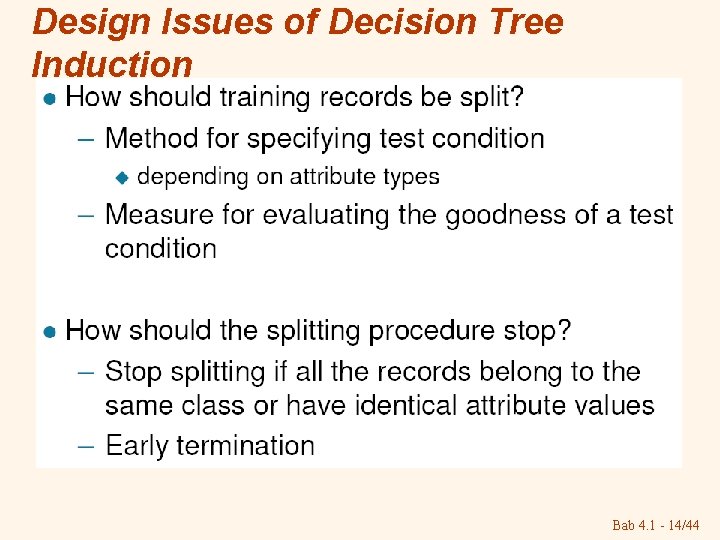
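
The recursive structure of Hunt's algorithm can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the slide's exact procedure: the function names `hunt` and `majority`, the toy records, and the rule of always splitting on the next attribute in the list are all hypothetical. A real implementation would choose the split attribute with an impurity measure.

```python
from collections import Counter


def majority(labels):
    """Most frequent class label (used when no attributes remain)."""
    return Counter(labels).most_common(1)[0][0]


def hunt(records, labels, attributes):
    """Grow a tree recursively; returns a class label (leaf) or a nested dict."""
    # Case 1: all records belong to the same class, so the node is a leaf.
    if len(set(labels)) == 1:
        return labels[0]
    # No attributes left to test: fall back to the majority class.
    if not attributes:
        return majority(labels)
    # Case 2: mixed classes, so pick a test attribute and partition the records.
    # ASSUMPTION: take the first attribute; this is a placeholder for a
    # real impurity-based selection.
    attr, rest = attributes[0], attributes[1:]
    partitions = {}
    for rec, lab in zip(records, labels):
        recs, labs = partitions.setdefault(rec[attr], ([], []))
        recs.append(rec)
        labs.append(lab)
    # Recurse on each partition (multi-way split on the attribute values).
    return {attr: {v: hunt(r, l, rest) for v, (r, l) in partitions.items()}}


# Hypothetical toy data, made up for illustration only.
records = [
    {"home_owner": "yes", "married": "no"},
    {"home_owner": "no", "married": "yes"},
    {"home_owner": "no", "married": "no"},
]
labels = ["no", "no", "yes"]
tree = hunt(records, labels, ["home_owner", "married"])
print(tree)
```

The recursion stops at pure nodes, which mirrors the idea that a node is only expanded further while its records still carry more than one class label.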

Design Issues of Decision Tree Induction

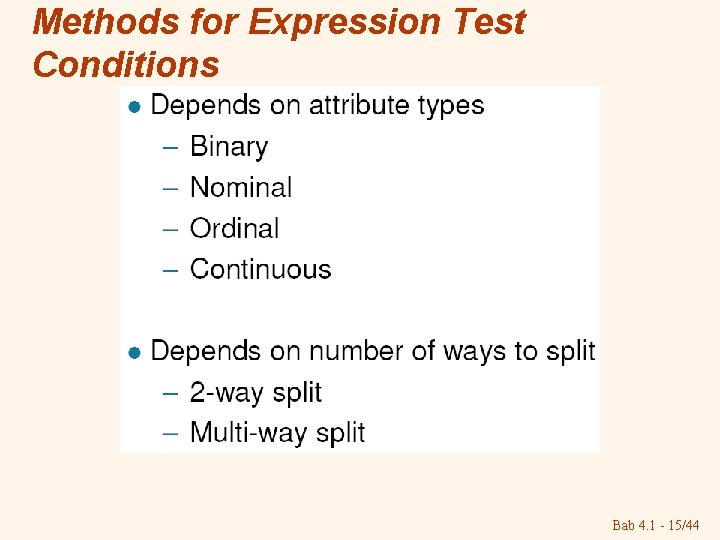

Methods for Expressing Test Conditions

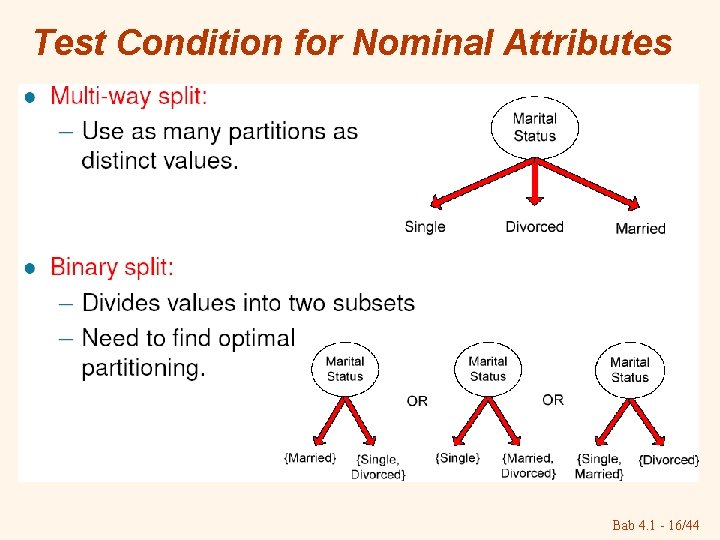

Test Condition for Nominal Attributes

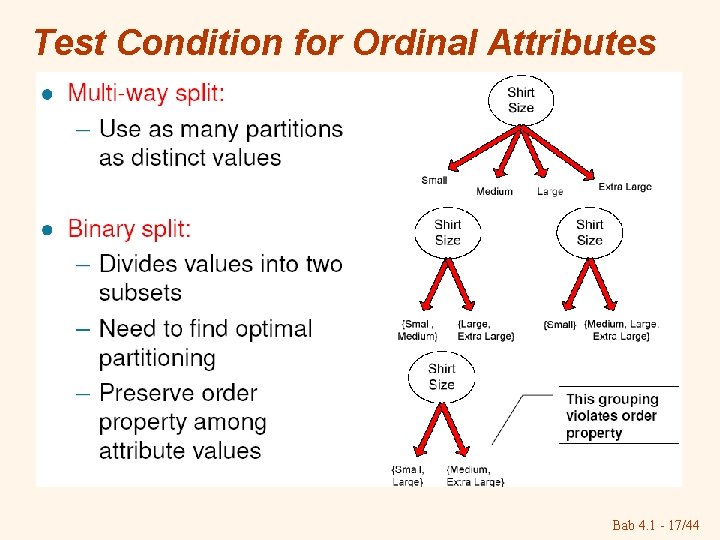

Test Condition for Ordinal Attributes

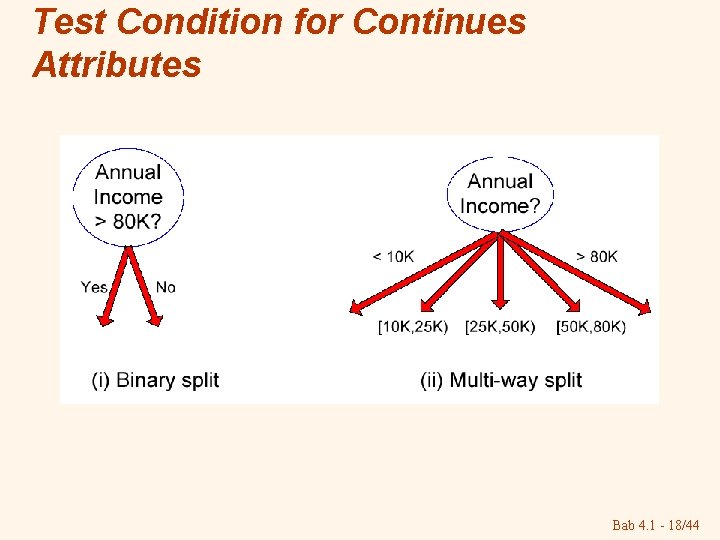

Test Condition for Continuous Attributes

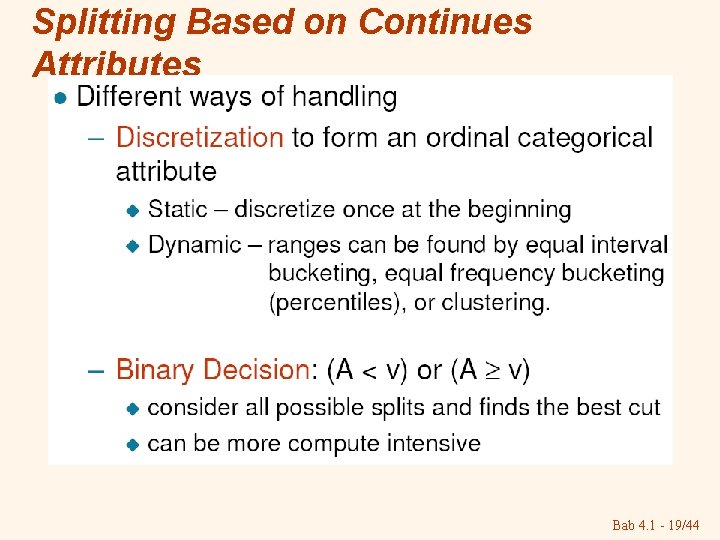

Splitting Based on Continuous Attributes

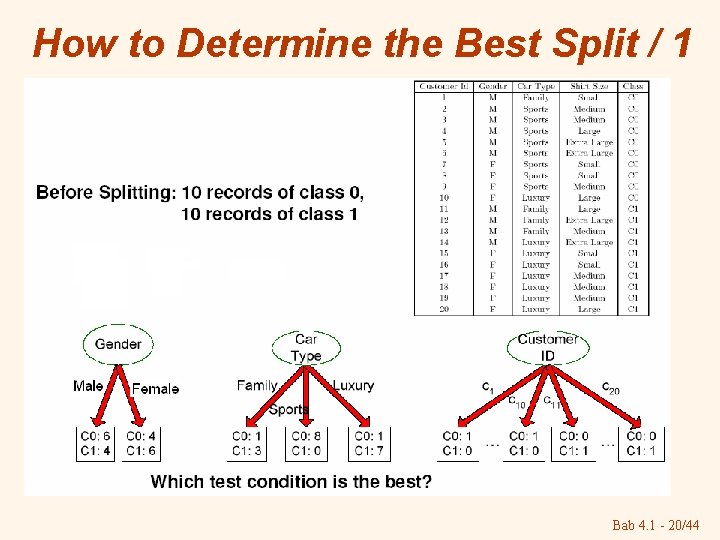

How to Determine the Best Split / 1

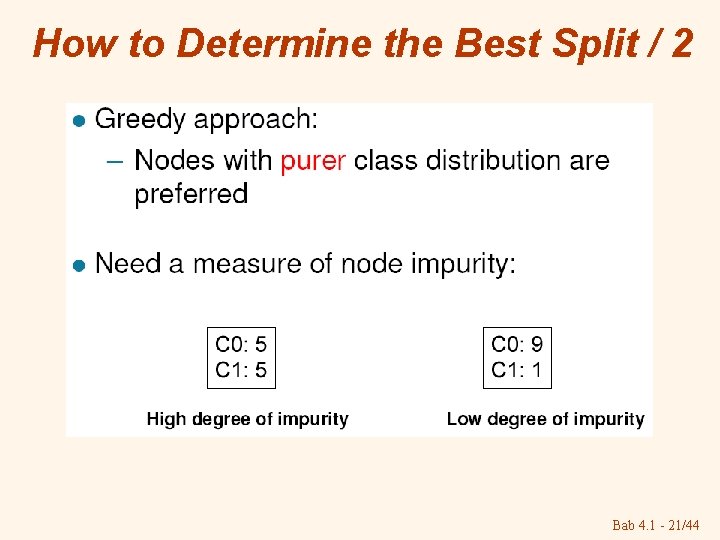

How to Determine the Best Split / 2

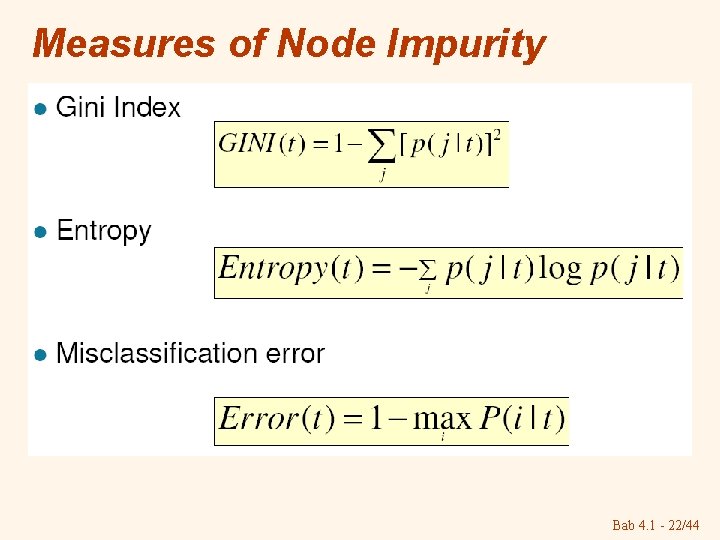

Measures of Node Impurity

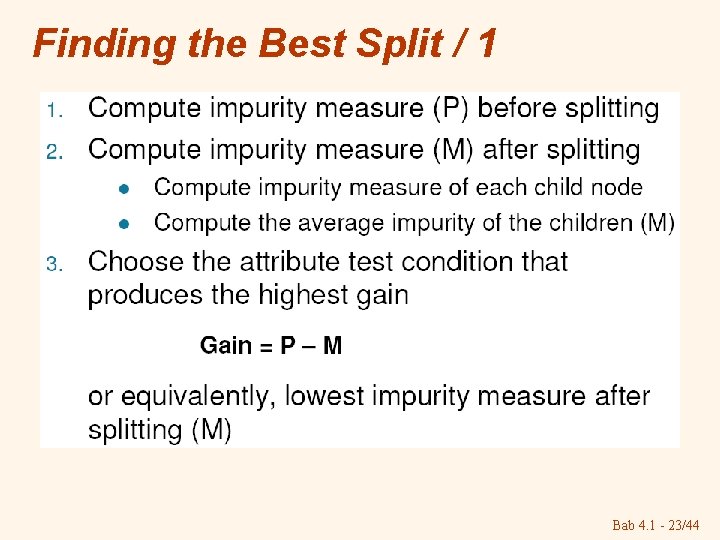

Finding the Best Split / 1

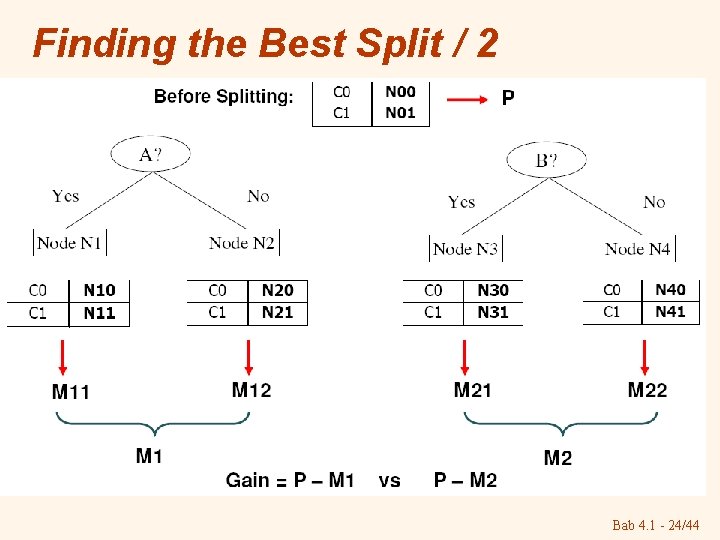

Finding the Best Split / 2

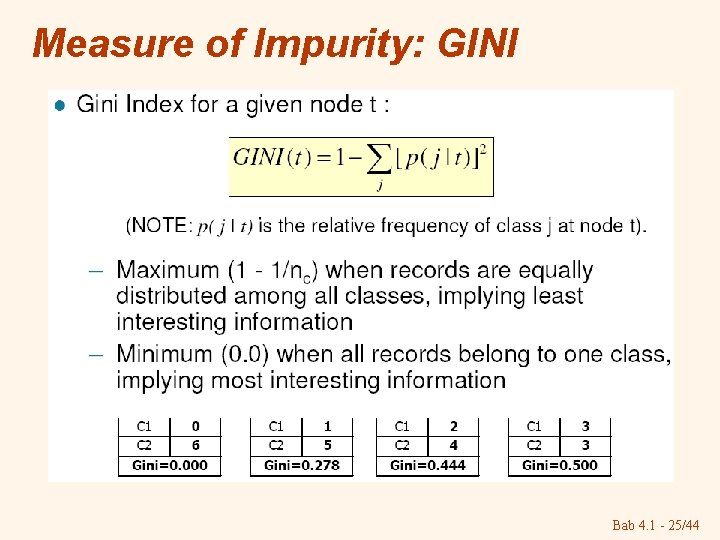

Measure of Impurity: GINI

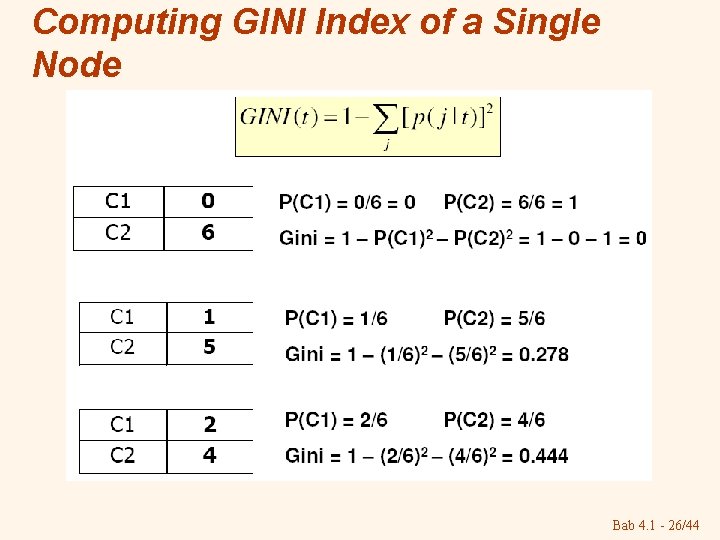

Computing GINI Index of a Single Node

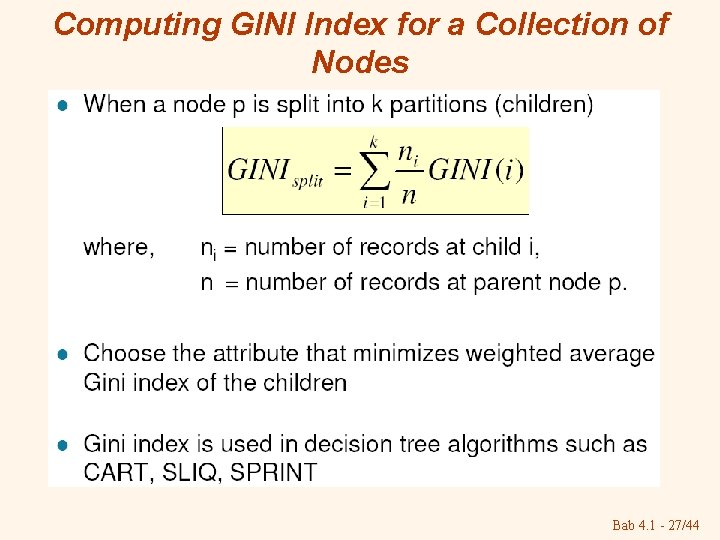
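
As a rough illustration of the single-node computation, the sketch below uses the standard definition of the Gini index, 1 minus the sum of squared class fractions at the node. The function name `gini` and the example label lists are assumptions made for this sketch and are not taken from the slide image.

```python
from collections import Counter


def gini(labels):
    """Gini index of one node: 1 - sum over classes of p(class | node)^2."""
    n = len(labels)
    if n == 0:
        return 0.0  # convention chosen here for an empty node
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


# Hypothetical class distributions at a node with six records.
pure = ["C2"] * 6                 # all records in one class
mixed = ["C1"] * 3 + ["C2"] * 3   # classes evenly mixed
print(gini(pure), gini(mixed))
```

A pure node gives the minimum value of 0, and for two classes an even mix gives the maximum value of 0.5.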

Computing GINI Index for a Collection of Nodes

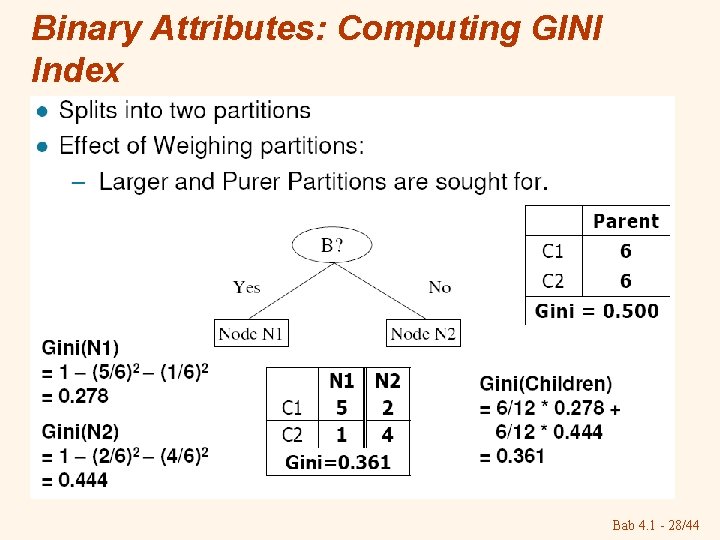

Binary Attributes: Computing GINI Index

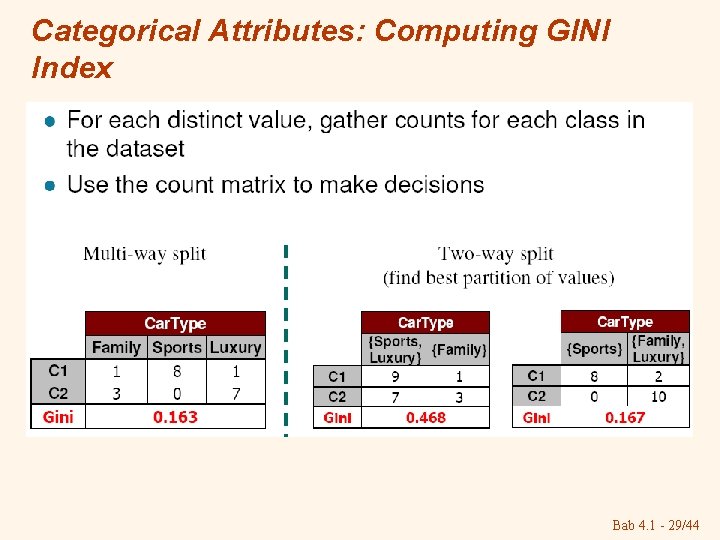

Categorical Attributes: Computing GINI Index

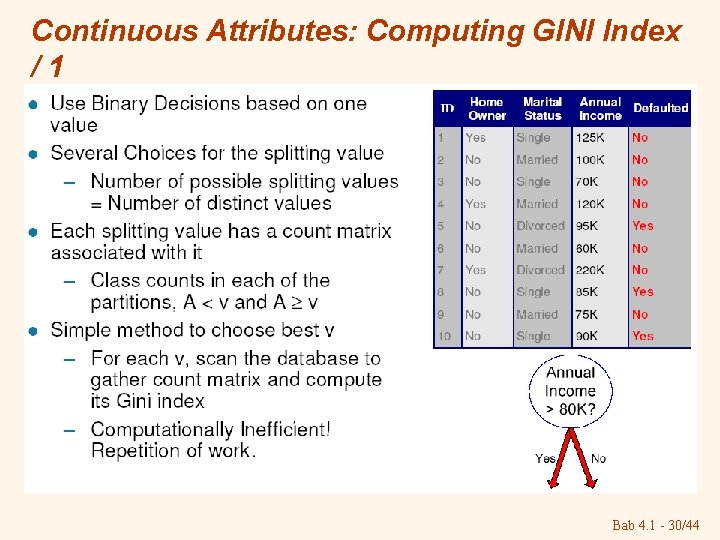

Continuous Attributes: Computing GINI Index / 1

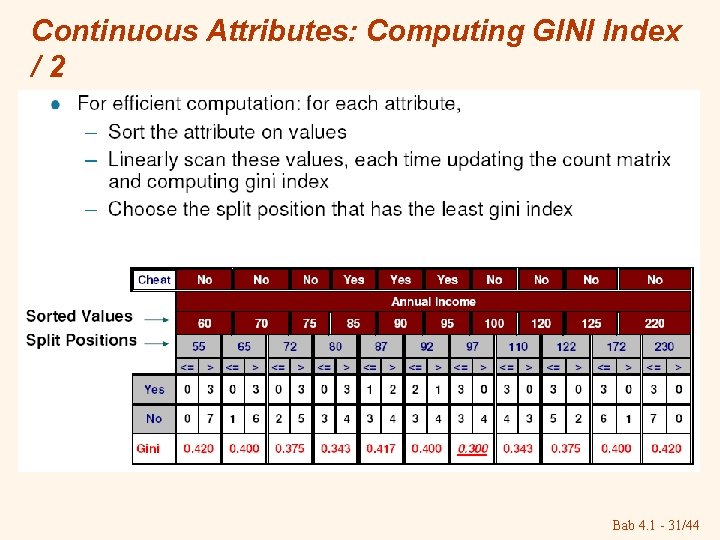

Continuous Attributes: Computing GINI Index / 2

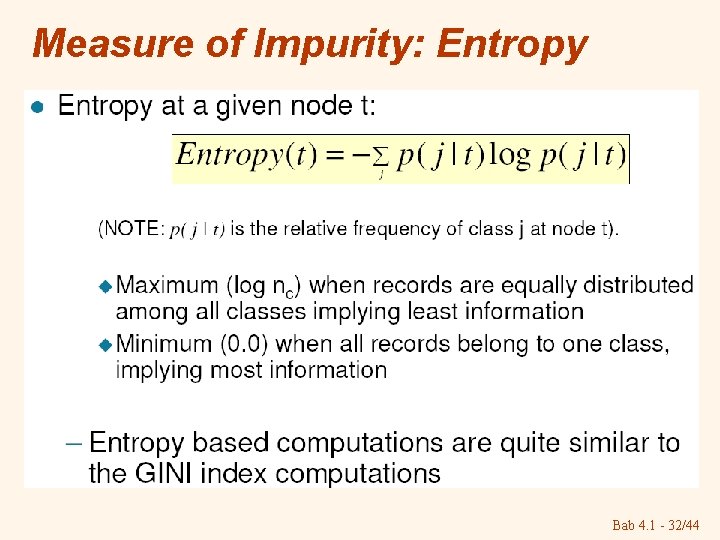
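
One common way to handle a continuous attribute is to sort its values, try the midpoint between each pair of adjacent distinct values as a threshold, and keep the threshold with the lowest weighted Gini index. The sketch below assumes that approach. The names `split_gini` and `best_threshold` and the sample values are hypothetical, and the loop recomputes class counts for every candidate instead of updating them incrementally.

```python
from collections import Counter


def gini(labels):
    """Gini index of one node."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def split_gini(left, right):
    """Weighted Gini index of a binary split (weights = partition sizes)."""
    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) for the best split 'value <= threshold'."""
    pairs = sorted(zip(values, labels))
    best_thr, best_score = None, float("inf")
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate identical values
        thr = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        score = split_gini(left, right)
        if score < best_score:
            best_thr, best_score = thr, score
    return best_thr, best_score


# Made-up values for illustration only.
values = [1, 2, 3, 10, 11, 12]
labels = ["a", "a", "a", "b", "b", "b"]
thr, score = best_threshold(values, labels)
print(thr, score)
```

For larger data sets, the class counts on each side of the threshold are usually updated in a single pass over the sorted values rather than rebuilt per candidate.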

Measure of Impurity: Entropy

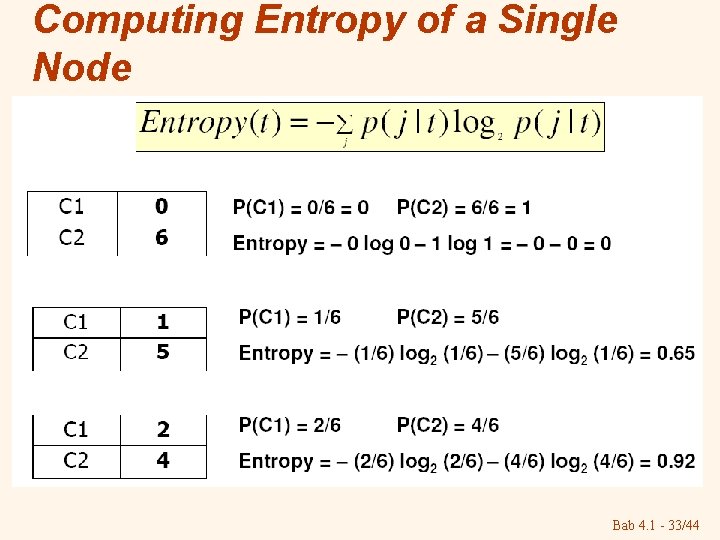

Computing Entropy of a Single Node

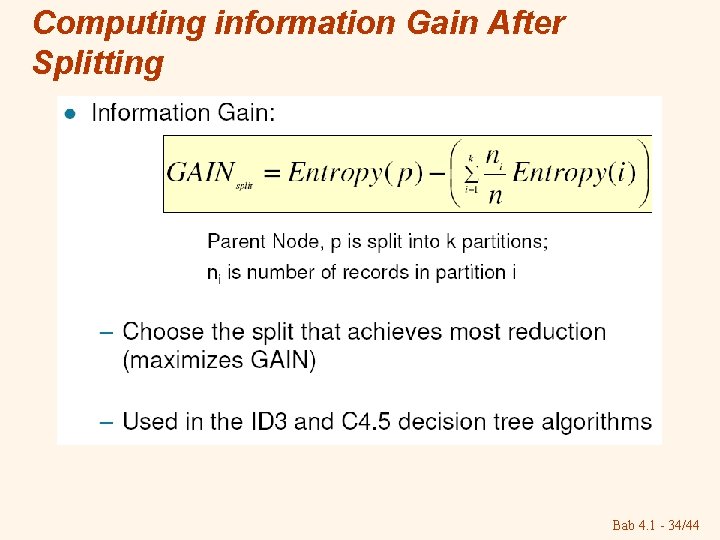

Computing Information Gain After Splitting
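
As a hedged sketch of this computation, information gain is taken here in its standard form: the entropy of the parent node minus the size-weighted average entropy of the child nodes. The function names `entropy` and `info_gain` and the example labels are assumptions for illustration.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy of one node in bits: -sum p * log2(p) over the classes present."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def info_gain(parent, children):
    """Entropy of the parent minus the weighted entropy of the children."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical node with six records split into two pure children.
parent = ["a", "a", "a", "b", "b", "b"]
children = [["a", "a", "a"], ["b", "b", "b"]]
print(entropy(parent), info_gain(parent, children))
```

A split that leaves the class mix unchanged has zero gain, while a split into pure children recovers the full entropy of the parent.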

Problems with Information Gain

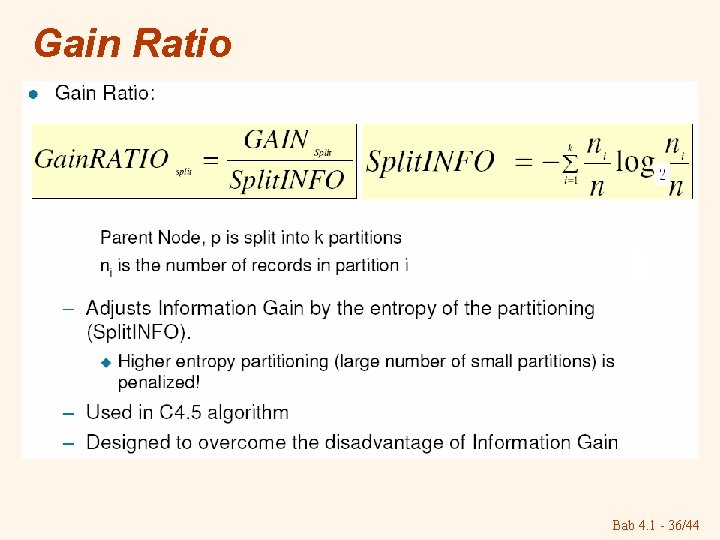

Gain Ratio

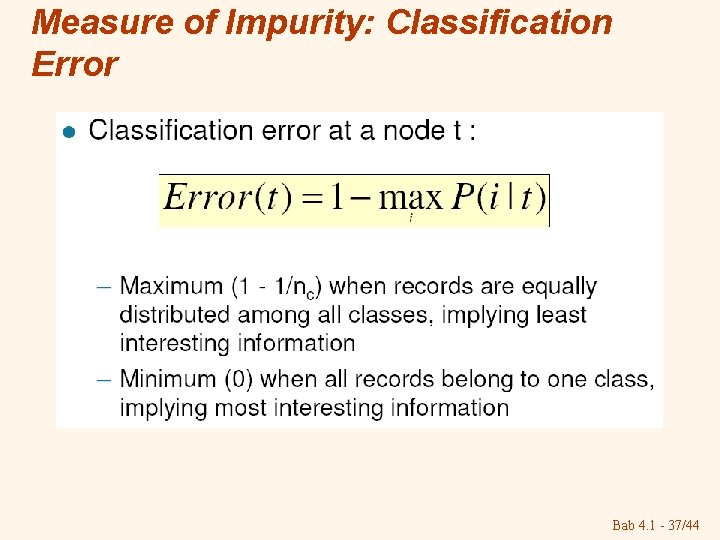

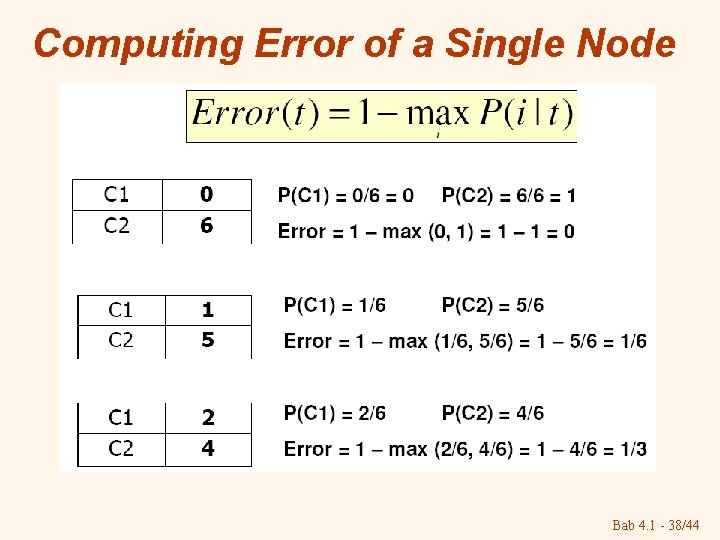
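
The sketch below illustrates gain ratio in its usual form, information gain divided by the split information (the entropy of the partition sizes). It assumes that definition. The function names and the four-record example are hypothetical and are meant only to show how a split into many small partitions is penalized.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy of one node in bits."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def gain_ratio(parent, children):
    """Information gain divided by split information."""
    n = len(parent)
    weights = [len(child) / n for child in children]
    gain = entropy(parent) - sum(w * entropy(ch) for w, ch in zip(weights, children))
    split_info = sum(-w * math.log2(w) for w in weights if w > 0)
    # Guard against a degenerate "split" that keeps everything in one partition.
    return gain / split_info if split_info > 0 else 0.0


# Made-up example: both splits yield pure children (same information gain),
# but the four-way split has higher split information.
parent = ["yes", "yes", "no", "no"]
two_way = [["yes", "yes"], ["no", "no"]]
four_way = [["yes"], ["yes"], ["no"], ["no"]]
print(gain_ratio(parent, two_way), gain_ratio(parent, four_way))
```

Both splits have the same information gain, yet the gain ratio of the four-way split is half that of the two-way split, which is the correction gain ratio is meant to provide.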

Measure of Impurity: Classification Error

Computing Error of a Single Node

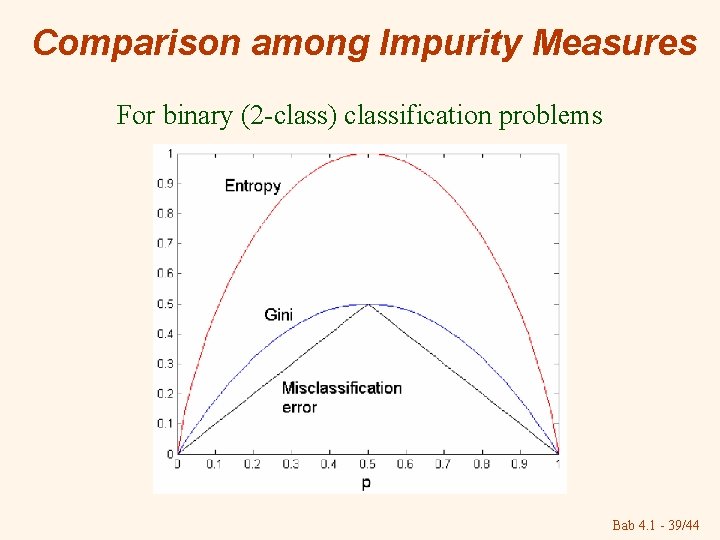

Comparison among Impurity Measures (for binary, 2-class classification problems)

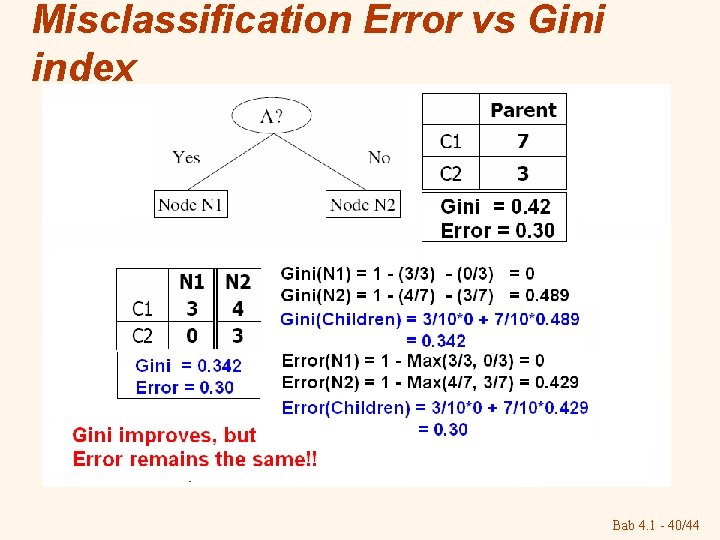
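
To make the comparison concrete, the sketch below evaluates the three impurity measures for a two-class node as a function of p, the fraction of records in one class. The formulas are the standard binary forms. The function names `gini2`, `entropy2`, and `error2` and the chosen values of p are assumptions for illustration.

```python
import math


def gini2(p):
    """Gini index for two classes with fractions p and 1 - p."""
    return 1 - p ** 2 - (1 - p) ** 2


def entropy2(p):
    """Entropy in bits for two classes; 0 * log2(0) is treated as 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def error2(p):
    """Misclassification error when predicting the majority class."""
    return 1 - max(p, 1 - p)


for p in (0.0, 0.1, 0.3, 0.5, 0.7, 0.9, 1.0):
    print(f"p={p:.1f}  gini={gini2(p):.3f}  "
          f"entropy={entropy2(p):.3f}  error={error2(p):.3f}")
```

All three measures are zero for a pure node and reach their maximum at p = 0.5, where the Gini index and the classification error are 0.5 and the entropy is 1.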

Misclassification Error vs Gini Index

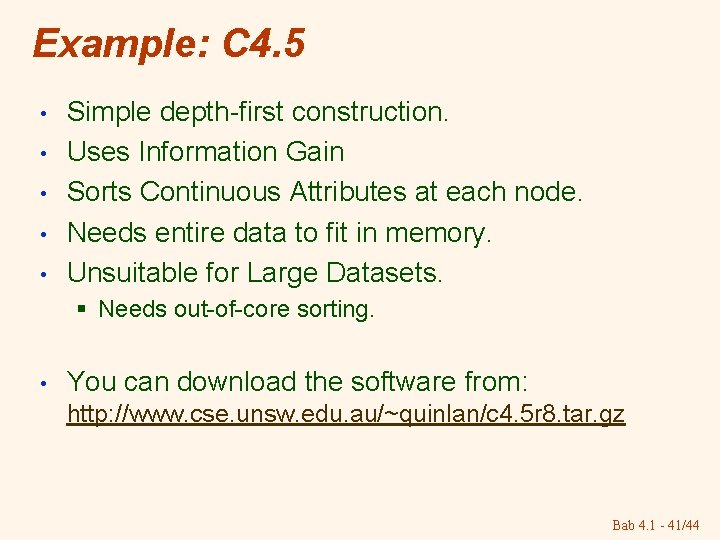

Example: C4.5

- Simple depth-first construction.
- Uses Information Gain.
- Sorts continuous attributes at each node.
- Needs entire data to fit in memory.
- Unsuitable for large datasets.
  - Needs out-of-core sorting.
- You can download the software from: http://www.cse.unsw.edu.au/~quinlan/c4.5r8.tar.gz

Scalable Decision Tree Induction / 1

- How scalable is decision tree induction?
  - Particularly suitable for small data sets
- SLIQ (EDBT’96, Mehta et al.)
  - Builds an index for each attribute; only the class list and the current attribute list reside in memory

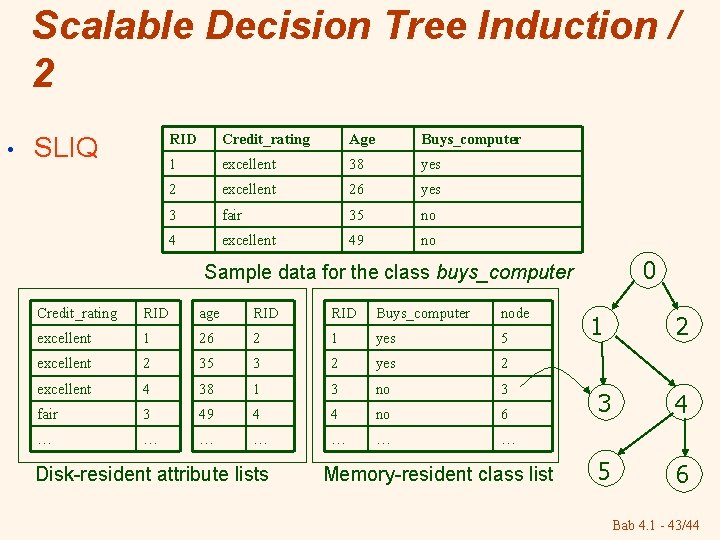

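Scalable Decision Tree Induction / 2

- SLIQ

Sample data for the class buys_computer:

| RID | Credit_rating | Age | Buys_computer |
|-----|---------------|-----|---------------|
| 1   | excellent     | 38  | yes           |
| 2   | excellent     | 26  | yes           |
| 3   | fair          | 35  | no            |
| 4   | excellent     | 49  | no            |

Disk-resident attribute lists:

| Credit_rating | RID |
|---------------|-----|
| excellent     | 1   |
| excellent     | 2   |
| excellent     | 4   |
| fair          | 3   |
| …             | …   |

| age | RID |
|-----|-----|
| 26  | 2   |
| 35  | 3   |
| 38  | 1   |
| 49  | 4   |
| …   | …   |

Memory-resident class list:

| RID | Buys_computer | node |
|-----|---------------|------|
| 1   | yes           | 5    |
| 2   | yes           | 2    |
| 3   | no            | 3    |
| 4   | no            | 6    |

Tree node labels in the accompanying figure: 0, 1, 2, 3, 4, 5, 6.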
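
The data layout on this slide can be sketched as follows: one pre-sorted list per attribute holding (value, RID) pairs, plus a single class list keyed by RID that holds the class label and the tree node the record currently belongs to. This is only an illustration of the layout, not SLIQ itself. The variable names are hypothetical, everything is held in memory, and the sketch assumes every record starts at the root node 0, whereas the slide shows node assignments from a later stage of tree growth.

```python
# Sample records from the slide: (RID, credit_rating, age, buys_computer).
records = [
    (1, "excellent", 38, "yes"),
    (2, "excellent", 26, "yes"),
    (3, "fair", 35, "no"),
    (4, "excellent", 49, "no"),
]

# Attribute lists: sorted once up front (disk-resident in SLIQ).
credit_list = sorted((credit, rid) for rid, credit, _, _ in records)
age_list = sorted((age, rid) for rid, _, age, _ in records)

# Class list: one entry per record (memory-resident in SLIQ).
# ASSUMPTION: all records start at the root, labeled node 0 here.
class_list = {rid: {"label": label, "node": 0} for rid, _, _, label in records}

# Scanning an attribute list in sorted order and looking up each RID in the
# class list gives the class labels in attribute order without re-sorting.
labels_by_age = [class_list[rid]["label"] for _, rid in age_list]
print(age_list)
print(labels_by_age)
```

Because the attribute lists only carry values and RIDs, the class labels and node assignments live in one place and can be updated there as the tree grows.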

Decision Tree Based Classification

- Advantages
  - Inexpensive to construct
  - Extremely fast at classifying unknown records
  - Easy to interpret for small-sized trees
  - Accuracy is comparable to other classification techniques for many data sets
- Practical Issues of Classification
  - Underfitting and Overfitting
  - Missing Values
  - Costs of Classification

- Slides: 44