
B. E. TECHNICAL PROJECT
Autonomous vision-based robot navigation using the artificial intelligence technique of backtracking

Project Mentor: Dr. Sachin Maheshwari, HOD, MPAE Dept.

Submitted by:
Ankit Kulshreshtha: 615/MP/11
Prachi Sharma: 304/CO/11
Priyanshi Gupta: 310/CO/11

OUTLINE
1. Problem statement
2. Background work
3. Motivation
4. Methodology
   1. Tools used
   2. Steps involved
5. Applications
6. Progress
7. References

1. Problem Statement
- Enabling a robot to search for and reach its destination from a starting location while avoiding obstacles in its path, in an unfamiliar arena.


2. Background work

Previously used sensors [1]: ACTIVE SENSORS

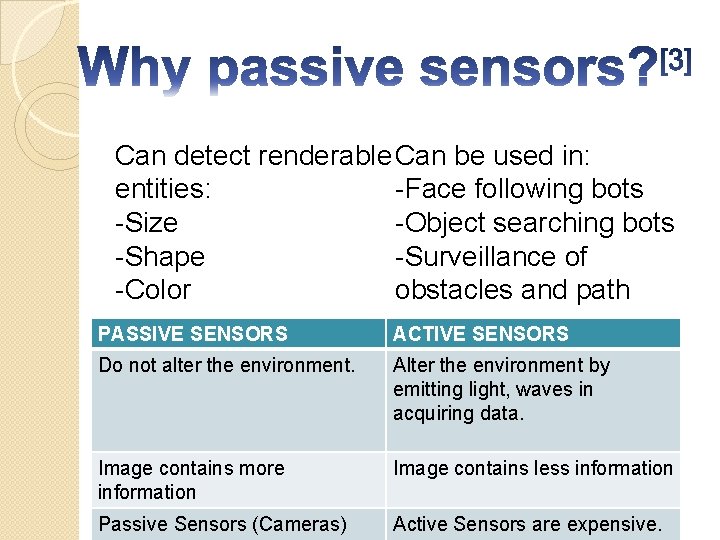

We use: PASSIVE SENSORS

Passive sensors (cameras) can detect renderable entities such as:
- Size
- Shape
- Color

They can be used in:
- Face-following bots
- Object-searching bots
- Surveillance of obstacles and path

| PASSIVE SENSORS (cameras) | ACTIVE SENSORS |
|---|---|
| Do not alter the environment. | Alter the environment by emitting light or waves while acquiring data. |
| Image contains more information. | Data contains less information. |
| | Are expensive. |


Algorithms used previously:
- A* algorithm [4]: works only for small graphs and restricted, quantized moves.
- Artificial neural network [2]: only works for simple graphs; gets trapped in complex graphs.
- Genetic algorithms [5]: computationally expensive.


We use: Backtracking [6]
- Can be implemented for complex graphs.
- Finds most (or all) possible solutions to the problem, which can be used for map creation or surveillance of the arena.
- Most relevant to the problem at hand.
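To illustrate how backtracking finds most (or all) solutions, here is a minimal sketch assuming the arena has already been reduced to a grid of free (`.`) and blocked (`#`) cells. The grid encoding and the name `all_paths` are illustrative assumptions, not the project's code. Each step chooses a neighboring cell, explores it recursively, and undoes the choice on return.

```python
from typing import List, Tuple

Cell = Tuple[int, int]


def all_paths(grid: List[str], start: Cell, goal: Cell) -> List[List[Cell]]:
    """Enumerate every simple path from start to goal by backtracking.

    grid: rows of '.' (free) and '#' (obstacle); this encoding is assumed.
    """
    rows, cols = len(grid), len(grid[0])
    paths: List[List[Cell]] = []
    path: List[Cell] = [start]
    visited = {start}

    def explore(cell: Cell) -> None:
        if cell == goal:
            paths.append(list(path))  # store a copy of this complete solution
            return
        r, c = cell
        for dr, dc in ((-1, 0), (0, 1), (1, 0), (0, -1)):  # up, right, down, left
            nr, nc = r + dr, c + dc
            nxt = (nr, nc)
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and nxt not in visited:
                visited.add(nxt)  # choose
                path.append(nxt)
                explore(nxt)  # explore recursively
                path.pop()  # un-choose: backtrack
                visited.remove(nxt)

    if grid[start[0]][start[1]] != "#":
        explore(start)
    return paths


if __name__ == "__main__":
    arena = ["...",
             ".#.",
             "..."]
    found = all_paths(arena, (0, 0), (2, 2))
    print(len(found), "paths; shortest:", min(found, key=len))
```

Keeping every solution (rather than stopping at the first) is what makes the result reusable for map creation; the number of simple paths can grow very quickly with arena size, so a practical version would likely cap the search.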

3. Motivation
- A lot of work has been done on autonomous robot navigation using active sensors, but navigation based on computer vision using the backtracking technique is still in its nascent stages.

Whom do we aim to serve?
- A visually impaired student on a powered wheelchair
- The increasing need for assistive technology (intelligent wheelchairs)
- Building a small-scale prototype of autonomous robot navigation

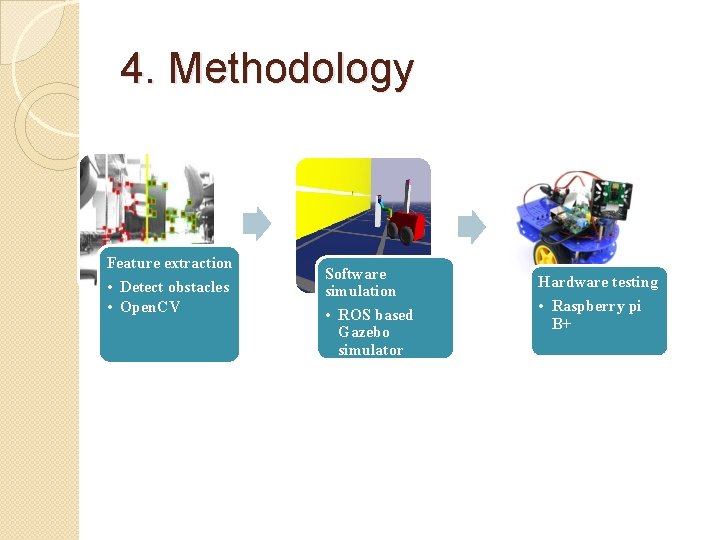

4. Methodology
- Feature extraction: detect obstacles (OpenCV)
- Software simulation: ROS-based Gazebo simulator
- Hardware testing: Raspberry Pi B+

Tools used

Outline: Software


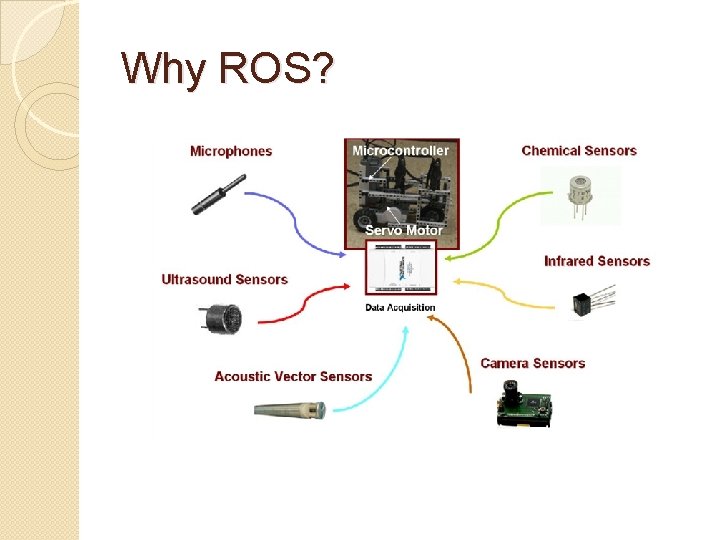

Why ROS [7]?
- Robotics engineers keep reinventing the wheel by writing software for standard equipment again and again.
- ROS helps avoid this problem by standardizing the way code is written for different platforms.
- It also helps people who wish to bypass hardware intricacies!



OpenCV [8]:
- A library of programming functions aimed at real-time computer vision.

Capabilities [9]:


Gazebo [10]:
- A well-designed simulator to rapidly test algorithms, design robots, and perform regression testing using realistic scenarios.

Why Gazebo?

Outline: Hardware
- Robot chassis
- Mini Driver
- Raspberry Pi Model B+
- Power bank


Outline: Hardware [11]


Raspberry Pi B+ [12]
- A credit-card-sized minicomputer used as the robot's main controller.


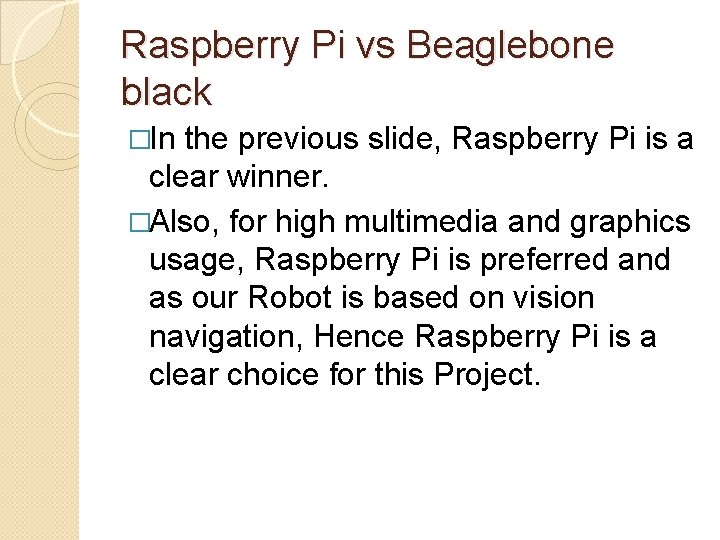

Raspberry Pi vs BeagleBone Black [13]

Raspberry Pi vs BeagleBone Black
- In the comparison above, Raspberry Pi is the clear winner.
- Raspberry Pi is also preferred for multimedia- and graphics-heavy workloads. Since our robot relies on vision-based navigation, Raspberry Pi is the clear choice for this project.

Steps involved:


Feature extraction [14]:
1. Image segmentation
   - Gaussian smoothing filter
   - Sobel edge detector
   - Adaptive thresholding
   - Thinning operator
   (Figure: 160 x 120 RGB image → grayscale → Gaussian filter → Sobel detector → thresholding → thinning)
2. Feature extraction and recognition
   - Hough transform
   - Histogram-based intensity analysis

Final result (example): Corridor: YES, Wall: NO, Obstacle: NO
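The segmentation chain can be sketched in dependency-free Python so that each stage is visible; OpenCV provides optimized counterparts (`cv2.cvtColor`, `cv2.GaussianBlur`, `cv2.Sobel`, `cv2.adaptiveThreshold`). The 3x3 kernel sizes, the local-mean threshold rule, and names such as `segment` and `convolve3` are assumptions for illustration, and the thinning step is omitted for brevity.

```python
from typing import List, Sequence, Tuple

Gray = List[List[float]]

# 3x3 kernels (assumed sizes): Gaussian smoothing and Sobel derivatives
GAUSS = [[1 / 16, 2 / 16, 1 / 16], [2 / 16, 4 / 16, 2 / 16], [1 / 16, 2 / 16, 1 / 16]]
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def to_gray(rgb: Sequence[Sequence[Tuple[int, int, int]]]) -> Gray:
    """RGB -> gray with the ITU-R BT.601 luma weights (also used by OpenCV)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def convolve3(img: Gray, k: List[List[float]]) -> Gray:
    """Apply a 3x3 kernel (as correlation), replicating pixels at the border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for j in range(3):
                for i in range(3):
                    yy = min(max(y + j - 1, 0), h - 1)
                    xx = min(max(x + i - 1, 0), w - 1)
                    acc += k[j][i] * img[yy][xx]
            out[y][x] = acc
    return out


def sobel_magnitude(img: Gray) -> Gray:
    """Gradient magnitude sqrt(gx^2 + gy^2) from the two Sobel responses."""
    gx, gy = convolve3(img, SOBEL_X), convolve3(img, SOBEL_Y)
    return [[(a * a + b * b) ** 0.5 for a, b in zip(rx, ry)] for rx, ry in zip(gx, gy)]


def adaptive_threshold(img: Gray, radius: int = 2, c: float = 0.0) -> List[List[int]]:
    """Mark a pixel 1 if it exceeds its local (2r+1)x(2r+1) mean by more than c."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[yy][xx]
                      for yy in range(max(0, y - radius), min(h, y + radius + 1))
                      for xx in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = 1 if img[y][x] > sum(window) / len(window) + c else 0
    return out


def segment(rgb) -> List[List[int]]:
    """Grayscale -> Gaussian -> Sobel -> thresholding (thinning omitted)."""
    return adaptive_threshold(sobel_magnitude(convolve3(to_gray(rgb), GAUSS)))
```

Using a local mean instead of one global threshold keeps edges detectable when lighting varies across the frame. For a full 160 x 120 frame this pure-Python version is slow; on the Raspberry Pi the OpenCV calls would be the practical choice.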

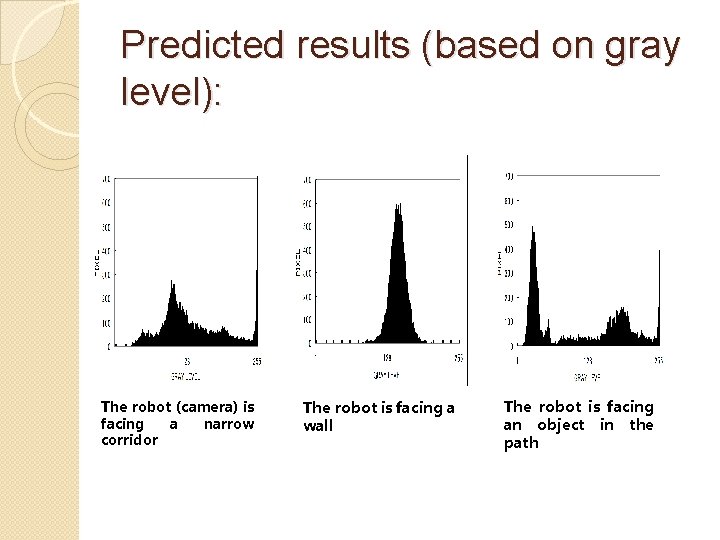

Predicted results (based on gray level):
- The robot (camera) is facing a narrow corridor.
- The robot is facing a wall.
- The robot is facing an object in its path.
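Histogram-based intensity analysis can be illustrated with a toy classifier that produces the Corridor/Wall/Obstacle flags from gray levels. The rules below (a wall fills most of the frame with one gray level; an obstacle makes the center of the lower half differ from the floor seen at the sides), the 8-bin histogram and the 0.8 threshold are hypothetical choices for illustration, not the project's tuned rules.

```python
from collections import Counter
from typing import Dict, List

Gray = List[List[float]]


def histogram(pixels: List[float], bins: int = 8) -> Counter:
    """Count gray levels (0-255) into `bins` equal-width bins."""
    width = 256 / bins
    return Counter(min(int(p // width), bins - 1) for p in pixels)


def classify(gray: Gray, uniform_share: float = 0.8) -> Dict[str, bool]:
    """Toy Corridor/Wall/Obstacle flags from gray-level histograms (hypothetical rules)."""
    h, w = len(gray), len(gray[0])
    everything = [p for row in gray for p in row]
    # Hypothetical rule 1: a wall fills most of the view with one gray level.
    wall = max(histogram(everything).values()) / len(everything) >= uniform_share
    # Hypothetical rule 2: an obstacle makes the center third of the lower half
    # differ in dominant gray level from the floor seen at the sides.
    lower, third = gray[h // 2:], w // 3
    center = [p for row in lower for p in row[third:w - third]]
    sides = [p for row in lower for p in row[:third] + row[w - third:]]
    center_bin = histogram(center).most_common(1)[0][0]
    side_bin = histogram(sides).most_common(1)[0][0]
    obstacle = not wall and center_bin != side_bin
    return {"Corridor": not wall and not obstacle, "Wall": wall, "Obstacle": obstacle}
```

The input is assumed to be a grayscale frame as a list of rows; real thresholds would have to be calibrated against the arena's lighting and floor color.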


Obstacle avoidance algorithm [15]

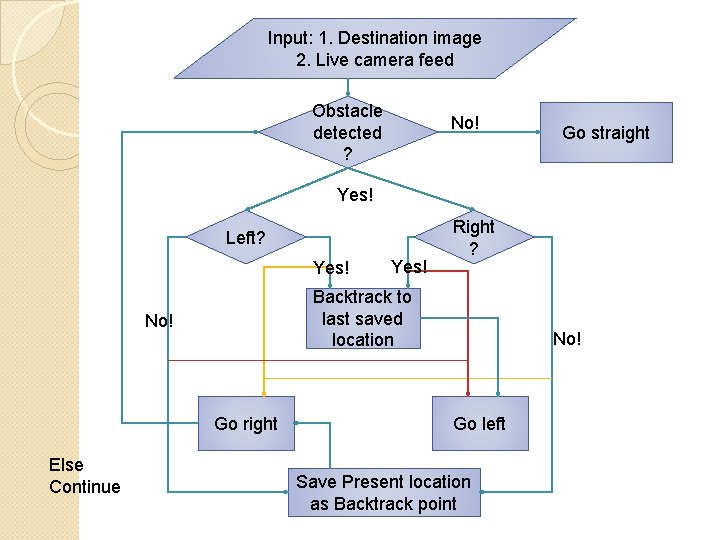

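Input:
1. Destination image
2. Live camera feed

Flowchart:
- Obstacle detected? No: go straight.
- Yes: check the left and right sides.
  - Left blocked and right blocked: backtrack to the last saved location.
  - Left blocked, right open: go right.
  - Right blocked, left open: go left.
  - Both open: save the present location as a backtrack point, then continue.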
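The flowchart can be read as a single decision step. The sketch below encodes one plausible reading of it: the boolean inputs stand in for checks on the live camera feed, and the choice to try left first at a junction (saving the untried right branch together with the backtrack point) is an assumption, as are all names.

```python
from typing import List, Tuple

Pose = Tuple[int, int]
Saved = Tuple[Pose, str]  # (backtrack point, branch not yet tried there)


def decide(obstacle_ahead: bool, left_open: bool, right_open: bool,
           pose: Pose, backtrack_points: List[Saved]) -> str:
    """One pass through the obstacle-avoidance flowchart (one plausible reading).

    The booleans stand in for checks on the live camera feed; `pose` is the
    robot's current location in some assumed map frame.
    """
    if not obstacle_ahead:
        return "go straight"
    if left_open and right_open:
        # Junction: save the present location as a backtrack point,
        # together with the branch we are not taking now (assumed: right).
        backtrack_points.append((pose, "go right"))
        return "go left"
    if right_open:
        return "go right"
    if left_open:
        return "go left"
    # Dead end: return to the most recently saved location and try its other branch.
    if backtrack_points:
        saved_pose, untried = backtrack_points.pop()
        return f"backtrack to {saved_pose}, then {untried}"
    return "stop: nothing left to try"
```

Storing the untried branch with each saved location keeps the robot from repeating the same choice after it backtracks to that point; the saved points behave as a stack, so the most recent junction is revisited first.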


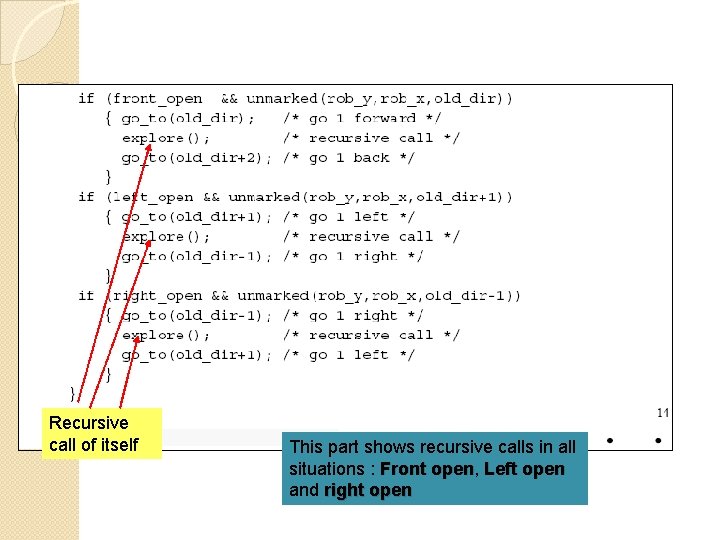

Algorithm: Recursion [16]
- Explore calls itself recursively.
- Mark the x and y position.
- Check the situations (front open, etc.).
- Set the flags (front open, etc.).
- Use the flags (front open, etc.).
- PSD is the signal from the camera module.

Recursive call of itself: this part shows the recursive calls in all situations: front open, left open and right open.
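The recursive structure above (mark the position, set the front/left/right flags, recurse into each open direction, then drive back) can be sketched on a simulated grid arena. Here `is_open` is a hypothetical stand-in for the camera (PSD) check, and the grid, the N/E/S/W heading encoding and the move log are illustrative assumptions.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # N, E, S, W as (d_row, d_col)


def explore_arena(grid: List[str], start: Cell, heading: int = 0) -> Tuple[Set[Cell], List[str]]:
    """Recursively explore a grid arena ('#' = blocked) and log every move."""
    rows, cols = len(grid), len(grid[0])
    visited: Set[Cell] = set()
    moves: List[str] = []

    def is_open(r: int, c: int, h: int) -> bool:
        # Hypothetical stand-in for the camera (PSD) check in direction h.
        dr, dc = HEADINGS[h]
        nr, nc = r + dr, c + dc
        return 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#"

    def explore(r: int, c: int, h: int) -> None:
        visited.add((r, c))  # mark x and y position
        left_h, right_h = (h + 3) % 4, (h + 1) % 4
        # Check the situations and set the flags.
        front_open = is_open(r, c, h)
        left_open = is_open(r, c, left_h)
        right_open = is_open(r, c, right_h)
        # Use the flags: recurse into every open, unvisited direction.
        for flag, new_h, name in ((front_open, h, "front"),
                                  (left_open, left_h, "left"),
                                  (right_open, right_h, "right")):
            dr, dc = HEADINGS[new_h]
            nxt = (r + dr, c + dc)
            if flag and nxt not in visited:
                moves.append(f"{name} -> {nxt}")
                explore(nxt[0], nxt[1], new_h)  # recursive call of itself
                moves.append(f"back -> {(r, c)}")  # backtrack to this cell

    explore(start[0], start[1], heading)
    return visited, moves
```

Every "back" entry is a point where a branch has been fully explored and the robot retraces its step. This simplified version ignores the robot's heading on the way back and never checks the cell directly behind the start position.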

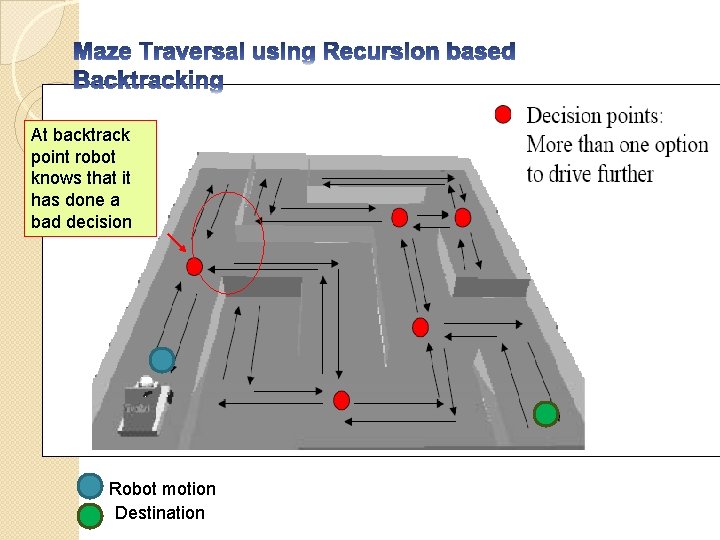

At the backtrack point, the robot knows that it has made a bad decision.
(Figure labels: robot motion, destination.)

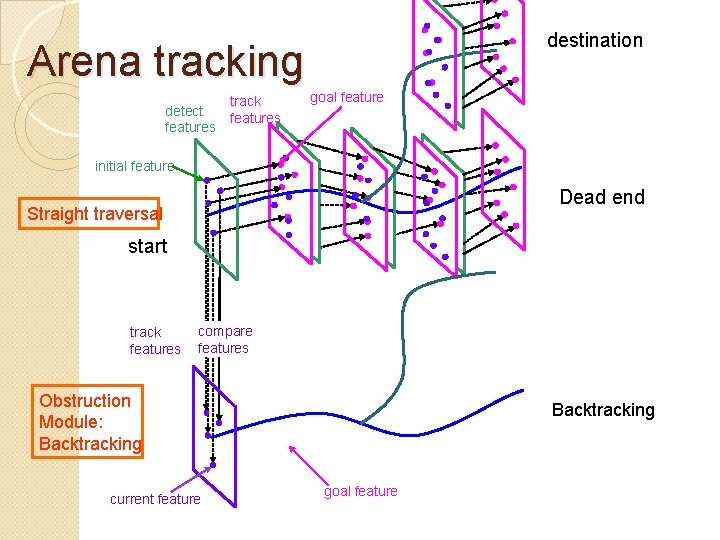

Figure: Arena tracking. Labels: start, initial feature, detect features, track features, compare features (current feature vs. goal feature), straight traversal, obstruction, dead end, backtracking module, destination.
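The "compare features" step needs some measure of how close the current view is to the goal (destination) image. As a simple stand-in, the sketch below swaps in a normalized gray-level histogram as the feature and histogram intersection as the comparison; the 16 bins, the 0.9 threshold and all function names are hypothetical.

```python
from typing import List

Gray = List[List[float]]


def gray_histogram(gray: Gray, bins: int = 16) -> List[float]:
    """Normalized gray-level histogram (0-255), used here as a stand-in feature."""
    counts = [0] * bins
    for row in gray:
        for p in row:
            counts[min(int(p * bins // 256), bins - 1)] += 1
    total = sum(counts)
    return [n / total for n in counts]


def similarity(a: List[float], b: List[float]) -> float:
    """Histogram intersection: 1.0 for identical histograms, 0.0 for disjoint ones."""
    return sum(min(x, y) for x, y in zip(a, b))


def reached_goal(current: Gray, goal: Gray, threshold: float = 0.9) -> bool:
    """Compare the current feature with the goal feature (hypothetical threshold)."""
    return similarity(gray_histogram(current), gray_histogram(goal)) >= threshold
```

A histogram ignores spatial layout, so two different scenes with similar brightness distributions would match; a practical system would likely use richer, position-aware features.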

5. Applications
- Autonomous vehicular systems
- Assisting people with motor disabilities by providing automated navigation
- Security systems, and operation in areas with hazardous chemicals
- Fire rescue systems

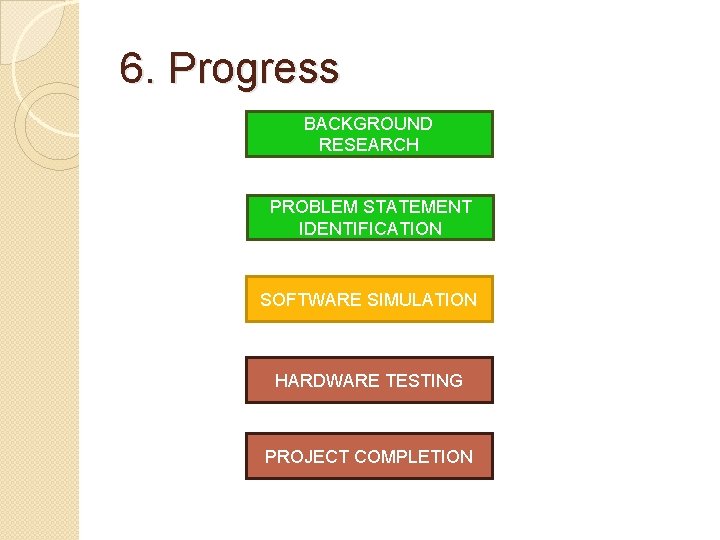

6. Progress
Background research → Problem statement identification → Software simulation → Hardware testing → Project completion


7. References
[1] Edward Y. C. Huang. Semi-Autonomous Vision-Based Navigation System for a Mobile Robotic Vehicle. Master's thesis, Massachusetts Institute of Technology, June 2003.
[2] Jefferson R. Souza, Gustavo Pessin, Fernando S. Osório, Denis F. Wolf. Vision-Based Autonomous Navigation Using Supervised Learning Techniques. University of São Paulo, 2011.
[3] Amitava Chatterjee, Anjan Rakshit, N. Nirmal Singh. Vision Based Autonomous Robot Navigation. Springer, 2013.
[4] Introduction to A*. http://theory.stanford.edu/~amitp/GameProgramming/AStarComparison.html
[5] Aditia Hermanu, Theodore W. Manikas, Kaveh, Roger L. Wainwright. Autonomous Robot Navigation Using a Genetic Algorithm with an Efficient Genotype Structure. University of Tulsa.
[6] H. El-Hussieny, S. F. M. Assal, M. Abdellatif. Improved Backtracking Algorithm for Efficient Sensor-Based Random Tree Exploration. International Conference on Communication Systems and Networks (CICSyN), June 2013.
[7] ROS.org. http://www.ros.org
[8] OpenCV. http://opencv.org/


[9] Eric T. Baumgartner, Steven B. Skaar. An Autonomous Vision-Based Mobile Robot. IEEE Transactions on Automatic Control, vol. 39, no. 3, March 1994.
[10] Gazebo. http://gazebosim.org
[11] Programming a Raspberry Pi Robot Using Python and OpenCV. http://blog.dawnrobotics.co.uk/2014/06/programming-raspberry-pi-robot-using-python-opencv/
[12] Raspberry Pi Model B+ | Raspberry Pi. http://www.raspberrypi.org/products/model-b-plus/
[13] Raspberry Pi, Beaglebone Black, Intel Edison – Benchmarked. http://www.davidhunt.ie/raspberry-pi-beaglebone-black-intel-edison-benchmarked/
[14] Mark Nixon. Feature Extraction & Image Processing. Academic Press, 2008.
[15] J. Borenstein, Y. Koren. The Vector Field Histogram: Fast Obstacle Avoidance for Mobile Robots. IEEE Transactions on Robotics and Automation, vol. 7, no. 3, June 1991.
[16] R. Siegwart, I. Nourbakhsh. http://web.eecs.utk.edu/~leparker/Courses/CS 594 fall 08/Lectures/Oct-21-Obstacle-Avoidance-I.pdf
