Azure Databricks for the Traditional DBA Rodney Landrum

Azure Databricks for the Traditional DBA Rodney Landrum Boston SQL Saturday BI Edition 2019

About Me(2008 – 2016) MVP Data Platform Senior Database/BI Consultant with Ntirety Author of SQL Server Tacklebox et al Not a Data Scientist Does not understand Dev. Ops Has a redgate tattoo WFA rodneylandrum@Hotmail. com

Session Goals • Relay the challenges and successes of deploying two real world Azure Databricks projects • Provide consolidated information on what the Traditional DBA needs to know to successfully deploy and manage Azure Databricks

The Traditional DBA • • • Responsible for company’s data assets Comfortable managing multiple SQL Server instances Does not shy away from long hours Works well with other teams Gets assigned technologies with the word “Data” in it like Data Factory and Data. Bricks

What the DBA Needs To Know For a Databricks Project • • How to use Google or Bing Very basic R and Python How to move about in Azure Portal How to interpret and resolve a crazy new world of syntax errors Who in your network you should contact when you hit a data brick wall What you can pawn off on the data scientists and what you have to do yourself How to conceal your excitement when some piece of code you wrote in a language you do not know actually works

Specific Challenges and Requirements • • Migrate 2000+ lines of R code to Azure Databricks Notebook from Rstudio Assign role-based user access to Databricks notebooks Use Azure Key Vault to avoid tokens and passwords in code Load R libraries Connect to SQL and Blob storage from Notebook Pass parameters to a Notebook Orchestrate Notebook run and data load tasks via Data Factory

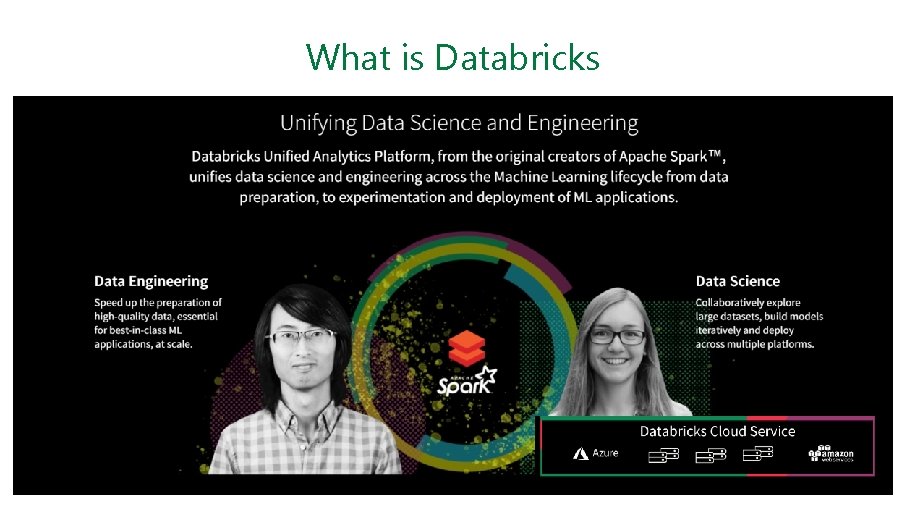

What is Databricks

Who Uses Databricks

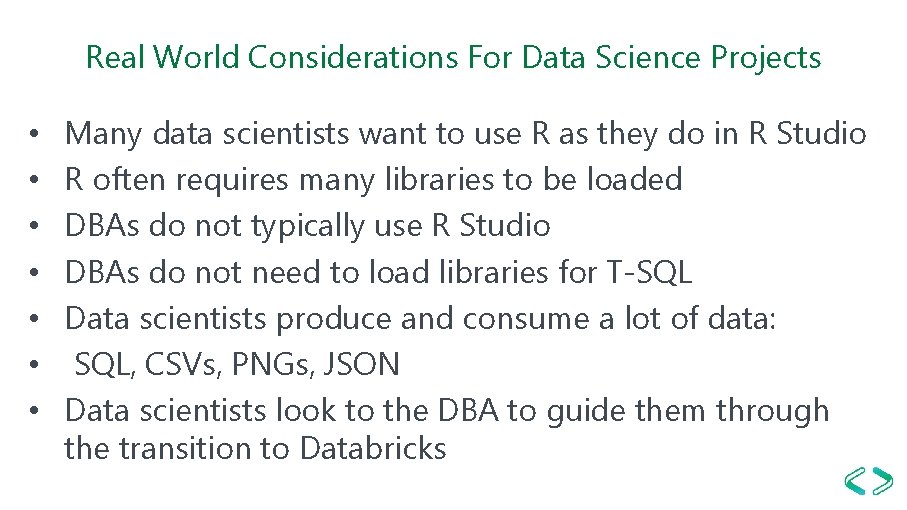

Real World Considerations For Data Science Projects • • Many data scientists want to use R as they do in R Studio R often requires many libraries to be loaded DBAs do not typically use R Studio DBAs do not need to load libraries for T-SQL Data scientists produce and consume a lot of data: SQL, CSVs, PNGs, JSON Data scientists look to the DBA to guide them through the transition to Databricks

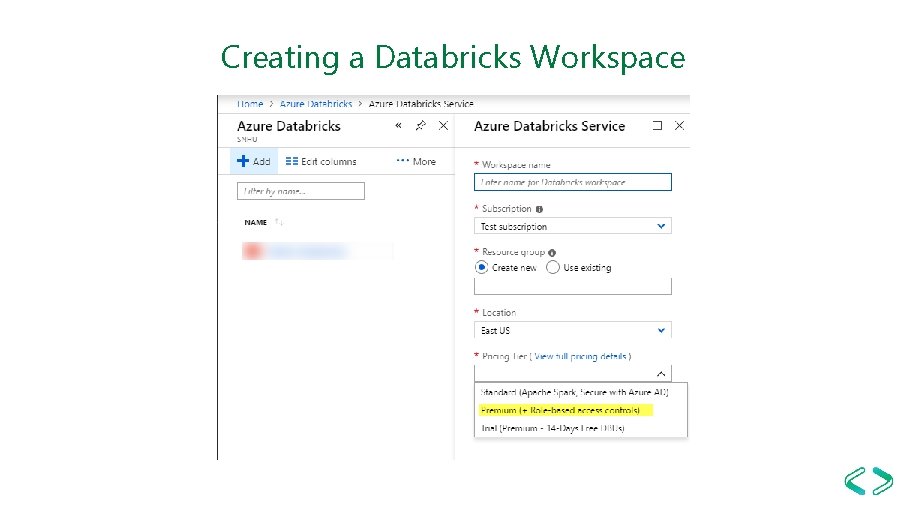

Creating a Databricks Workspace

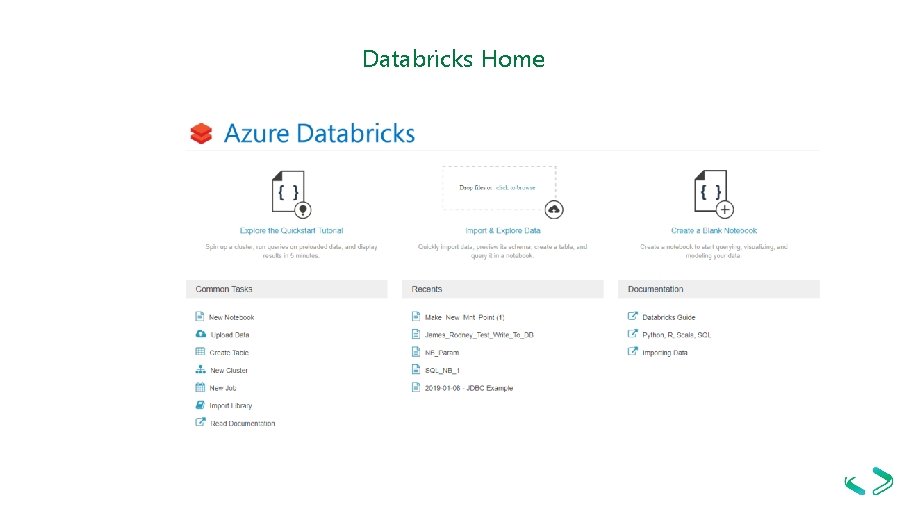

Databricks Home

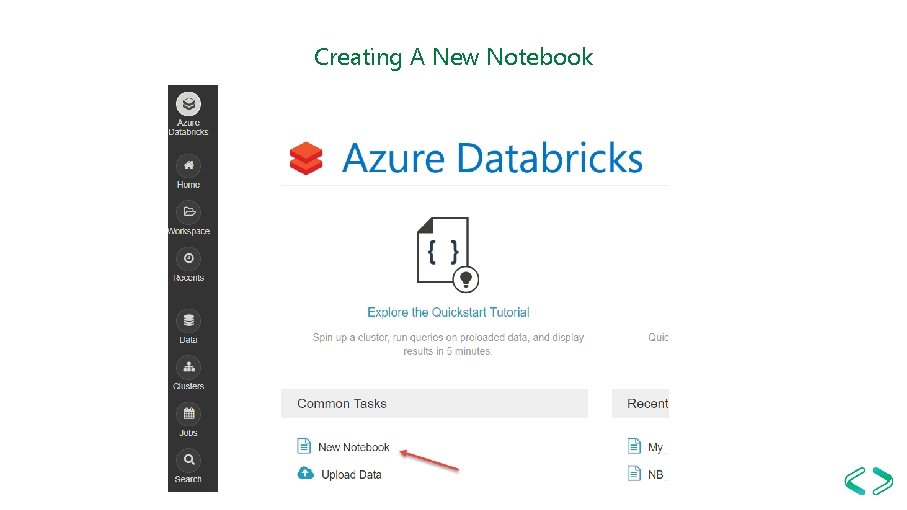

Creating A New Notebook

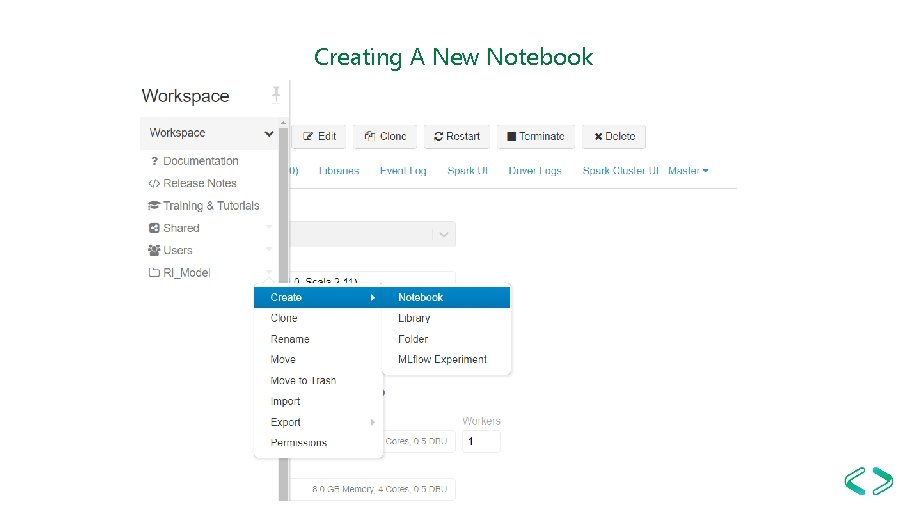

Creating A New Notebook

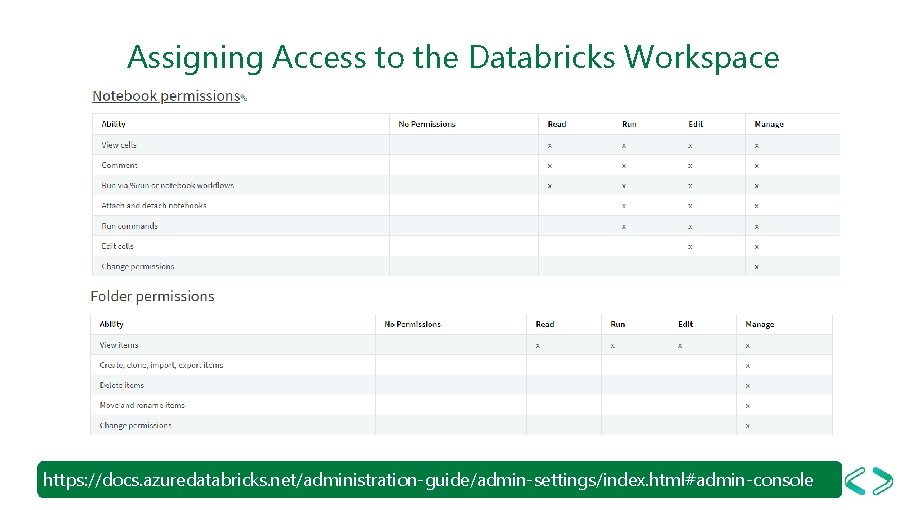

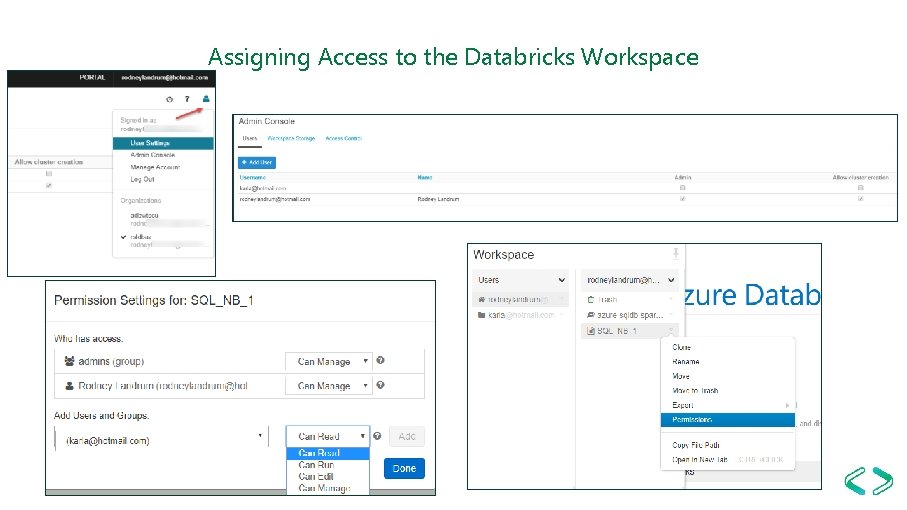

Assigning Access to the Databricks Workspace https: //docs. azuredatabricks. net/administration-guide/admin-settings/index. html#admin-console

Assigning Access to the Databricks Workspace

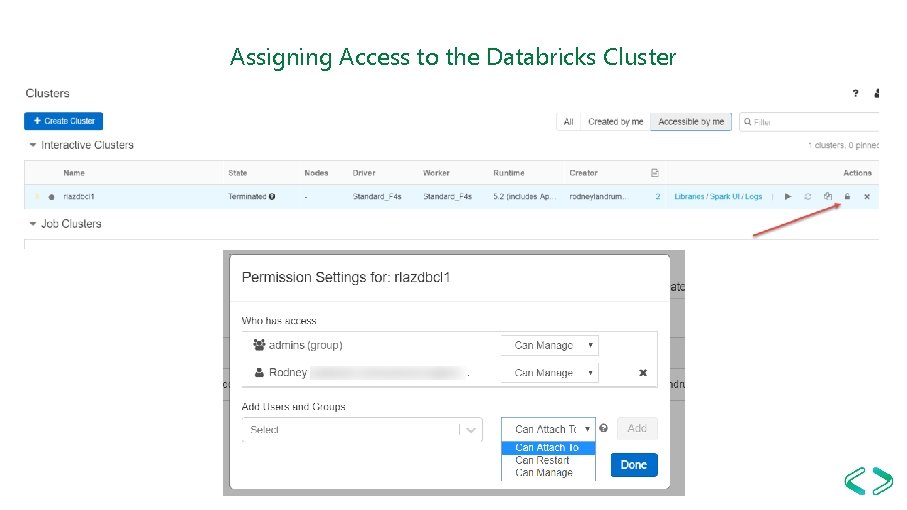

Assigning Access to the Databricks Cluster

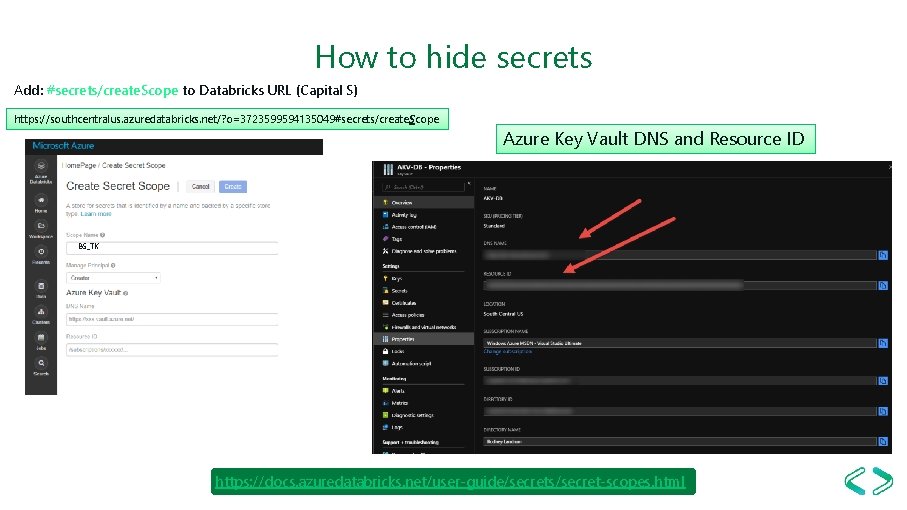

How to hide secrets Add: #secrets/create. Scope to Databricks URL (Capital S) https: //southcentralus. azuredatabricks. net/? o=3723599594135049#secrets/create. Scope Azure Key Vault DNS and Resource ID BS_TK https: //docs. azuredatabricks. net/user-guide/secrets/secret-scopes. html

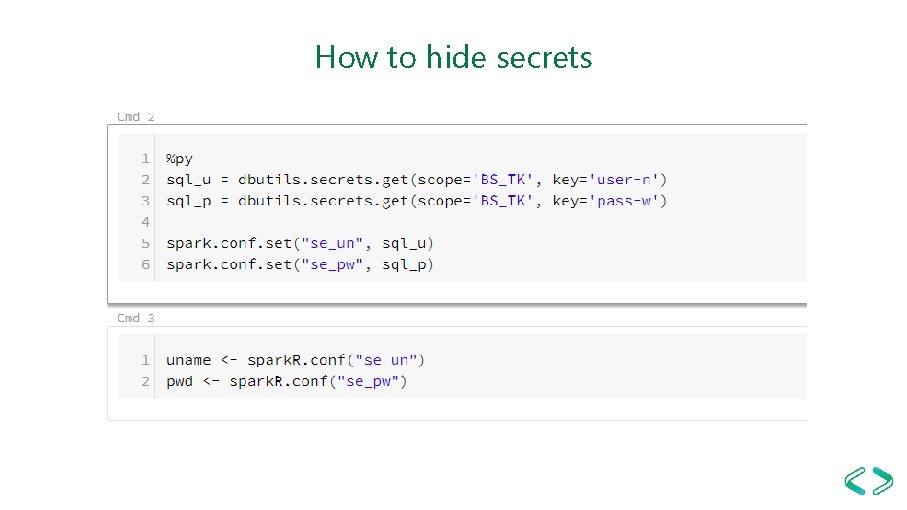

How to hide secrets

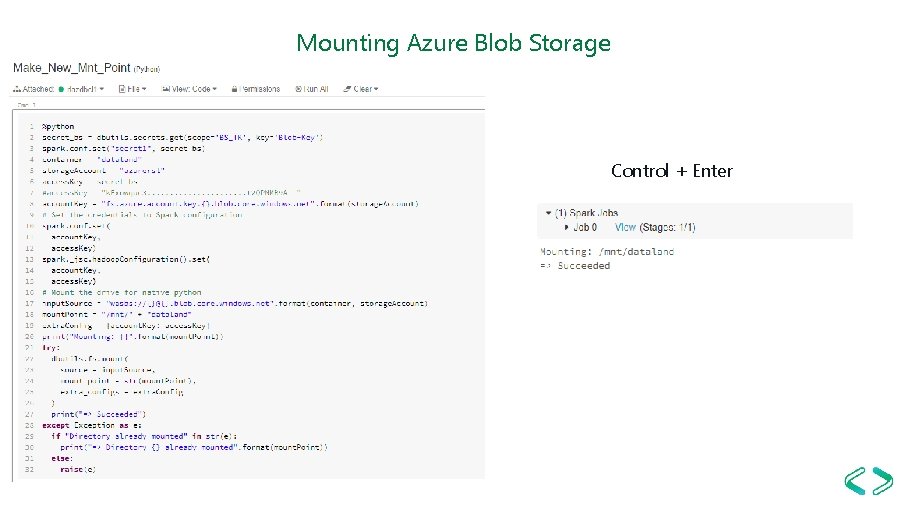

Mounting Azure Blob Storage Control + Enter

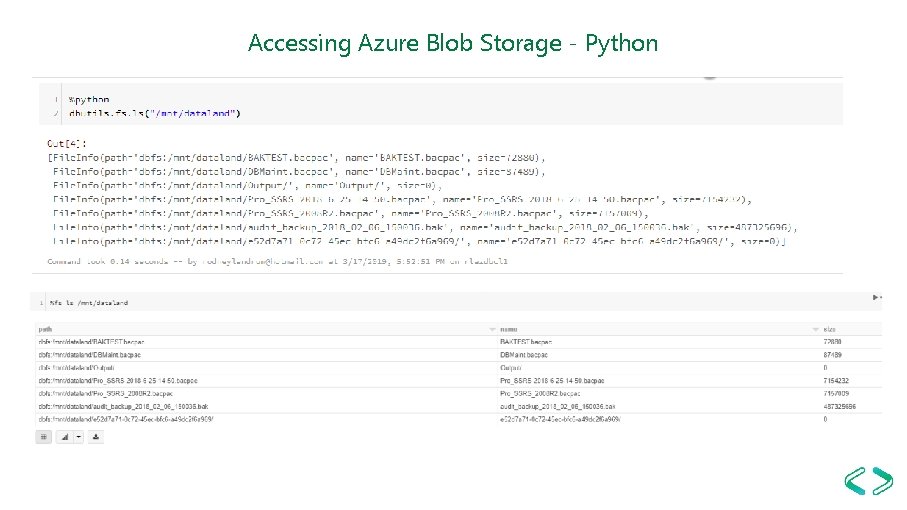

Accessing Azure Blob Storage - Python

Thanks to this video https: //www. youtube. com/watch? v=Duk 0 scd. O 9 P 0&t=307 s

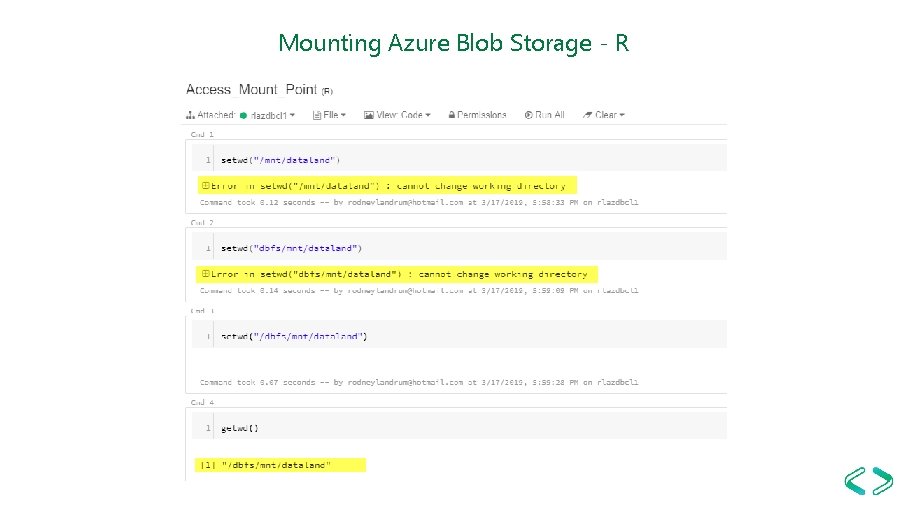

Mounting Azure Blob Storage - R

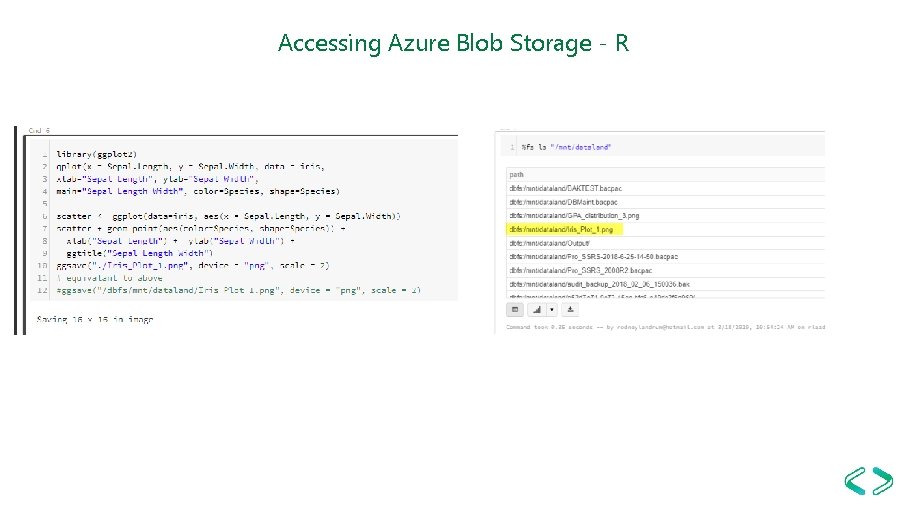

Accessing Azure Blob Storage - R

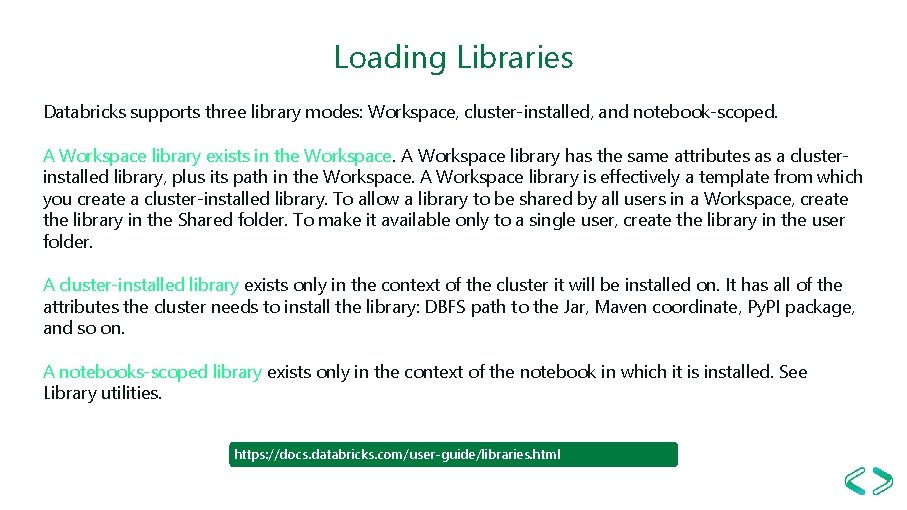

Loading Libraries Databricks supports three library modes: Workspace, cluster-installed, and notebook-scoped. A Workspace library exists in the Workspace. A Workspace library has the same attributes as a clusterinstalled library, plus its path in the Workspace. A Workspace library is effectively a template from which you create a cluster-installed library. To allow a library to be shared by all users in a Workspace, create the library in the Shared folder. To make it available only to a single user, create the library in the user folder. A cluster-installed library exists only in the context of the cluster it will be installed on. It has all of the attributes the cluster needs to install the library: DBFS path to the Jar, Maven coordinate, Py. PI package, and so on. A notebooks-scoped library exists only in the context of the notebook in which it is installed. See Library utilities. https: //docs. databricks. com/user-guide/libraries. html

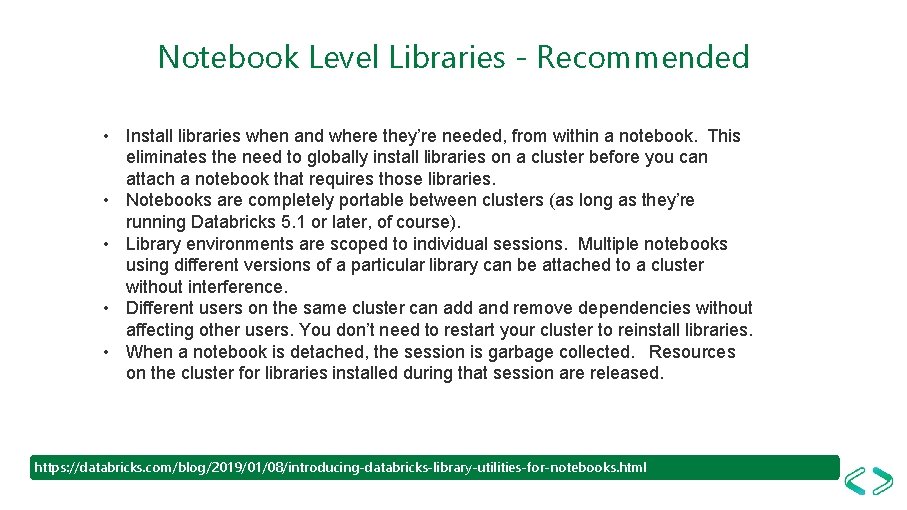

Notebook Level Libraries - Recommended • Install libraries when and where they’re needed, from within a notebook. This eliminates the need to globally install libraries on a cluster before you can attach a notebook that requires those libraries. • Notebooks are completely portable between clusters (as long as they’re running Databricks 5. 1 or later, of course). • Library environments are scoped to individual sessions. Multiple notebooks using different versions of a particular library can be attached to a cluster without interference. • Different users on the same cluster can add and remove dependencies without affecting other users. You don’t need to restart your cluster to reinstall libraries. • When a notebook is detached, the session is garbage collected. Resources on the cluster for libraries installed during that session are released. https: //databricks. com/blog/2019/01/08/introducing-databricks-library-utilities-for-notebooks. html

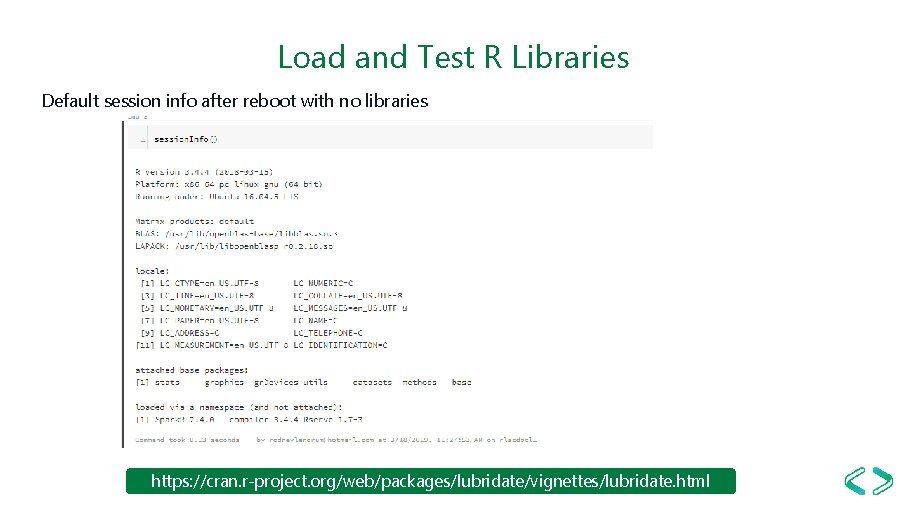

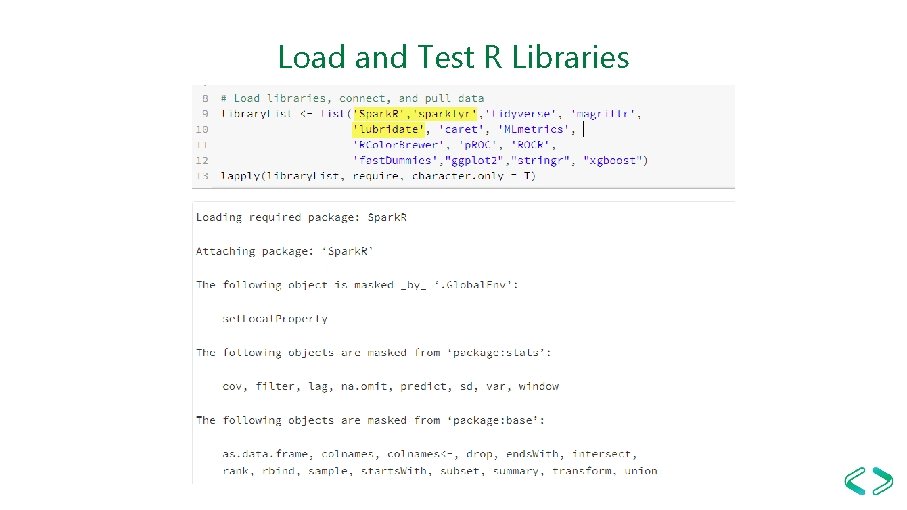

Load and Test R Libraries Default session info after reboot with no libraries https: //cran. r-project. org/web/packages/lubridate/vignettes/lubridate. html

Load and Test R Libraries

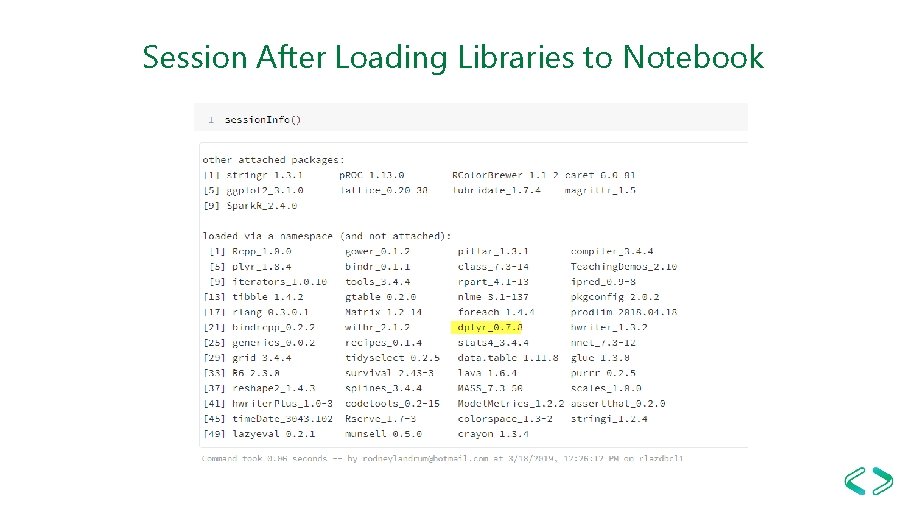

Session After Loading Libraries to Notebook

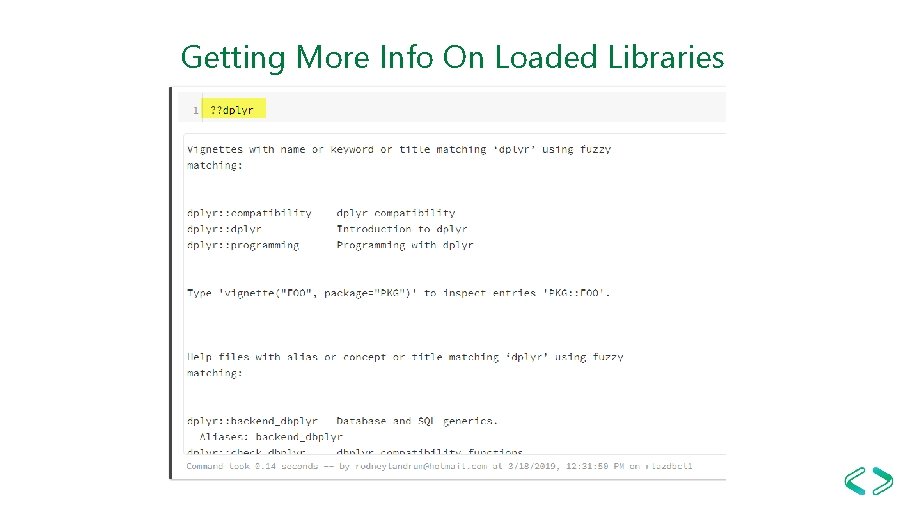

Getting More Info On Loaded Libraries

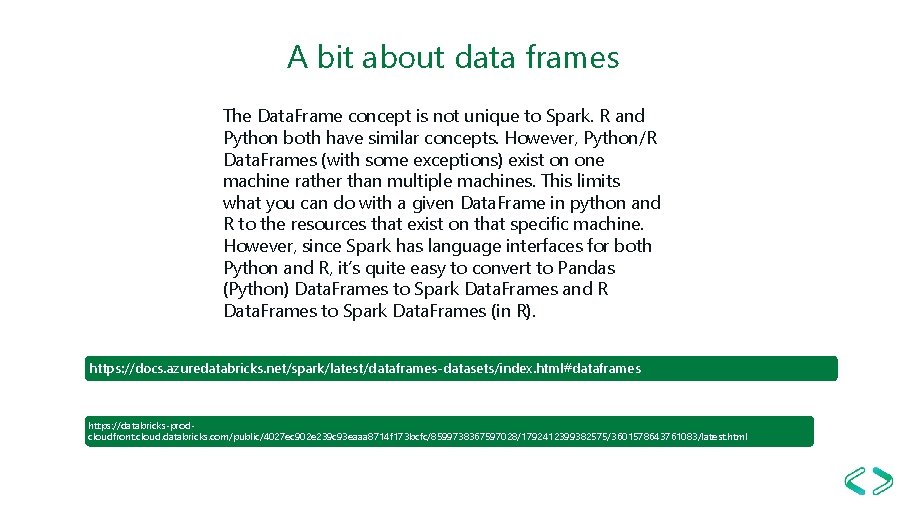

A bit about data frames The Data. Frame concept is not unique to Spark. R and Python both have similar concepts. However, Python/R Data. Frames (with some exceptions) exist on one machine rather than multiple machines. This limits what you can do with a given Data. Frame in python and R to the resources that exist on that specific machine. However, since Spark has language interfaces for both Python and R, it’s quite easy to convert to Pandas (Python) Data. Frames to Spark Data. Frames and R Data. Frames to Spark Data. Frames (in R). https: //docs. azuredatabricks. net/spark/latest/dataframes-datasets/index. html#dataframes https: //databricks-prodcloudfront. cloud. databricks. com/public/4027 ec 902 e 239 c 93 eaaa 8714 f 173 bcfc/8599738367597028/1792412399382575/3601578643761083/latest. html

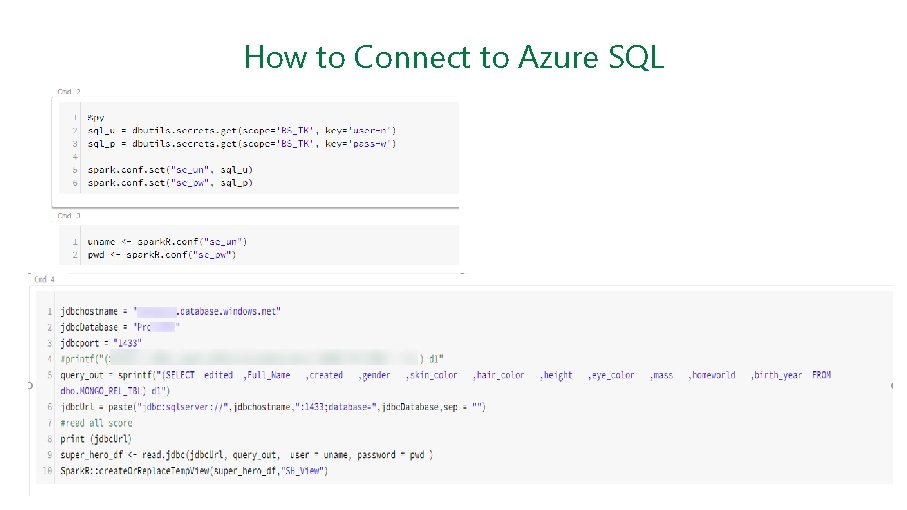

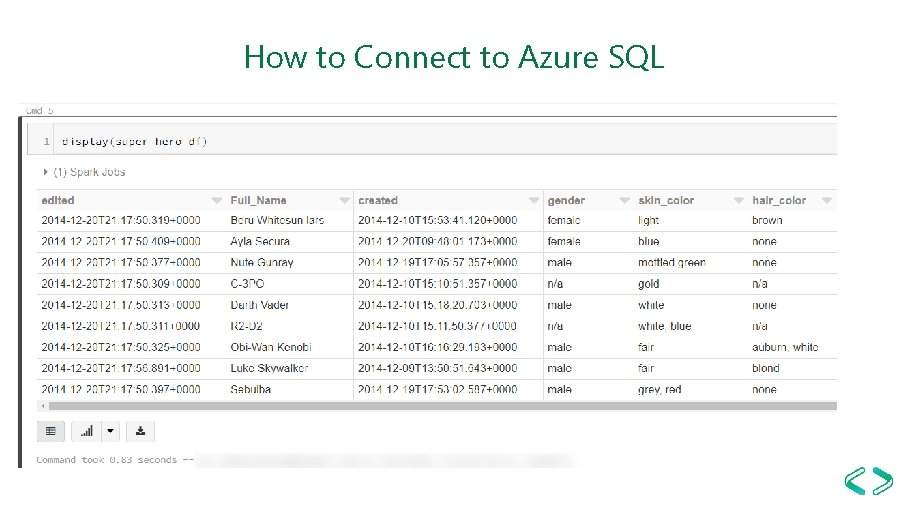

How to Connect to Azure SQL

How to Connect to Azure SQL

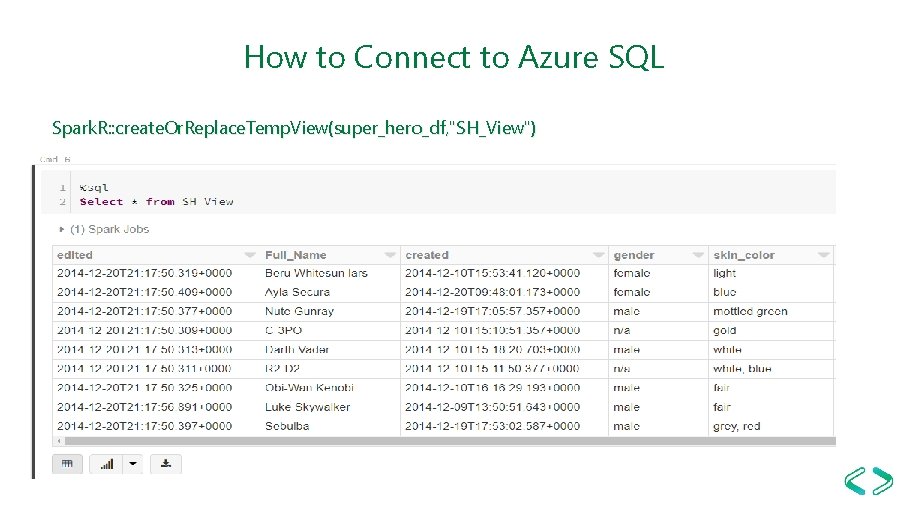

How to Connect to Azure SQL Spark. R: : create. Or. Replace. Temp. View(super_hero_df, "SH_View")

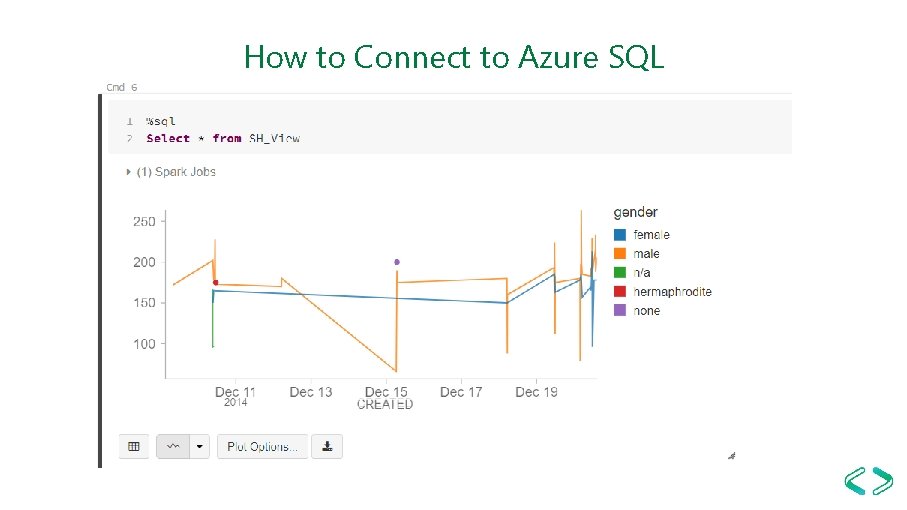

How to Connect to Azure SQL

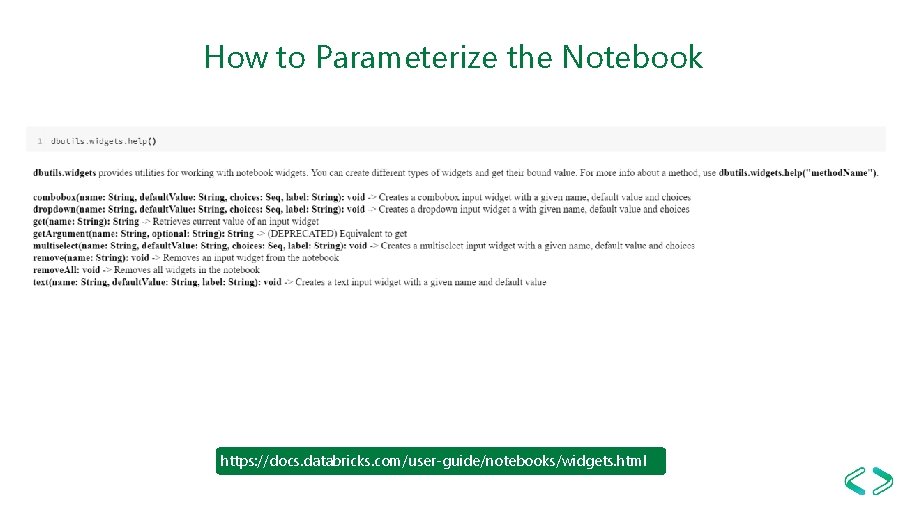

How to Parameterize the Notebook https: //docs. databricks. com/user-guide/notebooks/widgets. html

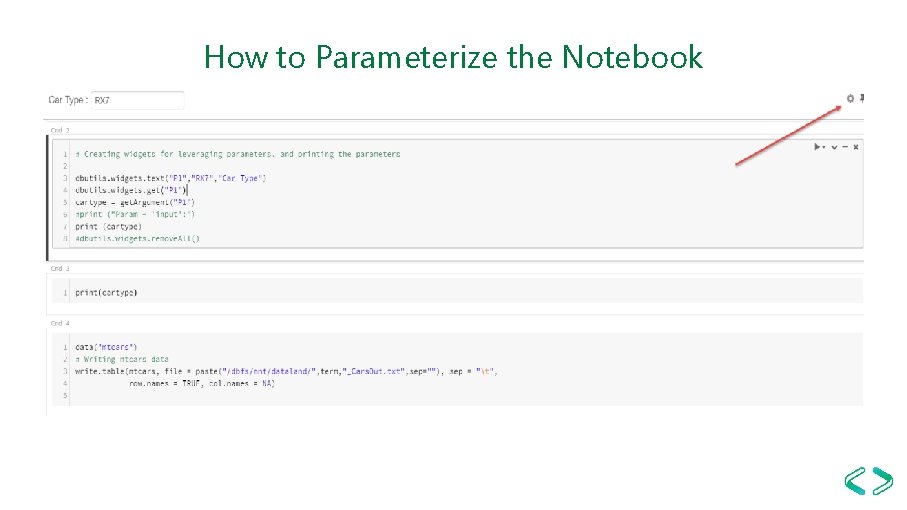

How to Parameterize the Notebook

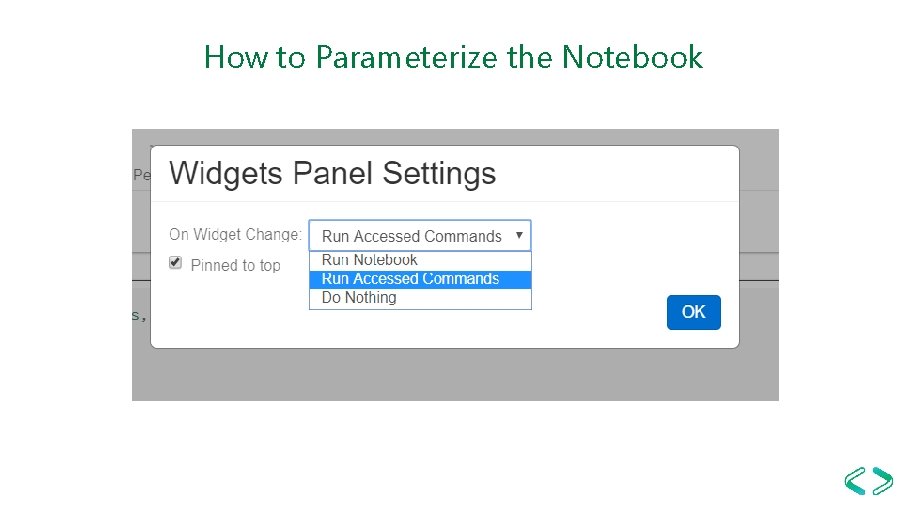

How to Parameterize the Notebook

In Sum • • • Assigned Permissions Loaded Libraries for R Accessed SQL and Blob with secrets Returned datasets for R and Spark R data frames Created parameters for notebook

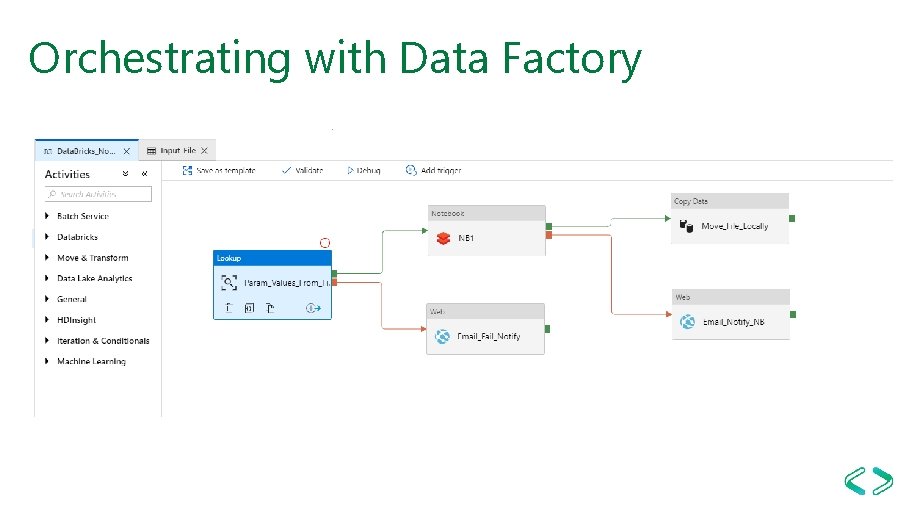

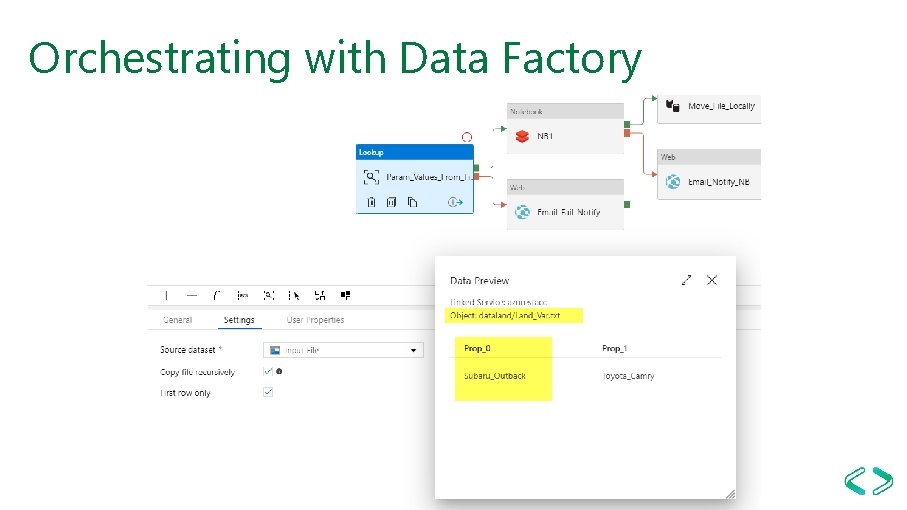

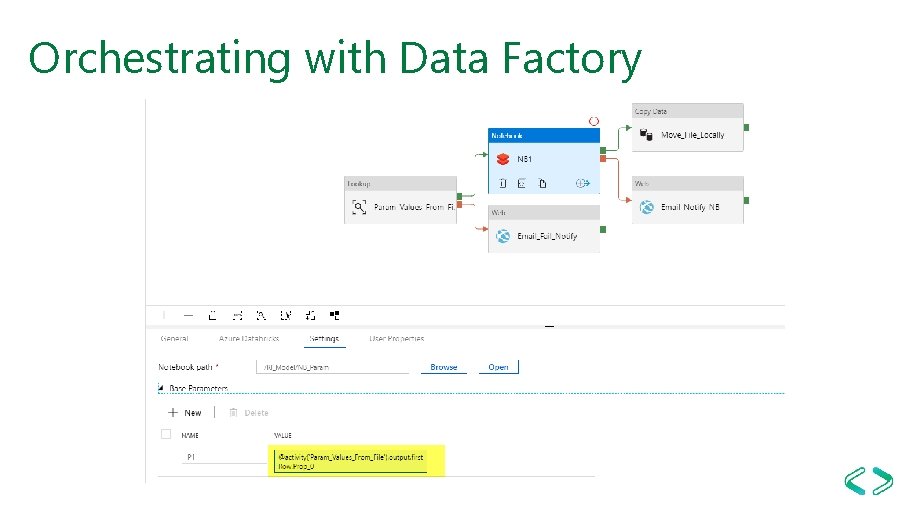

Orchestrating with Data Factory

Orchestrating with Data Factory

Orchestrating with Data Factory

Databricks and Data Factory Demo

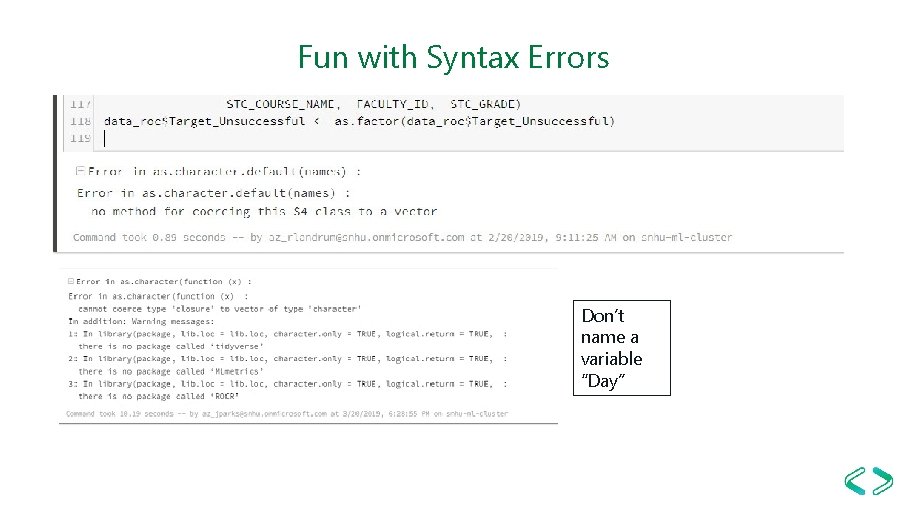

Fun with Syntax Errors Don’t name a variable “Day”

Questions

- Slides: 44