Automatic speech recognition on the articulation index corpus

- Slides: 18

Guy J. Brown and Amy Beeston

Department of Computer Science, University of Sheffield

g.brown@dcs.shef.ac.uk

EPSRC Perceptual Constancy Steering Group Meeting | 19th May 2010

Aims

- Eventual aim is to develop a ‘perceptual constancy’ front end for automatic speech recognition (ASR).
- Should be compatible with the Watkins et al. findings but also validated on a ‘real world’ ASR task:
  - wider vocabulary
  - range of reverberation conditions
  - variety of speech contexts
  - naturalistic speech, rather than interpolated stimuli
  - consider phonetic confusions in reverberation in general
- Initial ASR studies use the articulation index corpus
- Aim to compare human performance (Amy’s experiment) and machine performance on the same task

Articulation index (AI) corpus

- Recorded by Jonathan Wright (University of Pennsylvania)
- Intended for speech recognition in noise experiments similar to those of Fletcher
- Suggested to us by Hynek Hermansky; utterances are similar to those used by Watkins:
  - American English
  - Target syllables are mostly nonsense, but some correspond to real words (including “sir” and “stir”)
  - Target syllables are embedded in a context sentence drawn from a limited vocabulary

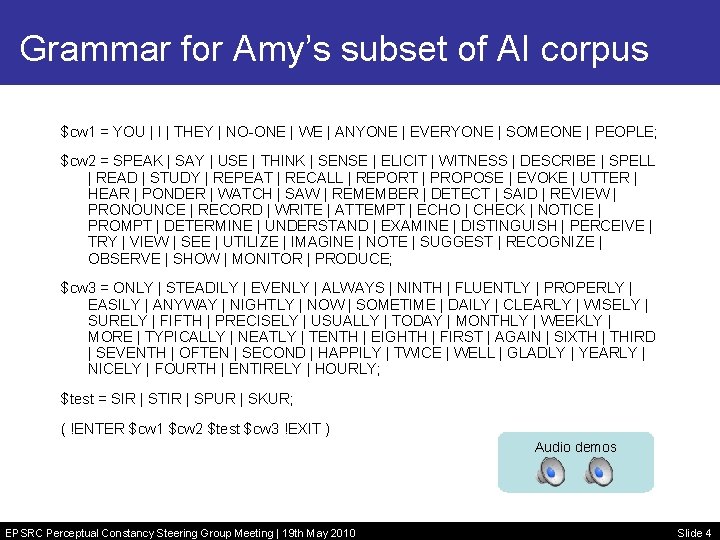

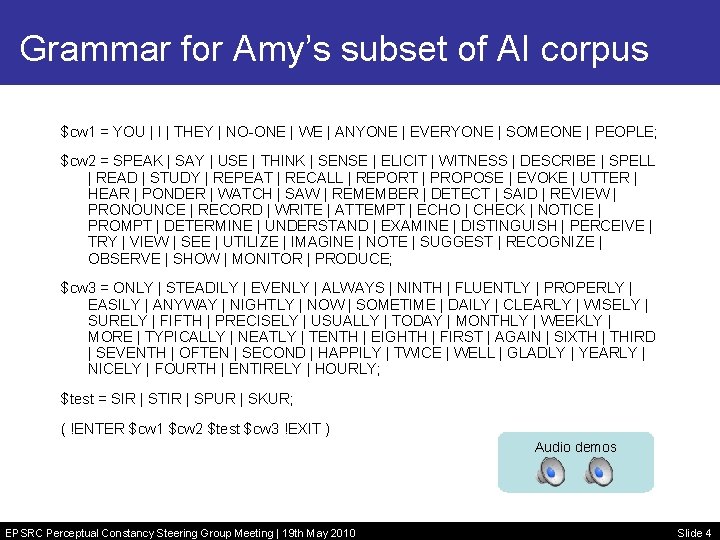

Grammar for Amy’s subset of AI corpus

$cw1 = YOU | I | THEY | NO-ONE | WE | ANYONE | EVERYONE | SOMEONE | PEOPLE;

$cw2 = SPEAK | SAY | USE | THINK | SENSE | ELICIT | WITNESS | DESCRIBE | SPELL | READ | STUDY | REPEAT | RECALL | REPORT | PROPOSE | EVOKE | UTTER | HEAR | PONDER | WATCH | SAW | REMEMBER | DETECT | SAID | REVIEW | PRONOUNCE | RECORD | WRITE | ATTEMPT | ECHO | CHECK | NOTICE | PROMPT | DETERMINE | UNDERSTAND | EXAMINE | DISTINGUISH | PERCEIVE | TRY | VIEW | SEE | UTILIZE | IMAGINE | NOTE | SUGGEST | RECOGNIZE | OBSERVE | SHOW | MONITOR | PRODUCE;

$cw3 = ONLY | STEADILY | EVENLY | ALWAYS | NINTH | FLUENTLY | PROPERLY | EASILY | ANYWAY | NIGHTLY | NOW | SOMETIME | DAILY | CLEARLY | WISELY | SURELY | FIFTH | PRECISELY | USUALLY | TODAY | MONTHLY | WEEKLY | MORE | TYPICALLY | NEATLY | TENTH | EIGHTH | FIRST | AGAIN | SIXTH | THIRD | SEVENTH | OFTEN | SECOND | HAPPILY | TWICE | WELL | GLADLY | YEARLY | NICELY | FOURTH | ENTIRELY | HOURLY;

$test = SIR | STIR | SPUR | SKUR;

( !ENTER $cw1 $cw2 $test $cw3 !EXIT )

Audio demos
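
As a rough illustration of what this grammar licenses, the sketch below parses the four word classes and the final network, counts the distinct sentences, and draws one at random. The parsing helpers (`parse_variables`, `parse_network`) are hypothetical names written for this note and handle only the simple `$name = A | B;` form shown on the slide, not the full HTK grammar syntax.

```python
import math
import random
import re

GRAMMAR = """
$cw1 = YOU | I | THEY | NO-ONE | WE | ANYONE | EVERYONE | SOMEONE | PEOPLE;
$cw2 = SPEAK | SAY | USE | THINK | SENSE | ELICIT | WITNESS | DESCRIBE | SPELL |
       READ | STUDY | REPEAT | RECALL | REPORT | PROPOSE | EVOKE | UTTER | HEAR |
       PONDER | WATCH | SAW | REMEMBER | DETECT | SAID | REVIEW | PRONOUNCE |
       RECORD | WRITE | ATTEMPT | ECHO | CHECK | NOTICE | PROMPT | DETERMINE |
       UNDERSTAND | EXAMINE | DISTINGUISH | PERCEIVE | TRY | VIEW | SEE | UTILIZE |
       IMAGINE | NOTE | SUGGEST | RECOGNIZE | OBSERVE | SHOW | MONITOR | PRODUCE;
$cw3 = ONLY | STEADILY | EVENLY | ALWAYS | NINTH | FLUENTLY | PROPERLY | EASILY |
       ANYWAY | NIGHTLY | NOW | SOMETIME | DAILY | CLEARLY | WISELY | SURELY |
       FIFTH | PRECISELY | USUALLY | TODAY | MONTHLY | WEEKLY | MORE | TYPICALLY |
       NEATLY | TENTH | EIGHTH | FIRST | AGAIN | SIXTH | THIRD | SEVENTH | OFTEN |
       SECOND | HAPPILY | TWICE | WELL | GLADLY | YEARLY | NICELY | FOURTH |
       ENTIRELY | HOURLY;
$test = SIR | STIR | SPUR | SKUR;
( !ENTER $cw1 $cw2 $test $cw3 !EXIT )
"""


def parse_variables(text):
    """Return {name: [alternatives]} for every `$name = a | b | ...;` rule."""
    rules = {}
    for name, body in re.findall(r"\$(\w+)\s*=\s*([^;]+);", text):
        rules[name] = [word.strip() for word in body.split("|")]
    return rules


def parse_network(text):
    """Return the ordered variable names used inside the final ( ... ) network."""
    network = re.search(r"\(([^()]*)\)", text).group(1)
    return re.findall(r"\$(\w+)", network)


def count_sentences(rules, slots):
    """Number of distinct word sequences: product of the class sizes."""
    return math.prod(len(rules[slot]) for slot in slots)


def random_sentence(rules, slots, rng):
    """Pick one word per slot, in network order."""
    return " ".join(rng.choice(rules[slot]) for slot in slots)


RULES = parse_variables(GRAMMAR)
SLOTS = parse_network(GRAMMAR)
print({name: len(words) for name, words in RULES.items()})
print(count_sentences(RULES, SLOTS))
print(random_sentence(RULES, SLOTS, random.Random(0)))
```

With the word lists as given, the class sizes are 9, 50, 43 and 4, so the grammar admits 9 × 50 × 43 × 4 = 77,400 sentences, of which the recogniser only has to separate four alternatives in the test-word slot.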

ASR system

- HMM-based phone recogniser
  - implemented in HTK
  - monophone models
  - 20 Gaussian mixture components per state
  - adapted from scripts by Tony Robinson/Dan Ellis
- Bootstrapped by training on TIMIT, then a further 10–12 iterations of embedded training on AI corpus
- Word-level transcripts in AI corpus expanded to phones using the CMU pronunciation dictionary
- All of AI corpus used for training, except the 80 utterances in Amy’s experimental stimuli
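
The word-to-phone expansion step can be sketched as a dictionary lookup. The snippet below is a minimal illustration, not the scripts actually used: `MINI_DICT` is a hand-typed handful of CMU-style entries (to be checked against the real dictionary), the entry for the nonsense word SKUR is hypothetical, and stripping the stress digits for monophone models is an assumption.

```python
import re

# Illustrative CMU-style pronunciations (ARPAbet with stress digits).
# Assumption: hand-typed for this sketch; verify against the real dictionary.
MINI_DICT = {
    "YOU": "Y UW1",
    "SAY": "S EY1",
    "NOW": "N AW1",
    "SIR": "S ER1",
    "STIR": "S T ER1",
    "SPUR": "S P ER1",
    "SKUR": "S K ER1",  # nonsense syllable: hypothetical entry
}


def strip_stress(phone):
    """Drop the trailing stress digit, e.g. 'ER1' -> 'ER' (assumed for monophones)."""
    return re.sub(r"\d$", "", phone)


def words_to_phones(words, lexicon):
    """Expand a word-level transcript into a flat phone sequence."""
    phones = []
    for word in words:
        if word not in lexicon:
            raise KeyError(f"no pronunciation for {word!r}")
        phones.extend(strip_stress(p) for p in lexicon[word].split())
    return phones


transcript = "YOU SAY STIR NOW".split()
print(words_to_phones(transcript, MINI_DICT))
```

Failing loudly on a missing word matters here because most target syllables in the corpus are nonsense and would need pronunciations added by hand.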

MFCC features

- Baseline system trained using mel-frequency cepstral coefficients (MFCCs)
  - 12 MFCCs + energy + delta + acceleration (total 39 features per frame)
  - cepstral mean normalization
- Baseline system performance on Amy’s clean subset of AI corpus (80 utterances, no reverberation):
  - 98.75% context words correct
  - 96.25% test words correct
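
To make the 39-dimensional layout concrete, here is a minimal pure-Python sketch that takes 13 static values per frame (12 cepstra plus energy), applies cepstral mean normalization to the cepstra, and appends delta and acceleration coefficients. The delta formula is the standard regression formula documented for HTK; the window of 2 frames, the edge handling by repeating the first and last frames, and leaving the energy term un-normalised are assumptions of this sketch, and the function names are hypothetical.

```python
import random


def cepstral_mean_normalise(frames, n_cepstra=12):
    """Subtract the per-utterance mean from the first n_cepstra columns."""
    n = len(frames)
    means = [sum(f[i] for f in frames) / n for i in range(n_cepstra)]
    return [
        [v - means[i] if i < n_cepstra else v for i, v in enumerate(f)]
        for f in frames
    ]


def regression_deltas(frames, window=2):
    """d_t = sum_th th * (c[t+th] - c[t-th]) / (2 * sum_th th^2), edges clamped."""
    denom = 2 * sum(th * th for th in range(1, window + 1))
    last = len(frames) - 1
    deltas = []
    for t in range(len(frames)):
        row = []
        for i in range(len(frames[0])):
            num = sum(
                th * (frames[min(t + th, last)][i] - frames[max(t - th, 0)][i])
                for th in range(1, window + 1)
            )
            row.append(num / denom)
        deltas.append(row)
    return deltas


def add_dynamic_features(static):
    """Concatenate static, delta and acceleration (delta of delta) per frame."""
    delta = regression_deltas(static)
    accel = regression_deltas(delta)
    return [s + d + a for s, d, a in zip(static, delta, accel)]


# Stand-in data: 20 frames of 12 cepstra + 1 energy value (random, not real speech).
rng = random.Random(0)
static = [[rng.gauss(0.0, 1.0) for _ in range(13)] for _ in range(20)]
features = add_dynamic_features(cepstral_mean_normalise(static))
print(len(features), len(features[0]))
```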

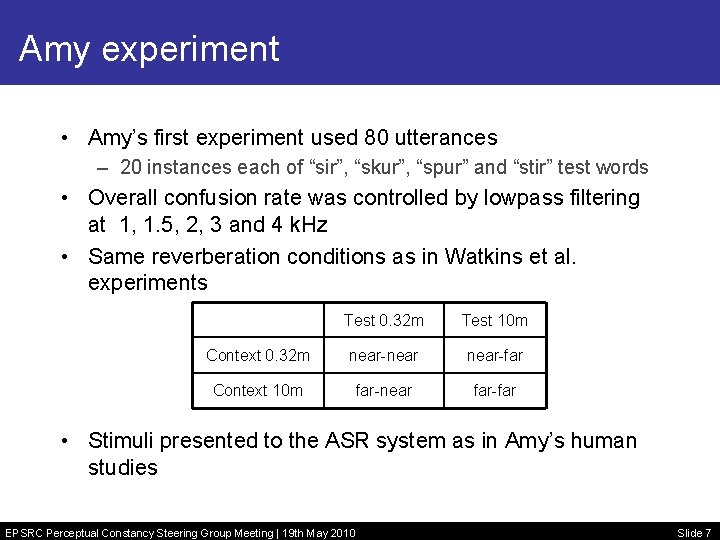

Amy experiment

- Amy’s first experiment used 80 utterances
  - 20 instances each of “sir”, “skur”, “spur” and “stir” test words
- Overall confusion rate was controlled by lowpass filtering at 1, 1.5, 2, 3 and 4 kHz
- Same reverberation conditions as in Watkins et al. experiments

| | Test 0.32 m | Test 10 m |
|---|---|---|
| Context 0.32 m | near-near | near-far |
| Context 10 m | far-near | far-far |

- Stimuli presented to the ASR system as in Amy’s human studies
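
The design above can be written out as a small condition grid, which is convenient when scoring the recogniser per condition. This is only a sketch: the dictionary keys and the `conditions` helper are hypothetical, and it assumes every cutoff is crossed with every context/test distance pairing, which the slide implies but does not state outright.

```python
from itertools import product

CUTOFFS_KHZ = [1, 1.5, 2, 3, 4]
DISTANCES_M = {"near": 0.32, "far": 10.0}
TEST_WORDS = ["sir", "skur", "spur", "stir"]
INSTANCES_PER_WORD = 20


def conditions():
    """Yield one record per (cutoff, context distance, test distance) cell."""
    for cutoff, context, test in product(CUTOFFS_KHZ, DISTANCES_M, DISTANCES_M):
        yield {
            "cutoff_khz": cutoff,
            "label": f"{context}-{test}",  # context first, then test word
            "context_m": DISTANCES_M[context],
            "test_m": DISTANCES_M[test],
        }


grid = list(conditions())
print(len(grid), "conditions;", len(TEST_WORDS) * INSTANCES_PER_WORD, "utterances")
for cell in grid[:4]:
    print(cell)
```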

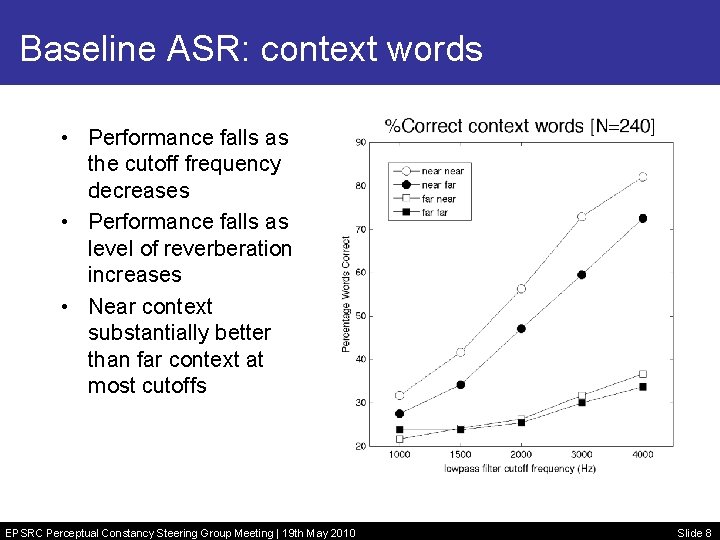

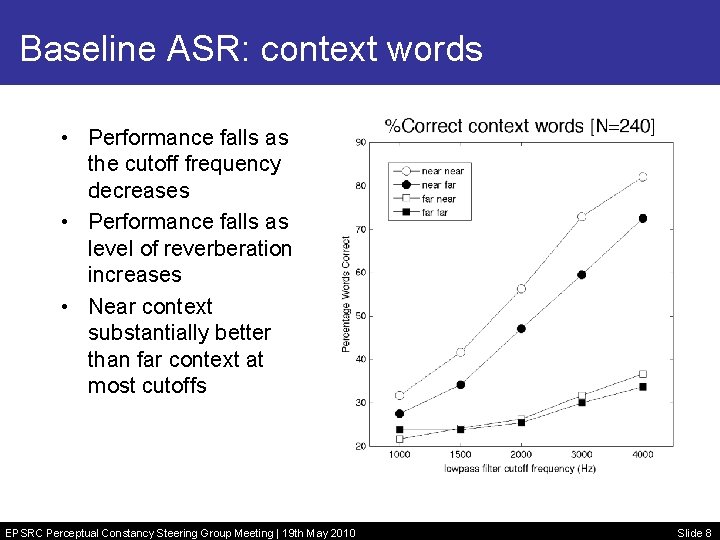

Baseline ASR: context words

- Performance falls as the cutoff frequency decreases
- Performance falls as level of reverberation increases
- Near context substantially better than far context at most cutoffs

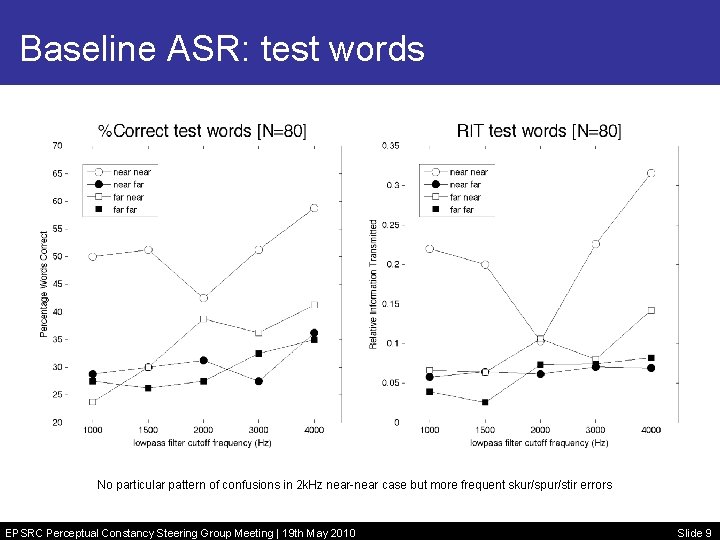

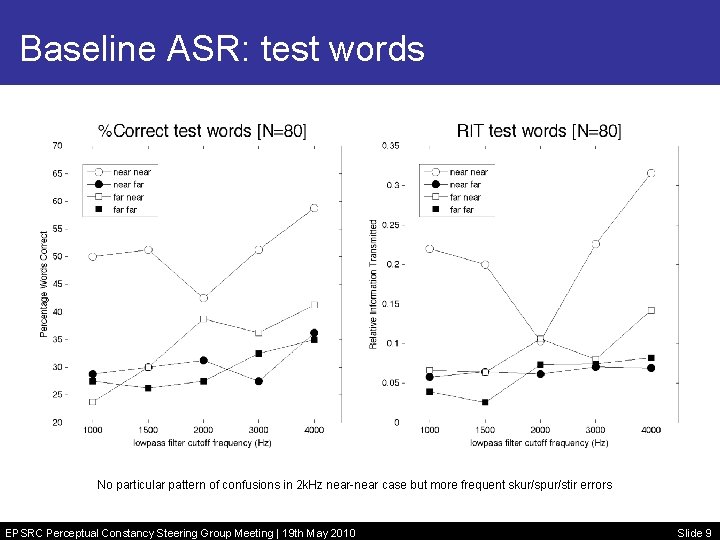

Baseline ASR: test words

No particular pattern of confusions in the 2 kHz near-near case, but more frequent skur/spur/stir errors

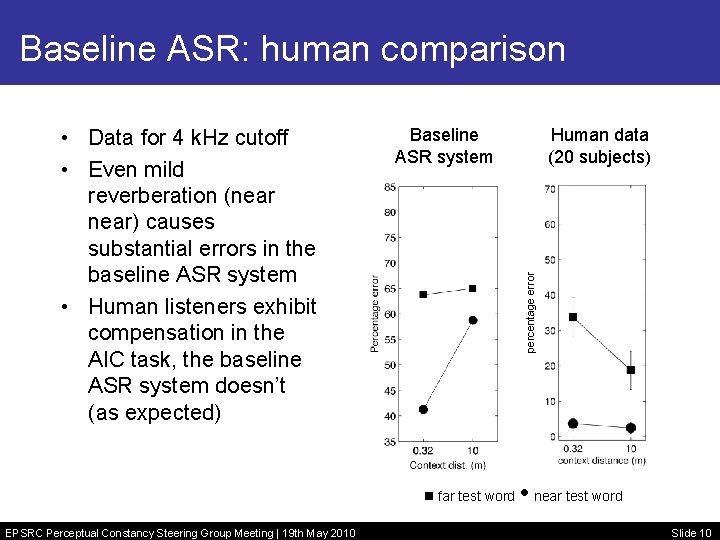

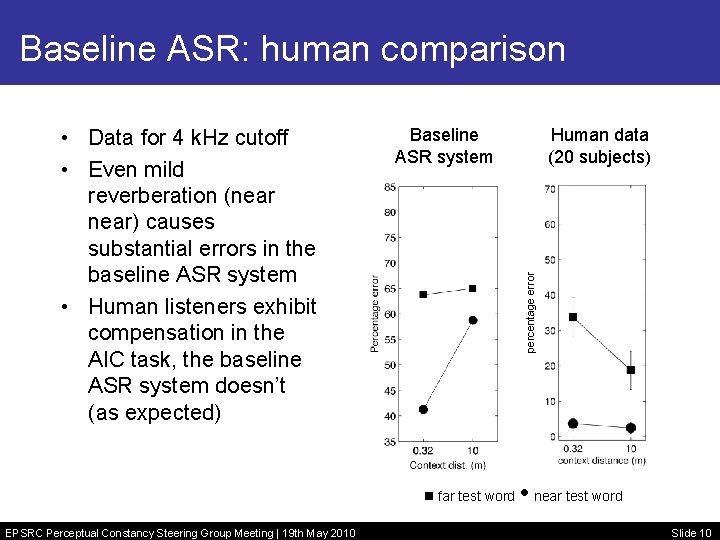

Baseline ASR: human comparison

- Data for 4 kHz cutoff
- Even mild reverberation (near) causes substantial errors in the baseline ASR system
- Human listeners exhibit compensation in the AIC task; the baseline ASR system does not (as expected)

Figure: two panels (baseline ASR system; human data, 20 subjects) showing percentage error for near and far test words.

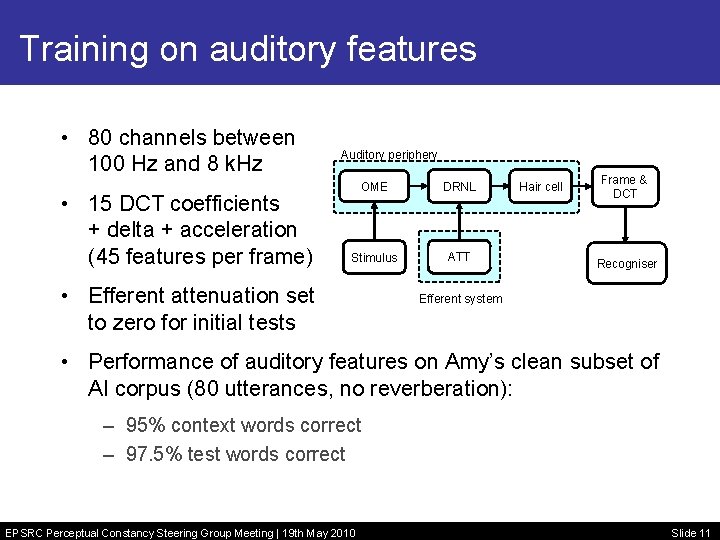

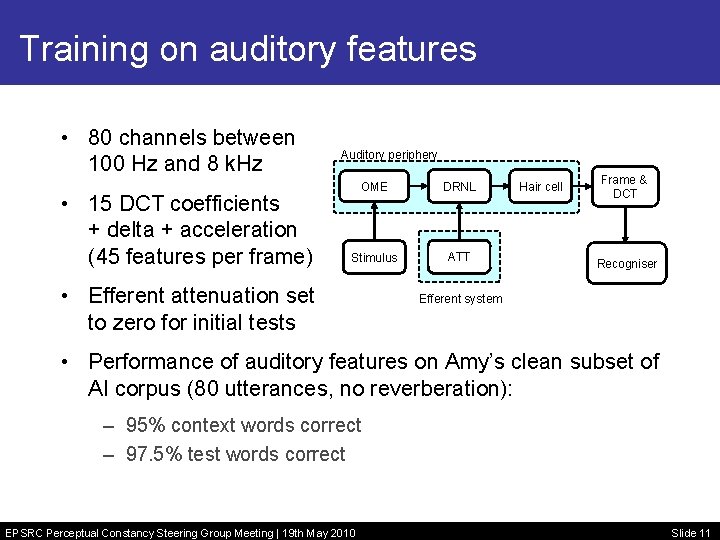

Training on auditory features

- 80 channels between 100 Hz and 8 kHz
- 15 DCT coefficients + delta + acceleration (45 features per frame)
- Efferent attenuation set to zero for initial tests
- Performance of auditory features on Amy’s clean subset of AI corpus (80 utterances, no reverberation):
  - 95% context words correct
  - 97.5% test words correct

Block diagram labels: Stimulus; Auditory periphery; OME; ATT; DRNL; Hair cell; Frame & DCT; Recogniser; Efferent system.
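
The "Frame & DCT" stage reduces each 80-channel frame to 15 coefficients, which with delta and acceleration gives 45 features per frame. A minimal sketch of that reduction follows, using an unnormalised DCT-II written out by hand; whether the real front end keeps the zeroth coefficient, scales the transform, or compresses the channel outputs first is not stated on the slide, so those choices are assumptions here.

```python
import math

N_CHANNELS = 80
N_DCT = 15
FEATURES_PER_FRAME = N_DCT * 3  # static + delta + acceleration


def dct_ii(frame, n_coeffs):
    """Unnormalised DCT-II: c_k = sum_i x_i * cos(pi * k * (i + 0.5) / N)."""
    n = len(frame)
    return [
        sum(x * math.cos(math.pi * k * (i + 0.5) / n) for i, x in enumerate(frame))
        for k in range(n_coeffs)
    ]


# Stand-in frame: a smooth bump across the 80 channels (not real model output).
frame = [math.exp(-((ch - 30) / 10.0) ** 2) for ch in range(N_CHANNELS)]
coeffs = dct_ii(frame, N_DCT)
print(len(coeffs), FEATURES_PER_FRAME)
```

Keeping only the low-order coefficients retains the smooth spectral shape across channels and discards fine channel-to-channel detail, much as the cepstral truncation does for MFCCs.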

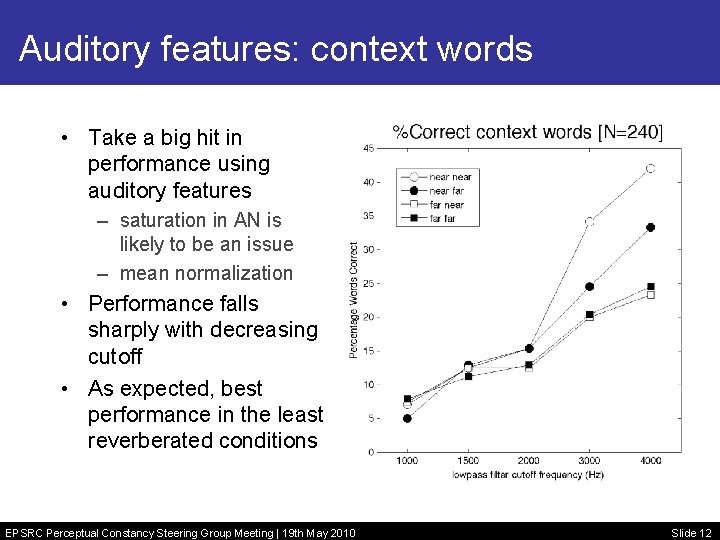

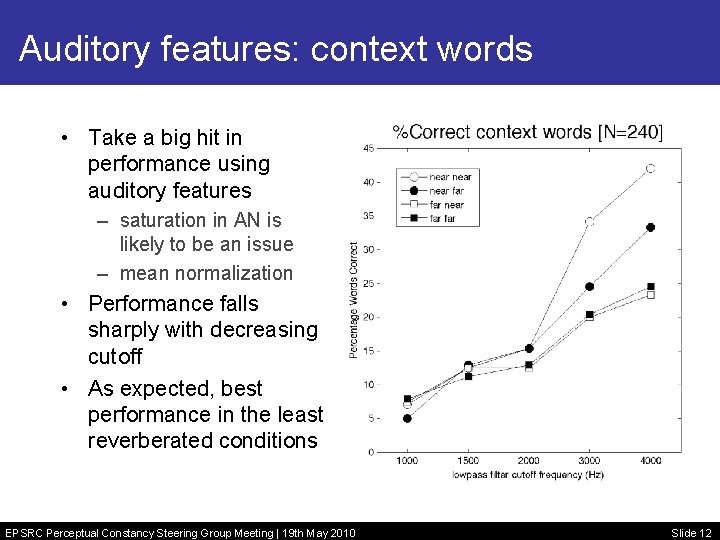

Auditory features: context words

- Take a big hit in performance using auditory features
  - saturation in the auditory nerve (AN) is likely to be an issue
  - mean normalization
- Performance falls sharply with decreasing cutoff
- As expected, best performance in the least reverberated conditions

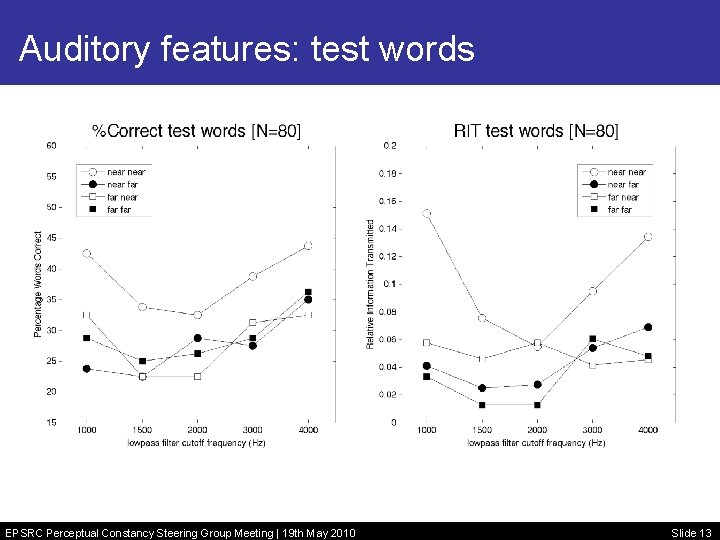

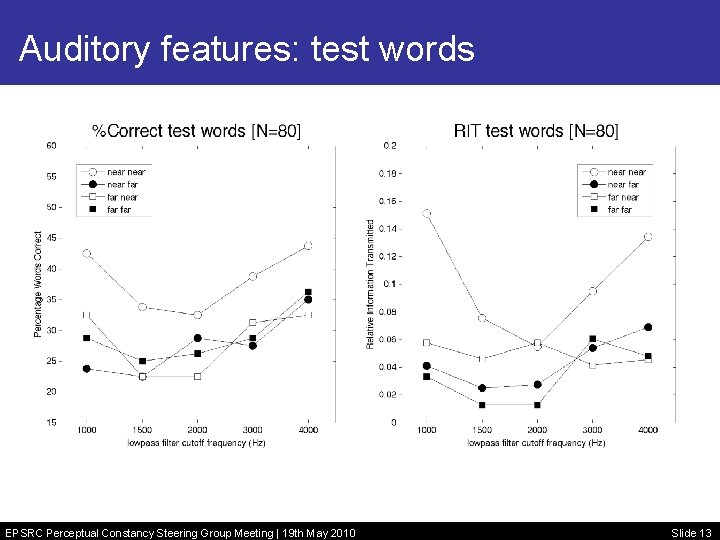

Auditory features: test words

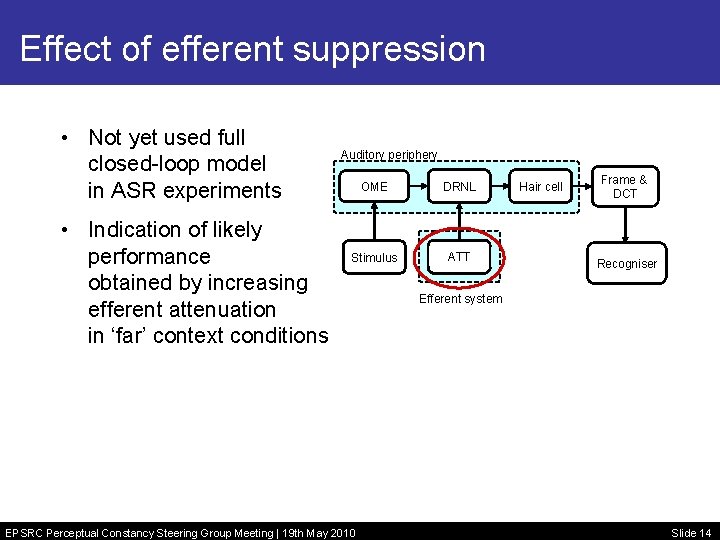

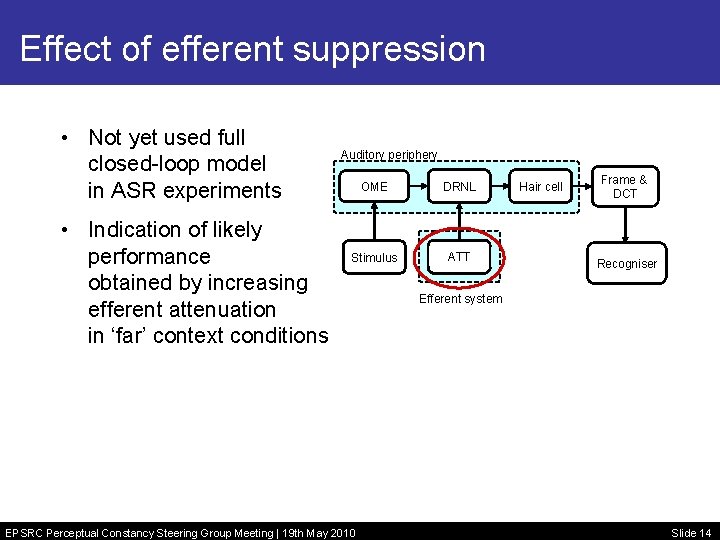

Effect of efferent suppression

- Not yet used full closed-loop model in ASR experiments
- Indication of likely performance obtained by increasing efferent attenuation in ‘far’ context conditions

Block diagram labels: Stimulus; Auditory periphery; OME; ATT; DRNL; Hair cell; Frame & DCT; Recogniser; Efferent system.
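
As a rough sketch of this open-loop manipulation, the snippet below converts an attenuation in dB to a linear gain and applies it to a signal, choosing the attenuation from the context condition. It assumes the ATT stage acts as a simple amplitude scaling with the same value in every band, and uses 10 dB for ‘far’ contexts as in the results reported in this talk; the function and table names are hypothetical and the real model's ATT stage may differ.

```python
def attenuation_gain(att_db):
    """Linear amplitude gain for an attenuation given in dB (amplitude convention)."""
    return 10.0 ** (-att_db / 20.0)


def apply_efferent_attenuation(samples, att_db):
    """Scale every sample by the same gain (assumed identical in all bands)."""
    gain = attenuation_gain(att_db)
    return [gain * s for s in samples]


# Open-loop setting: attenuation chosen by hand from the context condition.
ATTENUATION_DB = {"near": 0.0, "far": 10.0}

signal = [0.0, 0.5, -1.0, 0.25]
for context, att_db in ATTENUATION_DB.items():
    print(context, att_db, apply_efferent_attenuation(signal, att_db))
```

A closed-loop version would instead derive the attenuation from the model's own response to the preceding context, which is the step the slide notes has not yet been tried.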

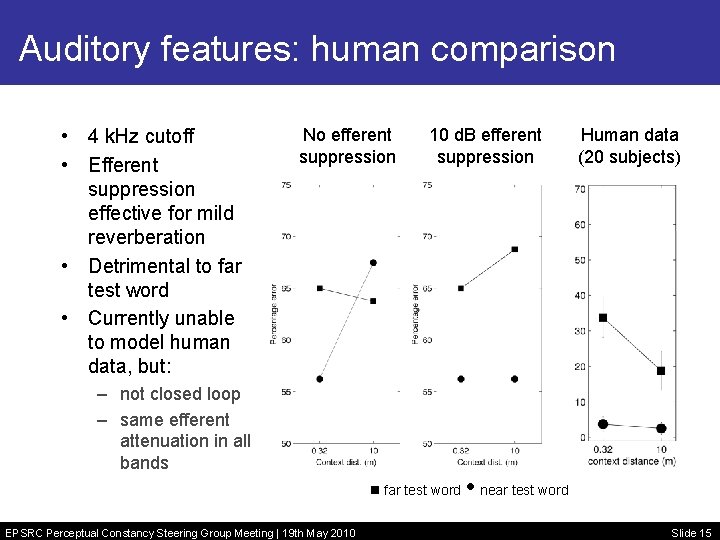

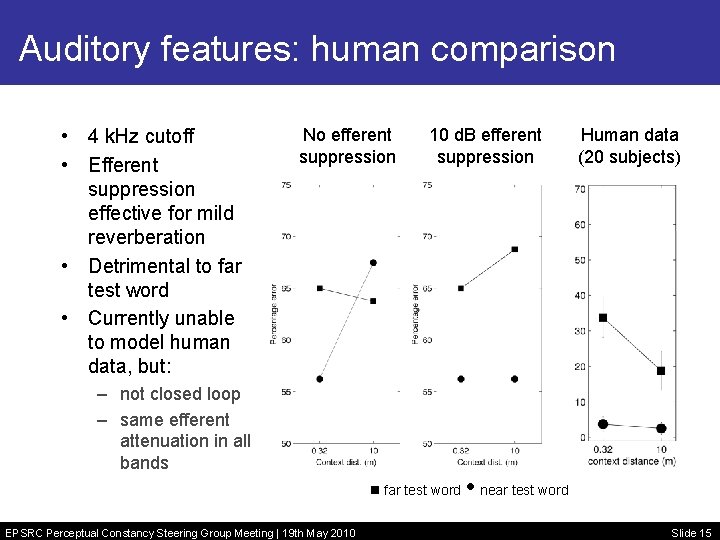

Auditory features: human comparison

- 4 kHz cutoff
- Efferent suppression effective for mild reverberation
- Detrimental to far test word
- Currently unable to model human data, but:
  - not closed loop
  - same efferent attenuation in all bands

Figure: panels for no efferent suppression, 10 dB efferent suppression and human data (20 subjects), each showing near and far test words.

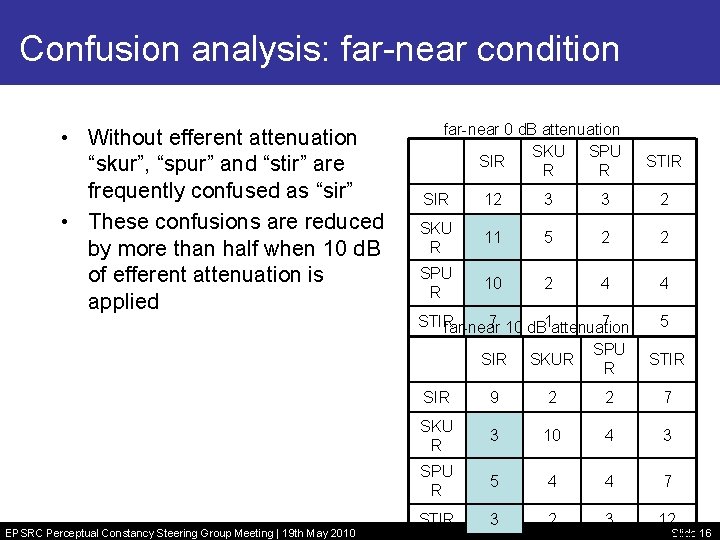

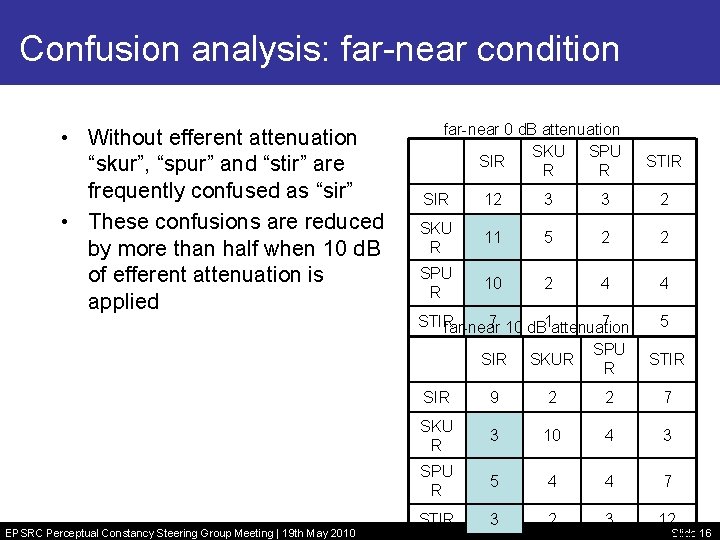

Confusion analysis: far-near condition

- Without efferent attenuation “skur”, “spur” and “stir” are frequently confused as “sir”
- These confusions are reduced by more than half when 10 dB of efferent attenuation is applied

far-near, 0 dB attenuation (rows: word presented; columns: word recognised)

| | SIR | SKUR | SPUR | STIR |
|---|---|---|---|---|
| SIR | 12 | 3 | 3 | 2 |
| SKUR | 11 | 5 | 2 | 2 |
| SPUR | 10 | 2 | 4 | 4 |
| STIR | 7 | 1 | 7 | 5 |

far-near, 10 dB attenuation (rows: word presented; columns: word recognised)

| | SIR | SKUR | SPUR | STIR |
|---|---|---|---|---|
| SIR | 9 | 2 | 2 | 7 |
| SKUR | 3 | 10 | 4 | 3 |
| SPUR | 5 | 4 | 4 | 7 |
| STIR | 3 | 2 | 3 | 12 |
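
The "reduced by more than half" claim can be checked directly from the two far-near matrices. The sketch below uses the counts as read from this slide (each row sums to 20, matching the 20 instances per test word, with rows taken as the word presented and columns as the word recognised); the helper name is hypothetical.

```python
WORDS = ["SIR", "SKUR", "SPUR", "STIR"]

# Rows: word presented; columns: word recognised (order as in WORDS).
FAR_NEAR = {
    "0 dB": [
        [12, 3, 3, 2],
        [11, 5, 2, 2],
        [10, 2, 4, 4],
        [7, 1, 7, 5],
    ],
    "10 dB": [
        [9, 2, 2, 7],
        [3, 10, 4, 3],
        [5, 4, 4, 7],
        [3, 2, 3, 12],
    ],
}


def reported_as_sir(matrix):
    """Count skur/spur/stir presentations that were recognised as 'sir'."""
    sir_col = WORDS.index("SIR")
    return sum(row[sir_col] for word, row in zip(WORDS, matrix) if word != "SIR")


before = reported_as_sir(FAR_NEAR["0 dB"])
after = reported_as_sir(FAR_NEAR["10 dB"])
print(before, after, f"{1 - after / before:.0%} reduction")
```

The count drops from 28 to 11 of the 60 skur/spur/stir presentations, a reduction of about 61%.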

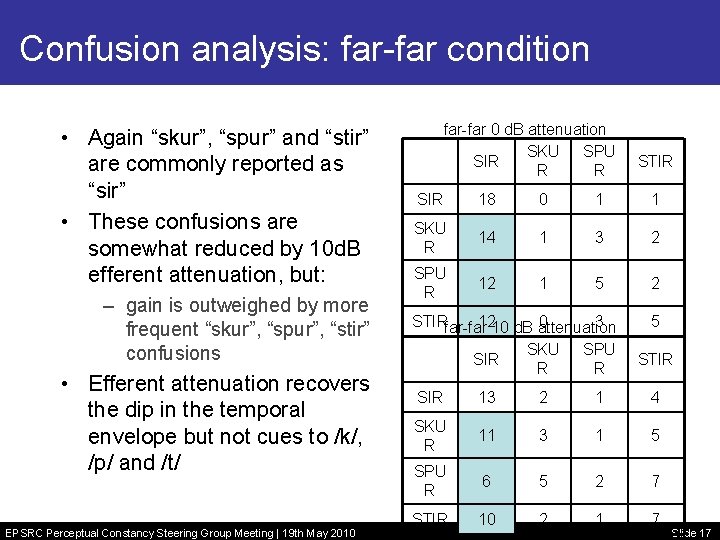

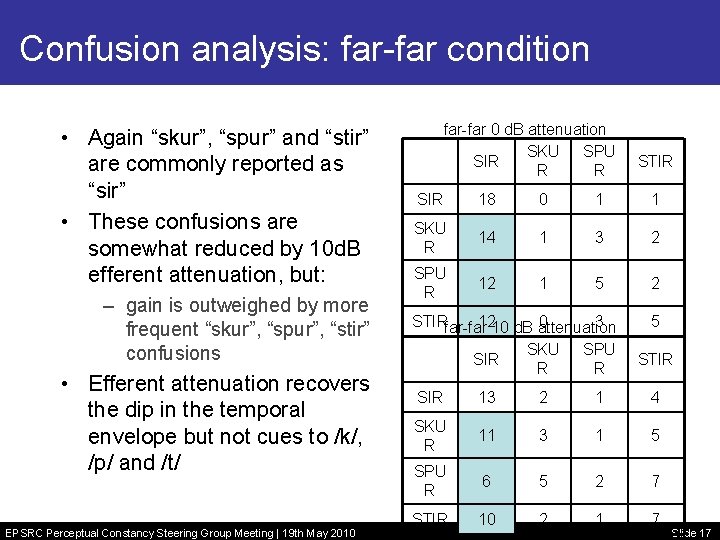

Confusion analysis: far-far condition

- Again “skur”, “spur” and “stir” are commonly reported as “sir”
- These confusions are somewhat reduced by 10 dB efferent attenuation, but:
  - gain is outweighed by more frequent “skur”, “spur”, “stir” confusions
- Efferent attenuation recovers the dip in the temporal envelope but not cues to /k/, /p/ and /t/

far-far, 0 dB attenuation (rows: word presented; columns: word recognised)

| | SIR | SKUR | SPUR | STIR |
|---|---|---|---|---|
| SIR | 18 | 0 | 1 | 1 |
| SKUR | 14 | 1 | 3 | 2 |
| SPUR | 12 | 1 | 5 | 2 |
| STIR | 12 | 0 | 3 | 5 |

far-far, 10 dB attenuation (rows: word presented; columns: word recognised)

| | SIR | SKUR | SPUR | STIR |
|---|---|---|---|---|
| SIR | 13 | 2 | 1 | 4 |
| SKUR | 11 | 3 | 1 | 5 |
| SPUR | 6 | 5 | 2 | 7 |
| STIR | 10 | 2 | 1 | 7 |
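
The trade-off described above can be made explicit by splitting the far-far errors into "reported as sir" errors and confusions among “skur”, “spur” and “stir”. The sketch below uses the counts as read from this slide (each row sums to 20, with rows taken as the word presented and columns as the word recognised); the `breakdown` helper is a hypothetical name.

```python
WORDS = ["SIR", "SKUR", "SPUR", "STIR"]

# Rows: word presented; columns: word recognised (order as in WORDS).
FAR_FAR = {
    "0 dB": [
        [18, 0, 1, 1],
        [14, 1, 3, 2],
        [12, 1, 5, 2],
        [12, 0, 3, 5],
    ],
    "10 dB": [
        [13, 2, 1, 4],
        [11, 3, 1, 5],
        [6, 5, 2, 7],
        [10, 2, 1, 7],
    ],
}


def breakdown(matrix):
    """Summarise one 4x4 confusion matrix into three counts."""
    stops = range(1, 4)  # indices of SKUR, SPUR, STIR
    return {
        "correct": sum(matrix[i][i] for i in range(4)),
        "stop_word_as_sir": sum(matrix[i][0] for i in stops),
        "among_stop_words": sum(
            matrix[i][j] for i in stops for j in stops if i != j
        ),
    }


for label, matrix in FAR_FAR.items():
    print(label, breakdown(matrix))
```

On these counts, "reported as sir" errors fall from 38 to 27, confusions among the three stop-initial words rise from 11 to 21, and the total number correct falls from 29 to 25 out of 80.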

Summary

- ASR framework in place for the AI corpus experiments
- We can compare human and machine performance on the AIC task
- Reasonable performance from baseline MFCC system
- Need to address shortfall in performance when using auditory features
- Haven’t yet tried the full within-channel model as a front end