AUTOMATIC PARTITIONING OF DATABASE APPLICATIONS
Alvin Cheung, Samuel Madden, Owen Arden, Andrew C. Myers
Proceedings of the VLDB Endowment, Vol. 5, No. 11, 2012
2014/12/02 M 1 Arnaud

The authors
- Alvin Cheung, MIT CSAIL
- Samuel Madden, MIT CSAIL
- Owen Arden, Cornell University, Department of Computer Science
- Andrew C. Myers, Cornell University, Department of Computer Science

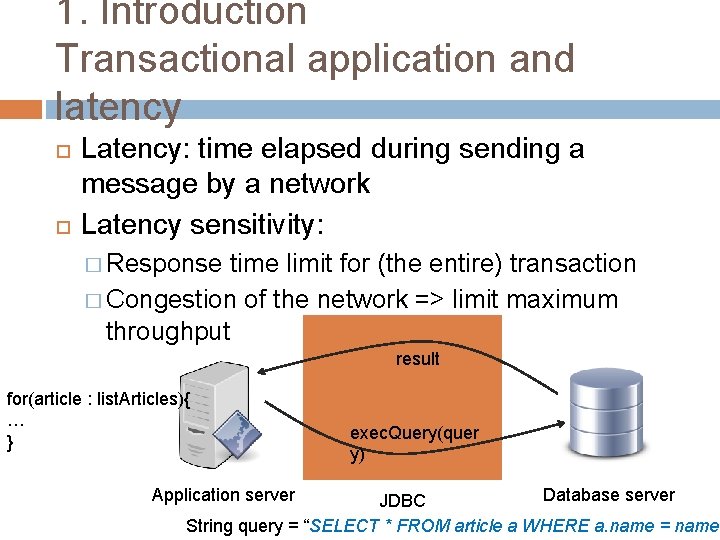

1. Introduction: transactional applications and latency
Latency: the time it takes to send a message across the network.
Why transactional applications are latency-sensitive:
- There is a response-time limit for the entire transaction.
- Network congestion limits the maximum throughput.
Figure labels: the application server runs for (article : list.Articles) { … }; each execQuery(query) call goes through JDBC to the database server, which returns the result; String query = "SELECT * FROM article a WHERE a.name = name".

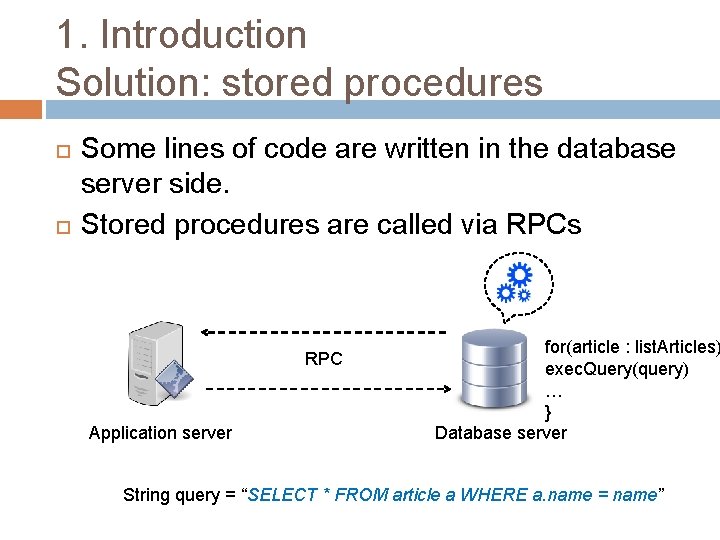

1. Introduction: stored procedures as a solution
- Part of the application code is written on the database server side, as stored procedures.
- The application server calls the stored procedures through RPCs.
Figure labels: the application server issues an RPC; the database server runs for (article : list.Articles) { execQuery(query) … } with String query = "SELECT * FROM article a WHERE a.name = name".

1. Introduction: issues with stored procedures
- Portability: stored procedure languages (PL/SQL, Transact-SQL, …) are specific to each database vendor.
- Conversion effort: it is hard to tell which parts of the code would run more efficiently on the database server.
- Dynamic server load: when the database server is heavily loaded, executing all the code on it is not efficient.

1. Introduction: the Pyxis system
- Automatically partitions a database application into two pieces, one for each server.
- The partitioning aims to minimize the latency caused by network round trips.
- Switches between partitionings according to the CPU load of the database server.

Plan of the presentation
1. Introduction
2. Overview of Pyxis
3. Detailed mechanisms of each step of the process
4. Experiments
5. Conclusion

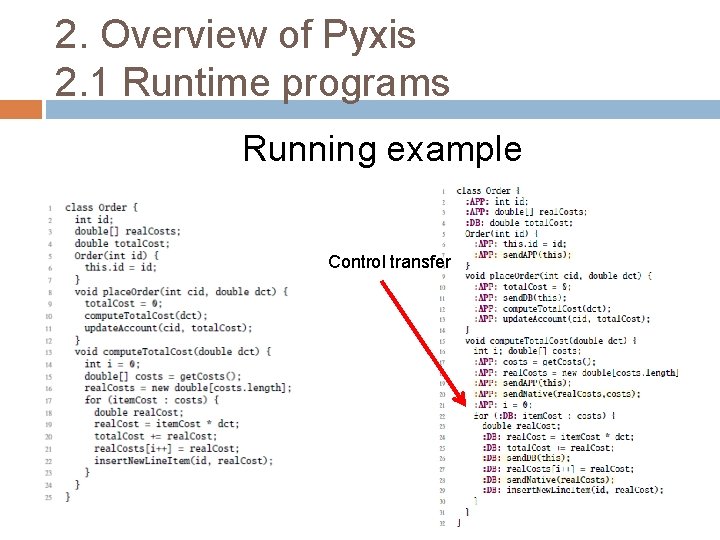

2. Overview of Pyxis: 2.1 Runtime programs
- Two Java runtime programs, one for each server.
- Each statement of the source program is assigned to one partition.
- When two consecutive statements are placed on different sides, a control transfer occurs.
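The control-transfer rule on this slide can be illustrated with a minimal sketch. The class and method names below are hypothetical and not part of Pyxis; the sketch only counts how often an executed sequence of statements changes sides, assuming a straight-line trace.

```java
import java.util.List;

/** Hypothetical illustration, not Pyxis code. */
final class ControlTransferSketch {
    /** The two possible placements of a statement. */
    enum Side { APP, DB }

    /**
     * Counts control transfers in an executed sequence of statements:
     * one transfer each time two consecutive statements sit on different sides.
     */
    static int countControlTransfers(List<Side> placement) {
        int transfers = 0;
        for (int i = 1; i < placement.size(); i++) {
            if (placement.get(i) != placement.get(i - 1)) {
                transfers++;
            }
        }
        return transfers;
    }

    public static void main(String[] args) {
        List<Side> trace = List.of(Side.APP, Side.APP, Side.DB, Side.DB, Side.APP);
        System.out.println(countControlTransfers(trace)); // prints 2
    }
}
```

Each counted transfer corresponds to one network message, which is why the partitioner tries to keep tightly coupled statements on the same side.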

2. Overview of Pyxis: 2.1 Runtime programs
Running example: control transfer

2. Overview of Pyxis: 2.1 Runtime programs: management of variables
Two important structures: the stack and the heap.
- Stack: native local variables and array addresses.
- Heap: objects and array values.
The stack is shared by construction, since it travels with each RPC. The heap has to be kept up to date explicitly.

2. Overview of Pyxis: 2.1 Runtime programs: management of variables
Each partition holds two heaps: a local heap and a remote cache.
- Local heap: variables defined in the local partition.
- Remote cache: variables defined in the remote partition.

2. Overview of Pyxis: 2.1 Runtime programs: management of variables
Heaps are synchronized by eager batched updates.
- Modifications to data in the remote cache must be sent at each control transfer.
- Modifications to data in the local heap do not need to be sent if the data is not used in the remote partition.
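A minimal sketch of eager batched updates, under simplifying assumptions: heaps are modeled as plain maps from field names to values, and every name below is hypothetical rather than taken from the Pyxis runtime. Writes to remotely owned data are queued and shipped together at the next control transfer.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical illustration of one partition's heaps, not Pyxis code. */
final class HeapSyncSketch {
    private final Map<String, Object> localHeap = new LinkedHashMap<>();
    private final Map<String, Object> remoteCache = new LinkedHashMap<>();
    // Modifications to remotely owned fields that have not been sent yet.
    private final Map<String, Object> pending = new LinkedHashMap<>();

    /** A write to a locally owned field stays local in this sketch. */
    void writeLocal(String field, Object value) {
        localHeap.put(field, value);
    }

    /** A write to a remotely owned field updates the cache and is queued. */
    void writeRemote(String field, Object value) {
        remoteCache.put(field, value);
        pending.put(field, value); // later writes overwrite earlier ones
    }

    /** At a control transfer, all queued modifications leave in one batch. */
    Map<String, Object> onControlTransfer() {
        Map<String, Object> batch = new LinkedHashMap<>(pending);
        pending.clear();
        return batch;
    }
}
```

Batching means that two writes to the same field between control transfers cost one entry in one message instead of two round trips.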

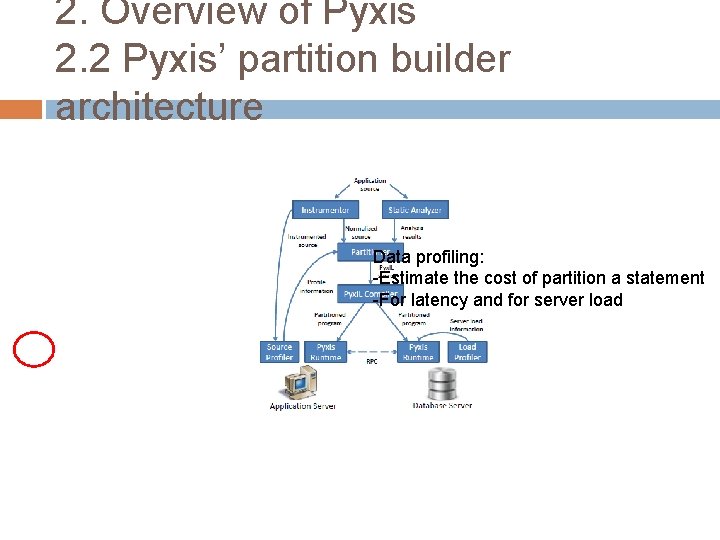

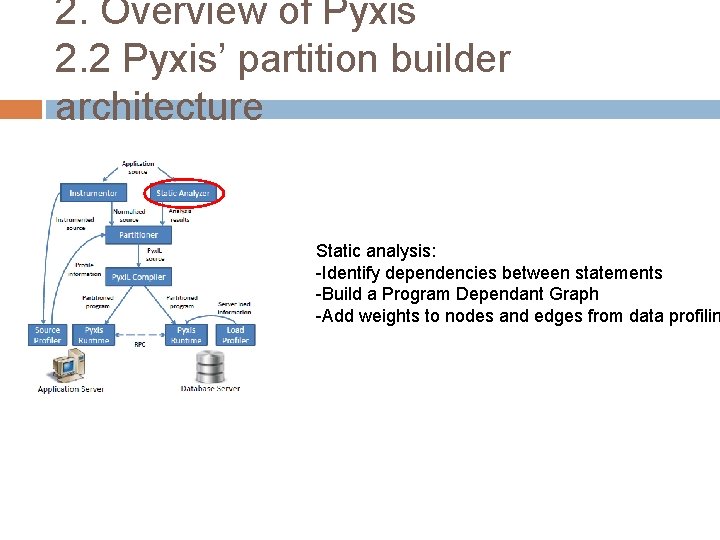

2. Overview of Pyxis: 2.2 Architecture of the Pyxis partition builder
Data profiling:
- Estimates the cost of placing each statement on a partition.
- Covers both latency and server load.

2. Overview of Pyxis: 2.2 Architecture of the Pyxis partition builder
Static analysis:
- Identifies the dependencies between statements.
- Builds a program dependence graph (PDG).
- Adds weights to nodes and edges, using the results of data profiling.

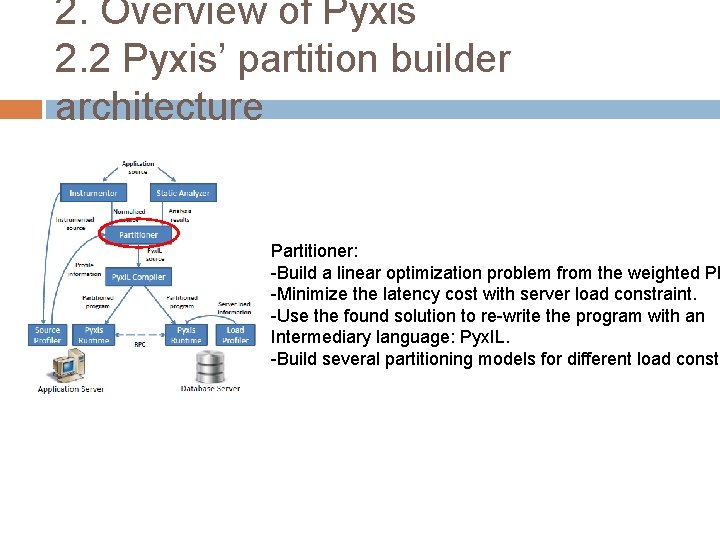

2. Overview of Pyxis: 2.2 Architecture of the Pyxis partition builder
Partitioner:
- Builds a linear optimization problem from the weighted PDG.
- Minimizes the latency cost subject to a server load constraint.
- Uses the solution to rewrite the program in an intermediate language, PyxIL.
- Builds several partitionings for different load constraints.

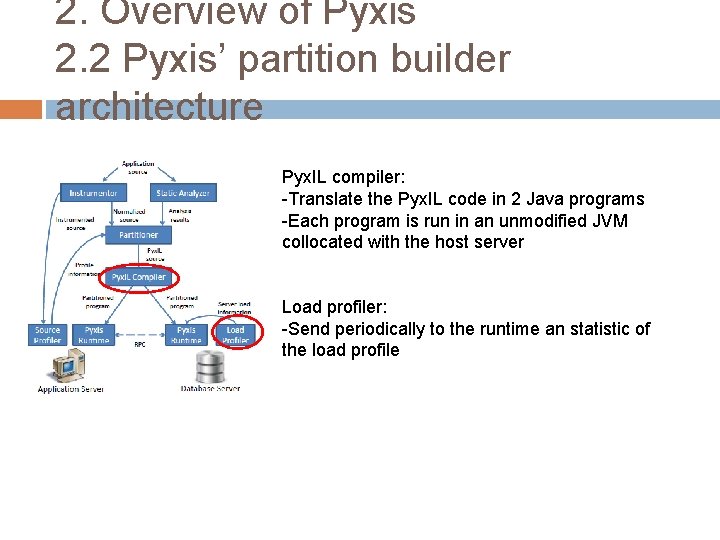

2. Overview of Pyxis: 2.2 Architecture of the Pyxis partition builder
PyxIL compiler:
- Translates the PyxIL code into two Java programs.
- Each program runs in an unmodified JVM colocated with its host server.
Load profiler:
- Periodically sends load statistics to the runtime.

3.1 Partition design: 3.1.1 Profile data
Goal: estimate the latency cost and the server load of each statement.
The application is executed to collect:
- the number of times each statement is executed;
- the average size of the assigned objects.
This information is used to build the weight model of the dependency graph.
This step has to be rerun each time the application is updated.

3.1 Partition design: 3.1.2 Partition graph
Goal: determine the dependencies between statements.
Two types of dependencies:
- Data dependencies: each assignment statement is linked to the statements that observe the assigned value.
- Control dependencies: each statement whose result influences whether other statements execute (conditions, loops) is linked to each of those statements.
Conservative analysis: some of the identified dependencies may not be necessary, so the resulting model is not necessarily optimal.

3.1 Partition design: 3.1.2 Partition graph
Kinds of edges:
- Control edge: a control dependency between two statements.
- Data edge: a data dependency between the statement that defines a variable and a statement that uses it.
- Update edge: a data dependency between the statement that defines a variable and a statement that sets it.

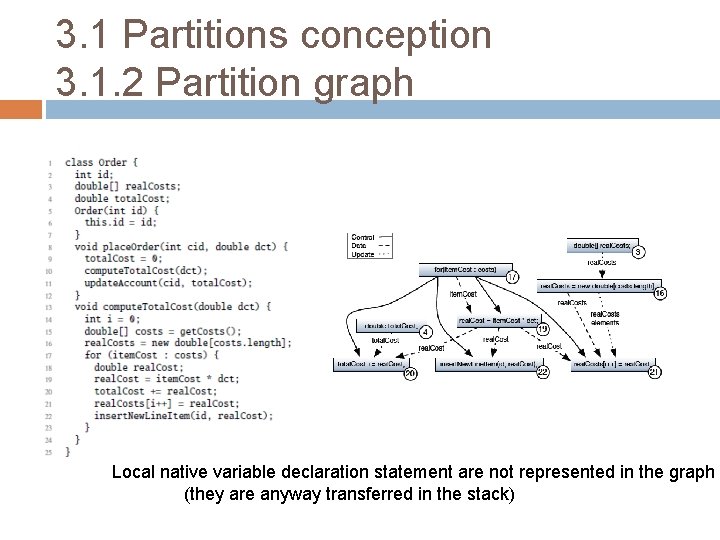

3.1 Partition design: 3.1.2 Partition graph
Declarations of local native variables are not represented in the graph, since these variables are transferred with the stack anyway.

3.1 Partition design: 3.1.2 Partition graph: weights
Nodes and edges are weighted with the information from the data profile.
- The weights of statement nodes determine the server load.
- The weights of dependency edges determine the latency.

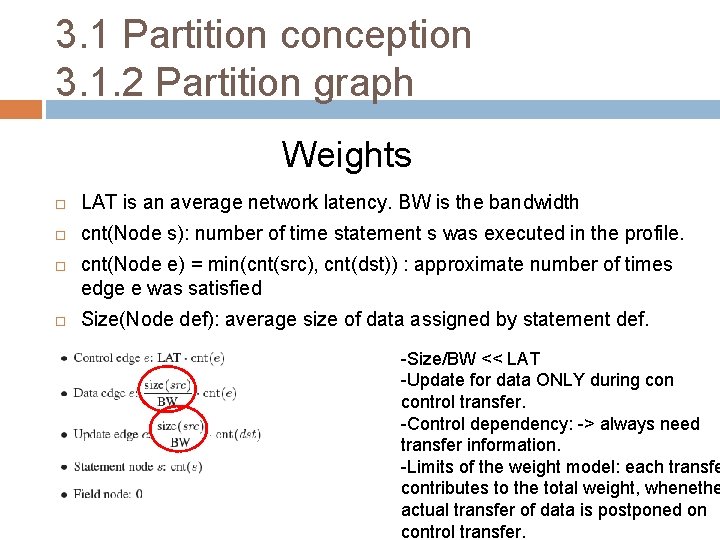

3.1 Partition design: 3.1.2 Partition graph: weights
- LAT: average network latency.
- BW: bandwidth.
- cnt(s): number of times statement s was executed in the profile.
- cnt(e) = min(cnt(src), cnt(dst)): approximate number of times edge e was satisfied.
- size(def): average size of the data assigned by statement def.
Remarks:
- size/BW << LAT.
- Data updates are sent ONLY during control transfers.
- A control dependency always requires a transfer of information.
- Limit of the weight model: every transfer contributes to the total weight, whereas the actual data transfer is postponed until the next control transfer.
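The quantities above can be combined as in the following sketch. Only cnt(e) = min(cnt(src), cnt(dst)) is taken from the slide text. The two weight formulas (LAT times cnt(e) for a control edge, size/BW times cnt(e) for a data edge) are an assumption about what the weight table in the figure contains, and all names are hypothetical.

```java
/** Hypothetical weight model sketch, not Pyxis code. */
final class WeightSketch {
    /** cnt(e) = min(cnt(src), cnt(dst)), as stated on the slide. */
    static long edgeCount(long cntSrc, long cntDst) {
        return Math.min(cntSrc, cntDst);
    }

    /** ASSUMED form: a cut control edge costs one latency per traversal. */
    static double controlEdgeWeight(double latMs, long cntSrc, long cntDst) {
        return latMs * edgeCount(cntSrc, cntDst);
    }

    /** ASSUMED form: a cut data edge costs size/BW per traversal. */
    static double dataEdgeWeight(double sizeBytes, double bwBytesPerMs,
                                 long cntSrc, long cntDst) {
        return sizeBytes / bwBytesPerMs * edgeCount(cntSrc, cntDst);
    }

    public static void main(String[] args) {
        // Source statement ran 100 times, destination 40 times: cnt(e) = 40.
        System.out.println(edgeCount(100, 40));
        // 2 ms latency against 500 bytes over 125000 bytes/ms.
        System.out.println(controlEdgeWeight(2.0, 100, 40));
        System.out.println(dataEdgeWeight(500.0, 125_000.0, 100, 40));
    }
}
```

With these illustrative numbers the control edge weighs far more than the data edge, which matches the remark that size/BW << LAT.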

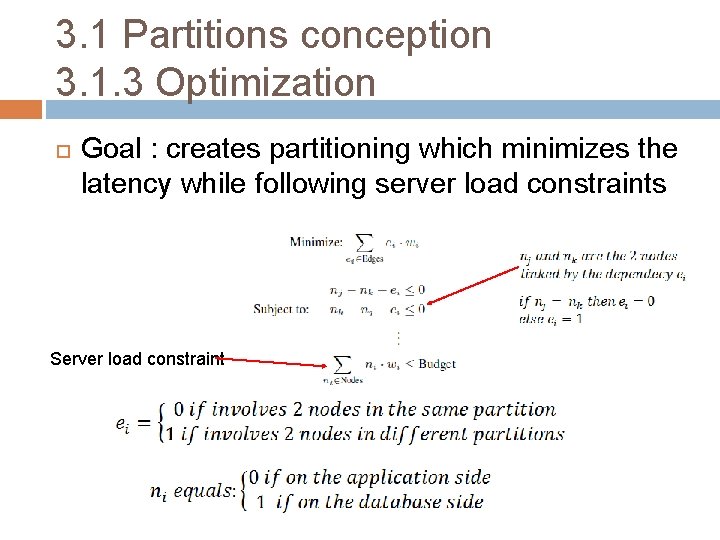

3.1 Partition design: 3.1.3 Optimization
Goal: create a partitioning that minimizes the latency while satisfying the server load constraint.
Server load constraint

3.1 Partition design: 3.1.3 Optimization: additional constraints
Some statements must be placed on a specific server. Examples:
- All JDBC API calls have to be on the same partition (JDBC drivers have unserializable native state).
- Code that prints to the user's console must run on the application server.
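To make the optimization concrete, here is a toy sketch that enumerates every placement instead of solving a linear program as Pyxis does. Exhaustive search is swapped in purely for illustration and only works for a handful of statements. All names, and the encoding of placements as bitmasks, are assumptions.

```java
/** Hypothetical brute-force partitioner, not the Pyxis solver. */
final class PartitionSketch {
    /**
     * Bit i of a placement mask is 1 when statement i runs on the database server.
     * edges[k] = {src, dst}; edgeWeight[k] is the latency paid if that edge is cut.
     * load[i] is the server load added when statement i runs on the database server.
     * pinnedDb / pinnedApp are masks of statements forced onto one side.
     * Returns the cheapest feasible mask, or -1 if no placement fits the budget.
     */
    static int bestPlacement(int n, int[][] edges, double[] edgeWeight,
                             double[] load, double budget,
                             int pinnedDb, int pinnedApp) {
        int best = -1;
        double bestCost = Double.POSITIVE_INFINITY;
        for (int mask = 0; mask < (1 << n); mask++) {
            boolean pinsOk = (mask & pinnedDb) == pinnedDb && (mask & pinnedApp) == 0;
            if (!pinsOk) continue;
            double dbLoad = 0.0;
            for (int i = 0; i < n; i++) {
                if (((mask >> i) & 1) == 1) dbLoad += load[i];
            }
            if (dbLoad > budget) continue; // violates the server load constraint
            double cost = 0.0;
            for (int k = 0; k < edges.length; k++) {
                boolean srcOnDb = ((mask >> edges[k][0]) & 1) == 1;
                boolean dstOnDb = ((mask >> edges[k][1]) & 1) == 1;
                if (srcOnDb != dstOnDb) cost += edgeWeight[k]; // edge is cut
            }
            if (cost < bestCost) {
                bestCost = cost;
                best = mask;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Statement 0 prints to the console (pinned to APP), statement 2 uses JDBC (pinned to DB).
        int[][] edges = {{0, 1}, {1, 2}};
        double[] weights = {1.0, 10.0};
        double[] load = {1.0, 5.0, 1.0};
        System.out.println(bestPlacement(3, edges, weights, load, 10.0, 0b100, 0b001)); // prints 6
        System.out.println(bestPlacement(3, edges, weights, load, 3.0, 0b100, 0b001));  // prints 4
    }
}
```

With a budget of 10, statement 1 moves to the database next to the JDBC call (mask 6) and only the cheap edge is cut. With a budget of 3 it has to stay on the application server (mask 4), at a higher latency cost.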

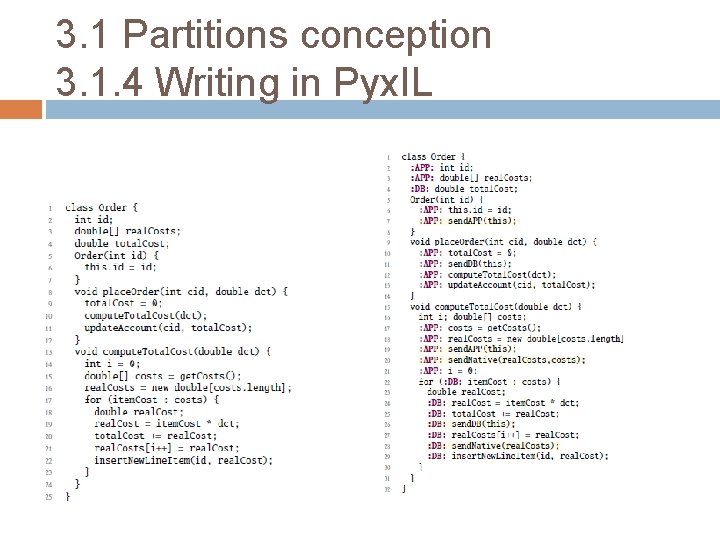

3.1 Partition design: 3.1.4 Writing in PyxIL
- Each statement is assigned a label: APP or DB… about local native objects.
- The heap is synchronized each time a dependency edge is cut, but the synchronization is always postponed until the next control transfer.
- sendAPP(Obj o) and sendDB(Obj o): aggregate the modifications to the locally placed attributes of the object passed as a parameter, and send them with the next control transfer.
- sendNative(native n): used to transfer array values.

3.1 Partition design: 3.1.4 Writing in PyxIL

3.1 Partition design: 3.1.4 Writing in PyxIL: eager versus lazy synchronization
- Eager synchronization: send the modification each time a dependency is cut.
- Lazy synchronization: send the modification only when the dependent statement asks for it.
- Lazy synchronization is useful if the dependent statement is unlikely to execute.
- Pyxis can handle lazy synchronization, but cannot yet mix the two strategies => future work.

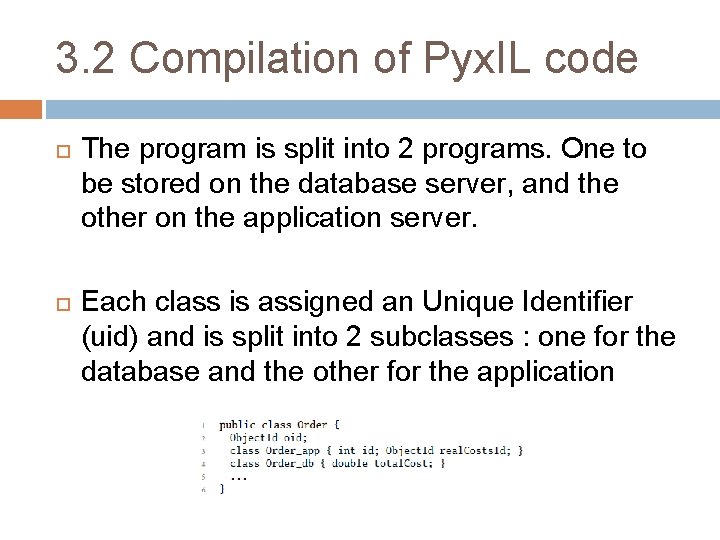

3.2 Compilation of PyxIL code
- The program is split into two programs: one stored on the database server and the other on the application server.
- Each class is assigned a unique identifier (uid) and is split into two subclasses: one for the database and the other for the application.

3.2 Compilation of PyxIL code
Each method of the program is split into execution blocks. Execution blocks are straight-line code fragments. A block ends:
- when another method from the original source code is called;
- when a control transfer occurs;
- when a loop or a condition is reached.

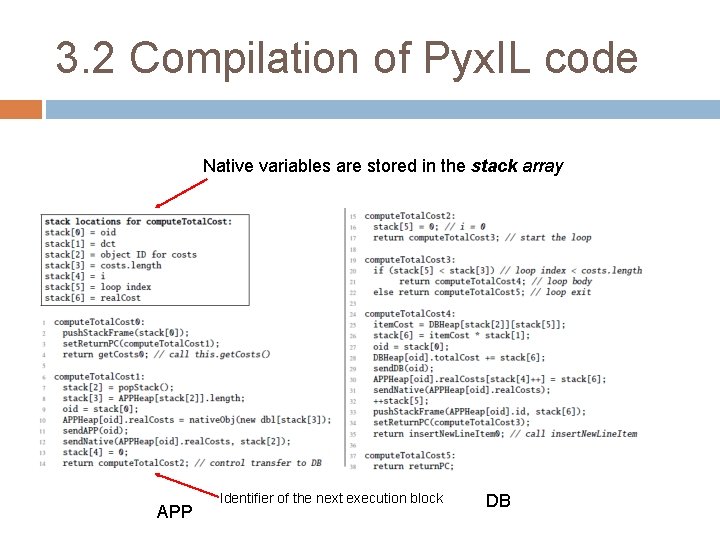

3.2 Compilation of PyxIL code
Native variables are stored in the stack array.
Figure labels: APP, identifier of the next execution block, DB.

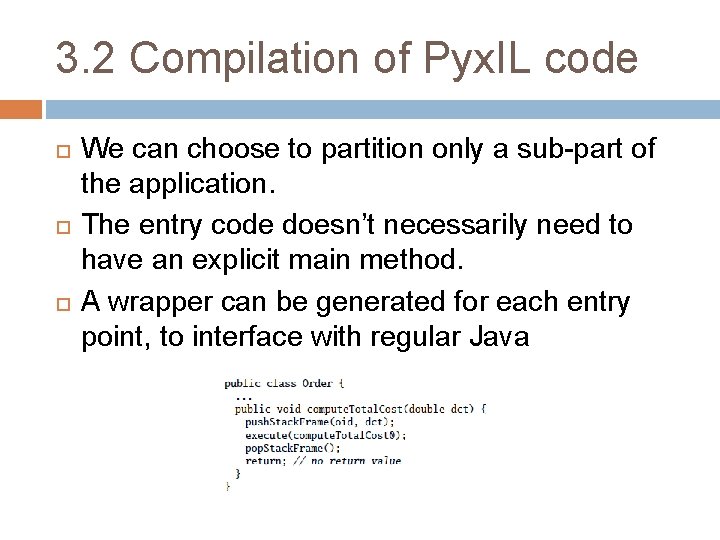

3.2 Compilation of PyxIL code
- We can choose to partition only part of the application.
- The entry code does not need an explicit main method.
- A wrapper can be generated for each entry point to interface with regular Java code.

3.3 Runtime
- Each execution block is turned into a Java class with a call method.
- Each call method contains the program logic of its block.
- When a call method reaches a control transfer, the runtime blocks and waits for the control transfer message from the remote host.
- Pyxis does not allow multithreading inside the partitioned code.
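The block-based execution model can be sketched as follows, with several simplifications: both sides run in one process, so no control transfer message is exchanged, blocks are lambdas rather than generated classes, and the stack is a plain long array. The interface and method names are hypothetical, not the generated Pyxis code.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical single-process illustration of execution blocks, not Pyxis code. */
final class BlockRuntimeSketch {
    /** A block runs straight-line logic on the stack and names the next block (-1 = done). */
    interface ExecutionBlock {
        int call(long[] stack);
    }

    /** Dispatch loop: keeps calling blocks until one returns -1. */
    static long run(Map<Integer, ExecutionBlock> blocks, int entry, long[] stack) {
        int next = entry;
        while (next >= 0) {
            next = blocks.get(next).call(stack);
        }
        return stack[0];
    }

    /** sum = 1 + ... + n, split at the loop: stack[0] = sum, stack[1] = i, stack[2] = n. */
    static Map<Integer, ExecutionBlock> sumBlocks() {
        Map<Integer, ExecutionBlock> blocks = new HashMap<>();
        blocks.put(0, s -> { s[0] = 0; s[1] = 1; return 1; });   // initialization
        blocks.put(1, s -> s[1] <= s[2] ? 2 : -1);               // loop condition
        blocks.put(2, s -> { s[0] += s[1]; s[1]++; return 1; }); // loop body
        return blocks;
    }

    public static void main(String[] args) {
        System.out.println(run(sumBlocks(), 0, new long[] {0, 0, 4})); // prints 10
    }
}
```

In a partitioned deployment, a block identifier that belongs to the other host would be sent over the network together with the stack, instead of being looked up locally.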

3.3 Runtime: dynamic partition switching
- The programmer specifies, for a set of server load thresholds, which partitioning to switch to.
- The server periodically measures its current load S_t.
- To avoid abrupt switches, the server sends the runtime a weighted moving average L_t of the load, with a weight alpha between 0 and 1.
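A minimal sketch of the smoothing and threshold logic. The update rule L_t = alpha * L_{t-1} + (1 - alpha) * S_t is the usual exponentially weighted moving average and is assumed here, since the formula itself did not survive on the slide. The threshold selection rule and all names are hypothetical as well.

```java
/** Hypothetical load smoothing and partitioning selection, not Pyxis code. */
final class LoadSwitchSketch {
    private final double alpha;
    private double average;

    LoadSwitchSketch(double alpha, double initialLoad) {
        if (alpha < 0.0 || alpha > 1.0) {
            throw new IllegalArgumentException("alpha must be between 0 and 1");
        }
        this.alpha = alpha;
        this.average = initialLoad;
    }

    /** ASSUMED update rule: L_t = alpha * L_{t-1} + (1 - alpha) * S_t. */
    double update(double sample) {
        average = alpha * average + (1.0 - alpha) * sample;
        return average;
    }

    /**
     * Picks a partitioning index from ascending load thresholds:
     * index 0 below the first threshold, index 1 above it, and so on.
     */
    static int choosePartitioning(double load, double[] ascendingThresholds) {
        int index = 0;
        for (double threshold : ascendingThresholds) {
            if (load > threshold) index++;
        }
        return index;
    }

    public static void main(String[] args) {
        LoadSwitchSketch smoother = new LoadSwitchSketch(0.5, 0.0);
        double[] thresholds = {40.0, 90.0};
        System.out.println(choosePartitioning(smoother.update(100.0), thresholds)); // load 50 -> prints 1
        System.out.println(choosePartitioning(smoother.update(100.0), thresholds)); // load 75 -> prints 1
    }
}
```

A larger alpha gives more weight to history, so a short CPU spike moves the average less and is less likely to trigger a switch.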

4. Experiments: 4.1 Overview of the experiments
The experiments use the TPC-C and TPC-W benchmarks. Each benchmark has three versions:
- JDBC: the program runs entirely on the application server and uses JDBC to connect to the database.
- Manual: the program is manually split into two partitions. The application server partition is only an interface that calls the methods stored on the database server.
- Pyxis: two partitions deployed for different values of the CPU budget.

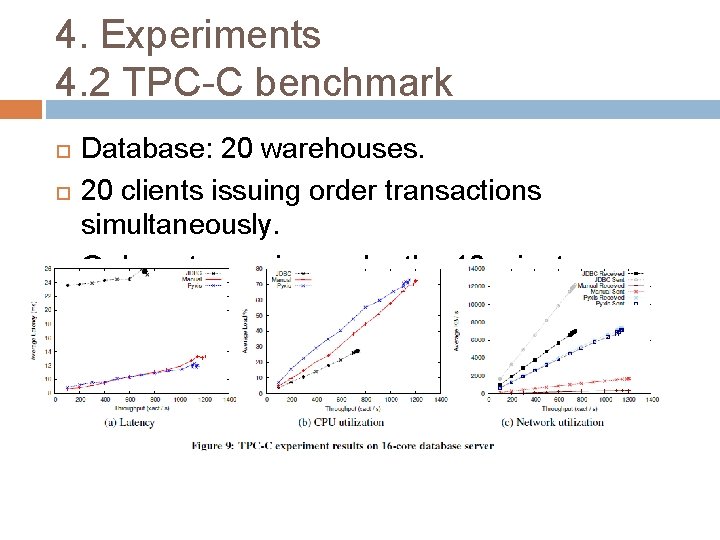

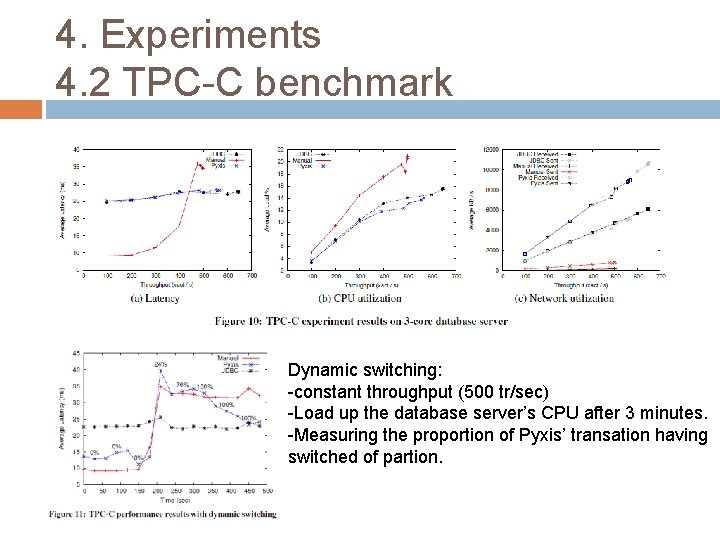

4. Experiments: 4.2 TPC-C benchmark
- Database: 20 warehouses.
- 20 clients issuing order transactions simultaneously.
- The order rate varies over the 10 minutes.

4. Experiments: 4.2 TPC-C benchmark: dynamic switching
- Constant throughput (500 transactions/s).
- The database server's CPU is loaded up after 3 minutes.
- Measures the proportion of Pyxis transactions that switched partitioning.

4. Experiments: 4.3 TPC-W benchmark
- TPC-W is more complex than TPC-C and includes more operations unrelated to the database.
- It is useful for measuring the overhead of PyxIL due to the cost of heap synchronization operations.
- First experiment: database and application server on the same machine => 6 times overhead compared to the plain Java implementation.
- Second experiment: similar to the TPC-C ones.

Conclusion
Pyxis is a system that automatically partitions database applications in order to reduce the latency caused by round trips to the database server.
Benefits:
- Users do not need to convert their code manually into stored procedures.
- Server load constraints are identified dynamically.
Results from the experiments: on TPC-C, Pyxis reduces latency by 3x and handles 1.7x more throughput than the JDBC-based implementation.
