Automatic Fluency Assessment
Suma Bhat

The Problem
• Language fluency
  – A component of oral proficiency
  – Indicative of the effort of speech production
  – Indicates the effectiveness of speech
• Language proficiency testing
  – Automated methods of language assessment
• Of fundamental importance
  – Automatic assessment of language fluency

Why is it hard?
• Fluency is a subjective quantity
• Measuring fluency requires
  – Choosing the right quantifiers
  – A means of measuring those quantifiers
• Automatic scores should
  – Correlate well with human assessment
  – Be interpretable

Automatic Speech Scoring
• Automatic scoring of predictable speech
  – Factual information in short answers (Leacock & Chodorow, 2003)
  – Read speech
    • PhonePass (Bernstein, 1999)
• Automatic scoring of unpredictable speech
  – Spontaneous speech
    • SpeechRater (Zechner, 2009)

State of the art
• SpeechRater from Educational Testing Service (2008, 2009)
  – Uses ASR for automatic assessment of English speaking proficiency
  – In use since 2006 as an online practice test for takers of the TOEFL Internet-based test (iBT)

Proficiency assessment in SpeechRater
• Tests aspects of language competence
  – Delivery (fluency, pronunciation)
  – Language use (vocabulary and grammar)
  – Topical development (content, coherence and organization)
• Current system
  – Scores fluency and language use
• Overall proficiency score
  – A combination of measures of fluency and language use
  – Multiple regression and CART (classification and regression tree) scoring modules
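Multiple regression scoring models the overall score as an intercept plus a weighted sum of feature measures. The weights are fit by least squares against human scores. The numpy sketch below is a generic illustration of that idea and should not be read as SpeechRater's model, whose features and weights are not given here. The function names and toy data are hypothetical, and the CART alternative is not shown.

```python
import numpy as np


def fit_multiple_regression(X, y):
    """Least-squares coefficients [b0, b1, ..., bk] for y ~ b0 + X @ b."""
    X = np.asarray(X, dtype=float)
    design = np.column_stack([np.ones(len(X)), X])  # intercept column first
    coef, _residuals, _rank, _sv = np.linalg.lstsq(
        design, np.asarray(y, dtype=float), rcond=None)
    return coef


def regression_score(coef, features):
    """Apply fitted coefficients to one feature vector or a matrix of them."""
    return coef[0] + np.asarray(features, dtype=float) @ coef[1:]


# Hypothetical data: each row is [fluency measure, language-use measure]
# for one response; y holds the matching human proficiency scores.
X = [[0.8, 0.6], [0.5, 0.4], [0.9, 0.9], [0.3, 0.5], [0.6, 0.7]]
y = [3.0, 2.0, 4.0, 1.5, 3.0]
coef = fit_multiple_regression(X, y)
print(regression_score(coef, [0.7, 0.6]))
```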

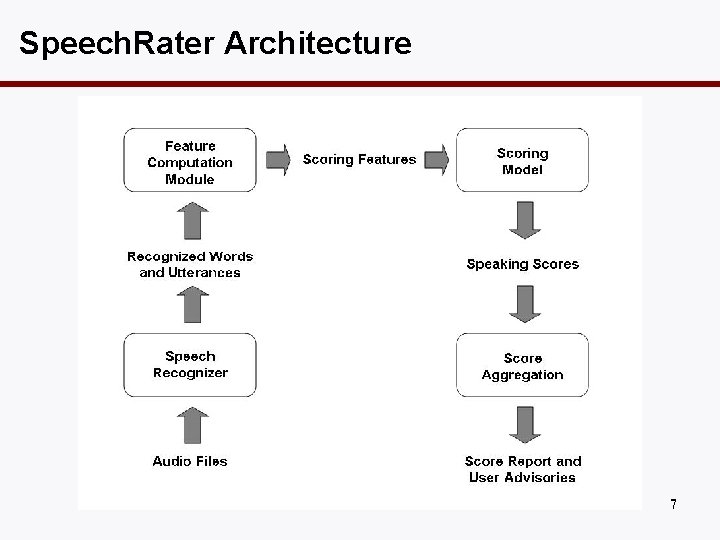

SpeechRater Architecture

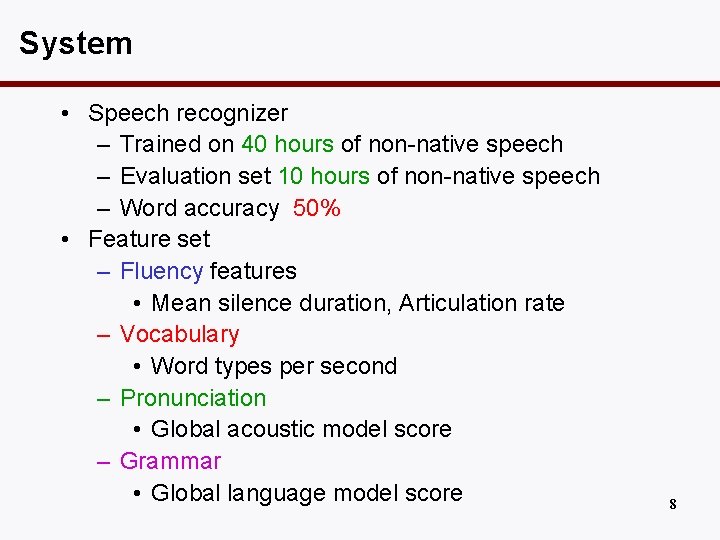

System
• Speech recognizer
  – Trained on 40 hours of non-native speech
  – Evaluation set: 10 hours of non-native speech
  – Word accuracy: 50%
• Feature set
  – Fluency features
    • Mean silence duration, articulation rate
  – Vocabulary
    • Word types per second
  – Pronunciation
    • Global acoustic model score
  – Grammar
    • Global language model score

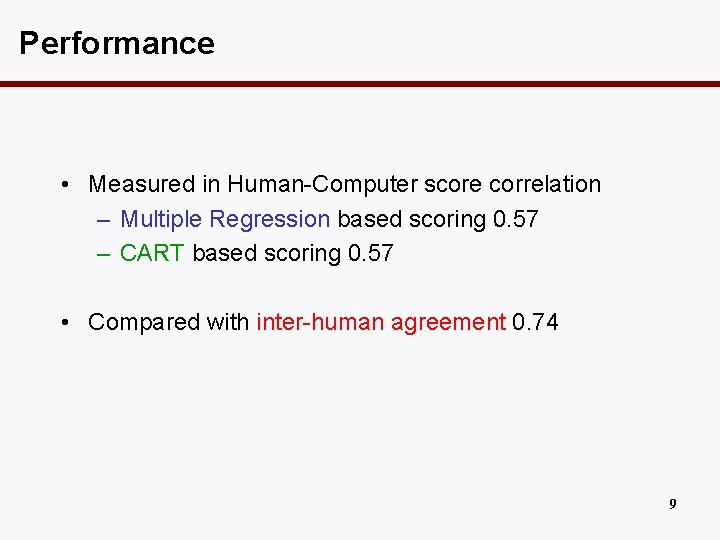

Performance
• Measured as human-computer score correlation
  – Multiple-regression-based scoring: 0.57
  – CART-based scoring: 0.57
• Compared with inter-human agreement: 0.74
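The correlation coefficient is not named here. This sketch assumes Pearson's r, the usual choice for agreement between paired human and machine scores, and computes it from scratch. The function name and the toy score lists are illustrative and are not SpeechRater data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances; the common 1/n factors cancel in the ratio.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical paired scores for five responses (illustration only).
human = [2, 3, 3, 4, 1]
machine = [2.4, 2.9, 3.6, 3.8, 1.7]
print(round(pearson_r(human, machine), 2))
```

Pearson's r is unchanged by linear rescaling of either score. A machine score on a different scale from the human ratings can therefore still correlate perfectly with them.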

Requirements: language-specific resources
• Superior-quality audio recordings for ASR training
• Tens of hours of language-specific speech
• Tens of hours of transcription

Is this the end?
• What if language-specific resources are scarce?
  – Superior-quality audio recordings for ASR training
  – Hours of language-specific speech
  – Hours of transcription
• The tested language is a minority language
• Consequence: ASR performance is affected, and alternative methods are sought

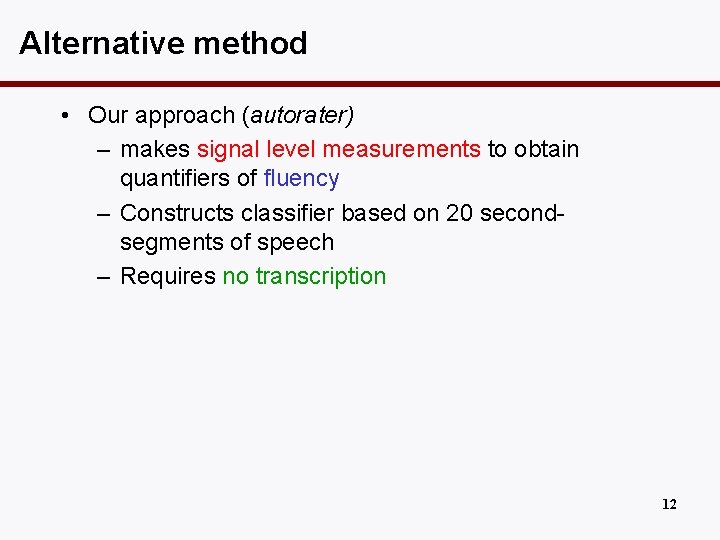

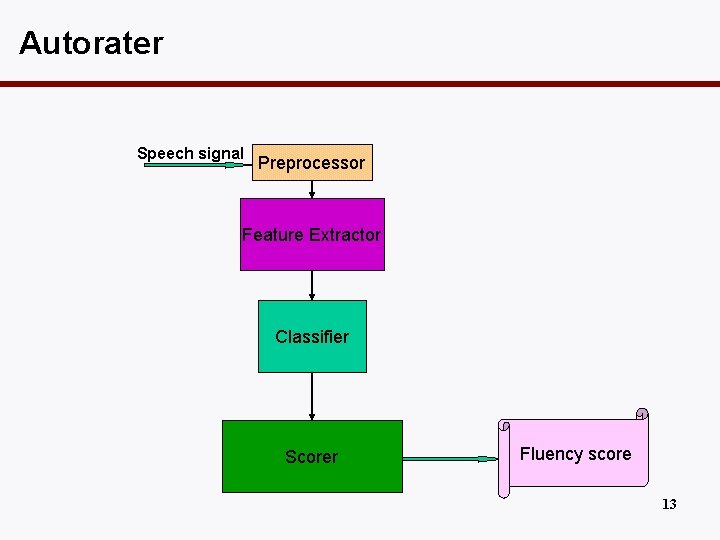

Alternative method
• Our approach (autorater)
  – Makes signal-level measurements to obtain quantifiers of fluency
  – Constructs a classifier based on 20-second segments of speech
  – Requires no transcription
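The autorater works on 20-second segments of speech. The sketch below shows one minimal way to cut a mono signal into such segments. How the shorter trailing chunk is handled is not specified here, so dropping it by default is an assumption. The function and parameter names are hypothetical.

```python
import numpy as np


def split_into_segments(signal, sample_rate, seg_seconds=20.0, keep_remainder=False):
    """Split a 1-D mono signal into consecutive fixed-length segments.

    Assumption: the final, shorter chunk is dropped unless keep_remainder
    is True, so by default every segment has the same duration.
    """
    seg_len = int(round(seg_seconds * sample_rate))
    n_full = len(signal) // seg_len
    segments = [signal[i * seg_len:(i + 1) * seg_len] for i in range(n_full)]
    remainder = signal[n_full * seg_len:]
    if keep_remainder and len(remainder) > 0:
        segments.append(remainder)
    return segments


# 50 s of (silent) audio at 16 kHz: two full 20 s segments plus a 10 s remainder.
audio = np.zeros(50 * 16000)
print(len(split_into_segments(audio, 16000)))                       # 2
print(len(split_into_segments(audio, 16000, keep_remainder=True)))  # 3
```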

Autorater
Speech signal → Preprocessor → Feature Extractor → Classifier → Scorer → Fluency score

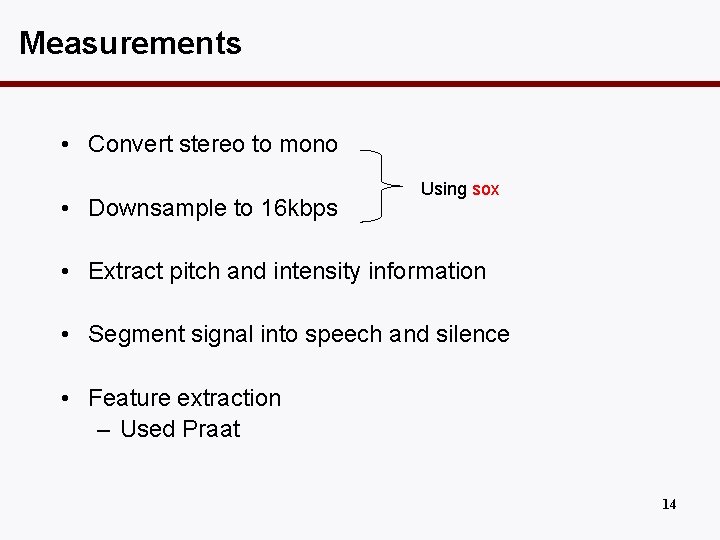

Measurements
• Convert stereo to mono (using sox)
• Downsample to 16 kHz (using sox)
• Extract pitch and intensity information
• Segment the signal into speech and silence
• Feature extraction
  – Using Praat
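With sox, a command such as `sox input.wav -c 1 -r 16000 output.wav` writes a mono, 16 kHz copy of a recording. The speech/silence segmentation itself is done in Praat. The numpy sketch below illustrates the idea behind intensity-based segmentation, but it is a simplified stand-in and not Praat's algorithm. The frame size, the -25 dB threshold relative to the loudest frame and the 0.25 s minimum pause are all assumed values.

```python
import numpy as np


def to_mono(samples):
    """Average the channels of an (n_samples, n_channels) array; pass 1-D through."""
    samples = np.asarray(samples, dtype=float)
    return samples if samples.ndim == 1 else samples.mean(axis=1)


def silent_pauses(signal, sample_rate, frame_sec=0.01,
                  threshold_db=-25.0, min_pause_sec=0.25):
    """Return (start, end) times in seconds of silent pauses.

    A frame is silent when its RMS intensity is more than |threshold_db| dB
    below the loudest frame. Runs of silent frames shorter than
    min_pause_sec are ignored. All three parameters are assumed values.
    """
    signal = np.asarray(signal, dtype=float)
    hop = int(round(frame_sec * sample_rate))
    frame_dur = hop / sample_rate
    n_frames = len(signal) // hop
    if n_frames == 0:
        return []
    frames = signal[:n_frames * hop].reshape(n_frames, hop)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    db = 20 * np.log10(rms + 1e-12)  # small constant avoids log(0)
    silent = db < db.max() + threshold_db

    pauses, start = [], None
    for i, is_silent in enumerate(silent):
        if is_silent and start is None:
            start = i  # a silent run begins
        elif not is_silent and start is not None:
            if (i - start) * frame_dur >= min_pause_sec:
                pauses.append((start * frame_dur, i * frame_dur))
            start = None
    # A silent run that lasts until the end of the signal.
    if start is not None and (n_frames - start) * frame_dur >= min_pause_sec:
        pauses.append((start * frame_dur, n_frames * frame_dur))
    return pauses
```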

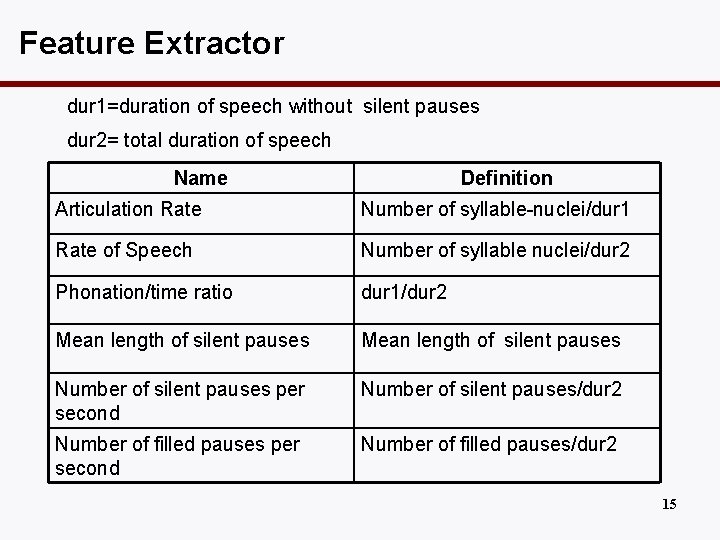

Feature Extractor

dur1 = duration of speech without silent pauses; dur2 = total duration of speech

| Name | Definition |
|---|---|
| Articulation rate | Number of syllable nuclei / dur1 |
| Rate of speech (ROS) | Number of syllable nuclei / dur2 |
| Phonation/time ratio (PTR) | dur1 / dur2 |
| Mean length of silent pauses (MLS) | (dur2 - dur1) / number of silent pauses |
| Number of silent pauses per second (SPS) | Number of silent pauses / dur2 |
| Number of filled pauses per second | Number of filled pauses / dur2 |
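The feature definitions reduce to arithmetic on a few counts and two durations. This sketch assumes the counts of syllable nuclei, silent pauses and filled pauses have already been obtained, for instance with Praat. The class and key names are hypothetical. MLS is taken as total silent-pause time (dur2 - dur1) divided by the number of silent pauses. Returning 0 for MLS when there are no pauses is a further assumption.

```python
from dataclasses import dataclass


@dataclass
class SegmentCounts:
    """Raw measurements for one speech segment (hypothetical names)."""
    n_syllable_nuclei: int
    n_silent_pauses: int
    n_filled_pauses: int
    dur1: float  # duration of speech without silent pauses, in seconds
    dur2: float  # total duration of speech, in seconds


def fluency_features(c: SegmentCounts) -> dict:
    """Compute the fluency quantifiers from counts and durations."""
    silence = c.dur2 - c.dur1  # total duration of silent pauses
    return {
        "articulation_rate": c.n_syllable_nuclei / c.dur1,
        "ROS": c.n_syllable_nuclei / c.dur2,
        "PTR": c.dur1 / c.dur2,
        # Assumption: MLS is 0.0 when the segment has no silent pauses.
        "MLS": silence / c.n_silent_pauses if c.n_silent_pauses else 0.0,
        "SPS": c.n_silent_pauses / c.dur2,
        "filled_pauses_per_sec": c.n_filled_pauses / c.dur2,
    }


# A 20 s segment with 16 s of phonation, 60 syllable nuclei,
# 5 silent pauses and 2 filled pauses (made-up numbers).
features = fluency_features(SegmentCounts(
    n_syllable_nuclei=60, n_silent_pauses=5, n_filled_pauses=2,
    dur1=16.0, dur2=20.0))
print(features)
```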

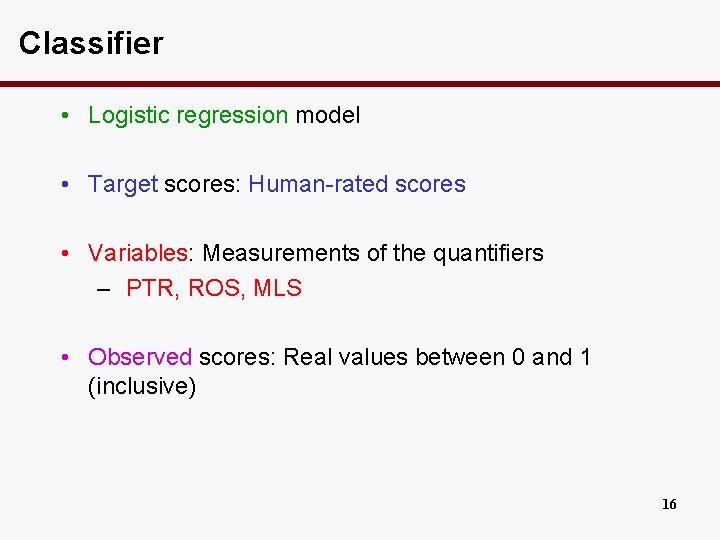

Classifier
• Logistic regression model
• Target scores: human-rated scores
• Variables: measurements of the quantifiers
  – PTR, ROS, MLS
• Observed scores: real values between 0 and 1 (inclusive)
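The observed scores are real values in [0, 1] rather than class labels. One way to fit a logistic regression to such scores is to minimise cross-entropy with fractional targets. The numpy sketch below does this with plain gradient descent. The optimiser, learning rate, iteration count, the rescaling of human ratings to [0, 1] and the toy data are all assumptions, not the original setup.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(X, y, lr=0.5, n_iter=5000):
    """Fit w for p = sigmoid(w[0] + X @ w[1:]) to targets y in [0, 1].

    Minimises mean cross-entropy, which stays well defined when y is
    fractional (e.g. a rescaled human rating) rather than 0/1.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])  # prepend intercept column
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)
        grad = Xb.T @ (p - y) / len(y)  # gradient of mean cross-entropy
        w -= lr * grad
    return w


def predict(w, X):
    """Predicted scores in [0, 1] for rows of X."""
    X = np.asarray(X, dtype=float)
    return sigmoid(w[0] + X @ w[1:])


# Hypothetical training data: columns are PTR, ROS, MLS; targets are
# human ratings rescaled to [0, 1].
X_train = [[0.80, 3.0, 0.50], [0.65, 2.2, 0.90], [0.90, 3.6, 0.30], [0.55, 1.8, 1.20]]
y_train = [0.75, 0.50, 1.00, 0.25]
w = fit_logistic(X_train, y_train)
print(predict(w, [[0.70, 2.5, 0.70]]))
```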

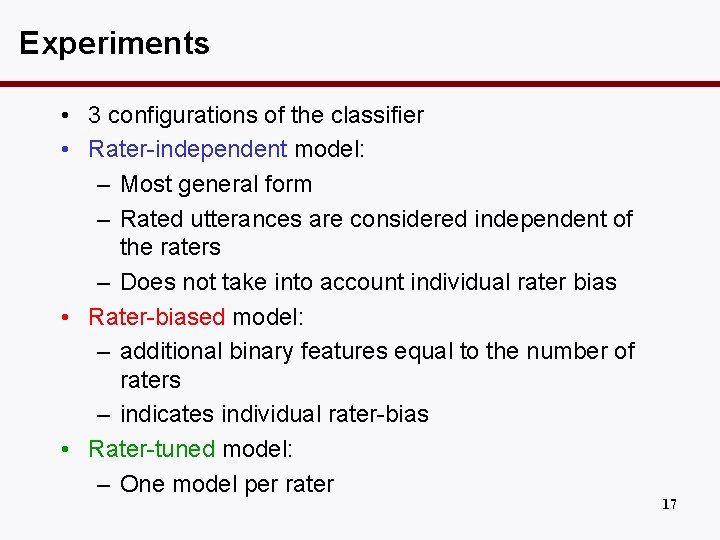

Experiments
• 3 configurations of the classifier
• Rater-independent model
  – Most general form
  – Rated utterances are considered independent of the raters
  – Does not take individual rater bias into account
• Rater-biased model
  – Additional binary features, one per rater
  – Indicate individual rater bias
• Rater-tuned model
  – One model per rater
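The three configurations differ only in how rater identity enters the data. In this sketch, the rater-biased input appends one binary column per rater to the quantifier features. The rater-tuned setup groups rows by rater so that a separate model can be fit for each. The helper names and rater labels are hypothetical.

```python
import numpy as np


def add_rater_indicators(X, rater_ids, raters):
    """Append one binary column per rater (1.0 if that rater scored the row).

    In a rater-biased model the shared weights describe fluency, while each
    indicator's weight can absorb one rater's systematic offset.
    """
    X = np.asarray(X, dtype=float)
    index = {r: j for j, r in enumerate(raters)}
    indicators = np.zeros((X.shape[0], len(raters)))
    for row, rater in enumerate(rater_ids):
        indicators[row, index[rater]] = 1.0
    return np.hstack([X, indicators])


def split_by_rater(rater_ids):
    """Row indices per rater, for fitting one model per rater (rater-tuned)."""
    groups = {}
    for row, rater in enumerate(rater_ids):
        groups.setdefault(rater, []).append(row)
    return groups


# Rows are [PTR, ROS, MLS]; rater C rated nothing in this toy sample.
X = [[0.8, 3.0, 0.5], [0.6, 2.1, 0.9], [0.8, 3.0, 0.5]]
rater_ids = ["A", "B", "B"]
print(add_rater_indicators(X, rater_ids, raters=["A", "B", "C"]).shape)  # (3, 6)
print(split_by_rater(rater_ids))  # {'A': [0], 'B': [1, 2]}
```

If the model also has an intercept, the full set of indicators is collinear with it, because every row contains exactly one 1. Dropping one indicator or regularising the weights is a common remedy. Which, if either, was used is not specified here.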

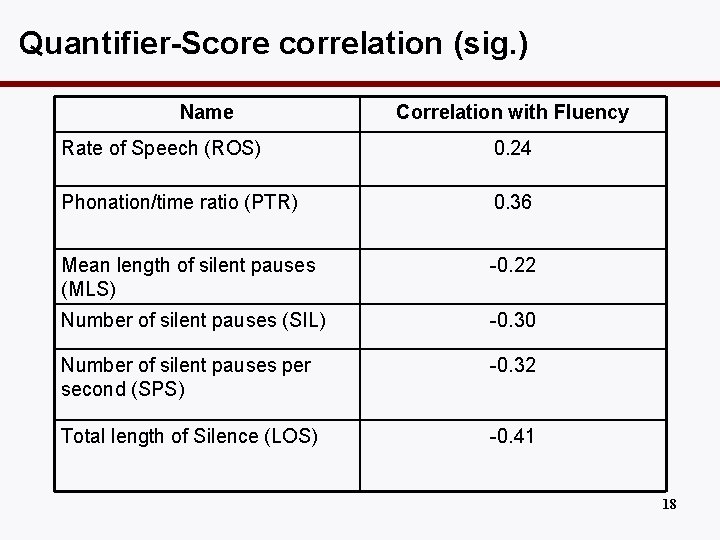

Quantifier-Score correlation (sig.)

| Name | Correlation with fluency |
|---|---|
| Rate of speech (ROS) | 0.24 |
| Phonation/time ratio (PTR) | 0.36 |
| Mean length of silent pauses (MLS) | -0.22 |
| Number of silent pauses (SIL) | -0.30 |
| Number of silent pauses per second (SPS) | -0.32 |
| Total length of silence (LOS) | -0.41 |

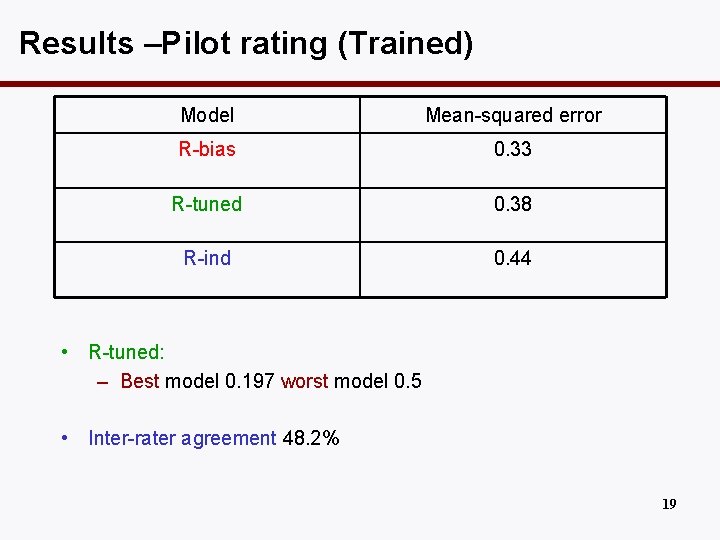

Results: Pilot rating (Trained)

| Model | Mean-squared error |
|---|---|
| R-bias (rater-biased) | 0.33 |
| R-tuned (rater-tuned) | 0.38 |
| R-ind (rater-independent) | 0.44 |

• R-tuned
  – Best model: 0.197; worst model: 0.5
• Inter-rater agreement: 48.2%
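The two evaluation figures can be computed as follows. Mean-squared error compares model outputs with target scores. The 48.2% inter-rater figure is reported without a definition, so this sketch assumes the simplest one: the percentage of items on which two raters give exactly the same score. The function names and toy inputs are illustrative.

```python
def mean_squared_error(targets, predictions):
    """Average squared difference between target and predicted scores."""
    if len(targets) != len(predictions) or not targets:
        raise ValueError("need non-empty sequences of equal length")
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


def percent_agreement(ratings_a, ratings_b):
    """Percentage of items on which two raters gave exactly the same score."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need non-empty sequences of equal length")
    same = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return 100.0 * same / len(ratings_a)


print(mean_squared_error([0.5, 0.75, 1.0], [0.5, 0.5, 0.75]))  # 0.125 / 3
print(percent_agreement([1, 2, 3, 3], [1, 2, 2, 4]))           # 50.0
```

Exact-match agreement does not correct for chance. A chance-corrected statistic such as Cohen's kappa would usually give a lower number for the same ratings.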

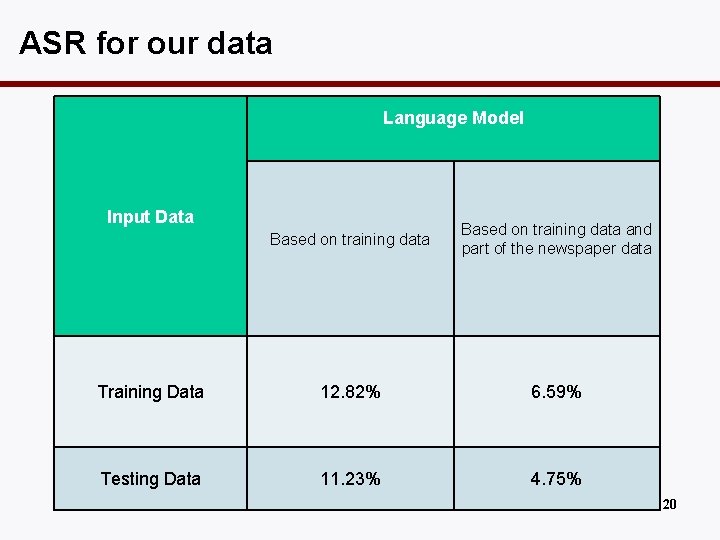

ASR for our data

Language model input data:

| | Based on training data | Based on training data and part of the newspaper data |
|---|---|---|
| Training data | 12.82% | 6.59% |
| Testing data | 11.23% | 4.75% |

Summary
• Quantifiers obtained from low-level acoustic measurements are good indicators of fluency
• Logistic regression models are appropriate for automated scoring of spontaneous speech
• Main contribution
  – An alternative method of automatic fluency assessment
  – Useful for testing in resource-scarce settings
• Main result
  – A rater-biased logistic regression model for scoring fluency
