Automatic Command Queue Scheduling for TaskParallel Workloads in

![Related Work for Task Scheduling Open. MP-like: Core. TSAR [IPDPS 12], Kaleem[PACT 14], Omp. Related Work for Task Scheduling Open. MP-like: Core. TSAR [IPDPS 12], Kaleem[PACT 14], Omp.](https://slidetodoc.com/presentation_image/2f2d68d4d12023514338093b37d6fdb1/image-18.jpg)

![// Initialize the context cl_context_properties prop[]={. . . , CL_CONTEXT_SCHEDULER, CL_CONTEXT_SCHED_AUTO_FIT, 0}; context = // Initialize the context cl_context_properties prop[]={. . . , CL_CONTEXT_SCHEDULER, CL_CONTEXT_SCHED_AUTO_FIT, 0}; context =](https://slidetodoc.com/presentation_image/2f2d68d4d12023514338093b37d6fdb1/image-31.jpg)

- Slides: 42

Automatic Command Queue Scheduling for Task-Parallel Workloads in Open. CL Ashwin M. Aji, Antonio J. Pena, Pavan Balaji and Wu-Chun Feng Virginia Tech and Argonne National Lab.

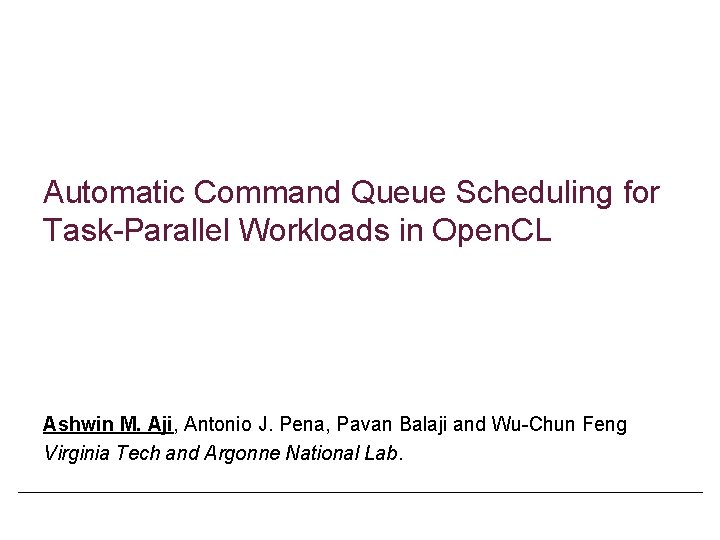

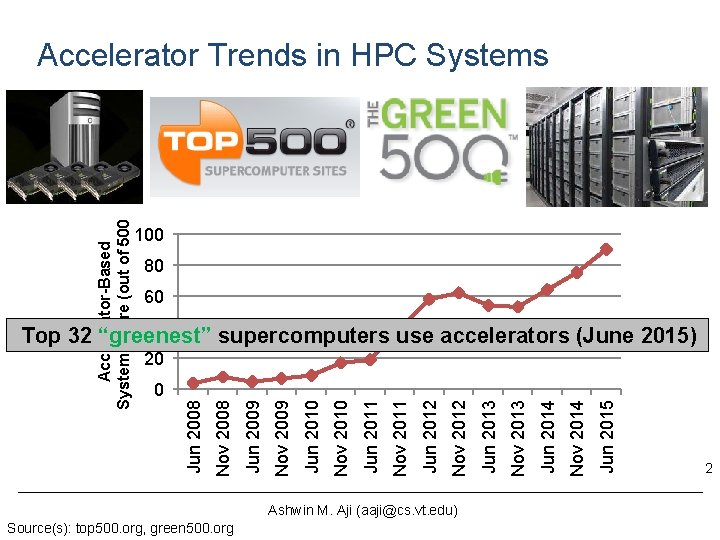

Accelerator-Based System Share (out of 500) Accelerator Trends in HPC Systems 100 80 60 40 Top 32 “greenest” supercomputers use accelerators (June 2015) 20 Ashwin M. Aji (aaji@cs. vt. edu) Source(s): top 500. org, green 500. org Jun 2015 Nov 2014 Jun 2014 Nov 2013 Jun 2013 Nov 2012 Jun 2012 Nov 2011 Jun 2011 Nov 2010 Jun 2010 Nov 2009 Jun 2009 Nov 2008 Jun 2008 0 2

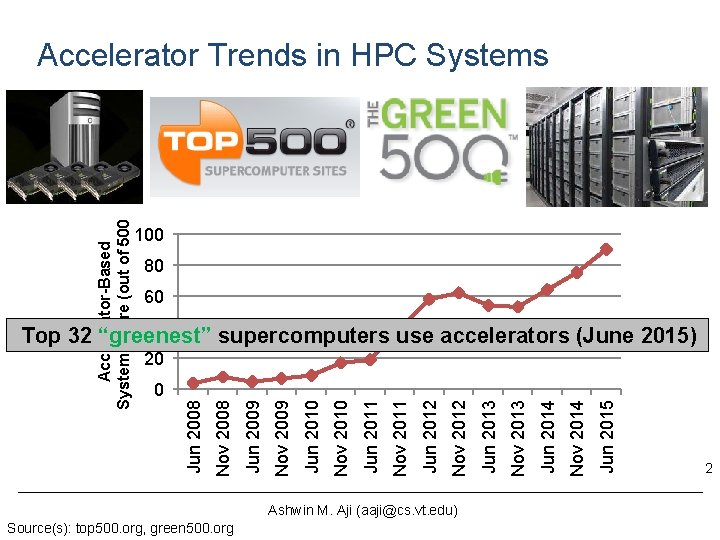

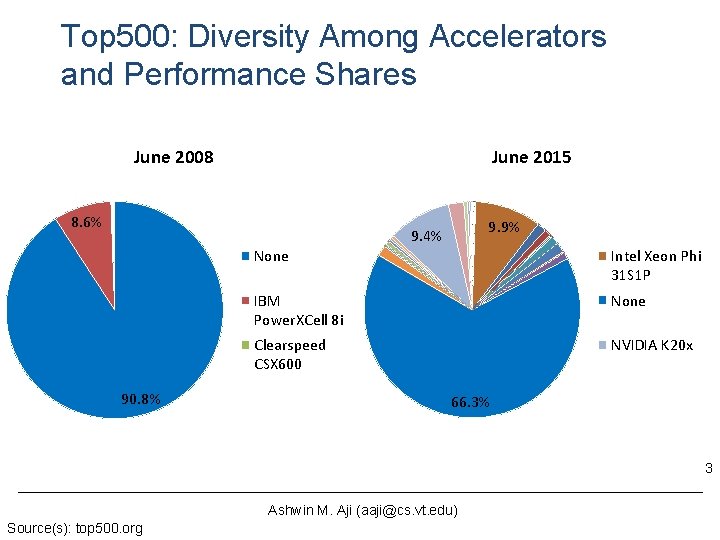

Top 500: Diversity Among Accelerators and Performance Shares June 2008 June 2015 8. 6% 9. 9% 9. 4% 90. 8% None Intel Xeon Phi 31 S 1 P IBM Power. XCell 8 i None Clearspeed CSX 600 NVIDIA K 20 x 66. 3% 3 Ashwin M. Aji (aaji@cs. vt. edu) Source(s): top 500. org

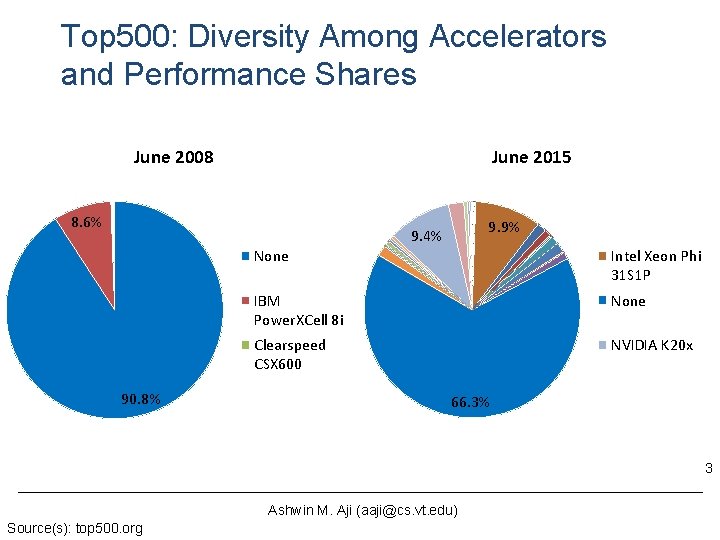

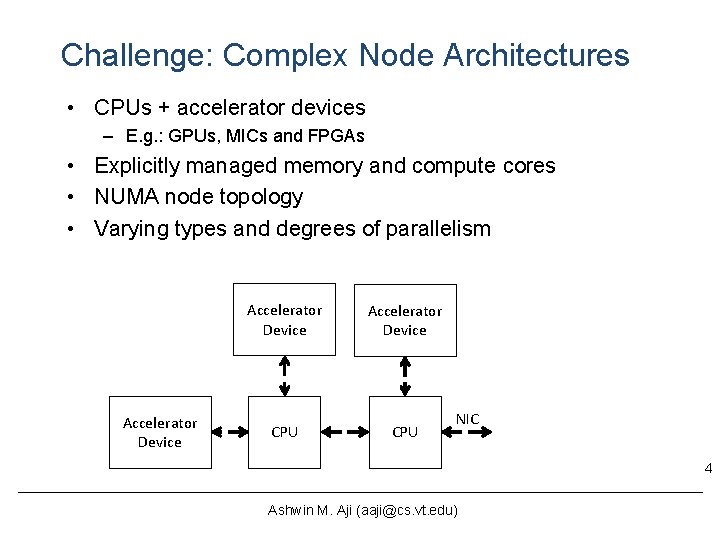

Challenge: Complex Node Architectures • CPUs + accelerator devices – E. g. : GPUs, MICs and FPGAs • Explicitly managed memory and compute cores • NUMA node topology • Varying types and degrees of parallelism Accelerator Device CPU NIC 4 Ashwin M. Aji (aaji@cs. vt. edu)

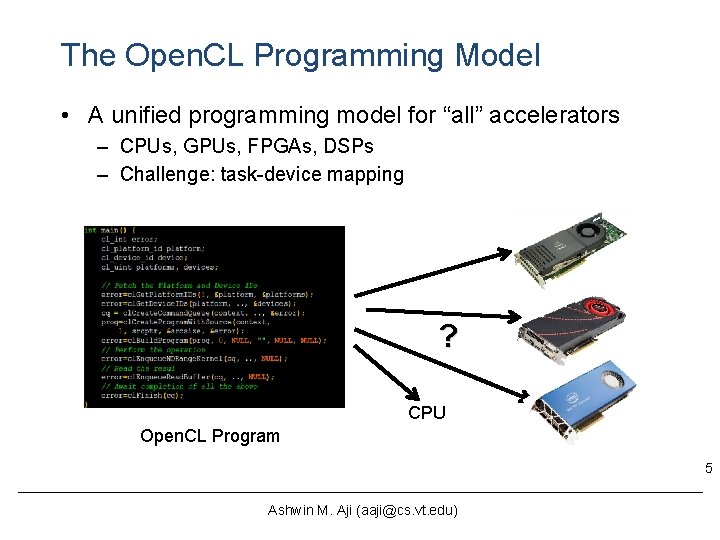

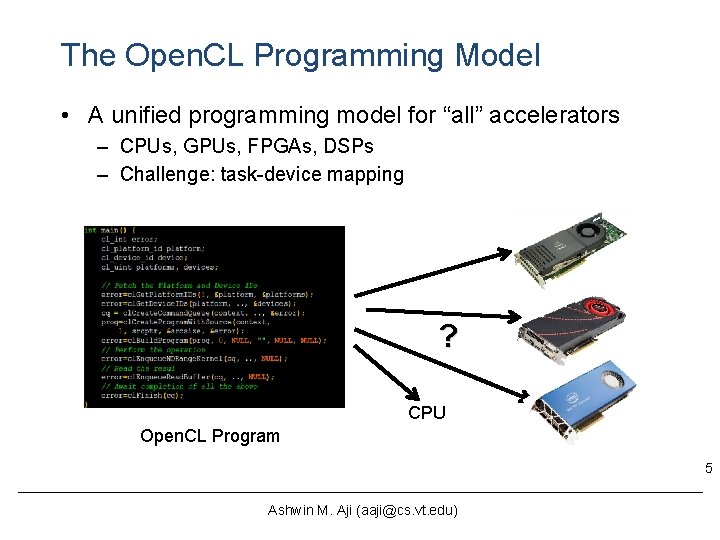

The Open. CL Programming Model • A unified programming model for “all” accelerators – CPUs, GPUs, FPGAs, DSPs – Challenge: task-device mapping ? CPU Open. CL Program 5 Ashwin M. Aji (aaji@cs. vt. edu)

Open. CL Class Diagram 6 Source(s): Open. CL 1. 2 Specification Ashwin M. Aji (aaji@cs. vt. edu)

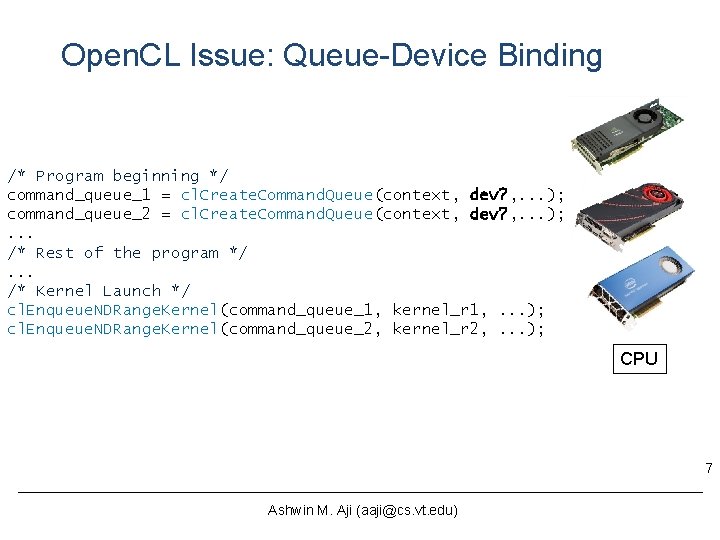

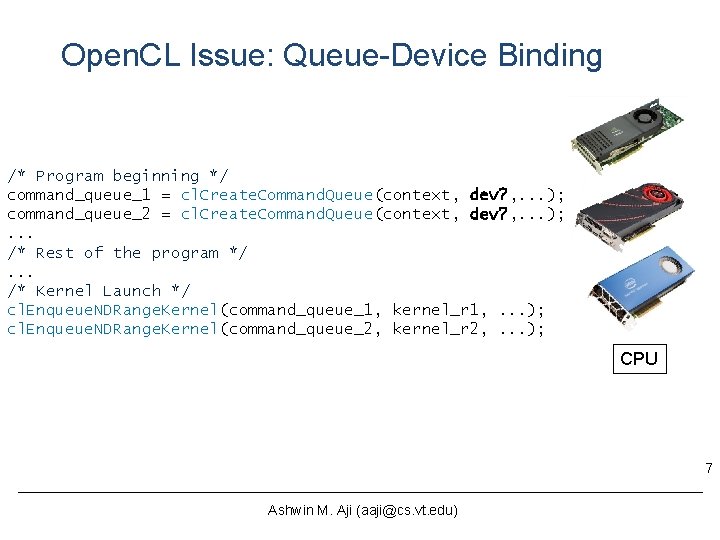

Open. CL Issue: Queue-Device Binding /* Program beginning */ command_queue_1 = cl. Create. Command. Queue(context, dev? , . . . ); command_queue_2 = cl. Create. Command. Queue(context, dev? , . . . ); . . . /* Rest of the program */. . . /* Kernel Launch */ cl. Enqueue. NDRange. Kernel(command_queue_1, kernel_r 1, . . . ); cl. Enqueue. NDRange. Kernel(command_queue_2, kernel_r 2, . . . ); CPU 7 Ashwin M. Aji (aaji@cs. vt. edu)

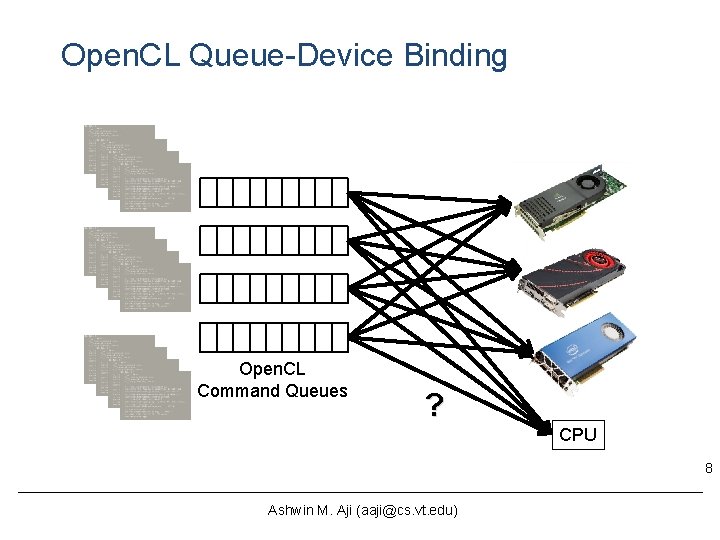

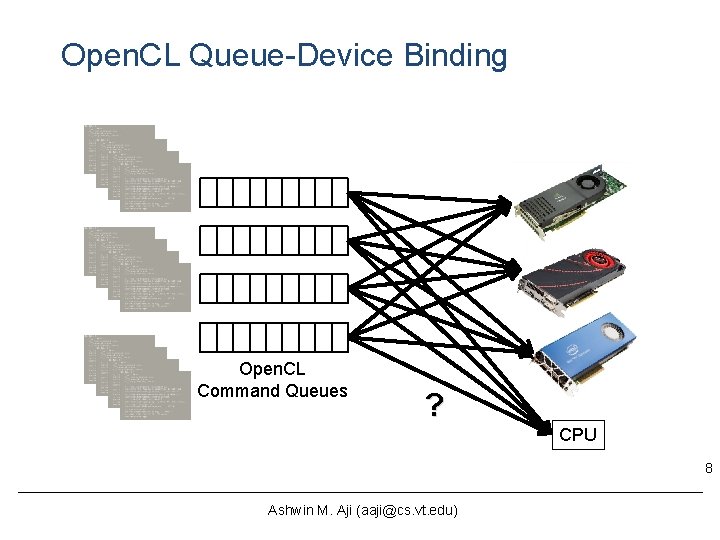

Open. CL Queue-Device Binding Open. CL Command Queues ? CPU 8 Ashwin M. Aji (aaji@cs. vt. edu)

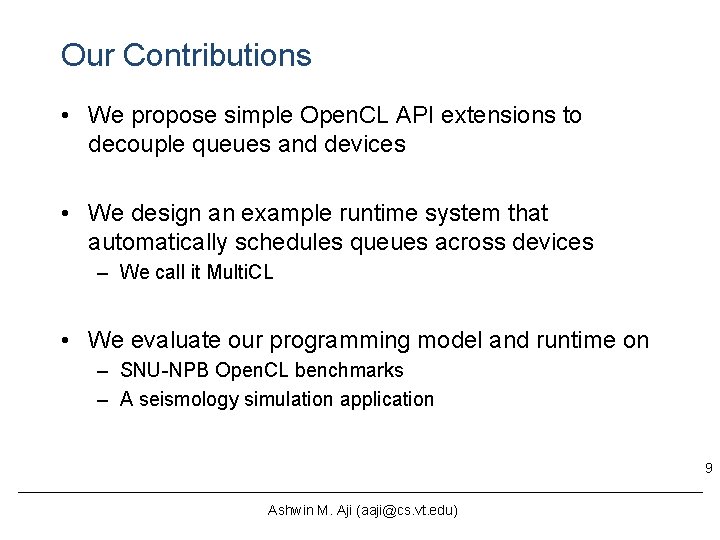

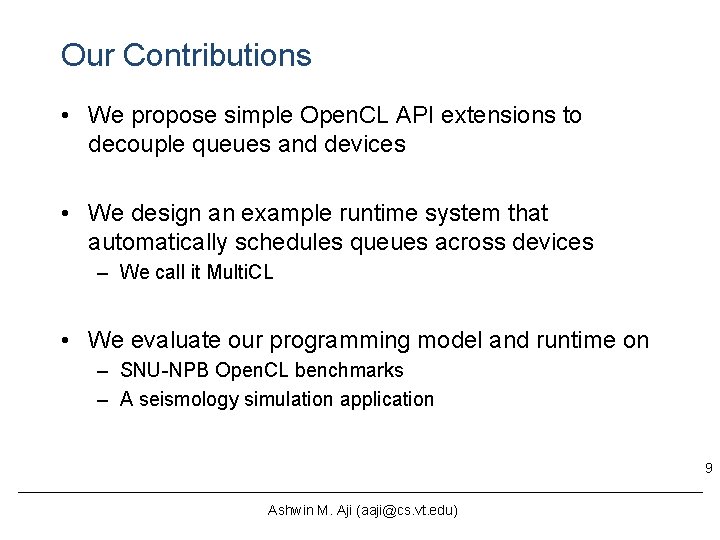

Our Contributions • We propose simple Open. CL API extensions to decouple queues and devices • We design an example runtime system that automatically schedules queues across devices – We call it Multi. CL • We evaluate our programming model and runtime on – SNU-NPB Open. CL benchmarks – A seismology simulation application 9 Ashwin M. Aji (aaji@cs. vt. edu)

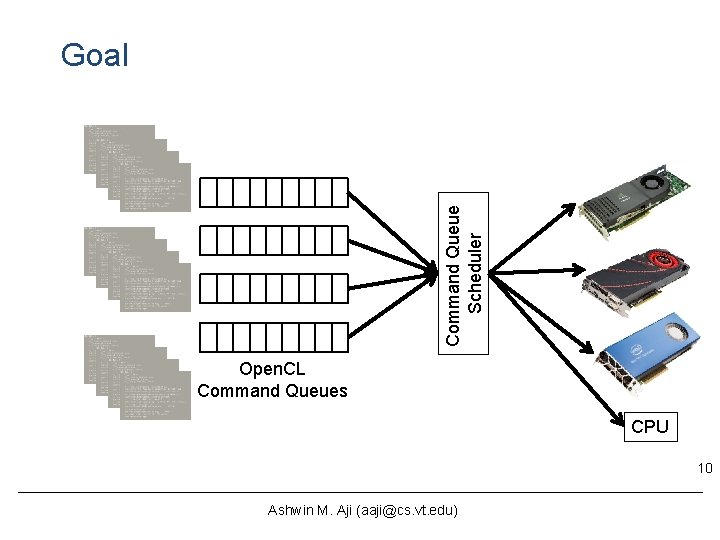

Command Queue Scheduler Goal Open. CL Command Queues CPU 10 Ashwin M. Aji (aaji@cs. vt. edu)

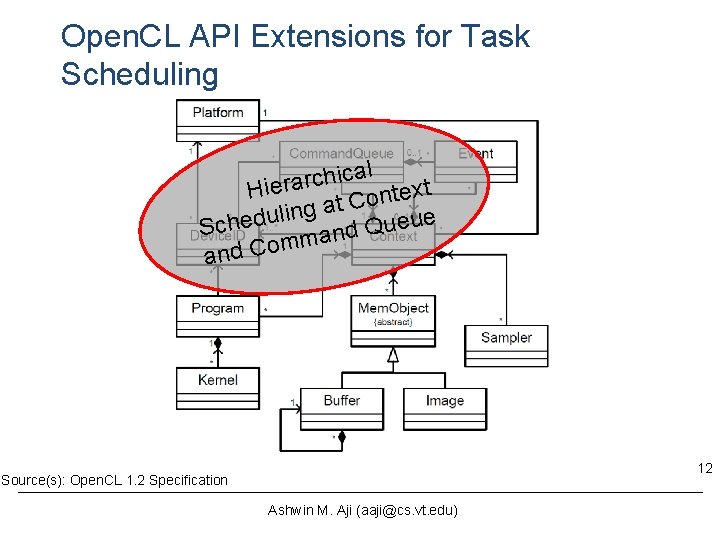

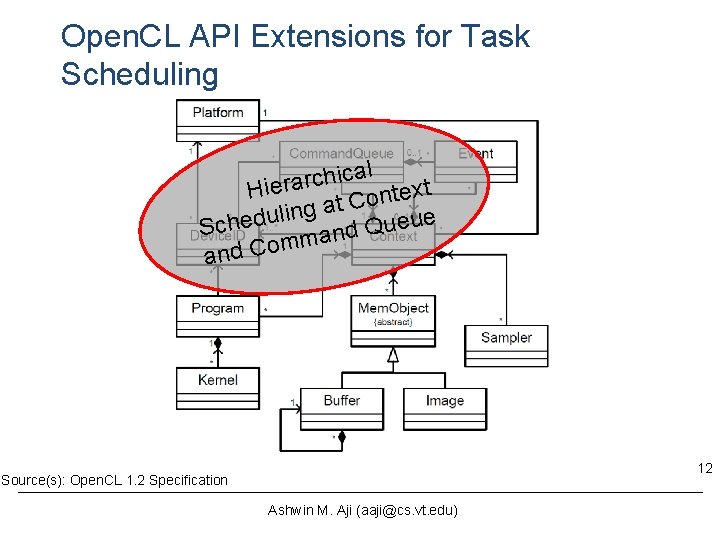

Open. CL API Extensions for Task Scheduling al c i h c r a Hier text n o C t a g n i l u d ue e u Q Sche d an m m o and C 12 Source(s): Open. CL 1. 2 Specification Ashwin M. Aji (aaji@cs. vt. edu)

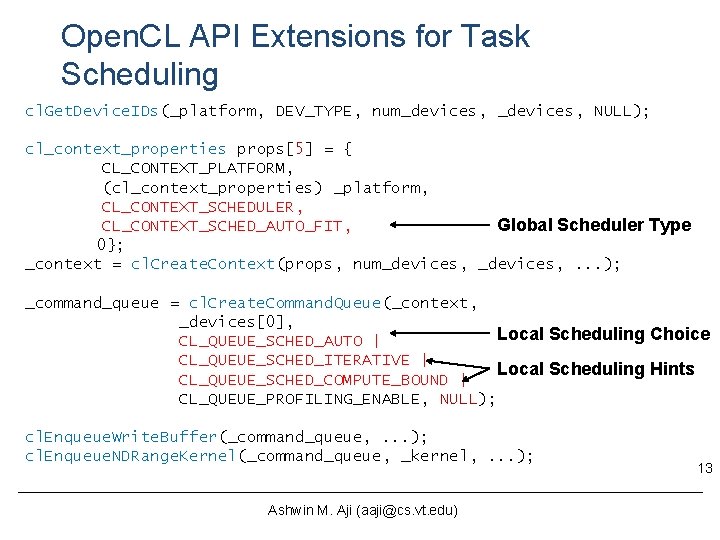

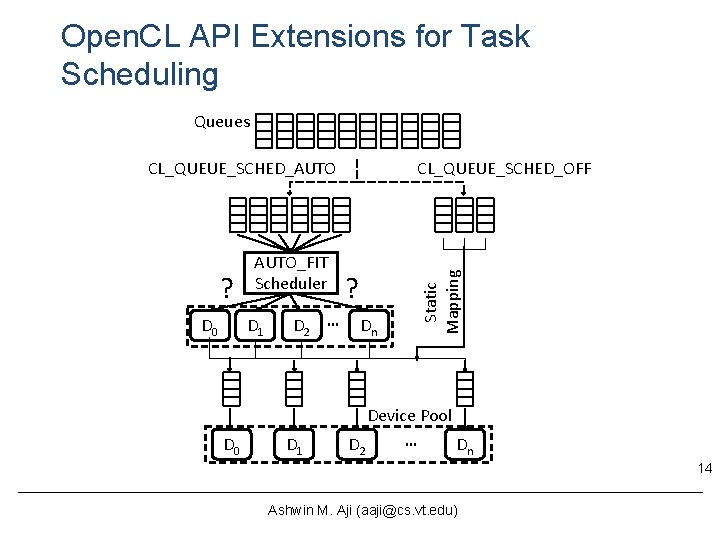

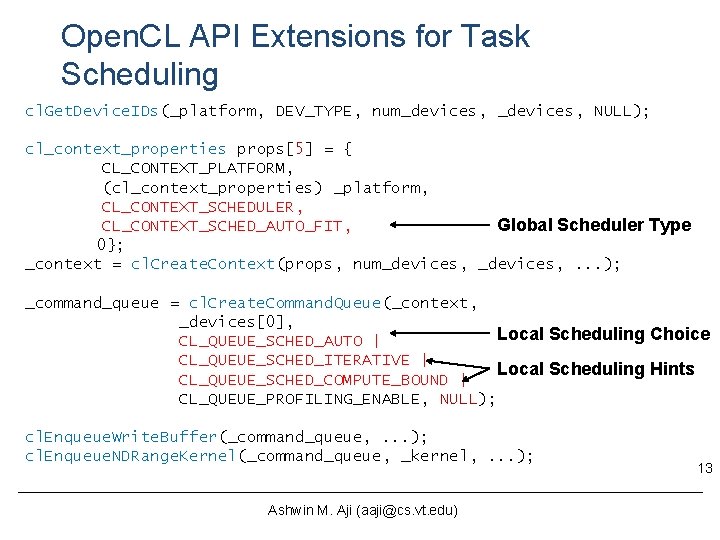

Open. CL API Extensions for Task Scheduling cl. Get. Device. IDs(_platform, DEV_TYPE, num_devices, NULL); cl_context_properties props[5] = { CL_CONTEXT_PLATFORM, (cl_context_properties) _platform, CL_CONTEXT_SCHEDULER, CL_CONTEXT_SCHED_AUTO_FIT, Global Scheduler Type 0}; _context = cl. Create. Context(props, num_devices, . . . ); _command_queue = cl. Create. Command. Queue(_context, _devices[0], Local Scheduling Choice CL_QUEUE_SCHED_AUTO | CL_QUEUE_SCHED_ITERATIVE | Local Scheduling Hints CL_QUEUE_SCHED_COMPUTE_BOUND | CL_QUEUE_PROFILING_ENABLE, NULL); cl. Enqueue. Write. Buffer(_command_queue, . . . ); cl. Enqueue. NDRange. Kernel(_command_queue, _kernel, . . . ); Ashwin M. Aji (aaji@cs. vt. edu) 13

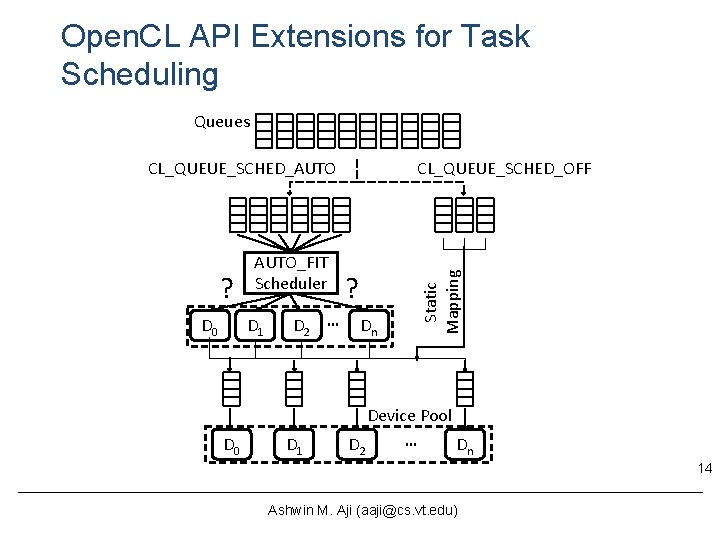

Open. CL API Extensions for Task Scheduling Queues ? D 0 AUTO_FIT Scheduler D 1 D 0 D 2 … D 1 CL_QUEUE_SCHED_OFF ? Dn Static Mapping CL_QUEUE_SCHED_AUTO Device Pool … D 2 Dn 14 Ashwin M. Aji (aaji@cs. vt. edu)

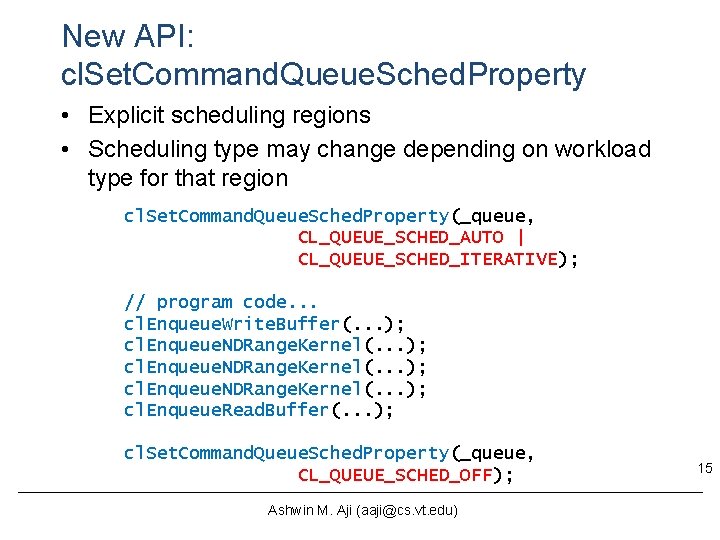

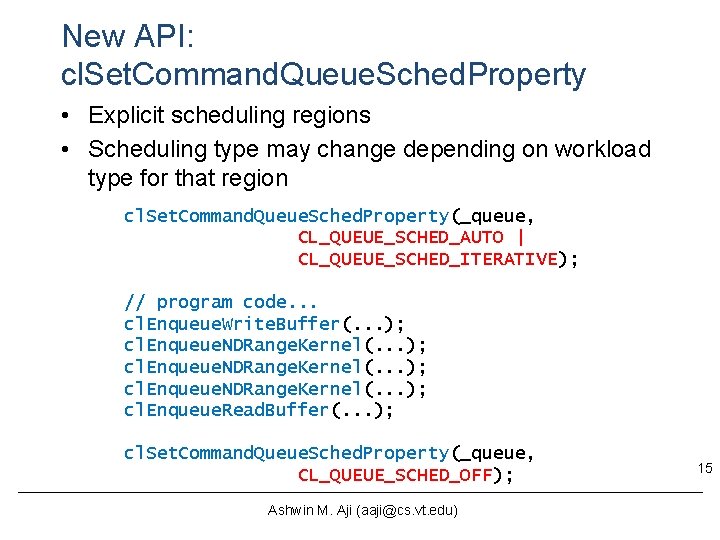

New API: cl. Set. Command. Queue. Sched. Property • Explicit scheduling regions • Scheduling type may change depending on workload type for that region cl. Set. Command. Queue. Sched. Property(_queue, CL_QUEUE_SCHED_AUTO | CL_QUEUE_SCHED_ITERATIVE); // program code. . . cl. Enqueue. Write. Buffer(. . . ); cl. Enqueue. NDRange. Kernel(. . . ); cl. Enqueue. Read. Buffer(. . . ); cl. Set. Command. Queue. Sched. Property(_queue, CL_QUEUE_SCHED_OFF); Ashwin M. Aji (aaji@cs. vt. edu) 15

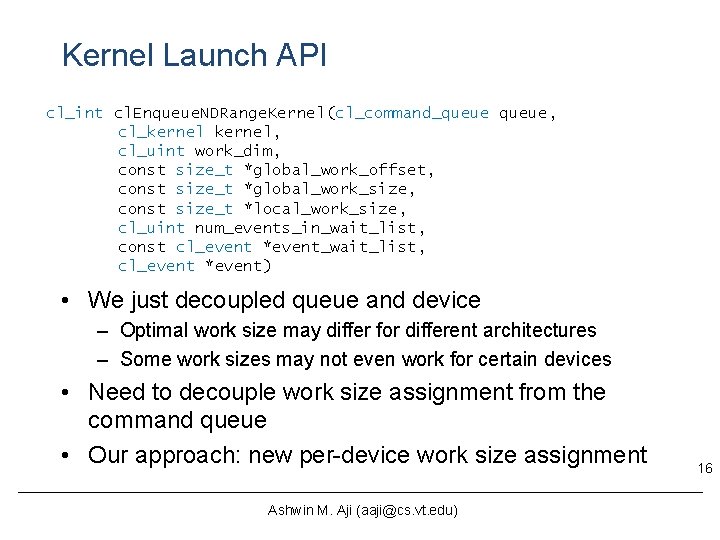

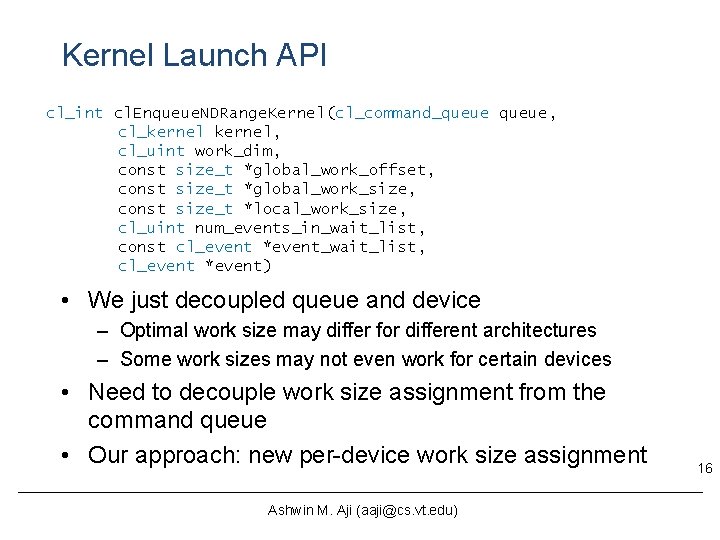

Kernel Launch API cl_int cl. Enqueue. NDRange. Kernel(cl_command_queue, cl_kernel, cl_uint work_dim, const size_t *global_work_offset, const size_t *global_work_size, const size_t *local_work_size, cl_uint num_events_in_wait_list, const cl_event *event_wait_list, cl_event *event) • We just decoupled queue and device – Optimal work size may differ for different architectures – Some work sizes may not even work for certain devices • Need to decouple work size assignment from the command queue • Our approach: new per-device work size assignment Ashwin M. Aji (aaji@cs. vt. edu) 16

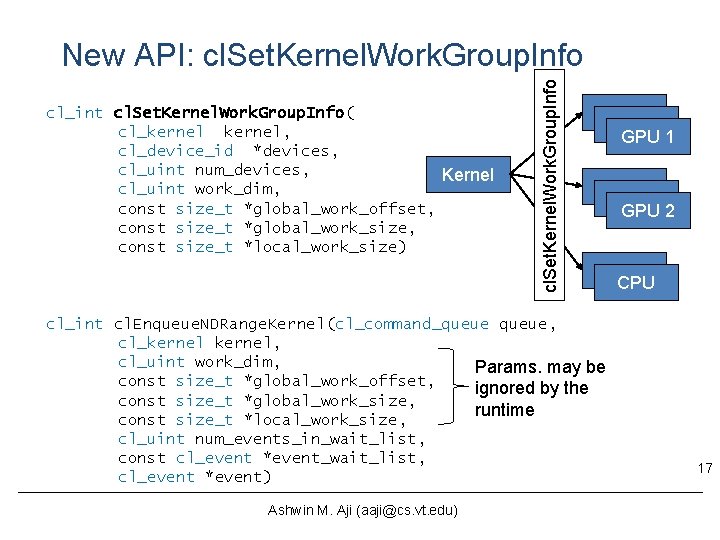

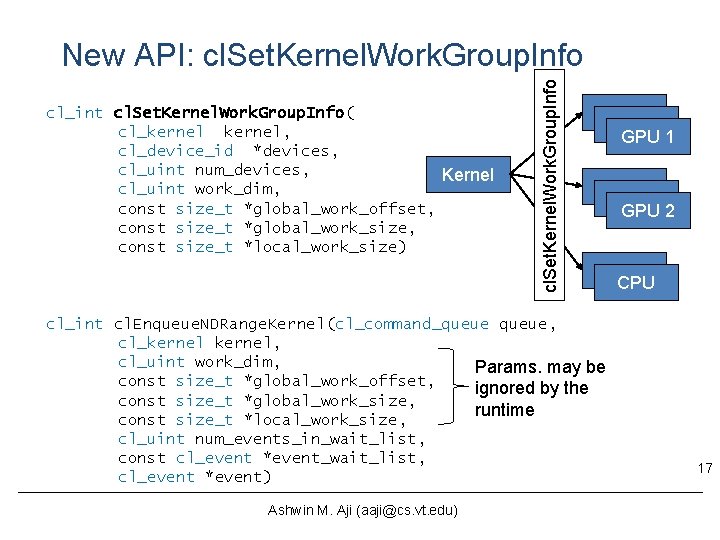

cl_int cl. Set. Kernel. Work. Group. Info( cl_kernel, cl_device_id *devices, cl_uint num_devices, Kernel cl_uint work_dim, const size_t *global_work_offset, const size_t *global_work_size, const size_t *local_work_size) cl. Set. Kernel. Work. Group. Info New API: cl. Set. Kernel. Work. Group. Info GPU 1 GPU 1 GPU 2 GPU 1 CPU cl_int cl. Enqueue. NDRange. Kernel(cl_command_queue, cl_kernel, cl_uint work_dim, Params. may be const size_t *global_work_offset, ignored by the const size_t *global_work_size, runtime const size_t *local_work_size, cl_uint num_events_in_wait_list, const cl_event *event_wait_list, cl_event *event) Ashwin M. Aji (aaji@cs. vt. edu) 17

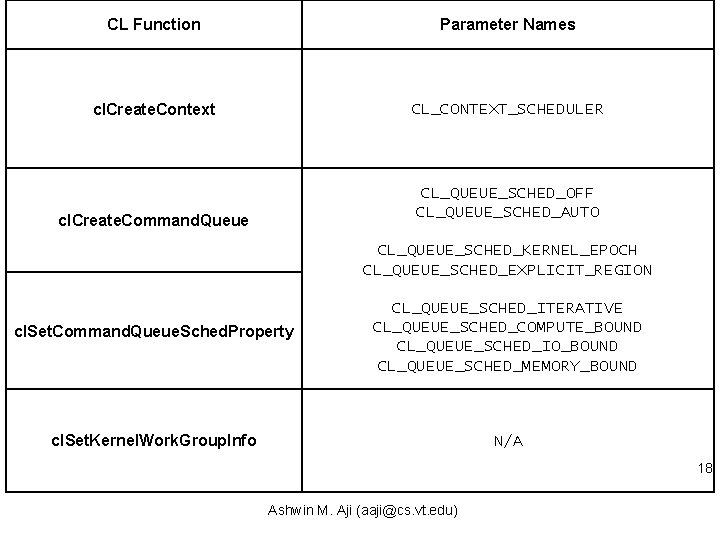

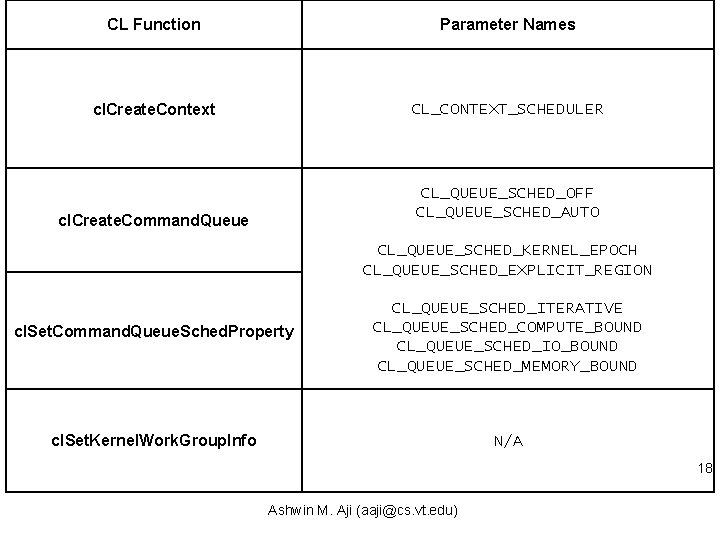

CL Function Parameter Names cl. Create. Context CL_CONTEXT_SCHEDULER CL_QUEUE_SCHED_OFF CL_QUEUE_SCHED_AUTO cl. Create. Command. Queue CL_QUEUE_SCHED_KERNEL_EPOCH CL_QUEUE_SCHED_EXPLICIT_REGION cl. Set. Command. Queue. Sched. Property CL_QUEUE_SCHED_ITERATIVE CL_QUEUE_SCHED_COMPUTE_BOUND CL_QUEUE_SCHED_IO_BOUND CL_QUEUE_SCHED_MEMORY_BOUND cl. Set. Kernel. Work. Group. Info N/A 18 Ashwin M. Aji (aaji@cs. vt. edu)

![Related Work for Task Scheduling Open MPlike Core TSAR IPDPS 12 KaleemPACT 14 Omp Related Work for Task Scheduling Open. MP-like: Core. TSAR [IPDPS 12], Kaleem[PACT 14], Omp.](https://slidetodoc.com/presentation_image/2f2d68d4d12023514338093b37d6fdb1/image-18.jpg)

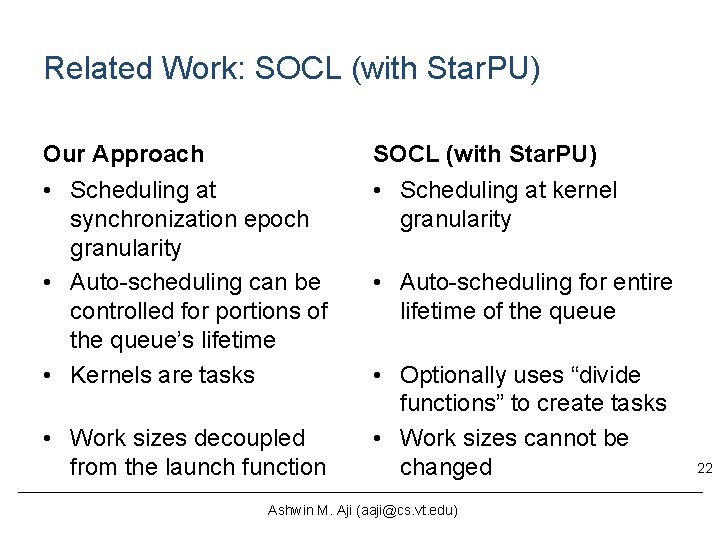

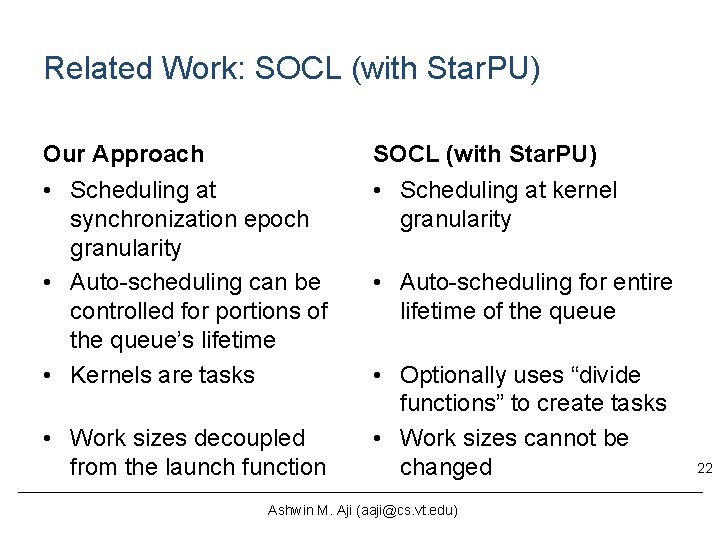

Related Work for Task Scheduling Open. MP-like: Core. TSAR [IPDPS 12], Kaleem[PACT 14], Omp. Ss [IPDPS 12] Intra-Application Scheduling Data/Loop Level Parallelism (Fine Grained Scheduling) Task/Kernel Level Parallelism (Coarse Grained Scheduling) Inter-Application Scheduling Custom API: Qilin [MICRO 09] Open. CL-based: Fluidi. CL [CGO 14], Kim [PPo. PP 11], de la Lama [ISPA 12], Maestro [Euro. Par 10] Multi. CL (This Talk), Star. PU [JCC 11] (SOCL[Euro. Par 14]) Wen[Hi. PC 14], Ravi [HPDC 13, FGCS 13], VOCL [In. Par 12, CCGrid 12], r. CUDA [Hi. PC 12] 21 Ashwin M. Aji (aaji@cs. vt. edu)

Related Work: SOCL (with Star. PU) Our Approach SOCL (with Star. PU) • Scheduling at synchronization epoch granularity • Auto-scheduling can be controlled for portions of the queue’s lifetime • Kernels are tasks • Scheduling at kernel granularity • Work sizes decoupled from the launch function • Auto-scheduling for entire lifetime of the queue • Optionally uses “divide functions” to create tasks • Work sizes cannot be changed Ashwin M. Aji (aaji@cs. vt. edu) 22

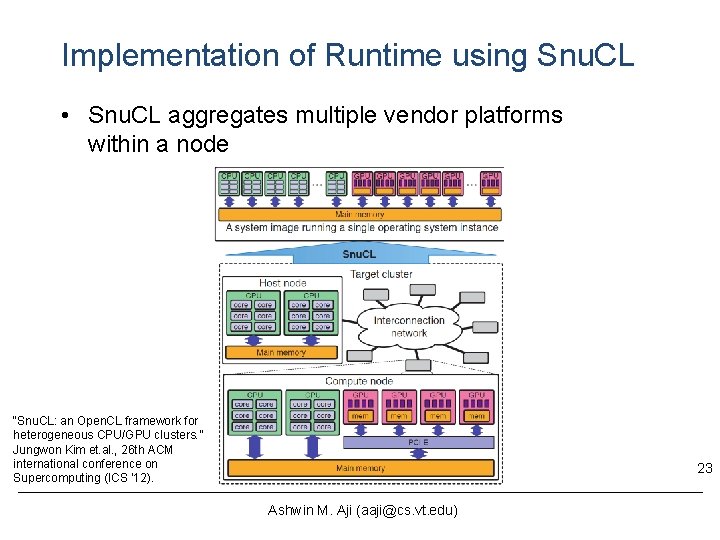

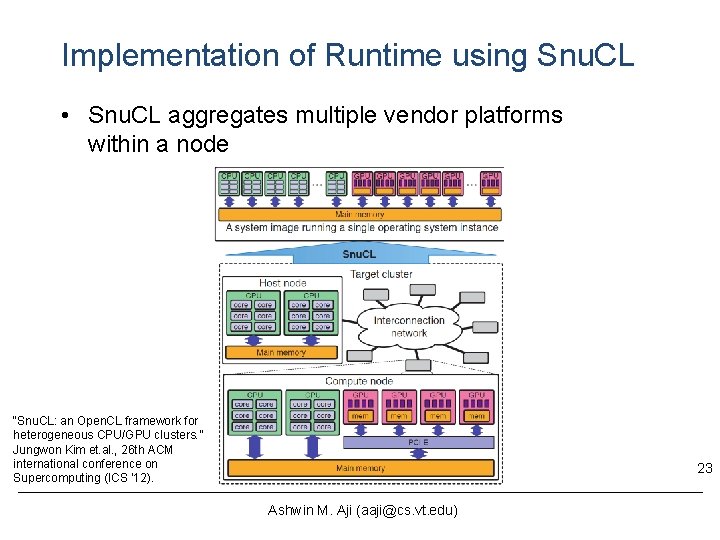

Implementation of Runtime using Snu. CL • Snu. CL aggregates multiple vendor platforms within a node “Snu. CL: an Open. CL framework for heterogeneous CPU/GPU clusters. ” Jungwon Kim et. al. , 26 th ACM international conference on Supercomputing (ICS '12). 23 Ashwin M. Aji (aaji@cs. vt. edu)

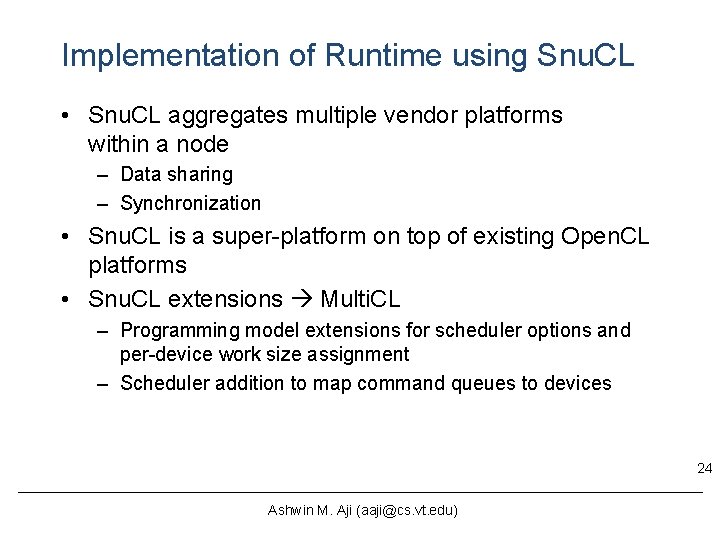

Implementation of Runtime using Snu. CL • Snu. CL aggregates multiple vendor platforms within a node – Data sharing – Synchronization • Snu. CL is a super-platform on top of existing Open. CL platforms • Snu. CL extensions Multi. CL – Programming model extensions for scheduler options and per-device work size assignment – Scheduler addition to map command queues to devices 24 Ashwin M. Aji (aaji@cs. vt. edu)

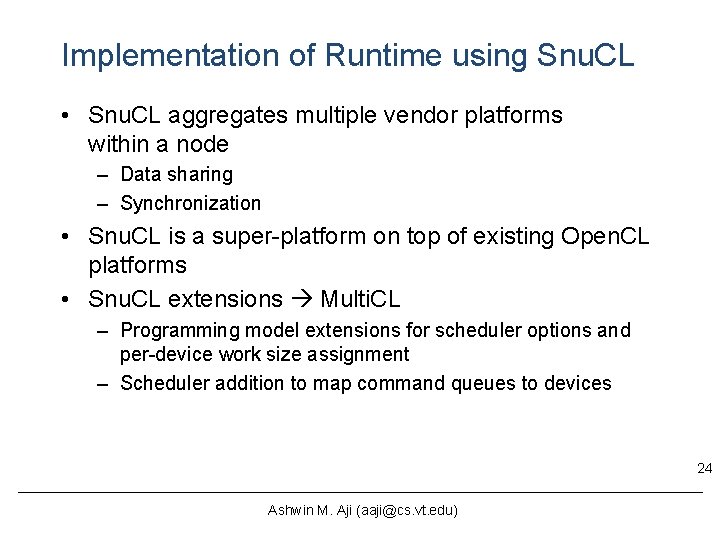

Runtime Modules in Multi. CL Synchronization Epoch • Device profiler • Command queue/kernel profiler • Queue-Device mapper cl. Get. Platform. Ids Device profiling (static) cl. Create. Program. With. Source cl. Build. Program Kernel profiling (static) (cl. Enqueue*)* Kernel profiling (dynamic) cl. Wait. For. Events/cl. Finish Device mapping(dynamic) 25 End of Program

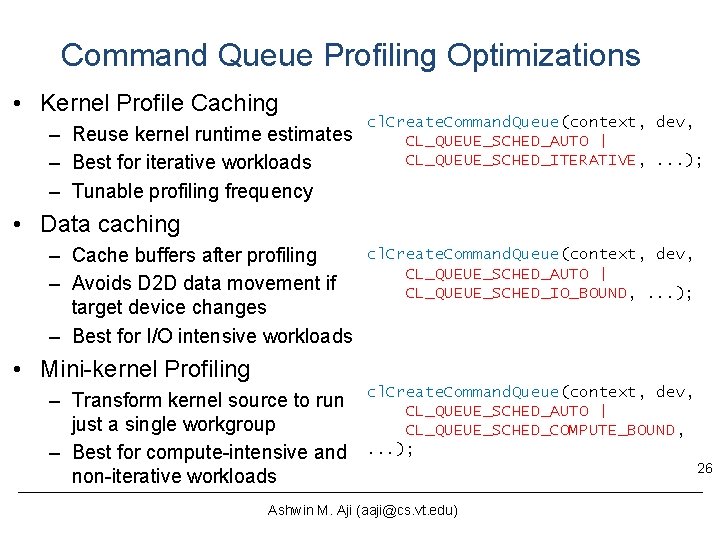

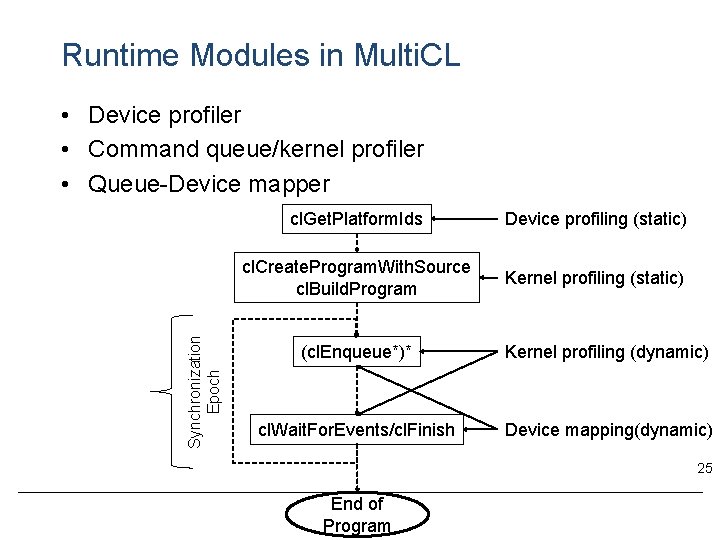

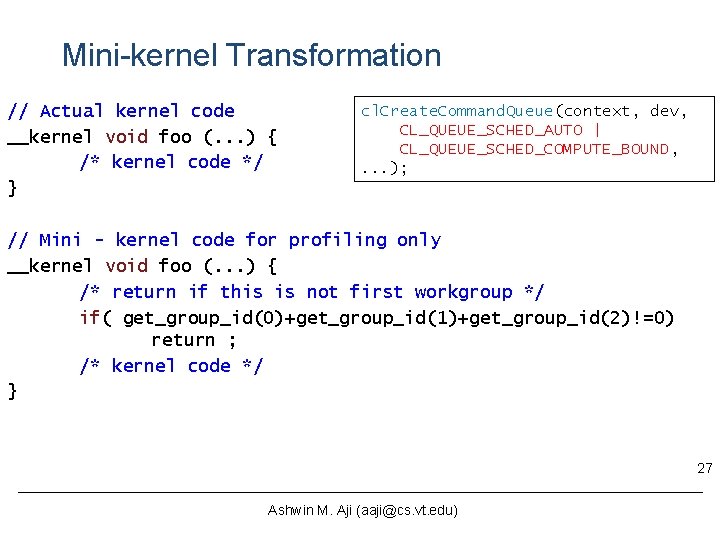

Command Queue Profiling Optimizations • Kernel Profile Caching – Reuse kernel runtime estimates – Best for iterative workloads – Tunable profiling frequency cl. Create. Command. Queue(context, dev, CL_QUEUE_SCHED_AUTO | CL_QUEUE_SCHED_ITERATIVE, . . . ); • Data caching – Cache buffers after profiling – Avoids D 2 D data movement if target device changes – Best for I/O intensive workloads cl. Create. Command. Queue(context, dev, CL_QUEUE_SCHED_AUTO | CL_QUEUE_SCHED_IO_BOUND, . . . ); • Mini-kernel Profiling – Transform kernel source to run just a single workgroup – Best for compute-intensive and non-iterative workloads cl. Create. Command. Queue(context, dev, CL_QUEUE_SCHED_AUTO | CL_QUEUE_SCHED_COMPUTE_BOUND, . . . ); Ashwin M. Aji (aaji@cs. vt. edu) 26

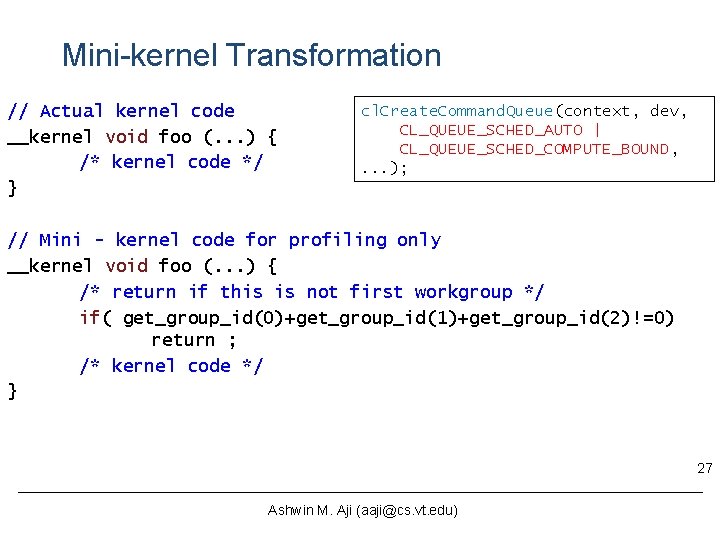

Mini-kernel Transformation // Actual kernel code __kernel void foo (. . . ) { /* kernel code */ } cl. Create. Command. Queue(context, dev, CL_QUEUE_SCHED_AUTO | CL_QUEUE_SCHED_COMPUTE_BOUND, . . . ); // Mini - kernel code for profiling only __kernel void foo (. . . ) { /* return if this is not first workgroup */ if( get_group_id(0)+get_group_id(1)+get_group_id(2)!=0) return ; /* kernel code */ } 27 Ashwin M. Aji (aaji@cs. vt. edu)

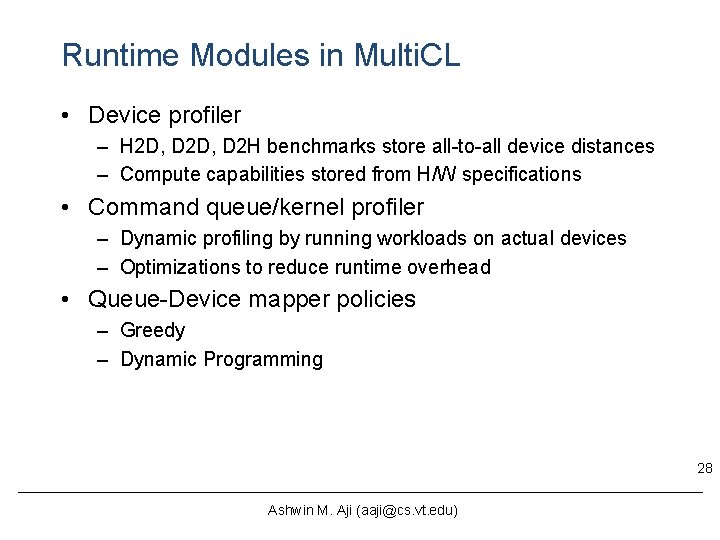

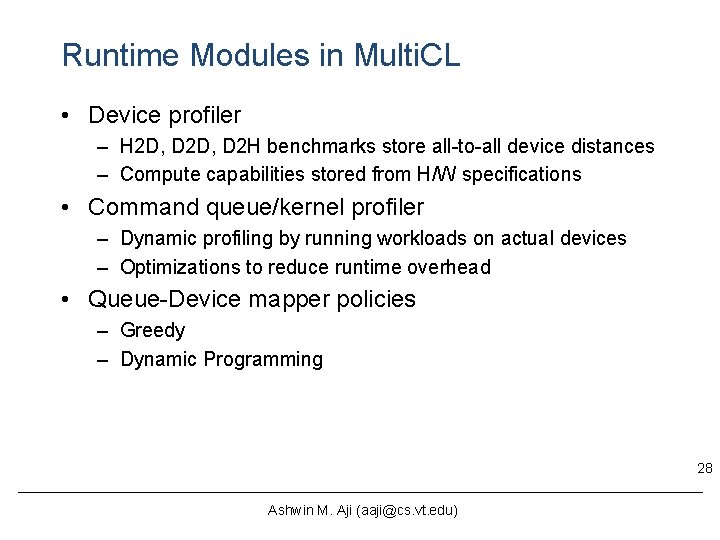

Runtime Modules in Multi. CL • Device profiler – H 2 D, D 2 H benchmarks store all-to-all device distances – Compute capabilities stored from H/W specifications • Command queue/kernel profiler – Dynamic profiling by running workloads on actual devices – Optimizations to reduce runtime overhead • Queue-Device mapper policies – Greedy – Dynamic Programming 28 Ashwin M. Aji (aaji@cs. vt. edu)

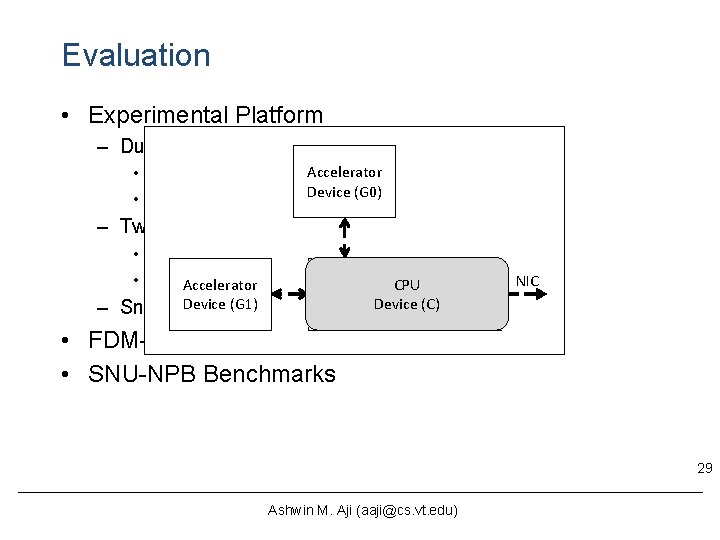

Evaluation • Experimental Platform – Dual-socket Oct-core AMD Opteron 6134 CPU • AMD Open. CL v 1. 2 Accelerator • AMD APP SDK 2. 9 Device (G 0) – Two NVIDIA Tesla C 2050 GPUs • NVIDIA Open. CL v 1. 1 • CUDA toolkit v 5. 0 and driver v 313 CPU Accelerator CPU NIC Device (C)to 3 devices (G 1) – Snu. CL Device aggregates above platforms • FDM-Seismology Simulation • SNU-NPB Benchmarks 29 Ashwin M. Aji (aaji@cs. vt. edu)

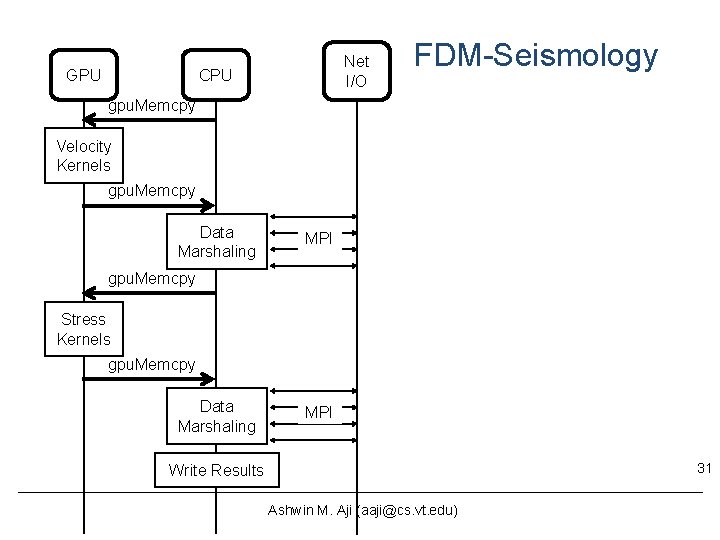

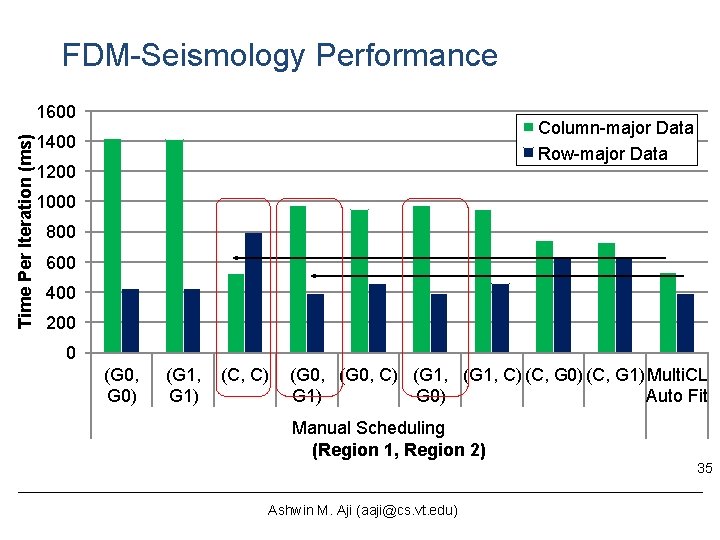

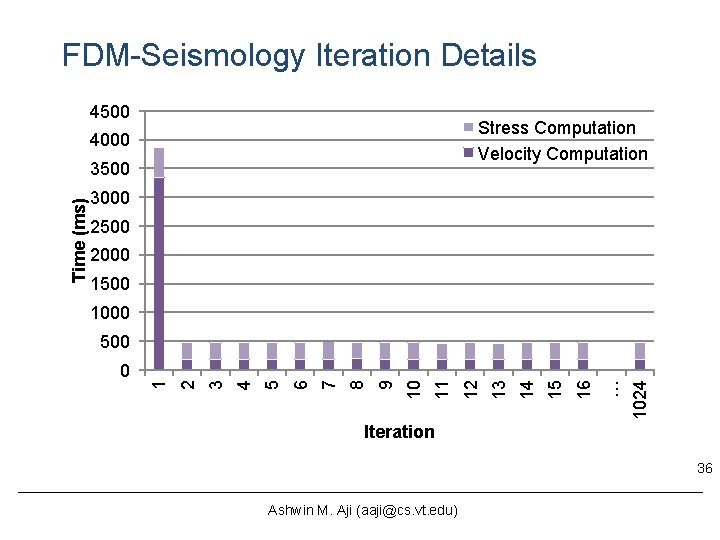

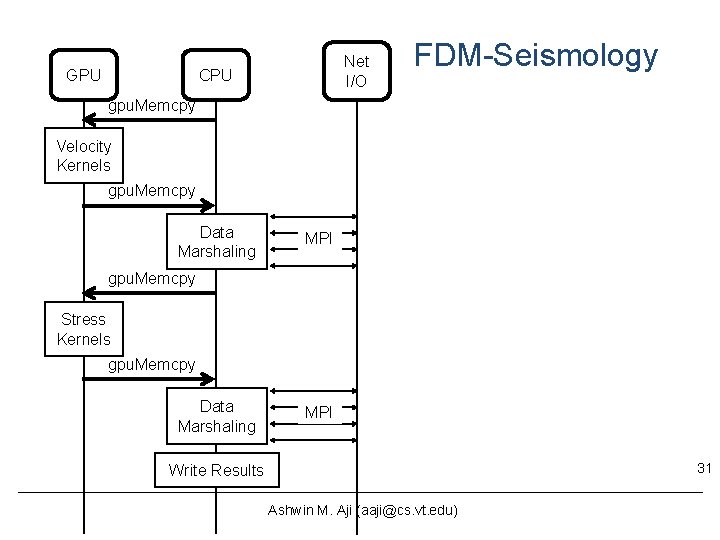

FDM-Seismology • Models the propagation of seismological waves using the finite difference method • Iterative Open. CL kernels to compute velocity and stress – 7 kernels for velocity – 25 kernels for stress 30 Ashwin M. Aji (aaji@cs. vt. edu)

GPU Net I/O CPU FDM-Seismology gpu. Memcpy Velocity Kernels gpu. Memcpy Data Marshaling MPI gpu. Memcpy Stress Kernels gpu. Memcpy Data Marshaling MPI 31 Write Results Ashwin M. Aji (aaji@cs. vt. edu)

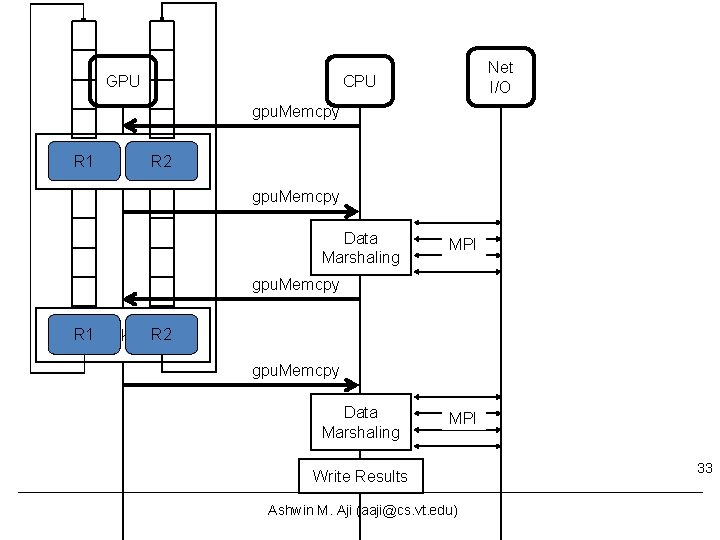

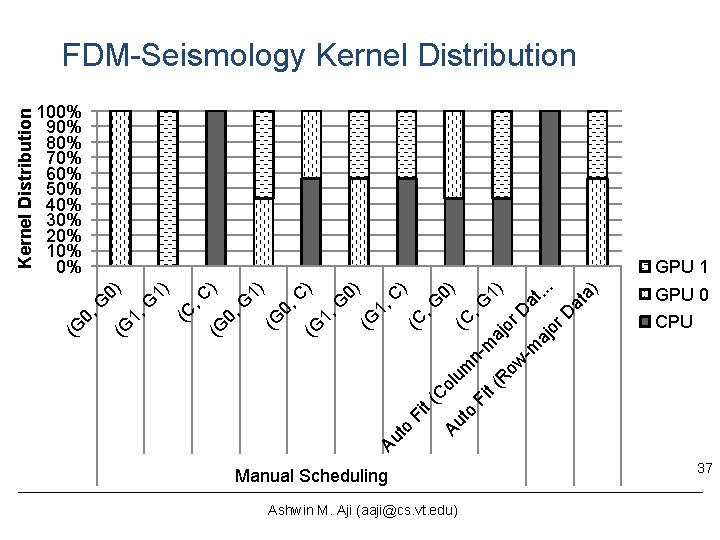

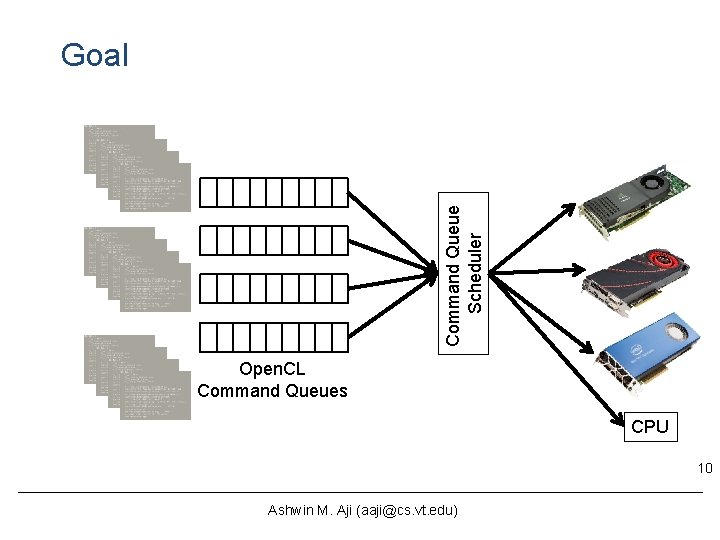

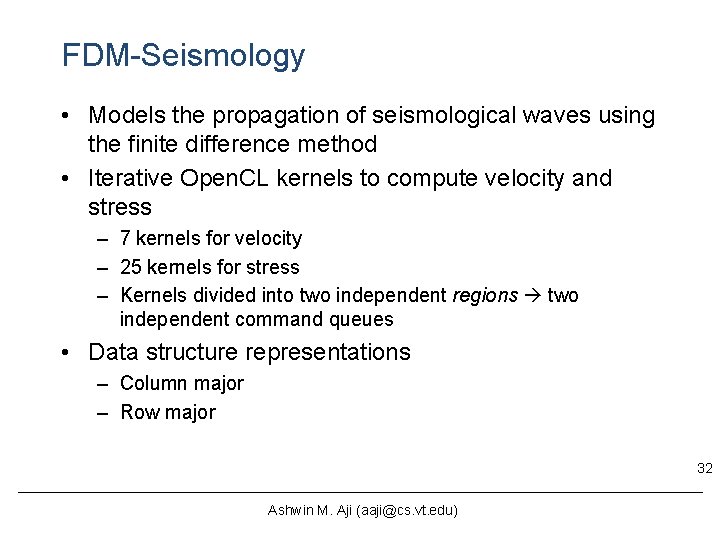

FDM-Seismology • Models the propagation of seismological waves using the finite difference method • Iterative Open. CL kernels to compute velocity and stress – 7 kernels for velocity – 25 kernels for stress – Kernels divided into two independent regions two independent command queues • Data structure representations – Column major – Row major 32 Ashwin M. Aji (aaji@cs. vt. edu)

GPU Net I/O CPU gpu. Memcpy R 1 R 2 Velocity Kernels gpu. Memcpy Data Marshaling MPI gpu. Memcpy R 1 R 2 Stress Kernels gpu. Memcpy Data Marshaling MPI Write Results Ashwin M. Aji (aaji@cs. vt. edu) 33

![Initialize the context clcontextproperties prop CLCONTEXTSCHEDULER CLCONTEXTSCHEDAUTOFIT 0 context // Initialize the context cl_context_properties prop[]={. . . , CL_CONTEXT_SCHEDULER, CL_CONTEXT_SCHED_AUTO_FIT, 0}; context =](https://slidetodoc.com/presentation_image/2f2d68d4d12023514338093b37d6fdb1/image-31.jpg)

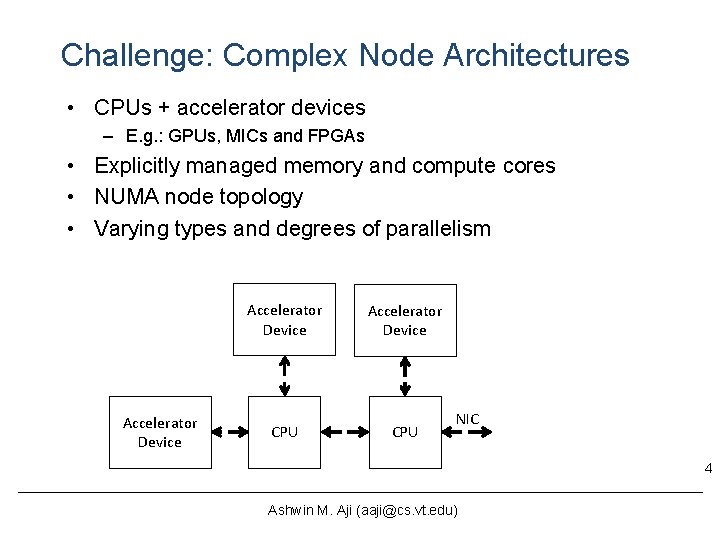

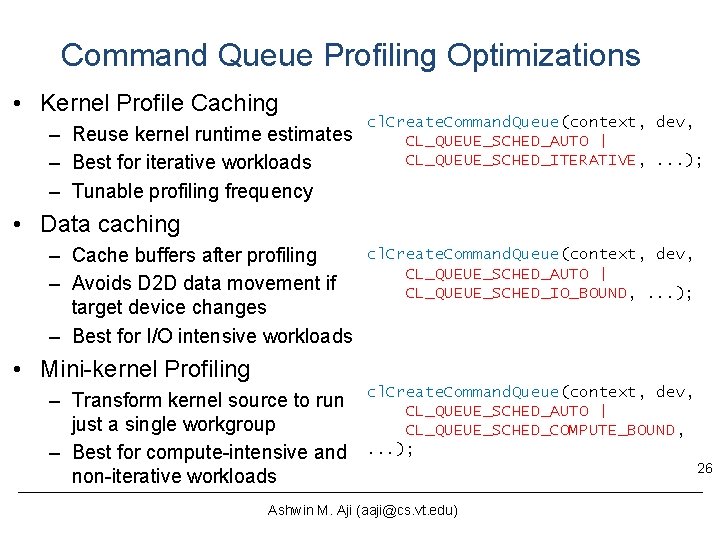

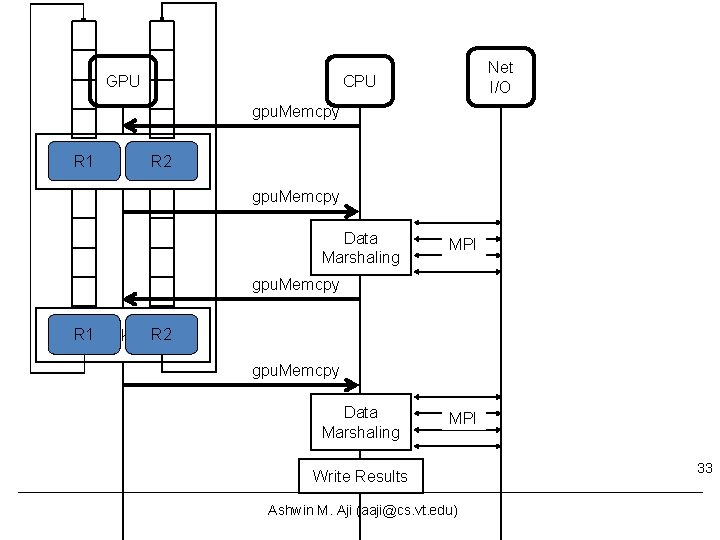

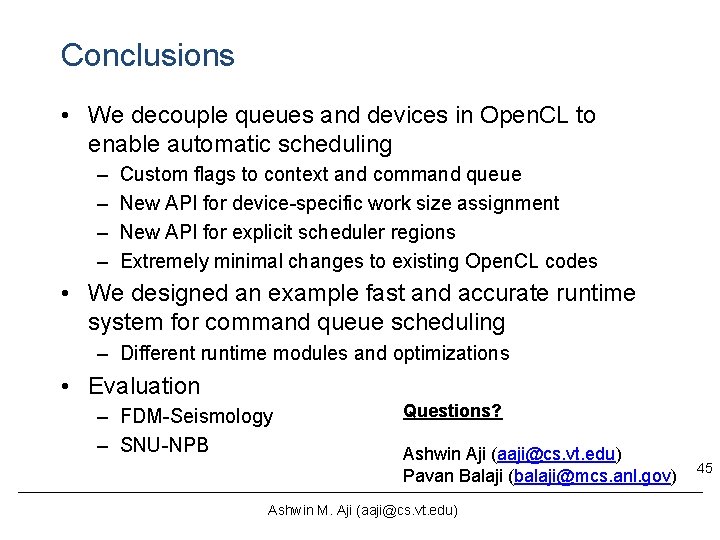

// Initialize the context cl_context_properties prop[]={. . . , CL_CONTEXT_SCHEDULER, CL_CONTEXT_SCHED_AUTO_FIT, 0}; context = cl. Create. Context(prop, . . . ); // Initialize all command queues cl_command_queue q 1, q 2; q 2 = cl. Create. Command. Queue(context, dev, cl_event; CL_QUEUE_AUTO | q 1 = cl. Create. Command. Queue(context, dev, CL_QUEUE_SCHED_ITERATIVE CL_QUEUE_AUTO |. . . ); CL_QUEUE_SCHED_ITERATIVE. . . ); // Main application loop for (. . . ) { // Velocity Computations compute_velocity_region_1(q 1); compute_velocity_region_2(q 2); sync(q 1); sync(q 2); copy_to_host(); marshal_velocity_data(); // MPI Communication MPI_Isend(buf, type, neighbor, . . . ); MPI_Irecv(buf, type, neighbor, . . . ); MPI_Waitall(. . . ); // Stress Computations compute_stress_region_1(q 1); compute_stress_region_2(q 2); sync(q 1); sync(q 2); copy_to_host(); marshal_stress_data(); // MPI Communication MPI_Isend(buf, type, neighbor, . . . ); MPI_Irecv(buf, type, neighbor, . . . ); MPI_Waitall(. . . ); } Ashwin M. Aji (aaji@cs. vt. edu) 34

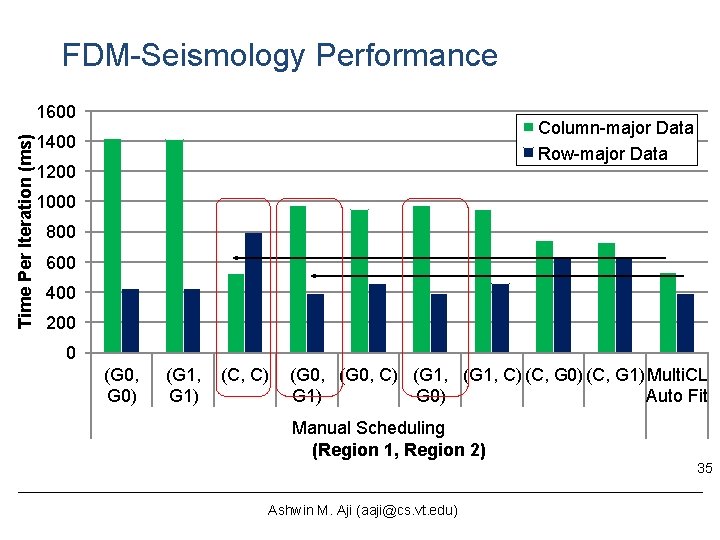

FDM-Seismology Performance Time Per Iteration (ms) 1600 Column-major Data Row-major Data 1400 1200 1000 800 600 400 200 0 (G 0, G 0) (G 1, G 1) (C, C) (G 0, C) (G 1, C) (C, G 0) (C, G 1)Multi. CL G 1) G 0) Auto Fit Manual Scheduling (Region 1, Region 2) 35 Ashwin M. Aji (aaji@cs. vt. edu)

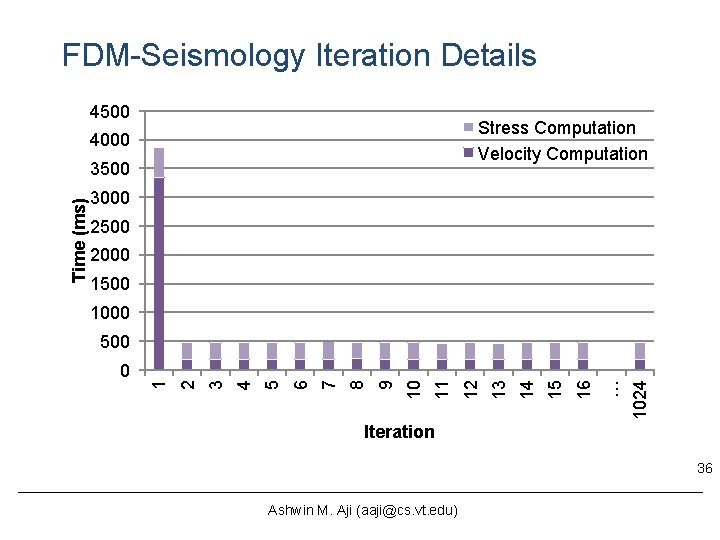

FDM-Seismology Iteration Details 4500 Stress Computation Velocity Computation 4000 3000 2500 2000 1500 1024 16 15 14 13 12 11 10 9 8 7 6 5 4 3 2 0 … 500 1 Time (ms) 3500 Iteration 36 Ashwin M. Aji (aaji@cs. vt. edu)

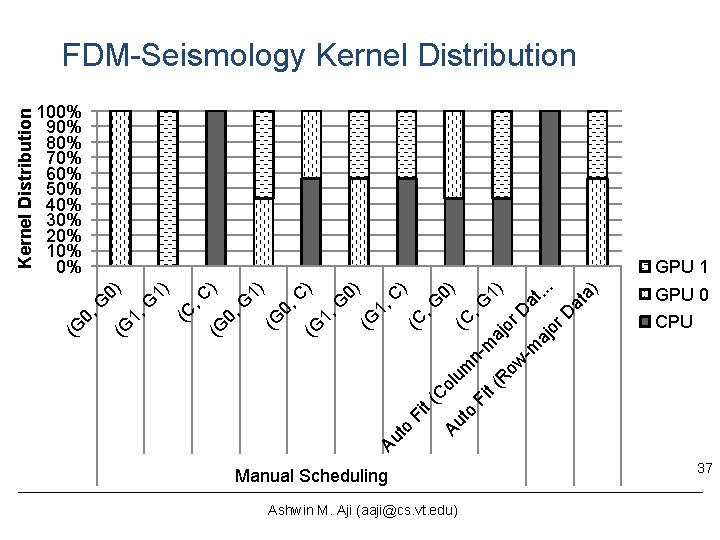

100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% 1) aj (R or ow D -m at. . . aj or D at a) GPU 0 CPU Fi t Au to Fi t (C ol um nm (C , G 0) (C , G C ) 1, (G G 0) C ) (G 1, (G 0, G 1) ) (G 0, , C (C G 1) (G 1, G 0) GPU 1 (G 0, Kernel Distribution FDM-Seismology Kernel Distribution Manual Scheduling Ashwin M. Aji (aaji@cs. vt. edu) 37

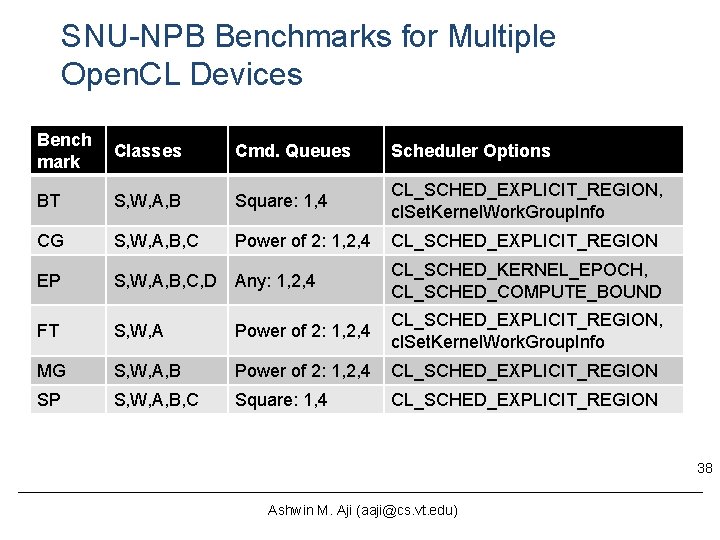

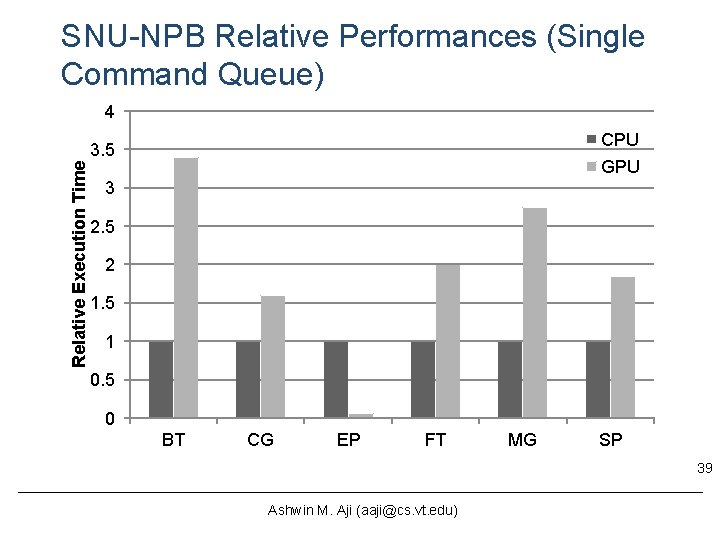

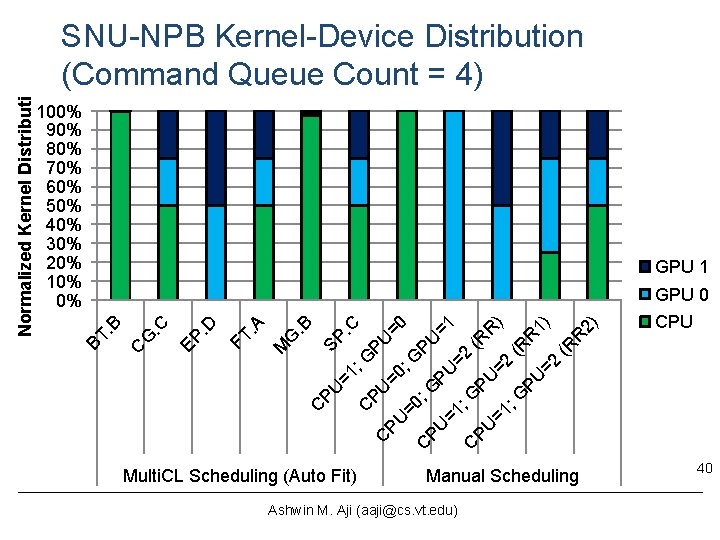

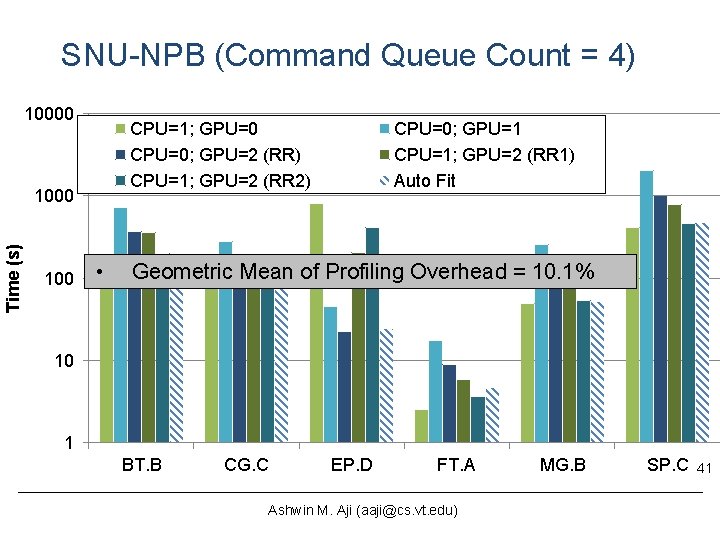

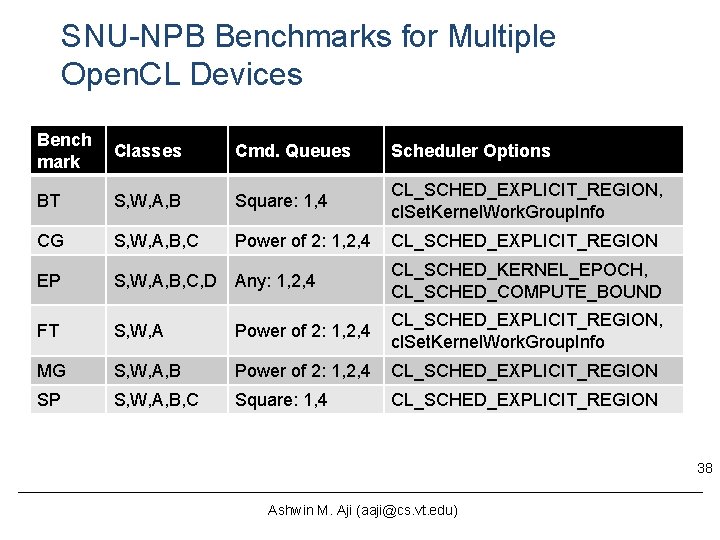

SNU-NPB Benchmarks for Multiple Open. CL Devices Bench mark Classes Cmd. Queues Scheduler Options BT S, W, A, B Square: 1, 4 CL_SCHED_EXPLICIT_REGION, cl. Set. Kernel. Work. Group. Info CG S, W, A, B, C Power of 2: 1, 2, 4 CL_SCHED_EXPLICIT_REGION EP S, W, A, B, C, D Any: 1, 2, 4 CL_SCHED_KERNEL_EPOCH, CL_SCHED_COMPUTE_BOUND FT S, W, A Power of 2: 1, 2, 4 CL_SCHED_EXPLICIT_REGION, cl. Set. Kernel. Work. Group. Info MG S, W, A, B Power of 2: 1, 2, 4 CL_SCHED_EXPLICIT_REGION SP S, W, A, B, C Square: 1, 4 CL_SCHED_EXPLICIT_REGION 38 Ashwin M. Aji (aaji@cs. vt. edu)

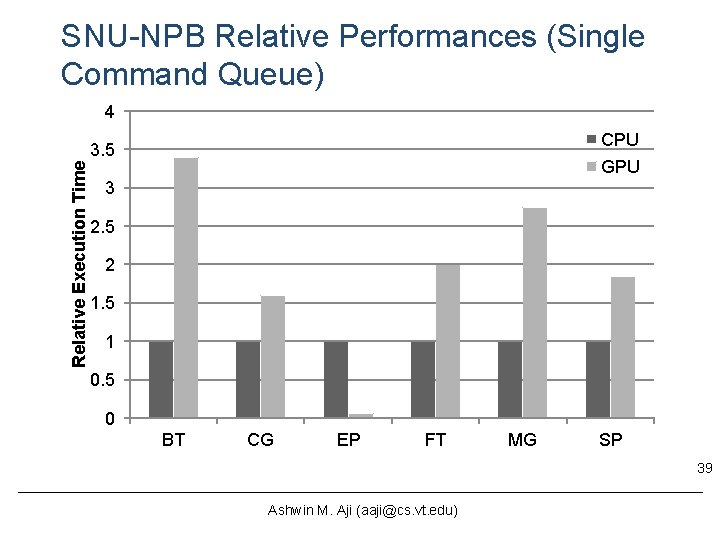

SNU-NPB Relative Performances (Single Command Queue) 4 CPU Relative Execution Time 3. 5 GPU 3 2. 5 2 1. 5 1 0. 5 0 BT CG EP FT MG SP 39 Ashwin M. Aji (aaji@cs. vt. edu)

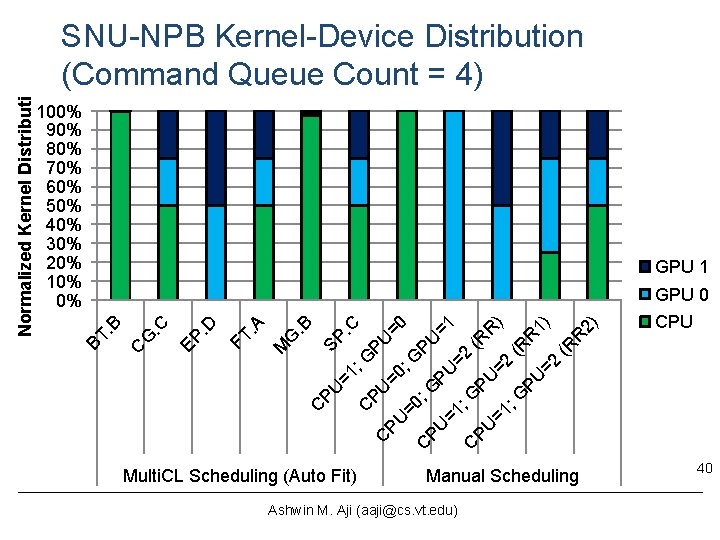

100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% GPU 1 =1 C ; C G P PU C U PU =0 =0 ; =0 G C PU ; G PU PU =1 =1 ; G =2 C PU PU (RR =1 =2 ) ; G ( PU RR =2 1) (R R 2) CPU C PU SP. G. B M FT. A D EP. G. C C . B GPU 0 BT Normalized Kernel Distribution SNU-NPB Kernel-Device Distribution (Command Queue Count = 4) Multi. CL Scheduling (Auto Fit) Manual Scheduling Ashwin M. Aji (aaji@cs. vt. edu) 40

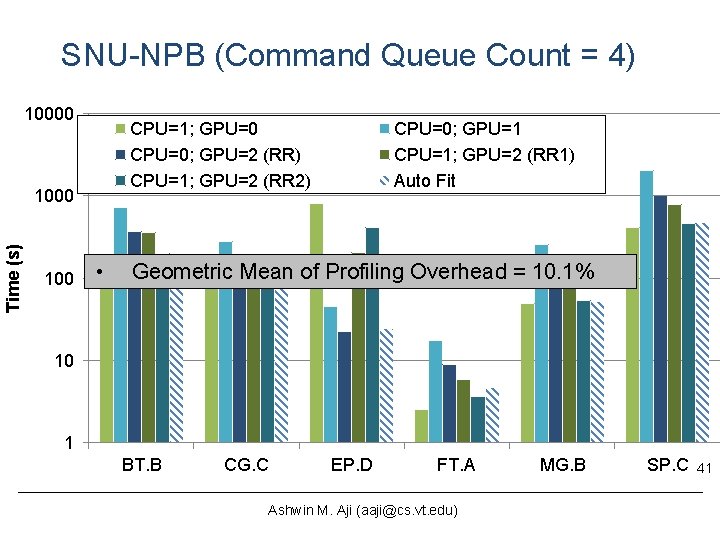

SNU-NPB (Command Queue Count = 4) 10000 CPU=1; GPU=0 CPU=0; GPU=2 (RR) CPU=1; GPU=2 (RR 2) Time (s) 1000 100 • CPU=0; GPU=1 CPU=1; GPU=2 (RR 1) Auto Fit Geometric Mean of Profiling Overhead = 10. 1% 10 1 BT. B CG. C EP. D FT. A Ashwin M. Aji (aaji@cs. vt. edu) MG. B SP. C 41

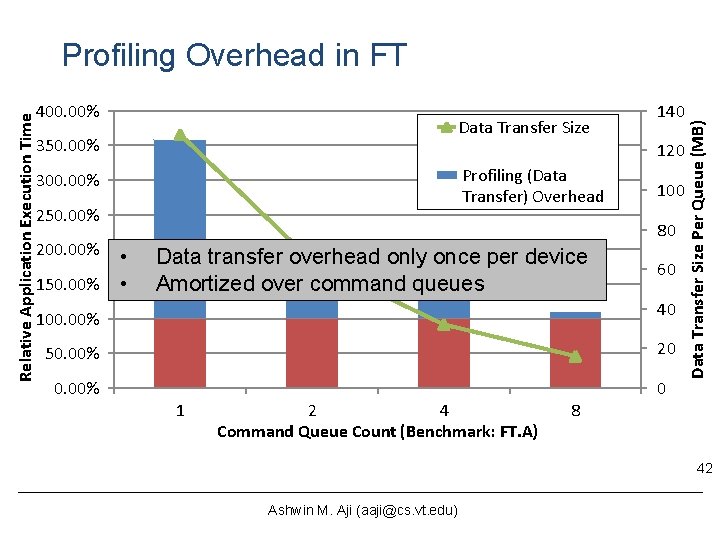

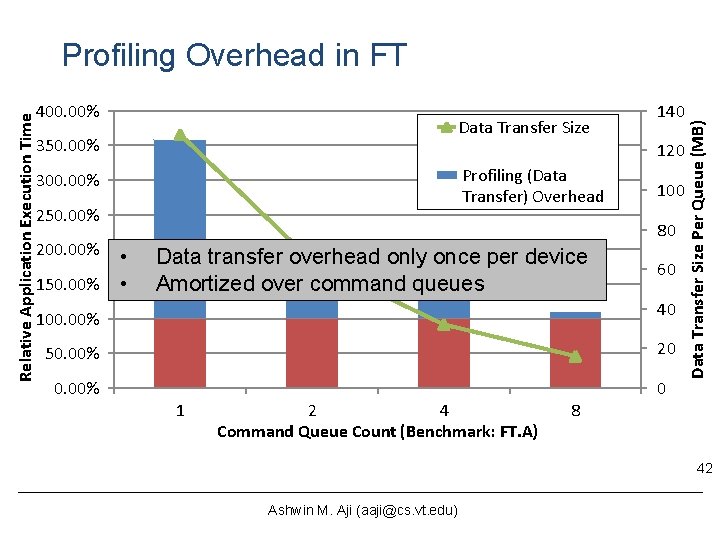

400. 00% Data Transfer Size 350. 00% 120 Profiling (Data Transfer) Overhead 300. 00% 250. 00% 200. 00% 150. 00% 140 100 80 • • Data transfer overhead only once per device Amortized over command queues 60 40 100. 00% 50. 00% 20 0. 00% 0 1 2 4 Command Queue Count (Benchmark: FT. A) 8 Data Transfer Size Per Queue (MB) Relative Application Execution Time Profiling Overhead in FT 42 Ashwin M. Aji (aaji@cs. vt. edu)

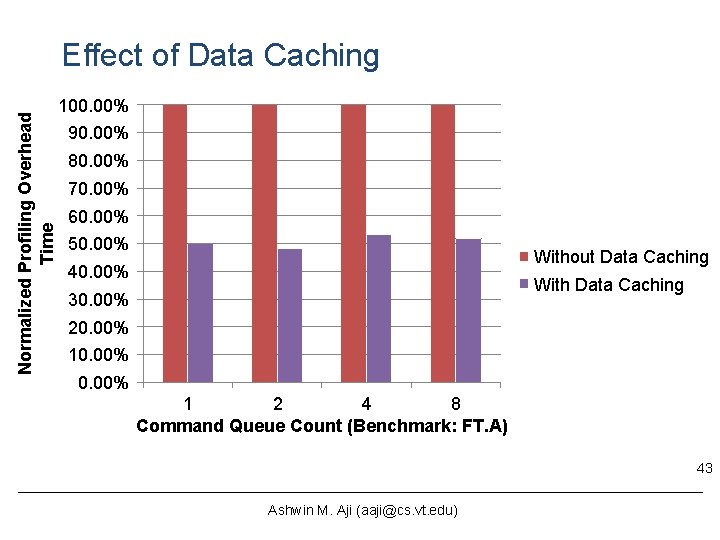

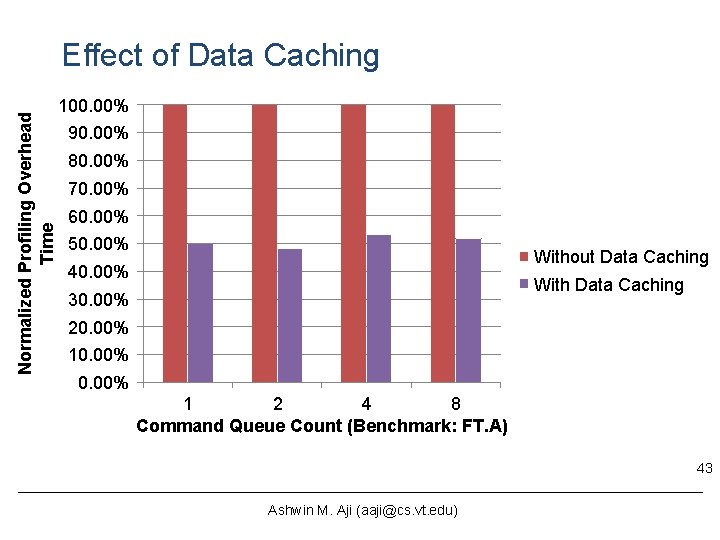

Normalized Profiling Overhead Time Effect of Data Caching 100. 00% 90. 00% 80. 00% 70. 00% 60. 00% 50. 00% Without Data Caching 40. 00% With Data Caching 30. 00% 20. 00% 10. 00% 1 2 4 8 Command Queue Count (Benchmark: FT. A) 43 Ashwin M. Aji (aaji@cs. vt. edu)

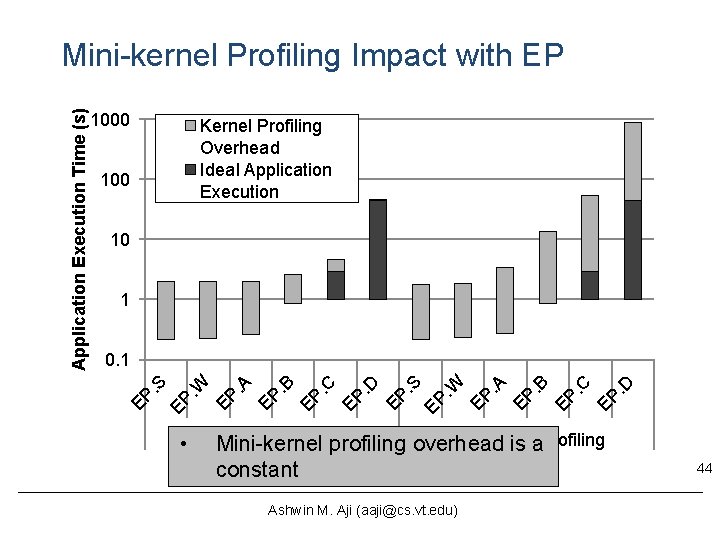

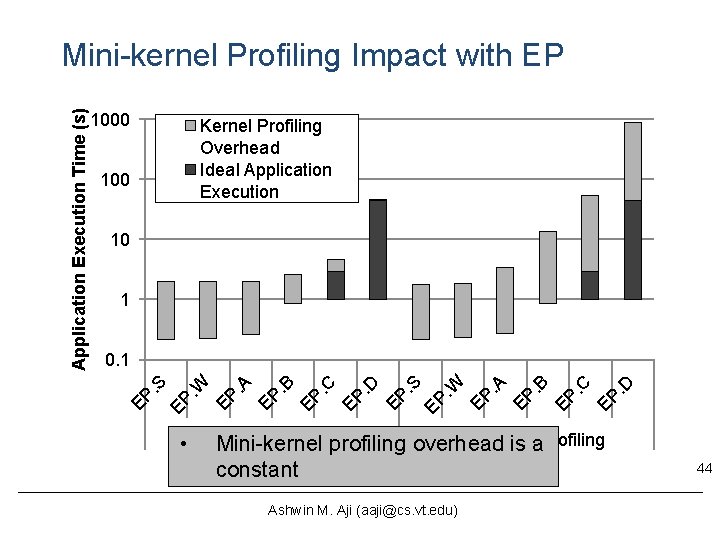

1000 Kernel Profiling Overhead Ideal Application Execution 100 10 1 • Minikernel Profiling Mini-kernel profiling D EP. C EP. . B EP . A EP . W EP . S EP D EP. C EP. . B EP . A EP . W EP . S 0. 1 EP Application Execution Time (s) Mini-kernel Profiling Impact with EP Full Kernel overhead is a. Profiling constant Ashwin M. Aji (aaji@cs. vt. edu) 44

Conclusions • We decouple queues and devices in Open. CL to enable automatic scheduling – – Custom flags to context and command queue New API for device-specific work size assignment New API for explicit scheduler regions Extremely minimal changes to existing Open. CL codes • We designed an example fast and accurate runtime system for command queue scheduling – Different runtime modules and optimizations • Evaluation – FDM-Seismology – SNU-NPB Questions? Ashwin Aji (aaji@cs. vt. edu) Pavan Balaji (balaji@mcs. anl. gov) Ashwin M. Aji (aaji@cs. vt. edu) 45