Automatic Classification of Speech Recognition Hypotheses Using Acoustic and Pragmatic Features

- Slides: 35

Automatic Classification of Speech Recognition Hypotheses Using Acoustic and Pragmatic Features • Malte Gabsdil, Universität des Saarlandes • Dissertation Defense, Saarbrücken – November ? ? th 2004

The Problem (theoretical) • Grounding: establishing common ground between dialogue participants – “Did H correctly understand what S said?” • Combination of bottom-up (“signal”) and top-down (“expectation”) information • Clark (1996): Action ladders – upward completion – downward evidence

The Problem (practical) • Assessment of recognition quality for spoken dialogue systems • Information sources – speech/recognition output (“acoustic”) – dialogue/task context (“pragmatic”) • Crucial for usability and user satisfaction – avoid misunderstandings – promote dialogue flow and efficiency

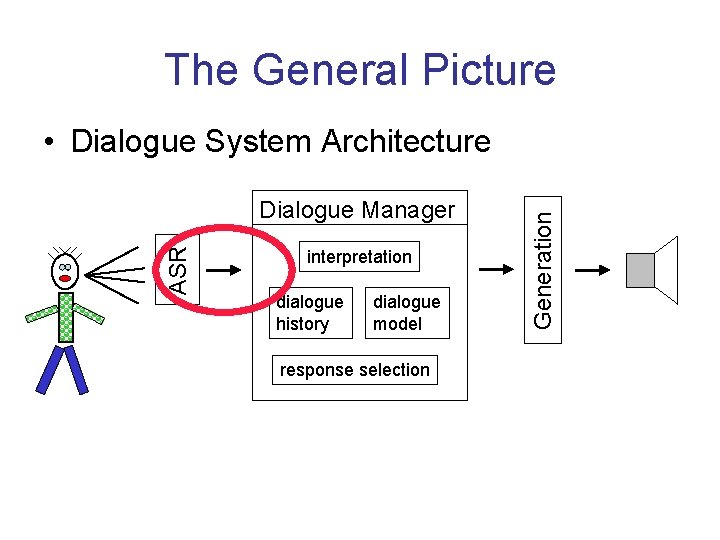

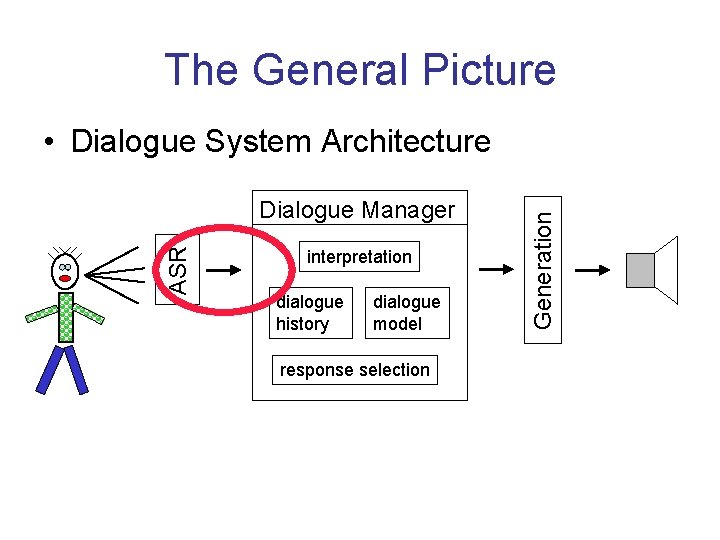

The General Picture • Dialogue System Architecture: ASR → Dialogue Manager (interpretation, dialogue history, dialogue model, response selection) → Generation

A Closer Look • How to assess recognition quality? – decision problem • Standard approach: ASR best hypothesis + confidence → confidence rejection thresholds • Machine learning approach: ASR n-best hypotheses (acoustic features) + Dialogue Manager: interpretation, dialogue history, dialogue model, response selection (pragmatic features) → classifier

Overview • Machine learning classifiers • Acoustic and pragmatic features • Experiment 1: Chess – exemplary domain • Experiment 2: WITAS – complex spoken dialogue system • Conclusions & Topics for Future Work

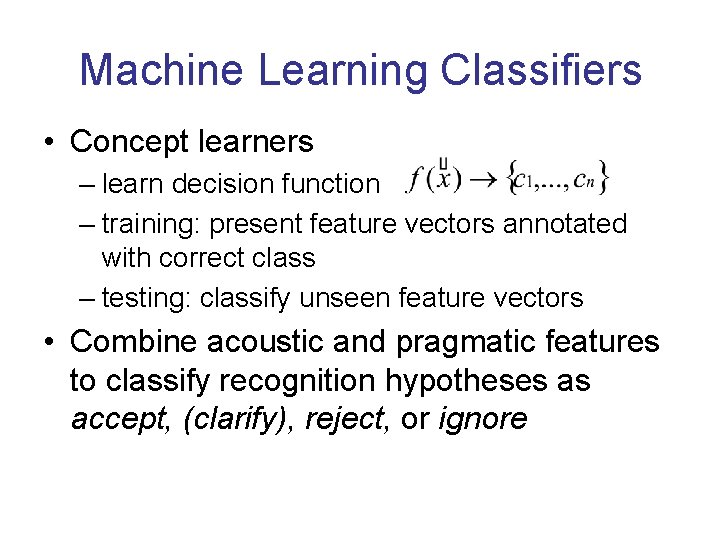

Machine Learning Classifiers • Concept learners – learn decision function – training: present feature vectors annotated with correct class – testing: classify unseen feature vectors • Combine acoustic and pragmatic features to classify recognition hypotheses as accept, (clarify), reject, or ignore
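The train/test cycle described above can be sketched with a very small memory-based (k-nearest-neighbour) learner. This is only an illustration: the two features, their values, and the choice of learner are made up for the example and are not the feature set or learners used in the experiments.

```python
from collections import Counter
from math import dist

def train(examples):
    """Memory-based 'training': store the annotated feature vectors as they are."""
    return list(examples)

def classify(memory, features, k=3):
    """Return the majority class among the k stored vectors nearest to `features`."""
    nearest = sorted(memory, key=lambda ex: dist(ex[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical training data: (acoustic confidence, pragmatic score) -> class
TRAINING = [
    ((0.90, 0.80), "accept"),
    ((0.85, 0.70), "accept"),
    ((0.80, 0.90), "accept"),
    ((0.30, 0.20), "reject"),
    ((0.40, 0.10), "reject"),
    ((0.20, 0.30), "reject"),
]

memory = train(TRAINING)
print(classify(memory, (0.88, 0.75)))  # unseen vector close to the "accept" examples
print(classify(memory, (0.25, 0.15)))  # unseen vector close to the "reject" examples
```

Each feature vector mixes one acoustic and one pragmatic value, which is the point of the approach: the learner, not a hand-set threshold, decides how the two sources are weighed.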

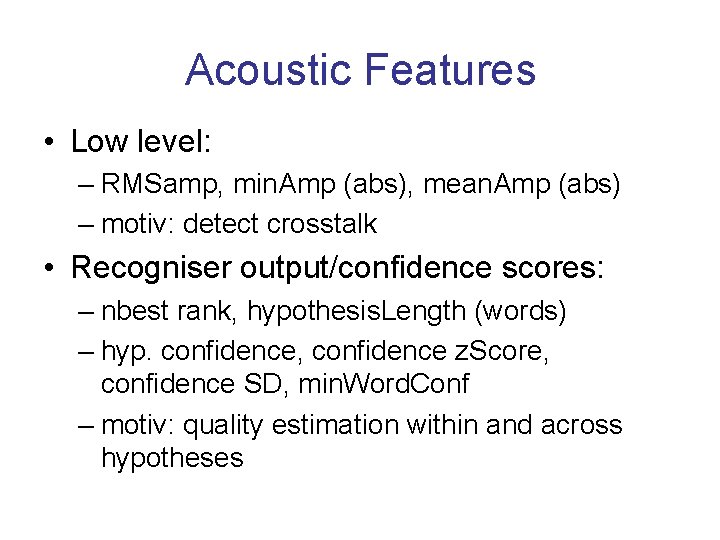

Acoustic Information • Derived from speech waveforms and recognition output • Low level features – amplitude, pitch (F0), duration, tempo (e.g. Levow 1998, Litman et al. 2000) • Recogniser confidences – normalised probability that a sequence of recognised words is correct (e.g. Wessel et al. 2001)

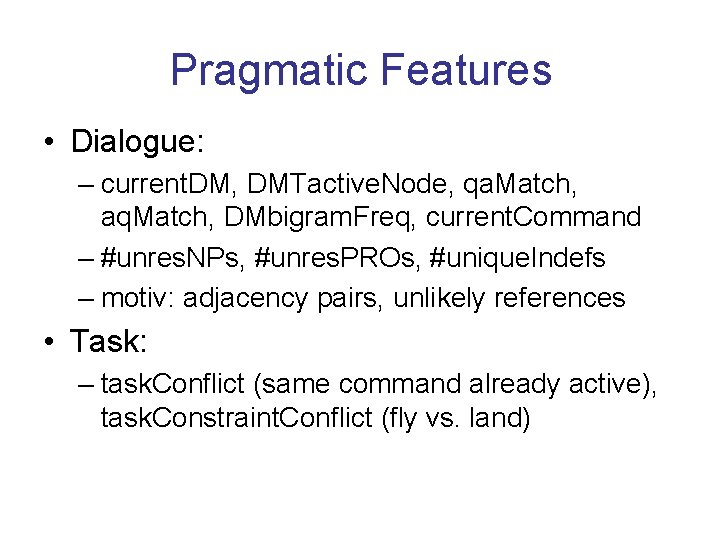

Pragmatic Information • Derived from the dialogue context and task knowledge • Dialogue features – adjacency pairs: current/previous dialogue move, DM bigram frequencies – reference: unresolvable definite NPs/PROs • Task features (scenario dependent) – evaluation of move scores (Chess), conflicts in the preconditions and effects of actions (WITAS)

Experiment 1: Chess • Recognise spoken chess move instructions – speech interface to computer chess program • Exemplary domain to test methodology – nice properties, easy to control • Pragmatic features: automatic move evaluation scores (Crafty) • Acoustic features: recogniser confidence scores (Nuance 8.0)

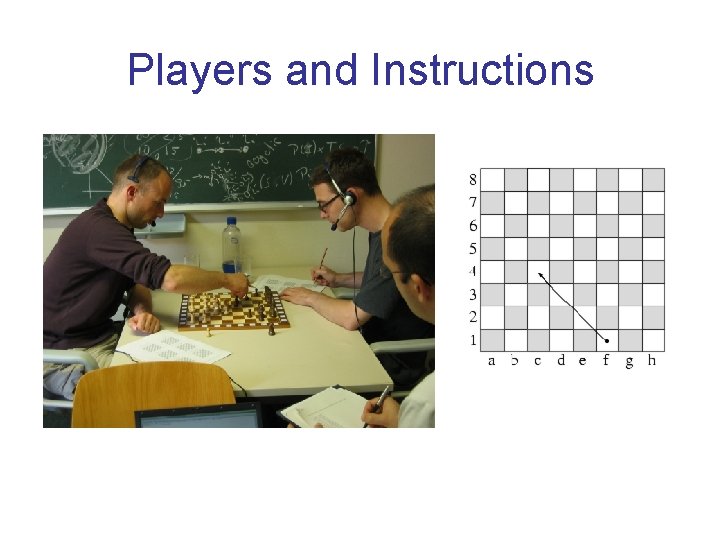

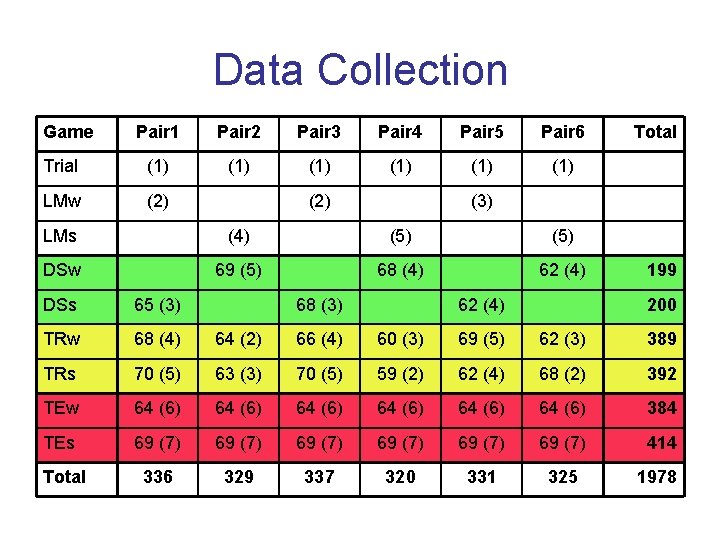

Data & Design • Subjects replay given chess games – instruct each other to move pieces – approx. 2000 move instructions in different data sets (devel, train, test) • 5 x 2 x 6 design – 5 systems for classifying recognition results (main effect) – 2 game levels (strong vs. weak) – 6 pairs of players

Players and Instructions

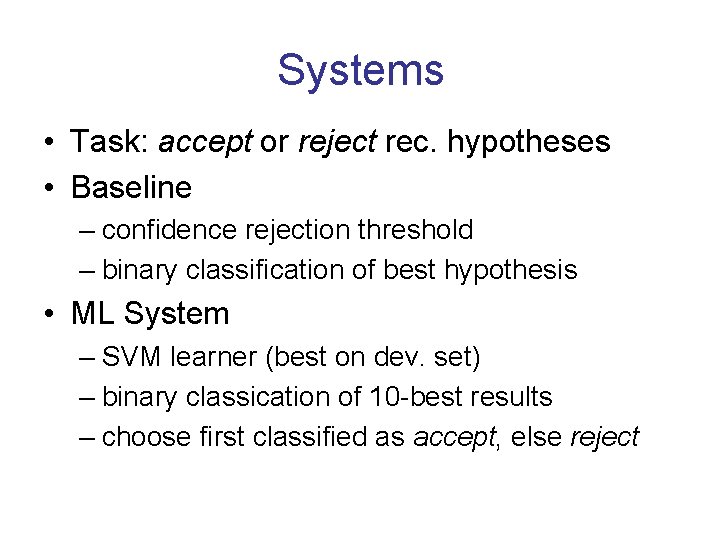

Systems • Task: accept or reject recognition hypotheses • Baseline – confidence rejection threshold – binary classification of best hypothesis • ML System – SVM learner (best on development set) – binary classification of 10-best results – choose first hypothesis classified as accept, else reject
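The two decision procedures can be contrasted in a few lines. This is a sketch under stated assumptions: the threshold value, the confidence scale (0 to 1), the hypothesis fields, and the stand-in classifier are all hypothetical; in the experiment the classifier is a trained SVM.

```python
def baseline_decision(best_confidence, threshold=0.5):
    """Baseline: accept the best hypothesis iff its confidence clears the threshold.
    The threshold value here is an arbitrary placeholder."""
    return "accept" if best_confidence >= threshold else "reject"

def ml_decision(nbest, classify):
    """ML system: go through the n-best list in rank order and return the first
    hypothesis the binary classifier accepts; otherwise reject the utterance."""
    for hypothesis in nbest:
        if classify(hypothesis) == "accept":
            return "accept", hypothesis
    return "reject", None

def toy_classifier(hypothesis):
    """Stand-in for the trained classifier (hypothetical rule, not the SVM)."""
    ok = hypothesis["plausible_move"] and hypothesis["confidence"] > 0.4
    return "accept" if ok else "reject"

# Hypothetical 2-best list: the top hypothesis is acoustically best but implausible
NBEST = [
    {"text": "knight to e five", "confidence": 0.62, "plausible_move": False},
    {"text": "knight to e four", "confidence": 0.58, "plausible_move": True},
]

print(baseline_decision(NBEST[0]["confidence"]))  # looks at the top hypothesis only
decision, chosen = ml_decision(NBEST, toy_classifier)
print(decision, chosen["text"] if chosen else None)
```

The design difference is that the baseline can only accept or reject the top hypothesis, while the n-best scan can recover a correct lower-ranked hypothesis.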

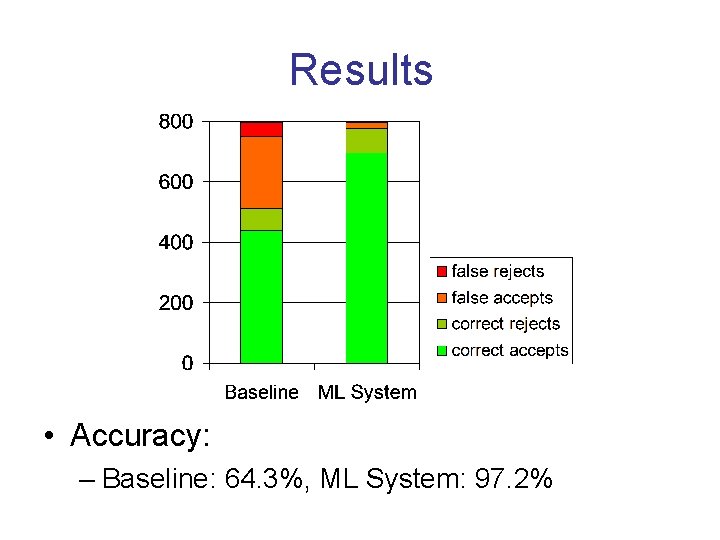

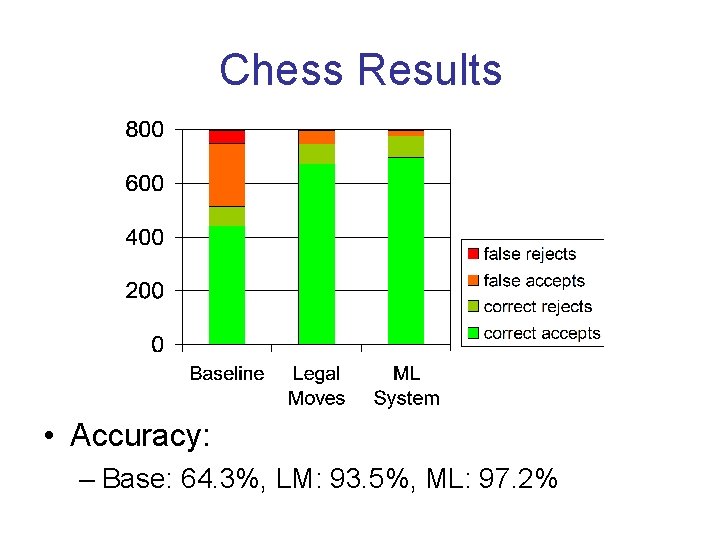

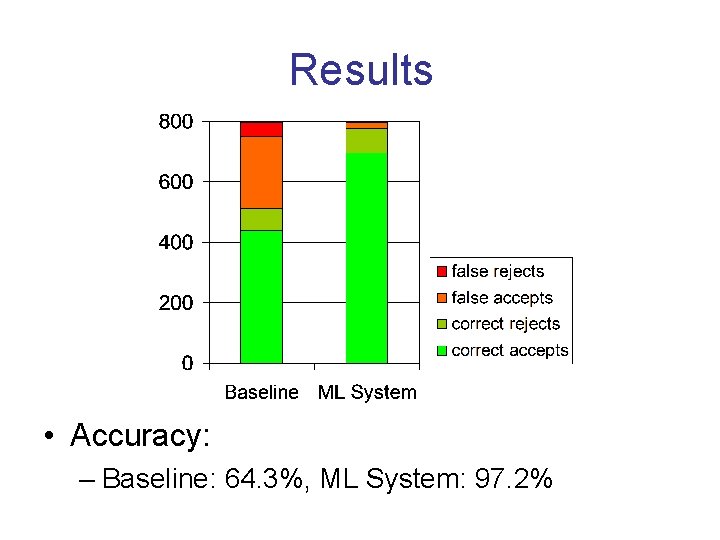

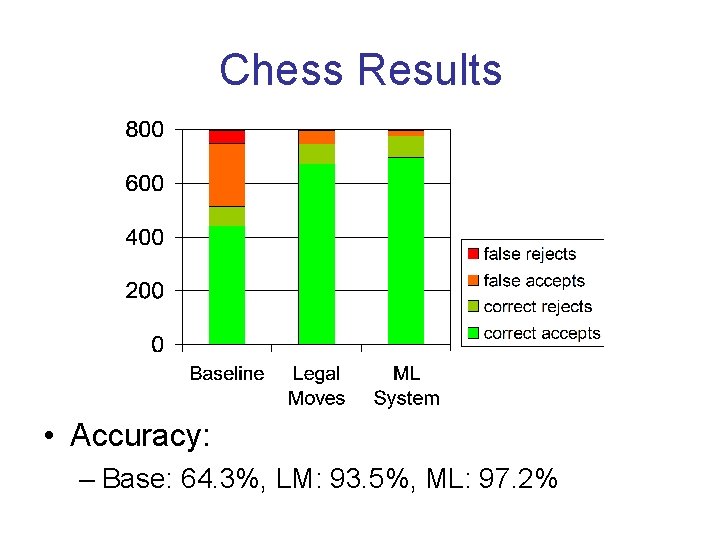

Results • Accuracy: – Baseline: 64.3%, ML System: 97.2%

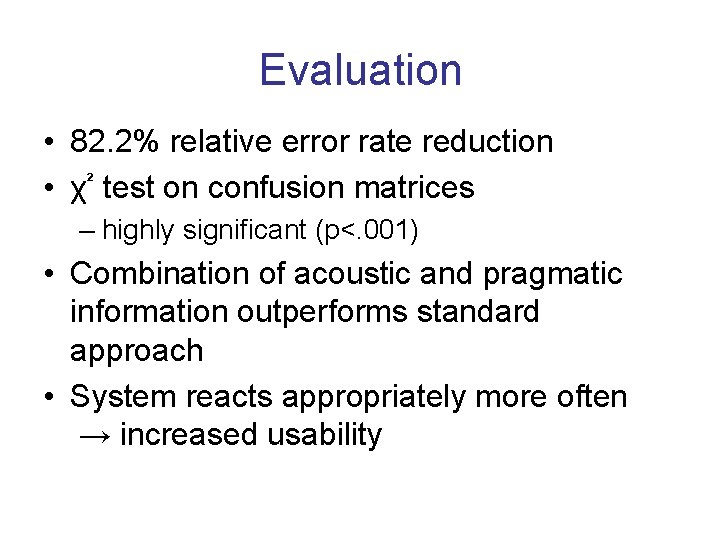

Evaluation • 82.2% relative error rate reduction • χ² test on confusion matrices – highly significant (p < .001) • Combination of acoustic and pragmatic information outperforms standard approach • System reacts appropriately more often → increased usability

Experiment 2: WITAS • Operator interaction with robot helicopter • Multi-modal references, collaborative activities, multi-tasking • Differences from the chess experiment – complex dialogue scenario – complex system (ISU-based, planning, …) – much larger grammar and vocabulary (Chess: 37 GR, vocabulary 50; [something missing]) – open mic recordings (ignore class)

WITAS Screenshot

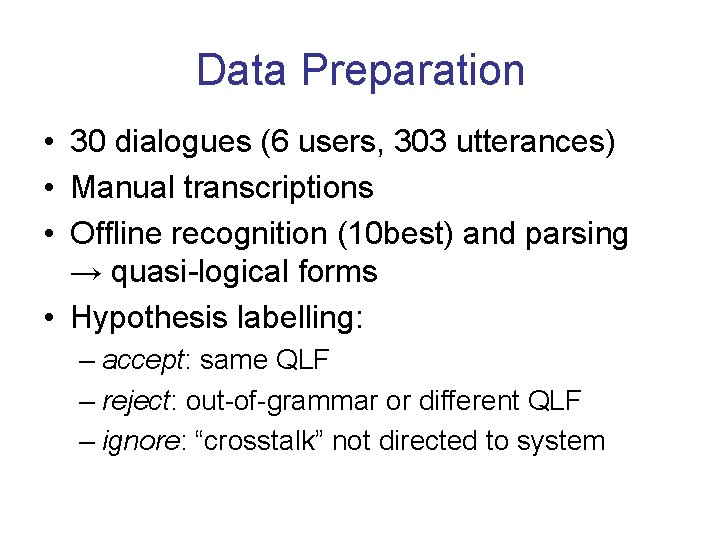

Data Preparation • 30 dialogues (6 users, 303 utterances) • Manual transcriptions • Offline recognition (10 best) and parsing → quasi-logical forms • Hypothesis labelling: – accept: same QLF – reject: out-of-grammar or different QLF – ignore: “crosstalk” not directed to system
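The labelling scheme can be written as a small function. This is a sketch only: representing QLFs as strings, using `None` for out-of-grammar input, and checking the crosstalk flag first are assumptions made for the example.

```python
def label_hypothesis(hypothesis_qlf, reference_qlf, directed_to_system=True):
    """Label one recognition hypothesis.

    hypothesis_qlf: QLF parsed from the hypothesis, or None if out-of-grammar
    reference_qlf:  QLF parsed from the manual transcription, or None
    directed_to_system: False for crosstalk picked up by the open microphone
    """
    if not directed_to_system:
        return "ignore"
    if hypothesis_qlf is not None and hypothesis_qlf == reference_qlf:
        return "accept"
    return "reject"  # out-of-grammar or a different QLF

# Hypothetical QLF strings, for illustration only
print(label_hypothesis("command(fly_to(tower))", "command(fly_to(tower))"))
print(label_hypothesis("command(fly_to(tree))", "command(fly_to(tower))"))
print(label_hypothesis(None, "command(fly_to(tower))"))
print(label_hypothesis("command(land)", None, directed_to_system=False))
```

Comparing QLFs rather than word strings means a hypothesis with minor word errors still counts as accept when it yields the same interpretation.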

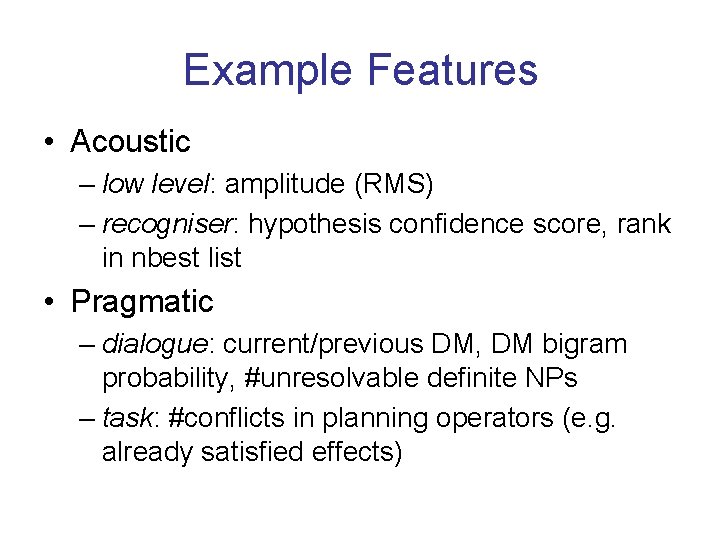

Example Features • Acoustic – low level: amplitude (RMS) – recogniser: hypothesis confidence score, rank in n-best list • Pragmatic – dialogue: current/previous DM, DM bigram probability, #unresolvable definite NPs – task: #conflicts in planning operators (e.g. already satisfied effects)

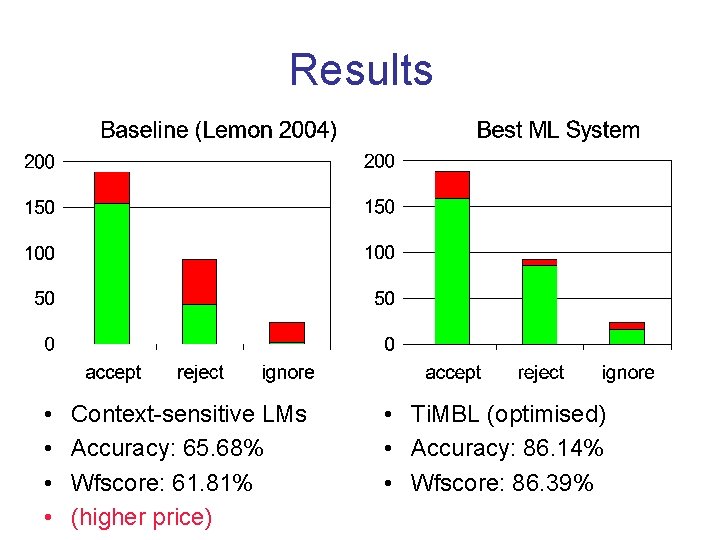

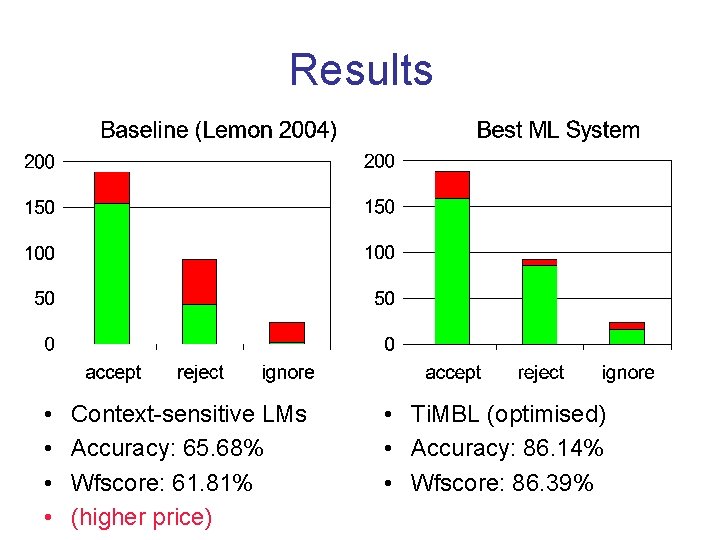

Results • Context-sensitive LMs – Accuracy: 65.68% – Wfscore: 61.81% (higher price) • TiMBL (optimised) – Accuracy: 86.14% – Wfscore: 86.39%

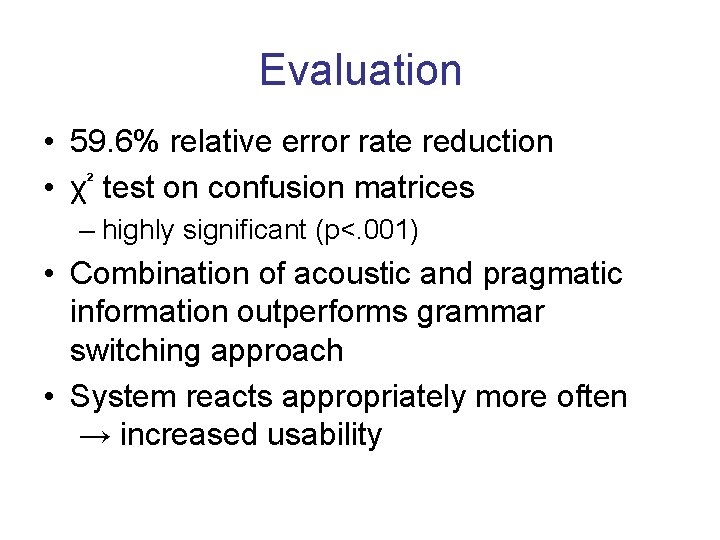

Evaluation • 59.6% relative error rate reduction • χ² test on confusion matrices – highly significant (p < .001) • Combination of acoustic and pragmatic information outperforms grammar switching approach • System reacts appropriately more often → increased usability
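The relative error rate reduction follows from the two WITAS accuracies reported in the results (65.68% for the context-sensitive LM baseline, 86.14% for TiMBL). A minimal check, assuming error rate is simply 100% minus accuracy:

```python
def relative_error_reduction(baseline_accuracy, system_accuracy):
    """Relative error rate reduction in percent, with error = 100 - accuracy."""
    baseline_error = 100.0 - baseline_accuracy
    system_error = 100.0 - system_accuracy
    return 100.0 * (baseline_error - system_error) / baseline_error

reduction = relative_error_reduction(65.68, 86.14)
print(f"{reduction:.1f}%")  # 59.6%
```

The baseline error of 34.32% drops to 13.86%, so about six in ten of the baseline's errors are removed.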

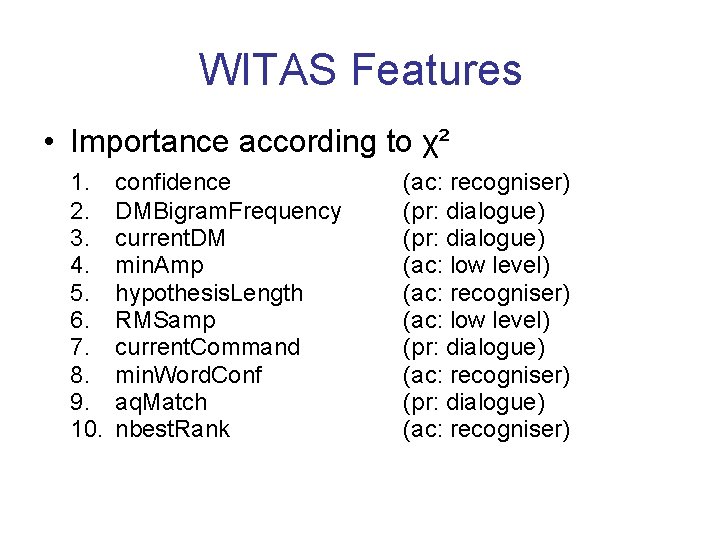

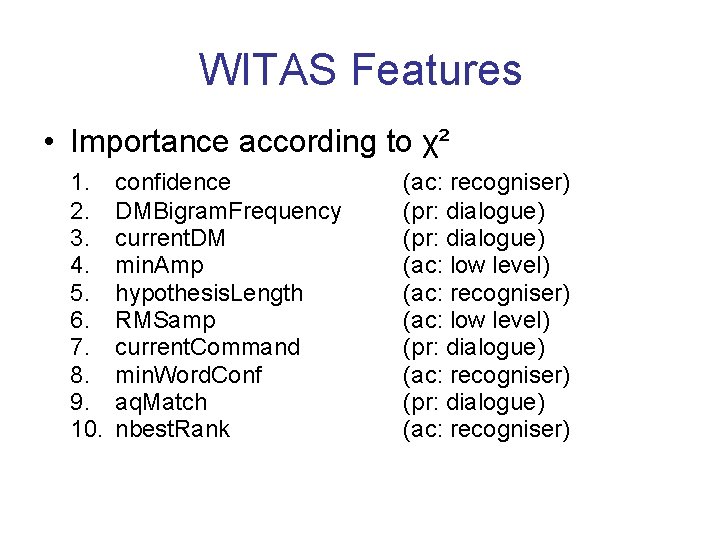

WITAS Features • Importance according to χ²

1. confidence (ac: recogniser)
2. DMBigramFrequency (pr: dialogue)
3. currentDM (pr: dialogue)
4. minAmp (ac: low level)
5. hypothesisLength (ac: recogniser)
6. RMSamp (ac: low level)
7. currentCommand (pr: dialogue)
8. minWordConf (ac: recogniser)
9. aqMatch (pr: dialogue)
10. nbestRank (ac: recogniser)

Summary/Achievements • Assessment of recognition quality for spoken dialogue systems (grounding) • Combination of acoustic and pragmatic information via machine learning • Highly significant improvements in classification accuracy over standard methods (incl. “grammar switching”) • Expect better system behaviour and user satisfaction

Topics for Future Work • Usability evaluation – systems with and without classification module • Generic and system-specific features – which features are available across systems? • Tools for ISU-based systems – module in DIPPER software library • Clarification – flexible generation (alternative questions, word-level clarification)

APPENDIX

Our Proposal • Combine acoustic and pragmatic information in a principled way • Machine learning to predict the grounding status of competing recognition hypotheses of user utterances • Evaluation against standard methods in spoken dialogue system engineering – confidence rejection thresholds

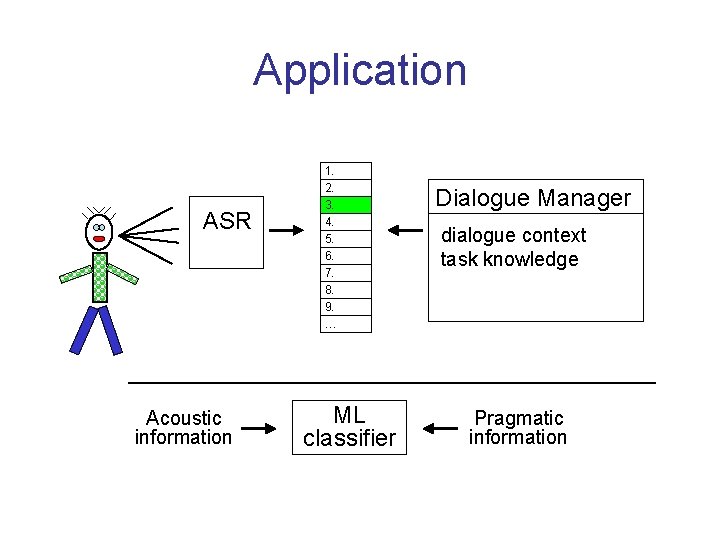

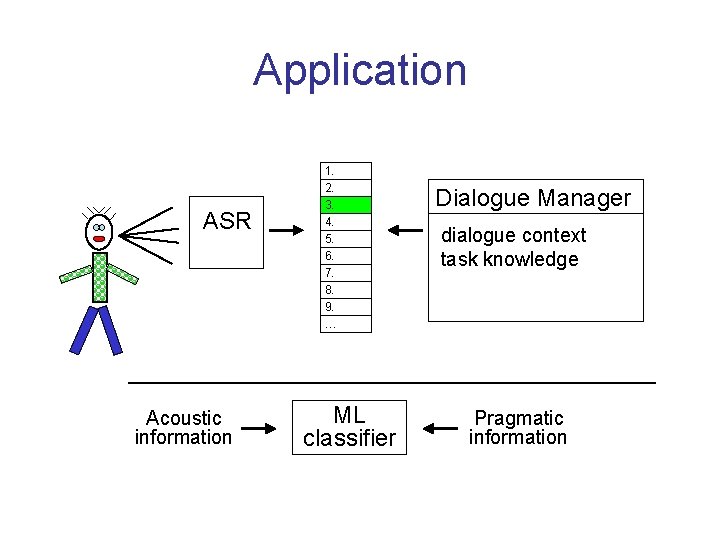

Application • ASR n-best list (1. 2. 3. 4. 5. 6. 7. 8. 9. …): acoustic information • Dialogue Manager (dialogue context, task knowledge): pragmatic information • Both feed the ML classifier

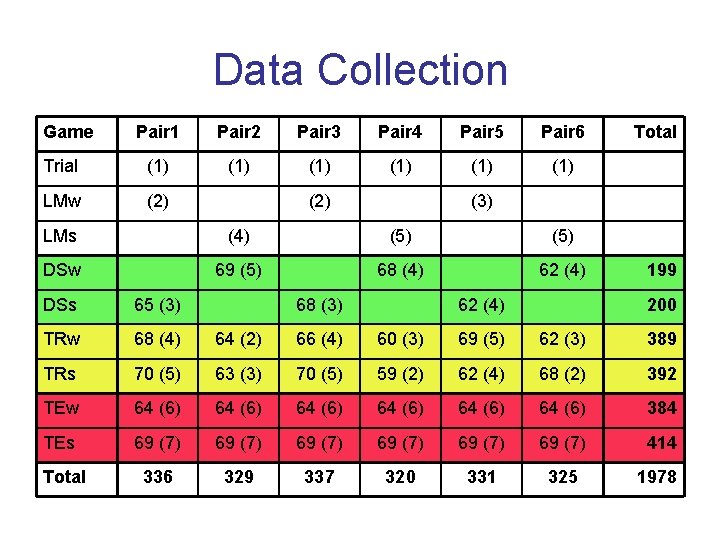

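Data Collection

| Game | Pair 1 | Pair 2 | Pair 3 | Pair 4 | Pair 5 | Pair 6 | Total |
|---|---|---|---|---|---|---|---|
| TRw | 68 (4) | 64 (2) | 66 (4) | 60 (3) | 69 (5) | 62 (3) | 389 |
| TRs | 70 (5) | 63 (3) | 70 (5) | 59 (2) | 62 (4) | 68 (2) | 392 |
| TEw | 64 (6) | 64 (6) | 64 (6) | 64 (6) | 64 (6) | 64 (6) | 384 |
| TEs | 69 (7) | 69 (7) | 69 (7) | 69 (7) | 69 (7) | 69 (7) | 414 |
| Total (all rows) | 336 | 329 | 337 | 320 | 331 | 325 | 1978 |

Remaining rows (cell positions not recoverable): Trial, LMw, LMs: (1) (1) (1) (2) (3) (4) (5); DSw, DSs: 69 (5), 68 (4), 62 (4), 65 (3), 68 (3), 62 (4); row totals 199 and 200.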

Chess Results • Accuracy: – Base: 64.3%, LM: 93.5%, ML: 97.2%

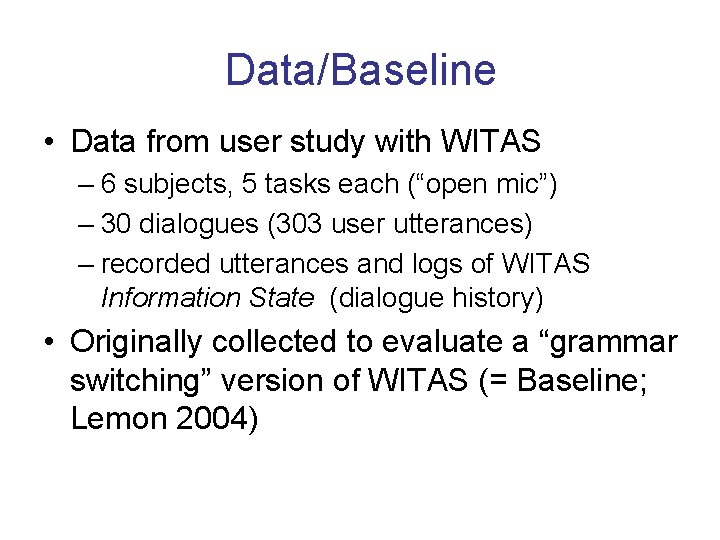

Data/Baseline • Data from user study with WITAS – 6 subjects, 5 tasks each (“open mic”) – 30 dialogues (303 user utterances) – recorded utterances and logs of WITAS Information State (dialogue history) • Originally collected to evaluate a “grammar switching” version of WITAS (= Baseline; Lemon 2004)

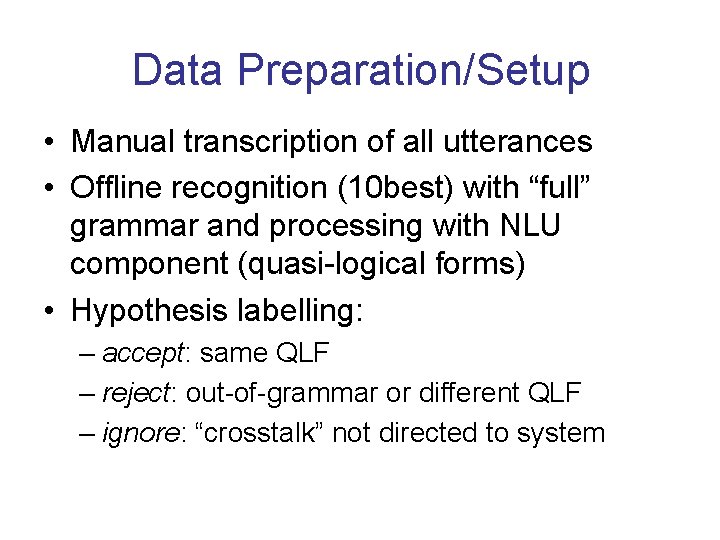

Data Preparation/Setup • Manual transcription of all utterances • Offline recognition (10 best) with “full” grammar and processing with NLU component (quasi-logical forms) • Hypothesis labelling: – accept: same QLF – reject: out-of-grammar or different QLF – ignore: “crosstalk” not directed to system

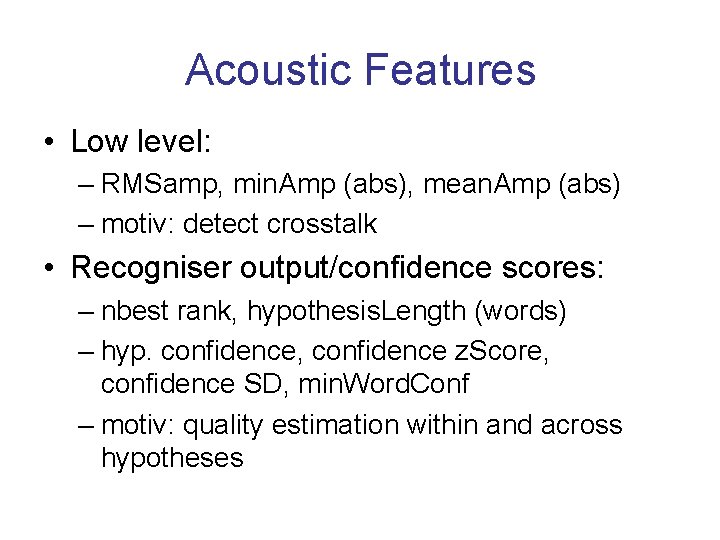

Acoustic Features • Low level: – RMSamp, minAmp (abs), meanAmp (abs) – motivation: detect crosstalk • Recogniser output/confidence scores: – nbest rank, hypothesisLength (words) – hypothesis confidence, confidence zScore, confidence SD, minWordConf – motivation: quality estimation within and across hypotheses
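A sketch of how features of this kind could be computed from raw samples and an n-best list. The exact definitions used in the thesis are not given on the slide, so the formulas below (RMS over all samples, population standard deviation, z-score relative to the n-best list) are assumptions, and the input values are made up.

```python
from math import sqrt
from statistics import mean, pstdev

def amplitude_features(samples):
    """Low-level amplitude features of one utterance (samples: signed amplitudes)."""
    magnitudes = [abs(s) for s in samples]
    return {
        "RMSamp": sqrt(mean([s * s for s in samples])),
        "minAmp": min(magnitudes),
        "meanAmp": mean(magnitudes),
    }

def confidence_features(nbest_confidences):
    """Per-hypothesis confidence features, computed across the whole n-best list."""
    mu = mean(nbest_confidences)
    sd = pstdev(nbest_confidences)
    return [
        {
            "nbestRank": rank,
            "confidence": conf,
            "confidenceSD": sd,
            # z-score: how far this hypothesis stands out from its competitors
            "confidenceZScore": (conf - mu) / sd if sd > 0 else 0.0,
        }
        for rank, conf in enumerate(nbest_confidences, start=1)
    ]

amp = amplitude_features([3, -4, 0, 5])      # hypothetical samples
conf = confidence_features([60, 50, 40])     # hypothetical n-best confidences
print(amp)
print(conf[0])
```

The z-score and SD compare a hypothesis with its competitors ("across hypotheses"), while the raw confidence describes it in isolation ("within").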

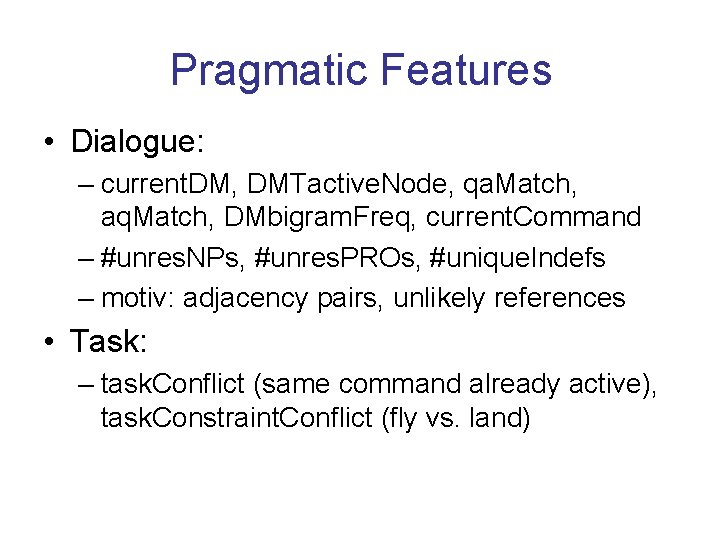

Pragmatic Features • Dialogue: – currentDM, DMTactiveNode, qaMatch, aqMatch, DMbigramFreq, currentCommand – #unresNPs, #unresPROs, #uniqueIndefs – motivation: adjacency pairs, unlikely references • Task: – taskConflict (same command already active), taskConstraintConflict (fly vs. land)

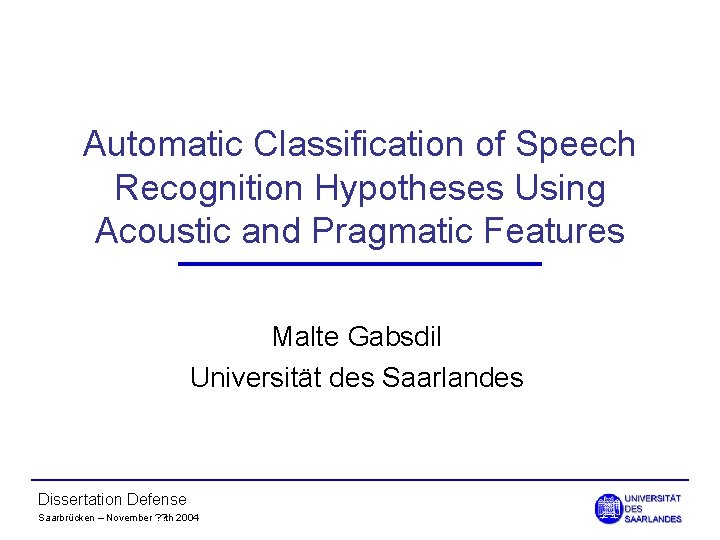

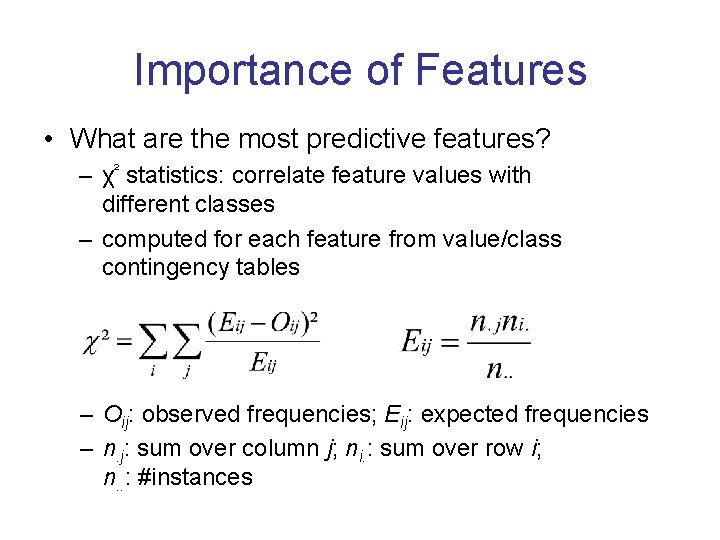

Importance of Features • What are the most predictive features? – χ² statistics: correlate feature values with different classes – computed for each feature from value/class contingency tables – Oij: observed frequencies; Eij: expected frequencies – n.j: sum over column j; ni.: sum over row i; n..: #instances
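The formula itself is not part of the extracted text. Assuming the slide uses the standard Pearson χ² statistic, it reads as follows in the symbols defined above, with rows i ranging over feature values and columns j over classes:

```latex
\chi^2 = \sum_{i} \sum_{j} \frac{(O_{ij} - E_{ij})^2}{E_{ij}},
\qquad
E_{ij} = \frac{n_{i\cdot}\, n_{\cdot j}}{n_{\cdot\cdot}}
```

A feature whose values are distributed across the classes exactly as expected under independence gets χ² = 0; the larger the value, the more predictive the feature.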

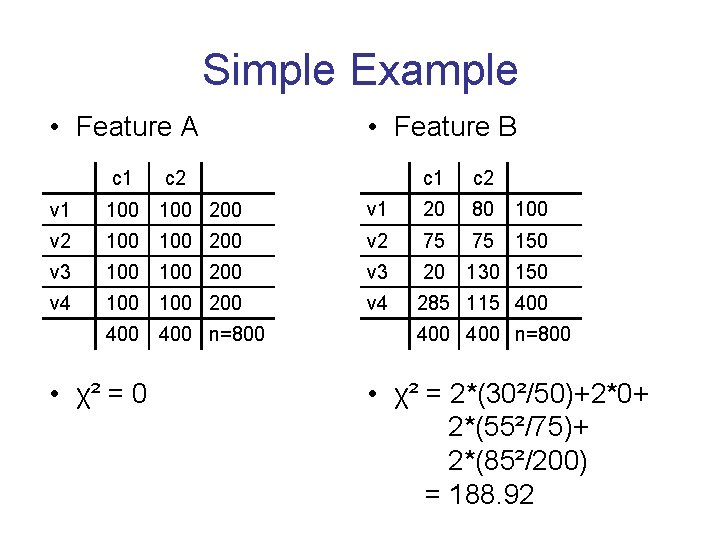

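Simple Example

• Feature A

| | c1 | c2 | Total |
|---|---|---|---|
| v1 | 100 | 100 | 200 |
| v2 | 100 | 100 | 200 |
| v3 | 100 | 100 | 200 |
| v4 | 100 | 100 | 200 |
| Total | 400 | 400 | n=800 |

• Feature B

| | c1 | c2 | Total |
|---|---|---|---|
| v1 | 20 | 80 | 100 |
| v2 | 75 | 75 | 150 |
| v3 | 20 | 130 | 150 |
| v4 | 285 | 115 | 400 |
| Total | 400 | 400 | n=800 |

• χ² (Feature B) = 2·(30²/50) + 2·0 + 2·(55²/75) + 2·(85²/200) = 188.92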
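The worked example can be checked with a few lines of code. This is a sketch of the standard Pearson χ² computation on a value/class contingency table; it assumes Feature A has 100 instances in every cell, as its row totals of 200 and column totals of 400 imply.

```python
def chi_square(table):
    """Pearson chi-square for a contingency table (rows: feature values, columns: classes)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    return statistic

FEATURE_A = [[100, 100], [100, 100], [100, 100], [100, 100]]
FEATURE_B = [[20, 80], [75, 75], [20, 130], [285, 115]]

print(round(chi_square(FEATURE_A), 2))  # 0.0: values carry no class information
print(round(chi_square(FEATURE_B), 2))  # 188.92, as in the slide
```

Feature A scores 0 because every value is split evenly between the classes, while Feature B's skewed rows (v1, v3, v4) each contribute to the total.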