Automatic Chinese Text Categorization: Feature Engineering and Comparison of Classification Approaches

Yi-An Lin and Yu-Te Lin

Motivation

- Text categorization (TC) is extensively researched for English but not for Chinese.
- How does feature engineering help in Chinese?
- Should Chinese content be segmented?
- Which ML method is best for TC?
  - Naïve Bayes, SVM, Decision Tree, k-Nearest Neighbor, Maximum Entropy, or language model methods?

Outline

- Data Preparation
- Feature Selection
- Feature Vector Encoding
- Comparison of Classifiers
- Feature Engineering
- Comparison after Feature Engineering
- Conclusion

Data Preparation

- Tool: Yahoo News Crawler
- Categories:
  - Entertainment
  - Politics
  - Business
  - Sports

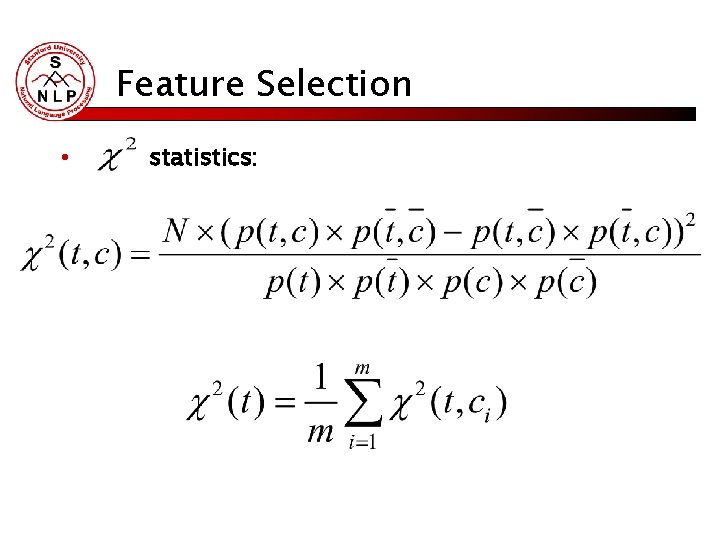

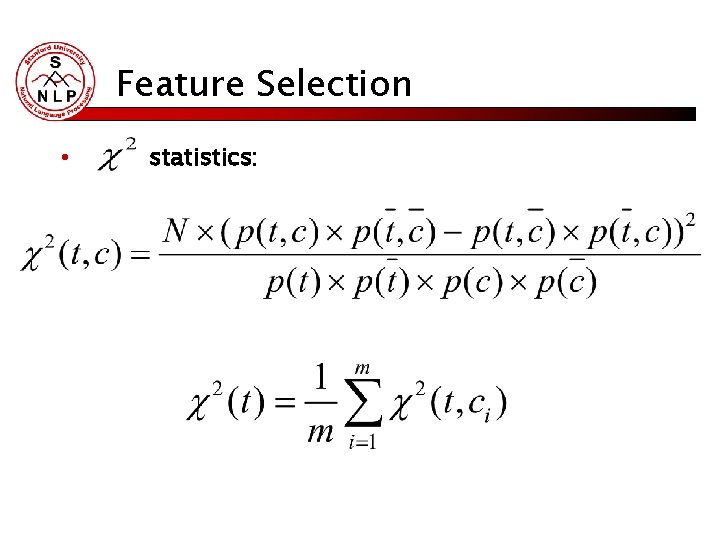

Feature Selection

- Statistics:

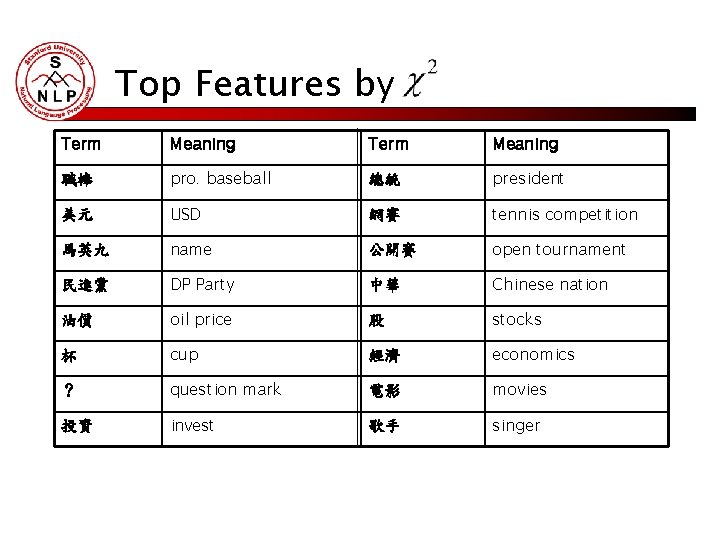

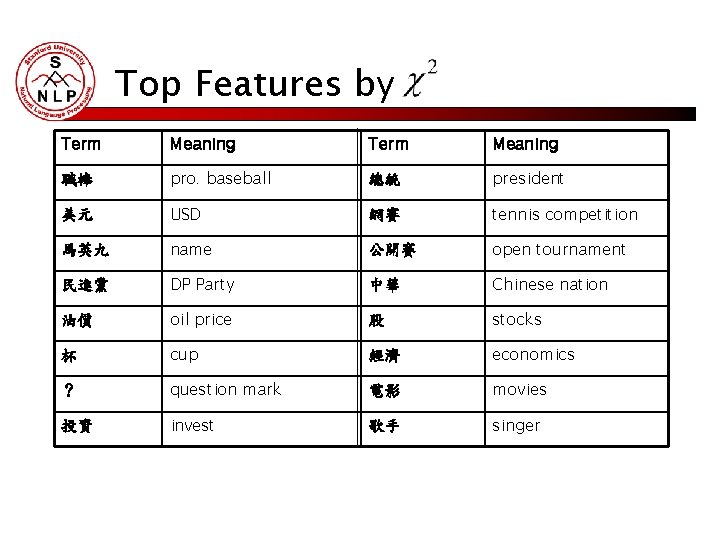

Top Features by

| Term | Meaning |
| --- | --- |
| 職棒 | pro. baseball |
| 總統 | president |
| 美元 | USD |
| 網賽 | tennis competition |
| 馬英九 | name |
| 公開賽 | open tournament |
| 民進黨 | DP Party |
| 中華 | Chinese nation |
| 油價 | oil price |
| 股 | stocks |
| 杯 | cup |
| 經濟 | economics |
| ? | question mark |
| 電影 | movies |
| 投資 | invest |
| 歌手 | singer |

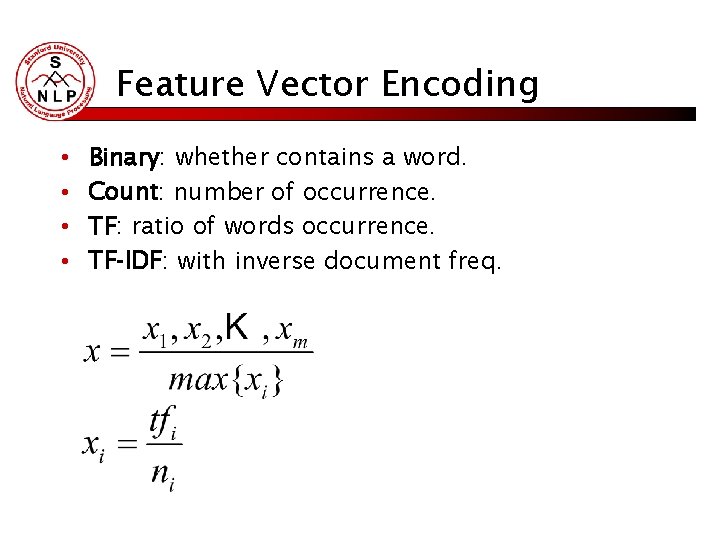

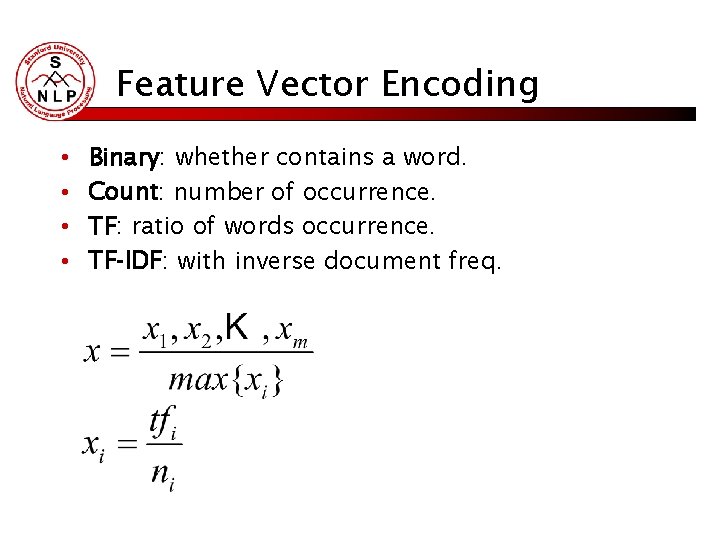

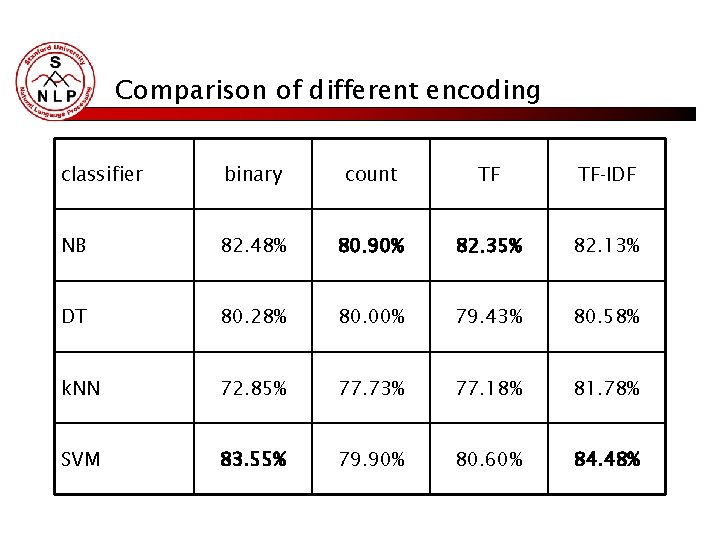

Feature Vector Encoding

- Binary: whether the document contains a term.
- Count: number of occurrences of the term.
- TF: the term's occurrences as a ratio of all term occurrences in the document.
- TF-IDF: TF weighted by inverse document frequency.
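The four encodings can be illustrated with a minimal sketch. It assumes documents are already split into tokens, and it uses `log(N / df)` for the inverse document frequency, which is one common variant; the slides do not state which IDF formula was used. The function name `encode` and the sample tokens are hypothetical.

```python
import math
from collections import Counter


def encode(docs):
    """Encode tokenized documents four ways.

    docs: list of token lists.
    Returns a dict mapping encoding name -> list of {term: weight} dicts.
    """
    n_docs = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(term for doc in docs for term in set(doc))

    out = {"binary": [], "count": [], "tf": [], "tfidf": []}
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        # Binary: 1 if the term occurs at all.
        out["binary"].append({t: 1 for t in counts})
        # Count: raw number of occurrences.
        out["count"].append(dict(counts))
        # TF: occurrences divided by document length.
        tf = {t: c / total for t, c in counts.items()}
        out["tf"].append(tf)
        # TF-IDF: TF times log(N / df); this IDF variant is an assumption.
        out["tfidf"].append(
            {t: w * math.log(n_docs / df[t]) for t, w in tf.items()}
        )
    return out


# Hypothetical toy corpus of two already-segmented documents.
docs = [["股", "油價", "股"], ["職棒", "股"]]
vectors = encode(docs)
```

With this IDF variant, a term that appears in every document (here 股) gets a TF-IDF weight of zero, which shows how TF-IDF discounts terms that do not discriminate between documents.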

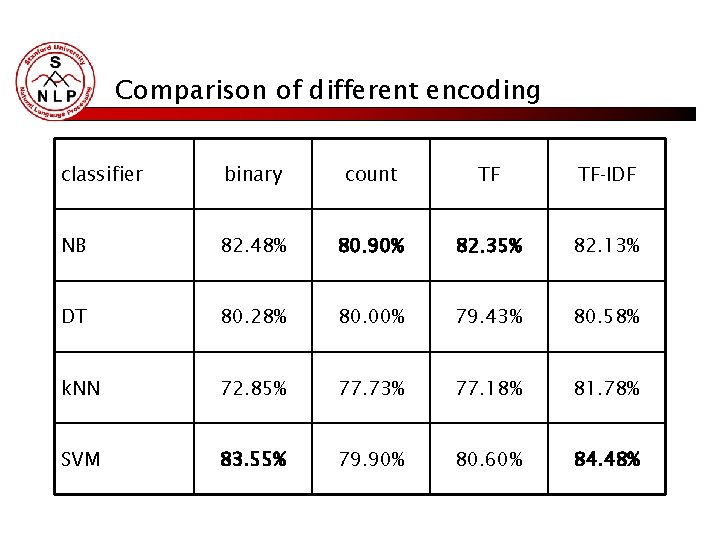

Comparison of different encodings

| Classifier | Binary | Count | TF | TF-IDF |
| --- | --- | --- | --- | --- |
| NB | 82.48% | 80.90% | 82.35% | 82.13% |
| DT | 80.28% | 80.00% | 79.43% | 80.58% |
| kNN | 72.85% | 77.73% | 77.18% | 81.78% |
| SVM | 83.55% | 79.90% | 80.60% | 84.48% |

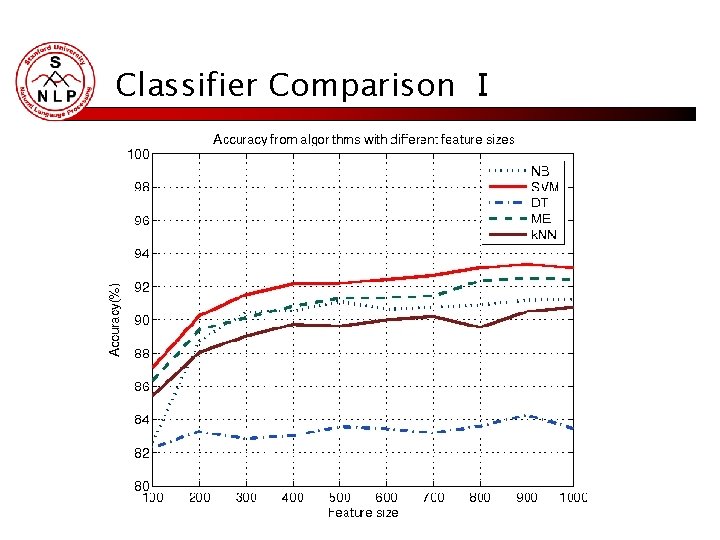

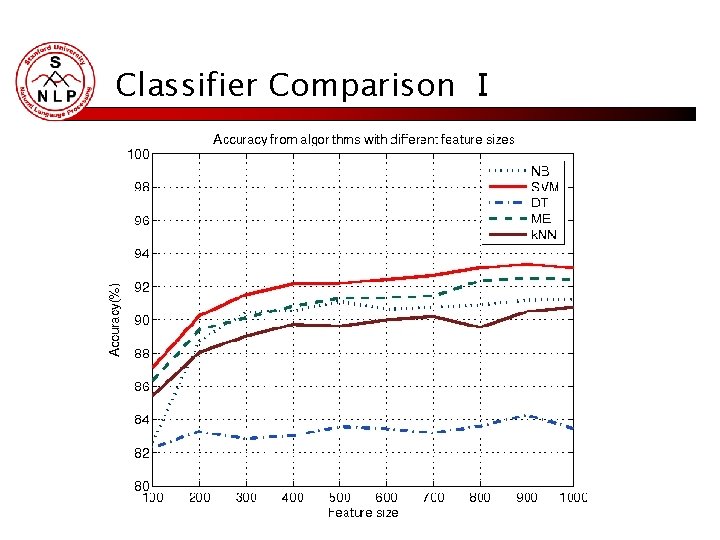

Classifier Comparison Ⅰ

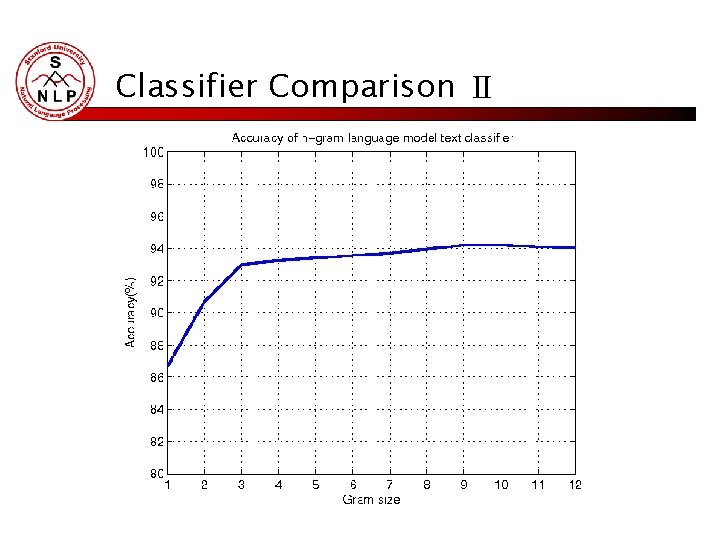

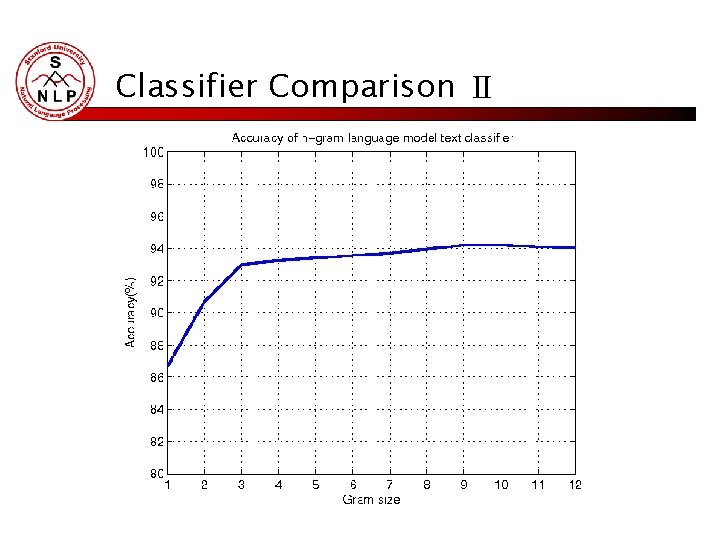

Classifier Comparison Ⅱ

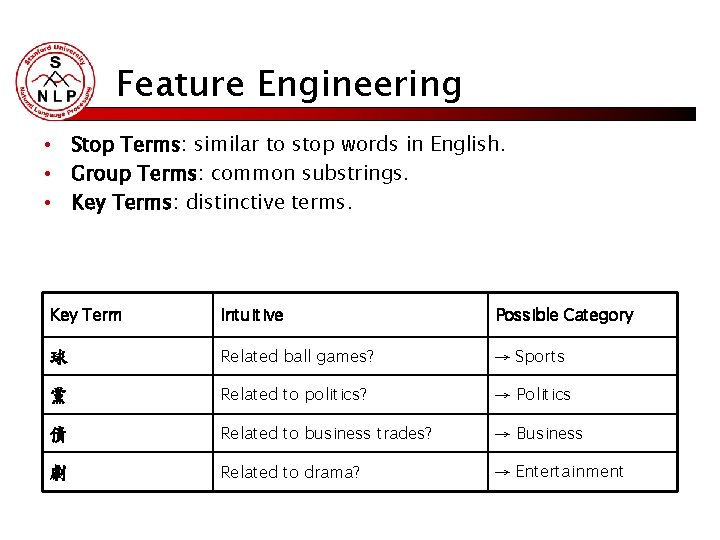

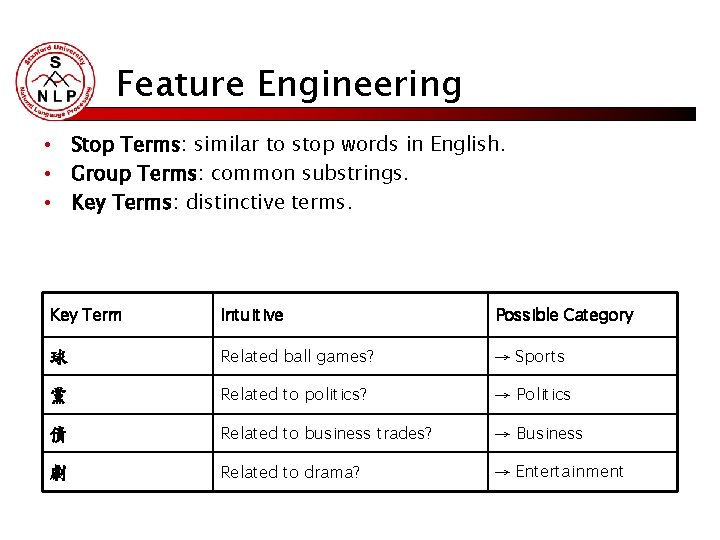

Feature Engineering

- Stop terms: similar to stop words in English.
- Group terms: common substrings.
- Key terms: distinctive terms.

| Key Term | Intuitive Possible Category |
| --- | --- |
| 球 | Related to ball games? → Sports |
| 黨 | Related to politics? → Politics |
| 債 | Related to business trades? → Business |
| 劇 | Related to drama? → Entertainment |
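One way the three kinds of terms could be applied to a token list is sketched below. The slides do not describe the actual procedure, so every detail here is an assumption: stop terms are dropped, a token containing a group term is replaced by that shared substring, and a token containing a key term gets an extra marker feature. The function `engineer`, the `KEY:` prefix, and the example term lists are hypothetical.

```python
def engineer(tokens, stop_terms, group_terms, key_terms):
    """Apply stop-, group-, and key-term rules to a list of tokens.

    This is an illustrative guess at the procedure, not the authors' method.
    """
    features = []
    for tok in tokens:
        # Stop terms: discard uninformative tokens.
        if tok in stop_terms:
            continue
        # Group terms: collapse tokens sharing a common substring
        # into that substring (first match in list order wins).
        group = next((g for g in group_terms if g in tok), None)
        features.append(group if group is not None else tok)
        # Key terms: add a marker feature for each distinctive
        # character found inside the token.
        for k in key_terms:
            if k in tok:
                features.append("KEY:" + k)
    return features


# Hypothetical inputs for illustration only.
tokens = ["的", "職棒", "棒球", "民進黨", "公開賽"]
result = engineer(
    tokens,
    stop_terms={"的"},
    group_terms=["賽"],
    key_terms=["球", "黨"],
)
# result == ["職棒", "棒球", "KEY:球", "民進黨", "KEY:黨", "賽"]
```

Collapsing tokens into a shared substring reduces the number of distinct features, but the merged feature can then cover unrelated terms, which is one source of ambiguity.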

Comparison of feature engineering methods

S: stop terms; G: group terms; K: key terms

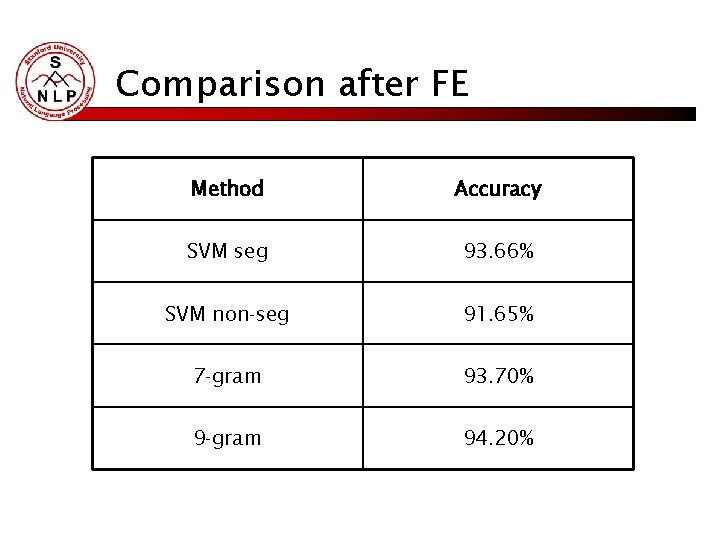

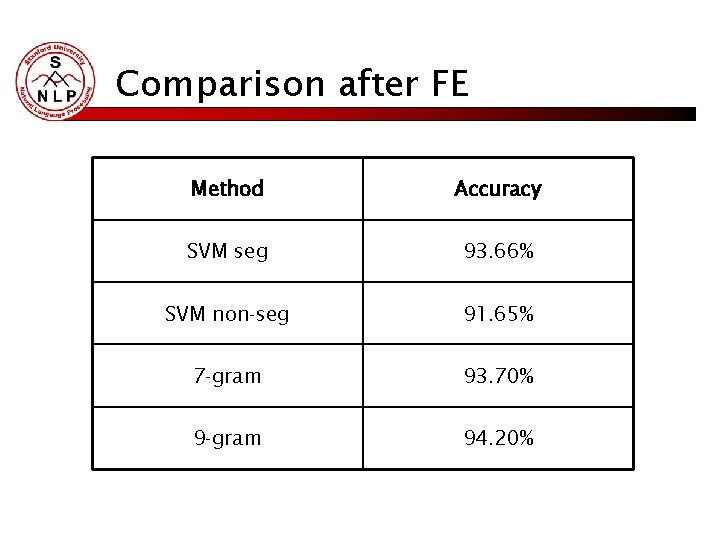

Comparison after FE

| Method | Accuracy |
| --- | --- |
| SVM seg | 93.66% |
| SVM non-seg | 91.65% |
| 7-gram | 93.70% |
| 9-gram | 94.20% |

Conclusion

- The n-gram model outperforms the other methods:
  - The nature of language models: they consider all features and avoid error-prone ones.
  - No restrictive independence assumption (e.g., as in NB).
  - Better smoothing.
- Feature engineering also helps reduce sparsity but may cause ambiguity.
- Semantic understanding could be the next thing to try in future research.
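To make the language-model approach concrete, here is a minimal sketch of a character n-gram classifier: one n-gram model is trained per category, and a document is assigned to the category whose model gives it the highest log-likelihood. It works on raw characters, so no word segmentation is needed. Add-alpha smoothing and uniform category priors are simplifying assumptions; the slides do not say which smoothing method was used. The class name `CharNgramClassifier` and the toy training strings are hypothetical.

```python
import math
from collections import Counter, defaultdict


class CharNgramClassifier:
    """Per-category character n-gram language models (illustrative sketch)."""

    def __init__(self, n=2, alpha=1.0):
        self.n = n
        self.alpha = alpha  # add-alpha smoothing constant (an assumption)
        self.ngrams = defaultdict(Counter)    # label -> (context, char) counts
        self.contexts = defaultdict(Counter)  # label -> context counts
        self.vocab = set()

    def _grams(self, text):
        # Pad the start so the first characters also have a full context.
        padded = "^" * (self.n - 1) + text
        for i in range(self.n - 1, len(padded)):
            yield padded[i - self.n + 1:i], padded[i]

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            for ctx, ch in self._grams(text):
                self.ngrams[label][(ctx, ch)] += 1
                self.contexts[label][ctx] += 1
                self.vocab.add(ch)
        return self

    def log_likelihood(self, text, label):
        v = len(self.vocab) + 1  # +1 reserves mass for unseen characters
        total = 0.0
        for ctx, ch in self._grams(text):
            num = self.ngrams[label][(ctx, ch)] + self.alpha
            den = self.contexts[label][ctx] + self.alpha * v
            total += math.log(num / den)
        return total

    def predict(self, text):
        # Uniform priors assumed: pick the category with the best likelihood.
        return max(self.ngrams, key=lambda lb: self.log_likelihood(text, lb))


# Hypothetical toy training data built from terms shown earlier.
clf = CharNgramClassifier(n=2).fit(
    ["職棒公開賽", "總統民進黨", "油價美元股"],
    ["Sports", "Politics", "Business"],
)
prediction = clf.predict("職棒")
```

Because each character is conditioned on the preceding ones, the model does not treat features as independent the way Naïve Bayes does, and smoothing keeps unseen n-grams from zeroing out a category's score.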