Automated Speaker-Aware Audio/Video Meeting Transcript


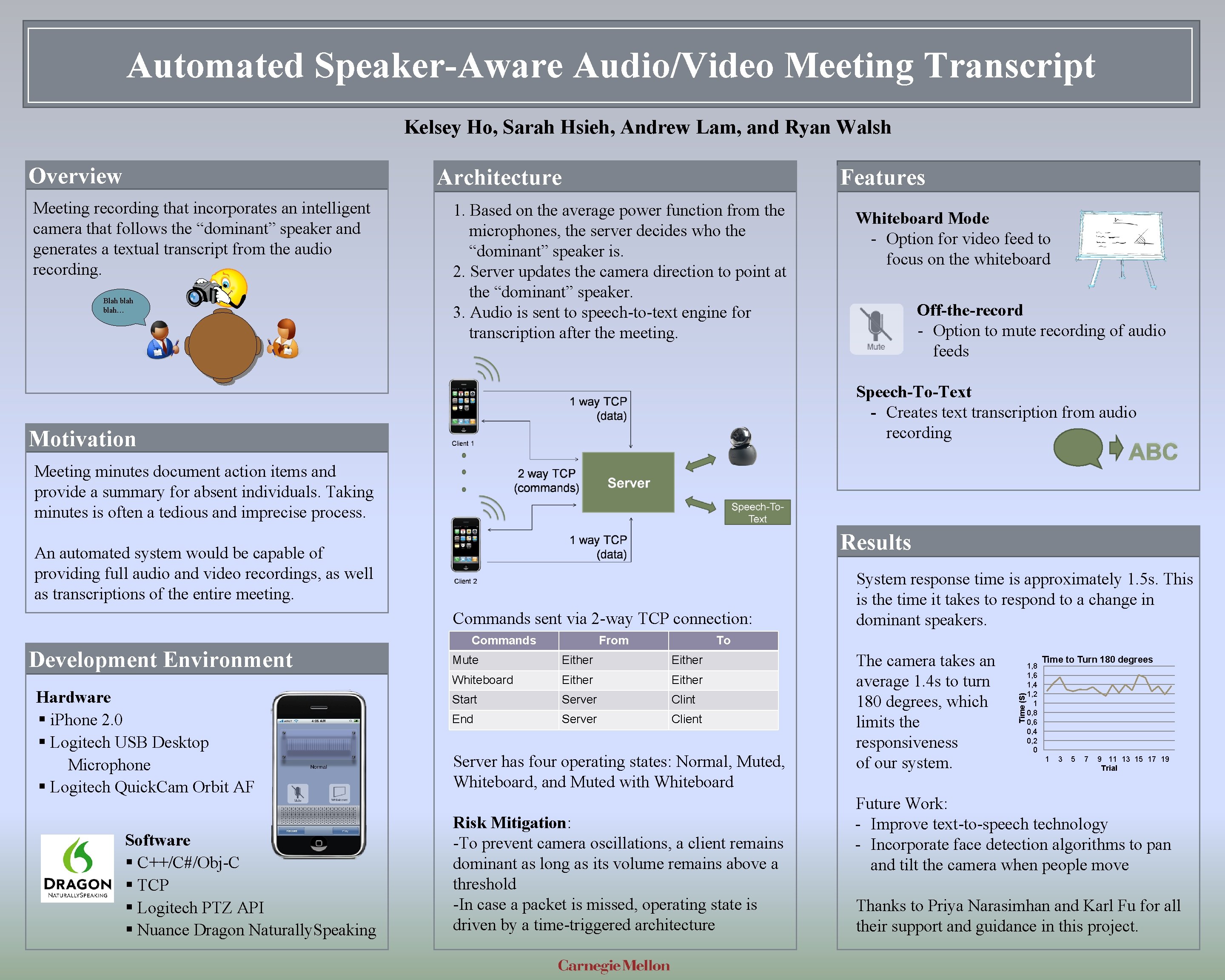

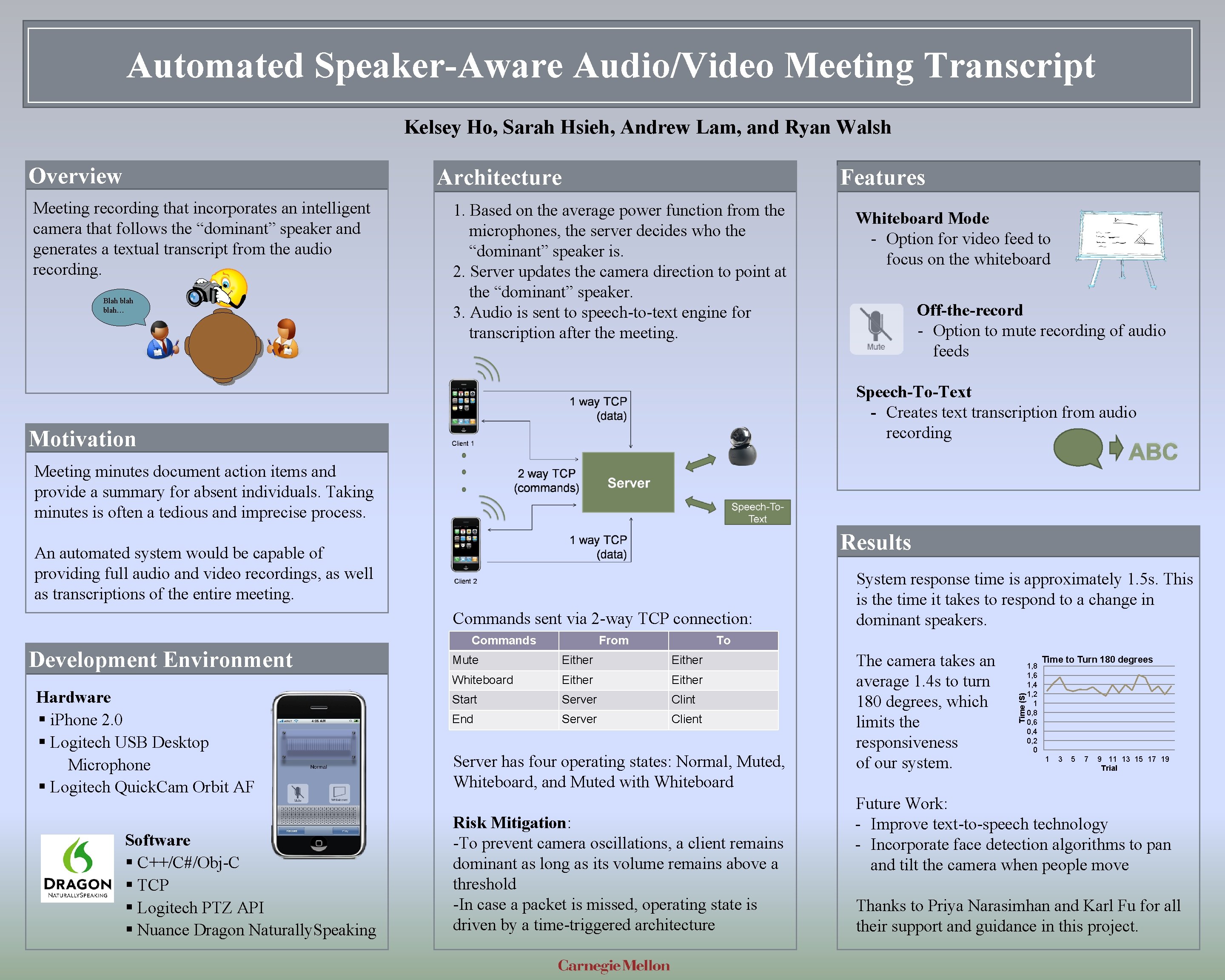

Kelsey Ho, Sarah Hsieh, Andrew Lam, and Ryan Walsh

Overview

A meeting-recording system that uses an intelligent camera to follow the "dominant" speaker and generates a textual transcript from the audio recording.

Features

1. The server decides who the "dominant" speaker is, based on the average power measured at each microphone.
2. The server updates the camera direction to point at the "dominant" speaker.
3. After the meeting, the audio is sent to a speech-to-text engine for transcription.

- Whiteboard Mode: option for the video feed to focus on the whiteboard
- Off-the-record: option to mute recording of the audio feeds
- Speech-to-Text: creates a text transcription from the audio recording

Motivation

Meeting minutes document action items and provide a summary for absent individuals. Taking minutes is often a tedious and imprecise process.

Results

An automated system would be capable of providing full audio and video recordings, as well as transcriptions of the entire meeting.

Development Environment

Hardware
- iPhone 2.0
- Logitech USB Desktop Microphone
- Logitech QuickCam Orbit AF

Software
- C++/C#/Obj-C
- TCP
- Logitech PTZ API
- Nuance Dragon NaturallySpeaking

Commands

Commands are sent over a two-way TCP connection:
- Mute: either direction
- Whiteboard: either direction
- Start: server to client
- End: server to client

The server has four operating states: Normal, Muted, Whiteboard, and Muted with Whiteboard.

Risk Mitigation:
- To prevent camera oscillations, a client remains dominant as long as its volume stays above a threshold.
- In case a packet is missed, the operating state is driven by a time-triggered architecture.

The camera takes an average of 1.4 s to turn 180 degrees, which limits the responsiveness of the system. The system response time is approximately 1.5 s. This is the time the system takes to respond to a change in the dominant speaker.
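The dominant-speaker rule combines two ideas: average microphone power, and a threshold that keeps the current speaker from losing the camera. The following C++ code is a minimal sketch of that rule under stated assumptions and is not the project's code. It assumes that each client delivers audio as frames of normalized `double` samples. It also assumes that "average power" means the mean of the squared samples. The names `averagePower` and `selectDominant` are hypothetical. So is the choice to keep the current speaker when no client is above the threshold.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Average power of one audio frame: the mean of the squared samples
// (assumed definition; samples are assumed to be normalized doubles).
double averagePower(const std::vector<double>& frame) {
    if (frame.empty()) return 0.0;
    double sum = 0.0;
    for (double s : frame) sum += s * s;
    return sum / static_cast<double>(frame.size());
}

// Hypothetical helper: returns the index of the dominant client given each
// client's current average power. `current` is the previously dominant
// client, or -1 if there is none yet.
int selectDominant(const std::vector<double>& powers, int current, double threshold) {
    assert(threshold >= 0.0 && "threshold must be non-negative");

    // Hysteresis: the current speaker keeps the camera while its power stays
    // at or above the threshold, so the camera does not oscillate.
    if (current >= 0 && static_cast<std::size_t>(current) < powers.size() &&
        powers[static_cast<std::size_t>(current)] >= threshold) {
        return current;
    }

    // Otherwise switch to the loudest client that is above the threshold.
    int best = -1;
    for (std::size_t i = 0; i < powers.size(); ++i) {
        if (powers[i] >= threshold &&
            (best < 0 || powers[i] > powers[static_cast<std::size_t>(best)])) {
            best = static_cast<int>(i);
        }
    }

    // Assumption: if nobody is above the threshold (silence), keep the old speaker.
    return best >= 0 ? best : current;
}
```

With a threshold of 0.2, suppose client 0 is dominant at power 0.5 and client 1 is louder at 0.9. Client 0 still keeps the camera. The camera moves only once client 0's power falls below 0.2.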
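The four operating states are the combinations of two flags, muted and whiteboard, so a small state machine can capture them. The following C++ code is a hedged sketch and not the project's protocol. The names `Command`, `ServerState`, `apply`, and `syncTick` are all hypothetical. It is also an assumption that Mute and Whiteboard act as toggles and that End resets every flag. `syncTick` only illustrates the time-triggered idea: the server re-sends its full state each period, so a missed packet is corrected on the next tick.

```cpp
#include <cassert>
#include <string>

// Hypothetical command set mirroring the poster's command list.
enum class Command { Mute, Whiteboard, Start, End };

// The four operating states are the combinations of two independent flags.
struct ServerState {
    bool recording = false;   // set by Start, cleared by End (assumption)
    bool muted = false;       // Off-the-record mode
    bool whiteboard = false;  // camera fixed on the whiteboard

    std::string name() const {
        if (muted && whiteboard) return "Muted with Whiteboard";
        if (muted) return "Muted";
        if (whiteboard) return "Whiteboard";
        return "Normal";
    }
};

// Apply one command. Mute and Whiteboard are modeled as toggles (assumption).
void apply(ServerState& s, Command c) {
    switch (c) {
        case Command::Mute:       s.muted = !s.muted; break;
        case Command::Whiteboard: s.whiteboard = !s.whiteboard; break;
        case Command::Start:      s.recording = true; break;
        case Command::End:        s = ServerState{}; break;  // reset all flags
    }
}

// Time-triggered sync (sketch): on every tick the server sends its full state
// and the client overwrites its copy, so a dropped packet is repaired next tick.
void syncTick(const ServerState& server, ServerState& client) {
    client = server;
}
```

In this sketch, the server sends absolute state rather than incremental commands. That choice makes a lost packet harmless, because the next periodic update overwrites any stale copy on the client.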
Figure: Time to Turn 180 Degrees (y-axis: time in seconds; x-axis: trial number)

Future Work:
- Improve speech-to-text technology
- Incorporate face-detection algorithms to pan and tilt the camera when people move

Thanks to Priya Narasimhan and Karl Fu for all their support and guidance in this project.