Automated poisoning attacks and defenses in malware detection

- Slides: 21

Automated poisoning attacks and defenses in malware detection systems: An adversarial machine learning approach
- Source: Computers & Security, vol. 73, pp. 326-344, Mar. 2018
- Authors: Sen Chen, Minhui Xue, Lingling Fan, Shuang Hao, Lihu Xu, Haojin Zhu, and Bo Li
- Speaker: Hsinyu Lee
- Date: 2018.10.18

Outline
- Introduction
- Problem definition & challenges
- Proposed scheme
- Experimental results
- Conclusions

Introduction (1/2)
- Third-party platforms
- Malware detection

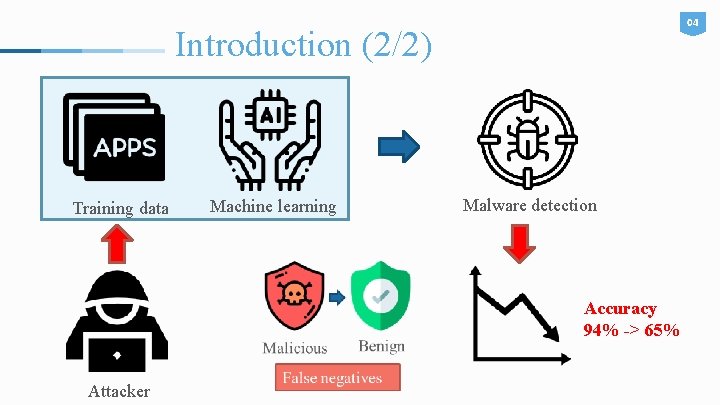

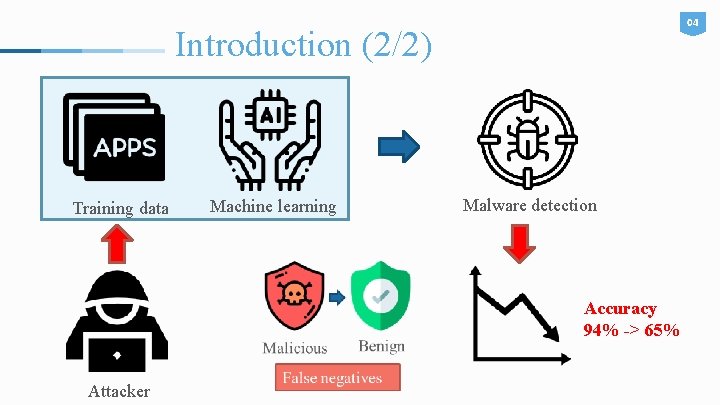

Introduction (2/2)
- Machine learning malware detection learns from training data.
- An attacker who poisons that training data can drop detection accuracy from 94% to 65%.

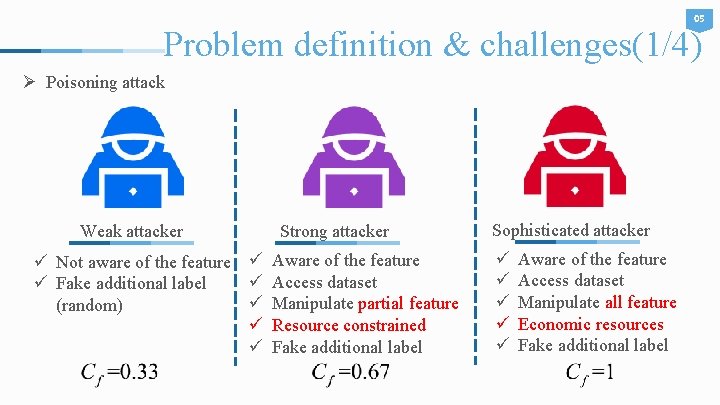

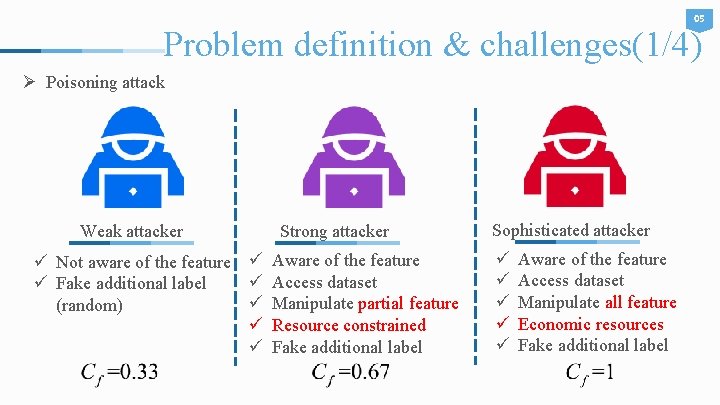

Problem definition & challenges (1/4)
- Poisoning attack: three attacker models
- Weak attacker: not aware of the features; can access the dataset; can fake additional labels
- Strong attacker: manipulates part of the features (at random); resource-constrained; can fake additional labels
- Sophisticated attacker: aware of the features; can access the dataset; can manipulate all features; has economic resources; can fake additional labels

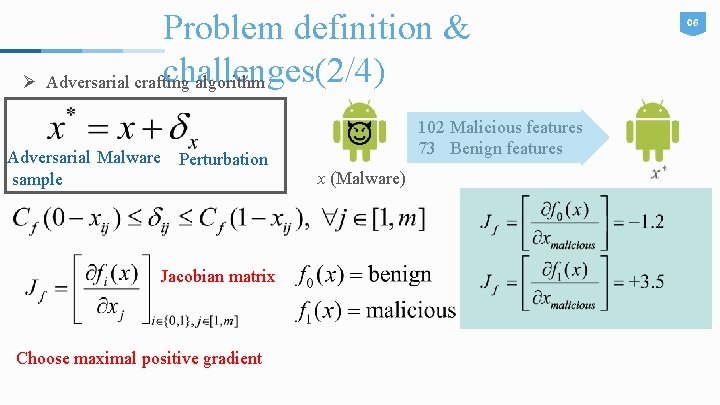

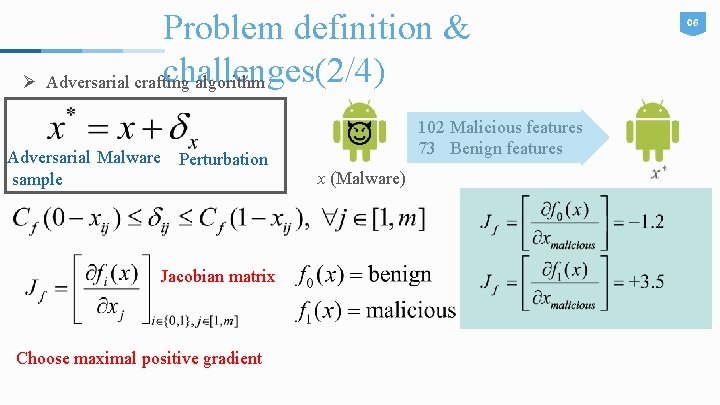

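Problem definition & challenges (2/4)
- Adversarial crafting algorithm
- Input: a malware sample x; feature space of 102 malicious features and 73 benign features
- Compute the Jacobian matrix of the classifier with respect to x and choose the feature with the maximal positive gradient
- Perturb that feature to obtain an adversarial malware sample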

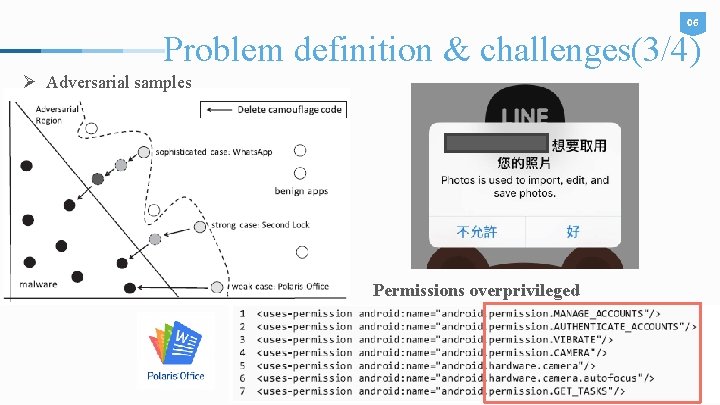

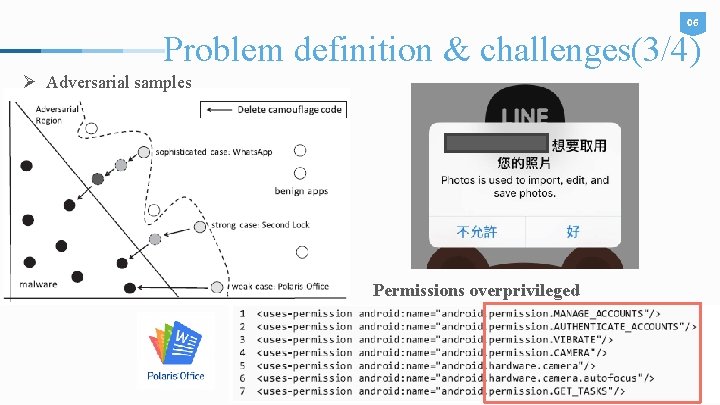
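The Jacobian-based crafting step above can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes a differentiable logistic-regression surrogate in place of the real detector, binary features, and an attacker who only adds features (0 to 1) so the malware keeps working. The names `malware_score`, `benign_gradient`, and `craft_adversarial`, the weights, and the 0.5 decision threshold are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


def malware_score(x, w, b):
    """Hypothetical surrogate model F(x): estimated probability that x is malware."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def benign_gradient(x, w, b):
    """Jacobian row of the benign output 1 - F(x) with respect to each feature.

    For a logistic model, d(1 - F)/dx_i = -F(x) * (1 - F(x)) * w_i.
    """
    f = malware_score(x, w, b)
    return [-f * (1.0 - f) * wi for wi in w]


def craft_adversarial(x, w, b, max_changes=10, threshold=0.5):
    """Greedily set absent features to 1, each time picking the one with the
    maximal positive gradient toward benign, until F(x) < threshold.

    Assumption: only 0 -> 1 changes are made, so features already present
    in the malware sample are kept.
    """
    x_adv = list(x)
    for _ in range(max_changes):
        if malware_score(x_adv, w, b) < threshold:
            break
        grad = benign_gradient(x_adv, w, b)
        candidates = [i for i, xi in enumerate(x_adv) if xi == 0 and grad[i] > 0]
        if not candidates:
            break  # no remaining feature pushes the sample toward benign
        x_adv[max(candidates, key=lambda i: grad[i])] = 1
    return x_adv


w, b = [2.0, 1.5, -1.0, -2.5], -0.5
x = [1, 1, 0, 0]
x_adv = craft_adversarial(x, w, b)
print(x_adv, round(malware_score(x_adv, w, b), 3))  # [1, 1, 1, 1] 0.378
```

The greedy loop recomputes the gradient after every change because, for a nonlinear model, the best feature to add can shift as the score moves toward the boundary.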

Problem definition & challenges (3/4)
- Adversarial samples: their permissions are overprivileged (more permissions requested than the app needs)

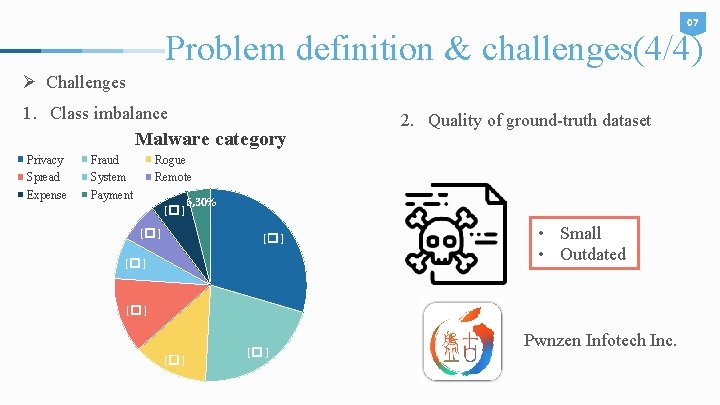

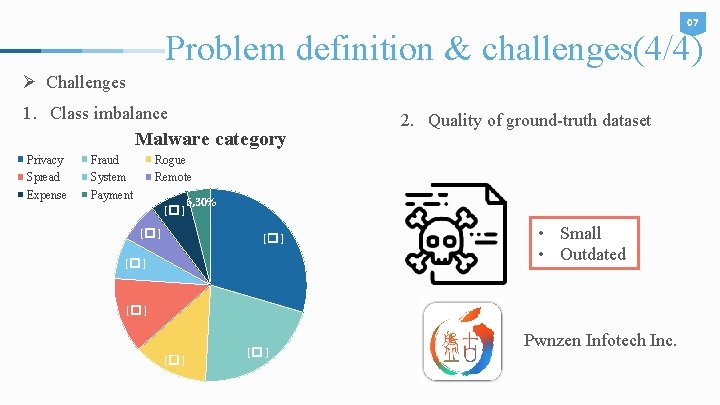

Problem definition & challenges (4/4)

Challenges:
1. Class imbalance across malware categories: Privacy, Spread, Expense, Fraud, System, Payment, Rogue, Remote (6, 30%)
2. Quality of the ground-truth dataset: small and outdated

Pwnzen Infotech Inc.

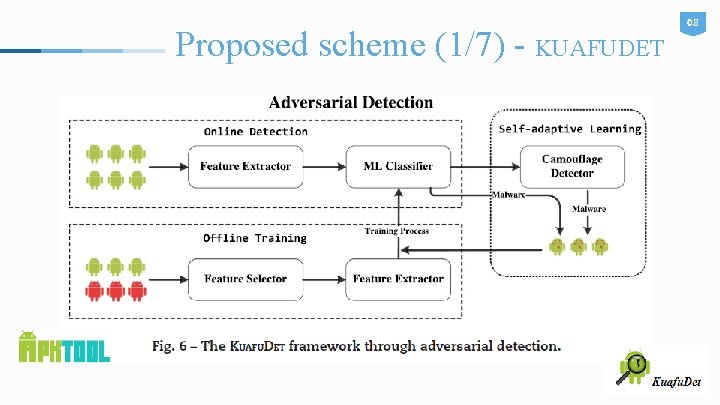

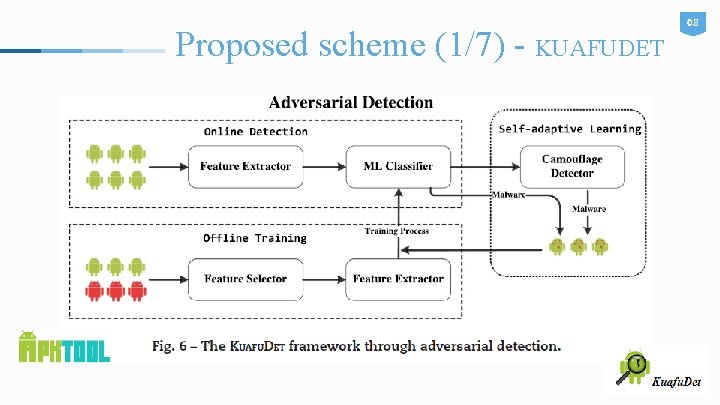

Proposed scheme (1/7) - KUAFUDET

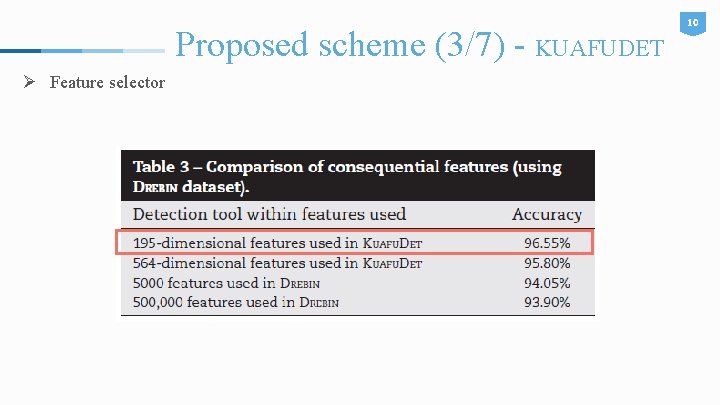

Proposed scheme (2/7) - KUAFUDET
- Feature selector: 195-dimension feature vector
- Syntax features
- Semantic features: sensitive behaviors such as "Send SMS", "Uninstall application", "Get location", "Get wifi info", and "Start httpconnection"

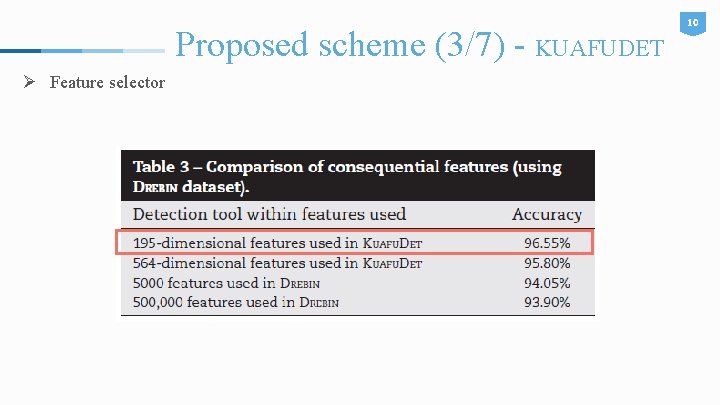
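To show how syntax and semantic features become one binary vector, here is a minimal sketch. The feature names below are hypothetical and illustrative only (a short subset, not the actual 195 features); the Android permission and API names are real identifiers used as examples of syntax features, and the behavior entries mirror the sensitive behaviors listed on the slide. `to_vector` is a hypothetical helper.

```python
# Hypothetical, illustrative feature list; the real selector uses 195 features.
FEATURES = [
    # syntax features (e.g., requested permissions, API calls)
    "permission:SEND_SMS",
    "permission:ACCESS_FINE_LOCATION",
    "api:SmsManager.sendTextMessage",
    # semantic features (sensitive behaviors named on the slide)
    "behavior:send_sms",
    "behavior:uninstall_application",
    "behavior:get_location",
    "behavior:get_wifi_info",
    "behavior:start_http_connection",
]


def to_vector(observed, features=FEATURES):
    """Map the set of features observed in an app to a 0/1 vector.

    Observed names that are not in the feature list are ignored.
    """
    return [1 if name in observed else 0 for name in features]


app = {"permission:SEND_SMS", "behavior:send_sms", "behavior:get_location"}
print(to_vector(app))  # [1, 0, 0, 1, 0, 1, 0, 0]
```

A fixed feature order matters: every classifier and similarity measure downstream compares vectors position by position.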

Proposed scheme (3/7) - KUAFUDET
- Feature selector

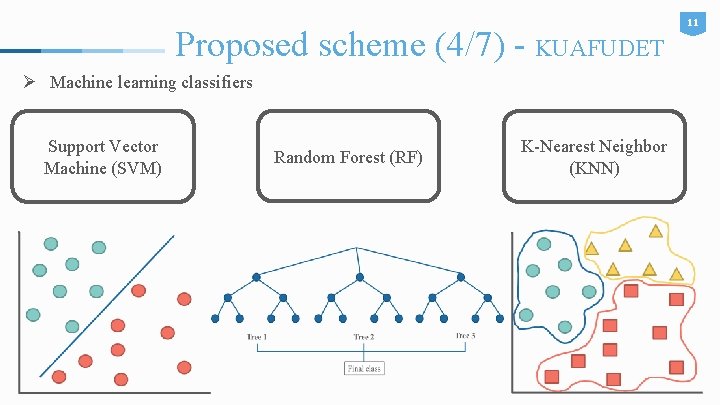

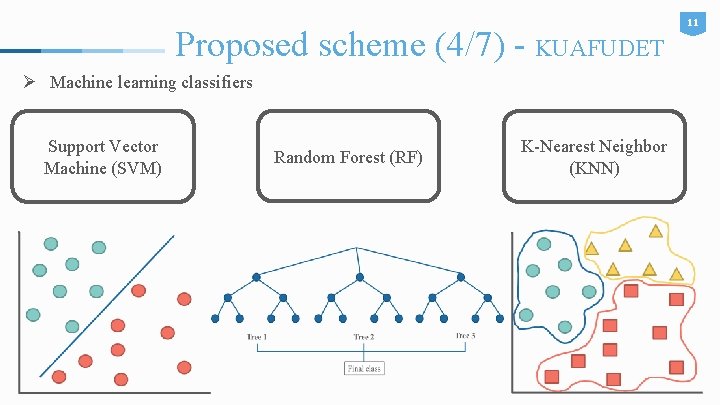

Proposed scheme (4/7) - KUAFUDET
- Machine learning classifiers:
  - Support Vector Machine (SVM)
  - Random Forest (RF)
  - K-Nearest Neighbor (KNN)

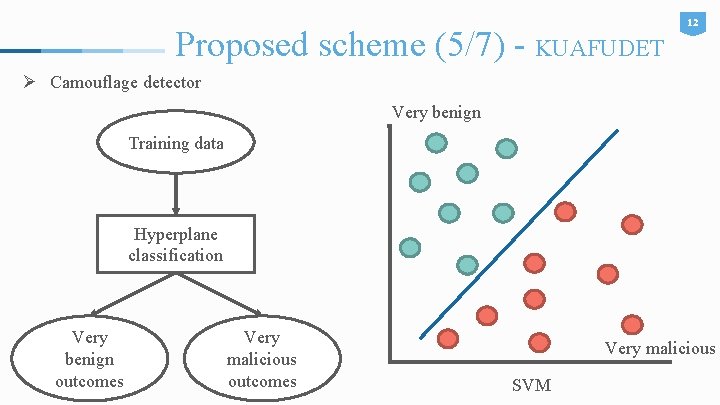

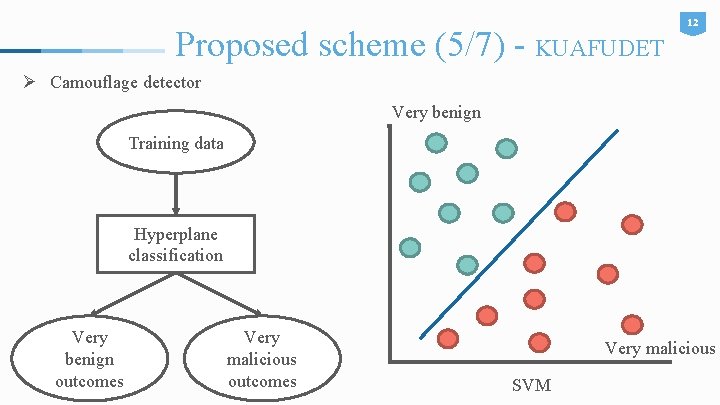
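As a concrete picture of the KNN entry, here is a minimal standard-library sketch over binary feature vectors. The slide does not give the distance measure or k, so Hamming distance and k = 3 are assumptions, and `knn_predict` is a hypothetical helper, not the paper's configuration.

```python
from collections import Counter


def hamming(a, b):
    """Number of positions where two equal-length 0/1 vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def knn_predict(train, query, k=3):
    """Majority label among the k training samples closest to `query`.

    `train` is a list of (feature_vector, label) pairs. With an odd k and
    two classes, the vote cannot tie.
    """
    neighbors = sorted(train, key=lambda item: hamming(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


train = [
    ([1, 1, 0, 0], "malicious"),
    ([1, 1, 1, 0], "malicious"),
    ([0, 0, 1, 1], "benign"),
    ([0, 0, 0, 1], "benign"),
    ([0, 1, 1, 1], "benign"),
]
print(knn_predict(train, [1, 1, 0, 1]))  # malicious
```

Because KNN votes directly from stored training samples, poisoned samples injected into the training set influence its predictions without any retraining step.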

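Proposed scheme (5/7) - KUAFUDET
- Camouflage detector
- An SVM trained on the training data classifies samples by their position relative to its hyperplane.
- Outcomes far on the benign side are very benign outcomes; outcomes far on the malicious side are very malicious.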

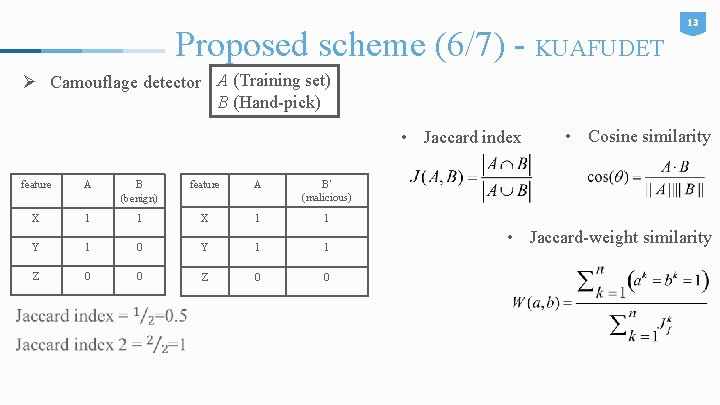

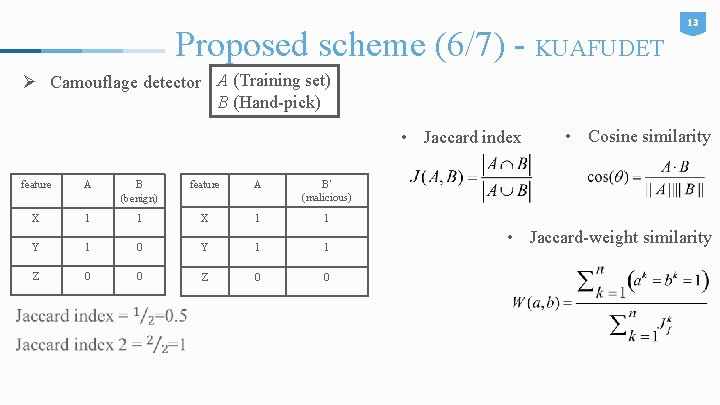
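The hyperplane-based split into "very benign" and "very malicious" outcomes can be sketched as below. Assumptions: a linear SVM with decision function w . x + b where positive values mean malicious, and a hypothetical `margin` cut-off on the signed distance; the slide does not state the actual rule or threshold, and `svm_outcome` is a hypothetical name.

```python
import math


def signed_distance(x, w, b):
    """Signed distance from x to the hyperplane w . x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm


def svm_outcome(x, w, b, margin=1.0):
    """Bucket a sample by its distance from the SVM hyperplane.

    Assumes positive distances mean malicious; `margin` is a hypothetical
    cut-off separating 'very' confident outcomes from ordinary ones.
    """
    d = signed_distance(x, w, b)
    if d >= margin:
        return "very malicious"
    if d <= -margin:
        return "very benign"
    return "malicious" if d > 0 else "benign"


w, b = [3.0, 4.0], -5.0
for x in ([4, 2], [1, 1], [1, 0], [0, 0]):
    print(x, svm_outcome(x, w, b))
```

Samples close to the hyperplane are the ones a poisoning attacker can most easily push across it, which is why only the far-from-boundary outcomes are treated as confident.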

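Proposed scheme (6/7) - KUAFUDET
- Camouflage detector: A (training set), B (hand-picked samples)
- Similarity measures: Jaccard index, cosine similarity, Jaccard-weight similarity

Jaccard index example:

| feature | A | B (benign) |
|---|---|---|
| X | 1 | 1 |
| Y | 1 | 0 |

| feature | A | B' (malicious) |
|---|---|---|
| Y | 1 | 1 |
| Z | 0 | 0 |

In the first table, A and B share only feature X out of {X, Y}, so J(A, B) = 1/2 = 0.5.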

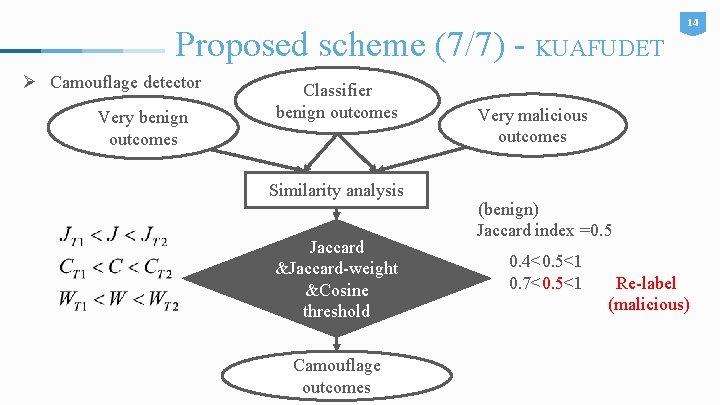

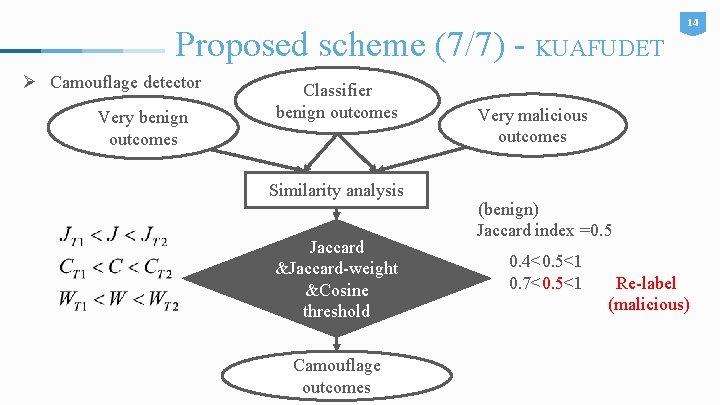
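The three similarity measures can be computed on binary feature vectors as sketched below. The Jaccard index and cosine similarity follow their standard definitions. The slide does not define "Jaccard-weight similarity", so `weighted_jaccard` assumes each feature contributes a hypothetical per-feature weight instead of 1. The usage reproduces the A/B example (features X, Y).

```python
import math


def jaccard(a, b):
    """|A ∩ B| / |A ∪ B| for two 0/1 feature vectors."""
    inter = sum(1 for x, y in zip(a, b) if x and y)
    union = sum(1 for x, y in zip(a, b) if x or y)
    return inter / union if union else 1.0  # two empty sets: treat as identical


def cosine(a, b):
    """Cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def weighted_jaccard(a, b, weights):
    """Jaccard index in which each feature contributes its weight
    (an assumed reading of 'Jaccard-weight similarity')."""
    inter = sum(wt for x, y, wt in zip(a, b, weights) if x and y)
    union = sum(wt for x, y, wt in zip(a, b, weights) if x or y)
    return inter / union if union else 1.0


A = [1, 1]  # features X, Y in sample A
B = [1, 0]  # features X, Y in hand-picked benign sample B
print(jaccard(A, B))                       # 0.5
print(round(cosine(A, B), 4))              # 0.7071
print(weighted_jaccard(A, B, [3.0, 1.0]))  # 0.75
```

Weighting lets a shared rare or highly suspicious feature count for more than a shared common one, which plain Jaccard cannot express.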

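Proposed scheme (7/7) - KUAFUDET
- Camouflage detector flow: very benign outcomes and classifier benign outcomes -> similarity analysis (Jaccard & Jaccard-weight & cosine, compared with a threshold, using very malicious outcomes as reference) -> camouflage outcomes -> re-label as malicious
- Example: a sample classified benign has Jaccard index 0.5 with a malicious reference. With threshold 0.4, 0.4 < 0.5 < 1, so it is flagged as camouflage and re-labelled malicious; with threshold 0.7 it is not flagged, because 0.5 < 0.7.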
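The threshold example can be reproduced with the minimal sketch below. For brevity it uses the Jaccard index alone, whereas the detector on the slide combines Jaccard, Jaccard-weight, and cosine similarity. It assumes that a benign-classified sample is flagged when its highest similarity to any malicious reference exceeds the threshold; `relabel_camouflage` is a hypothetical name.

```python
def jaccard(a, b):
    """|A ∩ B| / |A ∪ B| for two 0/1 feature vectors."""
    inter = sum(1 for x, y in zip(a, b) if x and y)
    union = sum(1 for x, y in zip(a, b) if x or y)
    return inter / union if union else 1.0


def relabel_camouflage(benign_outcomes, malicious_refs, threshold):
    """Re-label benign-classified samples that look too much like malware.

    A sample is treated as camouflage when its highest Jaccard index with any
    reference malicious sample exceeds `threshold`.
    """
    labels = []
    for sample in benign_outcomes:
        best = max((jaccard(sample, ref) for ref in malicious_refs), default=0.0)
        labels.append("malicious" if best > threshold else "benign")
    return labels


refs = [[1, 1]]     # a very malicious reference with features X and Y
suspect = [[1, 0]]  # classified benign; shares X only, so J = 0.5
print(relabel_camouflage(suspect, refs, 0.4))  # ['malicious']
print(relabel_camouflage(suspect, refs, 0.7))  # ['benign']
```

The threshold trades false negatives against false positives: lowering it catches more camouflaged malware but re-labels more genuinely benign apps.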

Experiment results (1/5)
- Dataset
- Misclassification of machine learning detection systems

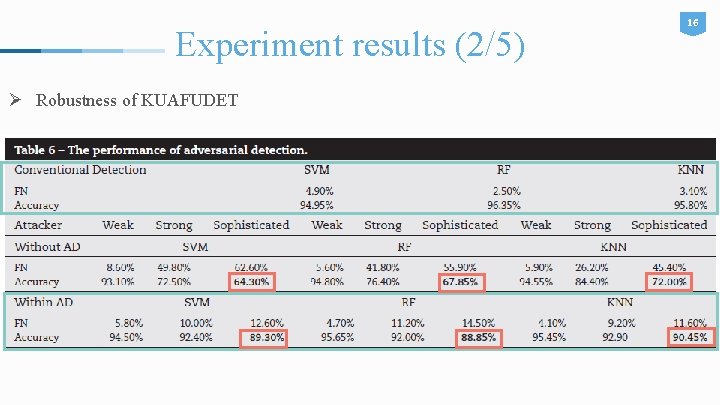

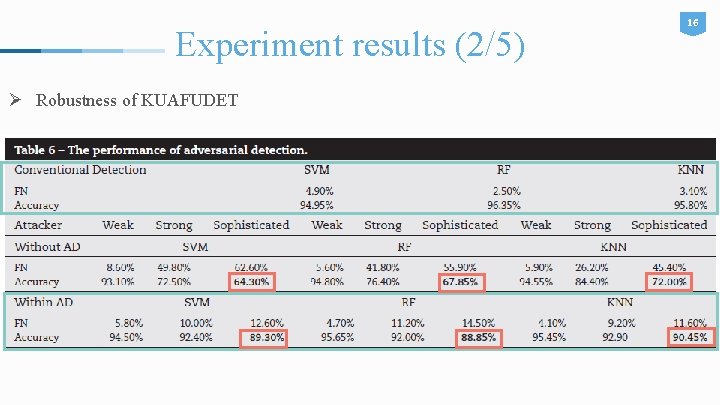

Experiment results (2/5)
- Robustness of KUAFUDET

Experiment results (3/5)

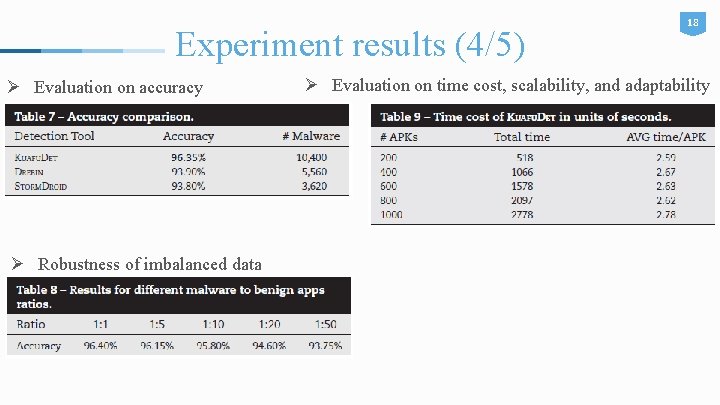

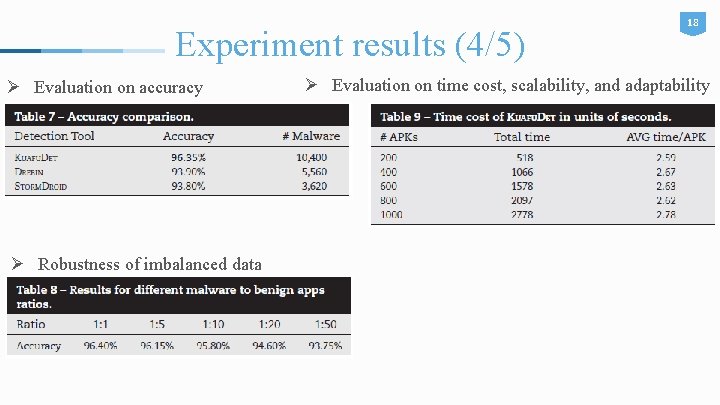

Experiment results (4/5)
- Evaluation on accuracy
- Robustness to imbalanced data
- Evaluation on time cost, scalability, and adaptability

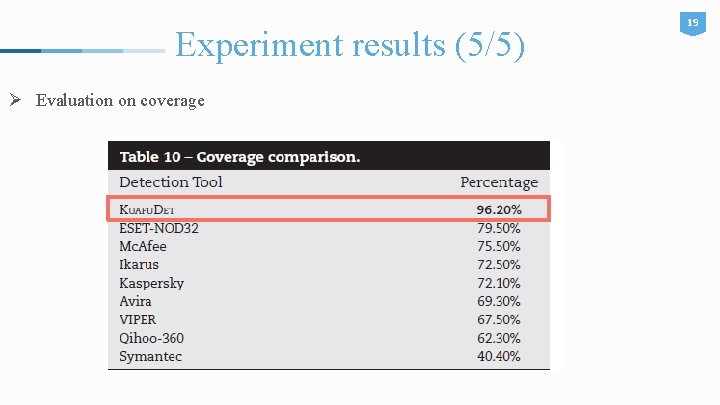

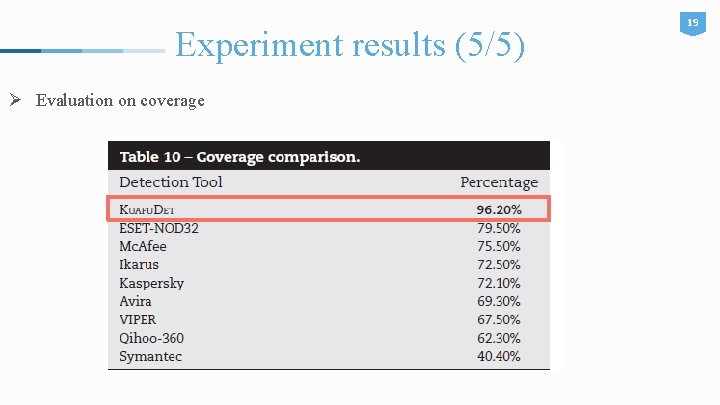

Experiment results (5/5)
- Evaluation on coverage

Conclusions
- Designed and evaluated automated poisoning attacks
- Reduced false negatives
- Improved detection accuracy