Auto-encoder (Hung-yi Lee): Self-supervised Learning Framework

- Slides: 38

AUTO-ENCODER Hung-yi Lee 李宏毅

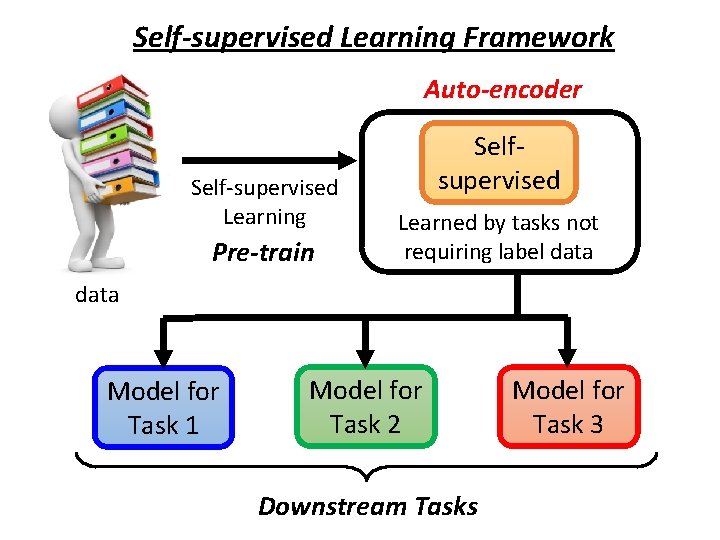

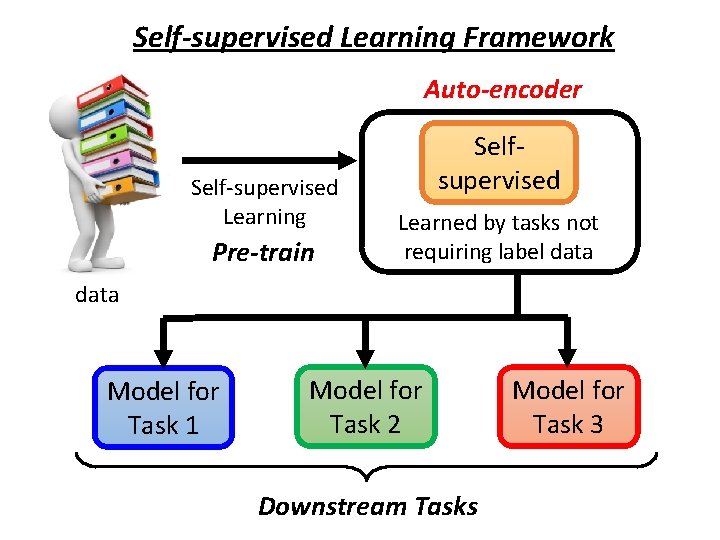

Self-supervised Learning Framework
- Self-supervised learning (pre-training): the model is learned by tasks that do not require labeled data. Auto-encoder belongs to this framework.
- Downstream tasks: the pre-trained model is turned into a model for Task 1, a model for Task 2, and a model for Task 3.

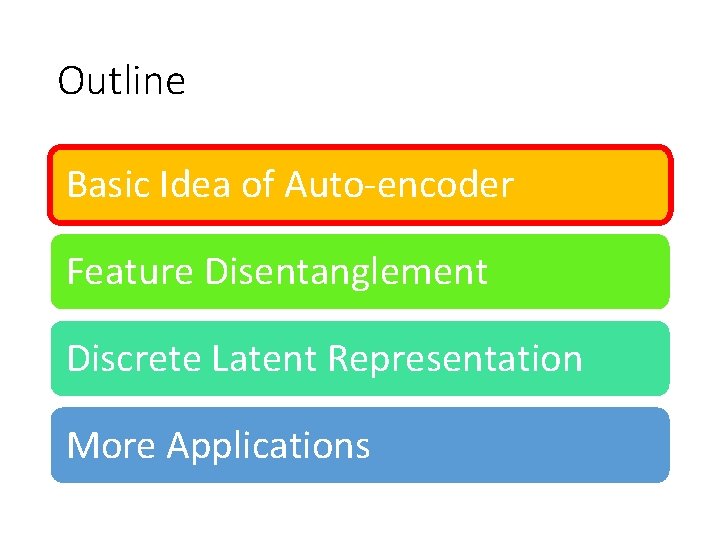

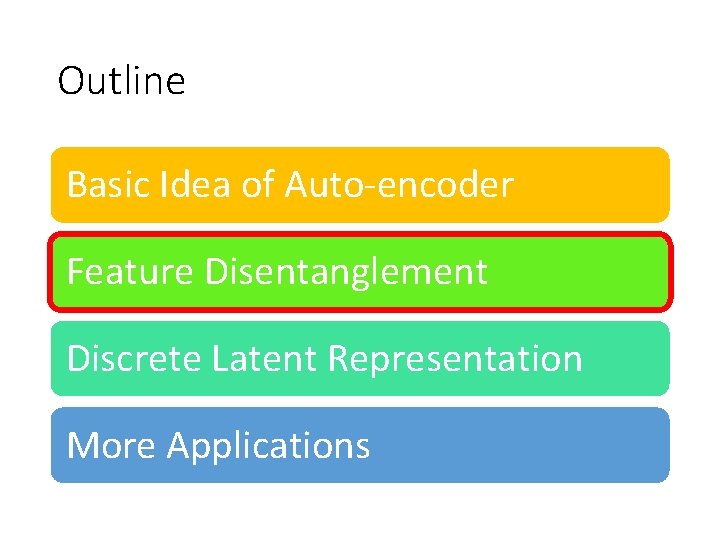

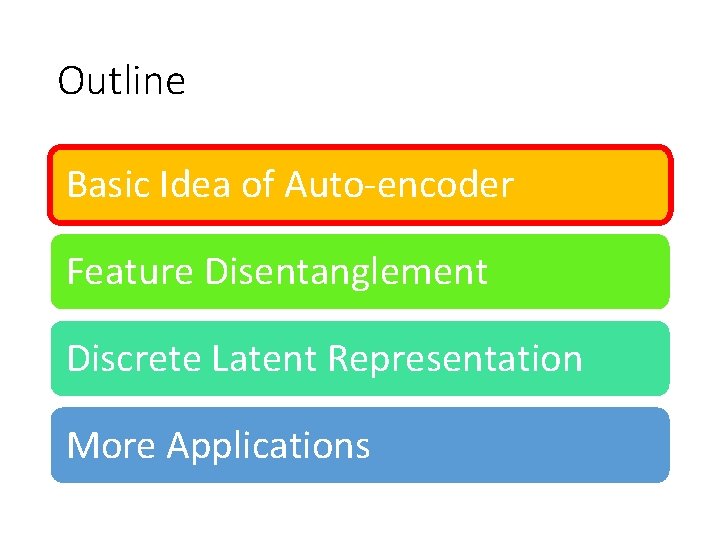

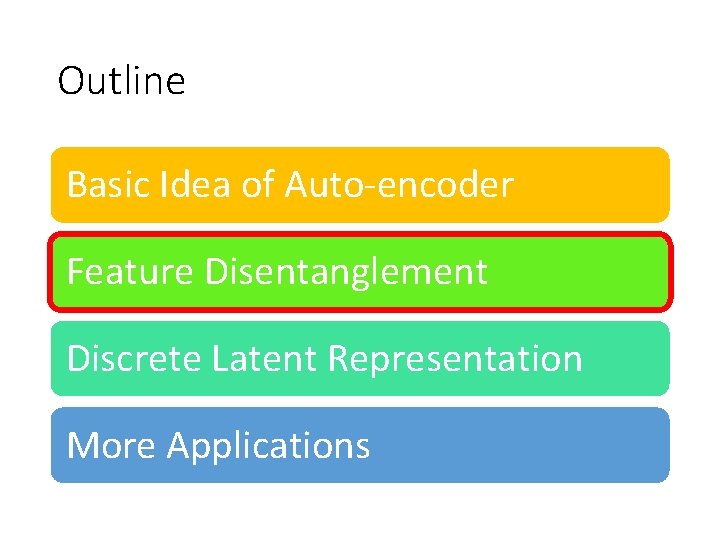

Outline
- Basic Idea of Auto-encoder
- Feature Disentanglement
- Discrete Latent Representation
- More Applications

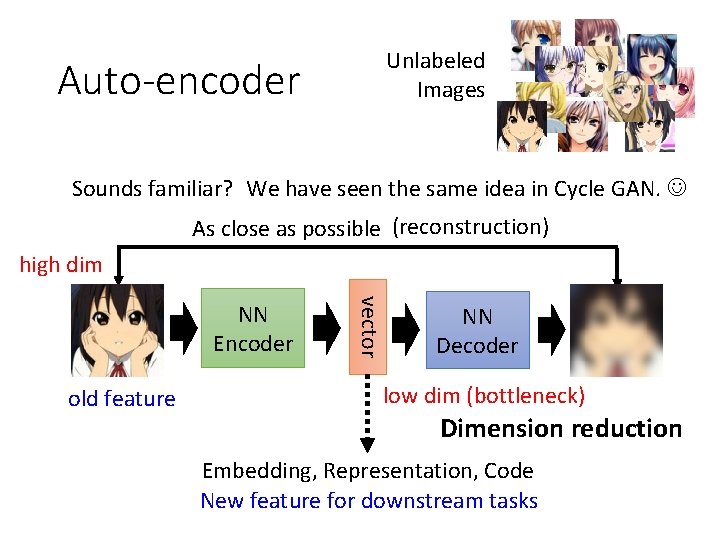

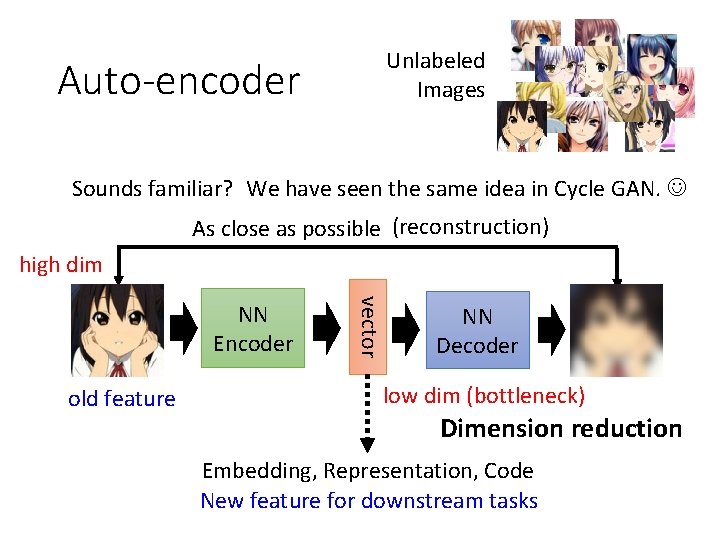

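Auto-encoder
- Training data: unlabeled images.
- NN Encoder: maps the high-dimensional input (the old feature) to a low-dimensional vector (the bottleneck). This is dimension reduction.
- NN Decoder: maps the vector back to an image that should be as close as possible to the input (reconstruction).
- The low-dimensional vector is called the embedding, representation, or code. It is the new feature for downstream tasks.
- Sounds familiar? We have seen the same idea in Cycle GAN.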
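The encoder, bottleneck, and reconstruction objective above can be sketched on a toy problem. This is a minimal illustration, not the model used in the lecture: the 2-D data, the linear encoder and decoder, the initial weights, and the learning rate are all assumptions chosen so the example runs with only the Python standard library.

```python
# Toy auto-encoder: 2-D input -> 1-D code (bottleneck) -> 2-D reconstruction.
# Data, model size, and hyperparameters are illustrative assumptions.

# Unlabeled data: 2-D points that all lie on the line x2 = 2 * x1.
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]

def encode(x, enc):
    """Linear encoder: 2-D input -> 1-D code."""
    return enc[0] * x[0] + enc[1] * x[1]

def decode(z, dec):
    """Linear decoder: 1-D code -> 2-D reconstruction."""
    return (dec[0] * z, dec[1] * z)

def reconstruction_loss(enc, dec):
    """Mean squared distance between each input and its reconstruction."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x, enc), dec)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)

def train(steps=500, lr=0.05):
    """Plain gradient descent on the reconstruction loss."""
    enc, dec = [0.1, 0.1], [0.1, 0.1]
    n = len(data)
    for _ in range(steps):
        g_enc, g_dec = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = encode(x, enc)
            x_hat = decode(z, dec)
            r = (x_hat[0] - x[0], x_hat[1] - x[1])        # reconstruction error
            g_z = 2.0 * (r[0] * dec[0] + r[1] * dec[1])   # d(loss)/d(code)
            for i in range(2):
                g_dec[i] += 2.0 * r[i] * z / n
                g_enc[i] += g_z * x[i] / n
        for i in range(2):
            enc[i] -= lr * g_enc[i]
            dec[i] -= lr * g_dec[i]
    return enc, dec

initial_loss = reconstruction_loss([0.1, 0.1], [0.1, 0.1])
enc, dec = train()
final_loss = reconstruction_loss(enc, dec)
code = encode((1.0, 2.0), enc)   # the 1-D "new feature" for the point (1, 2)
```

Because the toy data really is one-dimensional, a 1-D code is enough to reconstruct it; no labels are used anywhere in training.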

More Dimension Reduction (not based on deep learning)
- PCA: https://youtu.be/iwh5o_M4BNU
- t-SNE: https://youtu.be/GBUEjkpoxXc

Why Auto-encoder? 《神鵰俠侶》 (The Return of the Condor Heroes)

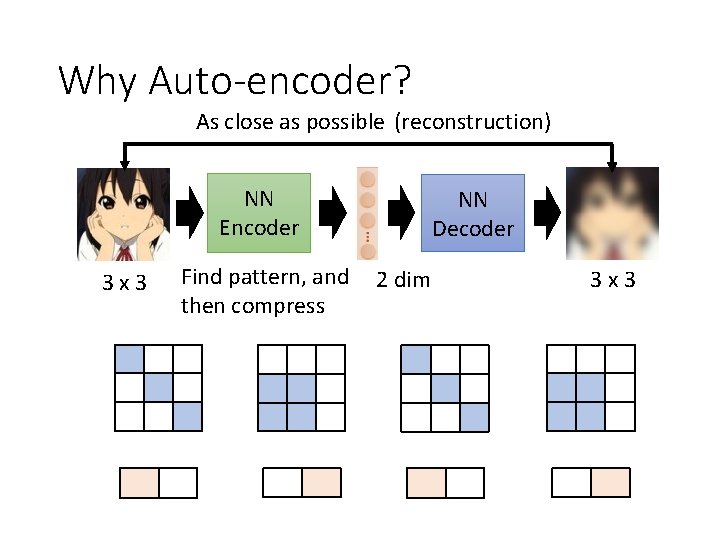

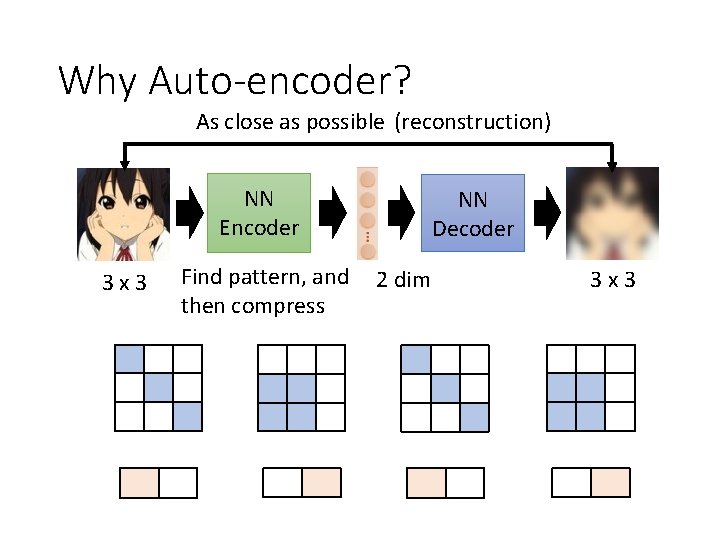

Why Auto-encoder?
- NN Encoder: takes a 3 x 3 image, finds the pattern, and then compresses it to 2 dimensions.
- NN Decoder: maps the 2-dim code back to a 3 x 3 image, as close as possible to the input (reconstruction).

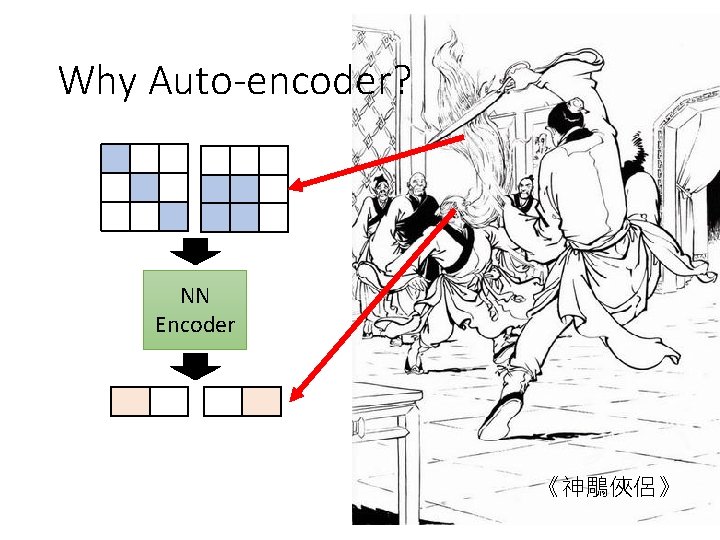

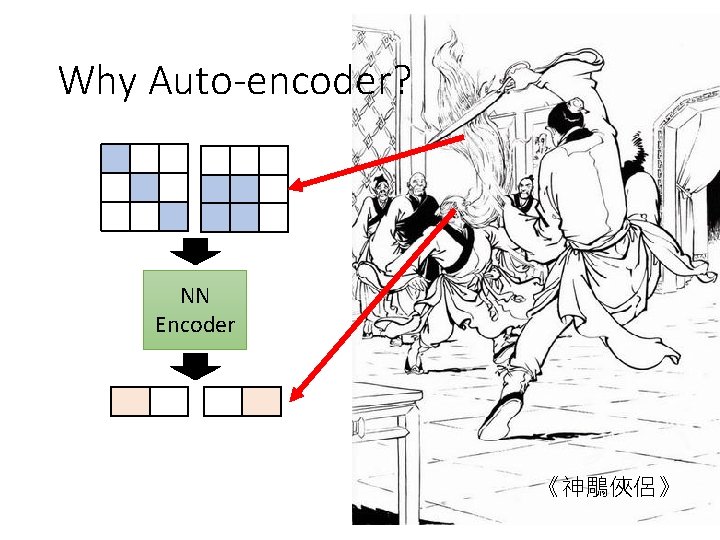

Why Auto-encoder? NN Encoder. 《神鵰俠侶》 (The Return of the Condor Heroes)

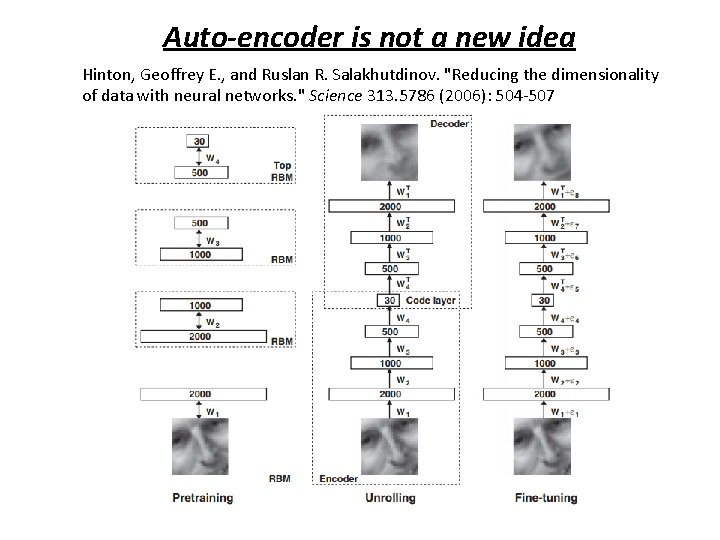

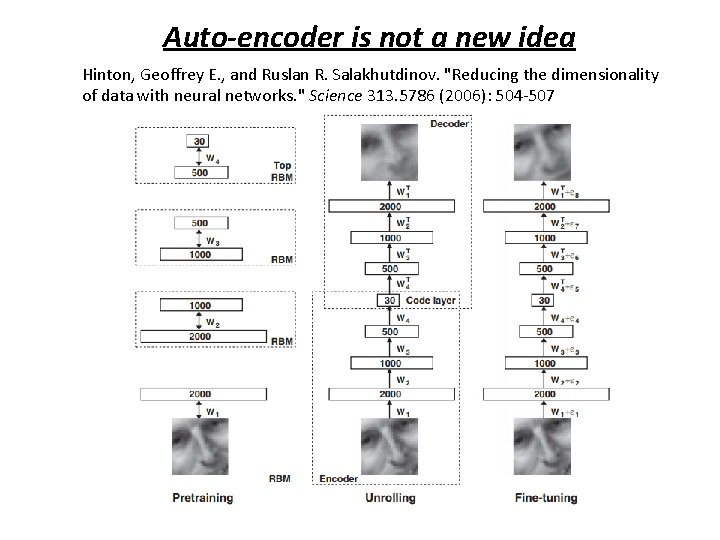

Auto-encoder is not a new idea
- Hinton, Geoffrey E., and Ruslan R. Salakhutdinov. "Reducing the dimensionality of data with neural networks." Science 313.5786 (2006): 504-507.

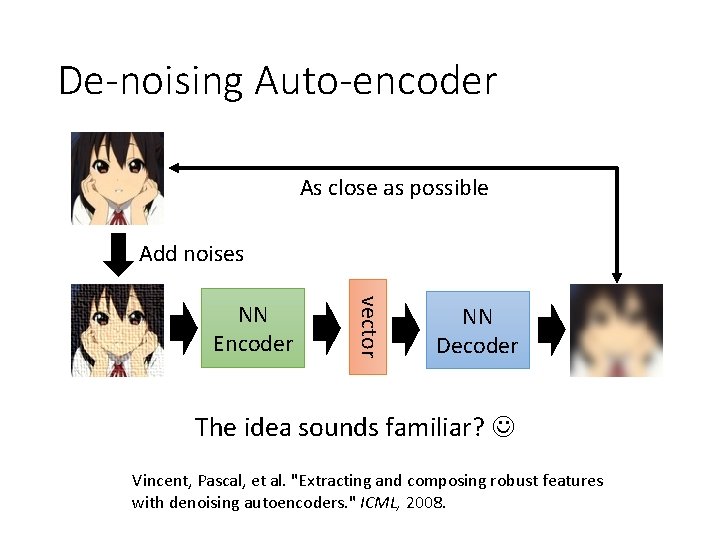

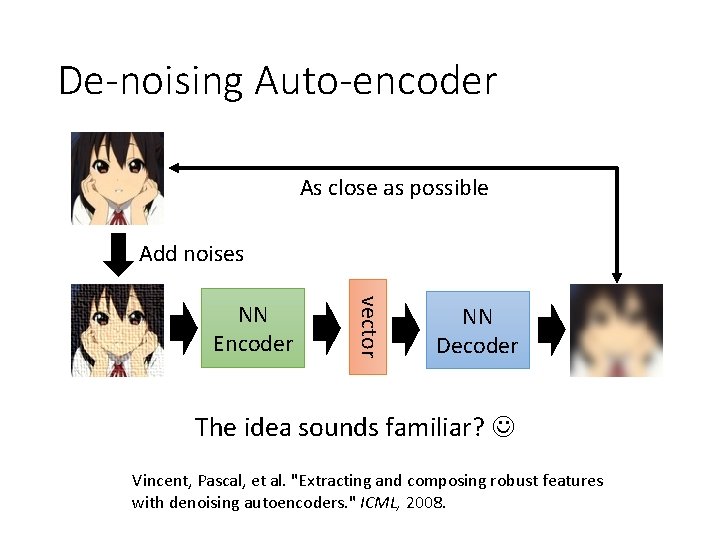

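De-noising Auto-encoder
- Add noise to the input, then pass it through the NN Encoder to get a vector and through the NN Decoder to get the output.
- The output should be as close as possible to the input before the noise was added.
- The idea sounds familiar?
- Vincent, Pascal, et al. "Extracting and composing robust features with denoising autoencoders." ICML, 2008.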
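The de-noising objective differs from plain reconstruction only in what is fed in and what is compared. A minimal sketch follows, assuming Gaussian noise and two hypothetical stand-in "auto-encoders" (`identity` and `project_to_line`) in place of trained networks.

```python
import random

def add_noise(x, sigma, rng):
    """Corrupt the input with Gaussian noise (one possible choice of noise)."""
    return [v + rng.gauss(0.0, sigma) for v in x]

def denoising_loss(x_clean, autoencoder, sigma=0.3, seed=0):
    """Squared error between the reconstruction of the NOISY input
    and the ORIGINAL clean input."""
    rng = random.Random(seed)
    x_noisy = add_noise(x_clean, sigma, rng)
    x_hat = autoencoder(x_noisy)
    return sum((h - c) ** 2 for h, c in zip(x_hat, x_clean))

# Hypothetical stand-ins for a trained encoder + decoder.
def identity(x):
    """Copies its input, so the noise passes straight through."""
    return list(x)

def project_to_line(x):
    """Keeps only the component of x along the direction (1, 2)."""
    s = (x[0] + 2.0 * x[1]) / 5.0
    return [s, 2.0 * s]

x = [1.0, 2.0]   # a clean point on the line x2 = 2 * x1
loss_identity = denoising_loss(x, identity)
loss_projection = denoising_loss(x, project_to_line)
```

With the same noise sample, the model that has captured the structure of the clean data (here, the line) removes the off-line part of the noise and gets a lower loss than simply copying the input.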

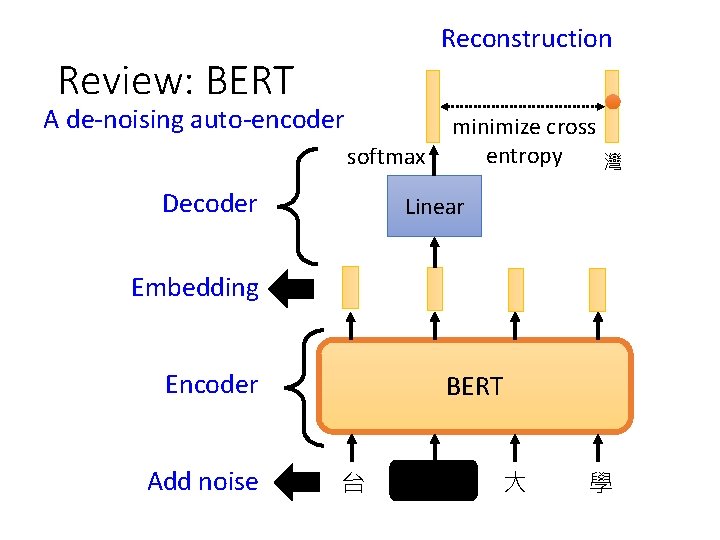

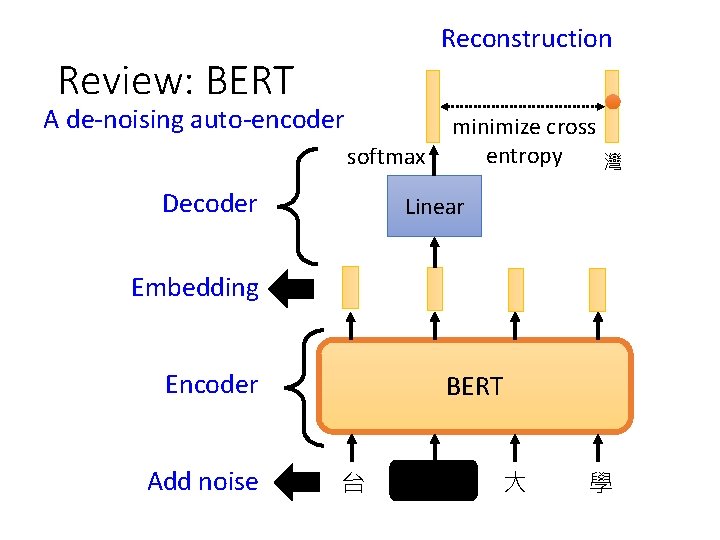

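Review: BERT, a de-noising auto-encoder
- Input: the characters 台 灣 大 學 ("Taiwan University").
- Add noise to the input (in BERT, by masking a token).
- Encoder: BERT, which outputs an embedding.
- Decoder: a Linear layer followed by softmax.
- Reconstruction: minimize the cross entropy between the decoder output and the original token (灣).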

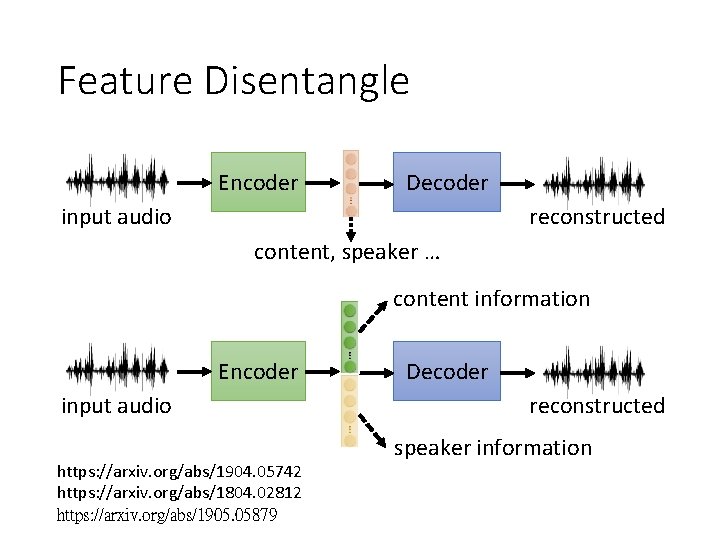

Representation includes information of different aspects
- Input image → Encoder → representation (object, texture, …) → Decoder → reconstructed image
- Input audio → Encoder → representation (content, speaker, …) → Decoder → reconstructed audio
- Input sentence → Encoder → representation (syntax, semantics, …) → Decoder → reconstructed sentence

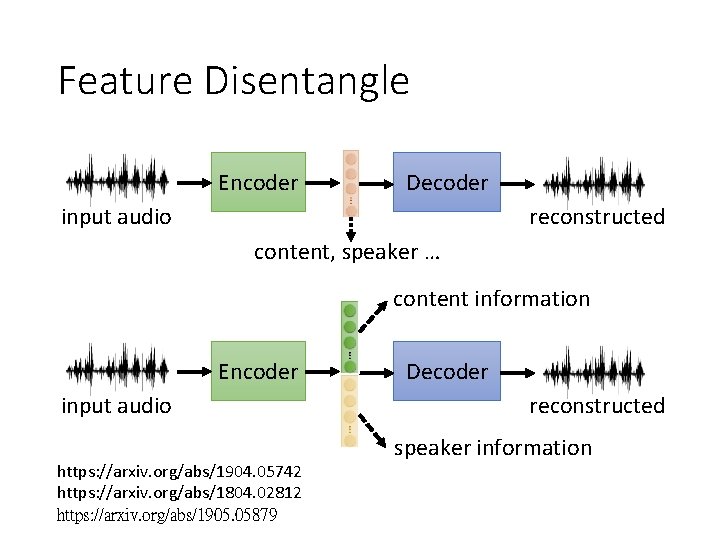

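Feature Disentanglement
- Before: input audio → Encoder → one representation that mixes content, speaker, … → Decoder → reconstructed audio.
- After: input audio → Encoder → a representation in which one part holds the content information and the other part holds the speaker information → Decoder → reconstructed audio.
- References: https://arxiv.org/abs/1904.05742, https://arxiv.org/abs/1804.02812, https://arxiv.org/abs/1905.05879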

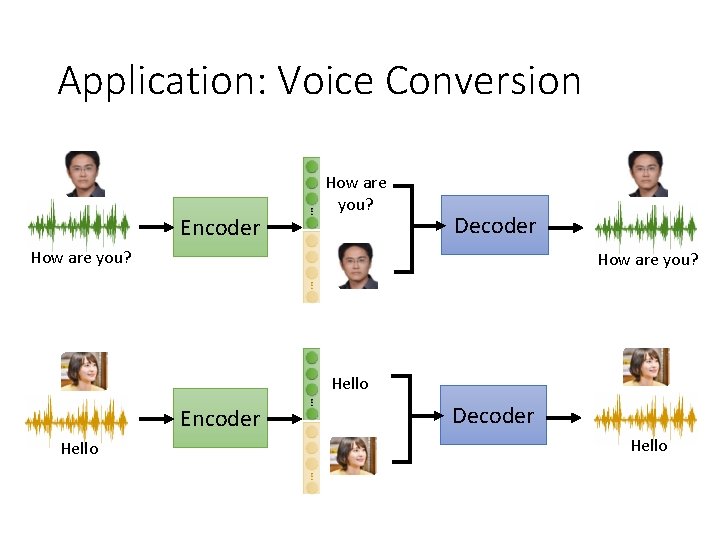

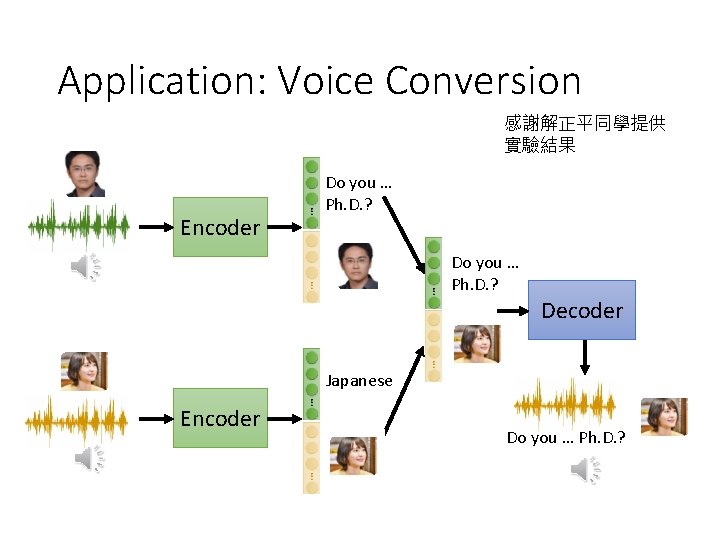

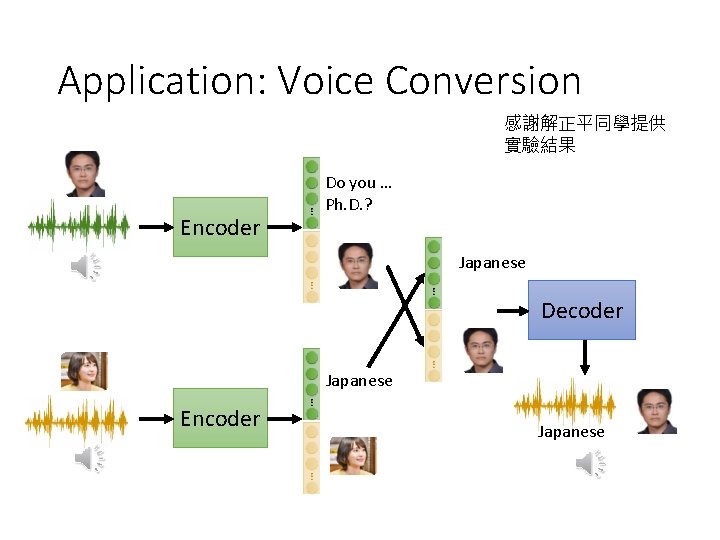

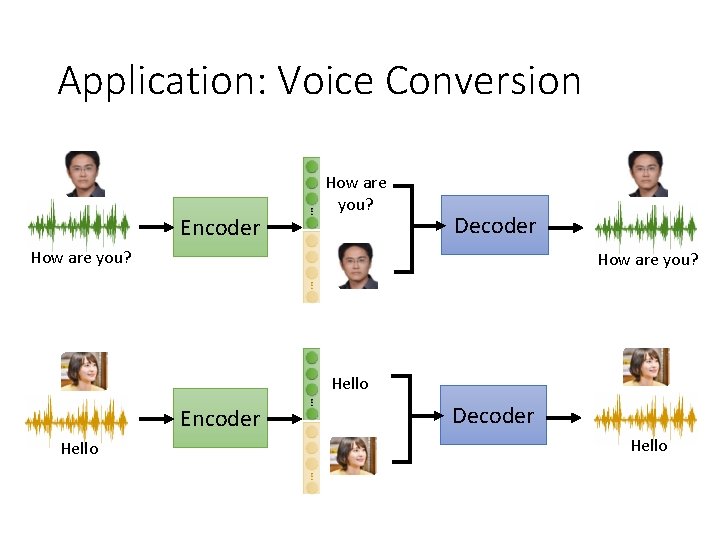

Application: Voice Conversion

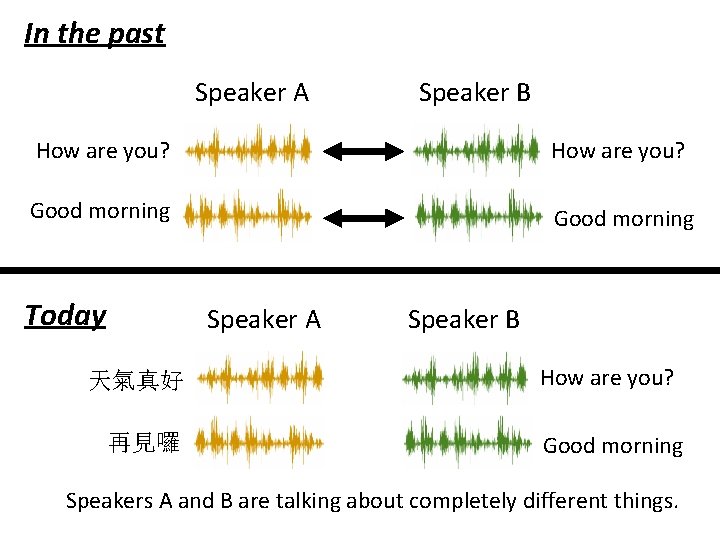

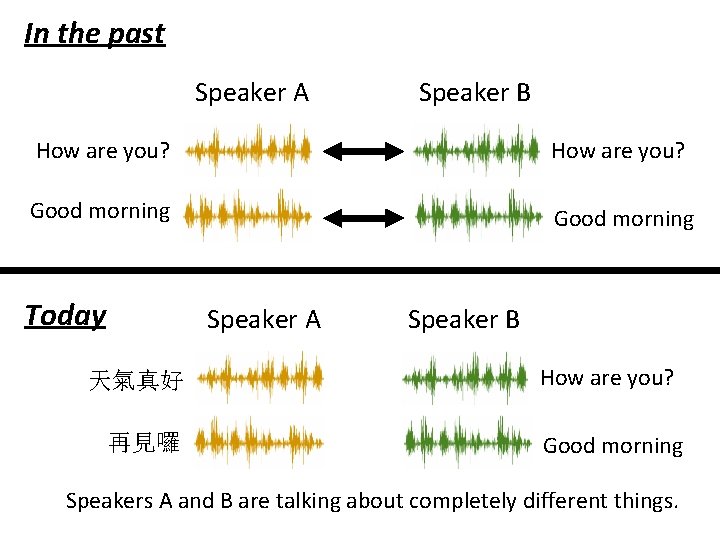

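- In the past: Speaker A and Speaker B say the same sentences ("How are you?", "Good morning").
- Today: Speaker A says "The weather is really nice" and "Goodbye" in Mandarin (天氣真好, 再見囉), while Speaker B says "How are you?" and "Good morning". Speakers A and B are talking about completely different things.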

Application: Voice Conversion
- "How are you?" → Encoder → Decoder → "How are you?"
- "Hello" → Encoder → Decoder → "Hello"

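Application: Voice Conversion
- Encoder on "How are you?": keep the content part of the representation.
- Encoder on "Hello": keep the speaker part of the representation.
- Decoder: takes the two parts together and outputs "How are you?" in the voice of the speaker who said "Hello".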
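The swap used for voice conversion can be sketched as list slicing, assuming the encoder has already been trained so that the first dimensions of its code carry content and the rest carry speaker identity. The split point `CONTENT_DIMS`, the example codes, and the pass-through `toy_decoder` are hypothetical placeholders, not values from the lecture.

```python
CONTENT_DIMS = 2   # assumption: the first 2 code dimensions hold content

def split_code(code):
    """Split a disentangled code into (content part, speaker part)."""
    return code[:CONTENT_DIMS], code[CONTENT_DIMS:]

def convert(code_source, code_target_speaker, decoder):
    """Decode the content of one utterance with the speaker of another."""
    content, _ = split_code(code_source)
    _, speaker = split_code(code_target_speaker)
    return decoder(content + speaker)

def toy_decoder(code):
    """Stand-in for a trained NN Decoder; it just returns the code it gets."""
    return list(code)

# Hypothetical encoder outputs for two utterances.
code_how_are_you = [0.2, 0.9, 1.0, 0.0]   # content "How are you?", speaker A
code_hello = [0.7, 0.1, 0.0, 1.0]         # content "Hello", speaker B

converted = convert(code_how_are_you, code_hello, toy_decoder)
# converted holds the content of "How are you?" and the speaker part of "Hello"
```

No paired recordings of the two speakers saying the same sentence are needed for this step; only the two separately encoded utterances are used.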

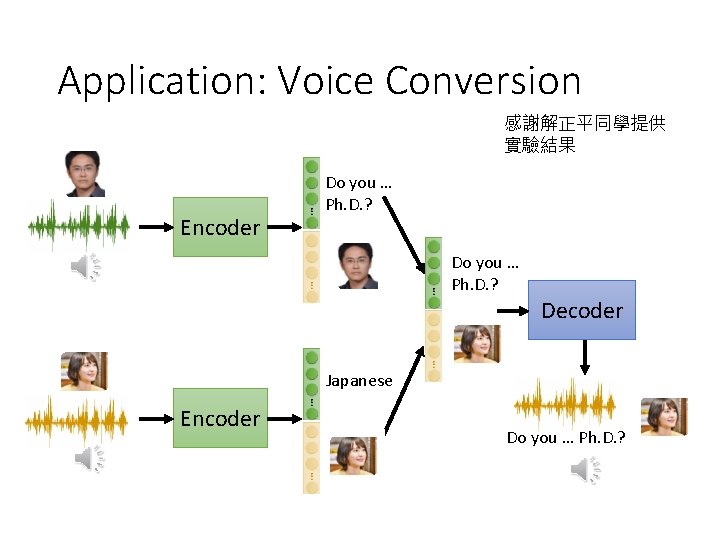

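Application: Voice Conversion (thanks to 解正平 for providing the experimental results)
- Encoder input: the English utterance "Do you … Ph.D.?"
- Encoder input: a Japanese utterance
- Decoder output: "Do you … Ph.D.?"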

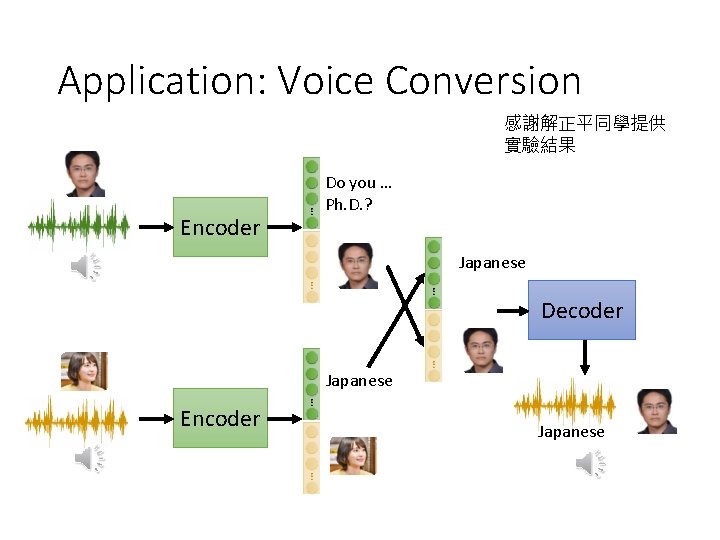

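Application: Voice Conversion (thanks to 解正平 for providing the experimental results)
- Encoder input: the English utterance "Do you … Ph.D.?"
- Encoder input: a Japanese utterance
- Decoder output: the Japanese utterance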

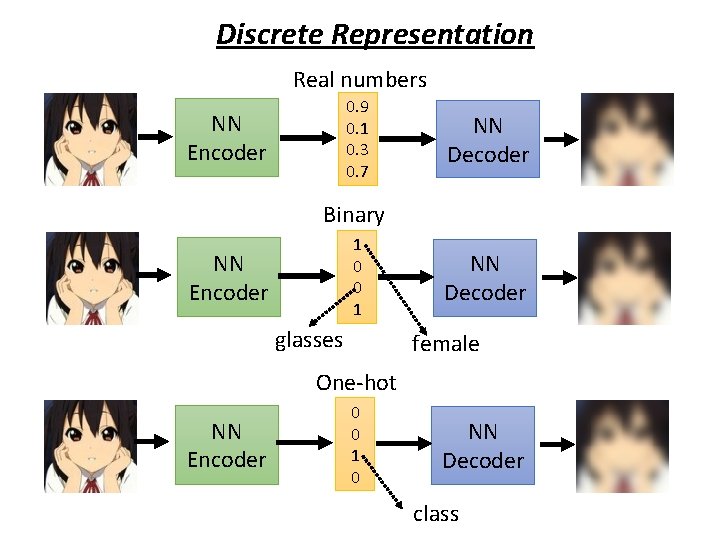

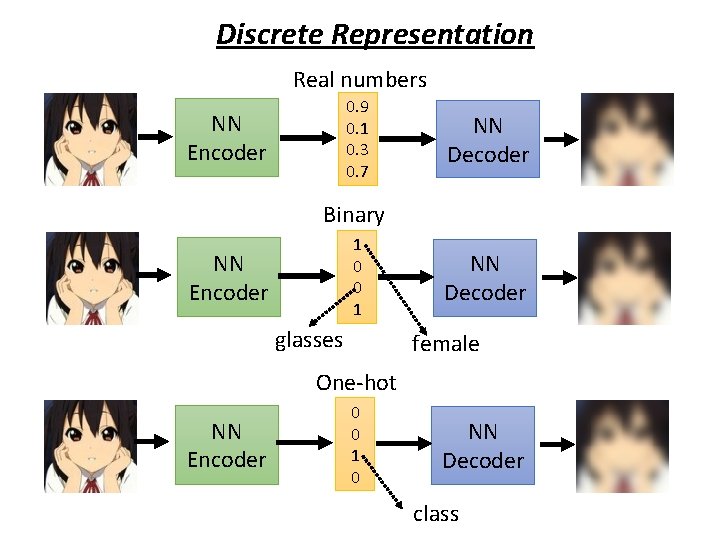

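Discrete Representation
- Real numbers: NN Encoder → (0.9, 0.1, 0.3, 0.7) → NN Decoder
- Binary: NN Encoder → (1, 0, 0, 1) → NN Decoder. Each dimension can stand for an attribute, e.g. glasses or female.
- One-hot: NN Encoder → (0, 0, 1, 0) → NN Decoder. The code stands for a class.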
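The three kinds of code can be compared with a small sketch. Thresholding at 0.5 and taking the arg-max are assumptions made here for illustration; the slide does not say how the discrete codes are produced, and neither operation is differentiable, so real training needs additional techniques.

```python
def to_binary(code, threshold=0.5):
    """Binary code: each dimension is 1 if above the threshold, else 0."""
    return [1 if v > threshold else 0 for v in code]

def to_one_hot(code):
    """One-hot code: 1 at the largest dimension, 0 elsewhere."""
    best = max(range(len(code)), key=lambda i: code[i])
    return [1 if i == best else 0 for i in range(len(code))]

real_code = [0.9, 0.1, 0.3, 0.7]                  # real-valued encoder output
binary_code = to_binary(real_code)                # [1, 0, 0, 1]
one_hot_code = to_one_hot([0.1, 0.2, 0.6, 0.1])   # [0, 0, 1, 0]
```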

Discrete Representation
- Vector Quantized Variational Auto-encoder (VQVAE): https://arxiv.org/abs/1711.00937
- Codebook: a set of vectors (vector 1 to vector 5 in the figure), learned from data.
- The NN Encoder outputs a vector; compute its similarity with every vector in the codebook (c.f. attention).
- The most similar one (vector 3 in the figure) is the input of the NN Decoder.
- For speech, the codebook represents phonetic information: https://arxiv.org/pdf/1901.08810.pdf
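The codebook lookup can be sketched as a nearest-neighbor search. This sketch assumes that "most similar" means smallest squared Euclidean distance, and the five 2-D codebook vectors are made-up values; in a real VQVAE the codebook is learned together with the encoder and decoder.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def quantize(encoder_output, codebook):
    """Return (index, vector) of the codebook entry closest to the encoder output."""
    index = min(
        range(len(codebook)),
        key=lambda k: squared_distance(encoder_output, codebook[k]),
    )
    return index, codebook[index]

# Hypothetical codebook: vector 1 to vector 5 (list indices 0 to 4).
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0]]

index, decoder_input = quantize([0.1, 0.8], codebook)
# index == 2, i.e. "vector 3"; decoder_input == [0.0, 1.0]
```

The decoder never sees the raw encoder output, only one of the codebook vectors, so every input is represented by one of a finite set of codes.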

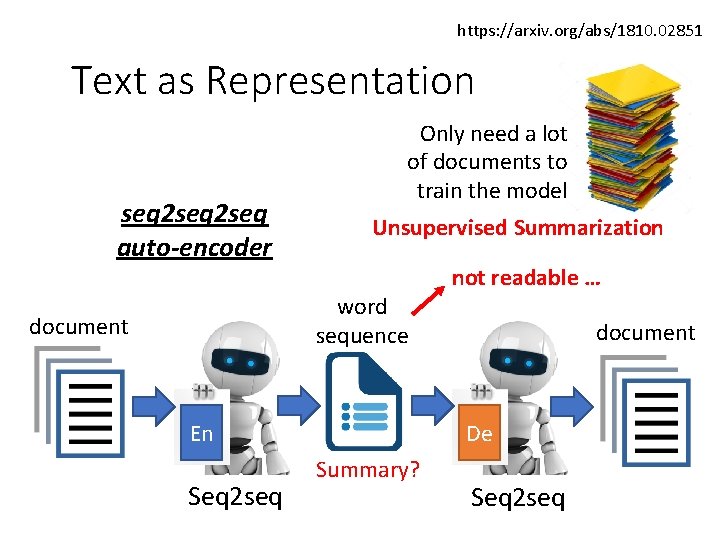

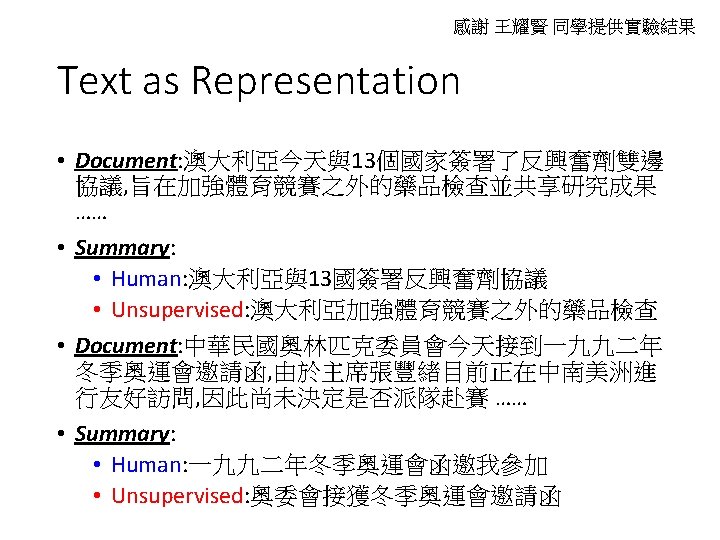

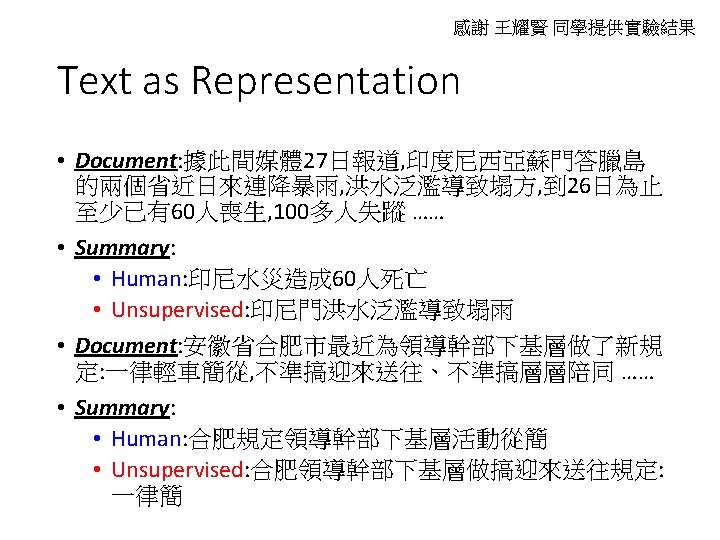

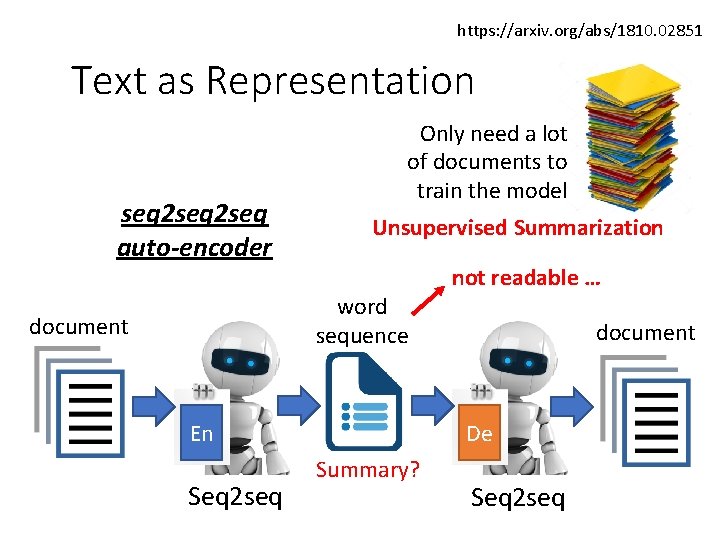

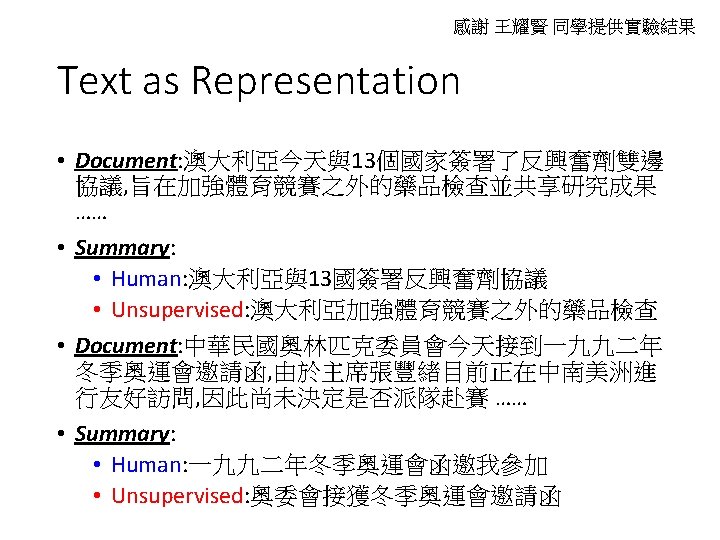

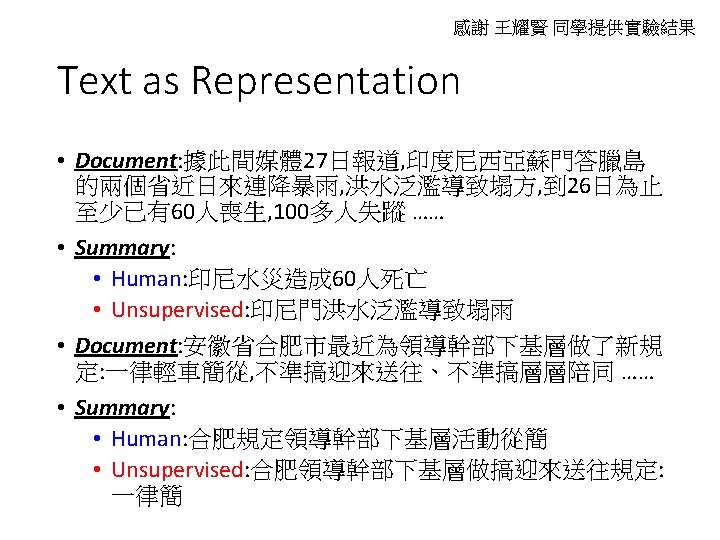

Text as Representation (https://arxiv.org/abs/1810.02851)
- seq2seq auto-encoder: document → Encoder (seq2seq) → word sequence → Decoder (seq2seq) → document
- Only a lot of documents are needed to train the model.
- Unsupervised summarization: is the word sequence a summary? Trained this way, the word sequence is not readable.

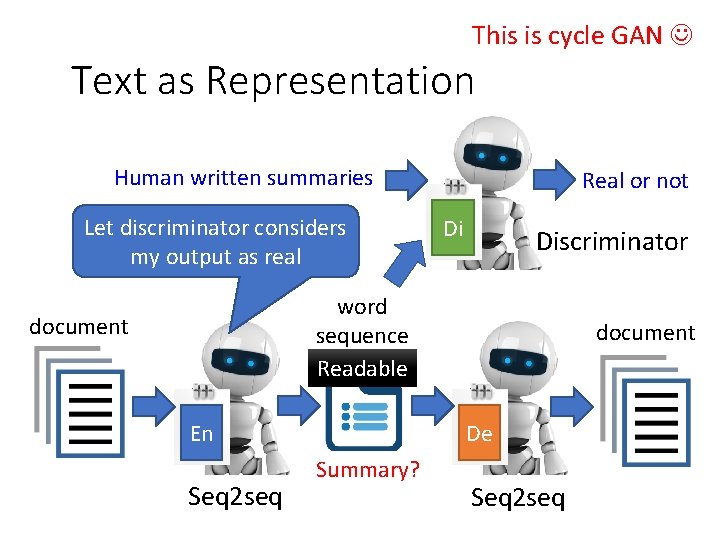

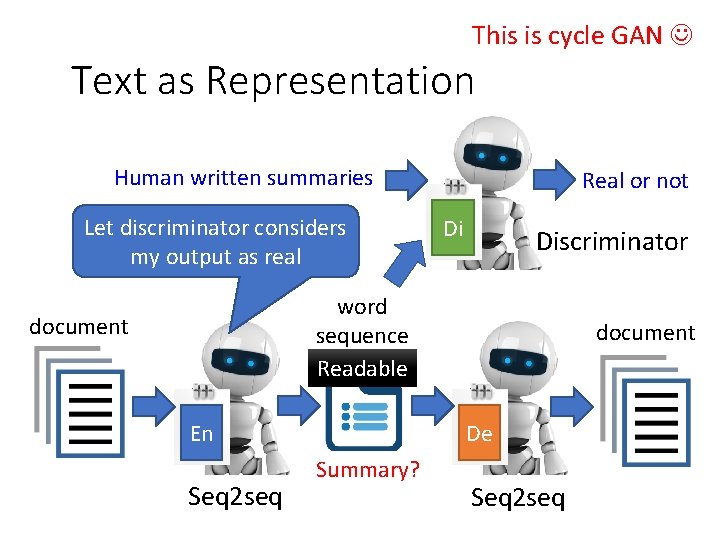

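Text as Representation
- document → Encoder (seq2seq) → word sequence → Decoder (seq2seq) → document
- Discriminator: sees human-written summaries and judges whether a word sequence is real or not.
- The Encoder is trained so that the discriminator considers its output as real, which makes the word sequence readable. Is it a summary?
- This is Cycle GAN.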

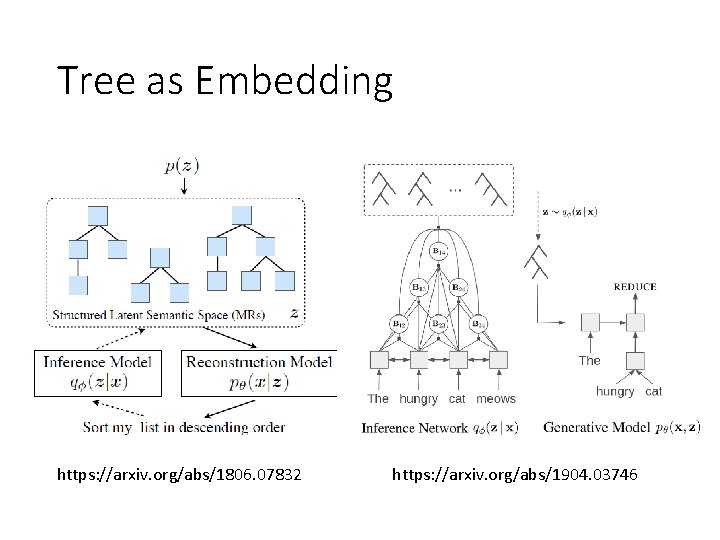

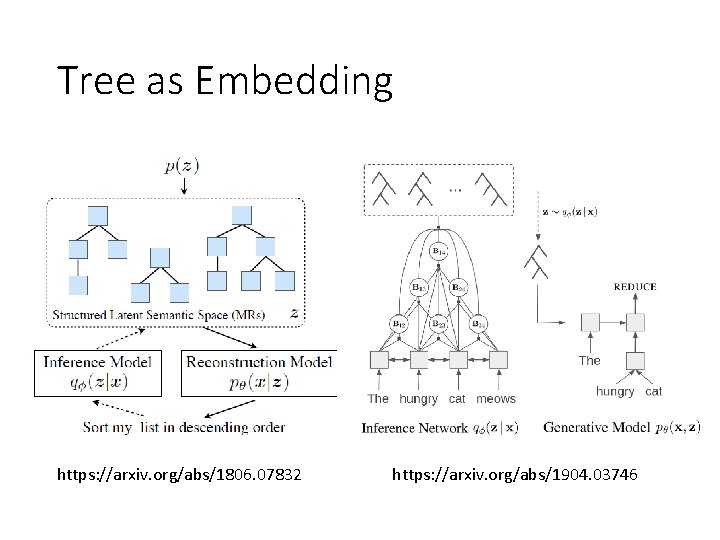

Tree as Embedding
- https://arxiv.org/abs/1806.07832
- https://arxiv.org/abs/1904.03746

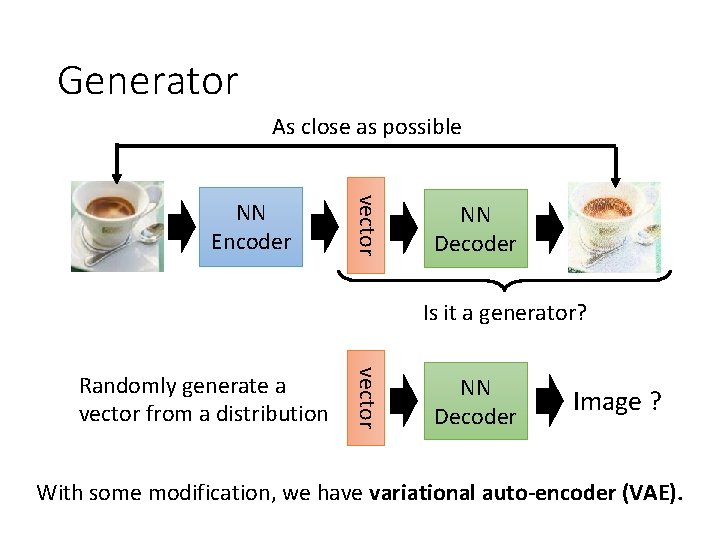

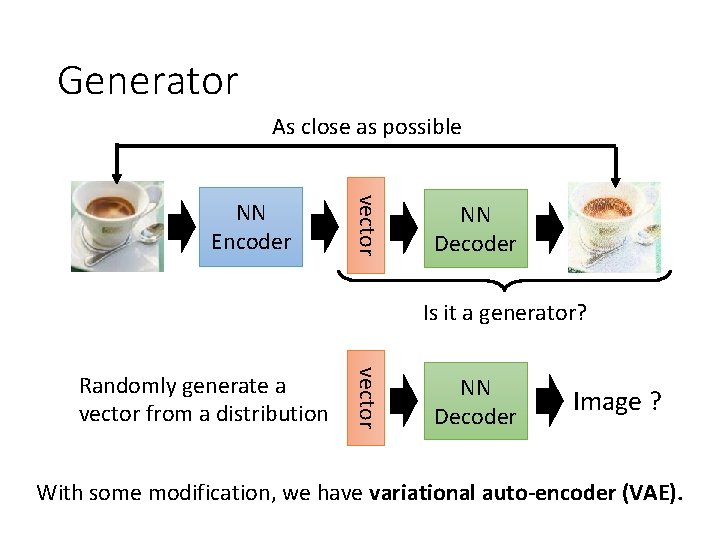

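Generator
- Training: input → NN Encoder → vector → NN Decoder → output, as close as possible to the input.
- Is the NN Decoder a generator? Randomly generate a vector from a distribution, feed it to the NN Decoder, and see whether the output is an image.
- With some modification, we have the variational auto-encoder (VAE).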
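Using the decoder as a generator amounts to replacing the encoder output with a random vector. The sketch below assumes a standard normal distribution for the code and uses a hypothetical hand-written `decoder` in place of a trained network; a plain auto-encoder gives no guarantee that such random codes decode to sensible images.

```python
import random

def decoder(z):
    """Hypothetical stand-in for a trained NN Decoder: 2-D code -> 3 x 3 'image'."""
    return [[z[0] * (r + 1) + z[1] * (c + 1) for c in range(3)] for r in range(3)]

def generate(rng, code_dim=2):
    """Sample a code from a standard normal distribution and decode it."""
    z = [rng.gauss(0.0, 1.0) for _ in range(code_dim)]
    return decoder(z)

image = generate(random.Random(0))   # a 3 x 3 list of floats
```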

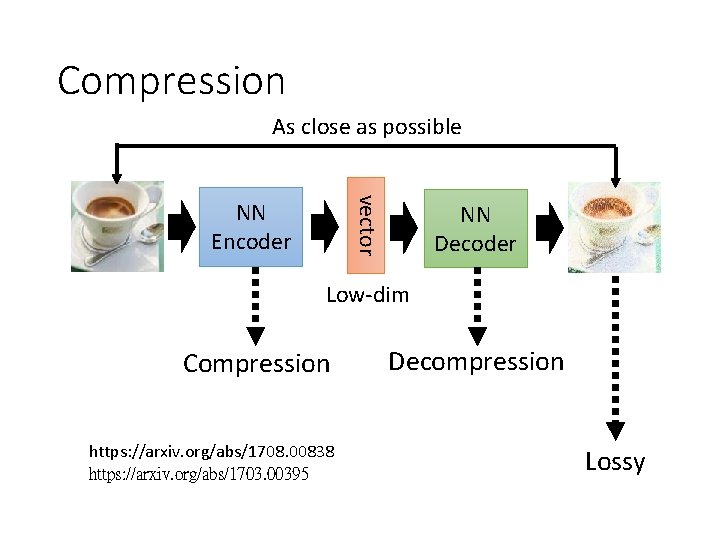

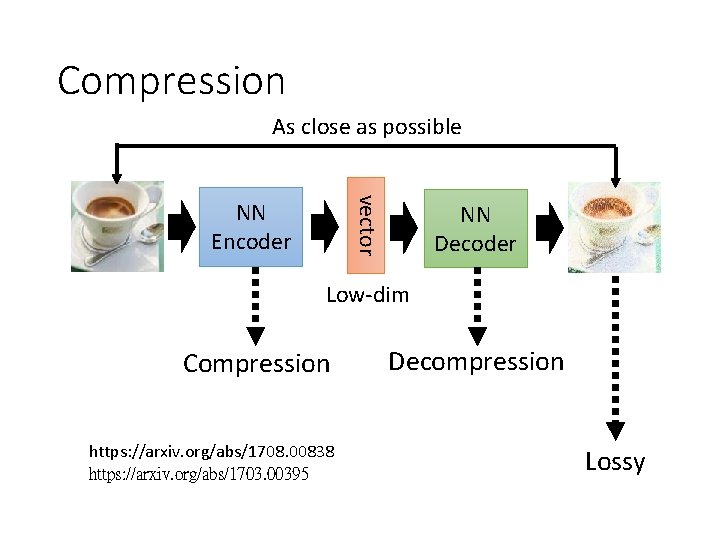

Compression
- NN Encoder: compression. Its output is a low-dim vector.
- NN Decoder: decompression. Its output should be as close as possible to the input.
- The compression is lossy.
- References: https://arxiv.org/abs/1708.00838, https://arxiv.org/abs/1703.00395

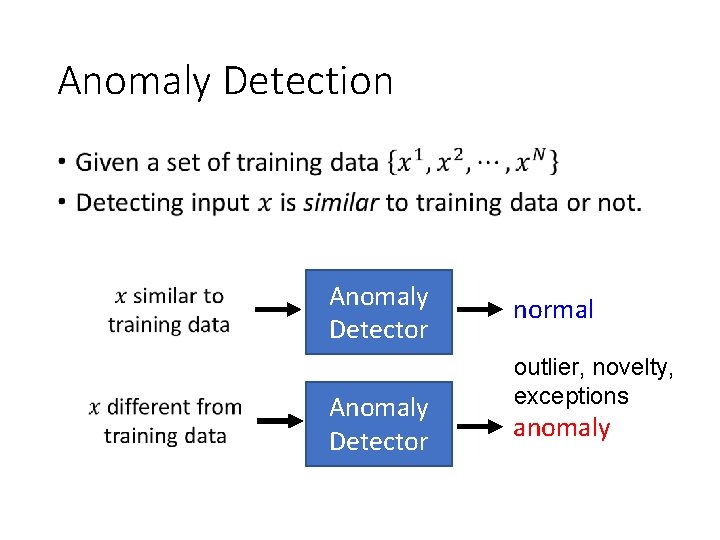

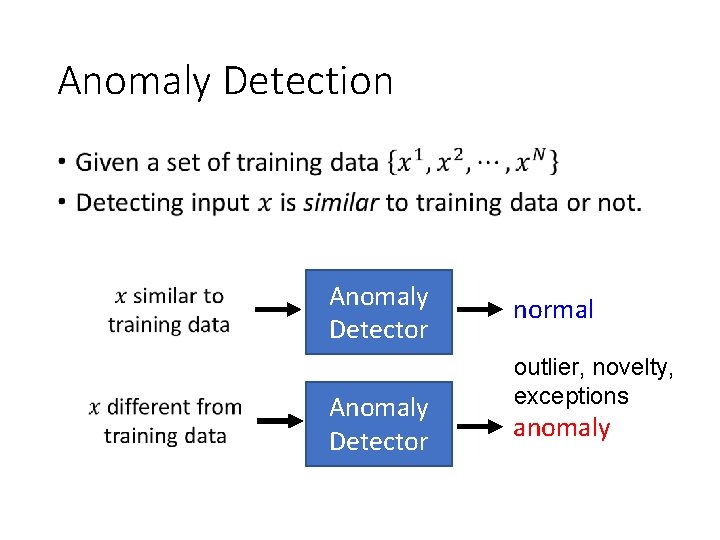

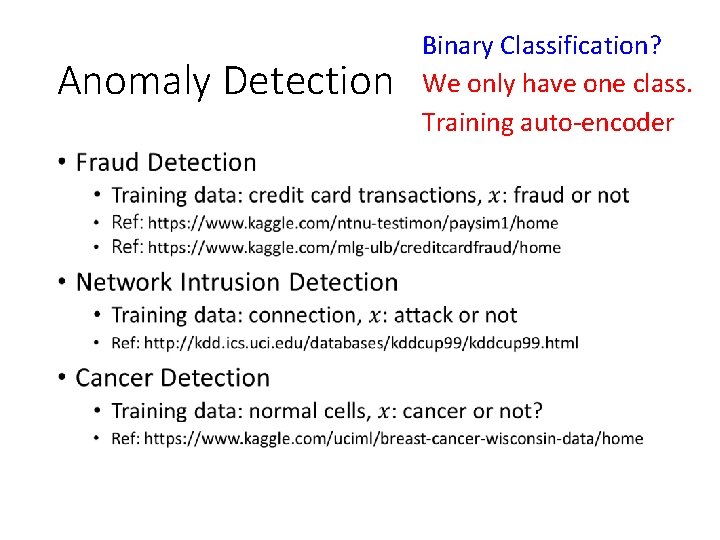

Anomaly Detection
- Anomaly Detector: decides whether an input is normal or an anomaly (also called outlier, novelty, exceptions).

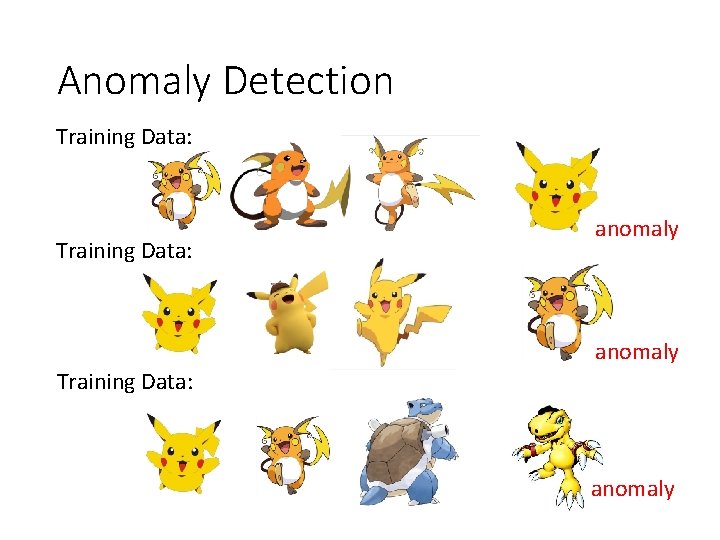

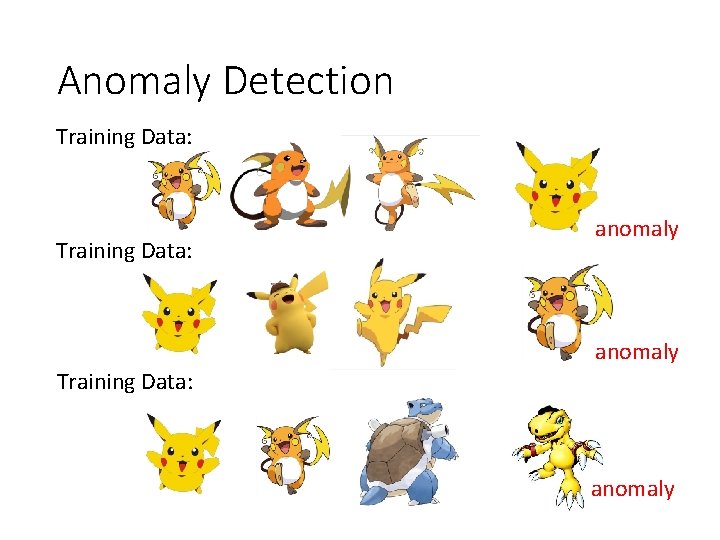

Anomaly Detection Training Data: anomaly

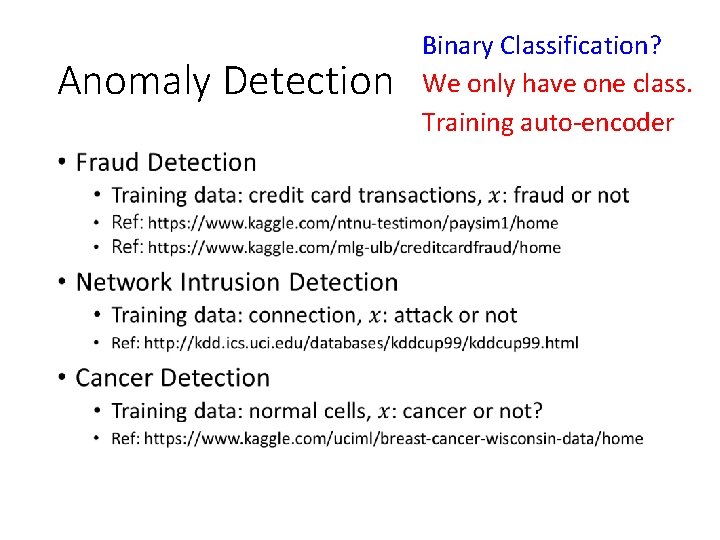

Anomaly Detection
- Binary classification? We only have one class.
- Training an auto-encoder.

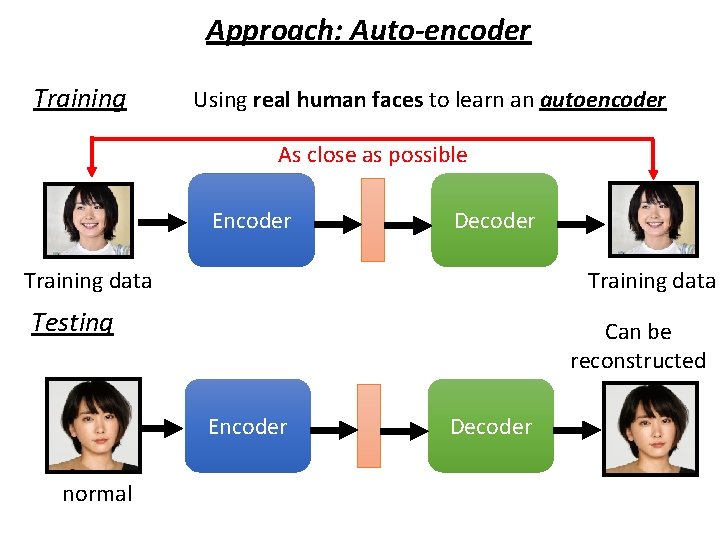

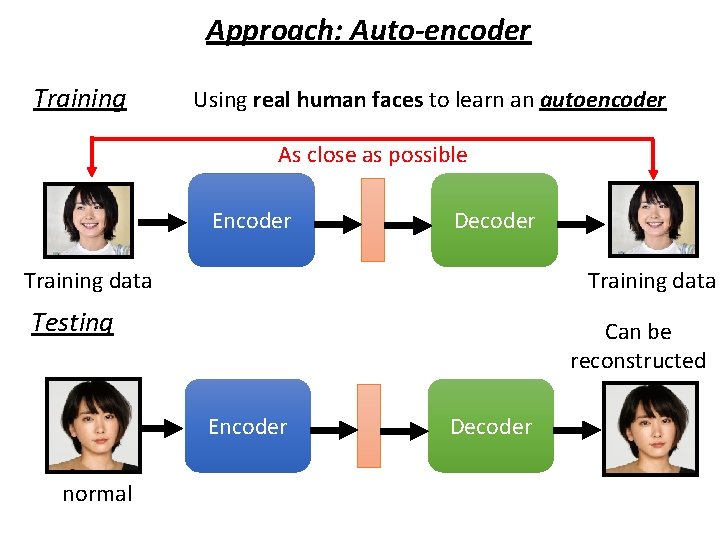

Approach: Auto-encoder
- Training: use real human faces to learn an auto-encoder. The Decoder output should be as close as possible to the training data.
- Testing: an input that can be reconstructed by the Encoder and Decoder is normal.

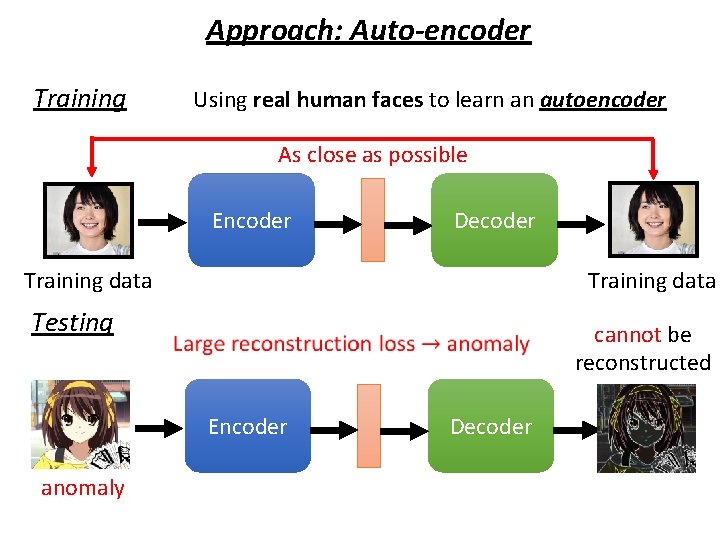

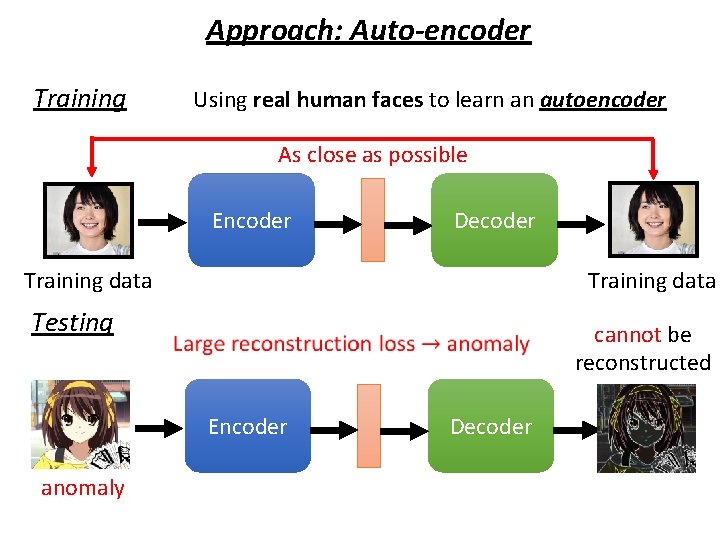

Approach: Auto-encoder
- Training: use real human faces to learn an auto-encoder. The Decoder output should be as close as possible to the training data.
- Testing: an input that cannot be reconstructed by the Encoder and Decoder is an anomaly.
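The test-time rule can be sketched as a threshold on reconstruction error. Here a fixed projection onto the line x2 = 2 * x1 stands in for an auto-encoder trained on normal data, and the threshold value 0.1 is an arbitrary assumption; in practice both come from training and validation.

```python
def reconstruct(x):
    """Stand-in auto-encoder whose 'normal' data lies on the line x2 = 2 * x1."""
    z = (x[0] + 2.0 * x[1]) / 5.0   # encoder: 2-D input -> 1-D code
    return [z, 2.0 * z]             # decoder: 1-D code -> 2-D reconstruction

def reconstruction_error(x):
    """Squared distance between the input and its reconstruction."""
    x_hat = reconstruct(x)
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def is_anomaly(x, threshold=0.1):
    """Flag inputs that the auto-encoder cannot reconstruct well."""
    return reconstruction_error(x) > threshold

normal_input = [1.0, 2.1]       # close to the training distribution
anomalous_input = [2.0, -1.0]   # far from anything seen in training

normal_flag = is_anomaly(normal_input)         # False
anomalous_flag = is_anomaly(anomalous_input)   # True
```

Only normal data is needed to fit the auto-encoder, which is why this approach works when there is just one class in the training set.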

More about Anomaly Detection
- Part 1: https://youtu.be/gDp2LXGnVLQ
- Part 2: https://youtu.be/cYrNjLxkoXs
- Part 3: https://youtu.be/ueDlm2FkCnw
- Part 4: https://youtu.be/XwkHOUPbc0Q
- Part 5: https://youtu.be/Fh1xFBktRLQ
- Part 6: https://youtu.be/LmFWzmn2rFY
- Part 7: https://youtu.be/6W8FqUGYyDo

Concluding Remarks
- Basic Idea of Auto-encoder
- Feature Disentanglement
- Discrete Latent Representation
- More Applications