Audio vs. Video: What’s the Difference?

- Slides: 30

Audio vs. Video: What’s the difference?
J. D. Johnston, with contributions from David Workman.
Copyright (c) 2006 by James D. Johnston. Permission granted for any educational use.

First, a basic reminder of the perceptual aspects:
• What stands out for “audio”?
• What stands out for “video”?
• How are the perceptual systems the same?
• How are they different?

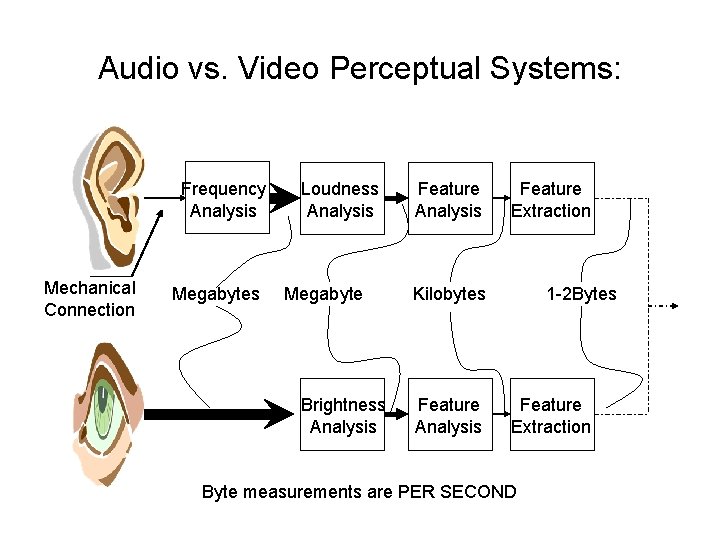

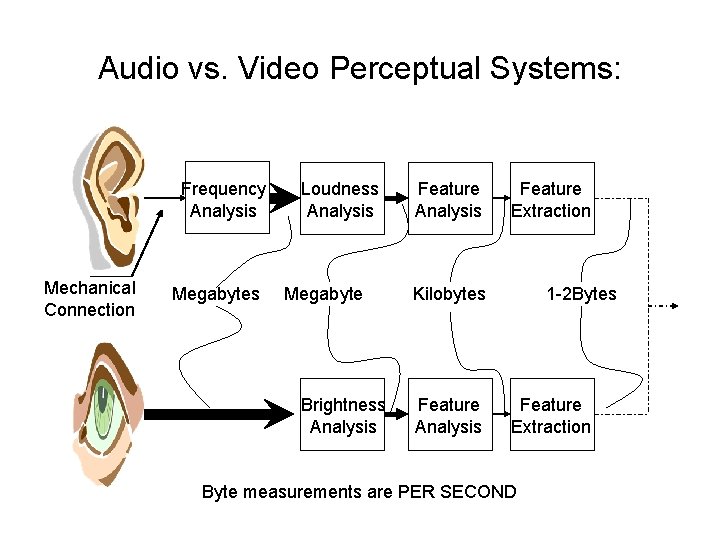

Audio vs. Video Perceptual Systems (diagram)
• Stages shown: Frequency Analysis (mechanical connection), Loudness Analysis, Brightness Analysis, Feature Extraction, Feature Analysis.
• Data-rate labels along the chain: megabytes, kilobytes, 1-2 bytes.
• Byte measurements are PER SECOND.

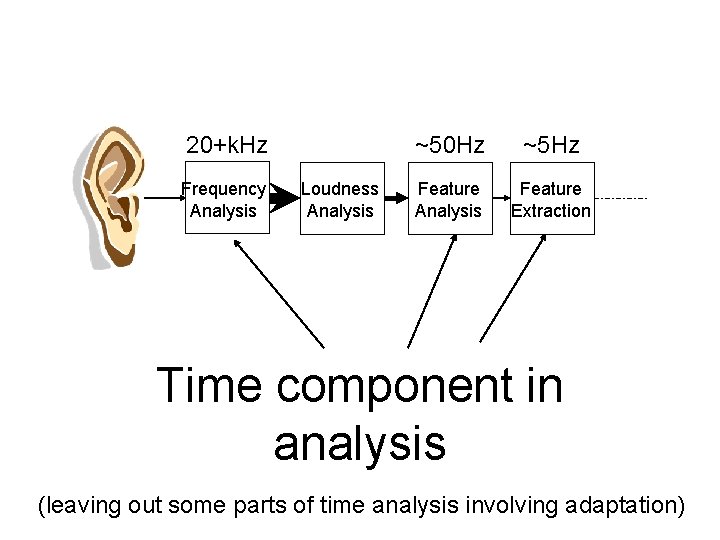

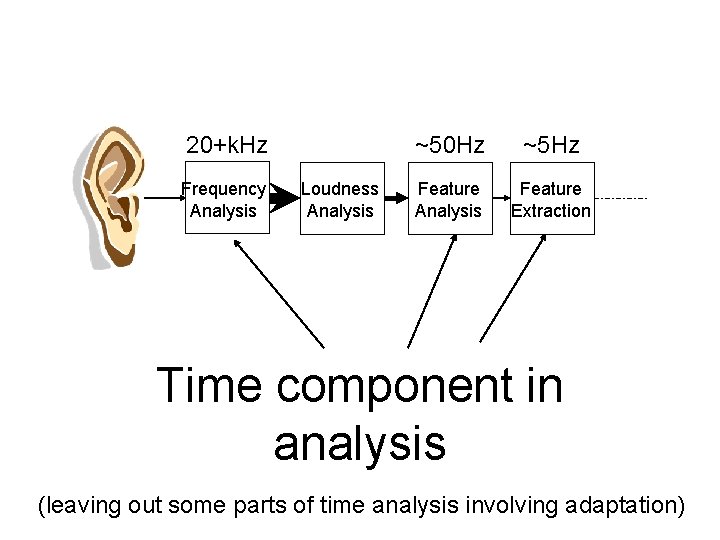

Time component in analysis, audio (diagram)
• Stages shown: Frequency Analysis, Loudness Analysis, Feature Analysis, Feature Extraction.
• Rate labels: 20+ kHz, ~50 Hz, ~5 Hz.
• This leaves out some parts of time analysis involving adaptation.

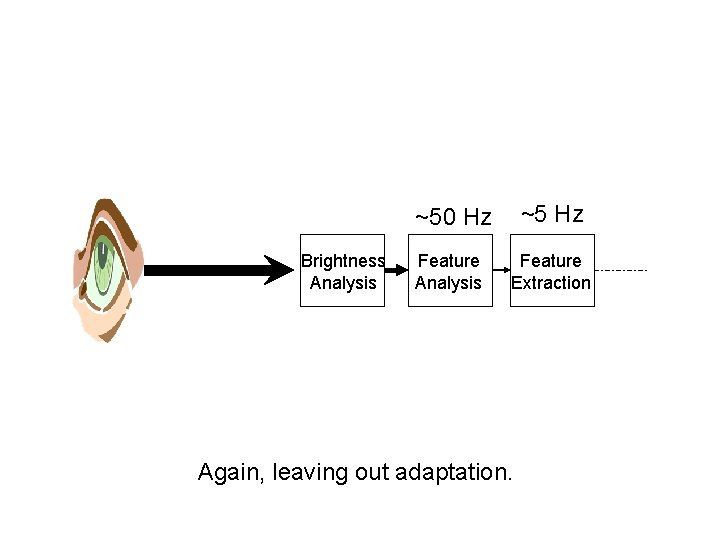

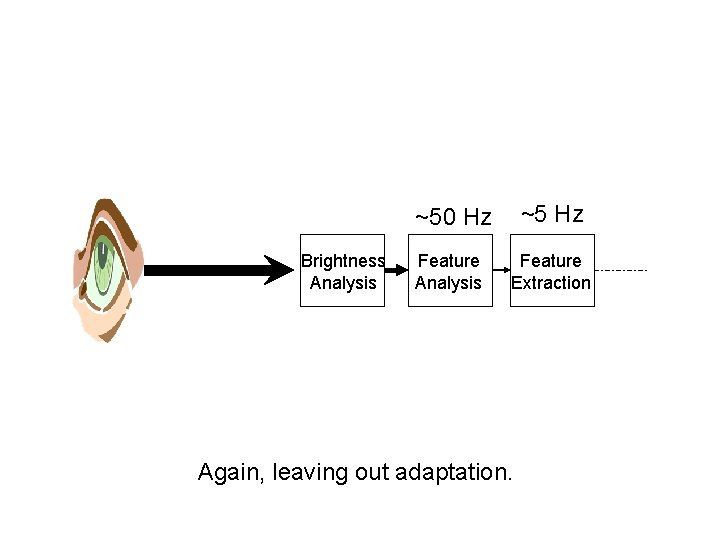

Time component in analysis, video (diagram)
• Stages shown: Brightness Analysis, Feature Analysis, Feature Extraction.
• Rate labels: ~50 Hz, ~5 Hz.
• Again, leaving out adaptation.

What’s the Point?
• The eye is a weak time analyzer, in terms of “response time” or Hz.
• The ear is a very good time/frequency analyzer.
• There are, of course, other differences:
  – The eye primarily receives data spatially. The ear primarily receives data as a function of time.
  – The eye has no intrinsic time axis. The ear has an intrinsic time analyzer.
  – The ear analyzes pressure (1 variable per ear). The eye analyzes 4 variables (RGBY) over space, with varying resolution for each variable depending on direction of sight.

Regarding each of those steps
• Most theories of memory have humans possessing 3 levels of memory. Different people call them different things, but they can be summarized as follows for the auditory system:
  – Loudness memory (persists up to 200 ms)
  – Feature memory (persists for seconds)
  – Long-term memory (persists indefinitely)
• At each step, from loudness to feature and from feature to long term, much information is lost as loudness is turned into features, and features into auditory objects.

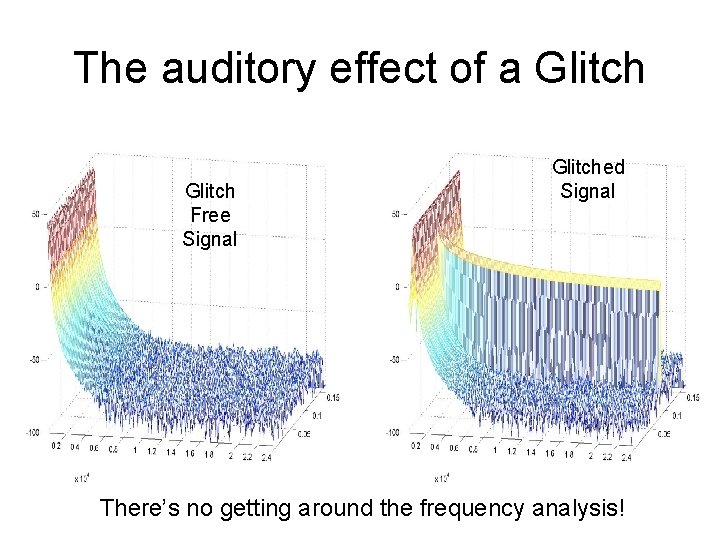

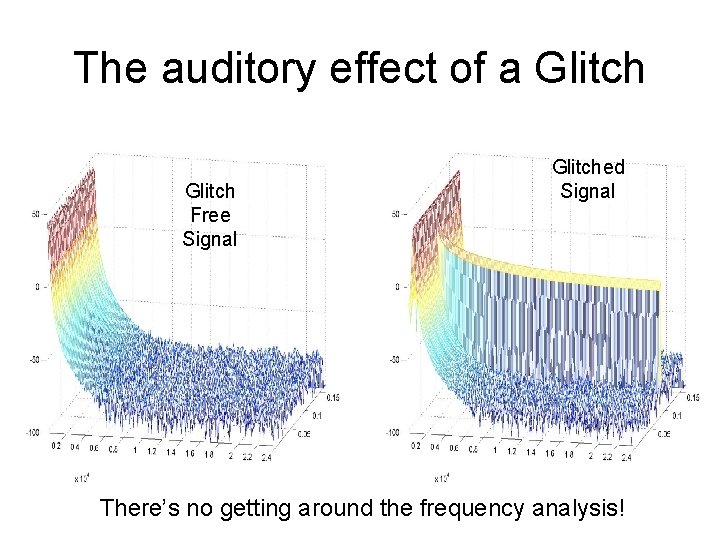

The auditory effect of a glitch (figure panels: glitch-free signal, glitched signal)
There’s no getting around the frequency analysis!

The result?
• Because of the inherent frequency analysis, the loudness of the glitch can be literally ~10 times that of the original signal, give or take. The glitch is VERY perceptible and becomes the primary feature.
• It’s very roughly equal to having a photographic strobe go off in your face.
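A minimal sketch of why a glitch cannot hide from a frequency analyzer. It assumes a plain DFT as a crude stand-in for the ear's filter bank (it is not a cochlear model and says nothing about loudness). The block length, tone frequency, and 8-sample dropout are illustrative values, not taken from the slides.

```python
import cmath
import math

def dft_magnitudes(x):
    """Return |X[k]| for k = 0..N/2 using a direct O(N^2) DFT."""
    n = len(x)
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]

def off_tone_energy_fraction(x, tone_bin):
    """Fraction of (one-sided) spectral energy outside the tone's own bin."""
    mags = dft_magnitudes(x)
    total = sum(m * m for m in mags)
    return (total - mags[tone_bin] ** 2) / total

N = 256        # block length (assumed)
TONE_BIN = 8   # the tone completes exactly 8 cycles per block (assumed)

clean = [math.sin(2 * math.pi * TONE_BIN * t / N) for t in range(N)]

# Hypothetical glitch: a short dropout, 8 samples replaced by silence.
glitched = list(clean)
for t in range(100, 108):
    glitched[t] = 0.0

clean_frac = off_tone_energy_fraction(clean, TONE_BIN)
glitch_frac = off_tone_energy_fraction(glitched, TONE_BIN)
print(f"off-tone energy, clean:    {clean_frac:.2e}")
print(f"off-tone energy, glitched: {glitch_frac:.2e}")
```

The clean tone keeps essentially all of its energy in one bin. The dropout, although it touches only 8 of 256 samples, spreads energy into bins across the spectrum, which is the kind of new information a frequency analyzer passes down the chain.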

What happens when we repeat a frame?
• A good example is the 2:3 pulldown that happens during telecine.
• 24 fps content is padded out to 30 fps by repeating “fields” (1/60th of a second) of video, not “frames” (1/30th of a second), in a 2:3 pattern.
• The motion looks smooth unless you know what to look for.
• Repeating a single full frame is usually noticeable, but doing so in a sequence gives a very obvious “judder” to the image.
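The 2:3 field pattern can be sketched as follows. This is an illustration only: it uses the nominal 24 and 30 fps rates quoted on the slide, and the function name and the "top"/"bottom" field labels are hypothetical.

```python
def pulldown_2_3(frames):
    """Map film frames to a list of (frame, field_parity) video fields.

    Frames alternately contribute 2 and 3 fields, so every 4 film frames
    become 10 fields, i.e. 5 interlaced video frames.
    """
    fields = []
    for i, frame in enumerate(frames):
        count = 2 if i % 2 == 0 else 3
        for _ in range(count):
            parity = "top" if len(fields) % 2 == 0 else "bottom"
            fields.append((frame, parity))
    return fields

film = ["A", "B", "C", "D"]
fields = pulldown_2_3(film)

# Pair consecutive fields into interlaced video frames.
video_frames = [(fields[i][0], fields[i + 1][0]) for i in range(0, len(fields), 2)]
print(video_frames)
```

Four film frames come out as five video frames, two of which mix fields from different film frames. Over one second, 24 film frames become 60 fields.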

Well? • It’s not a great thing to do, but under most circumstances it doesn’t introduce the equivalent of a “WHITE FLASH” or something of that sort, even though it’s not imperceptible.

What happens when we drop a frame?
• Dropped frames are usually more noticeable than repeated frames.
• Dropped frames in a regular pattern (30 frames to 15 frames) are LESS objectionable than repeated frames in a 2:3 pulldown pattern.
• Still not equivalent to having a “strobe light” flashed at you.

Therefore:
• A glitch in audio creates an event that is very “big”. The glitch, in and of itself, pushes a great deal of different information down the perceptual chain.
• A glitch in video is not necessarily “big”. It is usually small, because adjacent frames don’t change much in most cases (and are “events” in themselves when they do), and because there is no inherent time analysis to capture the glitch.

Dynamic Range of Perception
• Audio provides at least 120 dB of level change over which loudness changes are perceived under normal circumstances.
• The eye has a similar range over which brightness changes are perceived under normal circumstances.
HOWEVER:

• Adaptation in Audition:
  – Adaptation takes place over millisecond to second timescales.
  – A local transducer SNR of 30 dB, give or take, is scaled rapidly over at least a 120 dB range.
  – The frequency analysis can have 90 dB of range at any given instant.
  – Linearity need not apply.
• Adaptation in Vision:
  – Adaptation is over timescales of 1 second to 1000 seconds.
  – The local transducer SNR is the same.
  – In the short term, the eye is more “linear”.

Stereo Response
• Two eyes to see 3D, two ears to hear stereo…
• Cover one eye and you still have “hints” about how far away things are.
• Plug one ear and you still have “hints” about how far away things are.
• Both can be fooled.
HOWEVER:

“Stereo” in Audition
• Stereo sensations in the auditory system come about from two sources:
  – Interaural TIME difference
  – Interaural LEVEL difference
• The speed of sound allows for biologically meaningful delay between the ears.
• Direction cues must be derived from the time delay.

Stereo Vision
• The speed of light does not allow for biologically perceptible time delays between the eyes.
• The eyes, however, are spatial receptors.
  – Parallax between the two eyes provides distance cues.
  – Direction cues are BUILT IN to the 2D image.
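A back-of-envelope check of the two arrival-time claims (sound between the ears, light between the eyes). The sensor spacings are assumed round numbers, not values from the slides, and the straight-line path ignores the detour sound takes around the head.

```python
SPEED_OF_SOUND_M_S = 343.0          # air at about 20 degrees C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value, close enough for air

EAR_SPACING_M = 0.20   # assumed ear-to-ear distance
EYE_SPACING_M = 0.065  # assumed eye-to-eye distance

def max_arrival_delay_s(spacing_m, speed_m_s):
    """Largest arrival-time difference between two sensors.

    This occurs when the source lies on the line through both sensors,
    so the wave travels the full spacing between them.
    """
    return spacing_m / speed_m_s

itd_s = max_arrival_delay_s(EAR_SPACING_M, SPEED_OF_SOUND_M_S)
light_delay_s = max_arrival_delay_s(EYE_SPACING_M, SPEED_OF_LIGHT_M_S)

print(f"sound, ear to ear: {itd_s * 1e3:.2f} ms")
print(f"light, eye to eye: {light_delay_s * 1e9:.2f} ns")
```

Under these assumptions the interaural delay is a sizeable fraction of a millisecond, while the delay between the eyes is a fraction of a nanosecond, about six orders of magnitude smaller.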

Color Vision
• Rod cells detect luminance.
• Cone cells detect chrominance.
• There are many more rod cells than cone cells, and correspondingly much better luma resolution.
• Chroma perception drops off at low light levels.
• Luma perception “bleaches out” at high light levels.
• Therefore, day vision and night vision are substantially different.

“Color Audition”
• Doesn’t exist. This variable does not exist in the auditory system.
• The auditory system works approximately the same for loud vs. quiet sounds. The changes are second order except at the thresholds of hearing and pain.
This slide intentionally left blank.

Gamma Correction
• Video cameras have a gamma curve applied to the sensing element.
• Originally, this was to “pre-compensate” for nonlinearities in the camera.
• The opposite gamma curve is applied in a TV set to restore the linear levels.
• Gamma curves also compensate for the difference in differential sensitivity of the eye at brighter and darker levels.
• Any “harmonic distortion” doesn’t matter, because the eye is a spatial receptor.

Gamma correction in audio?
• That would introduce a great deal of nonlinear distortion to an audio signal.
• The frequency domain components would be audible (the ear is a frequency analyzer).
• A varying noise floor would be exposed by the 90+ dB mechanical filtering mechanisms in the ear.
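A small sketch of what a gamma curve does to a pure tone. Two things here are assumptions, not from the slides: the gamma value of 1/2.2, and the sign-preserving power law used to extend a video-style curve to a bipolar audio sample. The DFT again stands in for the ear's frequency analysis.

```python
import cmath
import math

def harmonic_magnitudes(x, fundamental_bin, count):
    """Amplitude of harmonics 1..count of a tone periodic in the block."""
    n = len(x)
    mags = []
    for h in range(1, count + 1):
        k = h * fundamental_bin
        s = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(2 * abs(s) / n)
    return mags

def gamma_curve(sample, gamma):
    """Sign-preserving power law (an assumed audio analogue of video gamma)."""
    return math.copysign(abs(sample) ** gamma, sample)

N = 512            # block length (assumed)
FUND_BIN = 4       # the tone completes exactly 4 cycles per block (assumed)
GAMMA = 1 / 2.2    # assumed, typical of video encoding curves

tone = [math.sin(2 * math.pi * FUND_BIN * t / N) for t in range(N)]
warped = [gamma_curve(s, GAMMA) for s in tone]

clean_h = harmonic_magnitudes(tone, FUND_BIN, 5)
warped_h = harmonic_magnitudes(warped, FUND_BIN, 5)

for h, (c, w) in enumerate(zip(clean_h, warped_h), start=1):
    print(f"harmonic {h}: clean {c:.4f}  gamma-warped {w:.4f}")
```

The clean tone has energy only at the fundamental. After the curve, substantial odd harmonics appear (the curve is odd-symmetric, so even harmonics stay near zero). Those are frequency components that were not in the original signal.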

Conclusions:

The main differences:
• Time/Frequency vs. spatial analysis
• Dynamic range vs. time
• Linearity
• Stereo senses
• Color (the ear hasn’t any)

Spatial vs. Frequency Analysis
• The eye is primarily a spatial analyzer. Its frequency analysis is at higher (cognitive) levels, and is “slow” compared to audio. Accuracy in the spatial domain is of primary importance.
• The ear is first and foremost a frequency analyzer. Time domain accuracy and time/frequency domain accuracy are of primary importance.

Dynamic Range vs. Time
• The ear adapts on a 0.001 to 1 second basis. Adaptation is effectively instantaneous. Unless the sound is loud enough to cause temporary threshold shift (and that’s loud), there is little “memory”.
• The eye adapts on a 1 to 1000 second basis. (Enter a cave from bright sunshine, and see how long it takes you to adapt.)

Dynamic Range vs. Frequency
• The ear’s mechanical filters can provide in excess of 90 dB separation at different frequencies at any given instant, leading to PCM depths of at least 16 bits.
• The eye can handle much less range at a given instant, leading to 8 to 10 bit PCM video.
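The link between bit depth and dB range can be checked with the usual rule of thumb of about 6 dB per bit. This sketch takes the range as the ratio of full scale to one quantization step; it ignores dither, noise shaping, and any nonlinear coding such as video gamma, so treat the numbers as rough guides.

```python
import math

def pcm_range_db(bits):
    """Ratio of full scale to one quantization step for linear PCM, in dB."""
    return 20 * math.log10(2 ** bits)

for bits in (8, 10, 16):
    print(f"{bits:2d} bits: about {pcm_range_db(bits):.1f} dB")
# 8 bits  -> about 48.2 dB
# 10 bits -> about 60.2 dB
# 16 bits -> about 96.3 dB
```

On this measure, 16 bits is the first common word length that clears a 90 dB instantaneous range, while 8 to 10 bits covers roughly 48 to 60 dB.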

Linearity
• The ear isn’t linear. I think I said this once or twice, but I’ll say it again.
• The eye, given a fixed overall illumination level, is more linear.
• The ear, however, requires linear capture and reproduction, because nonlinearities introduce frequency components that were not present in the original signal.
• The eye, not having the frequency analyzer, does not suffer nearly as much from this problem. Video capture can use “gamma” or “warped” capture curves as a result.

Stereoscopy
• The eye implicitly captures direction; it is a spatial sensor.
• The ear derives direction information from time and level information.
• The eye can derive distance information from parallax, due to its spatial ability.
• The ear can barely abstract some idea of distance, from tertiary cues. The ear can do pretty well once you learn the acoustics of your location.

Yeah, so?
• For hearing, keep the time domain intact. Errors in the time domain, dropouts, etc., create very annoying events. Don’t make errors that create new frequencies!
• For vision, keep the spatial domain intact. The time domain, while important, is secondary. Don’t worry about making new “frequencies”; the sensor isn’t that sensitive, BUT GET YOUR EDGES RIGHT.