Audio classification: Discriminating speech, music and environmental audio


Rajas A. Sambhare, ECE 539

Objective
Discrimination between speech, music and environmental audio (special effects) using short 3-second samples
• To extract a relevant set of feature vectors from the audio samples
• To develop a pattern classifier that can successfully discriminate the three classes based on the extracted vectors

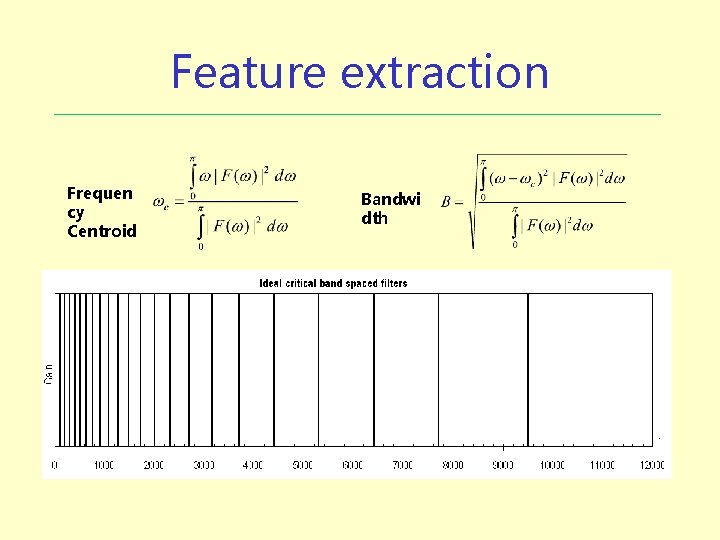

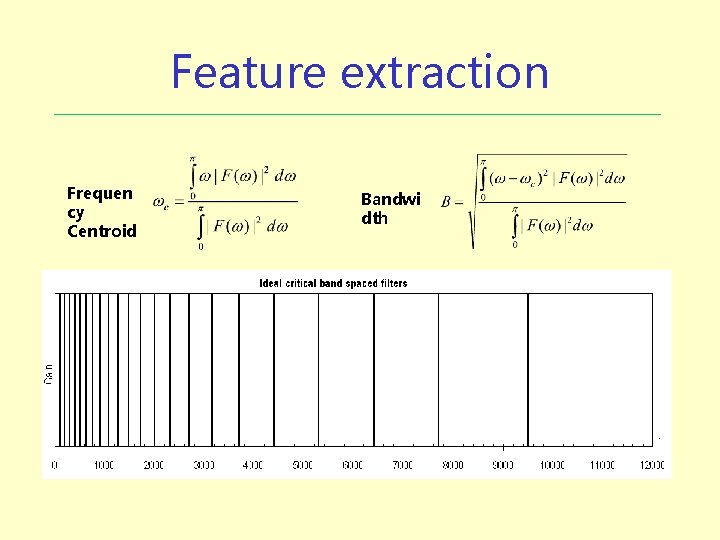

Feature extraction
Frequency centroid, bandwidth

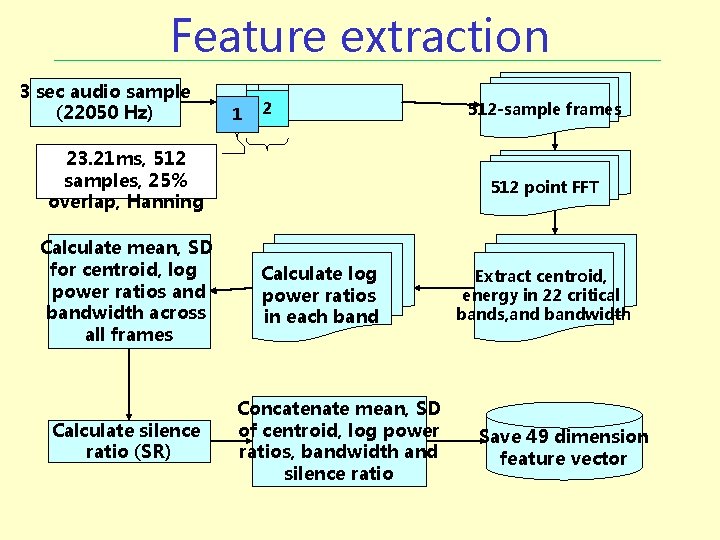

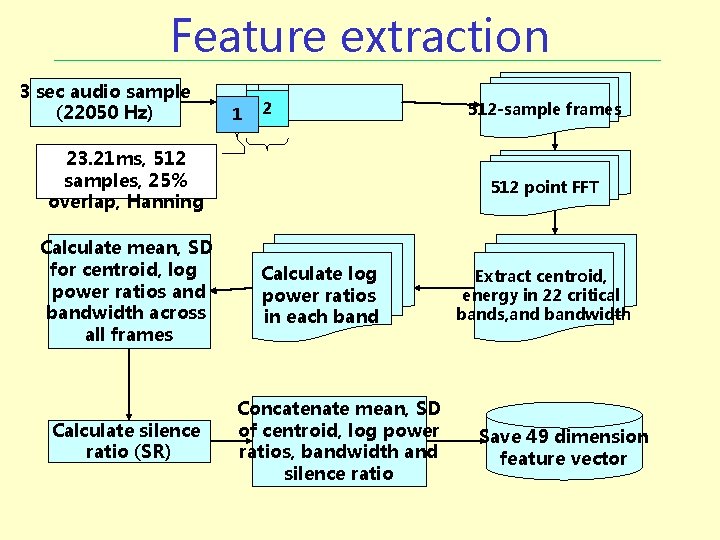
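The slide's own formulas are not preserved, so the following is only a commonly used definition of the two features, stated as an assumption. For one frame with power spectrum $|X(k)|^2$ at bin frequencies $f_k$, the frequency centroid $\mathrm{FC}$ is the power-weighted mean frequency and the bandwidth $\mathrm{BW}$ is the power-weighted spread around it:

```latex
\mathrm{FC} = \frac{\sum_k f_k \, |X(k)|^2}{\sum_k |X(k)|^2},
\qquad
\mathrm{BW} = \sqrt{\frac{\sum_k \left(f_k - \mathrm{FC}\right)^2 |X(k)|^2}{\sum_k |X(k)|^2}}
```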

Feature extraction
• 3 sec audio sample (22050 Hz)
• 512-sample frames (23.21 ms, 512 samples, 25% overlap, Hanning)
• 512-point FFT
• Extract centroid, energy in 22 critical bands, and bandwidth
• Calculate log power ratios in each band
• Calculate mean, SD for centroid, log power ratios and bandwidth across all frames
• Calculate silence ratio (SR)
• Concatenate mean, SD of centroid, log power ratios, bandwidth and silence ratio
• Save 49-dimension feature vector
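The pipeline on this slide can be sketched in Python with NumPy. This is a minimal illustration, not the author's code. The band edges (standard Bark-scale edges), the centroid and bandwidth formulas, the RMS-threshold definition of silence, the threshold value, and the ordering of the output vector are all assumptions; the slides specify only the frame size, overlap, window, band count and final dimension.

```python
import numpy as np

SR_HZ = 22050   # sampling rate from the slide
FRAME = 512     # frame length and FFT size from the slide
HOP = 384       # 25% overlap means each frame advances by 75% of its length

# ASSUMPTION: standard Bark-scale edges (Hz) stand in for the 22 critical bands.
BARK_EDGES = np.array([0, 100, 200, 300, 400, 510, 630, 770, 920, 1080, 1270,
                       1480, 1720, 2000, 2320, 2700, 3150, 3700, 4400, 5300,
                       6400, 7700, 9500], dtype=float)

def extract_features(x, silence_thresh=0.01):
    """Return a 49-dim vector: 24 means, 24 SDs, then the silence ratio."""
    n_frames = 1 + (len(x) - FRAME) // HOP
    idx = np.arange(FRAME)[None, :] + HOP * np.arange(n_frames)[:, None]
    frames = x[idx]                                    # (n_frames, 512)

    # Hanning-windowed 512-point FFT, kept as a power spectrum.
    power = np.abs(np.fft.rfft(frames * np.hanning(FRAME), axis=1)) ** 2
    freqs = np.fft.rfftfreq(FRAME, d=1.0 / SR_HZ)      # (257,)
    total = power.sum(axis=1) + 1e-12                  # guard against division by zero

    # Power-weighted mean frequency and spread (assumed definitions).
    centroid = (power * freqs).sum(axis=1) / total
    spread = (power * (freqs - centroid[:, None]) ** 2).sum(axis=1) / total
    bandwidth = np.sqrt(spread)

    # Log ratio of each band's power to the frame's total power.
    bins = np.searchsorted(freqs, BARK_EDGES)
    band_power = np.stack(
        [power[:, a:b].sum(axis=1) for a, b in zip(bins[:-1], bins[1:])], axis=1)
    log_ratio = np.log10((band_power + 1e-12) / total[:, None])   # (n_frames, 22)

    per_frame = np.column_stack([centroid, log_ratio, bandwidth])  # (n_frames, 24)

    # ASSUMPTION: silence ratio = fraction of frames whose RMS is below a threshold.
    rms = np.sqrt((frames ** 2).mean(axis=1))
    silence_ratio = (rms < silence_thresh).mean()

    return np.concatenate([per_frame.mean(axis=0), per_frame.std(axis=0),
                           [silence_ratio]])
```

The dimension count matches the slide: mean and SD for each of 1 centroid, 22 log power ratios and 1 bandwidth give 48 values, and the silence ratio brings the total to 49.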

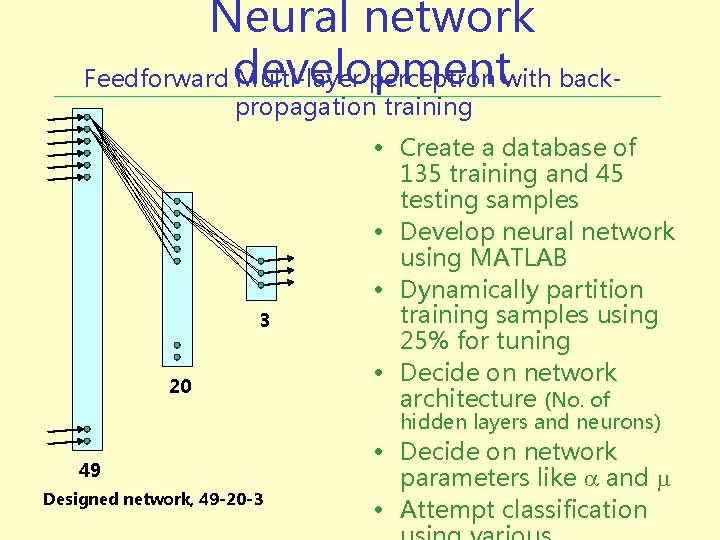

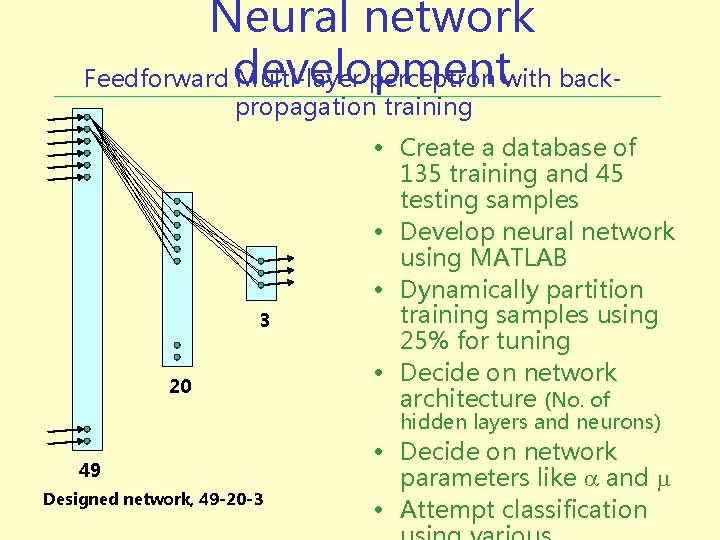

Neural network development
Feedforward multi-layer perceptron with backpropagation training
• Create a database of 135 training and 45 testing samples
• Develop neural network using MATLAB
• Dynamically partition training samples using 25% for tuning
• Decide on network architecture (no. of hidden layers and neurons)
• Decide on network parameters like … and …
• Attempt classification
Designed network: 49-20-3
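As a rough illustration of the 49-20-3 design, the sketch below trains a one-hidden-layer perceptron with batch backpropagation and holds out 25% of the training samples for tuning. It is written in Python with NumPy as a stand-in for the MATLAB implementation, which is not shown in the slides. The activation functions, squared-error loss, learning rate, momentum, epoch count and the once-per-call partition are illustrative assumptions, and `train_mlp` and `predict` are hypothetical names.

```python
import numpy as np

def predict(params, X):
    """Return the index of the largest output for each row of X."""
    W1, b1, W2, b2 = params
    H = np.tanh(X @ W1 + b1)
    return np.argmax(H @ W2 + b2, axis=1)

def train_mlp(X, y, n_hidden=20, n_classes=3, lr=0.1, momentum=0.8,
              epochs=200, seed=0):
    """Train an (n_features)-n_hidden-n_classes MLP; return (params, tuning accuracy)."""
    rng = np.random.default_rng(seed)

    # Hold out 25% of the training samples for tuning.
    perm = rng.permutation(len(X))
    n_tune = len(X) // 4
    tune, train = perm[:n_tune], perm[n_tune:]
    Xt, Tt = X[train], np.eye(n_classes)[y[train]]     # one-hot targets

    params = [rng.normal(0, 0.1, (X.shape[1], n_hidden)), np.zeros(n_hidden),
              rng.normal(0, 0.1, (n_hidden, n_classes)), np.zeros(n_classes)]
    velocity = [np.zeros_like(p) for p in params]
    best_acc, best_params = -1.0, None

    for _ in range(epochs):
        W1, b1, W2, b2 = params
        # Forward pass: tanh hidden layer, sigmoid output layer.
        H = np.tanh(Xt @ W1 + b1)
        O = 1.0 / (1.0 + np.exp(-(H @ W2 + b2)))

        # Backward pass for a squared-error loss.
        dO = (O - Tt) * O * (1.0 - O)
        dH = (dO @ W2.T) * (1.0 - H ** 2)
        grads = [Xt.T @ dH, dH.sum(axis=0), H.T @ dO, dO.sum(axis=0)]

        # Gradient descent with momentum, updating the arrays in place.
        for p, v, g in zip(params, velocity, grads):
            v *= momentum
            v -= lr * g / len(train)
            p += v

        # Keep the weights that score best on the tuning set.
        acc = (predict(params, X[tune]) == y[tune]).mean()
        if acc > best_acc:
            best_acc, best_params = acc, [p.copy() for p in params]

    return best_params, best_acc
```

With the 135 training vectors stacked in `X` (shape 135 by 49) and integer labels 0 to 2 in `y`, `train_mlp(X, y)` returns the weights and their tuning-set accuracy; `predict` can then be applied to the 45 testing vectors. Standardising each feature beforehand is usually advisable, since the centroid and bandwidth are in Hz while the other features are near unit scale.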

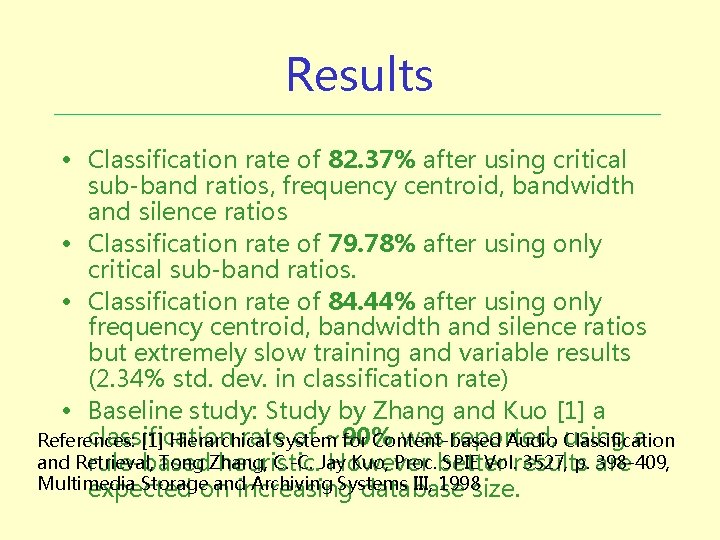

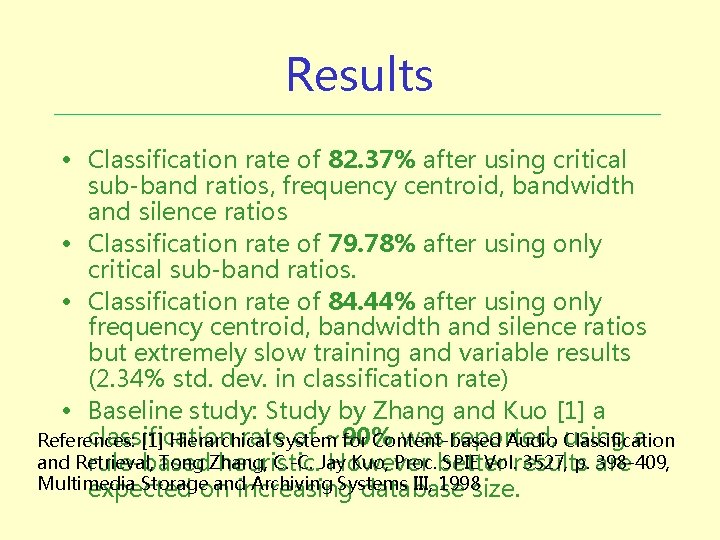

Results
• Classification rate of 82.37% after using critical sub-band ratios, frequency centroid, bandwidth and silence ratios
• Classification rate of 79.78% after using only critical sub-band ratios
• Classification rate of 84.44% after using only frequency centroid, bandwidth and silence ratios, but with extremely slow training and variable results (2.34% std. dev. in classification rate)
• Baseline study: In a study by Zhang and Kuo [1], a classification rate of ~90% was reported, using a rule-based heuristic. However, better results are expected on increasing database size.

References:
[1] Tong Zhang, C.-C. Jay Kuo, Hierarchical System for Content-based Audio Classification and Retrieval, Proc. SPIE Vol. 3527, p. 398-409, Multimedia Storage and Archiving Systems III, 1998