Attention is not Explanation, NAACL 2019, Sarthak Jain

- Slides: 18

Attention is not Explanation (NAACL 2019) Sarthak Jain, Byron C. Wallace, Northeastern University

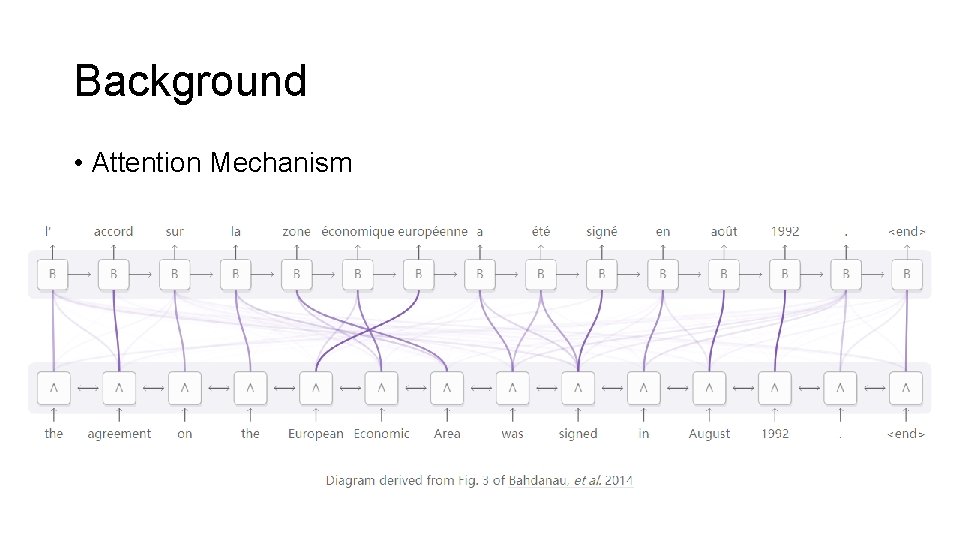

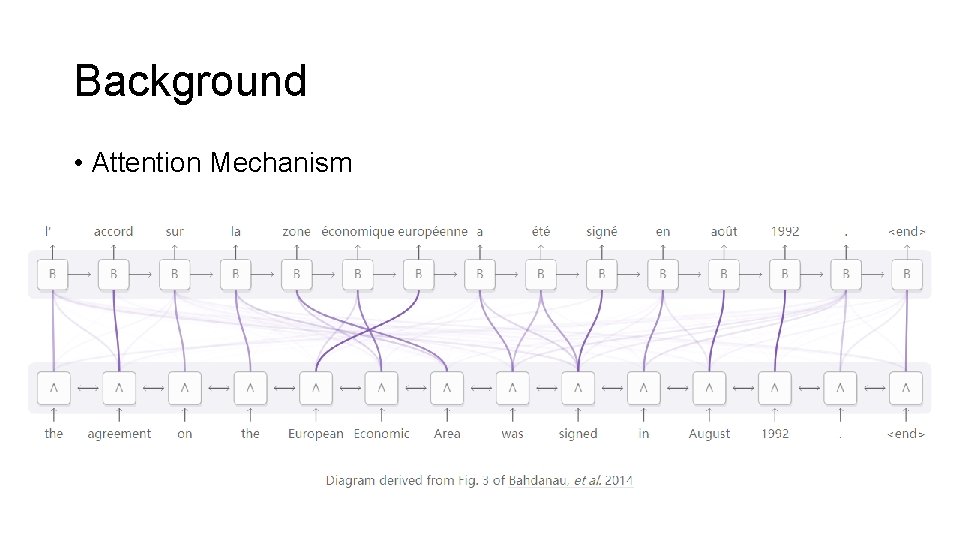

Background • Attention Mechanism

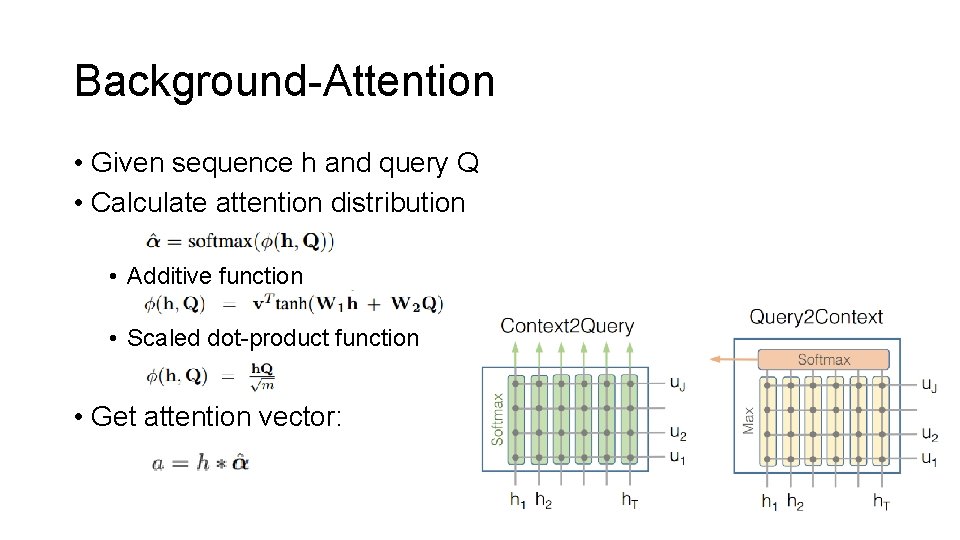

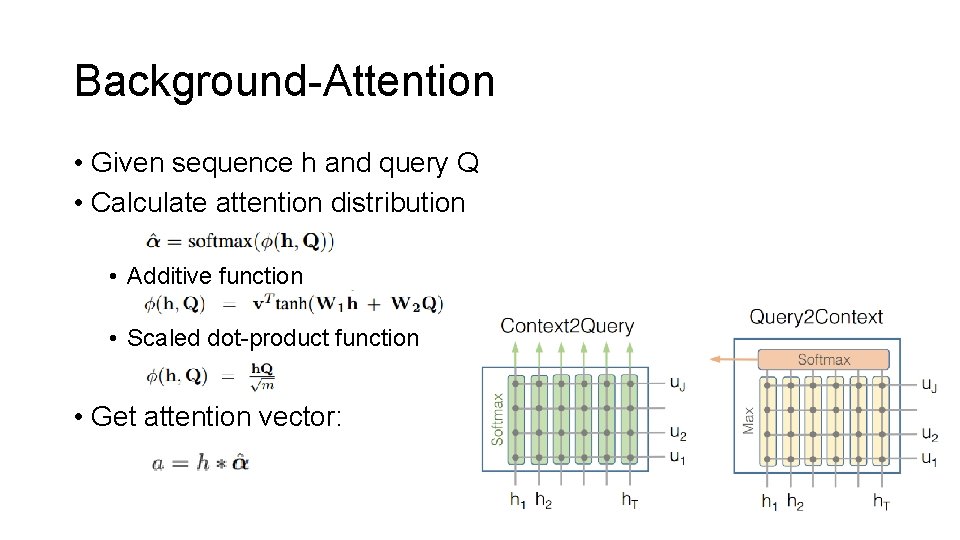

Background-Attention • Given a sequence of hidden states h and a query Q • Calculate the attention distribution with a scoring function: • Additive function • Scaled dot-product function • Get the attention vector:

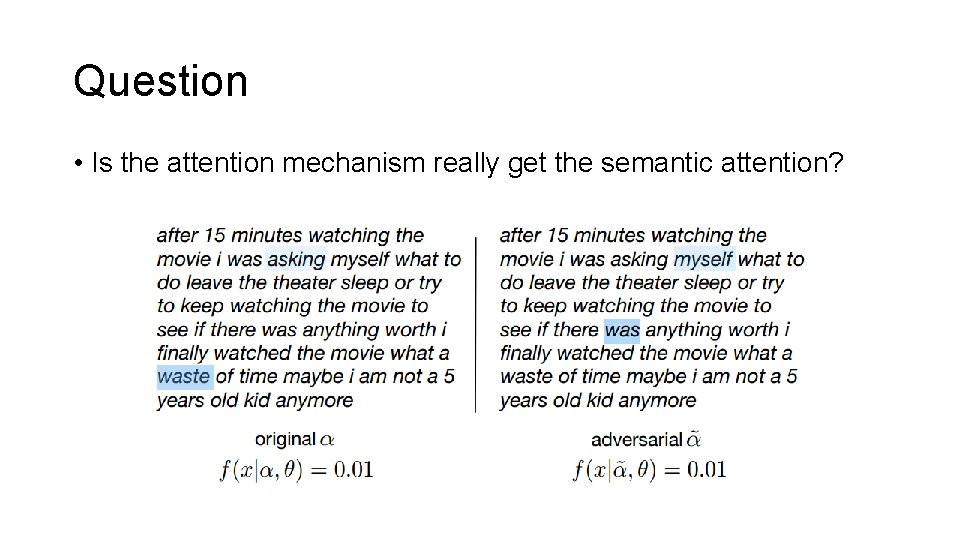

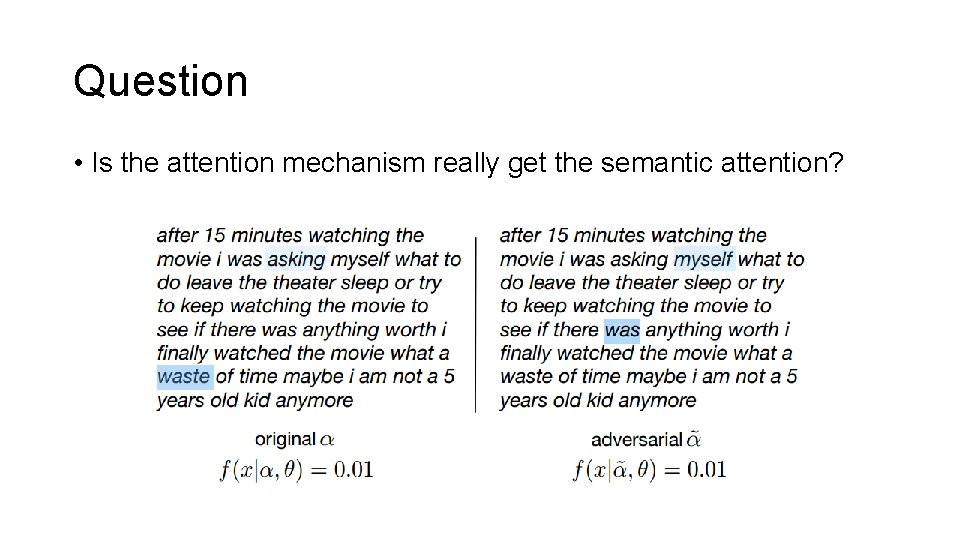
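As a rough illustration of the two scoring functions, the sketch below assumes the usual formulation: additive attention φ(h_t, Q) = vᵀ tanh(W1·h_t + W2·Q), scaled dot-product attention φ(h_t, Q) = (h_t · Q) / √m with m the hidden size, then α = softmax(φ) and the attention vector Σ_t α_t h_t. It is plain Python with toy values; W1, W2, v and every helper name are illustrative assumptions, not taken from the slides.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_score(h_t: Vector, Q: Vector, W1: Matrix, W2: Matrix, v: Vector) -> float:
    """Additive score v^T tanh(W1 h_t + W2 Q); W1, W2, v are illustrative parameters."""
    hidden = [math.tanh(a + b) for a, b in zip(matvec(W1, h_t), matvec(W2, Q))]
    return sum(vi * hi for vi, hi in zip(v, hidden))


def scaled_dot_score(h_t: Vector, Q: Vector) -> float:
    """Scaled dot-product score (h_t . Q) / sqrt(m), where m is the hidden size."""
    return sum(a * b for a, b in zip(h_t, Q)) / math.sqrt(len(h_t))


def attend(h: List[Vector], scores: Vector) -> Tuple[Vector, Vector]:
    """Turn scores into weights alpha and return (alpha, sum_t alpha_t * h_t)."""
    alpha = softmax(scores)
    context = [sum(a * h_t[k] for a, h_t in zip(alpha, h)) for k in range(len(h[0]))]
    return alpha, context


if __name__ == "__main__":
    h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy hidden states: T = 3, m = 2
    Q = [1.0, 0.0]                            # toy query vector
    alpha, context = attend(h, [scaled_dot_score(h_t, Q) for h_t in h])
    print(alpha, context)
```

Either scoring function plugs into `attend`; only the way raw scores are produced differs.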

Question • Does the attention mechanism really capture semantically meaningful attention?

Does attention provide transparency? • Do attention weights correlate with measures of feature importance? • Would alternative attention weights necessarily yield different predictions?

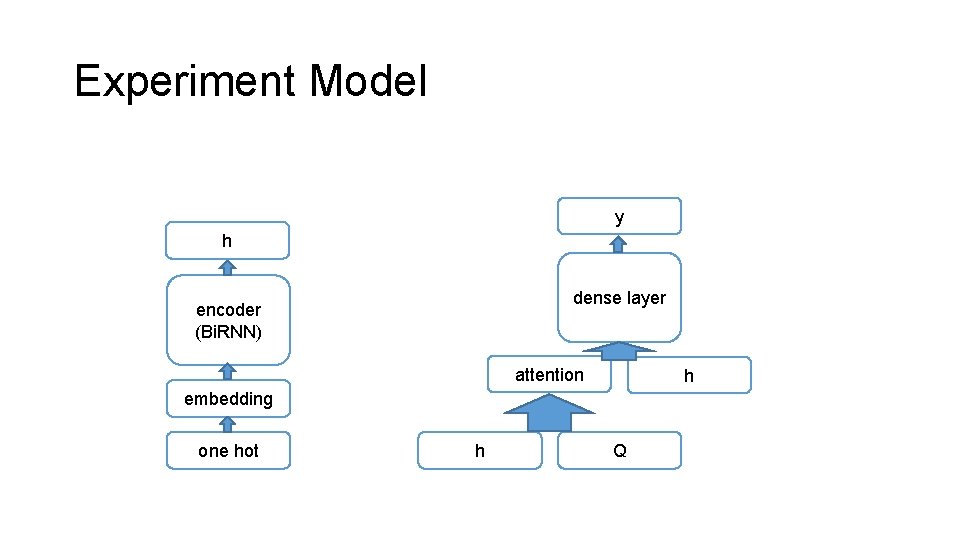

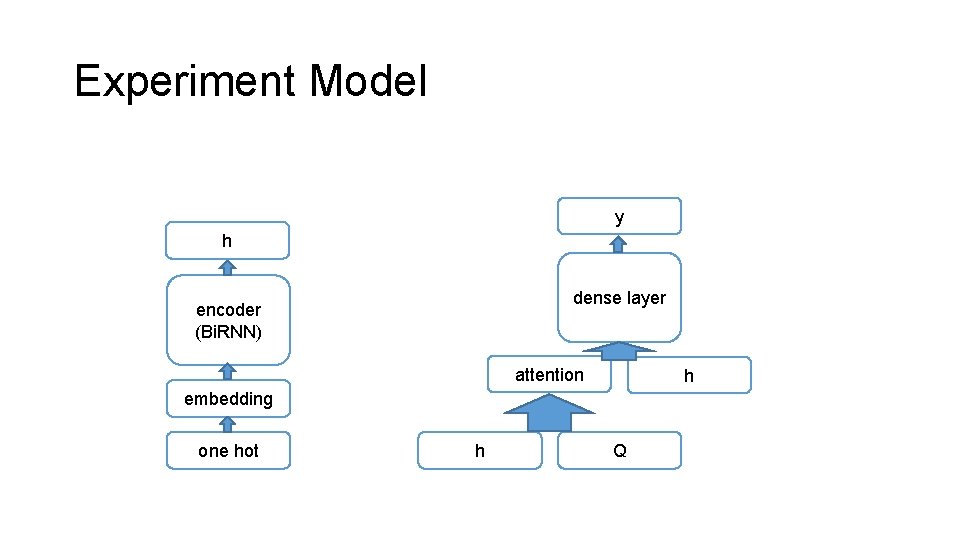

Experiment Model • one-hot input → embedding → encoder (BiRNN) → hidden states h → attention (with query Q) → dense layer → output y

Dataset

Correlation with Feature Importance • Gradient based measure • Leave one feature out
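Both importance measures can be compared against attention with a rank correlation. The sketch below assumes Kendall's τ (the simple tau-a variant) as that correlation and, for leave-one-out, the total variation distance (TVD) between outputs with and without a token. The classifier (`WEIGHTS`, `predict`) is a hypothetical bag-of-words toy, and `gradient_importance` uses an analytic gradient with respect to a per-token gate instead of a gradient through a real encoder.

```python
import math
from typing import Dict, List

# Hypothetical toy classifier: per-token weights are summed and squashed.
WEIGHTS: Dict[str, float] = {"the": 0.1, "great": 2.0, "movie": 0.2, "boring": -1.5}


def predict(tokens: List[str]) -> float:
    """Probability of the positive class under the toy model."""
    z = sum(WEIGHTS.get(t, 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-z))


def leave_one_out(tokens: List[str]) -> List[float]:
    """Importance of token i = TVD between outputs with and without it.

    For a binary output [p, 1 - p] the TVD reduces to |p - q|.
    """
    p = predict(tokens)
    return [abs(p - predict(tokens[:i] + tokens[i + 1:])) for i in range(len(tokens))]


def gradient_importance(tokens: List[str]) -> List[float]:
    """|dp/dg_t| for a gate g_t = 1 scaling token t's weight: p(1 - p)|w_t|."""
    p = predict(tokens)
    return [abs(p * (1.0 - p) * WEIGHTS.get(t, 0.0)) for t in tokens]


def kendall_tau(x: List[float], y: List[float]) -> float:
    """Kendall tau-a: (concordant - discordant) / pairs; tied pairs count as neither."""
    n = len(x)
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            s = (x[i] - x[j]) * (y[i] - y[j])
            if s > 0:
                concordant += 1
            elif s < 0:
                discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


if __name__ == "__main__":
    tokens = ["the", "great", "movie"]
    attention = [0.5, 0.3, 0.2]  # hypothetical attention weights for these tokens
    print(kendall_tau(attention, leave_one_out(tokens)))
    print(kendall_tau(attention, gradient_importance(tokens)))
```

A τ near 1 would mean attention ranks tokens the same way as the importance measure; values near 0 or below indicate weak or inverted agreement.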

Result for Correlation • Gradients • Colors: orange = positive, purple = negative; for three-class NLI, orange, purple, green = neutral, contradiction, entailment

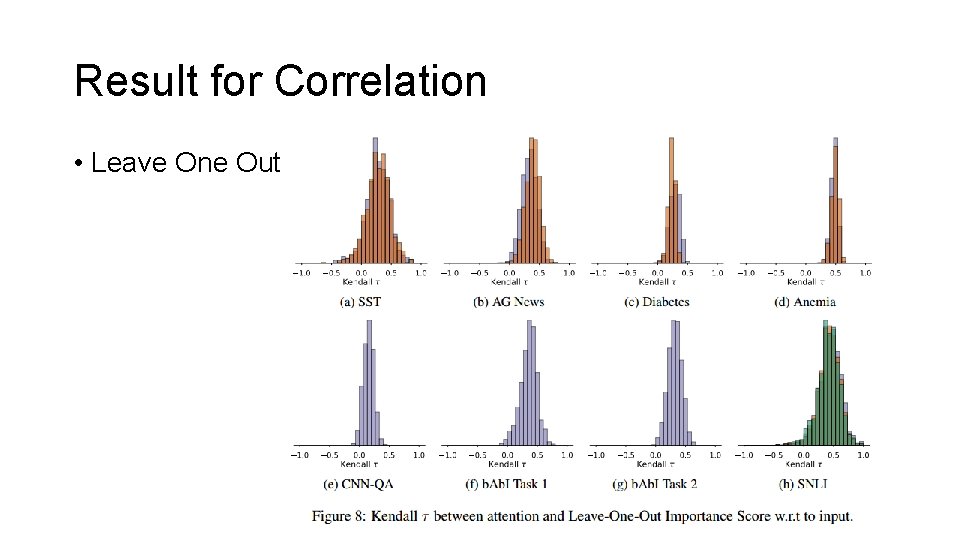

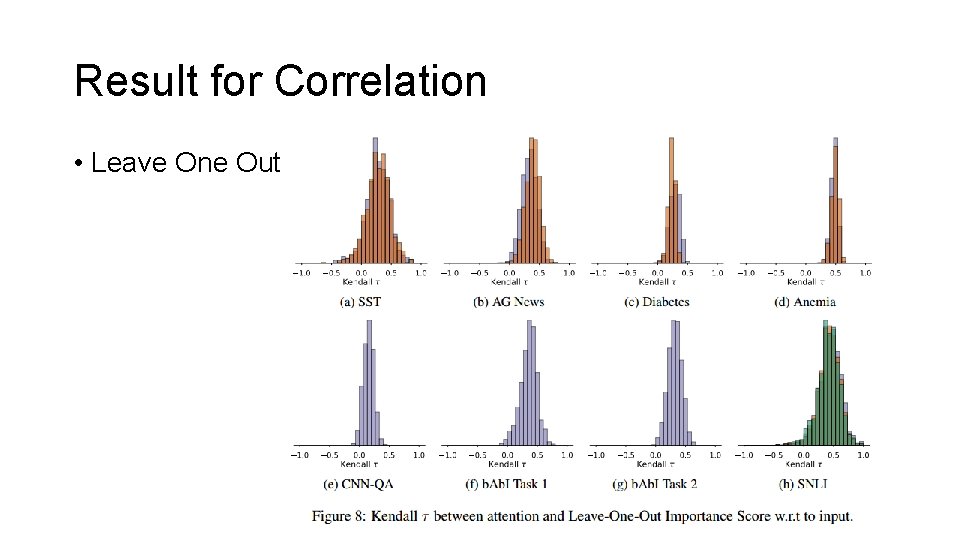

Result for Correlation • Leave One Out

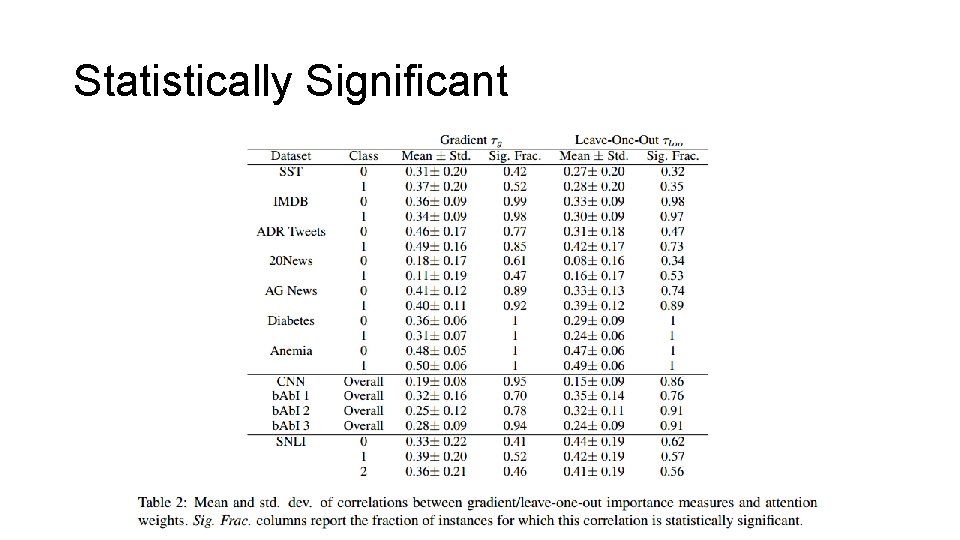

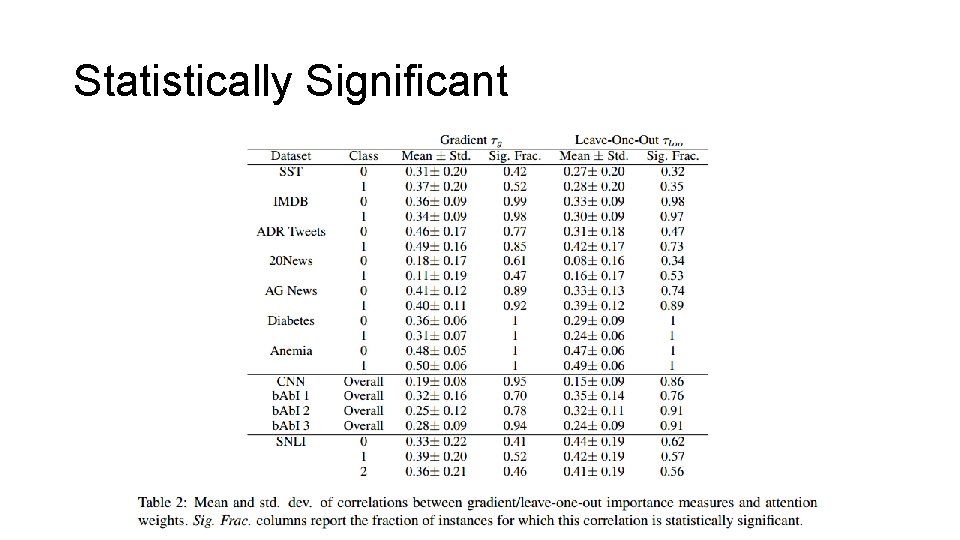

Statistically Significant

Random Attention Weights

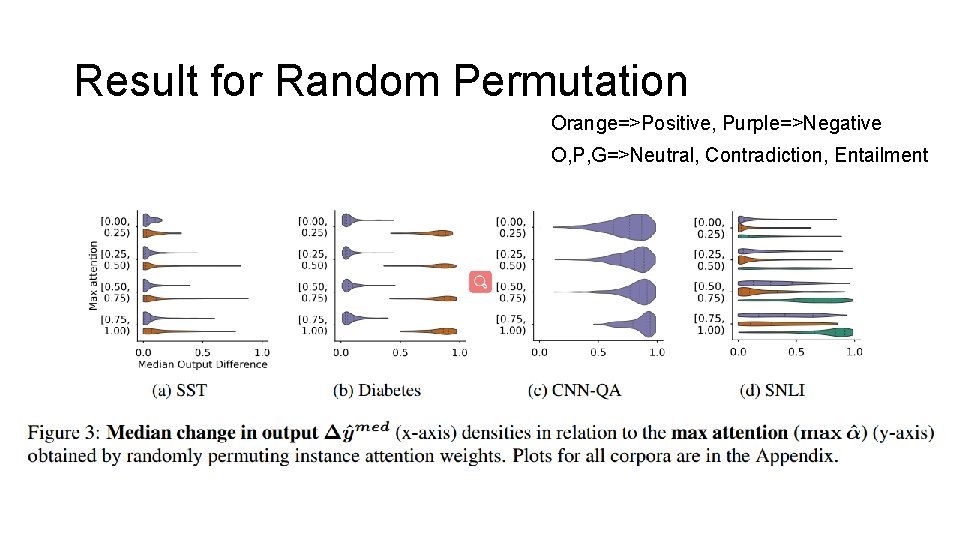

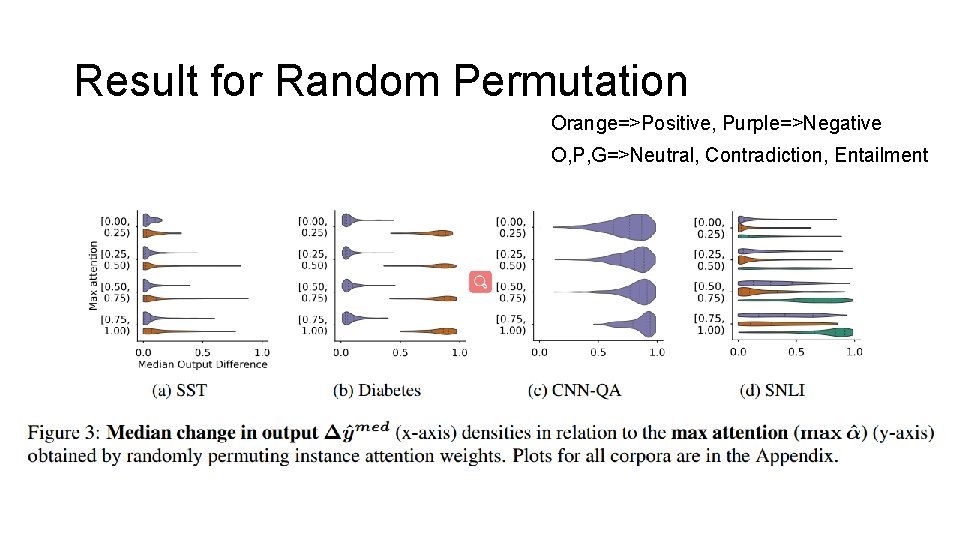
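The random-weights experiment can be sketched as follows, assuming the hidden states h are held fixed, the attention weights are shuffled across positions, and the effect is summarized as the median TVD between the original and permuted output distributions. `output_dist` is a hypothetical one-layer decoder standing in for the dense layer of the experiment model.

```python
import math
import random
import statistics
from typing import List

Vector = List[float]


def tvd(p: Vector, q: Vector) -> float:
    """Total variation distance: half the L1 distance between two distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def output_dist(alpha: Vector, h: List[Vector], w: Vector) -> Vector:
    """Hypothetical decoder: sigmoid(w . sum_t alpha_t h_t), returned as [p, 1 - p]."""
    context = [sum(a * h_t[k] for a, h_t in zip(alpha, h)) for k in range(len(w))]
    z = sum(wk * ck for wk, ck in zip(w, context))
    p = 1.0 / (1.0 + math.exp(-z))
    return [p, 1.0 - p]


def permutation_effect(alpha: Vector, h: List[Vector], w: Vector,
                       n_perm: int = 100, seed: int = 0) -> float:
    """Median TVD between the original output and outputs under shuffled attention.

    Only the weights are reassigned to positions; h and w stay fixed.
    """
    rng = random.Random(seed)
    base = output_dist(alpha, h, w)
    diffs = []
    for _ in range(n_perm):
        permuted = alpha[:]  # copy so the original weights are untouched
        rng.shuffle(permuted)
        diffs.append(tvd(base, output_dist(permuted, h, w)))
    return statistics.median(diffs)


if __name__ == "__main__":
    h = [[5.0, 0.0], [-5.0, 0.0], [0.0, 0.0]]  # toy hidden states
    print(permutation_effect([0.8, 0.1, 0.1], h, [1.0, 0.0]))
```

A median TVD close to 0 means the prediction barely depends on which position receives which weight.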

Result for Random Permutation • Colors: orange = positive, purple = negative; for three-class NLI, orange, purple, green = neutral, contradiction, entailment

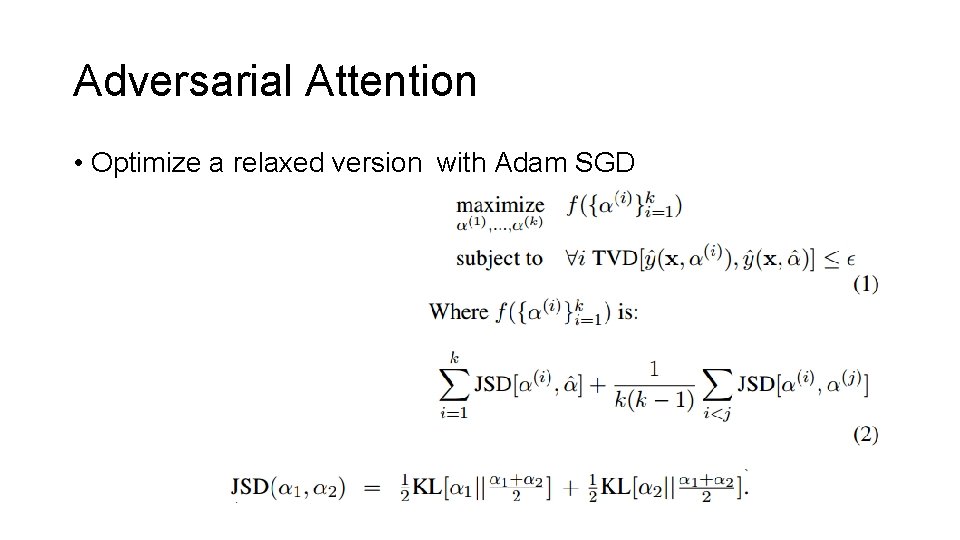

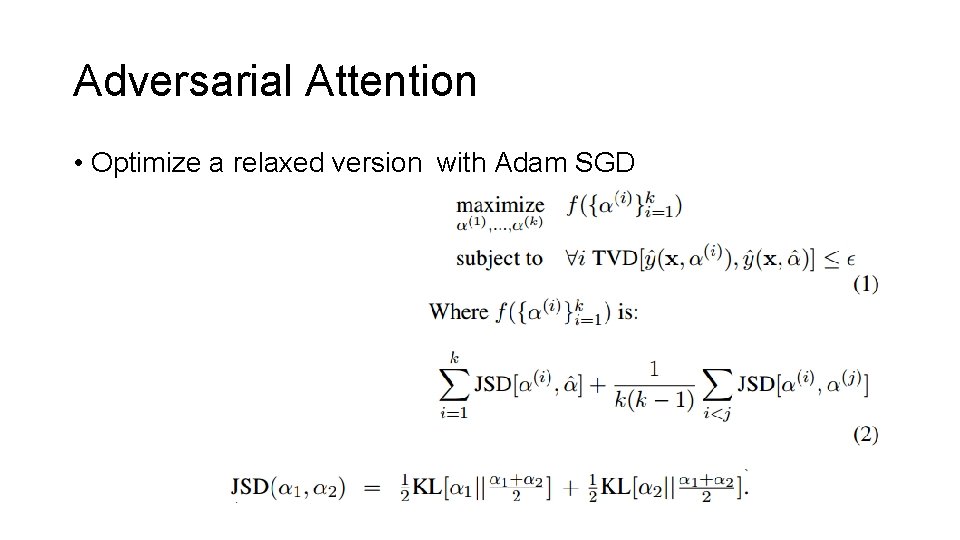

Adversarial Attention • Optimize a relaxed version of the constrained objective with the Adam SGD optimizer

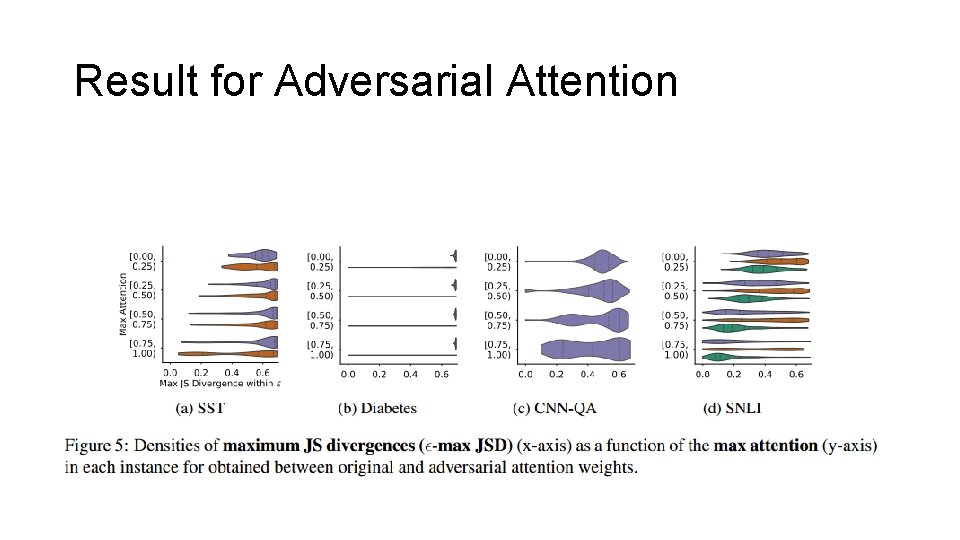

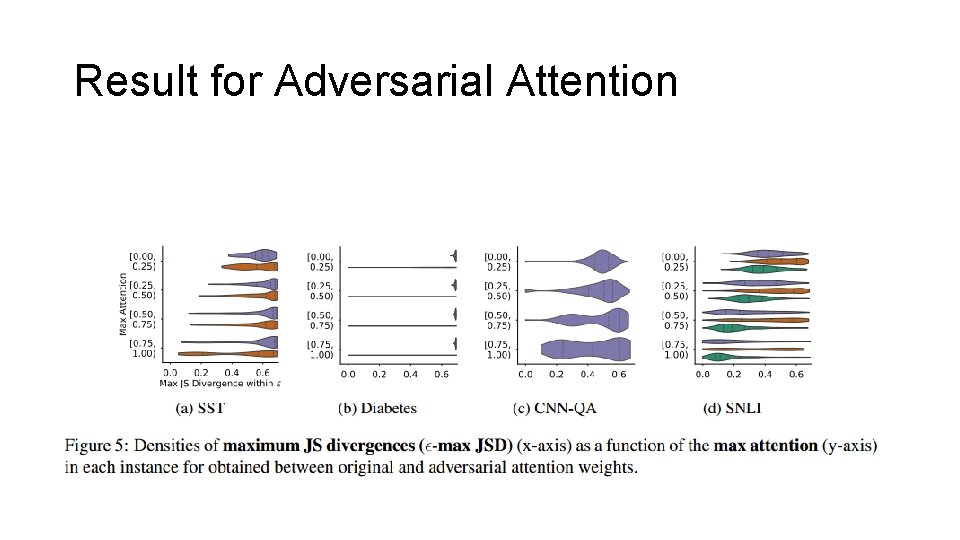
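Adversarial attention, as we understand the setup, looks for weights that are as different as possible from the original (measured with Jensen-Shannon divergence, JSD) while the prediction stays within ε of the original in TVD. The slides optimize a relaxed version of this with Adam; the sketch below deliberately swaps that for a simple random search over uniform samples from the probability simplex, which is easier to follow but is not the paper's optimizer. `output_dist`, `adversarial_search`, and `eps` are hypothetical names.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def kl(p: Vector, q: Vector) -> float:
    """KL(p || q) in nats; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jsd(p: Vector, q: Vector) -> float:
    """Jensen-Shannon divergence in nats, bounded above by ln 2."""
    m = [(a + b) / 2 for a, b in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def tvd(p: Vector, q: Vector) -> float:
    """Total variation distance between two distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def output_dist(alpha: Vector, h: List[Vector], w: Vector) -> Vector:
    """Hypothetical decoder: sigmoid(w . sum_t alpha_t h_t), returned as [p, 1 - p]."""
    context = [sum(a * h_t[k] for a, h_t in zip(alpha, h)) for k in range(len(w))]
    p = 1.0 / (1.0 + math.exp(-sum(wk * ck for wk, ck in zip(w, context))))
    return [p, 1.0 - p]


def sample_simplex(n: int, rng: random.Random) -> Vector:
    """Uniform sample from the simplex (Dirichlet(1, ..., 1)) via normalized Gamma draws."""
    g = [rng.gammavariate(1.0, 1.0) for _ in range(n)]
    total = sum(g)
    return [x / total for x in g]


def adversarial_search(alpha: Vector, h: List[Vector], w: Vector, eps: float = 0.01,
                       n_samples: int = 2000, seed: int = 0) -> Tuple[Vector, float]:
    """Random-search stand-in: keep the sample with the largest JSD from alpha
    whose output stays within eps TVD of the original output."""
    rng = random.Random(seed)
    base = output_dist(alpha, h, w)
    best, best_jsd = list(alpha), 0.0
    for _ in range(n_samples):
        cand = sample_simplex(len(alpha), rng)
        if tvd(base, output_dist(cand, h, w)) <= eps:  # output constraint
            d = jsd(alpha, cand)
            if d > best_jsd:
                best, best_jsd = cand, d
    return best, best_jsd


if __name__ == "__main__":
    h = [[2.0, 0.0], [2.0, 0.0], [-1.0, 0.0]]  # toy hidden states
    print(adversarial_search([0.6, 0.3, 0.1], h, [1.0, 0.0]))
```

A large JSD found under a tight ε shows that very different attention distributions can leave the prediction essentially unchanged.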

Result for Adversarial Attention

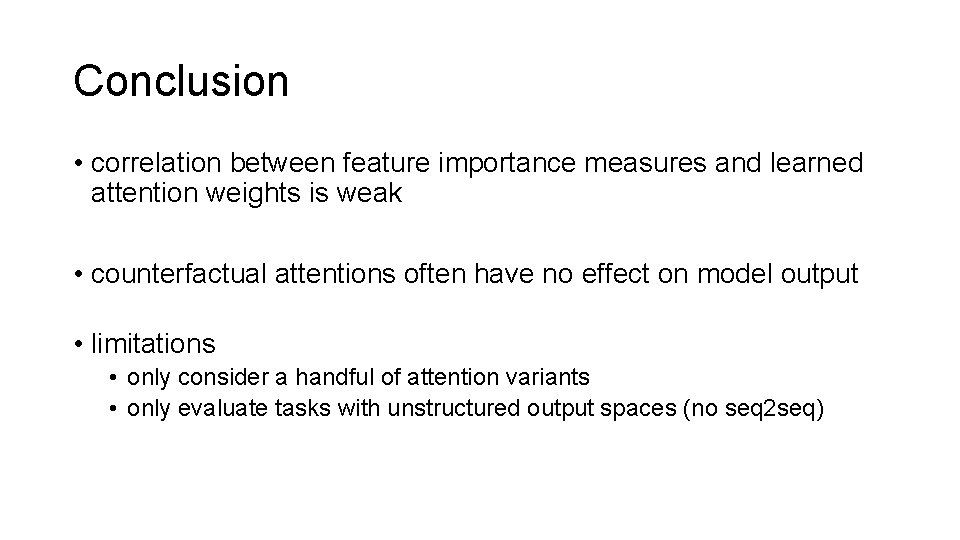

Conclusion • Correlation between feature importance measures and learned attention weights is weak • Counterfactual attention distributions often have no effect on model output • Limitations • Only a handful of attention variants are considered • Only tasks with unstructured output spaces are evaluated (no seq2seq)

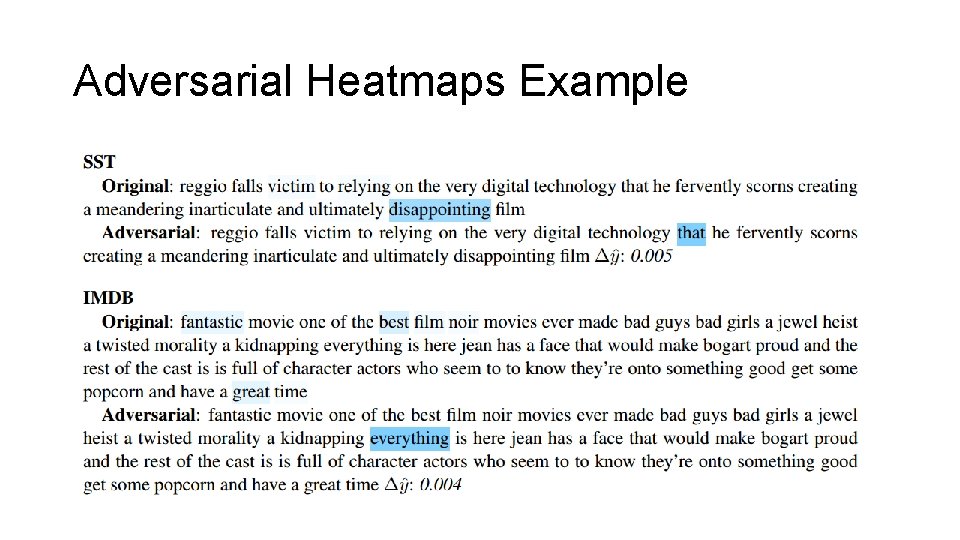

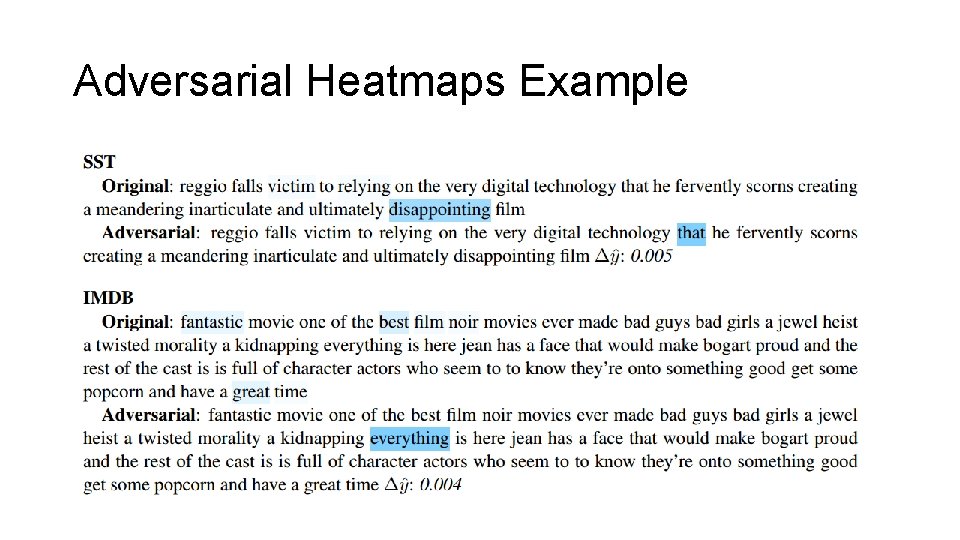

Adversarial Heatmaps Example

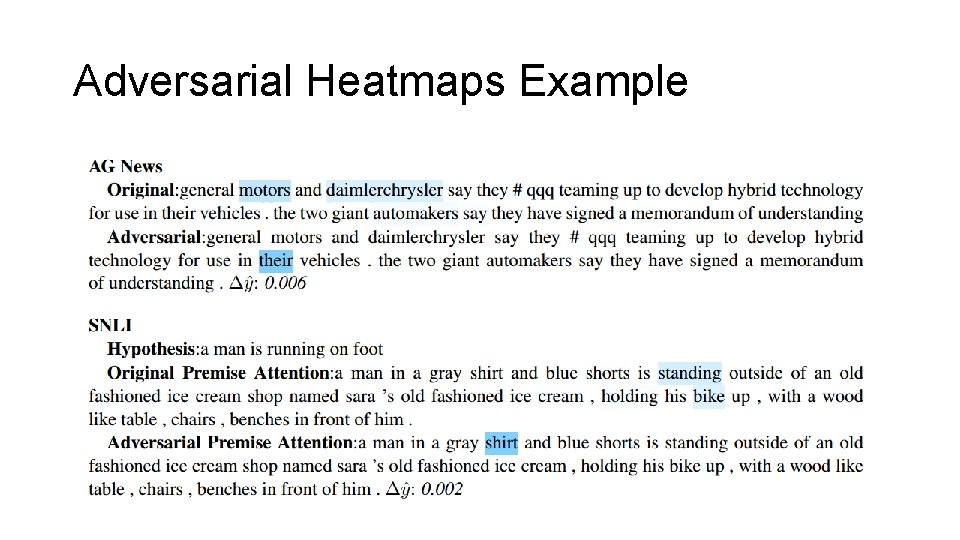

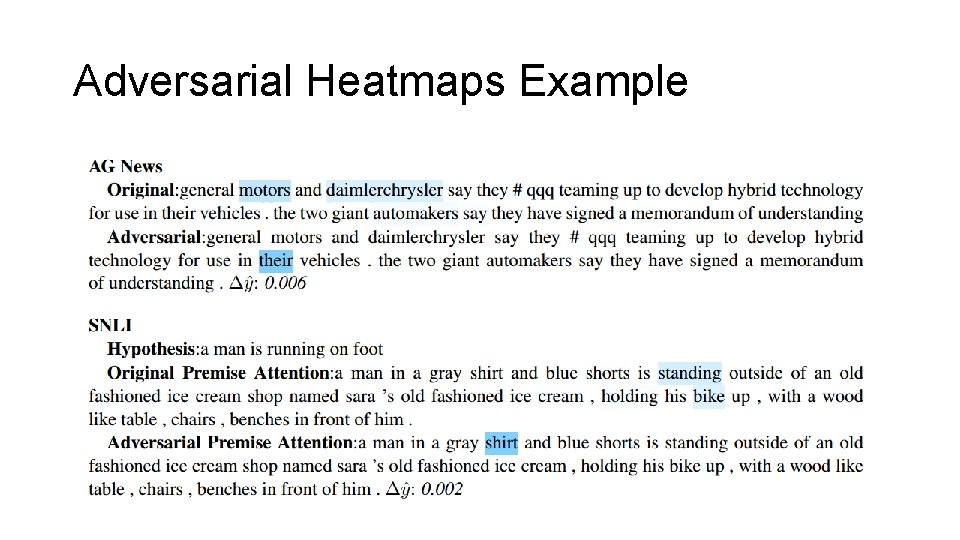

Adversarial Heatmaps Example
