Attention Is All You Need, Yun Kai Su

- Slides: 18

How can we efficiently obtain useful features to accomplish a task? Attention Is All You Need, Yun Kai Su, 2021/3/12

Translation: "I arrived at the bank after crossing the street" (versus "... after crossing the river"). Does "bank" mean a riverbank (岸邊) or a financial bank (銀行)?

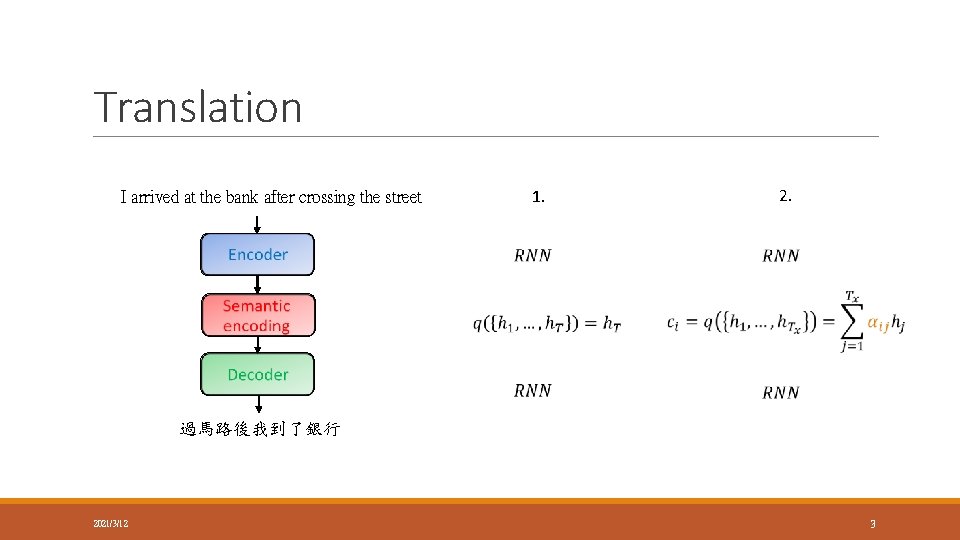

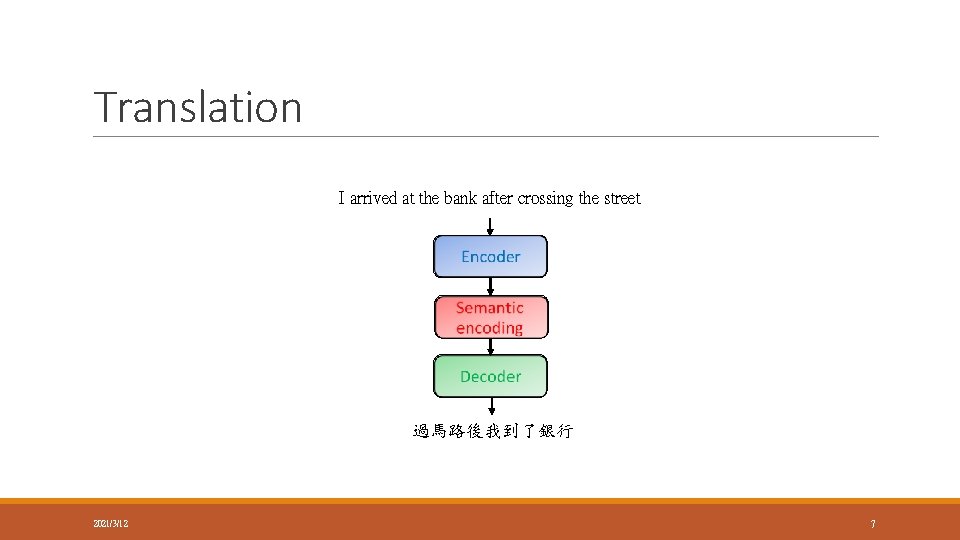

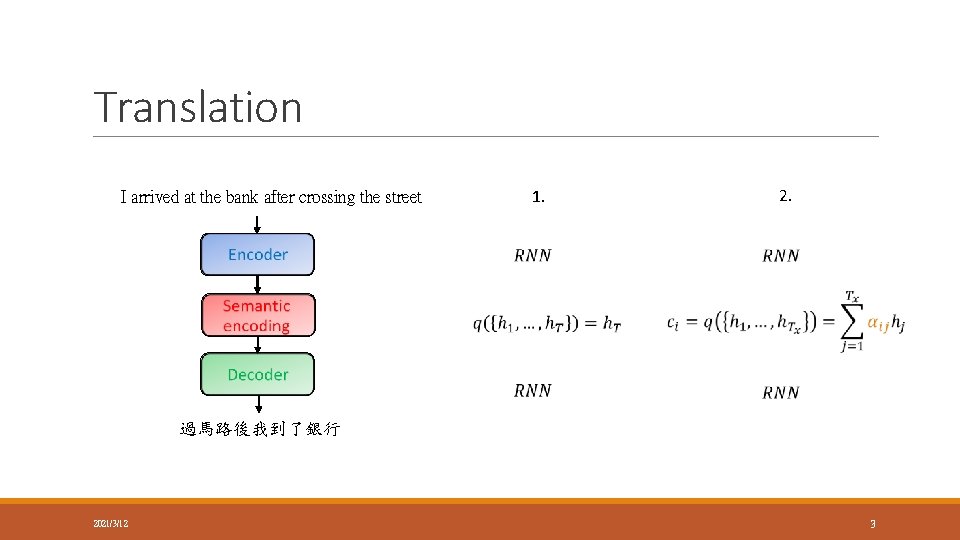

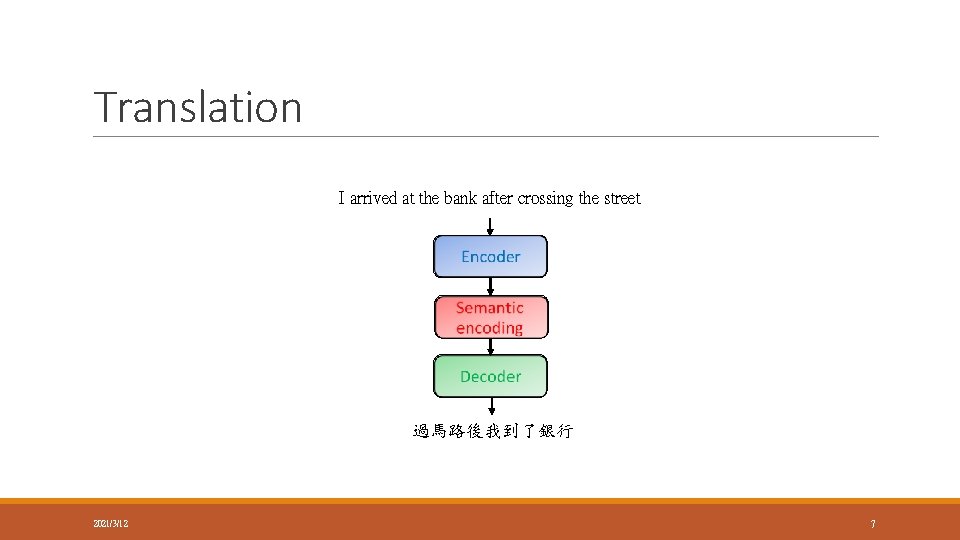

Translation: "I arrived at the bank after crossing the street" → 過馬路後我到了銀行 ("After crossing the street, I arrived at the [financial] bank").

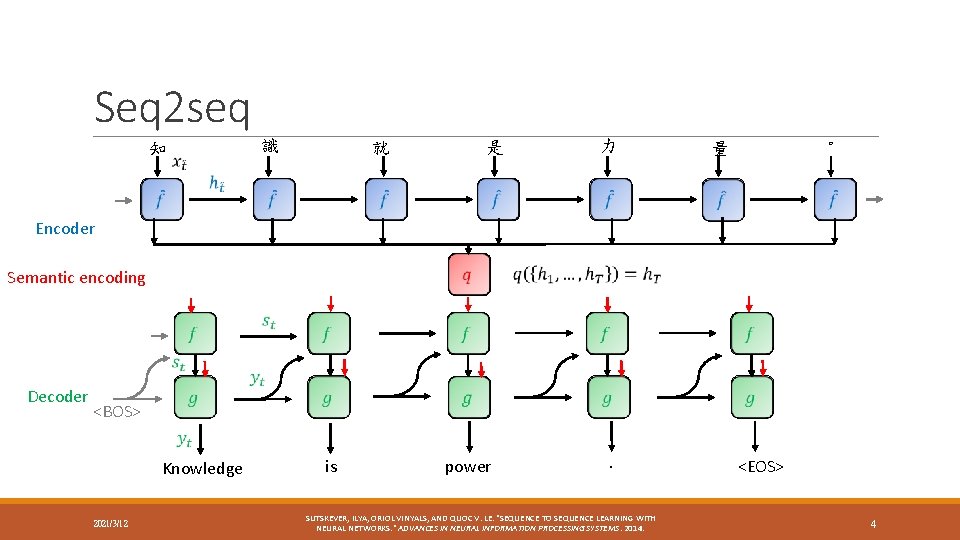

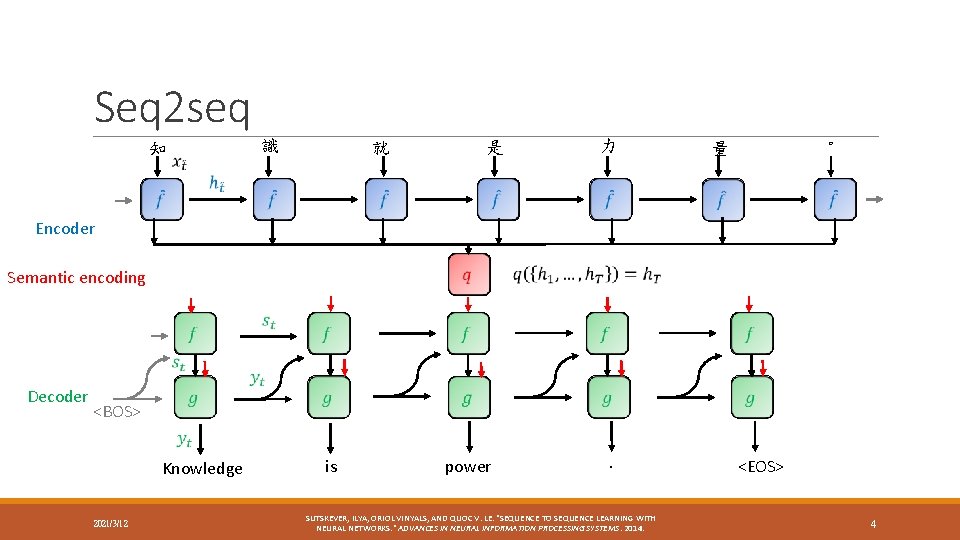

Seq2seq: the encoder compresses the input 知識就是力量。 into a single semantic encoding (a fixed-length vector), from which the decoder, starting at <BOS>, generates "Knowledge is power ." and then <EOS>.
Sutskever, Ilya, Oriol Vinyals, and Quoc V. Le. "Sequence to Sequence Learning with Neural Networks." Advances in Neural Information Processing Systems. 2014.

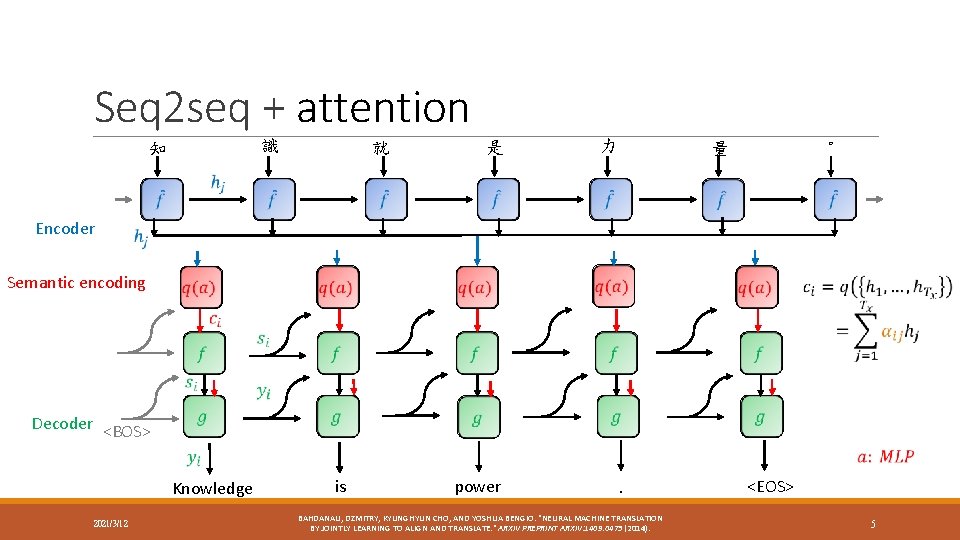

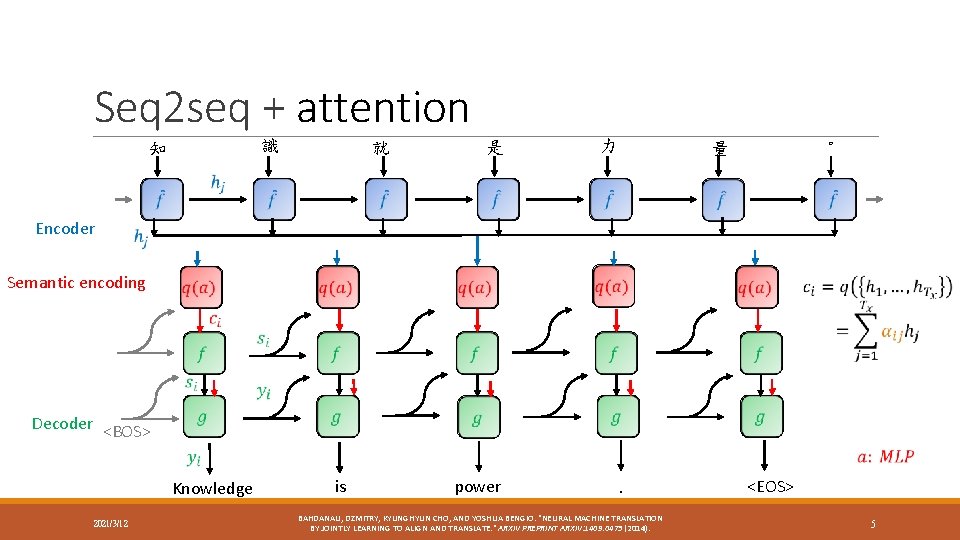

Seq2seq + attention: Encoder → semantic encoding → Decoder, translating 知識就是力量。 into <BOS> Knowledge is power . <EOS>.
Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. "Neural Machine Translation by Jointly Learning to Align and Translate." arXiv preprint arXiv:1409.0473 (2014).

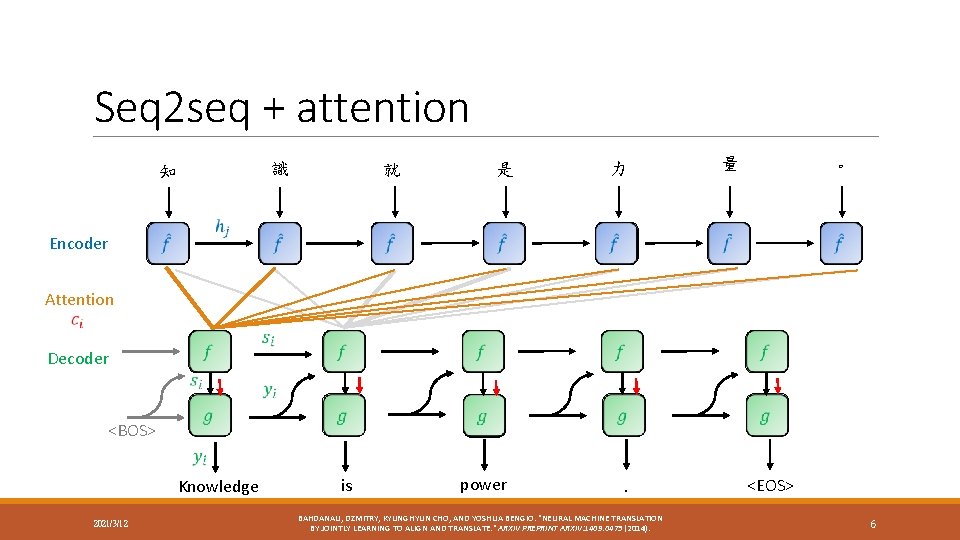

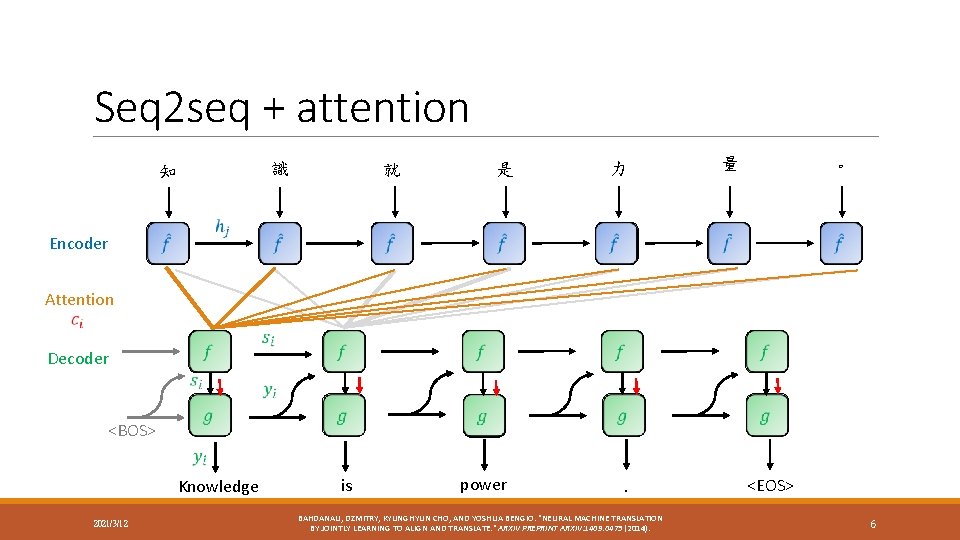

Seq2seq + attention: Encoder → attention → Decoder. Instead of relying on one fixed semantic encoding, the decoder attends over all encoder states at every output step while translating 知識就是力量。 into <BOS> Knowledge is power . <EOS> (Bahdanau et al., 2014).
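The attention step on this slide can be sketched for a single decoder step. This is a minimal pure-Python illustration of additive (Bahdanau-style) attention, assuming the score e_j = vᵀ tanh(W s + U h_j), where s is the previous decoder state and h_j are the encoder states. The matrices W, U, v and all toy vectors are hypothetical values chosen for illustration, not trained parameters.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(M, x):
    # Multiply matrix M (list of rows) by vector x.
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]

def additive_attention(s, H, W, U, v):
    """One decoder step of additive attention.

    s: previous decoder state
    H: encoder states h_1..h_n
    W, U, v: hypothetical parameters of the scoring network
    Returns (attention weights, context vector).
    """
    Ws = matvec(W, s)
    scores = []
    for h in H:
        Uh = matvec(U, h)
        hidden = [math.tanh(a + b) for a, b in zip(Ws, Uh)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    alpha = softmax(scores)
    # Context vector: attention-weighted sum of the encoder states.
    context = [sum(a * h[k] for a, h in zip(alpha, H)) for k in range(len(H[0]))]
    return alpha, context

# Toy example (hypothetical numbers): 3 encoder states of size 2.
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
s = [0.5, -0.5]
W = [[1.0, 0.0], [0.0, 1.0]]
U = [[1.0, 0.0], [0.0, 1.0]]
v = [1.0, -1.0]
alpha, context = additive_attention(s, H, W, U, v)
print(alpha, context)
```

A new context vector is computed at every decoder step, which is what lets the decoder look back at different source words for each output word.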

Translation: "I arrived at the bank after crossing the street" → 過馬路後我到了銀行

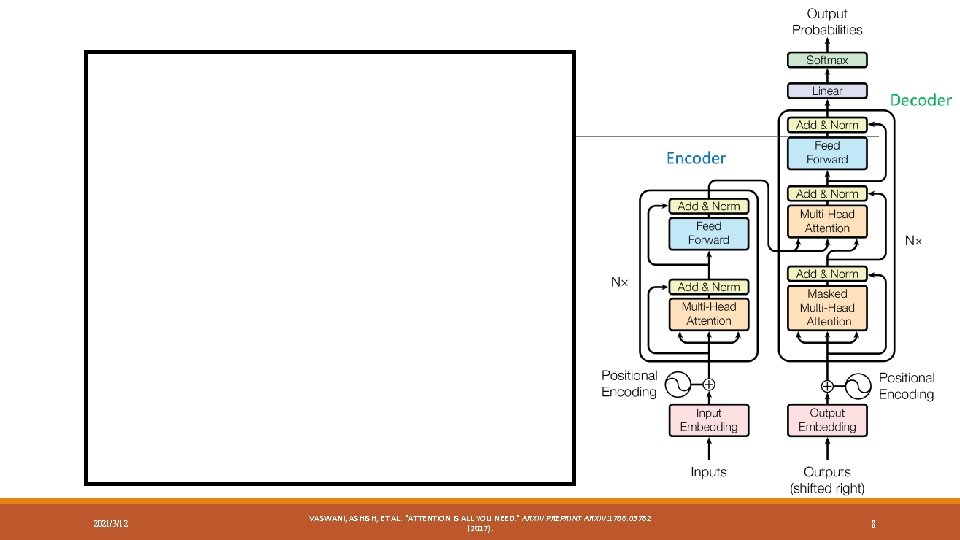

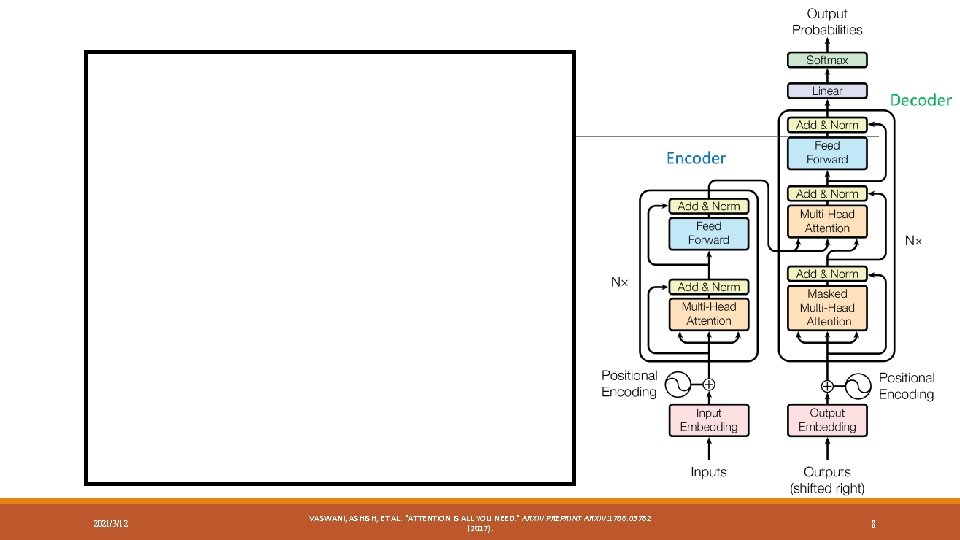

Vaswani, Ashish, et al. "Attention Is All You Need." arXiv preprint arXiv:1706.03762 (2017).

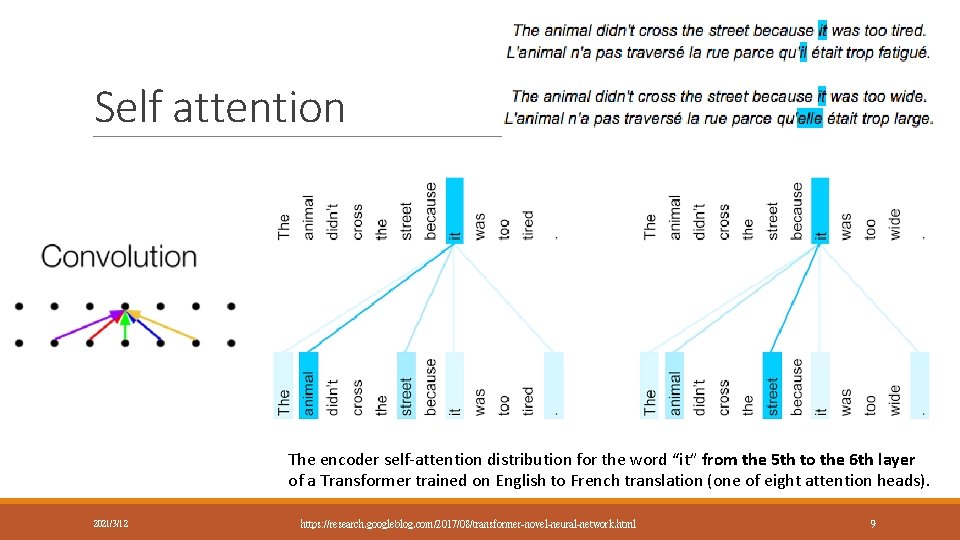

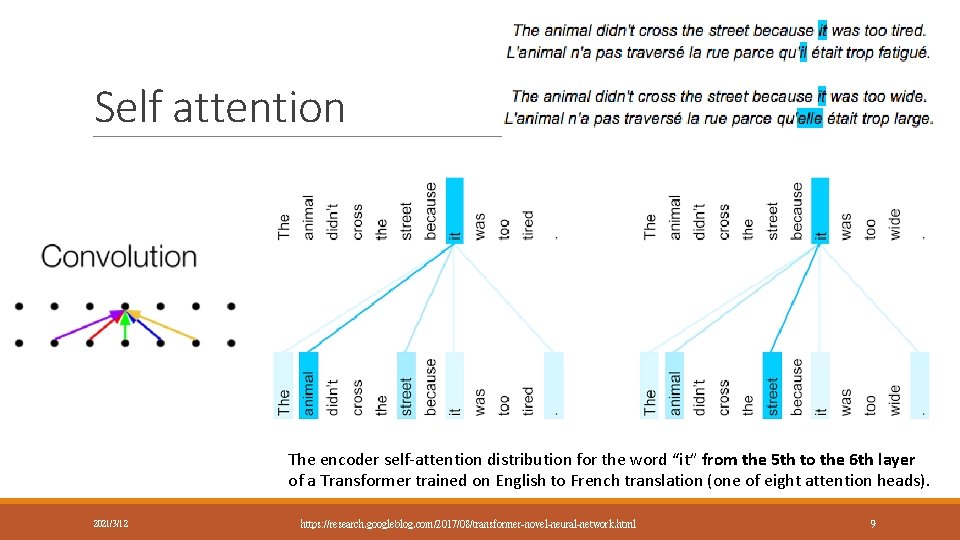

Self-attention: the encoder self-attention distribution for the word "it" from the 5th to the 6th layer of a Transformer trained on English-to-French translation (one of eight attention heads). Source: https://research.googleblog.com/2017/08/transformer-novel-neural-network.html

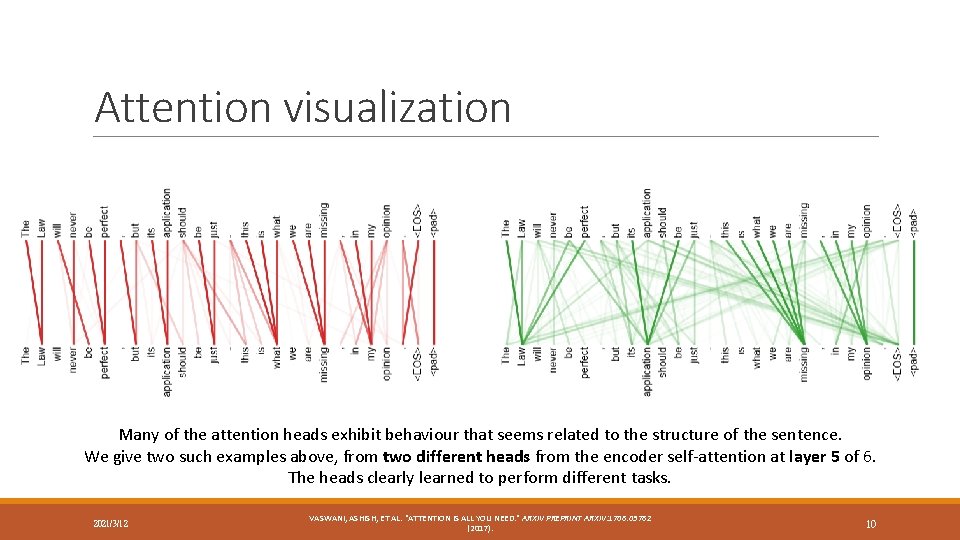

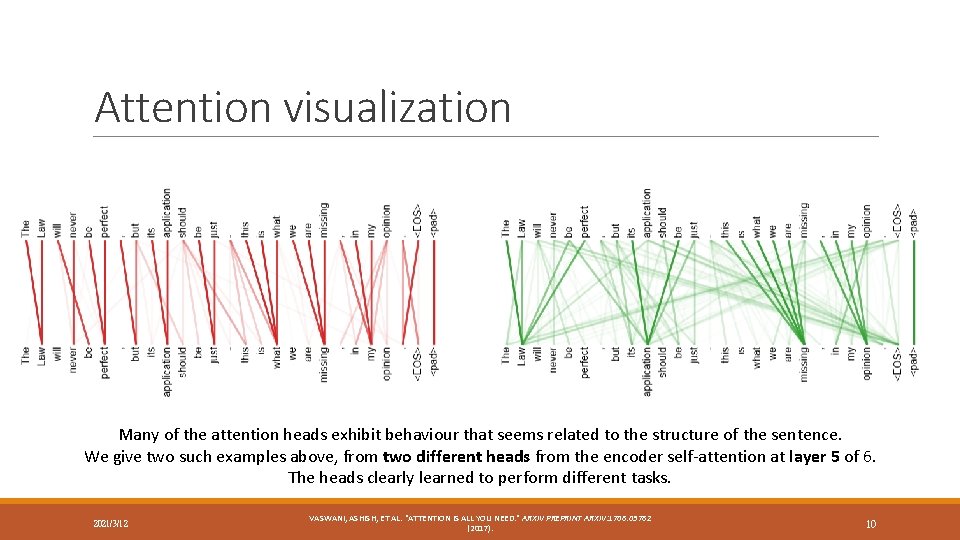

Attention visualization: "Many of the attention heads exhibit behaviour that seems related to the structure of the sentence. We give two such examples above, from two different heads from the encoder self-attention at layer 5 of 6. The heads clearly learned to perform different tasks." (Vaswani et al., 2017)

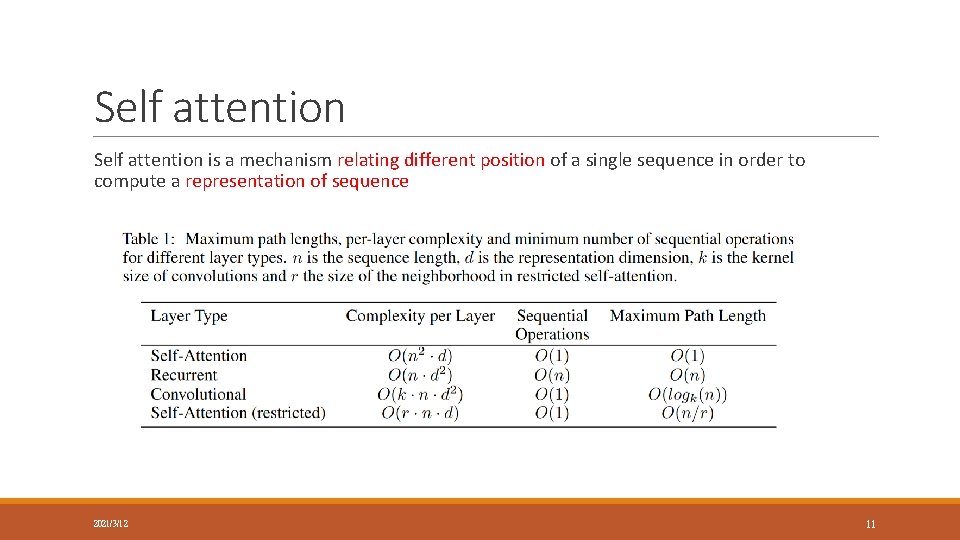

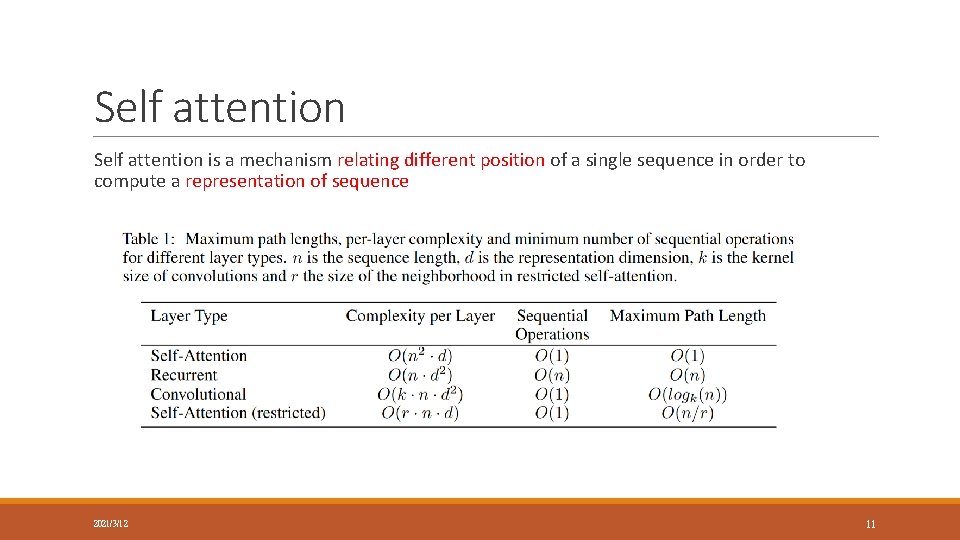

Self-attention is an attention mechanism that relates different positions of a single sequence in order to compute a representation of that sequence.
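Self-attention can be sketched with the scaled dot-product attention of Vaswani et al., Attention(Q, K, V) = softmax(QKᵀ/√d_k)V. This minimal pure-Python version is an illustration only: it assumes Q = K = V = X (no learned projection matrices), so every position of the single sequence X is compared with every other position, and it uses hypothetical 2-d toy embeddings.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with matrices stored as lists of row vectors."""
    d_k = len(K[0])
    weights = []
    for q in Q:
        # One score per key: dot product scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights.append(softmax(scores))
    # Each output row is a weighted average of the value rows.
    out = [[sum(w * v[j] for w, v in zip(row, V)) for j in range(len(V[0]))]
           for row in weights]
    return out, weights

# Self-attention: queries, keys and values all come from the same sequence X.
# Simplification (assumption): no learned projections, so Q = K = V = X.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 tokens, toy 2-d embeddings
out, weights = scaled_dot_product_attention(X, X, X)
for row in weights:
    print([round(w, 3) for w in row])
```

Row i of `weights` shows how much position i attends to each position of the same sequence; every row sums to 1.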

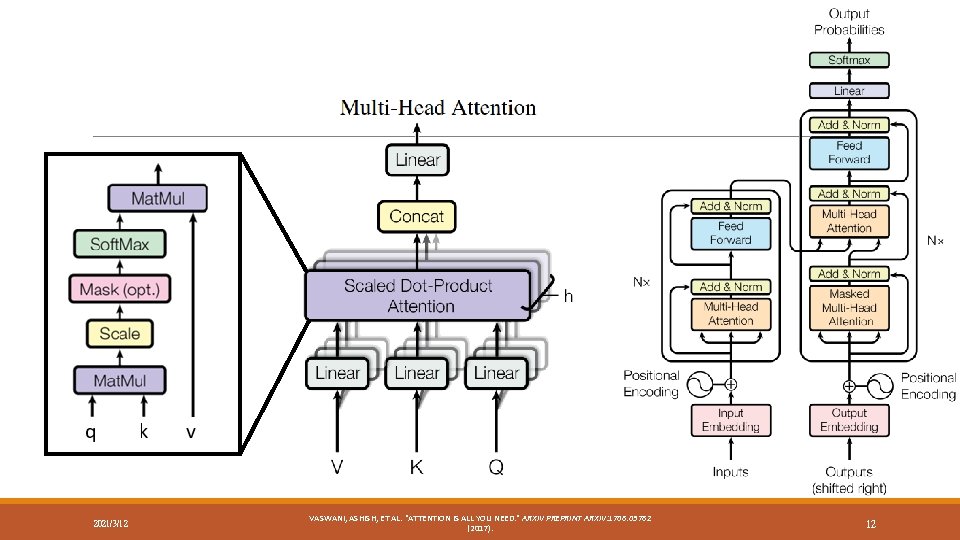

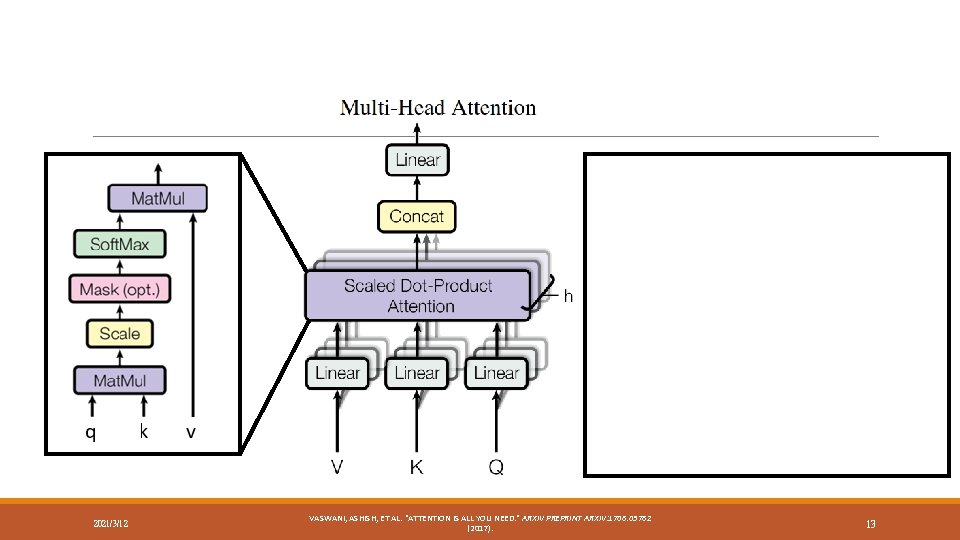

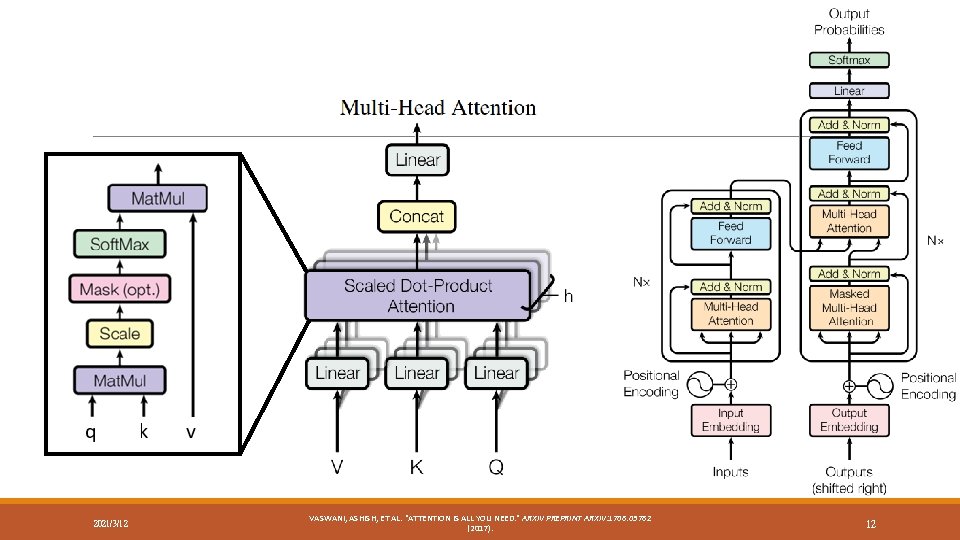

Source: Vaswani et al. (2017).

Source: Vaswani et al. (2017).

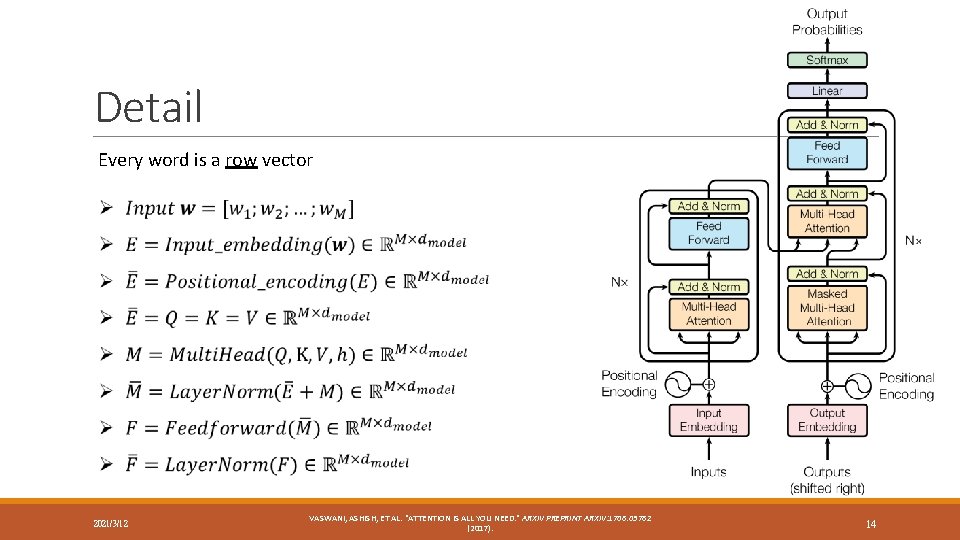

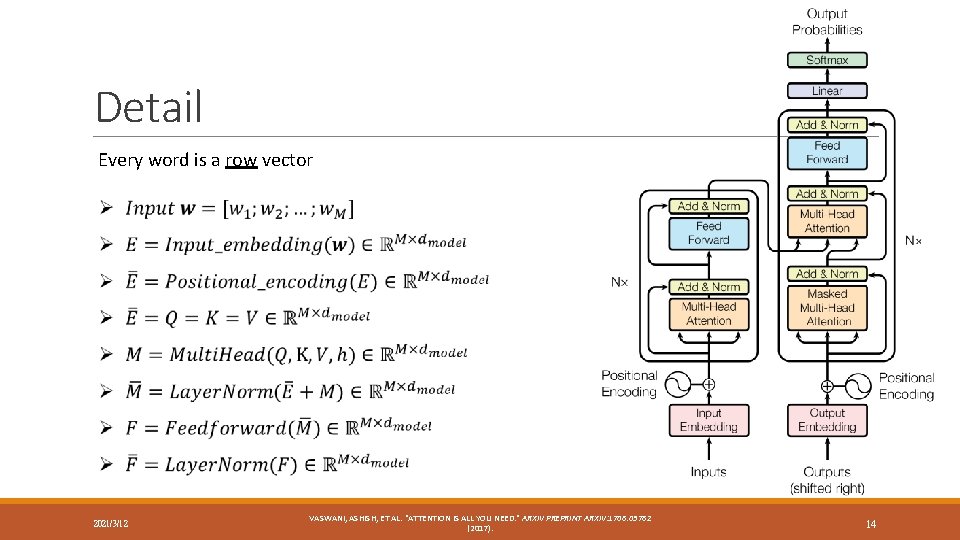

Detail: every word is a row vector (Vaswani et al., 2017).
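With every word stored as a row vector, the whole sentence becomes a matrix X with one row per word, and self-attention reduces to matrix products: Q = XW^Q, K = XW^K, V = XW^V, followed by softmax(QKᵀ/√d_k)V. The pure-Python sketch below assumes hypothetical sizes (4 words, d_model = 3, d_k = d_v = 2) and hand-picked weight values standing in for learned parameters.

```python
import math

def matmul(A, B):
    # (n x m) @ (m x p) -> (n x p); matrices are lists of rows.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def softmax_rows(S):
    # Row-wise softmax with max subtraction for numerical stability.
    out = []
    for row in S:
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def self_attention(X, W_q, W_k, W_v):
    """Each row of X is one word; returns (softmax(QK^T / sqrt(d_k)) V, weights)."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(W_k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, transpose(K))]
    A = softmax_rows(scores)  # n x n attention weights, one row per word
    return matmul(A, V), A

# Hypothetical toy sizes: n = 4 words, d_model = 3, d_k = d_v = 2.
X = [[1.0, 0.0, 1.0],
     [0.0, 2.0, 0.0],
     [1.0, 1.0, 1.0],
     [0.0, 0.0, 1.0]]
W_q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_k = [[0.5, 0.0], [0.0, 0.5], [0.5, 0.5]]
W_v = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
Z, A = self_attention(X, W_q, W_k, W_v)
print(len(Z), len(Z[0]))  # 4 2
```

Because all words are processed as rows of one matrix, every position is computed in the same few matrix products rather than step by step as in an RNN.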

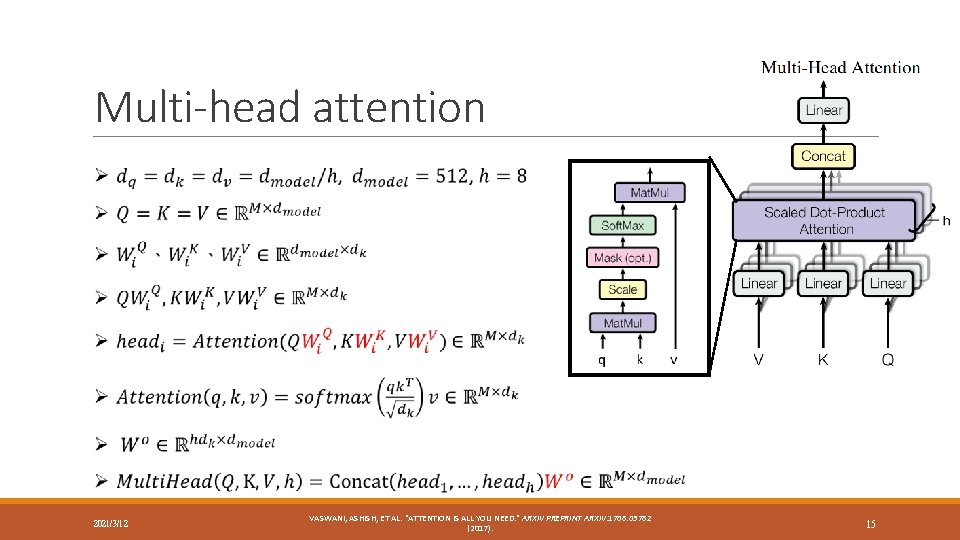

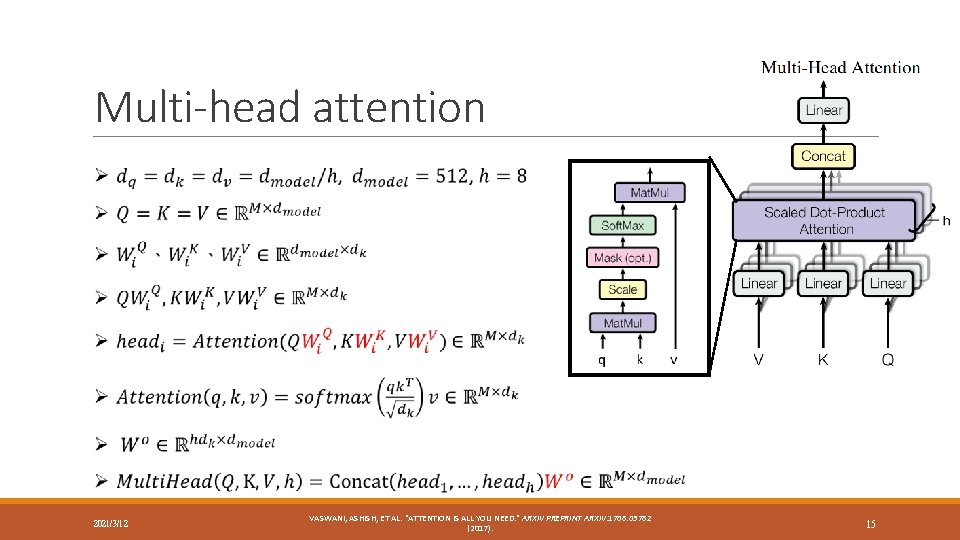

Multi-head attention (Vaswani et al., 2017)
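Multi-head attention runs h attention functions in parallel, each on its own projections, then concatenates the results and projects them with W^O: MultiHead(Q, K, V) = Concat(head_1, ..., head_h)W^O, where head_i = Attention(QW_i^Q, KW_i^K, VW_i^V). The sketch below is a pure-Python illustration of the self-attention case (Q = K = V = X); the random weights, the seed, and the sizes are hypothetical.

```python
import math
import random

def matmul(A, B):
    # (n x m) @ (m x p) -> (n x p); matrices are lists of rows.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def softmax_rows(S):
    out = []
    for row in S:
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def attention(Q, K, V):
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.
    d_k = len(K[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, transpose(K))]
    return matmul(softmax_rows(scores), V)

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def multi_head_self_attention(X, heads, W_o):
    """heads: list of (W_q, W_k, W_v), one tuple per head; returns Concat(heads) W_o."""
    outputs = [attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))
               for W_q, W_k, W_v in heads]
    # Concatenate the head outputs along the feature axis, word by word.
    concat = [sum((out[i] for out in outputs), []) for i in range(len(X))]
    return matmul(concat, W_o)

# Hypothetical sizes: n = 3 words, d_model = 4, h = 2 heads, d_k = d_v = d_model // h.
rng = random.Random(0)
n, d_model, h = 3, 4, 2
d_k = d_model // h
X = random_matrix(n, d_model, rng)
heads = [tuple(random_matrix(d_model, d_k, rng) for _ in range(3)) for _ in range(h)]
W_o = random_matrix(h * d_k, d_model, rng)
Y = multi_head_self_attention(X, heads, W_o)
print(len(Y), len(Y[0]))  # 3 4
```

Choosing d_k = d_model / h keeps the total cost close to that of a single full-width head, while letting each head learn a different attention pattern, as in the visualization slide.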

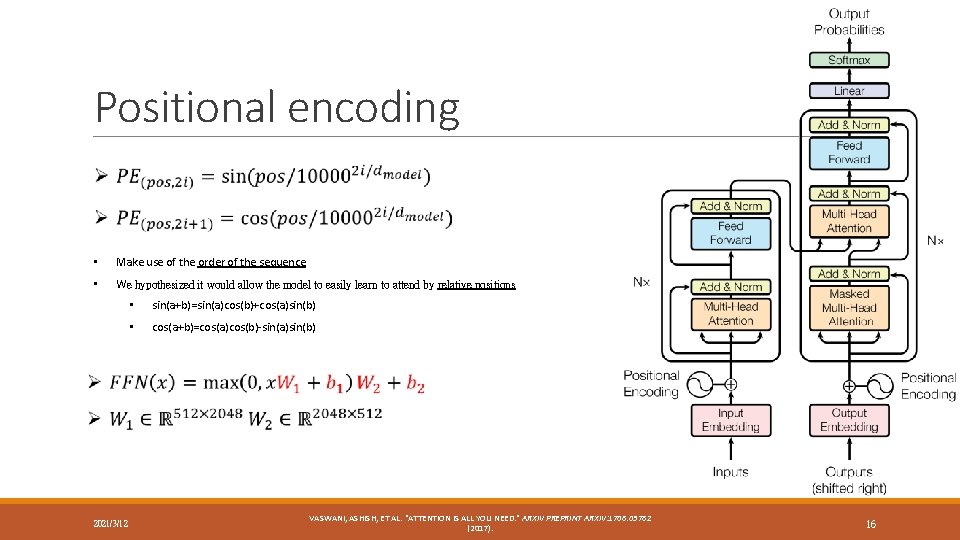

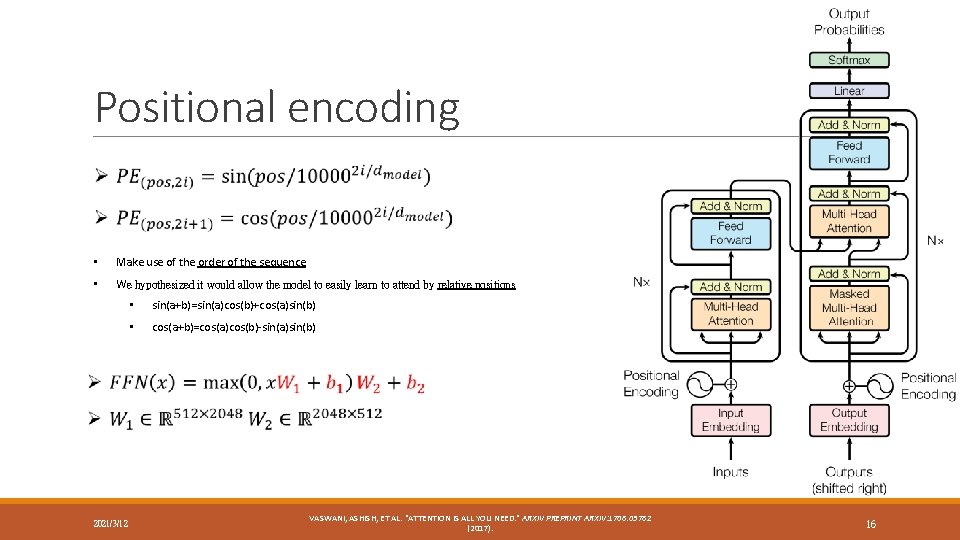

Positional encoding
• Makes use of the order of the sequence.
• "We hypothesized it would allow the model to easily learn to attend by relative positions", since for any fixed offset k, PE(pos+k) can be written as a linear function of PE(pos):
  • sin(a+b) = sin(a)cos(b) + cos(a)sin(b)
  • cos(a+b) = cos(a)cos(b) − sin(a)sin(b)
• May allow the model to extrapolate to sequence lengths longer than the ones encountered during training.
(Vaswani et al., 2017)
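The sinusoidal encoding from the paper is PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The sketch below computes it and checks the property the two trigonometric identities point to: for a fixed offset k, each (sin, cos) pair of PE(pos + k) is obtained from the same pair of PE(pos) by a rotation that depends only on k. The values d_model = 8, pos = 5 and k = 3 are arbitrary illustration choices.

```python
import math

def positional_encoding(pos, d_model):
    """Sinusoidal encoding: even dims use sin, odd dims use cos (d_model assumed even)."""
    pe = [0.0] * d_model
    for i in range(d_model // 2):
        freq = 1.0 / (10000 ** (2 * i / d_model))
        pe[2 * i] = math.sin(pos * freq)
        pe[2 * i + 1] = math.cos(pos * freq)
    return pe

def shift(pe, k, d_model):
    """Linear map taking PE(pos) to PE(pos + k), built from
    sin(a+b) = sin a cos b + cos a sin b and cos(a+b) = cos a cos b - sin a sin b."""
    out = [0.0] * d_model
    for i in range(d_model // 2):
        freq = 1.0 / (10000 ** (2 * i / d_model))
        s, c = pe[2 * i], pe[2 * i + 1]                  # sin(pos*w), cos(pos*w)
        sk, ck = math.sin(k * freq), math.cos(k * freq)  # depends only on k
        out[2 * i] = s * ck + c * sk                     # sin((pos+k)*w)
        out[2 * i + 1] = c * ck - s * sk                 # cos((pos+k)*w)
    return out

d_model = 8
pos, k = 5, 3
direct = positional_encoding(pos + k, d_model)
via_rotation = shift(positional_encoding(pos, d_model), k, d_model)
# Any difference comes only from floating-point rounding.
print(max(abs(a - b) for a, b in zip(direct, via_rotation)))
```

Since the coefficients in `shift` never use `pos`, the same fixed linear map relates every position to the position k steps later, which is what makes relative offsets easy to express.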

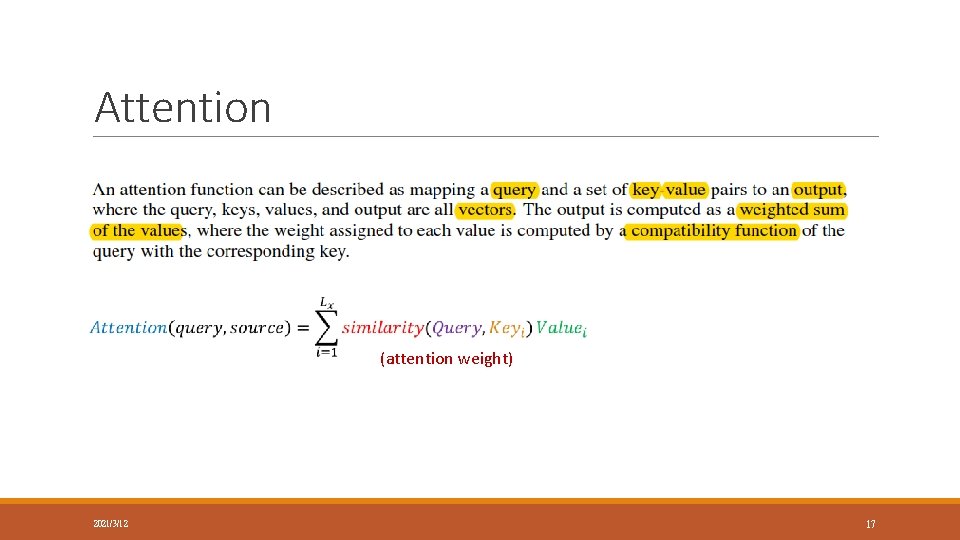

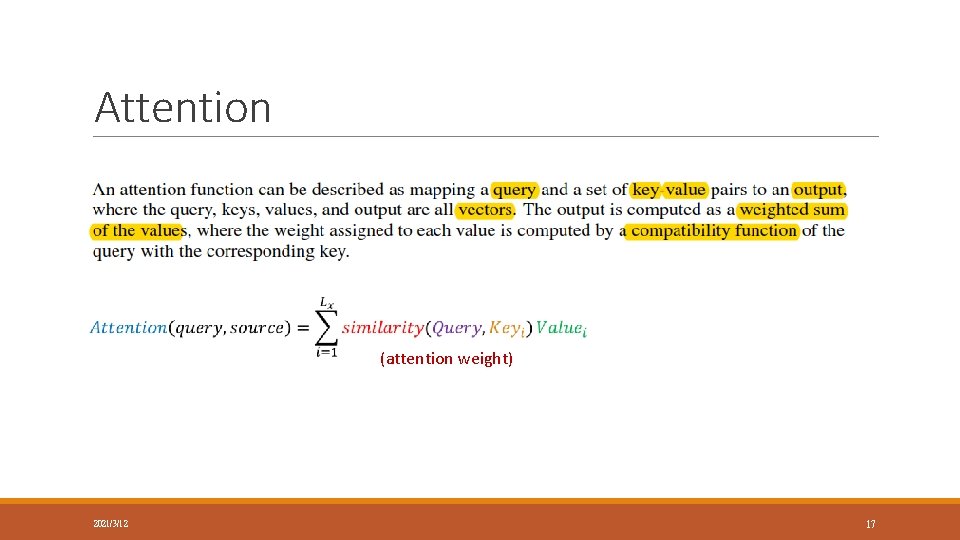

Attention (attention weights)

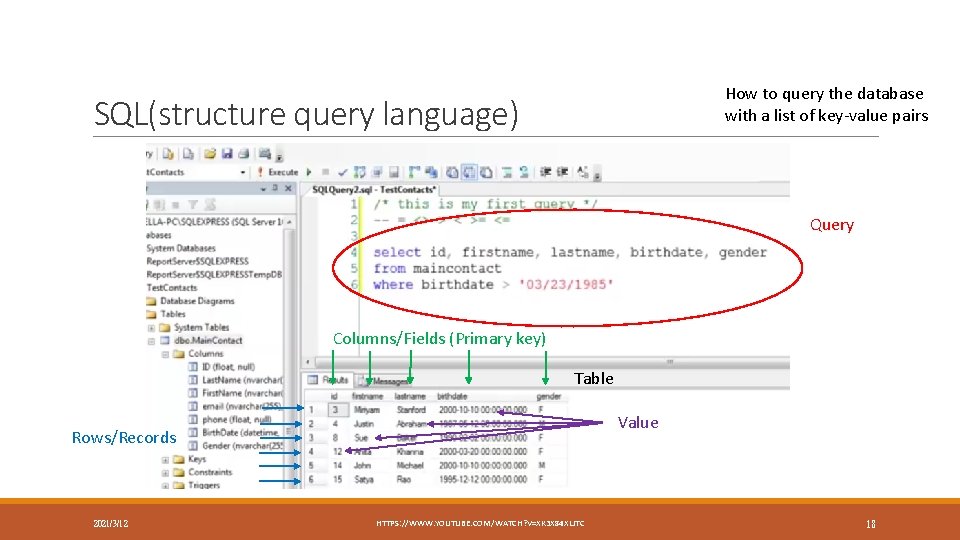

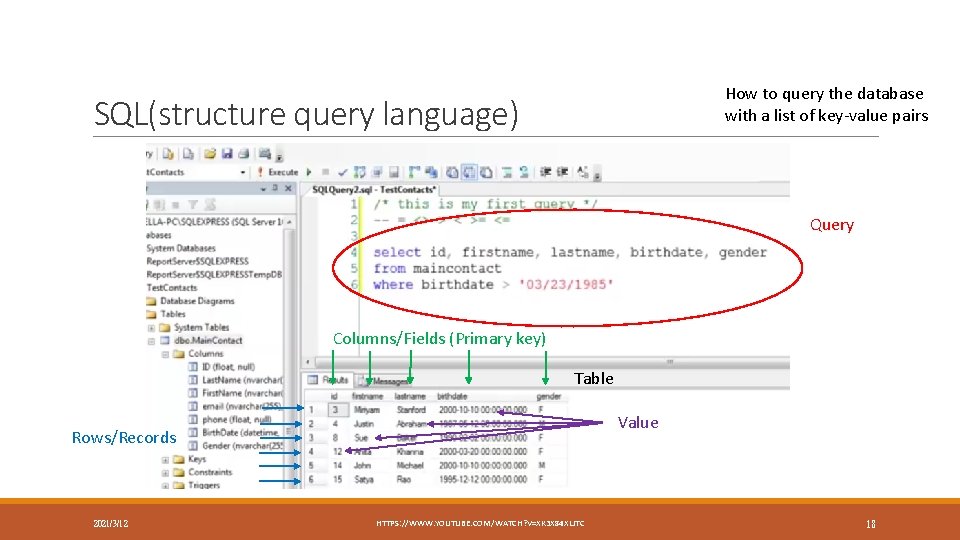

How do we query a database with a list of key-value pairs? The SQL (Structured Query Language) analogy: the query is the request, the keys are the table's columns/fields (e.g. the primary key), and the values are the table's rows/records. Source: https://www.youtube.com/watch?v=XK3X84XLJTC
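The database analogy can be made concrete. A SQL-style lookup returns only the value whose key exactly matches the query, while attention returns a blend of all values, weighted by softmax(query · key / √d_k). This is a minimal sketch; the table entries, key vectors, and values are hypothetical toy data.

```python
import math

def hard_lookup(query, table):
    # SQL-style exact match: return the value stored under the matching key.
    for key, value in table:
        if key == query:
            return value
    return None

def soft_lookup(query, keys, values):
    # Attention-style lookup: every value contributes, weighted by
    # softmax(query . key / sqrt(d_k)).
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    blended = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
    return weights, blended

table = [("id1", 10.0), ("id2", 20.0), ("id3", 30.0)]
print(hard_lookup("id2", table))  # 20.0

keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0], [20.0], [30.0]]
weights, blended = soft_lookup([4.0, 0.0], keys, values)
print([round(w, 3) for w in weights], blended)
```

The query is most similar to the first key, so most of the weight lands on the first value, but unlike the exact match every value still contributes a little; this softness is what makes the lookup differentiable and trainable.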