ATLAS Trigger & Data Acquisition system: concept & architecture (Kostas Kordas)

- Slides: 29

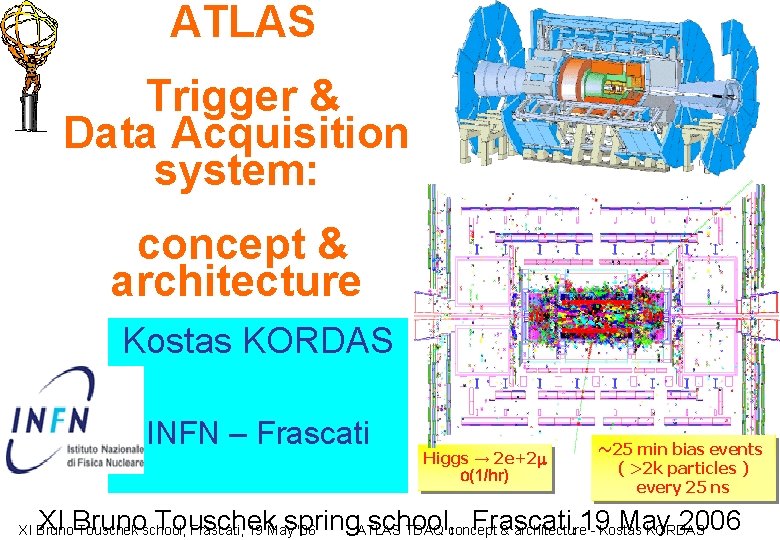

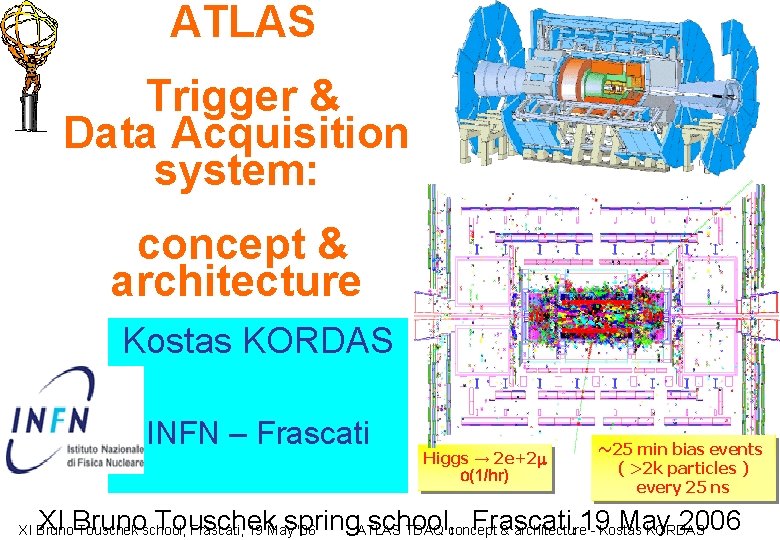

ATLAS Trigger & Data Acquisition system: concept & architecture. Kostas KORDAS, INFN – Frascati. XI Bruno Touschek spring school, Frascati, 19 May 2006.

Title-slide figure: Higgs → 2e + 2μ, O(1/hr); ~25 minimum-bias events (>2k particles) every 25 ns.

ATLAS TDAQ concept & architecture - Kostas KORDAS, XI Bruno Touschek school, Frascati, 19 May '06

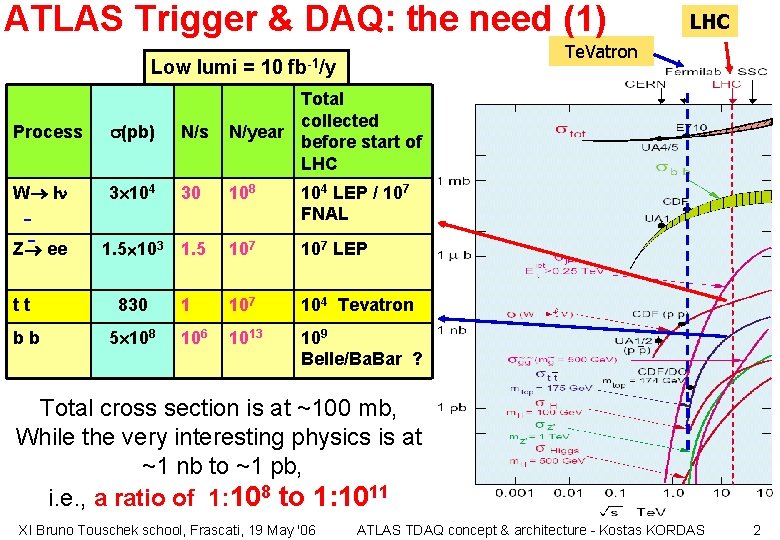

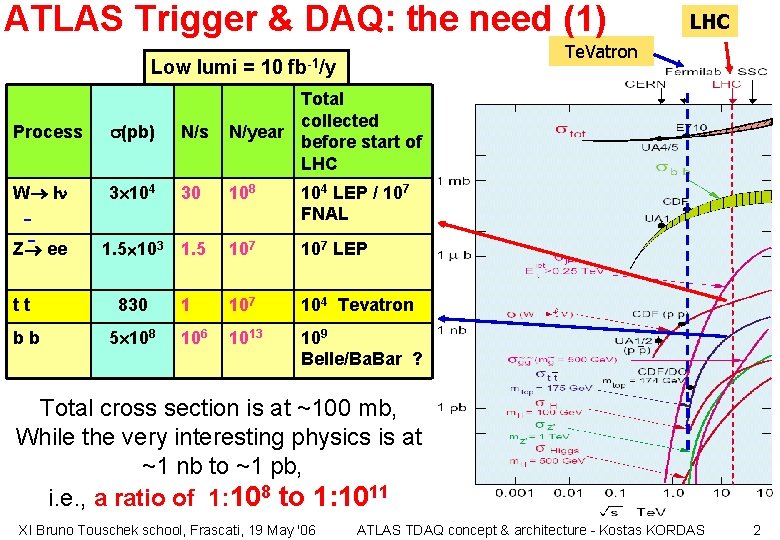

ATLAS Trigger & DAQ: the need (1)

Expected yields at low luminosity (10 fb⁻¹/year):

| Process | σ (pb) | N/s | N/year | Total collected before start of LHC |
|---|---|---|---|---|
| W → ℓν | 3×10⁴ | 30 | 10⁸ | 10⁴ LEP / 10⁷ FNAL |
| Z → ee | 1.5×10³ | 1.5 | 10⁷ | 10⁷ LEP |
| tt̄ | 830 | 1 | 10⁷ | 10⁴ Tevatron |
| bb̄ | 5×10⁸ | 10⁶ | 10¹³ | 10⁹ Belle/BaBar |

The total cross section is ~100 mb, while the very interesting physics is at ~1 nb to ~1 pb, i.e., a ratio of 1:10⁸ to 1:10¹¹.
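The N/s column on this slide is just σ × L. A minimal Python sketch of that arithmetic follows. It assumes an instantaneous "low" luminosity of 10³³ cm⁻² s⁻¹ and ~10⁷ s of running per year, which together give the 10 fb⁻¹/year quoted above. Both values and all names are our illustrative assumptions and are not taken from the slides.

```python
# Event rates from cross sections: N/s = sigma x L.
# Assumed (not on the slide): L = 1e33 cm^-2 s^-1 and 1e7 s of running per year.
PB_IN_CM2 = 1e-36          # 1 pb = 1e-36 cm^2
MB_IN_PB = 1e9             # 1 mb = 1e9 pb
LUMI_CM2_S = 1e33          # "low luminosity", cm^-2 s^-1
SECONDS_PER_YEAR = 1e7

CROSS_SECTIONS_PB = {
    "W -> l nu": 3e4,
    "Z -> ee": 1.5e3,
    "ttbar": 830.0,
    "bbbar": 5e8,
}


def rate_per_second(sigma_pb: float, lumi: float = LUMI_CM2_S) -> float:
    """Produced events per second for a process with cross section sigma_pb."""
    return sigma_pb * PB_IN_CM2 * lumi


for name, sigma in CROSS_SECTIONS_PB.items():
    n_s = rate_per_second(sigma)
    print(f"{name:10s} {n_s:9.3g} /s {n_s * SECONDS_PER_YEAR:9.3g} /year")

# Total cross section (~100 mb) versus interesting physics (~1 nb ... ~1 pb).
total_pb = 100 * MB_IN_PB
ratio_1nb = total_pb / 1e3     # 1 nb = 1e3 pb
ratio_1pb = total_pb / 1.0
print(f"1:{ratio_1nb:.0e} to 1:{ratio_1pb:.0e}")
```

The computed rates match the slide's N/s column to within its rounding: 30/s for W → ℓν, 0.83/s for tt̄ and 5×10⁵/s for bb̄.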

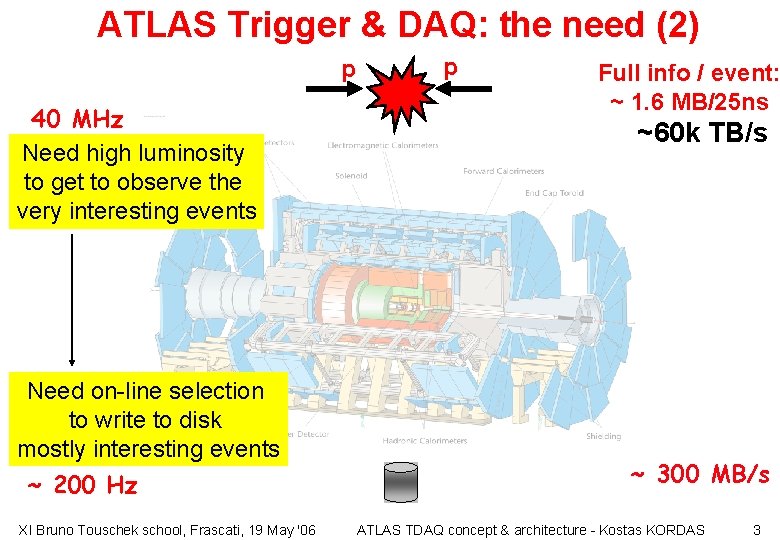

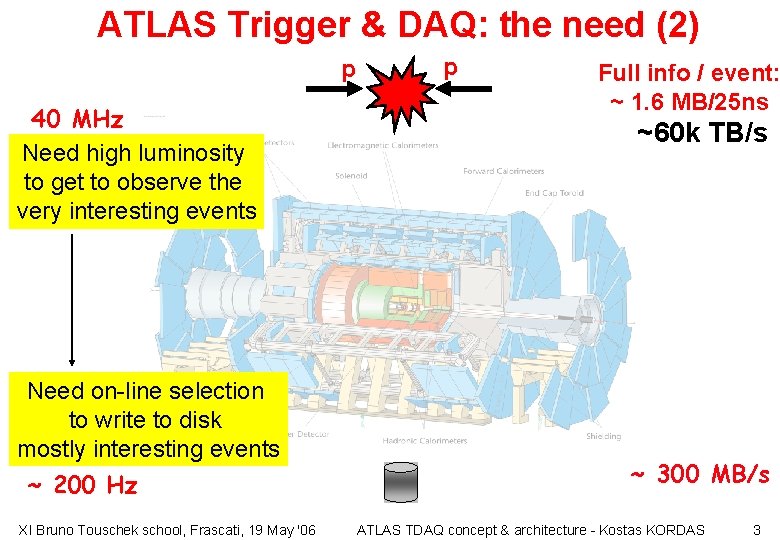

ATLAS Trigger & DAQ: the need (2)

- Proton bunches cross every 25 ns (40 MHz), and the full information per event is ~1.6 MB. Keeping ~1.6 MB every 25 ns would mean ~60 TB/s.
- High luminosity is needed to observe the very interesting events.
- On-line selection is needed so that mostly interesting events are written to disk: ~200 Hz, i.e. ~300 MB/s.
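The data rates on this slide come from two simple products. A quick Python check in decimal units (1 TB = 10⁶ MB); the constant names are ours:

```python
EVENT_SIZE_MB = 1.6        # full information per event
CROSSING_RATE_HZ = 40e6    # one bunch crossing every 25 ns
STORAGE_RATE_HZ = 200      # events written to disk

raw_tb_per_s = EVENT_SIZE_MB * CROSSING_RATE_HZ / 1e6   # if every crossing were kept
disk_mb_per_s = EVENT_SIZE_MB * STORAGE_RATE_HZ          # after on-line selection
online_rejection = CROSSING_RATE_HZ / STORAGE_RATE_HZ    # crossings per stored event

print(f"without selection: ~{raw_tb_per_s:.0f} TB/s")
print(f"to disk:           ~{disk_mb_per_s:.0f} MB/s")
print(f"keep 1 crossing in {online_rejection:.0f}")
```

The ~320 MB/s result corresponds to the "~300 MB/s" on the slide. The on-line system must therefore keep only about one crossing in 2×10⁵.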

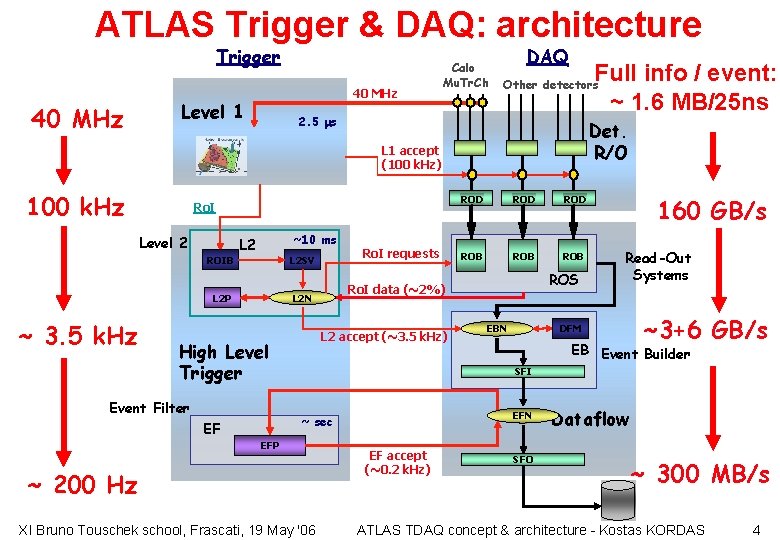

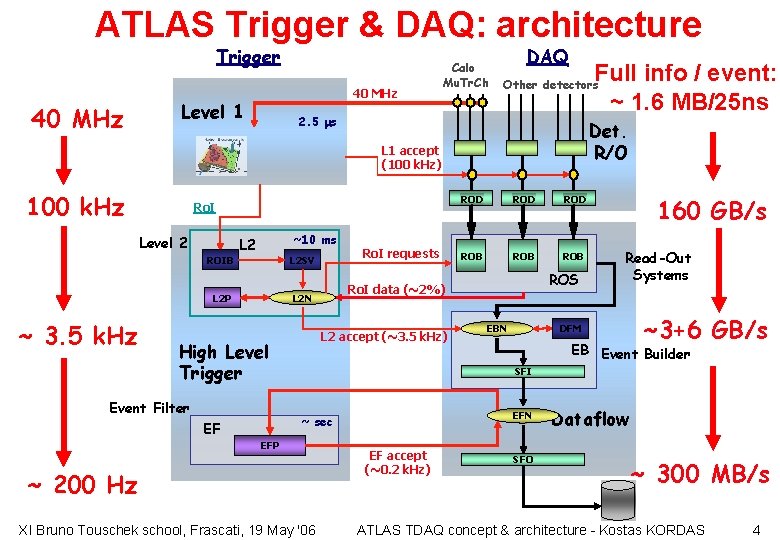

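ATLAS Trigger & DAQ: architecture

Diagram summary (trigger on the left, DAQ on the right; full information per event is ~1.6 MB every 25 ns):
- Level 1 (calorimeters and muon trigger chambers, MuTrCh): 40 MHz input, 2.5 μs latency. An L1 accept at 100 kHz triggers the read-out of all detectors.
- Read-out: Read-Out Drivers (ROD) feed Read-Out Buffers (ROB) in the Read-Out Systems (ROS), at 160 GB/s.
- Level 2: RoI Builder (ROIB), L2 Supervisor (L2SV), L2 Network (L2N) and L2 Processing units (L2P), with ~10 ms latency. RoI requests return the RoI data (~2% of the event). L2 accept is ~3.5 kHz.
- Event Builder (EB): Dataflow Manager (DFM), Event Building Network (EBN) and Sub-Farm Inputs (SFI). About ~3+6 GB/s leave the ROSs (RoI data plus event building).
- Event Filter (EF): Event Filter Network (EFN) and Event Filter Processors (EFP), taking ~1 s per event. EF accept is ~0.2 kHz (~200 Hz).
- Sub-Farm Output (SFO): ~300 MB/s to storage.

Level 2 and the Event Filter form the High Level Trigger; the read-out, event building and SFO form the Dataflow.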
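The rates in the diagram can be chained to give the rejection each level must provide and the bandwidth at each stage. The Python sketch below uses the nominal numbers above: 1.6 MB events, a Level-1 latency of 2.5 μs, ~10 ms at Level 2 and ~1 s in the Event Filter. The "events in flight" column is our addition. It is a Little's-law estimate (input rate × latency) of how many events each level holds while deciding.

```python
EVENT_SIZE_MB = 1.6
CROSSING_RATE_HZ = 40e6

# (level, accept rate at its output in Hz, decision latency in s)
LEVELS = [
    ("Level 1", 100e3, 2.5e-6),
    ("Level 2", 3.5e3, 10e-3),
    ("Event Filter", 200.0, 1.0),
]


def cascade(levels, input_hz=CROSSING_RATE_HZ):
    """Return (name, input Hz, output Hz, rejection factor, events in flight) per level."""
    result = []
    for name, output_hz, latency_s in levels:
        in_flight = input_hz * latency_s   # Little's law: rate x time spent deciding
        result.append((name, input_hz, output_hz, input_hz / output_hz, in_flight))
        input_hz = output_hz               # this level's output feeds the next level
    return result


rows = cascade(LEVELS)
for name, rate_in, rate_out, rejection, in_flight in rows:
    print(f"{name:12s} {rate_in:>10.0f} -> {rate_out:>8.0f} Hz, "
          f"rejection {rejection:5.1f}, ~{in_flight:.0f} events in flight")

readout_gb_s = 100e3 * EVENT_SIZE_MB / 1e3   # L1 accepts into the ROSs (~160 GB/s)
roi_gb_s = 0.02 * readout_gb_s               # RoI data pulled by Level 2 (~3 GB/s)
eb_gb_s = 3.5e3 * EVENT_SIZE_MB / 1e3        # L2 accepts into the Event Builder (~6 GB/s)
storage_mb_s = 200 * EVENT_SIZE_MB           # EF accepts to the SFOs (~300 MB/s)
```

At Level 1 the "events in flight" figure (100 crossings) is the depth the front-end pipelines must provide. At Level 2 and in the Event Filter, roughly 1000 and 3500 events respectively are being processed at any moment.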

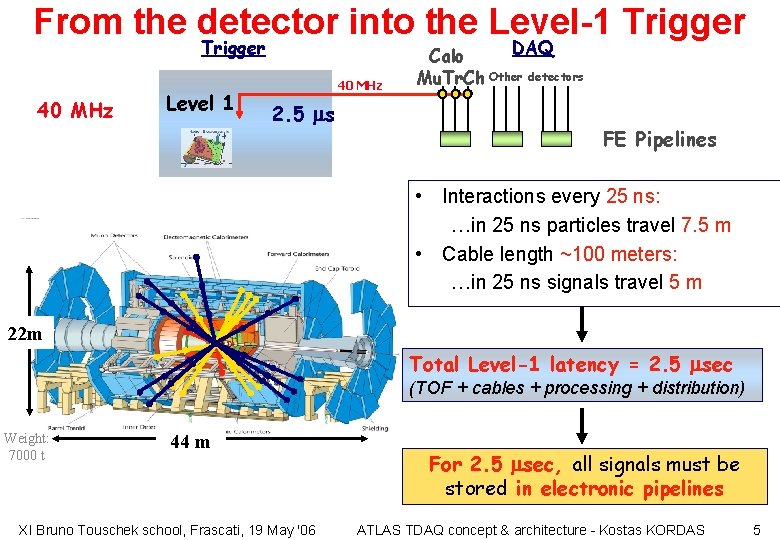

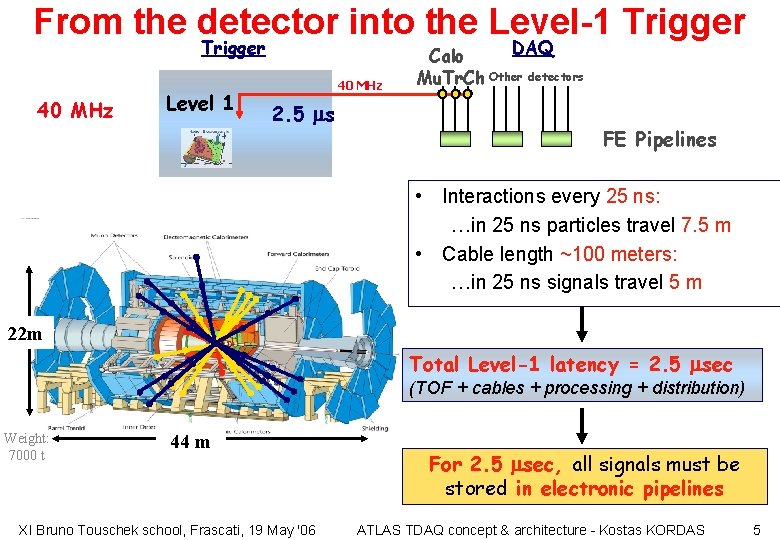

From the detector into the Level-1 Trigger

- Interactions every 25 ns: in 25 ns particles travel 7.5 m.
- Cable length ~100 m: in 25 ns signals travel 5 m.
- Total Level-1 latency = 2.5 μs (TOF + cables + processing + distribution).
- For those 2.5 μs, all signals must be stored in electronic front-end (FE) pipelines while Level 1 (calorimeters, muon trigger chambers) decides at 40 MHz.

Detector (figure): 44 m long, 22 m high, weight 7000 t.
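The numbers on this slide fix how deep the front-end pipelines must be: 2.5 μs / 25 ns = 100 bunch crossings. Below is a toy Python model of such a pipeline. It assumes a signal speed in cables of ~2/3 c, a typical value that is not given on the slide. The `FrontEndPipeline` class is hypothetical and ignores how real front-ends address their pipeline cells.

```python
from collections import deque

C_M_PER_NS = 0.2998         # speed of light in m/ns
CABLE_FACTOR = 2 / 3        # assumed signal speed in cables, as a fraction of c
BC_NS = 25.0                # bunch-crossing period
L1_LATENCY_NS = 2500.0      # Level-1 latency, 2.5 us

print(f"particles per crossing: {C_M_PER_NS * BC_NS:.1f} m")
print(f"cable signals per crossing: {CABLE_FACTOR * C_M_PER_NS * BC_NS:.1f} m")

PIPELINE_DEPTH = round(L1_LATENCY_NS / BC_NS)   # crossings to keep: 100


class FrontEndPipeline:
    """Keeps the samples of the last `depth` bunch crossings."""

    def __init__(self, depth: int = PIPELINE_DEPTH):
        self.cells = deque(maxlen=depth)    # oldest sample drops off automatically

    def clock(self, bcid: int, sample) -> None:
        """Store the sample of bunch crossing `bcid` (called every 25 ns)."""
        self.cells.append((bcid, sample))

    def read(self, bcid: int):
        """On a Level-1 accept for `bcid`, return its sample, or None if overwritten."""
        for stored_bcid, sample in self.cells:
            if stored_bcid == bcid:
                return sample
        return None


# Example: 150 crossings clocked in; Level-1 accepts arrive for crossings 120 and 10.
pipe = FrontEndPipeline()
for bcid in range(150):
    pipe.clock(bcid, sample=2 * bcid)
print(pipe.read(120), pipe.read(10))   # crossing 10 has already been overwritten
```

A decision that arrives later than the pipeline depth finds its data gone. This is why the whole Level-1 chain has to fit in the fixed 2.5 μs.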

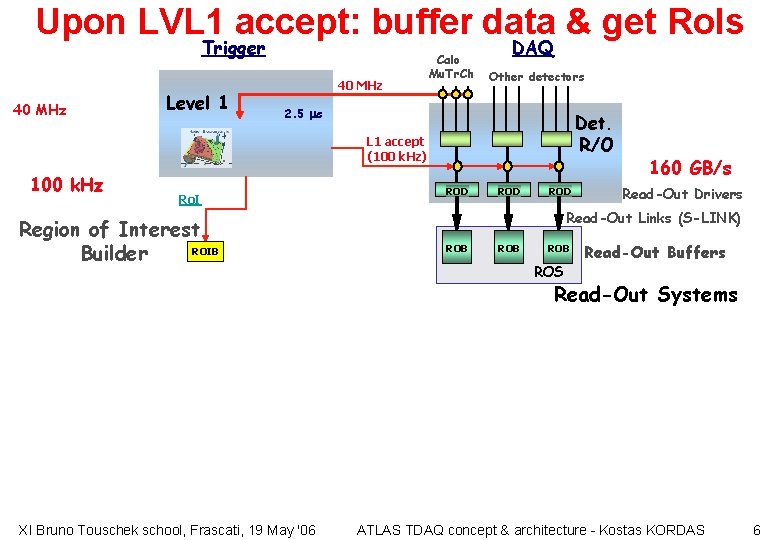

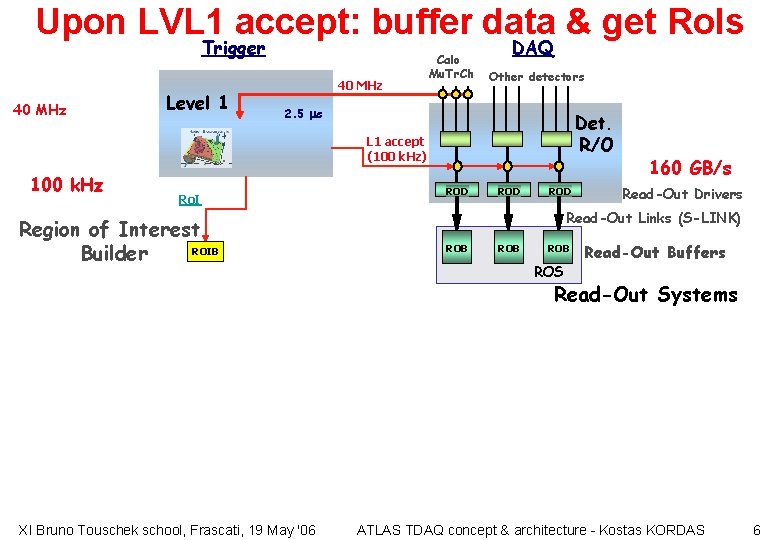

Upon LVL1 accept: buffer data & get RoIs

On a Level-1 accept (100 kHz), the Read-Out Drivers (RODs) send the event data over the Read-Out Links (S-LINK) into the Read-Out Buffers (ROBs) of the Read-Out Systems (ROSs), 160 GB/s in total. Meanwhile, the Region of Interest (RoI) Builder (ROIB) collects the RoIs found by Level 1.

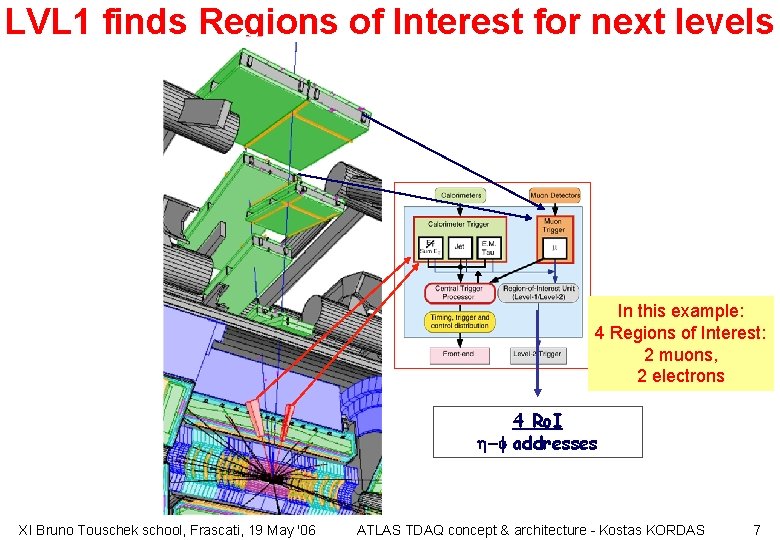

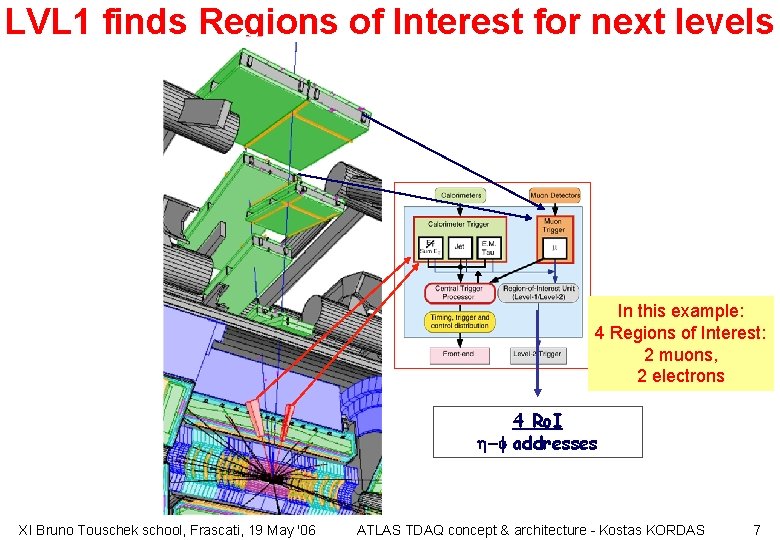

LVL1 finds Regions of Interest for next levels. In this example there are 4 Regions of Interest (2 muons, 2 electrons), i.e. 4 RoI η-φ addresses.

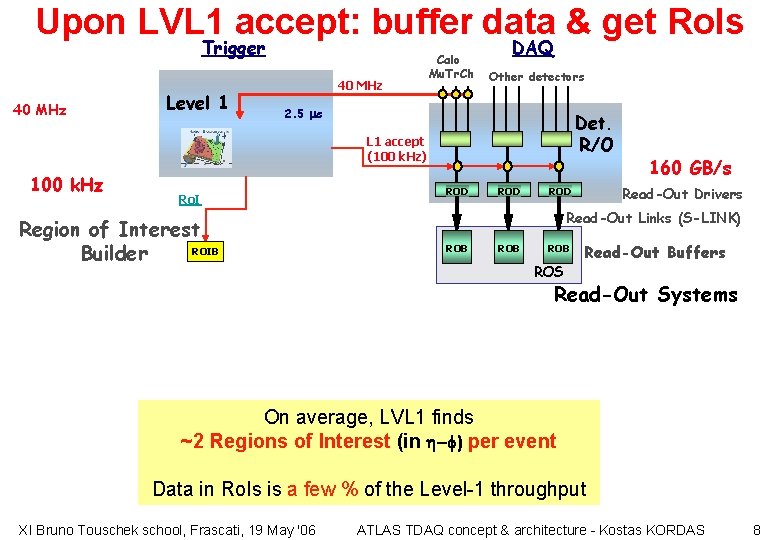

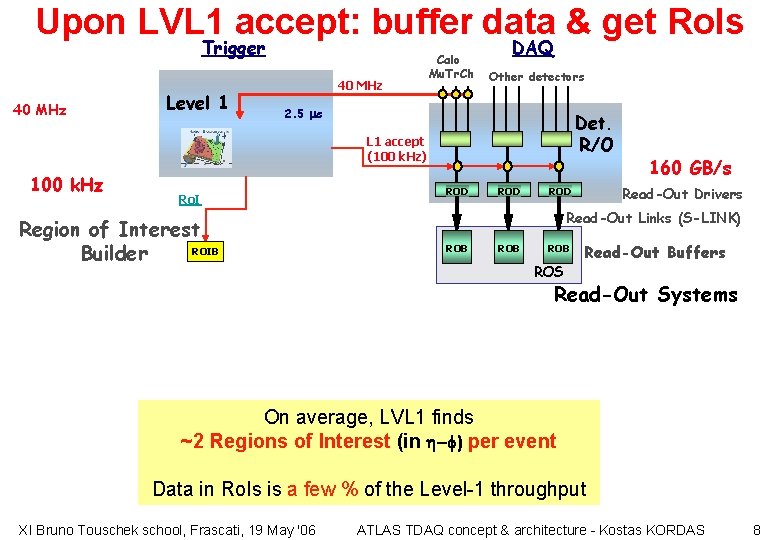

Upon LVL1 accept: buffer data & get RoIs (continued)

The read-out path is the same as above: RODs → S-LINK → ROBs in the ROSs at 160 GB/s, with RoIs going to the ROIB. On average, LVL1 finds ~2 Regions of Interest (in η-φ) per event, and the data in the RoIs is only a few % of the Level-1 throughput.

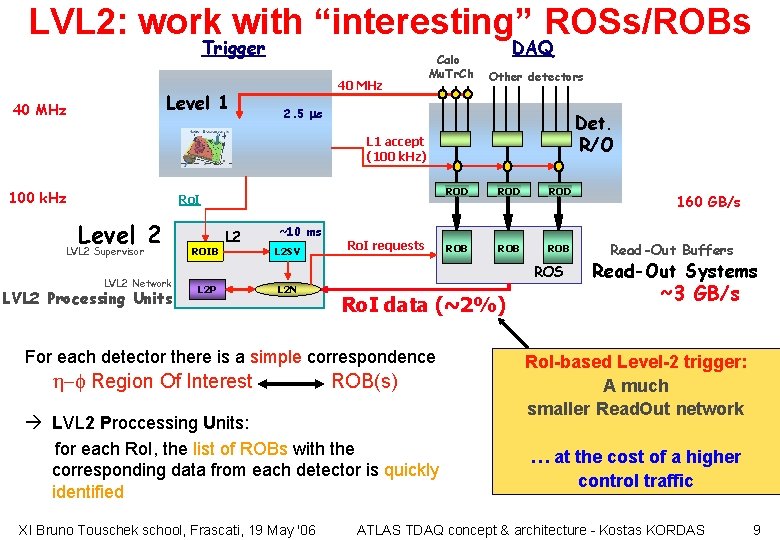

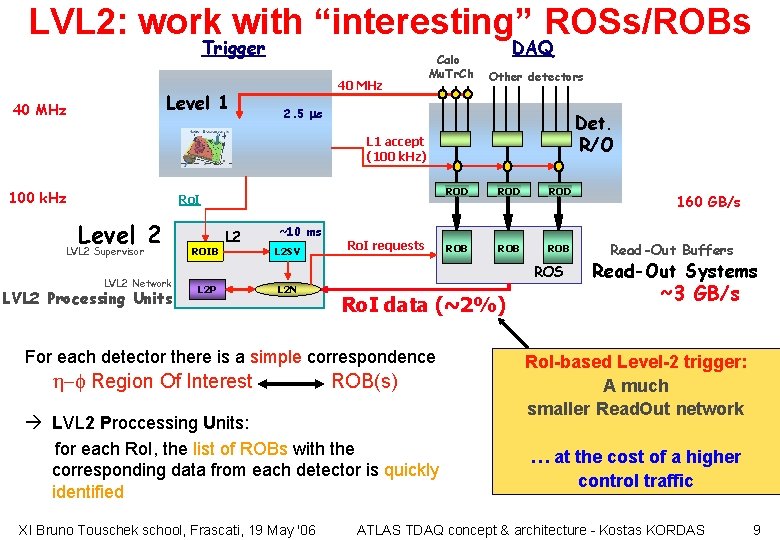

LVL2: work with "interesting" ROSs/ROBs

- Level 2 consists of the LVL2 Supervisor (L2SV), the LVL2 Network (L2N) and the LVL2 Processing Units (L2P), with ~10 ms latency.
- The processing units send RoI requests to the ROSs and receive only the RoI data. This is ~2% of the event, ~3 GB/s out of the 160 GB/s flowing into the Read-Out Buffers.
- For each detector there is a simple correspondence between an η-φ Region Of Interest and ROB(s). For each RoI, the LVL2 Processing Units quickly identify the list of ROBs holding the corresponding data from each detector.
- RoI-based Level-2 trigger: a much smaller ReadOut network … at the cost of higher control traffic.
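The slide says that, for each detector, an η-φ RoI maps simply onto a list of ROBs. Below is a toy Python sketch of such a lookup. It assumes a hypothetical uniform η-φ grid with one ROB per cell; the real ATLAS mapping is detector-specific and not given here. `robs_for_roi`, `rob_id` and the grid constants are illustrative names. Note that φ wraps around at 2π.

```python
import math

# Hypothetical granularity: each ROB reads out one (eta, phi) cell of one detector.
ETA_MIN, ETA_MAX, N_ETA = -2.5, 2.5, 10     # 10 slices of 0.5 in eta
N_PHI = 16                                   # 16 sectors in phi


def rob_id(ieta: int, iphi: int) -> int:
    """Hypothetical ROB numbering: row-major over the (eta, phi) grid."""
    return ieta * N_PHI + iphi


def robs_for_roi(eta: float, phi: float, d_eta: float = 0.2, d_phi: float = 0.2):
    """Sorted ids of the ROBs whose cells overlap the RoI window eta±d_eta, phi±d_phi."""
    eta_w = (ETA_MAX - ETA_MIN) / N_ETA
    phi_w = 2 * math.pi / N_PHI
    # Clip the eta range to the detector acceptance.
    lo = max(0, int((eta - d_eta - ETA_MIN) // eta_w))
    hi = min(N_ETA - 1, int((eta + d_eta - ETA_MIN) // eta_w))
    # Phi is periodic: indices outside [0, N_PHI) wrap around.
    p_lo = math.floor((phi - d_phi) / phi_w)
    p_hi = math.floor((phi + d_phi) / phi_w)
    return sorted({rob_id(i, j % N_PHI)
                   for i in range(lo, hi + 1)
                   for j in range(p_lo, p_hi + 1)})


print(robs_for_roi(0.1, 0.1))             # window crosses eta and phi boundaries
print(robs_for_roi(0.25, 0.2, 0.1, 0.1))  # window inside a single cell
```

Because the lookup is pure arithmetic on the RoI coordinates, it is cheap enough to do for every RoI at the Level-2 rate.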

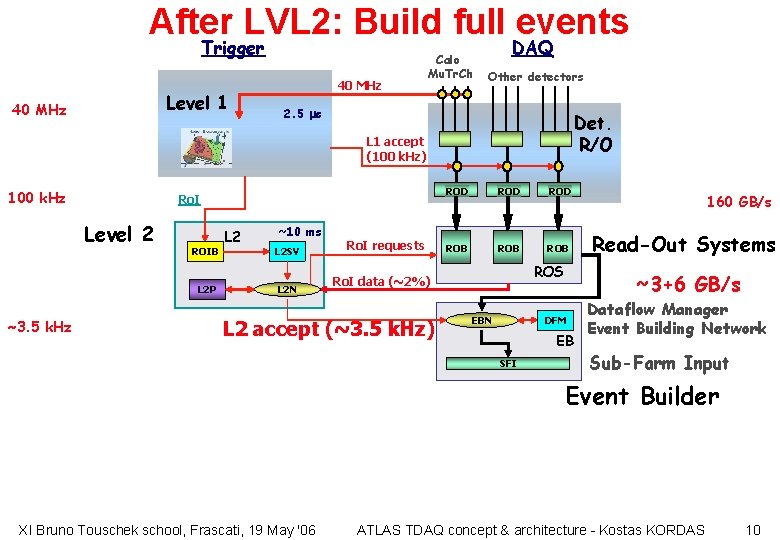

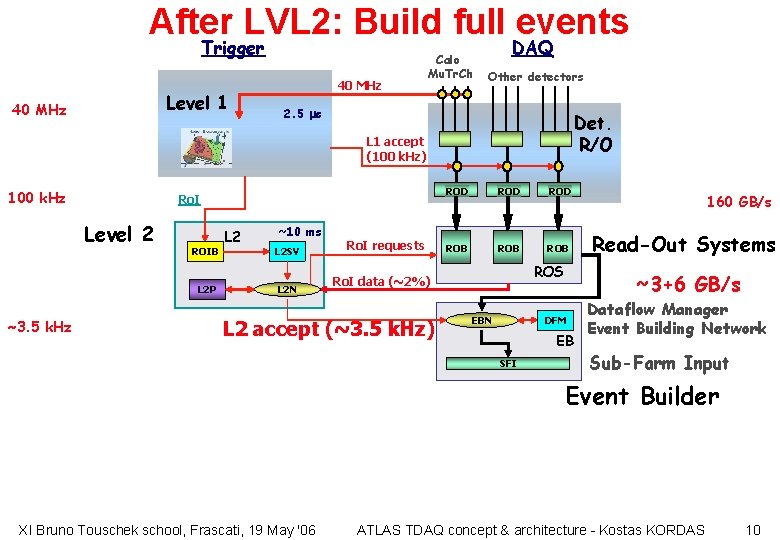

After LVL2: build full events

On a Level-2 accept (~3.5 kHz), the Event Builder (EB) assembles the full event. The Dataflow Manager (DFM) assigns the event to a Sub-Farm Input (SFI), which collects the fragments from all Read-Out Systems over the Event Building Network (EBN). The traffic out of the ROSs is ~3+6 GB/s (RoI data for Level 2 plus event building).
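The slides name the roles but not the policies: the DFM assigns events, SFIs pull fragments from the ROSs, and the ROSs later receive delete commands. Below is a toy Python sketch of that flow. It assumes round-robin assignment of accepted events and one delete command per event; both are our simplifications. The class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class ROS:
    """Toy Read-Out System: buffers fragments until told to delete them."""
    ros_id: int
    buffer: dict = field(default_factory=dict)   # event id -> fragment

    def request(self, event_id: int) -> bytes:
        return self.buffer[event_id]

    def delete(self, event_ids) -> None:
        for ev in event_ids:
            self.buffer.pop(ev, None)


@dataclass
class SFI:
    """Toy Sub-Farm Input: pulls one fragment from every ROS and assembles the event."""
    sfi_id: int
    built: list = field(default_factory=list)

    def build(self, event_id: int, ross) -> None:
        fragments = {r.ros_id: r.request(event_id) for r in ross}
        self.built.append((event_id, fragments))


class DataFlowManager:
    """Toy DFM: round-robin assignment of accepted events, then delete commands."""

    def __init__(self, sfis, ross):
        self.ross = ross
        self._next_sfi = cycle(sfis)

    def on_l2_decision(self, event_id: int, accepted: bool) -> None:
        if accepted:
            next(self._next_sfi).build(event_id, self.ross)
        # Whether built or rejected, the ROSs can now free the event's buffers.
        for r in self.ross:
            r.delete([event_id])


# Example: 3 ROSs holding 4 events, 2 SFIs; event 1 is rejected by Level 2.
ross = [ROS(i, {ev: f"ros{i}-ev{ev}".encode() for ev in range(4)}) for i in range(3)]
sfis = [SFI(0), SFI(1)]
dfm = DataFlowManager(sfis, ross)
for event_id, accepted in [(0, True), (1, False), (2, True), (3, True)]:
    dfm.on_l2_decision(event_id, accepted)
```

After the loop, SFI 0 has built events 0 and 3, SFI 1 has built event 2, and every ROS buffer is empty.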

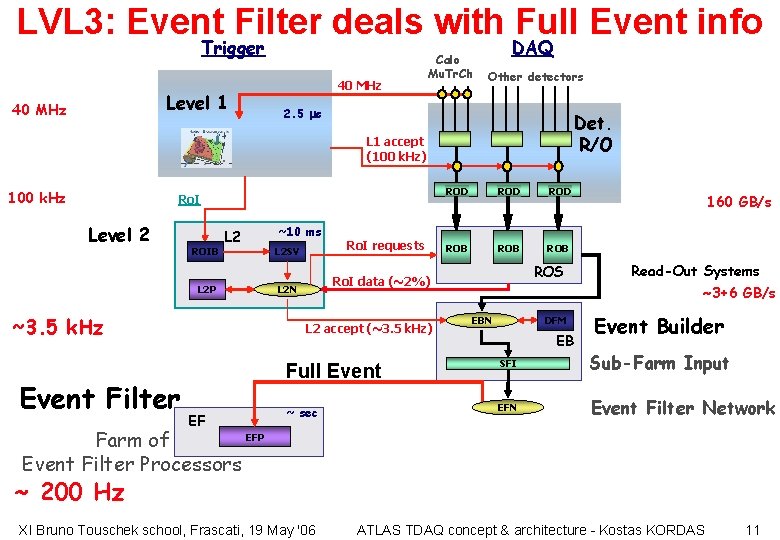

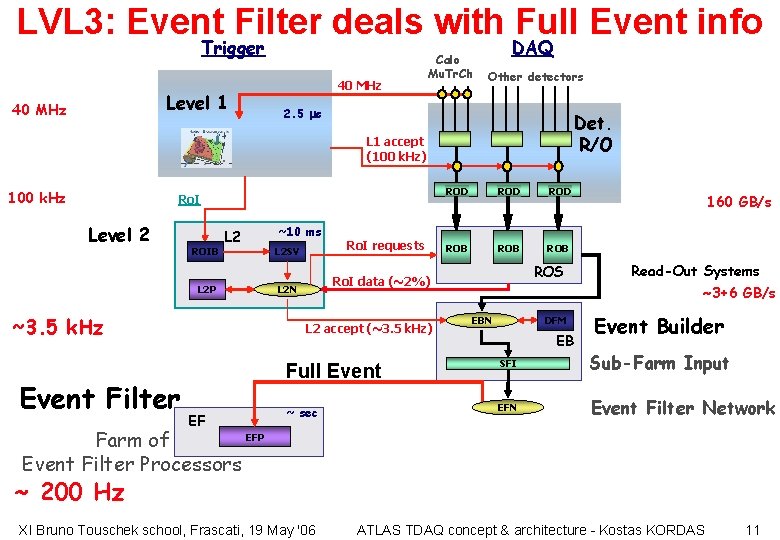

LVL3: Event Filter deals with full event info

The full event, built in a Sub-Farm Input (SFI), is sent over the Event Filter Network (EFN) to the farm of Event Filter Processors (EFP). These spend ~1 s per event and accept ~200 Hz.

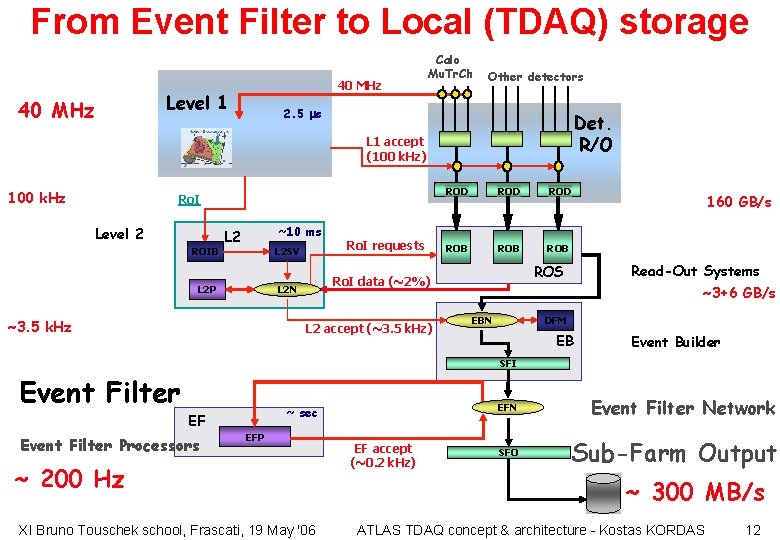

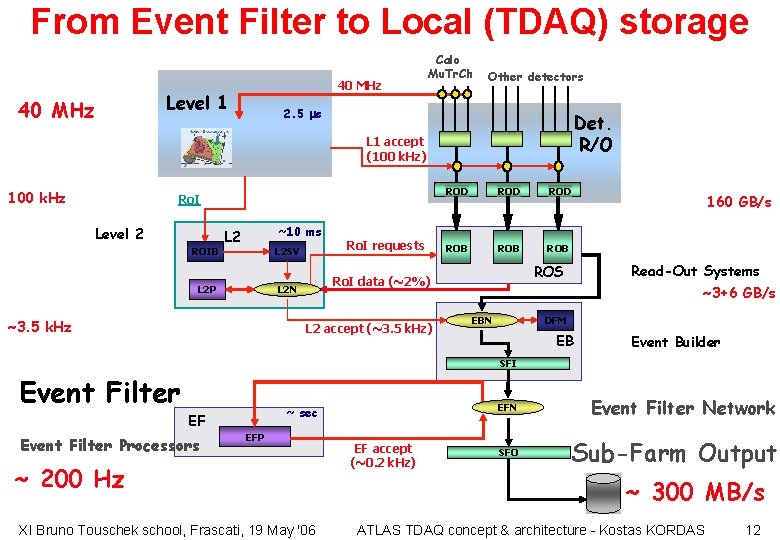

From Event Filter to local (TDAQ) storage

Events accepted by the Event Filter (~0.2 kHz) go over the Event Filter Network (EFN) to the Sub-Farm Outputs (SFO), which write ~300 MB/s to local storage.

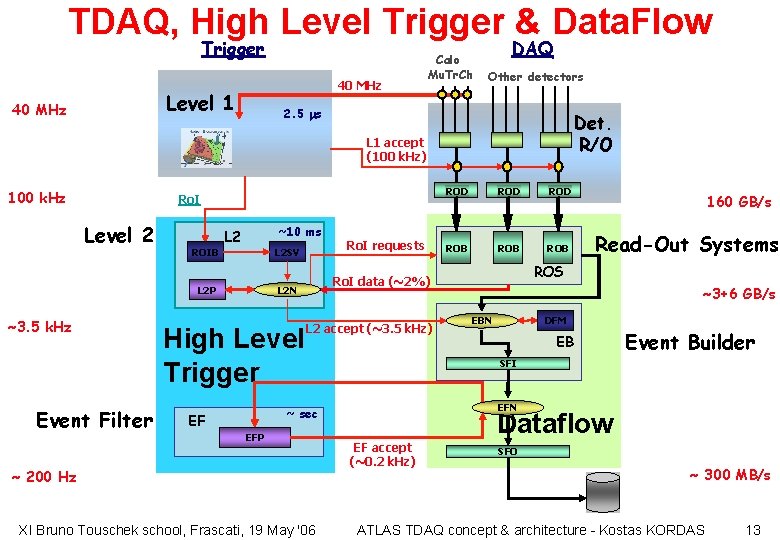

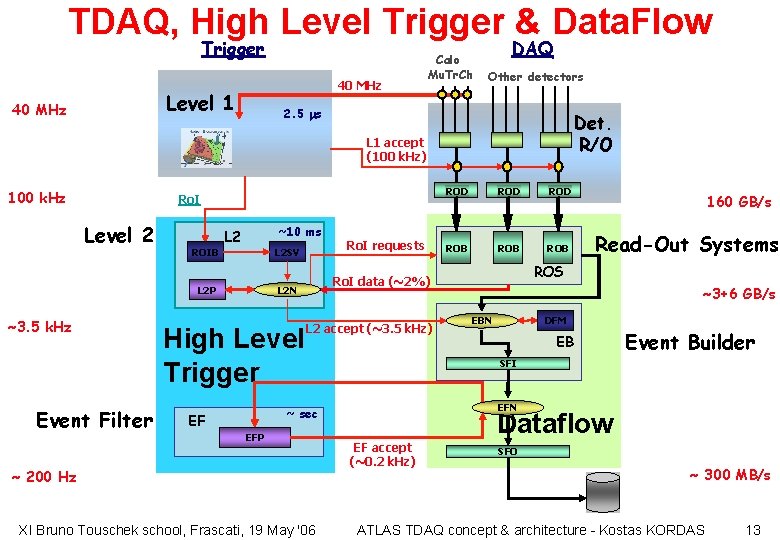

TDAQ, High Level Trigger & DataFlow

The full chain runs 40 MHz → L1 accept 100 kHz → L2 accept ~3.5 kHz → EF accept ~0.2 kHz, with ~300 MB/s out of the SFO. Here it is grouped into two parts:
- the High Level Trigger: Level 2 and the Event Filter;
- the Dataflow: read-out into the ROSs at 160 GB/s, event building at ~3+6 GB/s, and the SFO.

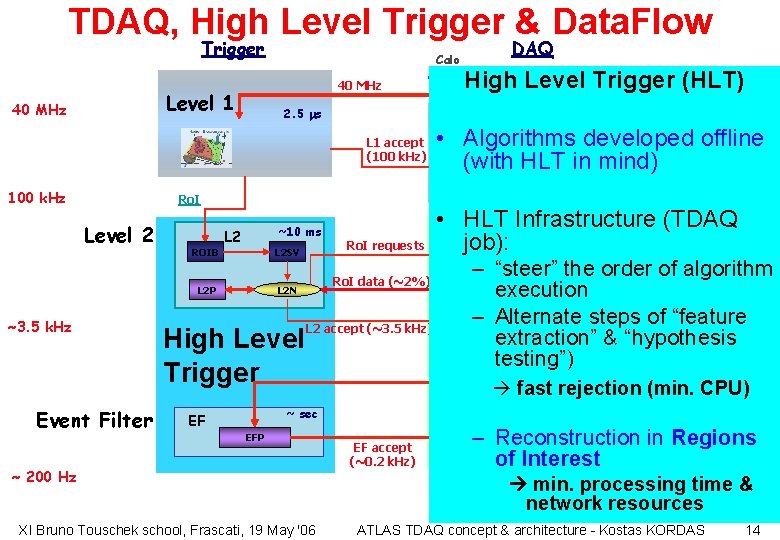

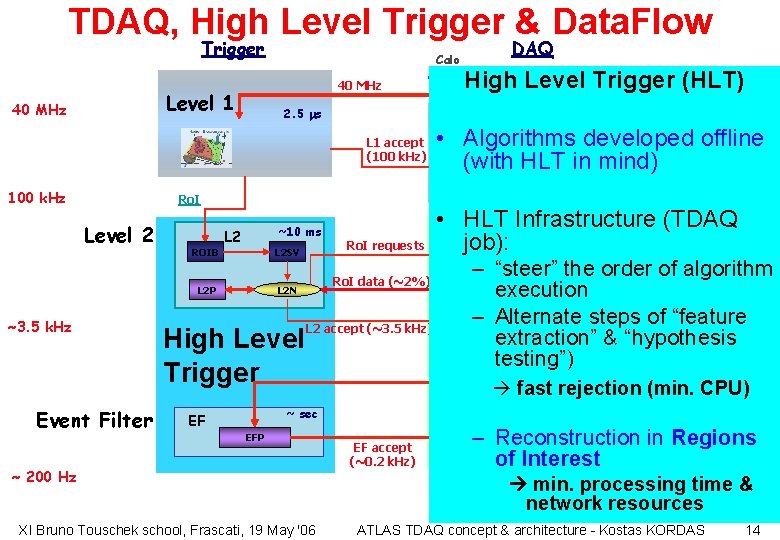

TDAQ, High Level Trigger & DataFlow: the High Level Trigger (HLT)

- Algorithms developed offline (with HLT in mind).
- HLT infrastructure (TDAQ job):
  - "steer" the order of algorithm execution;
  - alternate steps of "feature extraction" and "hypothesis testing" → fast rejection (min. CPU);
  - reconstruction in Regions of Interest → min. processing time & network resources.
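The steering idea is to alternate feature extraction and hypothesis testing, and to stop at the first failed hypothesis. It can be sketched in a few lines of Python. The electron chain, its two steps and its thresholds are hypothetical, chosen only to show the early-rejection control flow.

```python
from typing import Callable, List, Optional, Tuple

# A step = (feature extraction, hypothesis test on the extracted feature).
Step = Tuple[Callable[[dict], Optional[float]], Callable[[float], bool]]


def steer(roi: dict, steps: List[Step]) -> Tuple[bool, int]:
    """Run the steps in order and stop at the first failed hypothesis.

    Returns (accepted, number of steps executed)."""
    for n, (extract, hypothesis) in enumerate(steps, start=1):
        feature = extract(roi)              # e.g. a cluster energy or a track pT
        if feature is None or not hypothesis(feature):
            return False, n                 # early rejection: later steps never run
    return True, len(steps)


# Hypothetical electron chain: cheap calorimeter step first, then a track match.
ELECTRON_CHAIN: List[Step] = [
    (lambda r: r.get("cluster_et"), lambda et: et > 20.0),   # GeV, made-up cut
    (lambda r: r.get("track_pt"), lambda pt: pt > 10.0),     # GeV, made-up cut
]

print(steer({"cluster_et": 5.0}, ELECTRON_CHAIN))                      # (False, 1)
print(steer({"cluster_et": 25.0, "track_pt": 15.0}, ELECTRON_CHAIN))   # (True, 2)
```

Ordering the cheap steps first means most background RoIs cost only one feature extraction. This is the "fast rejection (min. CPU)" point above.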

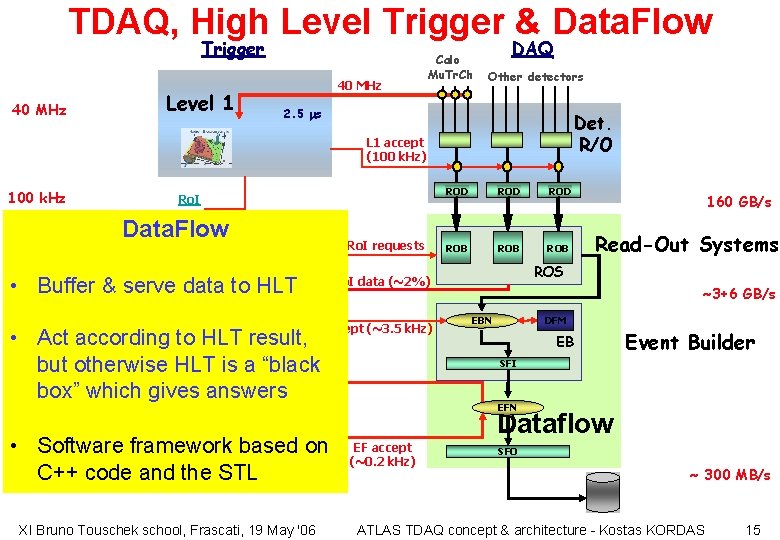

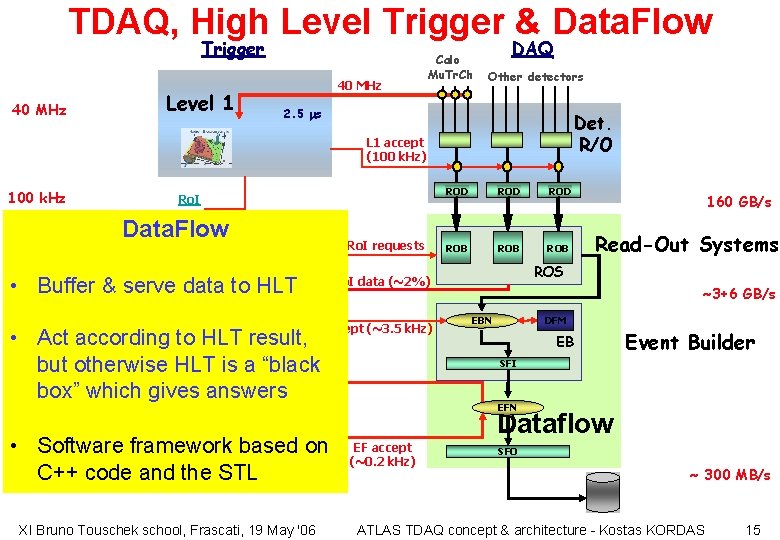

TDAQ, High Level Trigger & DataFlow: the DataFlow

- Buffer & serve data to the HLT.
- Act according to the HLT result; otherwise the HLT is a "black box" which gives answers.
- Software framework based on C++ code and the STL.

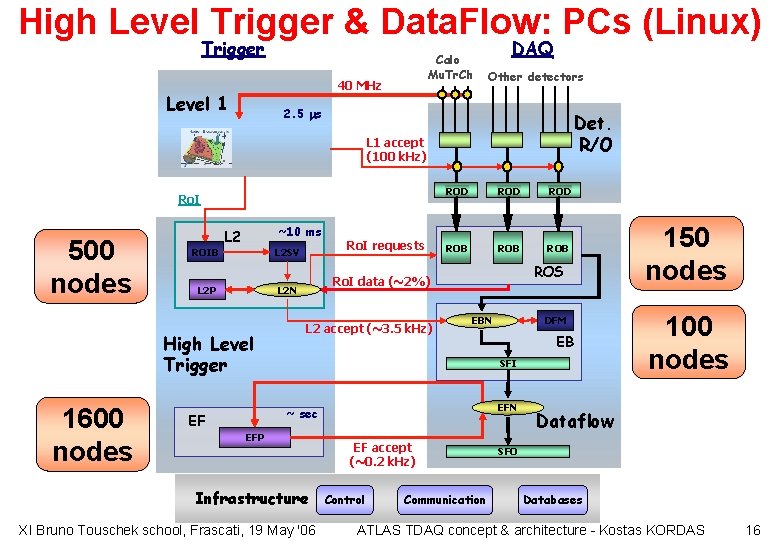

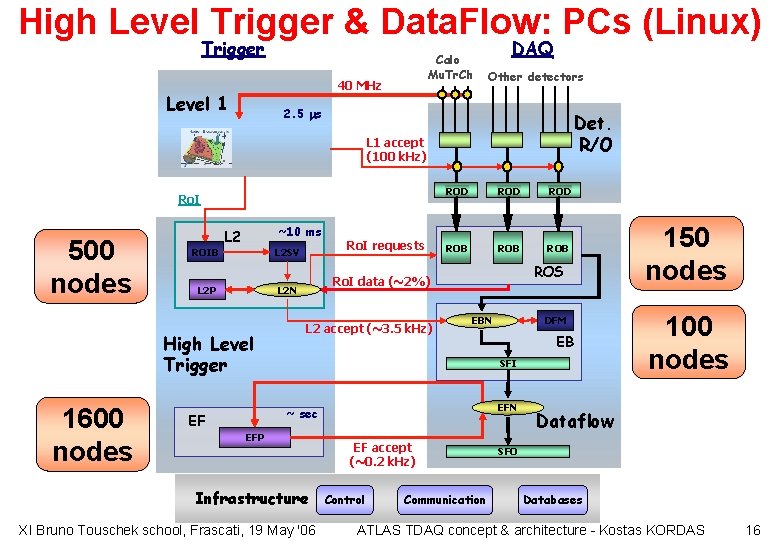

High Level Trigger & DataFlow: PCs (Linux)

Farm sizes shown:
- ~500 nodes for Level 2;
- ~1600 nodes for the Event Filter;
- ~150 + ~100 nodes for the Dataflow (ROS and event building);
- infrastructure nodes for control, communication and databases.

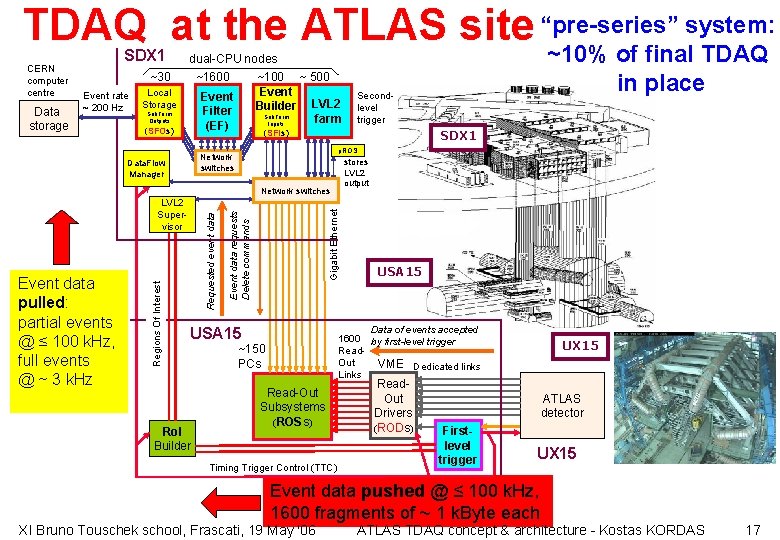

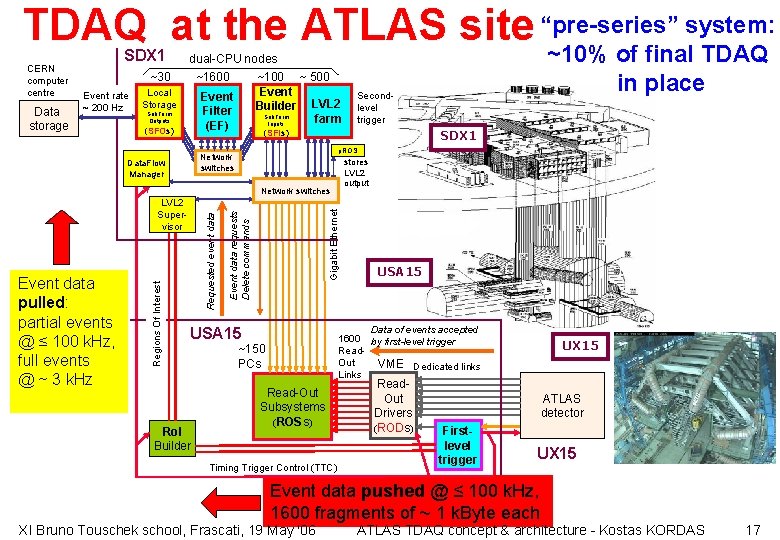

TDAQ at the ATLAS site

The "pre-series" system is ~10% of the final TDAQ. Diagram of the final system:
- UX15 (ATLAS detector): first-level trigger, Timing Trigger Control (TTC) and Read-Out Drivers (RODs, VME). Data of events accepted by the first-level trigger are pushed @ ≤ 100 kHz as 1600 fragments of ~1 kByte each, over 1600 dedicated Read-Out Links.
- USA15: Read-Out Subsystems (ROSs, ~150 PCs) and the RoI Builder.
- SDX1: Gigabit Ethernet network switches, and the following components:
  - second-level trigger (LVL2 farm, ~500 dual-CPU nodes) with the LVL2 Supervisor;
  - pROS, which stores the LVL2 output;
  - DataFlow Manager;
  - Event Builder SubFarm Inputs (SFIs, ~100);
  - Event Filter (EF, ~1600 nodes);
  - SubFarm Outputs (SFOs, ~30) with local storage.
  Event data are pulled: partial events @ ≤ 100 kHz, full events @ ~3 kHz. Messages exchanged: Regions of Interest, event data requests, requested event data, delete commands.
- CERN computer centre: data storage, event rate ~200 Hz.

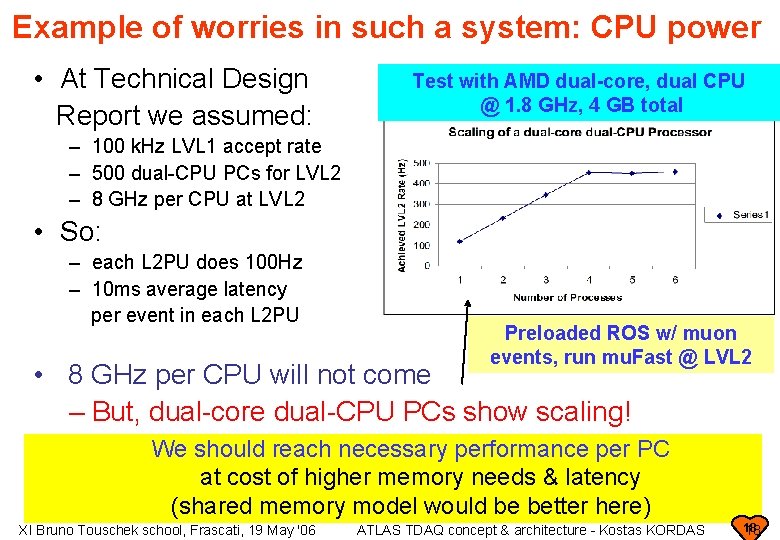

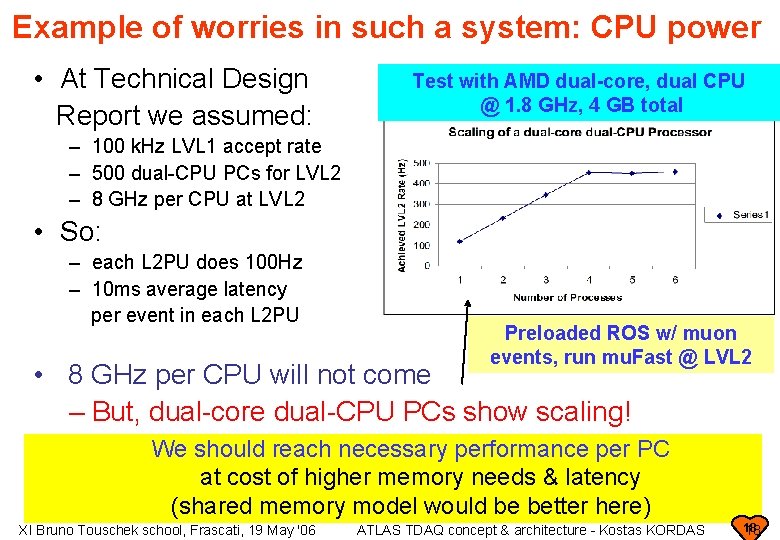

Example of worries in such a system: CPU power

- At the Technical Design Report we assumed:
  - 100 kHz LVL1 accept rate;
  - 500 dual-CPU PCs for LVL2;
  - 8 GHz per CPU at LVL2.
- So:
  - each L2PU does 100 Hz;
  - 10 ms average latency per event in each L2PU.
- 8 GHz per CPU will not come.
  - But dual-core, dual-CPU PCs show scaling!
  - We should reach the necessary performance per PC, at the cost of higher memory needs & latency (a shared-memory model would be better here).

Test (figure): AMD dual-core, dual-CPU @ 1.8 GHz, 4 GB total; ROS preloaded with muon events, running muFast at LVL2.
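The TDR argument is a short chain of divisions. The Python sketch below reproduces it. As our own extrapolation, it also computes the per-core time budget if a dual-core dual-CPU PC runs four independent L2PUs and scales perfectly; the slide reports scaling but gives no number.

```python
L1_ACCEPT_HZ = 100e3      # Level-1 accept rate
L2_PCS = 500              # dual-CPU PCs for Level 2
CPUS_PER_PC = 2

n_l2pu = L2_PCS * CPUS_PER_PC              # one L2PU per CPU
rate_per_l2pu_hz = L1_ACCEPT_HZ / n_l2pu   # events each L2PU must handle
budget_ms = 1e3 / rate_per_l2pu_hz         # average time available per event


def max_ms_per_event(cores_per_pc: int, pcs: int = L2_PCS,
                     l1_hz: float = L1_ACCEPT_HZ) -> float:
    """Per-core time budget if each core runs its own L2PU and scaling is perfect."""
    per_pc_hz = l1_hz / pcs
    return cores_per_pc * 1e3 / per_pc_hz


print(f"{rate_per_l2pu_hz:.0f} Hz per L2PU, {budget_ms:.0f} ms per event")
print(f"dual-core dual-CPU: {max_ms_per_event(4):.0f} ms per event per core")
```

With four cores per PC, each core may take ~20 ms per event instead of 10 ms. Extra cores thus compensate for the missing 8 GHz, at the price in memory and latency noted on the slide.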

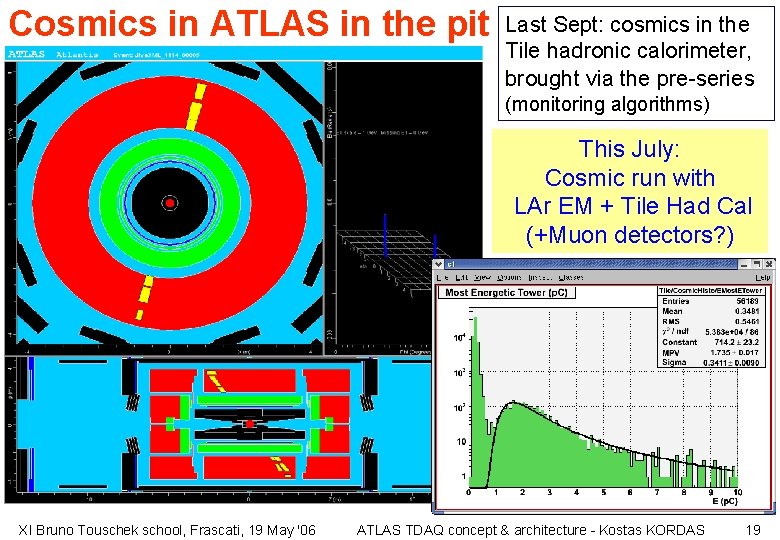

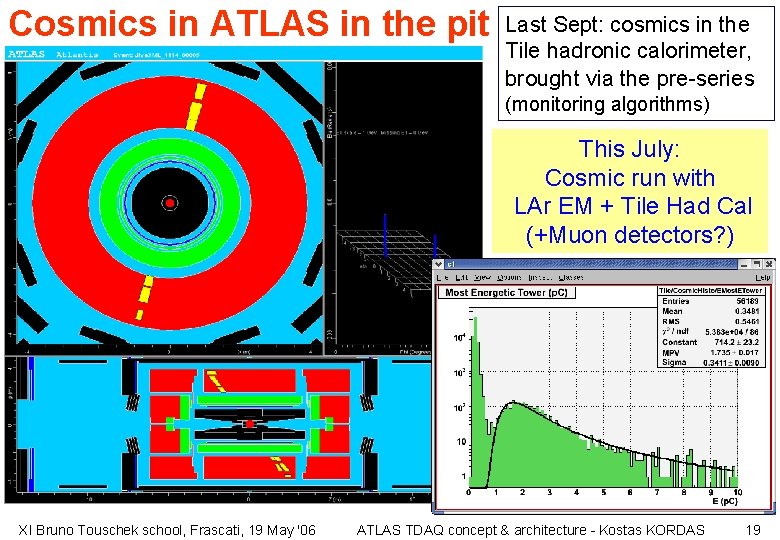

Cosmics in ATLAS in the pit

- Last September: cosmics in the Tile hadronic calorimeter, brought via the pre-series (monitoring algorithms).
- This July: cosmic run with the LAr EM + Tile hadronic calorimeters (+ muon detectors?).

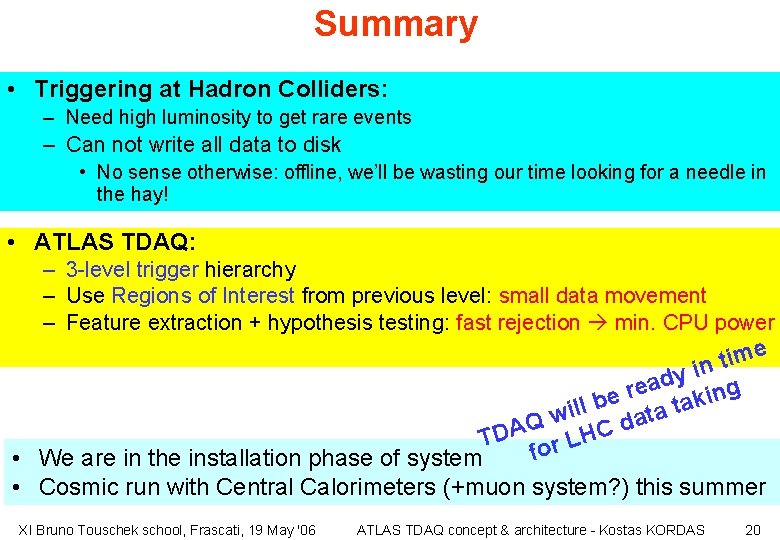

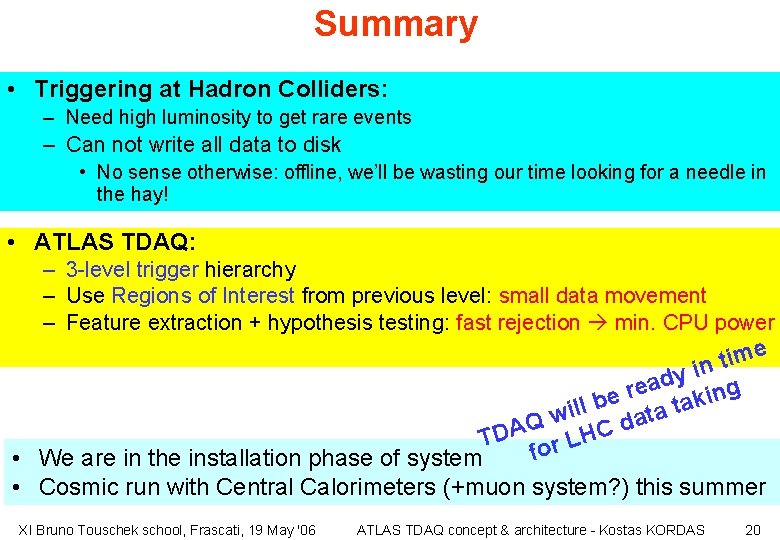

Summary

- Triggering at hadron colliders:
  - need high luminosity to get rare events;
  - cannot write all data to disk. It makes no sense otherwise: offline, we'd be wasting our time looking for a needle in a haystack!
- ATLAS TDAQ:
  - 3-level trigger hierarchy;
  - use Regions of Interest from the previous level: small data movement;
  - feature extraction + hypothesis testing: fast rejection, min. CPU power.
- We are in the installation phase of the system.
- Cosmic run with the central calorimeters (+ muon system?) this summer.

Stamp across the slide: "TDAQ will be ready in time for LHC data taking".

Thank you

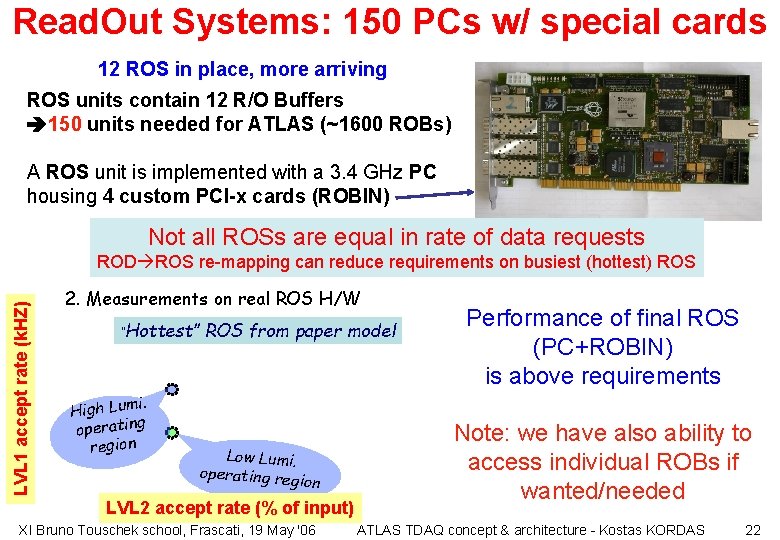

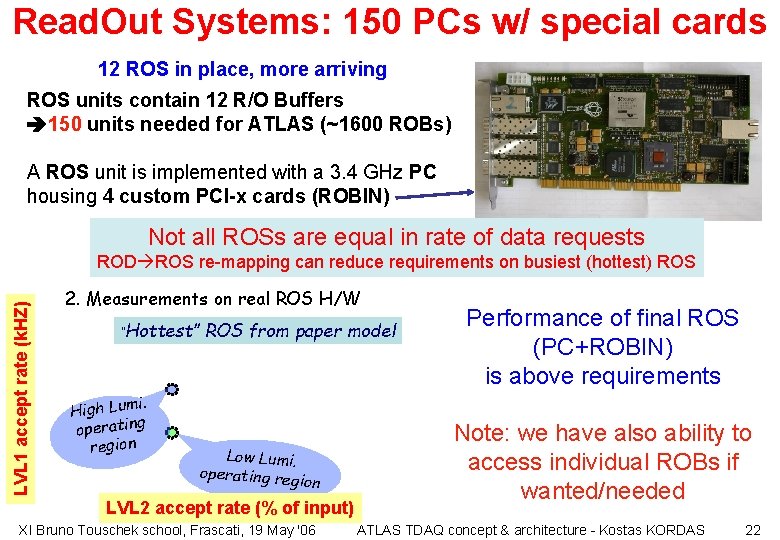

ReadOut Systems: 150 PCs with special cards

- 12 ROSs in place, more arriving.
- ROS units contain 12 R/O Buffers; 150 units are needed for ATLAS (~1600 ROBs).
- A ROS unit is implemented with a 3.4 GHz PC housing 4 custom PCI-X cards (ROBIN).
- Not all ROSs are equal in rate of data requests. ROD → ROS re-mapping can reduce the requirements on the busiest ("hottest") ROS.
- Measurements on real ROS H/W: performance of the final ROS (PC + ROBIN) is above requirements.
- Note: we also have the ability to access individual ROBs if wanted/needed.

Plot: LVL1 accept rate (kHz) vs LVL2 accept rate (% of input). It compares measurements with the "hottest" ROS from the paper model, with the low- and high-luminosity operating regions marked.
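The slide notes that ROD → ROS re-mapping can lower the load on the hottest ROS. One standard way to choose such a mapping is the greedy longest-processing-time (LPT) heuristic sketched below in Python. This is our illustration, not the procedure used for ATLAS, and the request rates are made-up numbers. It assumes one ROB input per ROD and respects the 12 ROBs per ROS unit quoted above.

```python
import heapq


def remap(rod_rates: dict, n_ros: int, robs_per_ros: int = 12) -> dict:
    """Greedy LPT assignment of RODs (one ROB input each) to ROS units.

    rod_rates: ROD name -> expected data-request rate (Hz).
    Returns ROS index -> list of ROD names, at most robs_per_ros per ROS.
    """
    if len(rod_rates) > n_ros * robs_per_ros:
        raise ValueError("more RODs than ROB inputs")
    heap = [(0.0, i) for i in range(n_ros)]   # (request load, ROS index); already a heap
    mapping = {i: [] for i in range(n_ros)}
    # Busiest RODs first, each onto the currently least-loaded ROS with a free input.
    for rod, rate in sorted(rod_rates.items(), key=lambda kv: kv[1], reverse=True):
        load, i = heapq.heappop(heap)
        mapping[i].append(rod)
        if len(mapping[i]) < robs_per_ros:      # full ROSs leave the heap for good
            heapq.heappush(heap, (load + rate, i))
    return mapping


def hottest(mapping: dict, rod_rates: dict) -> float:
    """Request rate seen by the busiest ROS."""
    return max(sum(rod_rates[r] for r in rods) for rods in mapping.values())


# Example with made-up rates: 4 RODs, 2 ROS units with 2 inputs each.
rates = {"a": 9.0, "b": 8.0, "c": 1.0, "d": 1.0}
naive = {0: ["a", "b"], 1: ["c", "d"]}
balanced = remap(rates, n_ros=2, robs_per_ros=2)
print(hottest(naive, rates), hottest(balanced, rates))
```

In the example, the in-order mapping puts both busy RODs on one ROS (17 Hz). The greedy mapping spreads them out, giving 10 Hz on the hottest ROS.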

Event Building needs

- Throughput requirements:
  - 100 kHz LVL1 accept rate;
  - 3.5% LVL2 accept rate → 3.5 kHz into the EB;
  - 1.6 MB event size → 3.5 kHz × 1.6 MB = 5600 MB/s total input.
- Network limited (fast CPUs): event building uses 60-70% of a Gbit network → ~70 MB/s into each Event Building node (SFI).
- So we need:
  - 5600 MB/s into the EB system / (70 MB/s into each EB node) → ~80 SFIs for full ATLAS;
  - when an SFI also serves the EF, its throughput decreases by ~20% → we actually need 80/0.80 = 100 SFIs.
- 6 prototypes in place, evaluation of PCs now. We expect big Event Building needs from day 1: > 50 PCs by the end of the year.
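The sizing argument above is a short calculation. A Python sketch that reproduces it follows, using the slide's numbers as defaults; the function and parameter names are ours.

```python
import math


def sfis_needed(l2_accept_hz: float = 3.5e3, event_mb: float = 1.6,
                mb_per_s_per_sfi: float = 70.0, ef_penalty: float = 0.20):
    """Return (EB input in MB/s, SFIs for event building, SFIs when also serving the EF)."""
    eb_input_mb_s = l2_accept_hz * event_mb             # 3.5 kHz x 1.6 MB
    n_eb = eb_input_mb_s / mb_per_s_per_sfi             # network-limited SFI count
    n_with_ef = n_eb / (1.0 - ef_penalty)               # ~20% less throughput per SFI
    eps = 1e-9                                          # absorb floating-point round-off
    return eb_input_mb_s, math.ceil(n_eb - eps), math.ceil(n_with_ef - eps)


l1_hz, l2_fraction = 100e3, 0.035
eb_mb_s, n_sfi, n_sfi_ef = sfis_needed(l2_accept_hz=l1_hz * l2_fraction)
print(f"{eb_mb_s:.0f} MB/s -> {n_sfi} SFIs, {n_sfi_ef} when also serving the EF")
```

Rounding up with `math.ceil` reflects that a fractional SFI is not an option. The small `eps` stops round-off in 0.035 × 100 kHz from turning 80 into 81.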

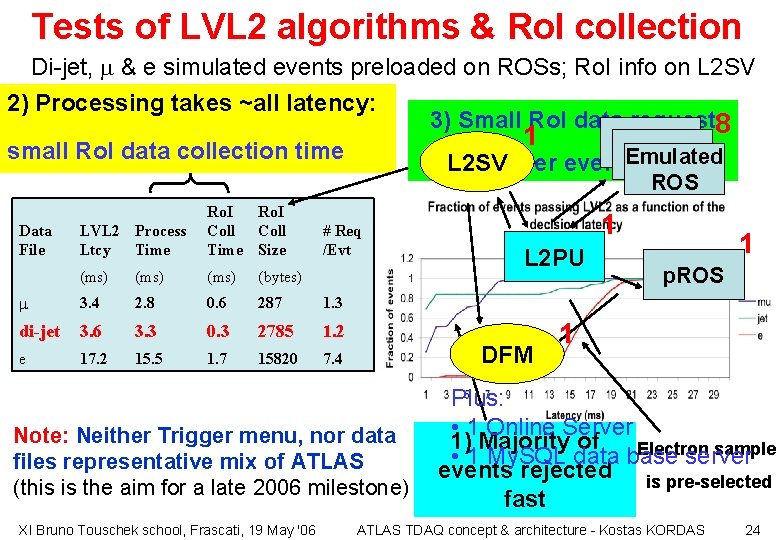

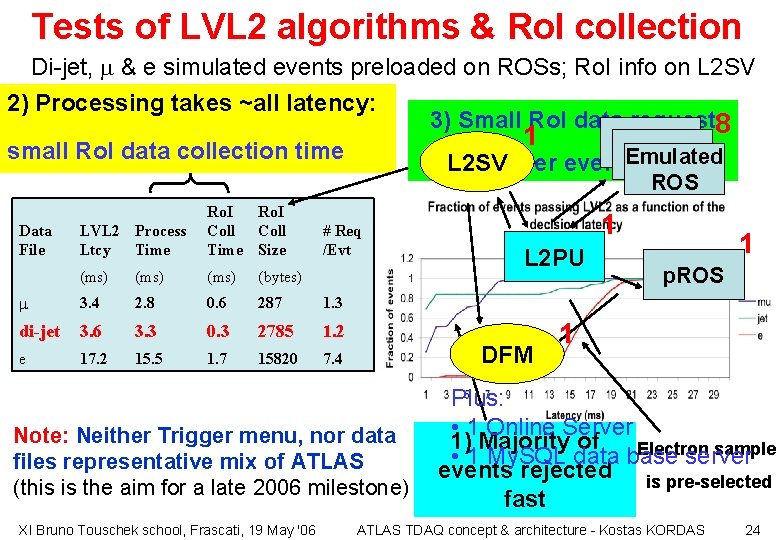

Tests of LVL2 algorithms & RoI collection

Di-jet, μ and e simulated events are preloaded on the ROSs, with RoI info on the L2SV. Test setup: 8 emulated ROSs, 1 pROS, 1 L2SV, 1 L2PU and 1 DFM, plus 1 Online Server and 1 MySQL database server.

| Data file | LVL2 latency (ms) | Process time (ms) | RoI coll. time (ms) | RoI coll. size (bytes) | # Req/Evt |
|---|---|---|---|---|---|
| μ | 3.4 | 2.8 | 0.6 | 287 | 1.3 |
| di-jet | 3.6 | 3.3 | 0.3 | 2785 | 1.2 |
| e | 17.2 | 15.5 | 1.7 | 15820 | 7.4 |

1) The majority of events are rejected fast (the electron sample is pre-selected).
2) Processing takes ~all of the latency, so RoI data collection time is small.
3) The RoI data request per event is small.

Note: neither the trigger menu nor the data files are a representative mix of ATLAS (this is the aim for a late-2006 milestone).

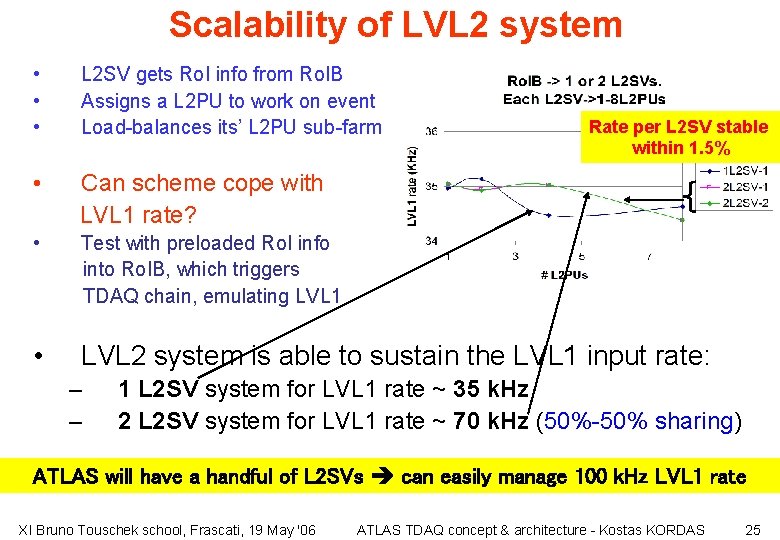

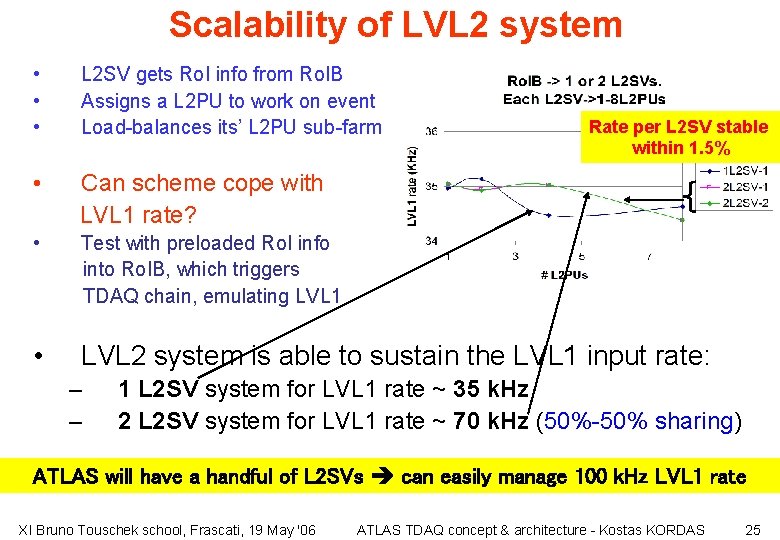

Scalability of the LVL2 system

- The L2SV gets RoI info from the RoIB, assigns an L2PU to work on the event, and load-balances its L2PU sub-farm.
- Can the scheme cope with the LVL1 rate?
- Test: RoI info is preloaded into the RoIB, which then triggers the TDAQ chain, emulating LVL1.
- The LVL2 system is able to sustain the LVL1 input rate:
  - a 1-L2SV system handles a LVL1 rate of ~35 kHz;
  - a 2-L2SV system handles a LVL1 rate of ~70 kHz (50%-50% sharing);
  - the rate per L2SV is stable within 1.5%.
- ATLAS will have a handful of L2SVs → they can easily manage a 100 kHz LVL1 rate.
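The L2SV's job of assigning events and balancing its sub-farm can be illustrated with a least-loaded policy. The Python sketch below assumes that policy; the slides do not say which algorithm the real L2SV uses. It also estimates the number of supervisors, assuming that the linear scaling measured for one and two L2SVs continues.

```python
import math


class L2Supervisor:
    """Toy L2SV: gives each event to the L2PU with the fewest events in progress."""

    def __init__(self, n_l2pu: int):
        self.in_progress = [0] * n_l2pu   # events currently being processed, per L2PU
        self.owner = {}                   # event id -> L2PU id

    def assign(self, event_id: int) -> int:
        # min() returns the lowest index among equally loaded L2PUs.
        pu = min(range(len(self.in_progress)), key=self.in_progress.__getitem__)
        self.in_progress[pu] += 1
        self.owner[event_id] = pu
        return pu

    def on_decision(self, event_id: int) -> int:
        """Called when the L2PU returns its decision; frees one slot on that L2PU."""
        pu = self.owner.pop(event_id)
        self.in_progress[pu] -= 1
        return pu


def supervisors_needed(l1_hz: float = 100e3, hz_per_l2sv: float = 35e3) -> int:
    """L2SVs needed if each sustains ~35 kHz and they scale linearly (assumption)."""
    return math.ceil(l1_hz / hz_per_l2sv)


# Example: 3 L2PUs, 4 events, then L2PU 1 finishes and gets the next event.
sv = L2Supervisor(n_l2pu=3)
first = [sv.assign(ev) for ev in range(4)]   # [0, 1, 2, 0]
sv.on_decision(1)
print(first, sv.assign(4), supervisors_needed())
```

Three supervisors would already cover 100 kHz under this linear assumption, consistent with "a handful of L2SVs".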

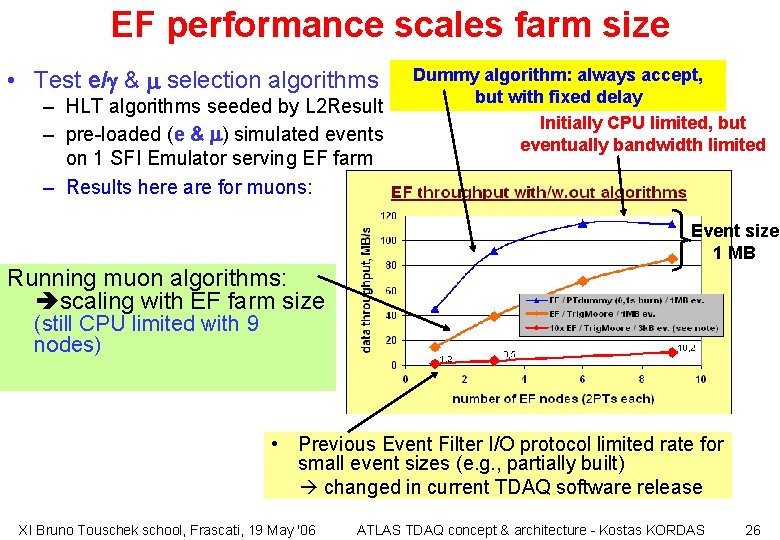

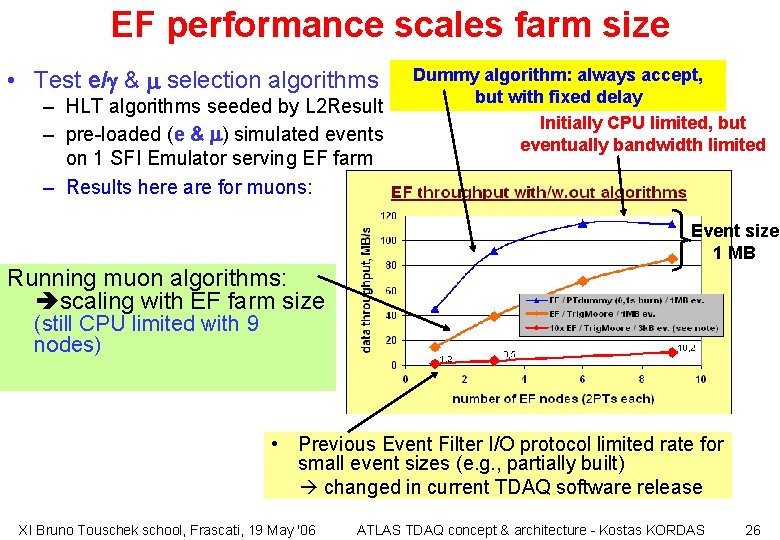

EF performance scales with farm size

- Test e/γ & μ selection algorithms:
  - HLT algorithms seeded by the L2 result;
  - pre-loaded (e & μ) simulated events on 1 SFI emulator serving the EF farm;
  - results here are for muons.
- Dummy algorithm (always accept, but with a fixed delay), event size 1 MB: initially CPU limited, but eventually bandwidth limited.
- Running muon algorithms: the rate scales with EF farm size (still CPU limited with 9 nodes).
- The previous Event Filter I/O protocol limited the rate for small event sizes (e.g., partially built events). This has been changed in the current TDAQ software release.
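The two regimes seen with the dummy algorithm (first CPU limited, then bandwidth limited) follow from a simple min() model of the farm. The Python sketch below assumes one event per node at a time, a made-up 0.1 s delay per event, and ~70 MB/s out of the single SFI emulator (the per-SFI figure from the Event Building slide). Only the 1 MB event size comes from this slide.

```python
def ef_throughput(n_nodes: int, s_per_event: float, event_mb: float,
                  sfi_mb_per_s: float):
    """Return (event rate in Hz, limiting factor) for a toy EF farm fed by one SFI."""
    cpu_limit_hz = n_nodes / s_per_event        # every node busy all the time
    io_limit_hz = sfi_mb_per_s / event_mb       # what one SFI can ship out
    if cpu_limit_hz <= io_limit_hz:
        return cpu_limit_hz, "CPU"
    return io_limit_hz, "bandwidth"


# Dummy algorithm with an assumed 0.1 s fixed delay, 1 MB events, ~70 MB/s per SFI.
for n in range(1, 10):
    rate, limit = ef_throughput(n, 0.1, 1.0, 70.0)
    print(f"{n} nodes: {rate:5.1f} Hz ({limit} limited)")
```

In this model the rate grows linearly with farm size until it hits the SFI's output bandwidth. A real muon algorithm, being slower per event, stays in the CPU-limited regime for longer.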

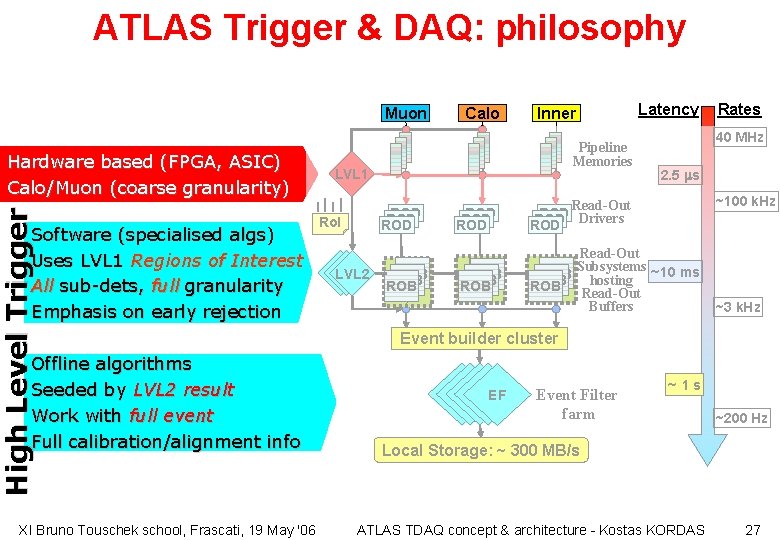

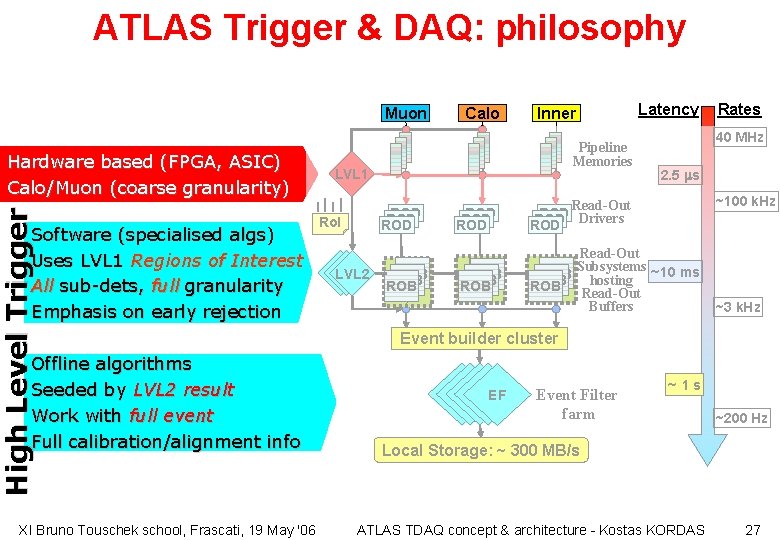

ATLAS Trigger & DAQ: philosophy

- LVL1: hardware based (FPGA, ASIC).
  - Calo/Muon data at coarse granularity.
  - 40 MHz input and 2.5 μs latency, while the data wait in pipeline memories.
  - Output ~100 kHz.
- LVL2 (High Level Trigger): software with specialised algorithms.
  - Uses LVL1 Regions of Interest; all sub-detectors at full granularity; emphasis on early rejection.
  - Data come from the Read-Out Buffers (ROBs) hosted in the Read-Out Subsystems, fed by the Read-Out Drivers (RODs).
  - ~10 ms latency; output ~3 kHz.
- Event Filter (High Level Trigger): offline algorithms.
  - Seeded by the LVL2 result; works with the full event (from the event builder cluster) and full calibration/alignment info, in the Event Filter farm.
  - ~1 s latency; output ~200 Hz.
- Local storage: ~300 MB/s.

Detectors shown: Muon, Calo, Inner.

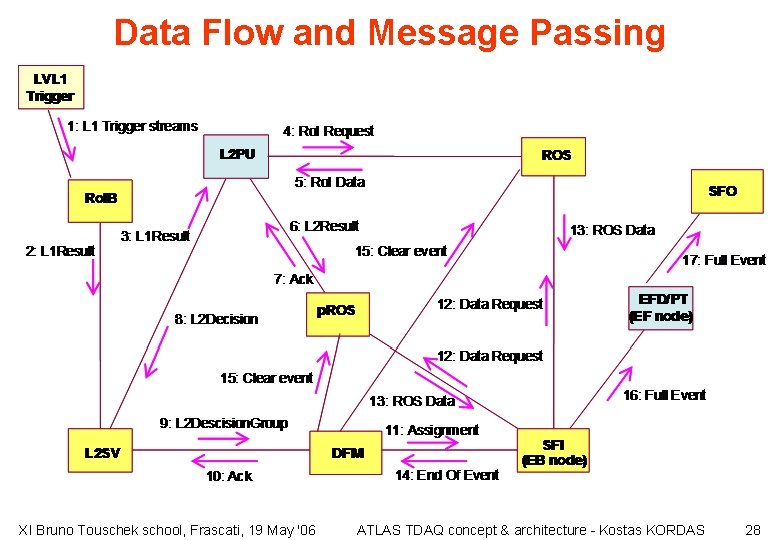

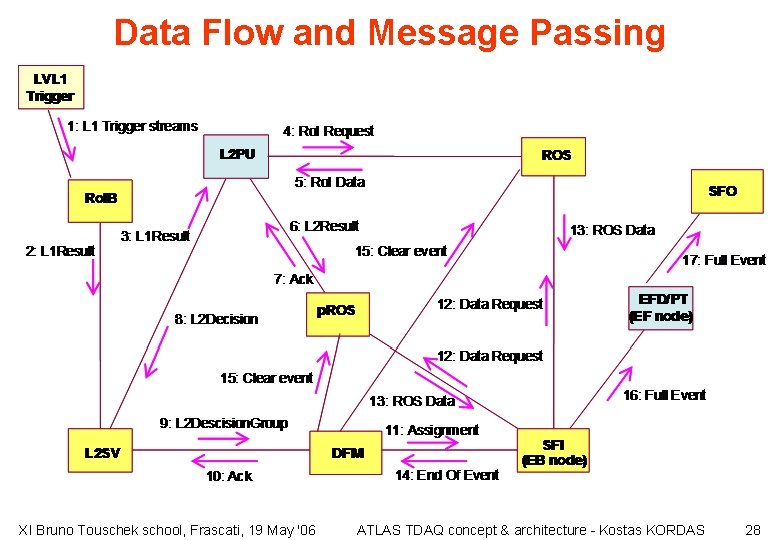

Data Flow and Message Passing

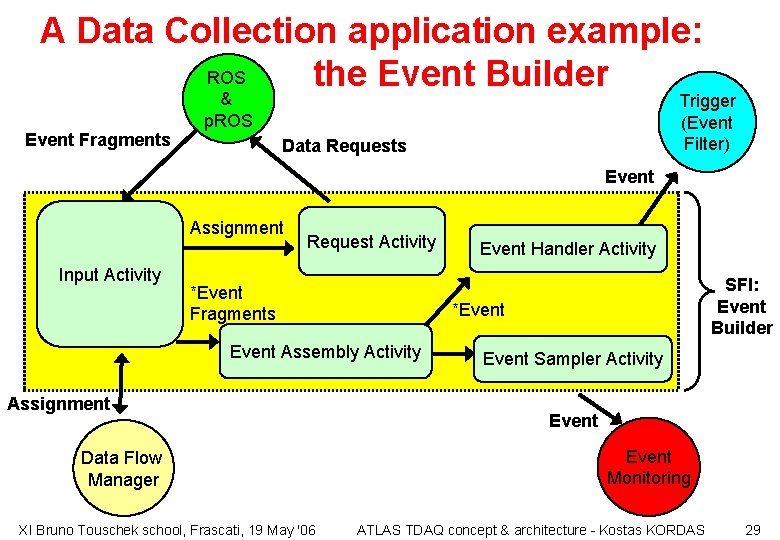

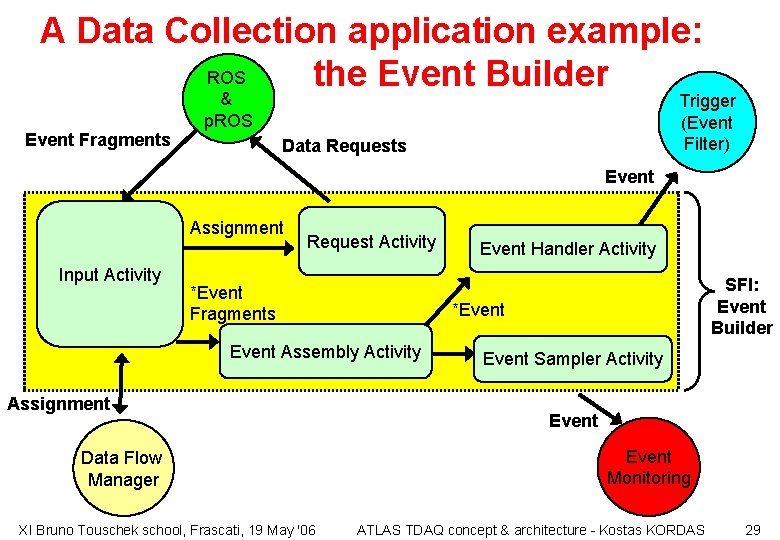

A Data Collection application example: the Event Builder

Diagram: the SFI (Event Builder) receives event assignments from the Data Flow Manager. It sends data requests to the ROSs & pROS and receives their event fragments, then passes built events to the Trigger (Event Filter) and to Event Monitoring. Its internal activities are: Input, Request, Event Assembly, Assignment, Event Handler and Event Sampler.