ATLAS Trigger Configuration and Menus (and some gauge couplings)

- Slides: 7

ATLAS Trigger Configuration and Menus (and some gauge couplings)

Paul Bell

Summary of 2009

1. Introduction to the ATLAS Trigger Menus and Configuration System
2. Work done/status report
3. Quartic gauge couplings

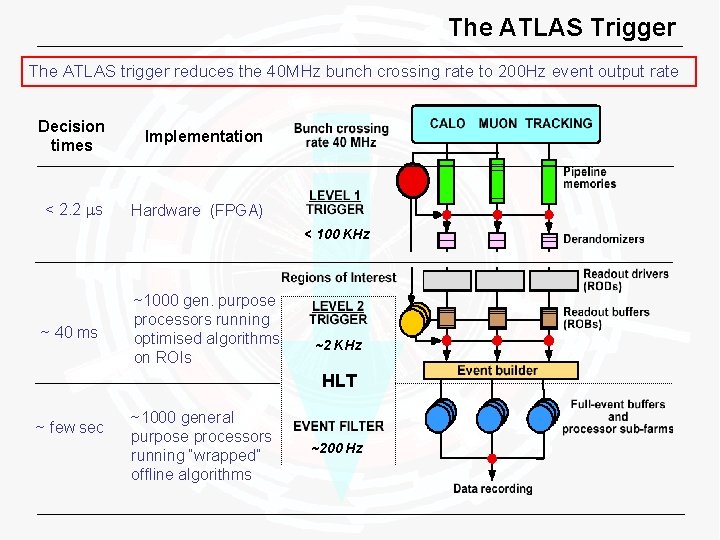

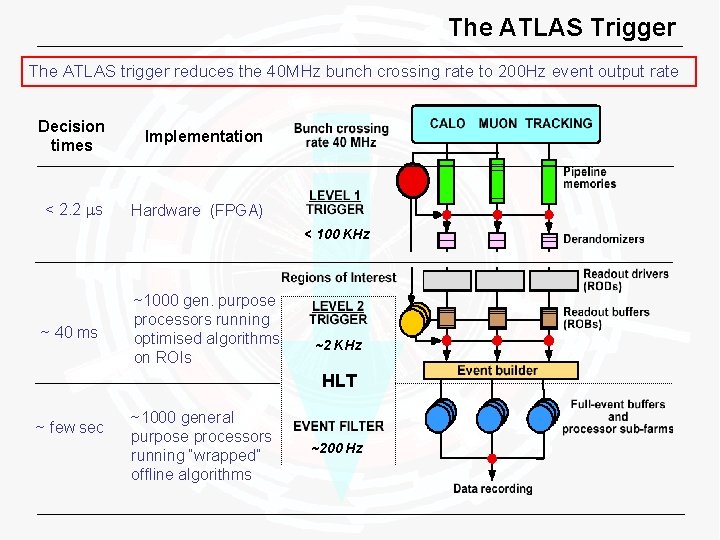

The ATLAS Trigger

The ATLAS trigger reduces the 40 MHz bunch crossing rate to a 200 Hz event output rate.

| Level | Decision time | Implementation | Output rate |
|---|---|---|---|
| Level 1 | < 2.2 μs | Hardware (FPGA) | < 100 kHz |
| Level 2 (HLT) | ~40 ms | ~1000 general purpose processors running optimised algorithms on RoIs | ~2 kHz |
| Event Filter (HLT) | ~few s | ~1000 general purpose processors running “wrapped” offline algorithms | ~200 Hz |
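The rates quoted on this slide imply a rejection factor at each level. The short Python sketch below only redoes that arithmetic; the stage labels and the helper name `rejection_factors` are illustrative, and the Level 1 figure is the quoted upper limit rather than a measured rate.

```python
# Rates quoted on the slide, in Hz. Stage labels are illustrative.
RATES_HZ = [
    ("bunch crossings", 40e6),
    ("Level 1 output", 100e3),      # quoted as an upper limit (< 100 kHz)
    ("Level 2 output", 2e3),
    ("Event Filter output", 200.0),
]


def rejection_factors(rates):
    """Return (stage name, input rate / output rate) for each consecutive pair."""
    return [
        (name_out, rate_in / rate_out)
        for (_, rate_in), (name_out, rate_out) in zip(rates, rates[1:])
    ]


factors = rejection_factors(RATES_HZ)
overall = RATES_HZ[0][1] / RATES_HZ[-1][1]

for name, factor in factors:
    print(f"{name}: keeps about 1 in {factor:.0f}")
print(f"overall: keeps about 1 in {overall:.0f}")
```

With these numbers Level 1 keeps roughly 1 crossing in 400, Level 2 about 1 event in 50, and the Event Filter about 1 in 10, for an overall factor of 200 000.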

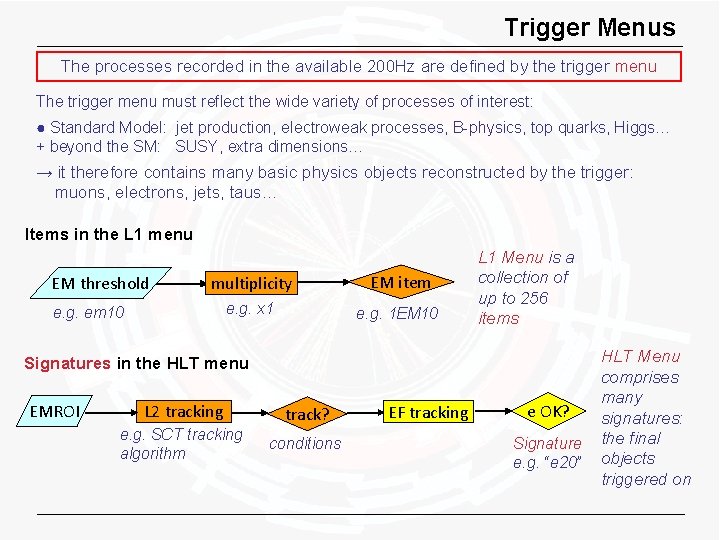

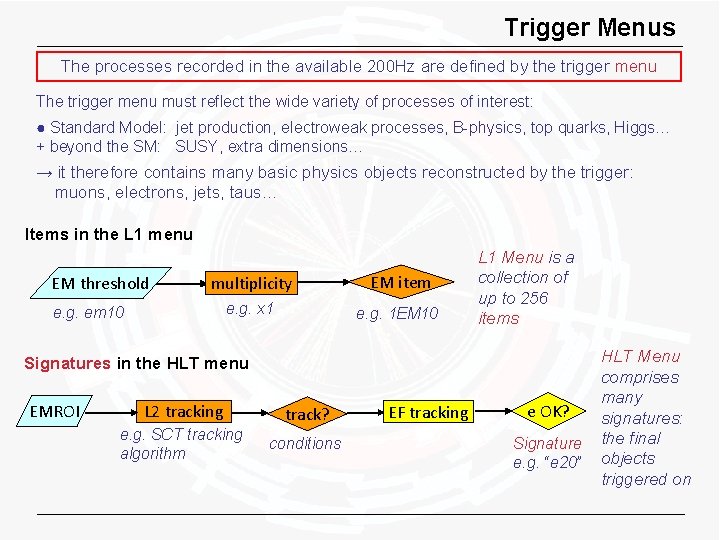

Trigger Menus

The processes recorded in the available 200 Hz are defined by the trigger menu. The trigger menu must reflect the wide variety of processes of interest:

- Standard Model: jet production, electroweak processes, B-physics, top quarks, Higgs…
- Beyond the SM: SUSY, extra dimensions…

It therefore contains many basic physics objects reconstructed by the trigger: muons, electrons, jets, taus…

Items in the L1 menu: an item combines a threshold (e.g. em10) with a multiplicity (e.g. x1) to give an EM item (e.g. 1EM10). The L1 menu is a collection of up to 256 items.

Signatures in the HLT menu: starting from an EM RoI, an L2 tracking algorithm (e.g. SCT tracking) runs and its conditions are checked (“track?”); EF tracking then runs and is checked (“e OK?”), giving a signature, e.g. “e20”. The HLT menu comprises many signatures: the final objects triggered on.
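The L1 item structure on this slide (threshold plus multiplicity, at most 256 items per menu) can be sketched as a small data structure. This is a toy model in Python, not the ATLAS menu framework: the classes `L1Item` and `L1Menu` and the naming rule are assumptions chosen only to reproduce the slide's example, 1EM10.

```python
from dataclasses import dataclass

MAX_L1_ITEMS = 256  # limit quoted on the slide


@dataclass(frozen=True)
class L1Item:
    """Toy L1 item: a threshold name plus a required multiplicity."""
    threshold: str      # e.g. "em10"
    multiplicity: int   # e.g. 1

    @property
    def name(self) -> str:
        # Assumed naming rule, matching the slide's example "1EM10".
        return f"{self.multiplicity}{self.threshold.upper()}"


class L1Menu:
    """Toy L1 menu: a named collection of at most MAX_L1_ITEMS items."""

    def __init__(self):
        self._items = {}

    def add(self, item: L1Item) -> None:
        is_new = item.name not in self._items
        if is_new and len(self._items) >= MAX_L1_ITEMS:
            raise ValueError(f"L1 menu is full ({MAX_L1_ITEMS} items)")
        self._items[item.name] = item

    def __len__(self) -> int:
        return len(self._items)

    def names(self):
        return sorted(self._items)


example_menu = L1Menu()
example_menu.add(L1Item("em10", 1))
print(example_menu.names())
```

Keying the menu by item name makes adding the same item twice harmless, while a genuinely new item beyond the limit is rejected.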

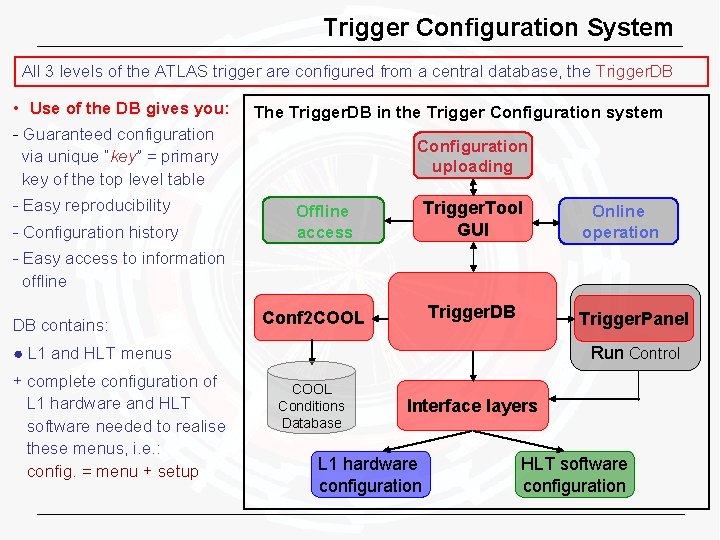

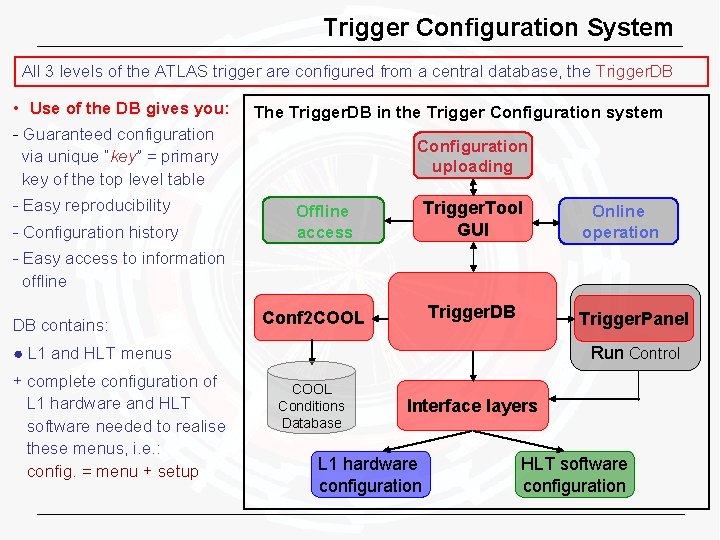

Trigger Configuration System

All 3 levels of the ATLAS trigger are configured from a central database, the TriggerDB.

- Use of the DB gives you:
  - Guaranteed configuration via a unique “key” (the primary key of the top-level table)
  - Easy reproducibility
  - Configuration history
  - Easy access to information offline
- The DB contains the L1 and HLT menus plus the complete configuration of the L1 hardware and HLT software needed to realise these menus, i.e. config. = menu + setup

Diagram: The TriggerDB in the Trigger Configuration system. Labels: configuration uploading, offline access, online operation, TriggerTool GUI, TriggerPanel, Run Control, TriggerDB, Conf2COOL, COOL Conditions Database, interface layers, L1 hardware configuration, HLT software configuration.
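The idea of a configuration identified by a single key can be illustrated with a toy database. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the table name `top_level`, its columns, and the menu and setup names are hypothetical and do not reflect the real TriggerDB schema.

```python
import sqlite3

COLUMNS = ("l1_menu", "hlt_menu", "setup")


def create_toy_db():
    """Create an in-memory database with a single hypothetical top-level table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE top_level ("
        " config_key INTEGER PRIMARY KEY,"   # the unique configuration key
        " l1_menu TEXT NOT NULL,"
        " hlt_menu TEXT NOT NULL,"
        " setup TEXT NOT NULL)"
    )
    return conn


def upload(conn, l1_menu, hlt_menu, setup):
    """Store one configuration (menu + setup) and return its new key."""
    cur = conn.execute(
        "INSERT INTO top_level (l1_menu, hlt_menu, setup) VALUES (?, ?, ?)",
        (l1_menu, hlt_menu, setup),
    )
    conn.commit()
    return cur.lastrowid


def load(conn, key):
    """Return the configuration stored under the given key."""
    row = conn.execute(
        "SELECT l1_menu, hlt_menu, setup FROM top_level WHERE config_key = ?",
        (key,),
    ).fetchone()
    if row is None:
        raise KeyError(key)
    return dict(zip(COLUMNS, row))


conn = create_toy_db()
key_a = upload(conn, "toy_l1_menu_v1", "toy_hlt_menu_v1", "toy_setup_v1")
key_b = upload(conn, "toy_l1_menu_v2", "toy_hlt_menu_v1", "toy_setup_v1")
print(key_a, load(conn, key_a))
print(key_b, load(conn, key_b))
```

Because every upload creates a new row rather than overwriting an old one, recording the key alongside a run is enough to reproduce its configuration later, and the table itself acts as the configuration history.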

Current Activities and Status

- The contents of the menus are managed by the trigger menu group
- A menu is deployed online thanks to the trigger configuration system
- The 5 “menu experts” implement requests for certain triggers from the physics groups and create complete menus (Cosmics2009, InitialBeam, Lumi1E31, etc.). I spent a lot of time standardising the Python framework in which we do this
- The final step in preparing a menu is to “upload” it to the trigger database
- The menu experts then follow and understand the performance of the menu online and take decisions to control the trigger rates (lots of time at Point 1)
- Status for first beams:
  - Given the luminosity and number of filled bunches, the trigger was not needed for online rejection of events (though this was demonstrated)
  - The role of the High Level Trigger was to send events from L1 to the appropriate stream according to their L1 trigger type (L1Calo, Muons, etc.)
  - Highest “physics” rate seen was from the Minimum Bias Trigger Scintillators (MBTS), around 100 Hz
  - Remaining bandwidth filled with zero bias events from Beam Pickup detectors
  - More complex menus exercised by rerunning the HLT offline
- For 2010, menus for higher luminosity are prepared, and we will see an evolution towards ever higher online rejection as bandwidth is used up

Current Activities and Status

- Continued co-convening the ATLAS Trigger Configuration group (~10 people), responsible for development, maintenance and support of the configuration system
- “Online” developments during 2009 have included:
  - ability to update L1, HLT and express stream prescales during a run
  - continued improvements to graphical user interfaces (much feedback)
- “Offline”, I “took a leading role” in extending the system for use in MC production:
  - Established a dedicated “MC TriggerDB” for MC-specific configurations
  - Extended the interface software to allow configuration of offline jobs from the DB (for example the Level 1 simulation)
  - Integrated with the wider ATLAS core software to allow the choice of DB configuration to be specified in the ATLAS production scripts
  - Set up the mechanism to make the data available on the grid (T2 production sites)
  - Established a validation procedure
  - ⇒ DB-based configuration used since MC09 production
  - ⇒ Trigger Configuration group now responsible for updating the MC DB as required
- The last few months have mainly been spent dealing with bugs/feature requests, e.g. from the menu group
- Much development still remains: e.g. how to retrospectively simulate the trigger for some period of data? One proposal is to simulate exactly the trigger conditions: we have the data in the DB after all, though this needs a whole new layer of software and tools. TBC…
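As background to the prescale updates mentioned on this slide: a prescale of N means that only about 1 in N events that would otherwise pass a trigger is kept. The Python sketch below is a simple counter-based illustration of changing a prescale mid-run; the class `Prescaler` is hypothetical, and the actual ATLAS prescale mechanism may work differently.

```python
class Prescaler:
    """Toy counter-based prescale: keep every N-th candidate event."""

    def __init__(self, prescale: int):
        self._count = 0
        self.set_prescale(prescale)

    def set_prescale(self, prescale: int) -> None:
        """Change the prescale; allowed at any time, e.g. during a run."""
        if prescale < 1:
            raise ValueError("prescale must be >= 1")
        self.prescale = prescale

    def accept(self) -> bool:
        """Return True for the events that survive the prescale."""
        self._count += 1
        if self._count >= self.prescale:
            self._count = 0
            return True
        return False


# Illustrative use: tighten the prescale partway through a toy "run".
ps = Prescaler(10)
kept_before = sum(ps.accept() for _ in range(1000))
ps.set_prescale(100)
kept_after = sum(ps.accept() for _ in range(1000))
print(kept_before, kept_after)
```

Raising the prescale is one way to bring a trigger's rate down without removing it from the menu.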

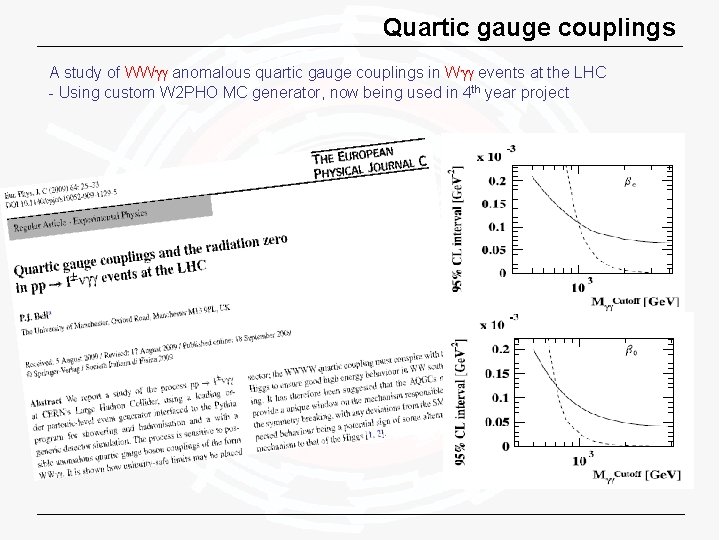

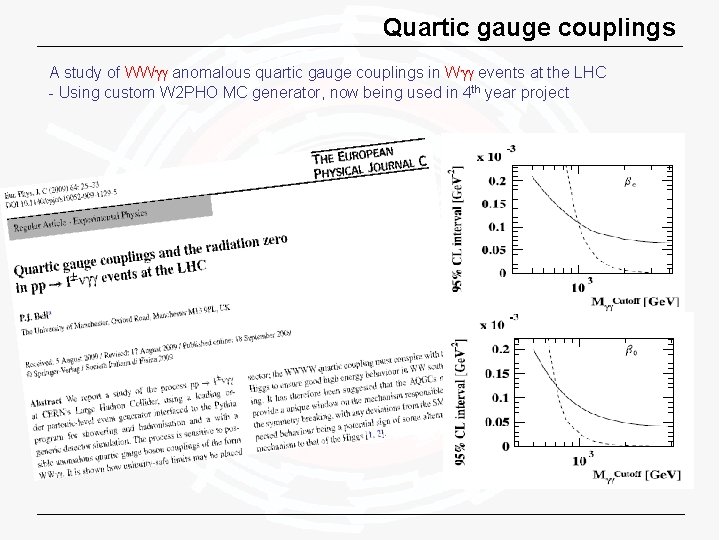

Quartic gauge couplings

A study of WWγγ anomalous quartic gauge couplings in Wγγ events at the LHC

- Using the custom W2PHO MC generator, now being used in a 4th-year project