Association Rule Mining Instructor Qiang Yang Thanks Jiawei

- Slides: 35

Association Rule Mining

Instructor: Qiang Yang

Thanks: Jiawei Han and Jian Pei

Frequent-pattern mining methods

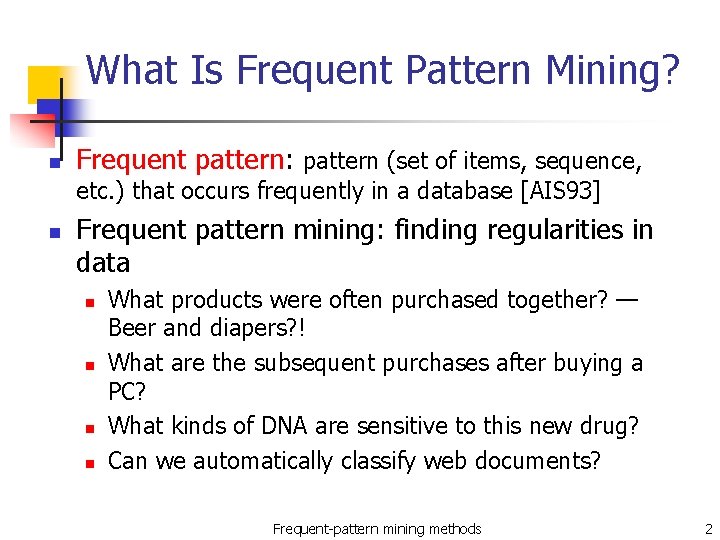

What Is Frequent Pattern Mining?

- Frequent pattern: a pattern (set of items, sequence, etc.) that occurs frequently in a database [AIS93]
- Frequent pattern mining: finding regularities in data
  - What products were often purchased together? Beer and diapers?!
  - What are the subsequent purchases after buying a PC?
  - What kinds of DNA are sensitive to this new drug?
  - Can we automatically classify web documents?

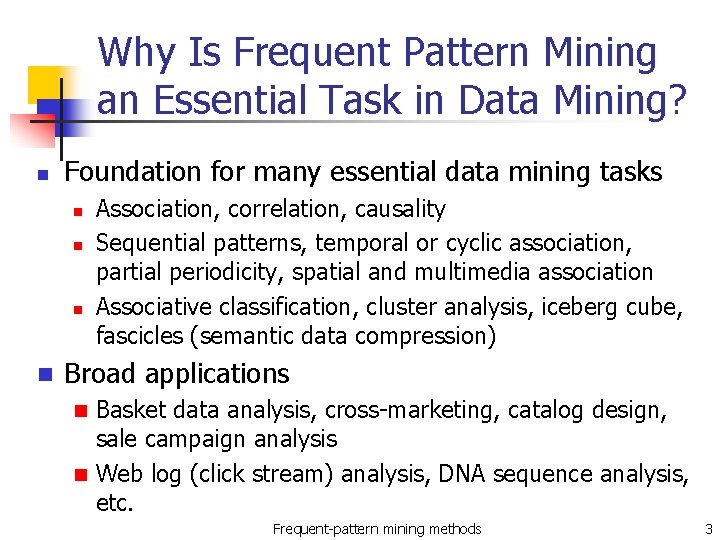

Why Is Frequent Pattern Mining an Essential Task in Data Mining?

- Foundation for many essential data mining tasks
  - Association, correlation, causality
  - Sequential patterns, temporal or cyclic association, partial periodicity, spatial and multimedia association
  - Associative classification, cluster analysis, iceberg cube, fascicles (semantic data compression)
- Broad applications
  - Basket data analysis, cross-marketing, catalog design, sale campaign analysis
  - Web log (click stream) analysis, DNA sequence analysis, etc.

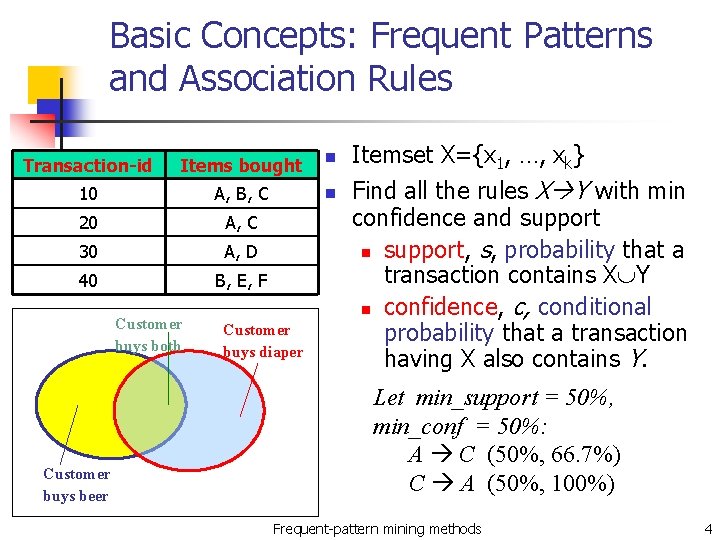

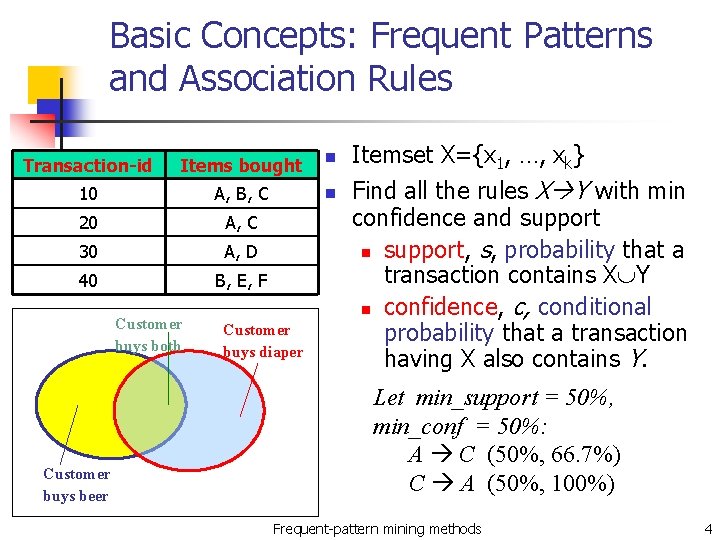

Basic Concepts: Frequent Patterns and Association Rules

| Transaction-id | Items bought |
|---|---|
| 10 | A, B, C |
| 20 | A, C |
| 30 | A, D |
| 40 | B, E, F |

(Figure: Venn diagram of "Customer buys beer" and "Customer buys diaper", with the overlap labelled "Customer buys both".)

- Itemset X = {x_1, …, x_k}
- Find all the rules X ⇒ Y with minimum confidence and support
  - support, s: probability that a transaction contains X ∪ Y
  - confidence, c: conditional probability that a transaction having X also contains Y
- Let min_support = 50%, min_conf = 50%:
  - A ⇒ C (50%, 66.7%)
  - C ⇒ A (50%, 100%)
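The support and confidence definitions above can be checked directly on the four-transaction table. This is a minimal Python sketch; `support` and `confidence` are hypothetical helper names, and exact fractions are used so that 2/3 is only rounded when printed.

```python
from fractions import Fraction

# Transaction-id -> items bought, copied from the table above.
TDB = {
    10: {"A", "B", "C"},
    20: {"A", "C"},
    30: {"A", "D"},
    40: {"B", "E", "F"},
}


def support(itemset, db):
    """Fraction of transactions that contain every item of `itemset`."""
    hits = sum(1 for items in db.values() if set(itemset) <= items)
    return Fraction(hits, len(db))


def confidence(lhs, rhs, db):
    """Pr(rhs | lhs) = support(lhs ∪ rhs) / support(lhs)."""
    return support(set(lhs) | set(rhs), db) / support(lhs, db)


for lhs, rhs in [({"A"}, {"C"}), ({"C"}, {"A"})]:
    s = support(lhs | rhs, TDB)
    c = confidence(lhs, rhs, TDB)
    print(f"{''.join(sorted(lhs))} => {''.join(sorted(rhs))}: "
          f"support={float(s):.1%}, confidence={float(c):.1%}")
```

This prints 50.0% / 66.7% for A ⇒ C and 50.0% / 100.0% for C ⇒ A, matching the two rules listed.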

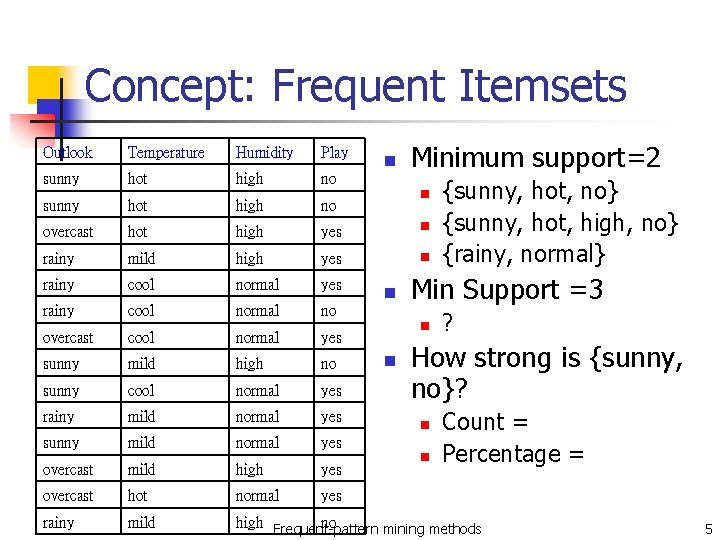

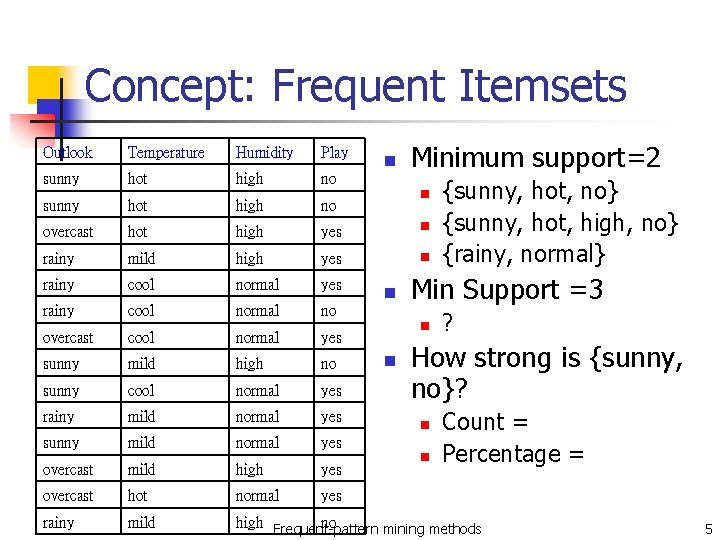

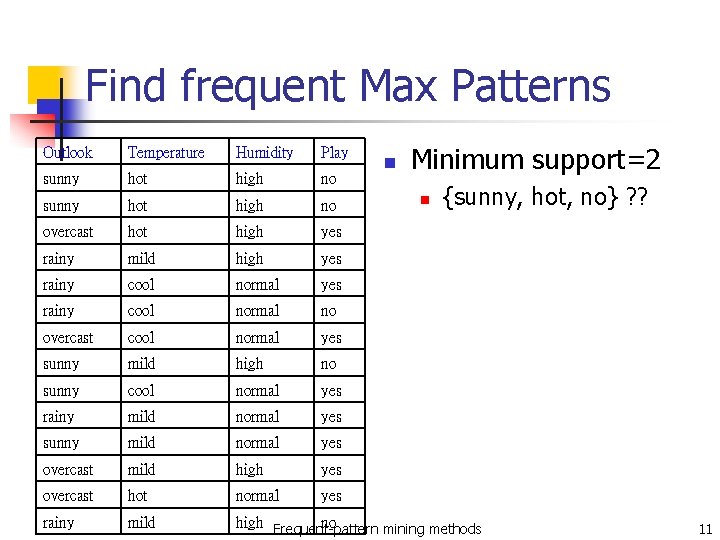

Concept: Frequent Itemsets

| Outlook | Temperature | Humidity | Play |
|---|---|---|---|
| sunny | hot | high | no |
| sunny | hot | high | no |
| overcast | hot | high | yes |
| rainy | mild | high | yes |
| rainy | cool | normal | yes |
| rainy | cool | normal | no |
| overcast | cool | normal | yes |
| sunny | mild | high | no |
| sunny | cool | normal | yes |
| rainy | mild | normal | yes |
| sunny | mild | normal | yes |
| overcast | mild | high | yes |
| overcast | hot | normal | yes |
| rainy | mild | high | no |

- Minimum support = 2
  - {sunny, hot, no}
  - {sunny, hot, high, no}
- Minimum support = 3
  - {rainy, normal}
  - ?
- How strong is {sunny, no}?
  - Count =
  - Percentage =
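Counting an itemset is a subset test per record. The sketch below assumes the 14-record weather table in which the record (sunny, hot, high, no) occurs twice, as the support count of 2 for {sunny, hot, no} on the next slide requires. Each record is treated as a set of values, which works here only because no value is shared between attributes; `count` is a hypothetical helper.

```python
# The 14 weather records (Outlook, Temperature, Humidity, Play), one per line.
# Assumption: the (sunny, hot, high, no) record appears twice.
DATA = """
sunny hot high no
sunny hot high no
overcast hot high yes
rainy mild high yes
rainy cool normal yes
rainy cool normal no
overcast cool normal yes
sunny mild high no
sunny cool normal yes
rainy mild normal yes
sunny mild normal yes
overcast mild high yes
overcast hot normal yes
rainy mild high no
"""
# Assumption: attribute values are distinct across attributes, so a set of values is enough.
ROWS = [frozenset(line.split()) for line in DATA.strip().splitlines()]


def count(itemset):
    """Support count: number of records containing every value in `itemset`."""
    return sum(1 for row in ROWS if set(itemset) <= row)


for itemset in [{"sunny", "hot", "no"}, {"sunny", "hot", "high", "no"},
                {"rainy", "normal"}, {"sunny", "no"}]:
    n = count(itemset)
    print(sorted(itemset), n, f"{n / len(ROWS):.1%}")
```

Under this assumption {sunny, no} has a count of 3, i.e. 3/14 ≈ 21.4%.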

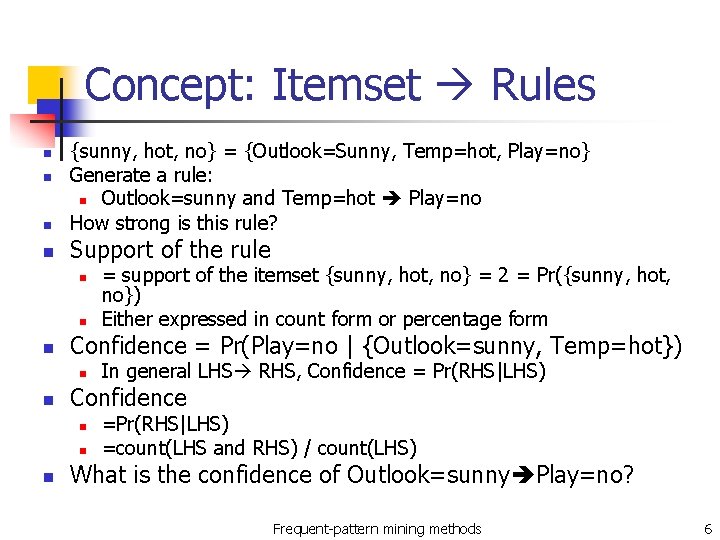

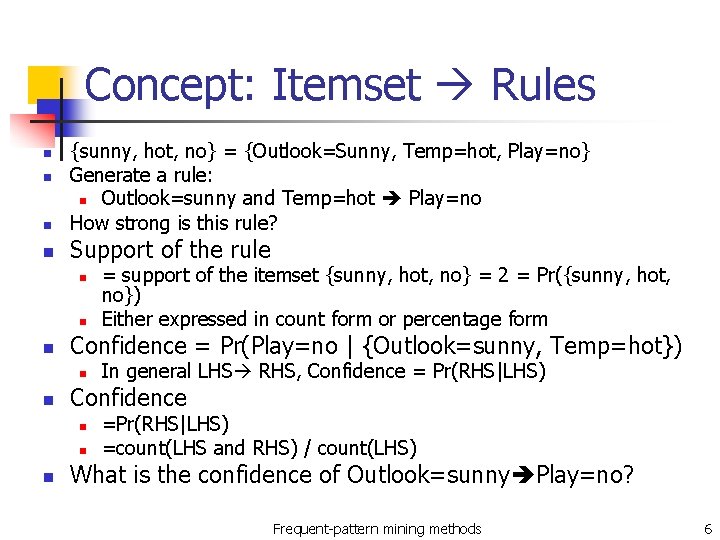

Concept: Itemset Rules

- {sunny, hot, no} = {Outlook=sunny, Temp=hot, Play=no}
- Generate a rule:
  - Outlook=sunny and Temp=hot ⇒ Play=no
- How strong is this rule?
  - Support of the rule = support of the itemset {sunny, hot, no} = 2 = Pr({sunny, hot, no})
    - Either expressed in count form or percentage form
  - Confidence = Pr(Play=no | {Outlook=sunny, Temp=hot})
- In general, for LHS ⇒ RHS:

  $$\text{Confidence} = \Pr(\text{RHS} \mid \text{LHS}) = \frac{\text{count}(\text{LHS and RHS})}{\text{count}(\text{LHS})}$$

- What is the confidence of Outlook=sunny ⇒ Play=no?
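The confidence formula reduces to two support counts. A minimal sketch over the same assumed 14-record weather table (with (sunny, hot, high, no) occurring twice); `count` and `rule_confidence` are hypothetical names.

```python
# Assumption: 14-record weather table, with (sunny, hot, high, no) listed twice.
DATA = """
sunny hot high no
sunny hot high no
overcast hot high yes
rainy mild high yes
rainy cool normal yes
rainy cool normal no
overcast cool normal yes
sunny mild high no
sunny cool normal yes
rainy mild normal yes
sunny mild normal yes
overcast mild high yes
overcast hot normal yes
rainy mild high no
"""
ROWS = [frozenset(line.split()) for line in DATA.strip().splitlines()]


def count(itemset):
    """Number of records containing every value in `itemset`."""
    return sum(1 for row in ROWS if set(itemset) <= row)


def rule_confidence(lhs, rhs):
    """Confidence of LHS => RHS = count(LHS and RHS) / count(LHS)."""
    return count(set(lhs) | set(rhs)) / count(lhs)


print(rule_confidence({"sunny", "hot"}, {"no"}))  # 2 / 2
print(rule_confidence({"sunny"}, {"no"}))         # 3 / 5
```

With this table, Outlook=sunny ∧ Temp=hot ⇒ Play=no has confidence 2/2 = 1.0, and Outlook=sunny ⇒ Play=no has confidence 3/5 = 0.6.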

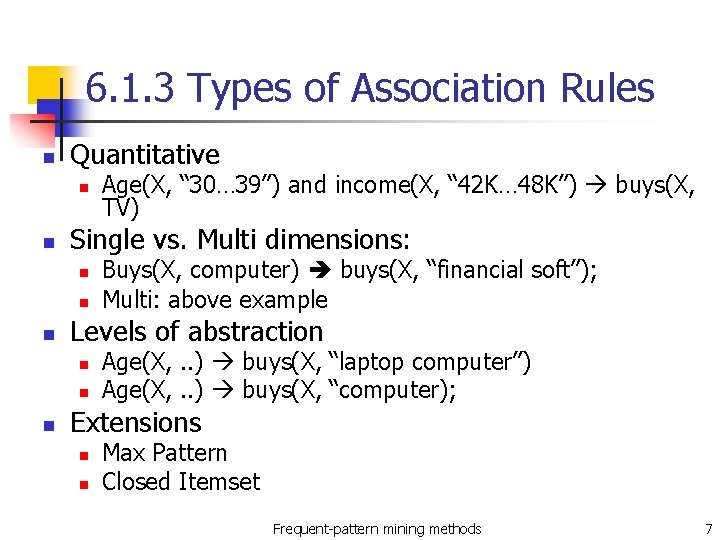

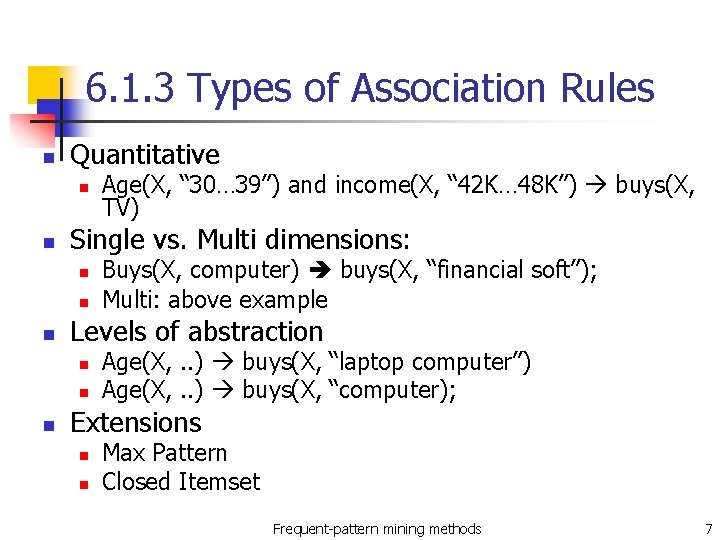

6.1.3 Types of Association Rules

- Quantitative
  - age(X, "30…39") and income(X, "42K…48K") ⇒ buys(X, "TV")
- Single vs. multiple dimensions:
  - Single: buys(X, "computer") ⇒ buys(X, "financial software")
  - Multi: the example above
- Levels of abstraction
  - age(X, …) ⇒ buys(X, "laptop computer")
  - age(X, …) ⇒ buys(X, "computer")
- Extensions
  - Max pattern
  - Closed itemset

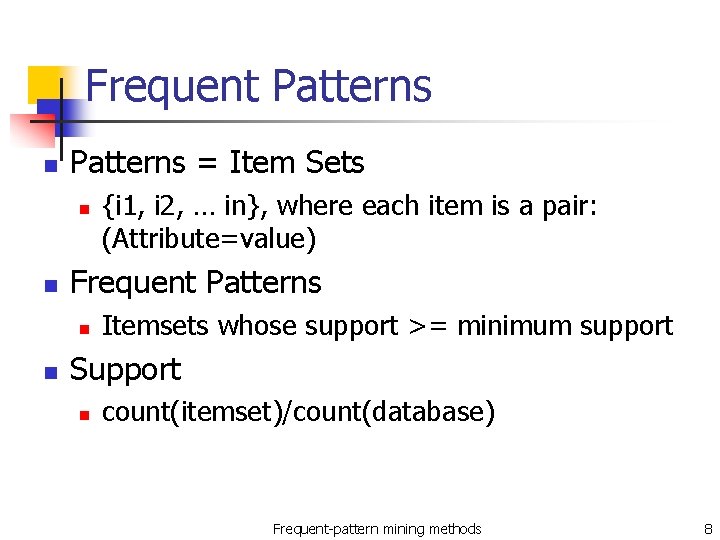

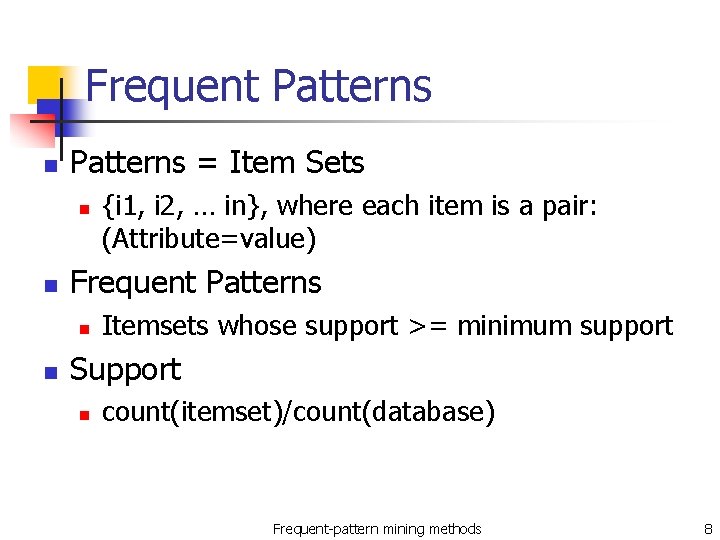

Frequent Patterns

- Patterns = item sets
  - {i_1, i_2, …, i_n}, where each item is a pair (Attribute = value)
- Frequent patterns
  - Itemsets whose support ≥ minimum support
- Support
  - count(itemset) / count(database)

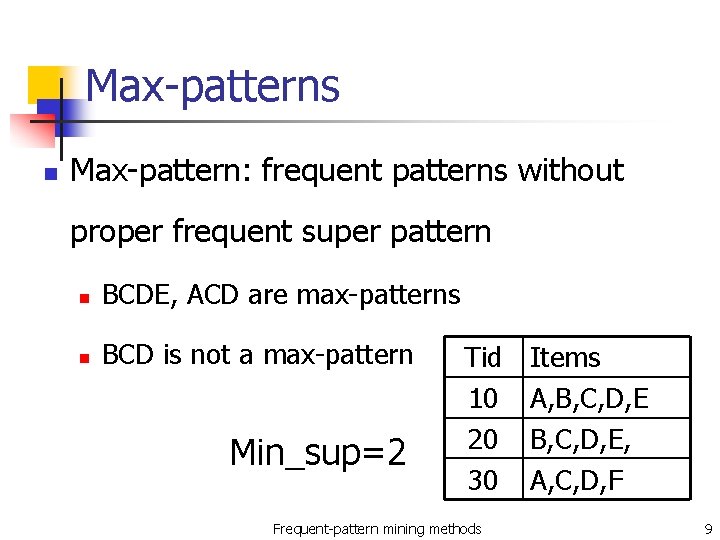

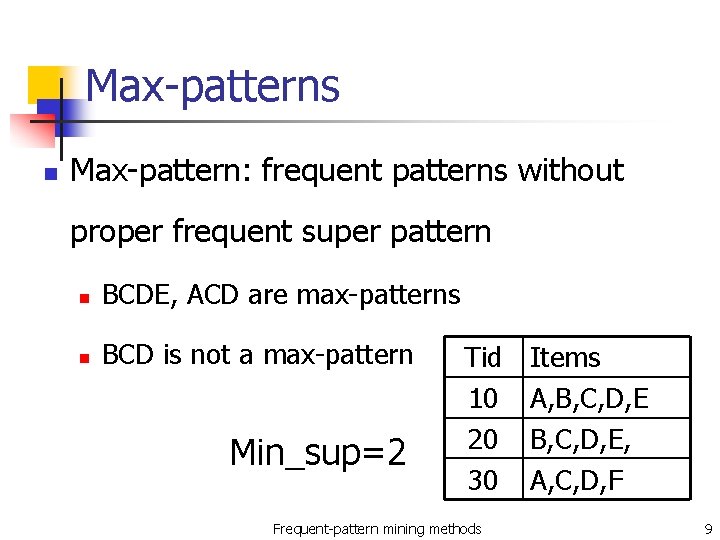

Max-patterns

- Max-pattern: a frequent pattern without a proper frequent super-pattern
  - BCDE and ACD are max-patterns
  - BCD is not a max-pattern
- Min_sup = 2

| Tid | Items |
|---|---|
| 10 | A, B, C, D, E |
| 20 | B, C, D, E |
| 30 | A, C, D, F |
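On a database this small, max-patterns can be found by brute force: count every subset of every transaction, keep the frequent ones, and discard any that has a proper frequent superset. This sketch is exponential in transaction length and only meant for toy data. It assumes transaction 20 is exactly {B, C, D, E}; BCDE being frequent at min_sup = 2 requires it to contain at least those items. The function names are illustrative.

```python
from itertools import combinations

# Transactions 10, 20, 30. Assumption: transaction 20 is exactly {B, C, D, E}.
TDB = [{"A", "B", "C", "D", "E"}, {"B", "C", "D", "E"}, {"A", "C", "D", "F"}]
MIN_SUP = 2


def frequent_itemsets(db, min_sup):
    """Brute force: each non-empty subset of each transaction is counted once."""
    counts = {}
    for t in db:
        for k in range(1, len(t) + 1):
            for combo in combinations(sorted(t), k):
                key = frozenset(combo)
                counts[key] = counts.get(key, 0) + 1
    return {s: n for s, n in counts.items() if n >= min_sup}


def max_patterns(frequent):
    """Frequent itemsets that have no proper frequent superset."""
    return [s for s in frequent if not any(s < other for other in frequent)]


freq = frequent_itemsets(TDB, MIN_SUP)
print(sorted("".join(sorted(s)) for s in max_patterns(freq)))  # ['ACD', 'BCDE']
```

BCD is frequent here (support 2) but is not reported, because its superset BCDE is also frequent.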

Frequent Max Patterns

- Succinct expression of frequent patterns
  - Let {a, b, c} be frequent
  - Then {a, b}, {b, c}, {a, c} must also be frequent
  - Then {a}, {b}, {c} must also be frequent
  - By writing down {a, b, c} once, we save lots of computation
- Max pattern
  - If {a, b, c} is a frequent max pattern, then {a, b, c, x} is NOT a frequent pattern, for any other item x.

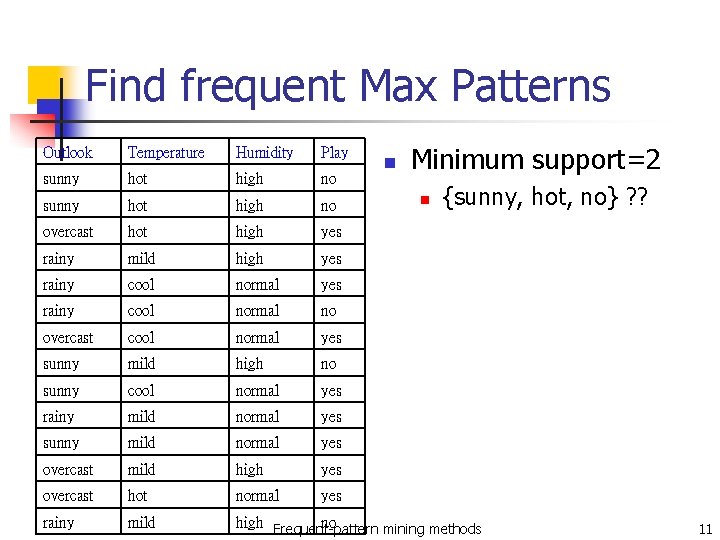

Find Frequent Max Patterns

Minimum support = 2

| Outlook | Temperature | Humidity | Play |
|---|---|---|---|
| sunny | hot | high | no |
| sunny | hot | high | no |
| overcast | hot | high | yes |
| rainy | mild | high | yes |
| rainy | cool | normal | yes |
| rainy | cool | normal | no |
| overcast | cool | normal | yes |
| sunny | mild | high | no |
| sunny | cool | normal | yes |
| rainy | mild | normal | yes |
| sunny | mild | normal | yes |
| overcast | mild | high | yes |
| overcast | hot | normal | yes |
| rainy | mild | high | no |

- {sunny, hot, no}?
- ?

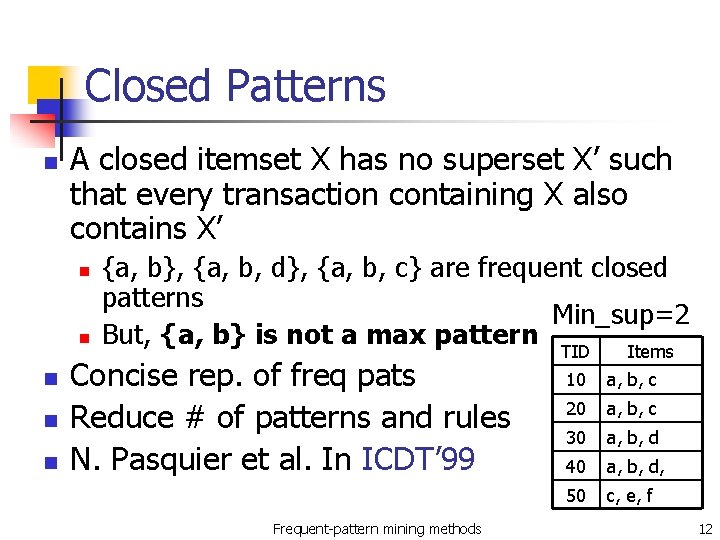

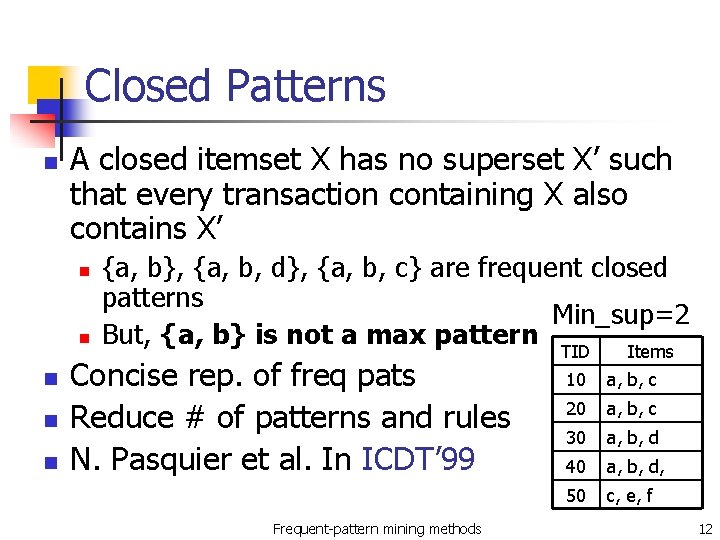

Closed Patterns

- A closed itemset X has no proper superset X′ such that every transaction containing X also contains X′
  - {a, b}, {a, b, d}, {a, b, c} are frequent closed patterns (Min_sup = 2)
  - But {a, b} is not a max pattern
- Concise representation of frequent patterns
- Reduces the number of patterns and rules
- N. Pasquier et al. In ICDT'99

| TID | Items |
|---|---|
| 10 | a, b, c |
| 20 | a, b, c |
| 30 | a, b, d |
| 40 | a, b, d |
| 50 | c, e, f |
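"Every transaction containing X also contains X′" is the same as "X′ has the same support as X", so closedness can be checked on the support counts. A brute-force sketch for this five-transaction table, with illustrative function names; it is exponential and only meant for toy data.

```python
from itertools import combinations

TDB = [{"a", "b", "c"}, {"a", "b", "c"}, {"a", "b", "d"}, {"a", "b", "d"}, {"c", "e", "f"}]
MIN_SUP = 2


def frequent_itemsets(db, min_sup):
    """Brute force: each non-empty subset of each transaction is counted once."""
    counts = {}
    for t in db:
        for k in range(1, len(t) + 1):
            for combo in combinations(sorted(t), k):
                key = frozenset(combo)
                counts[key] = counts.get(key, 0) + 1
    return {s: n for s, n in counts.items() if n >= min_sup}


def closed_patterns(frequent):
    """X is closed if no proper superset has the same support count.

    Checking only frequent supersets suffices: a superset with X's support
    is itself frequent.
    """
    return {s: n for s, n in frequent.items()
            if not any(s < o and frequent[o] == n for o in frequent)}


def max_patterns(frequent):
    """Frequent itemsets with no proper frequent superset."""
    return {s: n for s, n in frequent.items() if not any(s < o for o in frequent)}


freq = frequent_itemsets(TDB, MIN_SUP)
closed = {"".join(sorted(s)): n for s, n in closed_patterns(freq).items()}
maximal = {"".join(sorted(s)): n for s, n in max_patterns(freq).items()}
print(closed)
print(maximal)
```

The closed patterns come out as {a, b}: 4, {a, b, c}: 2 and {a, b, d}: 2, plus {c}: 3, which the list on the slide does not mention. Only {a, b, c} and {a, b, d} are max patterns, so {a, b} is closed but not max.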

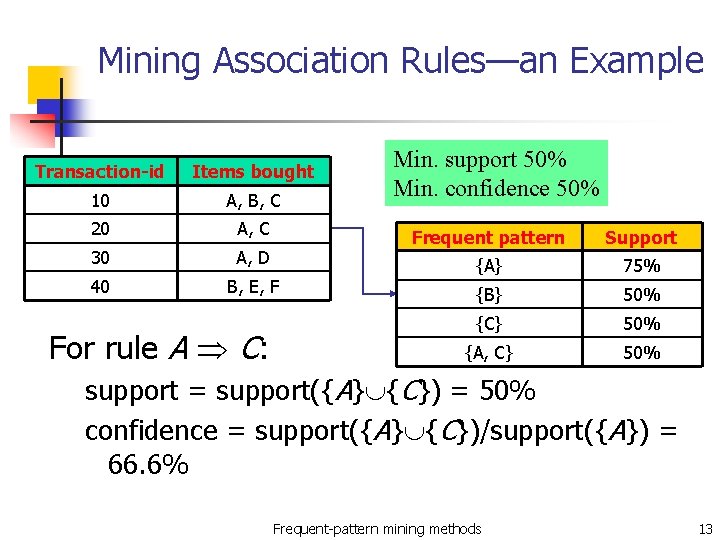

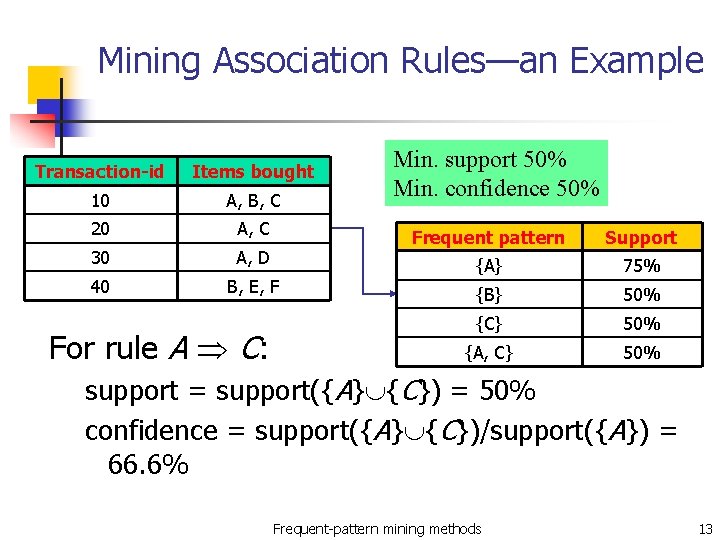

Mining Association Rules: an Example

| Transaction-id | Items bought |
|---|---|
| 10 | A, B, C |
| 20 | A, C |
| 30 | A, D |
| 40 | B, E, F |

- Min. support 50%
- Min. confidence 50%

| Frequent pattern | Support |
|---|---|
| {A} | 75% |
| {B} | 50% |
| {C} | 50% |
| {A, C} | 50% |

For rule A ⇒ C:

$$\text{support} = \text{support}(\{A\} \cup \{C\}) = 50\%$$

$$\text{confidence} = \frac{\text{support}(\{A\} \cup \{C\})}{\text{support}(\{A\})} = 66.7\%$$
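Once the frequent patterns are known, rules follow by splitting each frequent itemset into an LHS and an RHS and keeping the splits whose confidence reaches min_conf. A brute-force sketch with hypothetical helper names. It enumerates every itemset over the six items instead of using Apriori, which is fine only for a table this small.

```python
from fractions import Fraction
from itertools import combinations

TDB = [{"A", "B", "C"}, {"A", "C"}, {"A", "D"}, {"B", "E", "F"}]
MIN_SUP = Fraction(1, 2)
MIN_CONF = Fraction(1, 2)


def support(itemset):
    """Fraction of transactions containing `itemset`."""
    return Fraction(sum(1 for t in TDB if itemset <= t), len(TDB))


def frequent_itemsets():
    """All itemsets over the items seen in TDB whose support >= MIN_SUP."""
    items = sorted(set().union(*TDB))
    freq = {}
    for k in range(1, len(items) + 1):
        for combo in combinations(items, k):
            s = support(frozenset(combo))
            if s >= MIN_SUP:
                freq[frozenset(combo)] = s
    return freq


def association_rules(freq):
    """Yield (lhs, rhs, support, confidence) for every rule meeting MIN_CONF."""
    for itemset, sup in freq.items():
        for r in range(1, len(itemset)):
            for lhs in map(frozenset, combinations(sorted(itemset), r)):
                conf = sup / freq[lhs]  # lhs is frequent: it is a subset of a frequent set
                if conf >= MIN_CONF:
                    yield lhs, itemset - lhs, sup, conf


for lhs, rhs, sup, conf in association_rules(frequent_itemsets()):
    print(f"{''.join(sorted(lhs))} => {''.join(sorted(rhs))}: support={sup}, confidence={conf}")
```

Only {A, C} has two items among the frequent patterns, so the output is the two rules A ⇒ C (1/2, 2/3) and C ⇒ A (1/2, 1).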

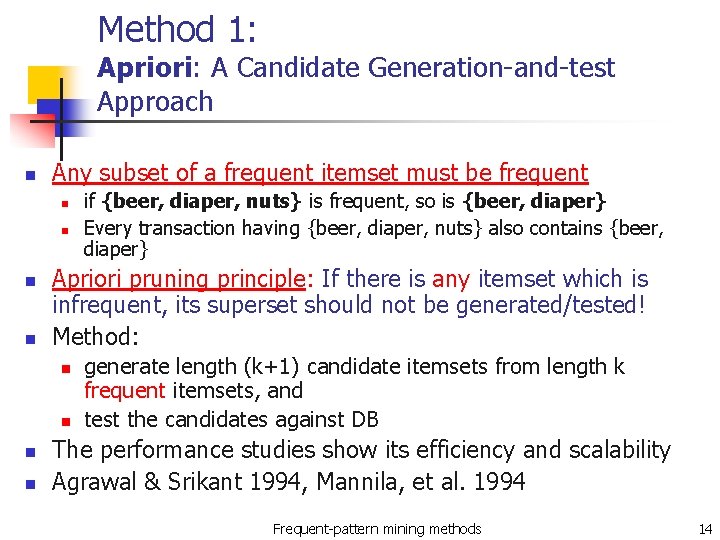

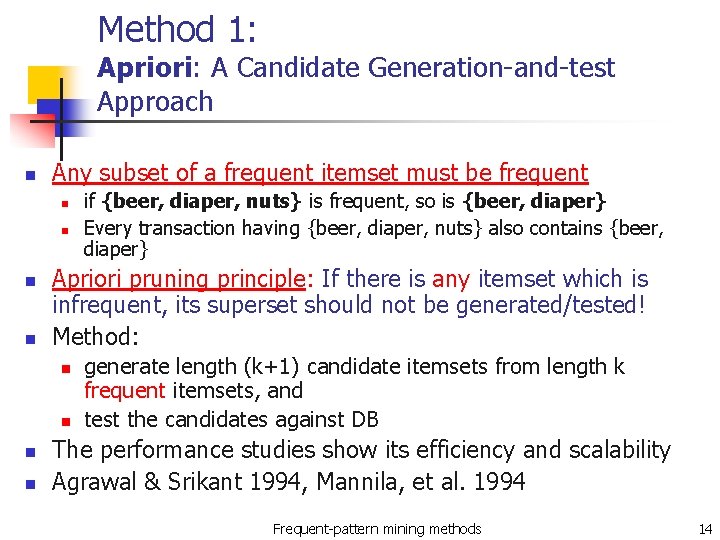

Method 1: Apriori: A Candidate Generation-and-Test Approach

- Any subset of a frequent itemset must be frequent
  - If {beer, diaper, nuts} is frequent, so is {beer, diaper}
  - Every transaction having {beer, diaper, nuts} also contains {beer, diaper}
- Apriori pruning principle: if any itemset is infrequent, its supersets should not be generated or tested!
- Method:
  - Generate length-(k+1) candidate itemsets from length-k frequent itemsets, and
  - Test the candidates against the DB
- Performance studies show its efficiency and scalability
- Agrawal & Srikant 1994, Mannila et al. 1994

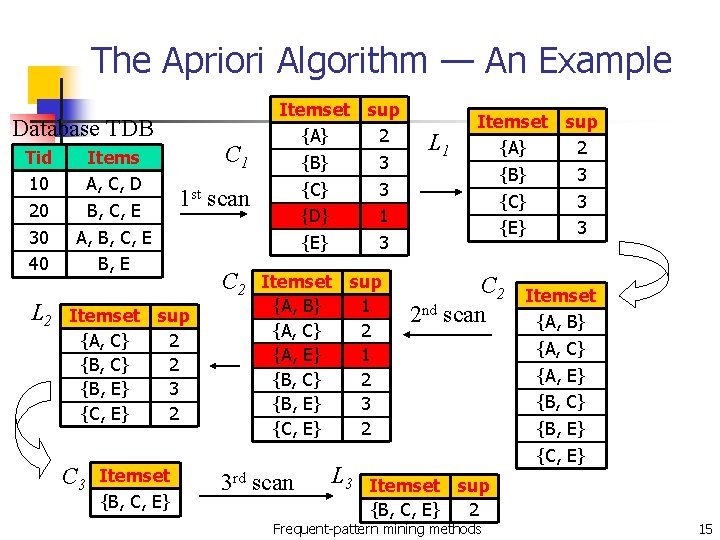

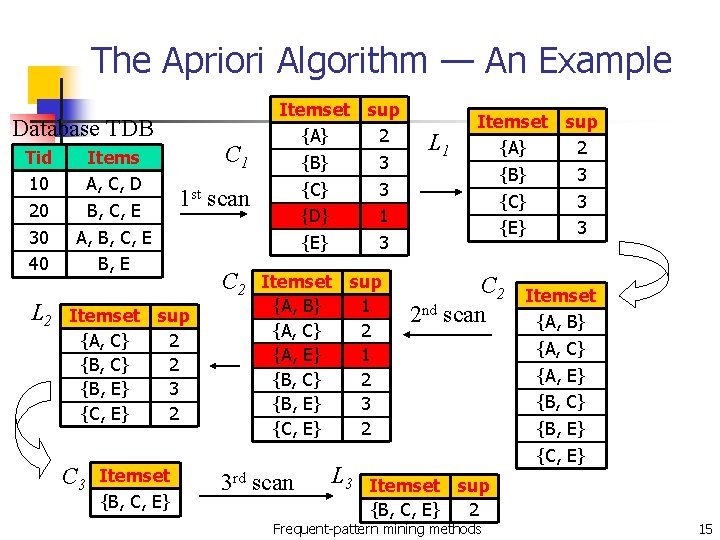

The Apriori Algorithm: An Example (min_sup = 2)

Database TDB:

| Tid | Items |
|---|---|
| 10 | A, C, D |
| 20 | B, C, E |
| 30 | A, B, C, E |
| 40 | B, E |

- 1st scan, C1: {A}: 2, {B}: 3, {C}: 3, {D}: 1, {E}: 3
- L1: {A}: 2, {B}: 3, {C}: 3, {E}: 3
- C2 (generated from L1): {A, B}, {A, C}, {A, E}, {B, C}, {B, E}, {C, E}
- 2nd scan, C2: {A, B}: 1, {A, C}: 2, {A, E}: 1, {B, C}: 2, {B, E}: 3, {C, E}: 2
- L2: {A, C}: 2, {B, C}: 2, {B, E}: 3, {C, E}: 2
- C3 (generated from L2): {B, C, E}
- 3rd scan, L3: {B, C, E}: 2

The Apriori Algorithm

- Pseudo-code:
  - Ck: candidate itemsets of size k
  - Lk: frequent itemsets of size k

```
L1 = {frequent items};
for (k = 1; Lk != ∅; k++) do begin
    Ck+1 = candidates generated from Lk;
    for each transaction t in database do
        increment the count of all candidates in Ck+1 that are contained in t
    Lk+1 = candidates in Ck+1 with min_support
end
return ∪k Lk;
```
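A minimal, unoptimized Python sketch of the pseudo-code, run on the TDB from the previous example with min_sup = 2. Itemsets are stored as sorted tuples, candidates are generated by a self-join plus prune, and each candidate is tested against each transaction with a plain subset check rather than a hash tree. The names `apriori` and `apriori_gen` are illustrative.

```python
from itertools import combinations


def apriori_gen(frequent_prev, k):
    """Build C_k from L_{k-1}: self-join on the first k-2 items, then prune."""
    prev = sorted(frequent_prev)          # itemsets are sorted tuples
    prev_set = set(prev)
    candidates = set()
    for i, p in enumerate(prev):
        for q in prev[i + 1:]:
            if p[:k - 2] == q[:k - 2] and p[k - 2] < q[k - 2]:
                c = p + (q[k - 2],)
                # Prune: every (k-1)-subset of c must be frequent.
                if all(s in prev_set for s in combinations(c, k - 1)):
                    candidates.add(c)
    return candidates


def apriori(db, min_sup):
    """Return {itemset (sorted tuple): support count} for every frequent itemset."""
    db = [set(t) for t in db]
    counts = {}
    for t in db:                          # first scan: count 1-itemsets
        for item in t:
            counts[(item,)] = counts.get((item,), 0) + 1
    level = {c: n for c, n in counts.items() if n >= min_sup}   # L1
    frequent = dict(level)
    k = 2
    while level:                          # stop once L_{k-1} is empty
        cand_counts = dict.fromkeys(apriori_gen(level, k), 0)   # C_k
        for t in db:                      # one database scan per level
            for c in cand_counts:
                if t.issuperset(c):
                    cand_counts[c] += 1
        level = {c: n for c, n in cand_counts.items() if n >= min_sup}  # L_k
        frequent.update(level)
        k += 1
    return frequent


TDB = [{"A", "C", "D"}, {"B", "C", "E"}, {"A", "B", "C", "E"}, {"B", "E"}]
for itemset, n in sorted(apriori(TDB, 2).items(), key=lambda kv: (len(kv[0]), kv[0])):
    print(itemset, n)
```

The levels it finds are L1 = {A, B, C, E}, L2 = {AC, BC, BE, CE} and L3 = {BCE}, the same as in the worked example.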

Important Details of Apriori

- How to generate candidates?
  - Step 1: self-joining Lk
  - Step 2: pruning
- How to count supports of candidates?

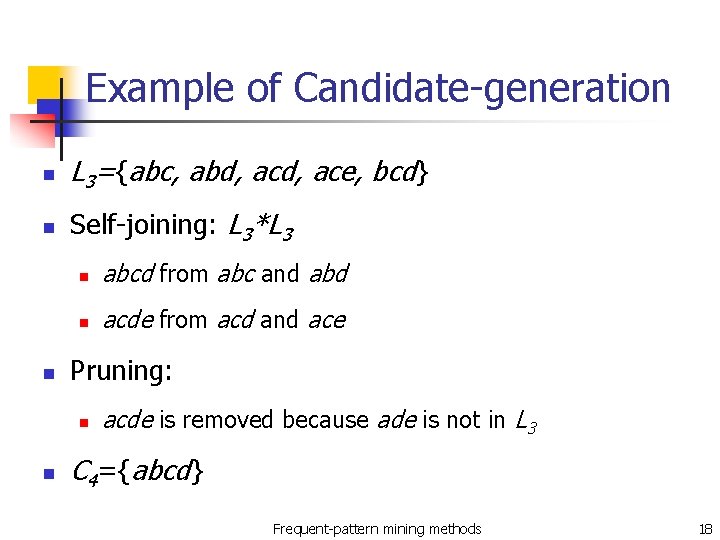

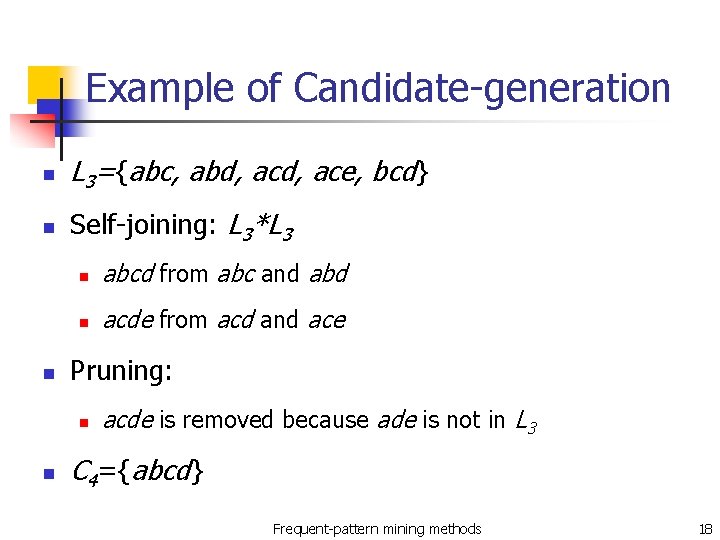

Example of Candidate-generation

- L3 = {abc, abd, acd, ace, bcd}
- Self-joining: L3 * L3
  - abcd from abc and abd
  - acde from acd and ace
- Pruning:
  - acde is removed because ade is not in L3
- C4 = {abcd}

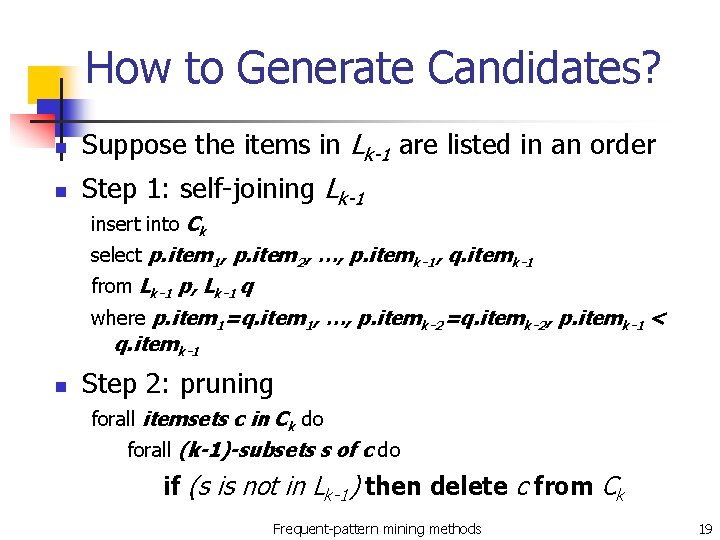

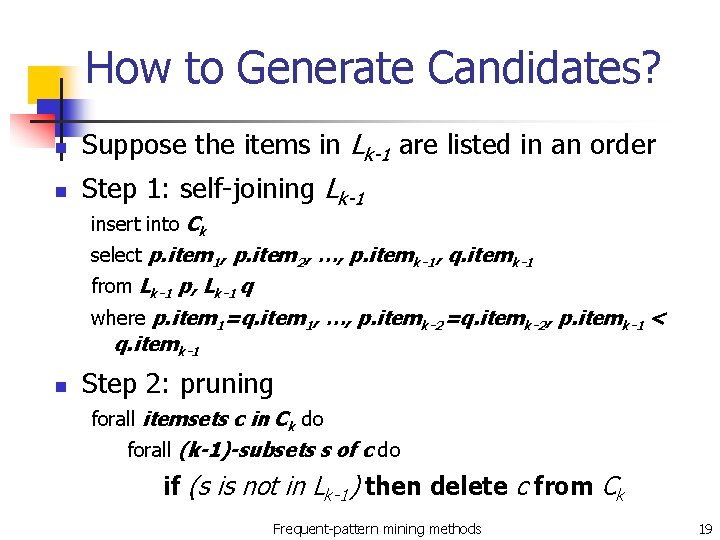

How to Generate Candidates?

- Suppose the items in Lk-1 are listed in an order
- Step 1: self-joining Lk-1

```
insert into Ck
select p.item1, p.item2, …, p.itemk-1, q.itemk-1
from Lk-1 p, Lk-1 q
where p.item1 = q.item1, …, p.itemk-2 = q.itemk-2, p.itemk-1 < q.itemk-1
```

- Step 2: pruning

```
forall itemsets c in Ck do
    forall (k-1)-subsets s of c do
        if (s is not in Lk-1) then delete c from Ck
```
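The join and prune steps translate directly to sorted tuples. This sketch assumes each itemset is kept as a sorted tuple of items, standing in for the ordered item1 … itemk-1 columns. `apriori_gen` is an illustrative name, and it returns the joined set as well so that pruning is visible. It is run on L3 = {abc, abd, acd, ace, bcd}; acd must be in L3 for acde to be joined from acd and ace.

```python
from itertools import combinations


def apriori_gen(l_prev):
    """Return (joined, candidates): L_{k-1} * L_{k-1} before and after pruning."""
    prev = sorted(tuple(sorted(s)) for s in l_prev)
    prev_set = set(prev)
    joined, candidates = [], []
    for i, p in enumerate(prev):
        for q in prev[i + 1:]:
            # Step 1 (self-join): same first k-2 items, p.item_{k-1} < q.item_{k-1}
            if p[:-1] == q[:-1] and p[-1] < q[-1]:
                c = p + (q[-1],)
                joined.append(c)
                # Step 2 (prune): drop c if any (k-1)-subset is not in L_{k-1}
                if all(s in prev_set for s in combinations(c, len(c) - 1)):
                    candidates.append(c)
    return joined, candidates


L3 = ["abc", "abd", "acd", "ace", "bcd"]
joined, c4 = apriori_gen(L3)
print(["".join(c) for c in joined])  # ['abcd', 'acde']
print(["".join(c) for c in c4])      # ['abcd']
```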

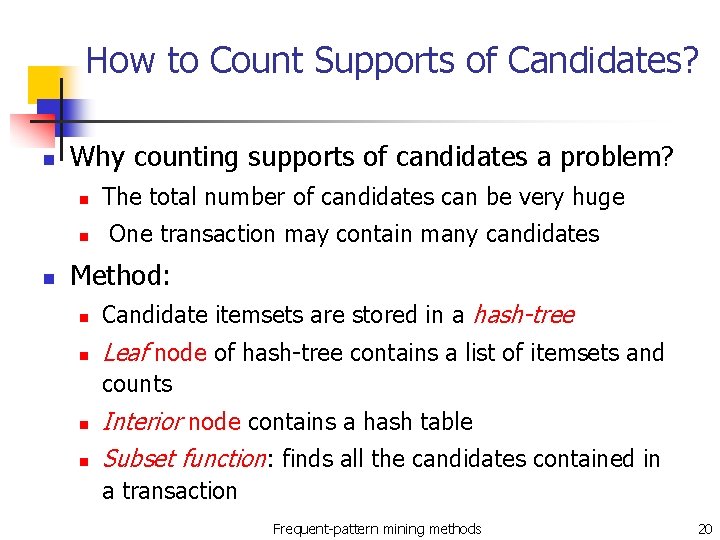

How to Count Supports of Candidates?

- Why is counting supports of candidates a problem?
  - The total number of candidates can be very large
  - One transaction may contain many candidates
- Method:
  - Candidate itemsets are stored in a hash-tree
  - A leaf node of the hash-tree contains a list of itemsets and counts
  - An interior node contains a hash table
  - Subset function: finds all the candidates contained in a transaction
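The hash tree is one way to implement the subset function. The sketch below swaps in a simpler technique: a flat hash table (a Python dict) of candidates, probed with every k-subset of each transaction. `count_supports` is a hypothetical name. Enumerating all k-subsets grows combinatorially with transaction length, so this stand-in only suits small examples.

```python
from itertools import combinations


def count_supports(transactions, candidates, k):
    """Count how many transactions contain each candidate k-itemset.

    Stand-in for the hash tree: candidates live in a dict (a hash table), and
    the "subset function" enumerates each transaction's k-subsets and looks
    each one up.
    """
    counts = {tuple(sorted(c)): 0 for c in candidates}
    for t in transactions:
        for s in combinations(sorted(t), k):
            if s in counts:
                counts[s] += 1
    return counts


TDB = [{"A", "C", "D"}, {"B", "C", "E"}, {"A", "B", "C", "E"}, {"B", "E"}]
C2 = ["AB", "AC", "AE", "BC", "BE", "CE"]
print(count_supports(TDB, C2, 2))
```

On the Apriori example database this gives {A, B}: 1, {A, C}: 2, {A, E}: 1, {B, C}: 2, {B, E}: 3, {C, E}: 2, the same counts as the 2nd scan.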

Speeding up Association Rules: the Direct Hashing and Pruning (DHP) Technique

Thanks to Cheng Hong & Hu Haibo

DHP: Reduce the Number of Candidates

- A k-itemset whose corresponding hash bucket count is below the threshold cannot be frequent
  - Candidates: a, b, c, d, e
  - Hash entries: {ab, ad, ae}, {bd, be, de}, …
  - Frequent 1-itemsets: a, b, d, e
  - ab is not a candidate 2-itemset if the sum of the counts of {ab, ad, ae} is below the support threshold
- J. Park, M. Chen, and P. Yu. An effective hash-based algorithm for mining association rules. In SIGMOD'95

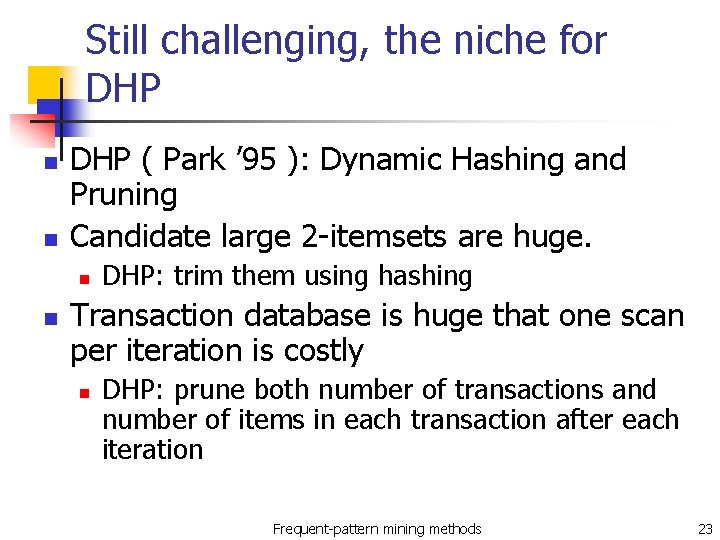

Still Challenging: the Niche for DHP

- DHP (Park '95): Direct Hashing and Pruning
- Candidate large 2-itemsets are huge
  - DHP: trims them using hashing
- The transaction database is so huge that one scan per iteration is costly
  - DHP: prunes both the number of transactions and the number of items in each transaction after each iteration

What does it look like?

(Figure: DHP vs. Apriori processing flow; the recoverable step labels are "Generate candidate set", "Count support", and "Make new hash table".)

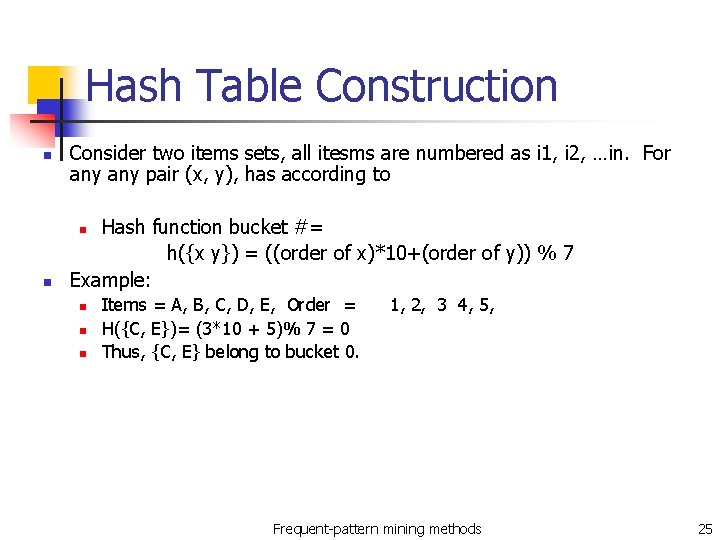

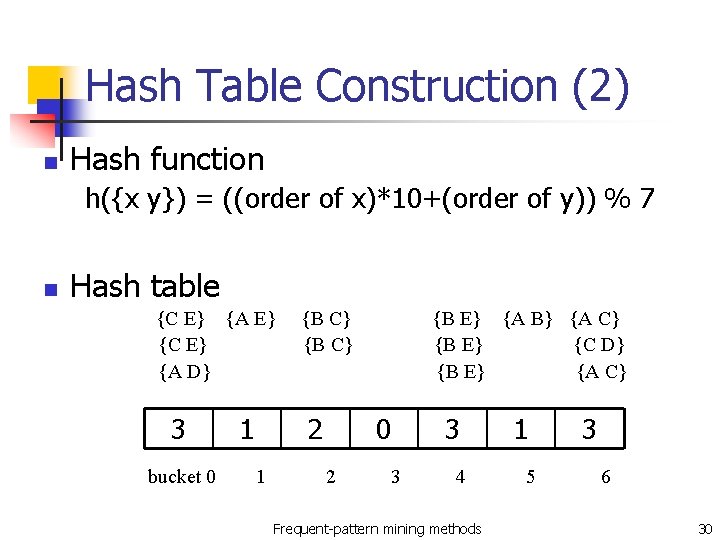

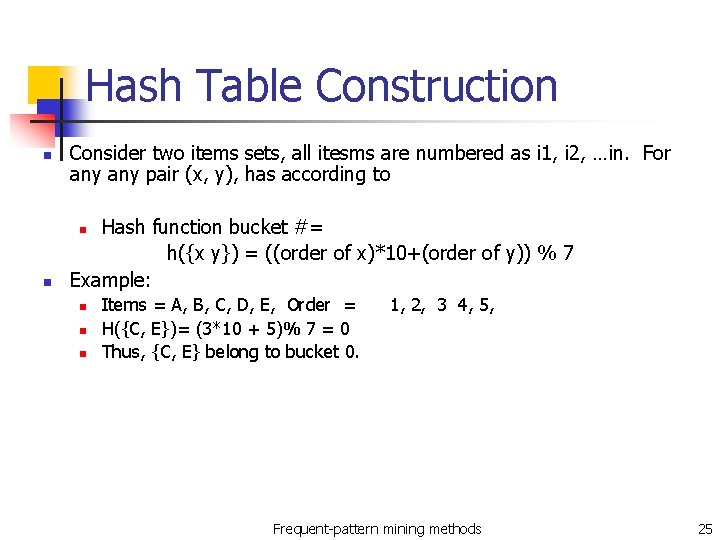

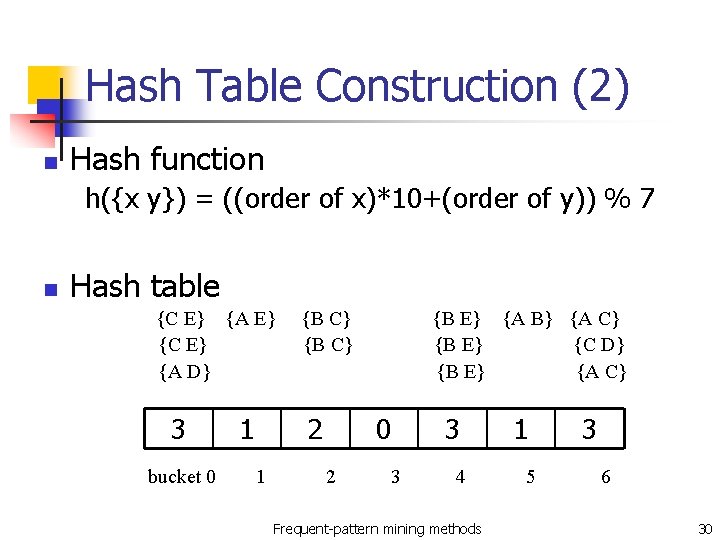

Hash Table Construction

- Consider 2-itemsets. All items are numbered as i_1, i_2, …, i_n. Each pair {x, y} is hashed with the hash function

  $$\text{bucket \#} = h(\{x\,y\}) = ((\text{order of } x) \times 10 + (\text{order of } y)) \bmod 7$$

- Example:
  - Items = A, B, C, D, E; Order = 1, 2, 3, 4, 5
  - h({C, E}) = (3 × 10 + 5) mod 7 = 0
  - Thus, {C, E} belongs to bucket 0.
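The bucket computation is a one-liner. This sketch uses the item order and the 7 buckets from the example. It sorts the pair first so that {x, y} and {y, x} land in the same bucket, which is an assumption matching how the slides always write the lower-ordered item first. `bucket` is an illustrative name.

```python
# Item order from the example: A=1, B=2, C=3, D=4, E=5.
ORDER = {item: i for i, item in enumerate("ABCDE", start=1)}


def bucket(x, y, n_buckets=7):
    """h({x y}) = ((order of x) * 10 + (order of y)) % 7, x being the lower-ordered item."""
    # Assumption: the pair is put in item order before hashing.
    x, y = sorted((x, y), key=ORDER.__getitem__)
    return (ORDER[x] * 10 + ORDER[y]) % n_buckets


print(bucket("C", "E"))  # (3*10 + 5) % 7 = 35 % 7 = 0
```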

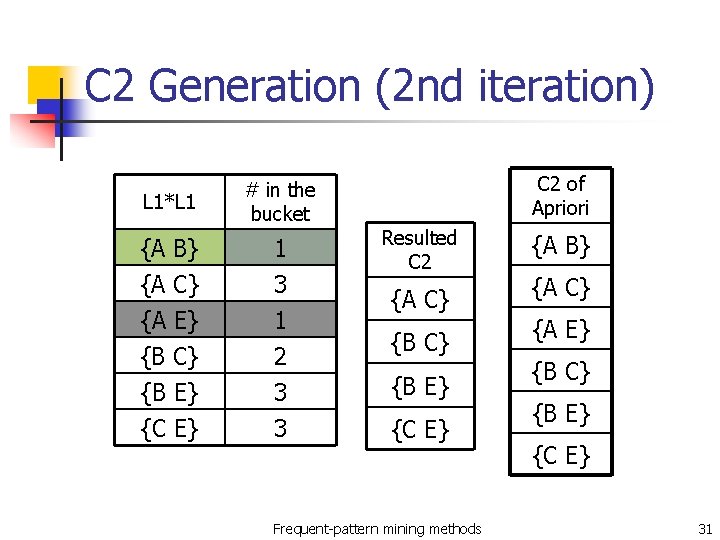

How to Trim Candidate Itemsets

- In the k-th iteration, hash all "appearing" (k+1)-itemsets into a hash table, and count all occurrences of each itemset in its corresponding bucket.
- In the (k+1)-th iteration, examine each candidate itemset to see whether its corresponding bucket count reaches the support threshold (a necessary condition for being frequent).

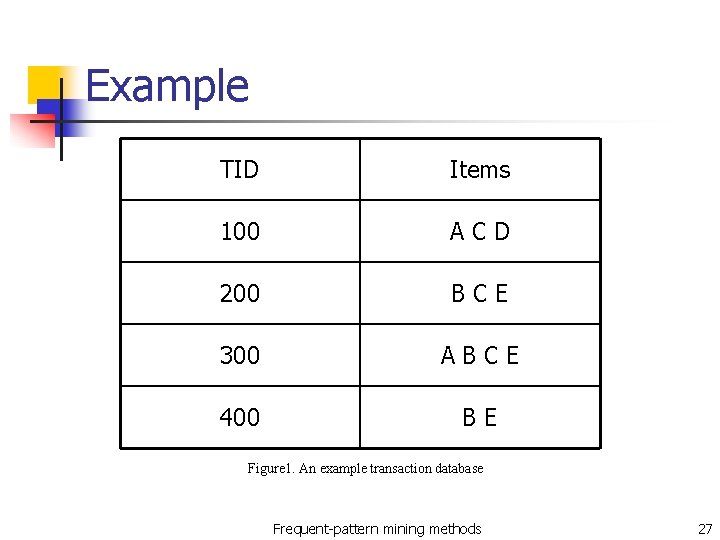

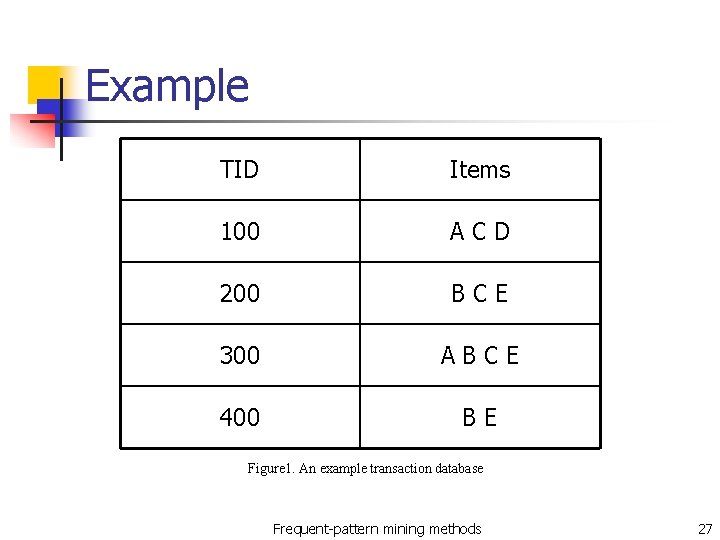

Example

| TID | Items |
|---|---|
| 100 | ACD |
| 200 | BCE |
| 300 | ABCE |
| 400 | BE |

Figure 1. An example transaction database

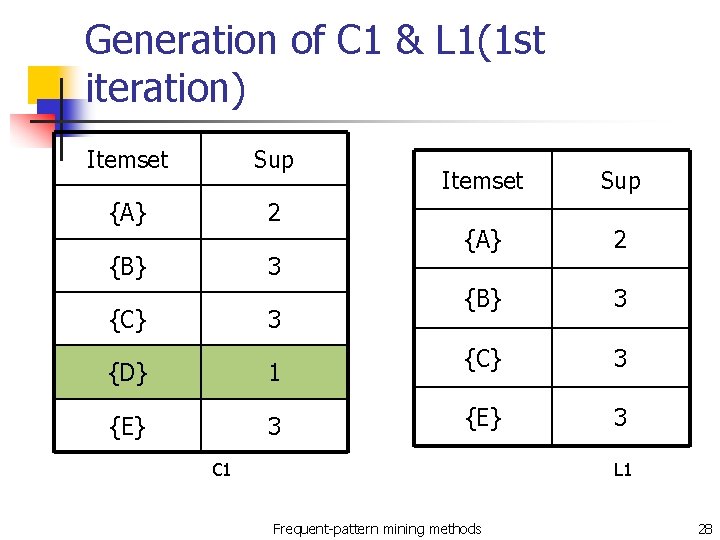

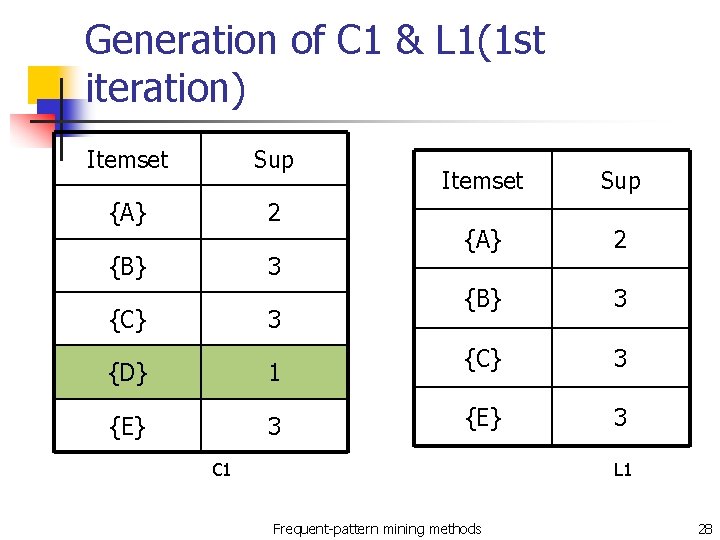

Generation of C1 & L1 (1st iteration)

- C1: {A}: 2, {B}: 3, {C}: 3, {D}: 1, {E}: 3
- L1: {A}: 2, {B}: 3, {C}: 3, {E}: 3

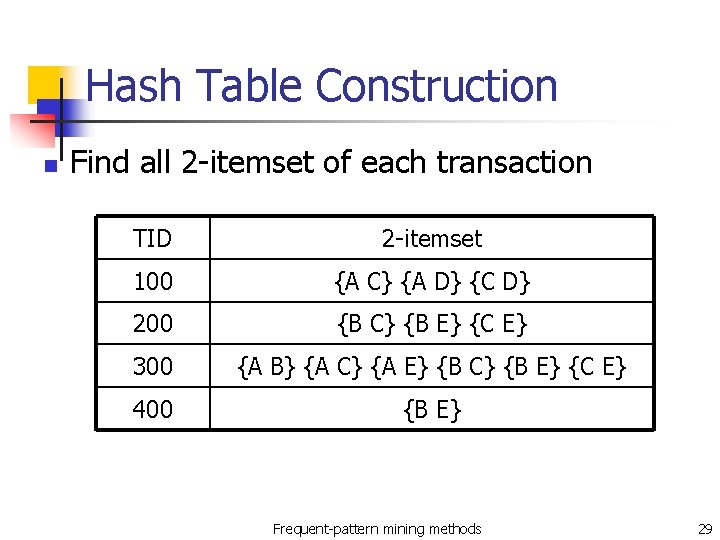

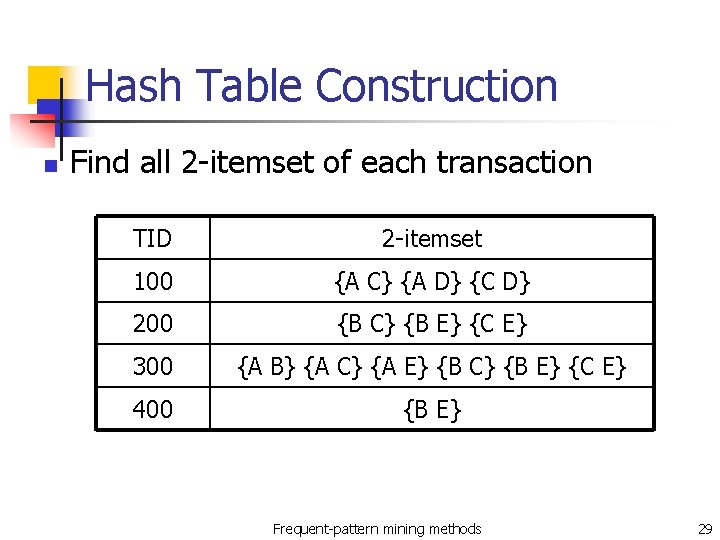

Hash Table Construction

- Find all 2-itemsets of each transaction:

| TID | 2-itemsets |
|---|---|
| 100 | {A C} {A D} {C D} |
| 200 | {B C} {B E} {C E} |
| 300 | {A B} {A C} {A E} {B C} {B E} {C E} |
| 400 | {B E} |

Hash Table Construction (2)

- Hash function: $h(\{x\,y\}) = ((\text{order of } x) \times 10 + (\text{order of } y)) \bmod 7$
- Hash table:

| Bucket | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|
| Contents | {C E} {C E} {A D} | {A E} | {B C} {B C} | | {B E} {B E} {B E} | {A B} | {A C} {C D} {A C} |
| Count | 3 | 1 | 2 | 0 | 3 | 1 | 3 |
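A sketch that rebuilds the bucket counts from the four example transactions; `h` and `build_hash_table` are illustrative names. Here the table is built in a separate loop for clarity. Following the trimming rule, DHP would fill it while the 1-itemsets are counted in the first iteration.

```python
from itertools import combinations

ORDER = {item: i for i, item in enumerate("ABCDE", start=1)}  # A=1, ..., E=5
DB = {100: "ACD", 200: "BCE", 300: "ABCE", 400: "BE"}
N_BUCKETS = 7


def h(x, y):
    """((order of x) * 10 + (order of y)) % 7, with x ordered before y."""
    return (ORDER[x] * 10 + ORDER[y]) % N_BUCKETS


def build_hash_table(db):
    """Hash every 2-itemset of every transaction; return bucket counts and contents."""
    counts = [0] * N_BUCKETS
    contents = [[] for _ in range(N_BUCKETS)]
    for items in db.values():
        for x, y in combinations(sorted(items, key=ORDER.__getitem__), 2):
            b = h(x, y)
            counts[b] += 1
            contents[b].append(x + y)
    return counts, contents


counts, contents = build_hash_table(DB)
for b in range(N_BUCKETS):
    print(b, counts[b], contents[b])
```

The counts come out as 3, 1, 2, 0, 3, 1, 3 for buckets 0 through 6, matching the hash table.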

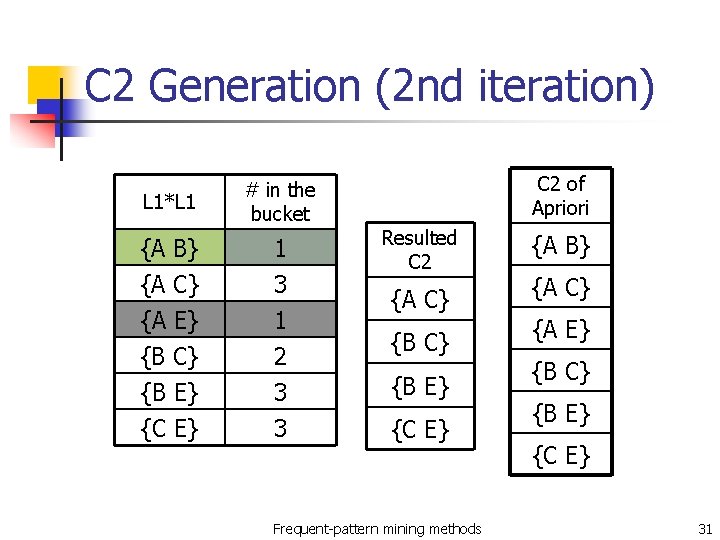

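C2 Generation (2nd iteration)

With a minimum support count of 2, a 2-itemset from L1 * L1 stays in C2 only if its bucket count is at least 2.

| C2 of Apriori (L1 * L1) | # in the bucket | Resulting C2 of DHP |
|---|---|---|
| {A B} | 1 | |
| {A C} | 3 | {A C} |
| {A E} | 1 | |
| {B C} | 2 | {B C} |
| {B E} | 3 | {B E} |
| {C E} | 3 | {C E} |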
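A sketch of the filter. It assumes a minimum support count of 2 (the threshold at which {D}, with count 1, drops out of L1) and keeps a candidate when its bucket count is at least that threshold. Names are illustrative. Under these assumptions {B C}, whose bucket 2 holds a count of 2, survives along with {A C}, {B E} and {C E}.

```python
from itertools import combinations

ORDER = {item: i for i, item in enumerate("ABCDE", start=1)}  # A=1, ..., E=5
DB = ["ACD", "BCE", "ABCE", "BE"]   # items already listed in order
N_BUCKETS = 7
MIN_SUP = 2                         # assumption: support count threshold used for L1


def h(x, y):
    return (ORDER[x] * 10 + ORDER[y]) % N_BUCKETS


# Bucket counts built from all 2-itemsets of each transaction (1st iteration).
bucket_counts = [0] * N_BUCKETS
for t in DB:
    for x, y in combinations(t, 2):
        bucket_counts[h(x, y)] += 1

L1 = ["A", "B", "C", "E"]                       # frequent 1-itemsets ({D} has count 1)
apriori_c2 = list(combinations(L1, 2))          # L1 * L1
dhp_c2 = [p for p in apriori_c2 if bucket_counts[h(*p)] >= MIN_SUP]
print(["".join(p) for p in apriori_c2])  # ['AB', 'AC', 'AE', 'BC', 'BE', 'CE']
print(["".join(p) for p in dhp_c2])      # ['AC', 'BC', 'BE', 'CE']
```

The bucket test is only a necessary condition. {A C}'s bucket count of 3 includes an occurrence of {C D}, so surviving candidates still need their own support counted.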

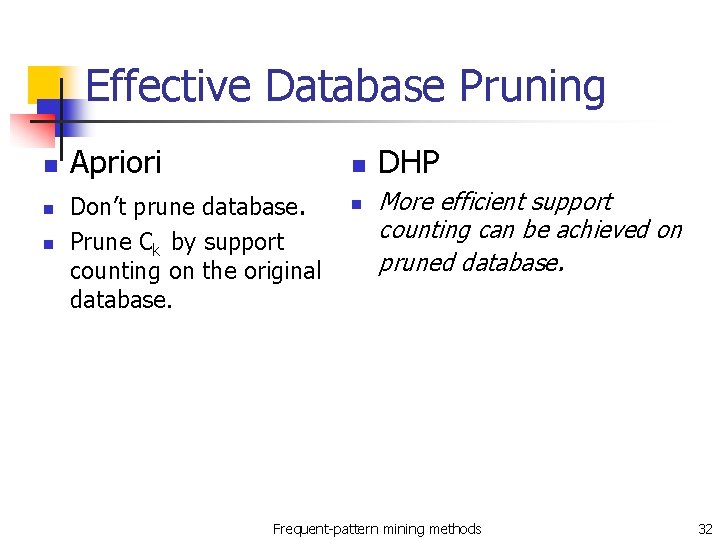

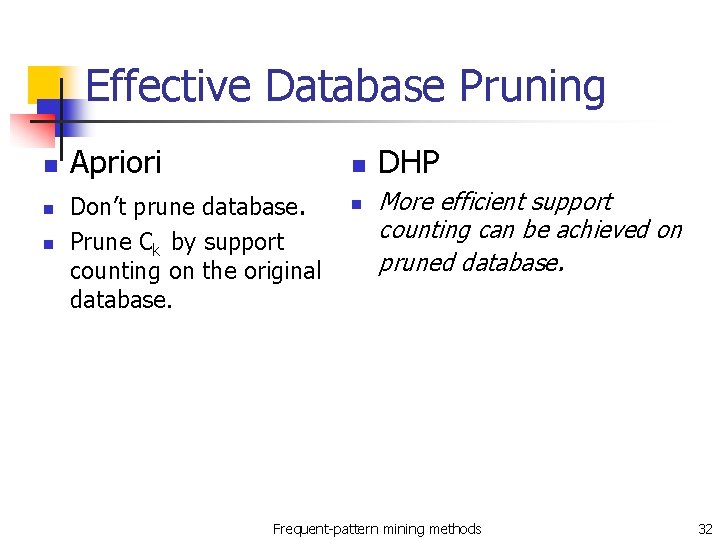

Effective Database Pruning

- Apriori
  - Doesn't prune the database.
  - Prunes Ck by support counting on the original database.
- DHP
  - More efficient support counting can be achieved on the pruned database.
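The slides do not spell out how the database is pruned. The sketch below shows one necessary condition of this kind. If a transaction contains a frequent (k+1)-itemset X, every item of X lies in k of X's k-subsets, and all of those are candidates. So an item contained in fewer than k candidate k-itemsets of the transaction can be dropped, and so can a transaction left with k or fewer items. It assumes candidates are stored as sorted tuples. `trim_transaction` is a hypothetical name, and this is not presented as DHP's exact procedure. It is applied to the example database with C2 = {A C, B C, B E, C E}.

```python
from itertools import combinations


def trim_transaction(t, candidates_k, k):
    """Return the items of t worth keeping for pass k+1 (empty set = drop t).

    Assumption: candidates_k holds sorted tuples and contains every frequent
    k-itemset. An item of a frequent (k+1)-itemset X inside t lies in k of X's
    k-subsets, all of which are candidates, so items hit fewer than k times
    cannot belong to any such X.
    """
    contained = [c for c in combinations(sorted(t), k) if c in candidates_k]
    kept = {item for item in t if sum(item in c for c in contained) >= k}
    # A transaction needs at least k+1 items to hold a (k+1)-itemset.
    return kept if len(kept) > k else set()


C2 = {("A", "C"), ("B", "C"), ("B", "E"), ("C", "E")}
DB = {100: "ACD", 200: "BCE", 300: "ABCE", 400: "BE"}
for tid, items in DB.items():
    print(tid, sorted(trim_transaction(set(items), C2, 2)))
```

Transactions 100 and 400 are dropped and 300 shrinks to {B, C, E}. The 3rd pass then scans two three-item transactions, which still contain the only frequent 3-itemset, {B, C, E}.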

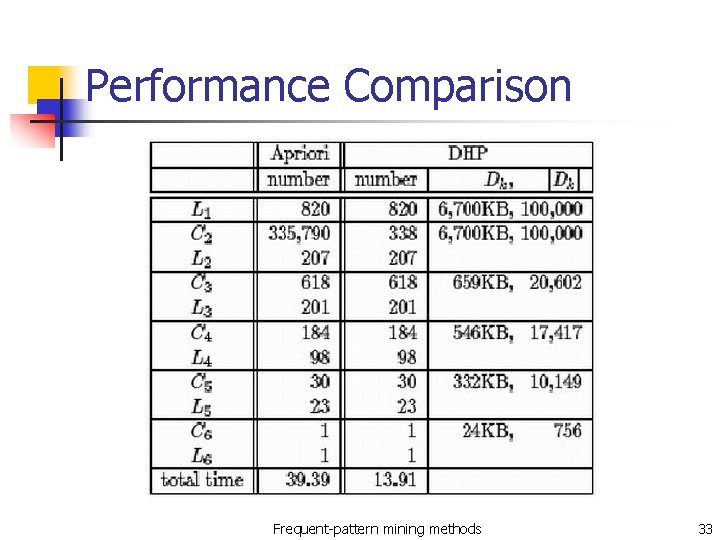

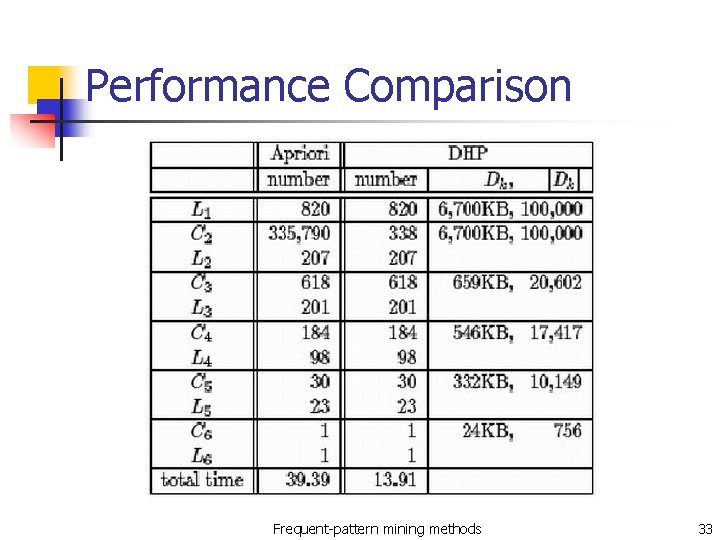

Performance Comparison

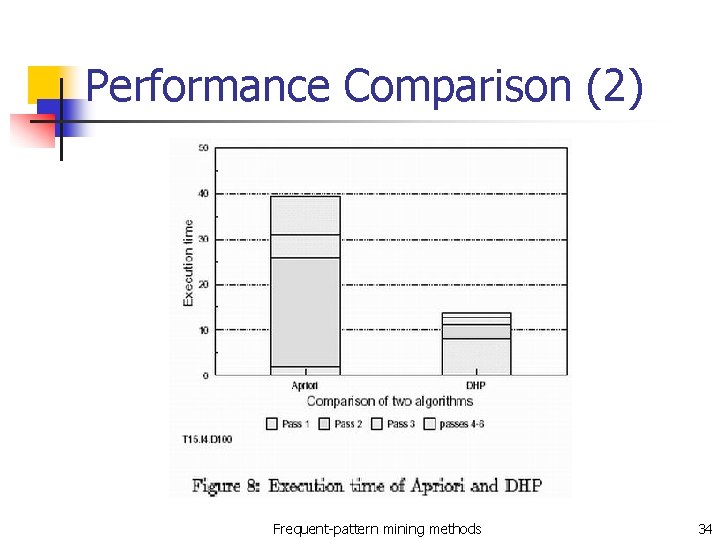

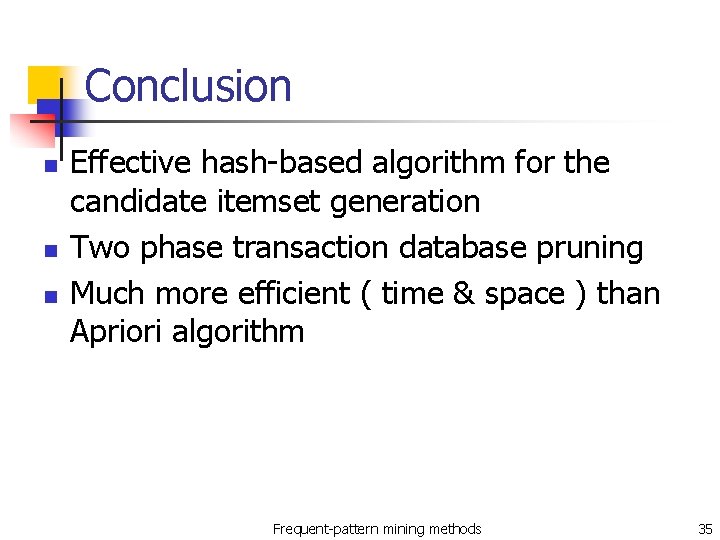

Performance Comparison (2)

Conclusion

- Effective hash-based algorithm for candidate itemset generation
- Two-phase transaction database pruning
- Much more efficient (time & space) than the Apriori algorithm