- Slides: 42

Association Rule Mining (II)

Instructor: Qiang Yang
Thanks: J. Han and J. Pei

Frequent-pattern mining methods

Bottleneck of Frequent-pattern Mining

- Multiple database scans are costly.
- Mining long patterns needs many scan passes and generates many candidates.
  - To find the frequent itemset i1 i2 … i100:
    - Number of scans: 100
    - Number of candidates: $\binom{100}{1} + \binom{100}{2} + \dots + \binom{100}{100} = 2^{100} - 1 \approx 1.27 \times 10^{30}$
- Bottleneck: candidate generation and test.
- Can we avoid candidate generation?
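The candidate count above can be checked numerically. The following is a small sketch; the function name `candidate_count` is introduced here for illustration only.

```python
import math

def candidate_count(n: int) -> int:
    """Number of non-empty itemsets over n items: C(n,1) + ... + C(n,n)."""
    return sum(math.comb(n, k) for k in range(1, n + 1))

total = candidate_count(100)
# The sum of binomial coefficients collapses to 2^n - 1.
print(total == 2**100 - 1)
# Scientific notation gives the order of magnitude quoted on the slide.
print(f"{total:.2e}")
```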

FP-growth: Frequent-pattern Mining Without Candidate Generation

- Heuristic: let P be a frequent itemset, S be the set of transactions that contain P, and x be an item. If x is a frequent item in S, then {x} ∪ P must be a frequent itemset.
- No candidate generation!
- A compact data structure, the FP-tree, stores the information needed for frequent-pattern mining.
- A recursive mining algorithm finds the complete set of frequent patterns.

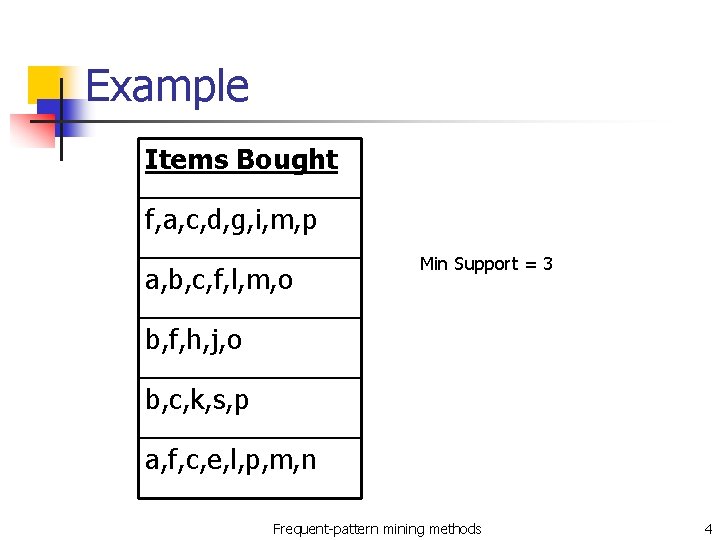

Example

| TID | Items Bought |
|---|---|
| 100 | f, a, c, d, g, i, m, p |
| 200 | a, b, c, f, l, m, o |
| 300 | b, f, h, j, o |
| 400 | b, c, k, s, p |
| 500 | a, f, c, e, l, p, m, n |

Min Support = 3

Scan the database

- First scan: list the frequent items, sorted by support (item: support):
  - <(f: 4), (c: 4), (a: 3), (b: 3), (m: 3), (p: 3)>
- The root of the tree is created and labeled "{}".
- Second scan: order the frequent items of each transaction according to frequency and insert them into the tree.
  - Scanning the first transaction leads to the first branch of the tree: <(f: 1), (c: 1), (a: 1), (m: 1), (p: 1)>
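The counting and ordering step can be sketched as follows. The names `frequent_items` and `order_transaction` are hypothetical, and the tie-break among items with equal support is assumed to follow the order listed on the slides.

```python
from collections import Counter

TRANSACTIONS = {
    100: ["f", "a", "c", "d", "g", "i", "m", "p"],
    200: ["a", "b", "c", "f", "l", "m", "o"],
    300: ["b", "f", "h", "j", "o"],
    400: ["b", "c", "k", "s", "p"],
    500: ["a", "f", "c", "e", "l", "p", "m", "n"],
}
MIN_SUPPORT = 3
# Assumption: ties (f/c and a/b/m/p) are broken as in the slides' list.
SLIDE_ORDER = ["f", "c", "a", "b", "m", "p"]

def frequent_items(transactions, min_support):
    """Count each item once per transaction and keep the frequent ones."""
    counts = Counter(item for items in transactions.values() for item in set(items))
    return {item: n for item, n in counts.items() if n >= min_support}

def order_transaction(items, rank):
    """Drop infrequent items and sort the rest by the global item order."""
    return sorted((i for i in set(items) if i in rank), key=rank.__getitem__)

support = frequent_items(TRANSACTIONS, MIN_SUPPORT)
rank = {item: pos for pos, item in enumerate(SLIDE_ORDER)}
ordered = {tid: order_transaction(items, rank) for tid, items in TRANSACTIONS.items()}
print(support)
print(ordered[100])
```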

Scanning TID=100

- Transaction: TID 100, items f, a, c, d, g, i, m, p
- Tree after insertion: {} → f: 1 → c: 1 → a: 1 → m: 1 → p: 1
- Header table (item: node count), each entry with a head pointer into the tree: f: 1, c: 1, a: 1, m: 1, p: 1

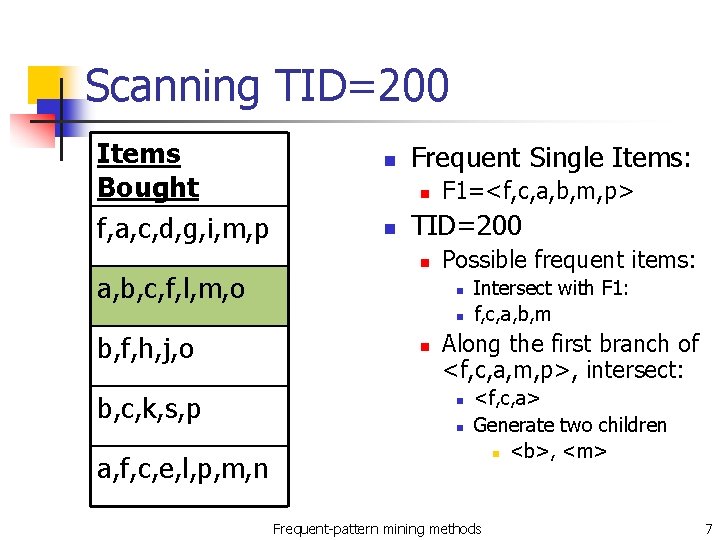

Scanning TID=200

- Frequent single items: F1 = <f, c, a, b, m, p>
- TID=200: a, b, c, f, l, m, o
  - Possible frequent items: intersect with F1 to get f, c, a, b, m
  - Along the first branch <f, c, a, m, p>, intersect: <f, c, a>
  - Generate two children: <b>, <m>

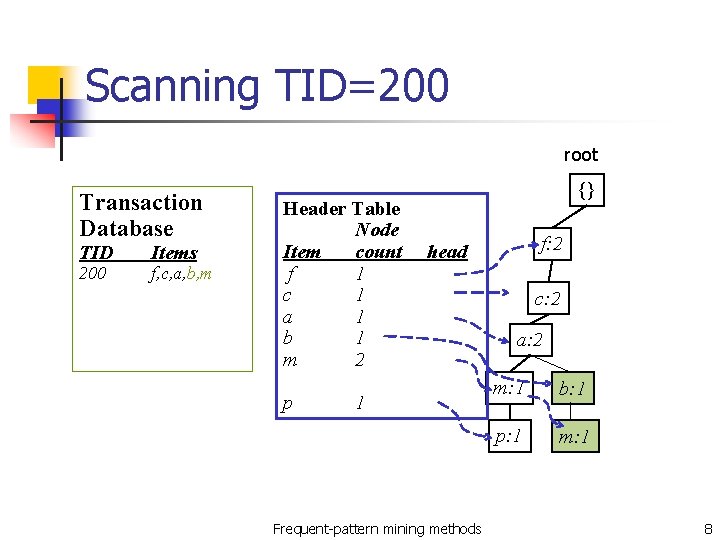

Scanning TID=200

- Transaction: TID 200, ordered frequent items f, c, a, b, m
- Tree after insertion:
  - {}
    - f: 2
      - c: 2
        - a: 2
          - m: 1
            - p: 1
          - b: 1
            - m: 1
- Header table (item: node count): f: 1, c: 1, a: 1, b: 1, m: 2, p: 1

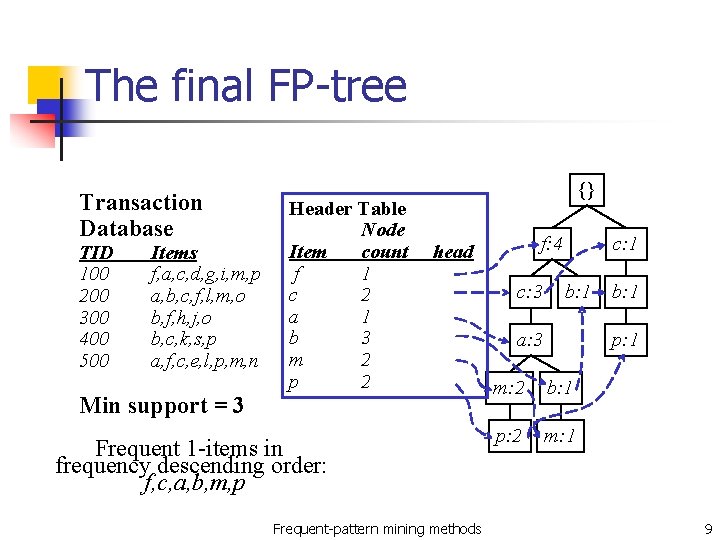

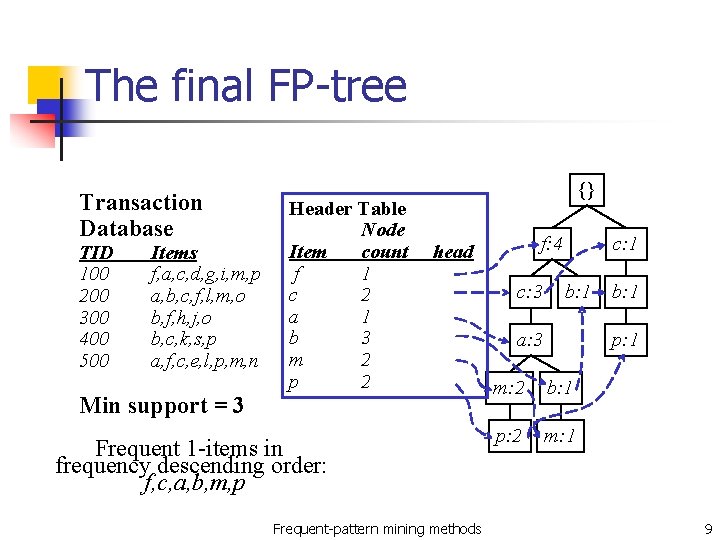

The final FP-tree

| TID | Items |
|---|---|
| 100 | f, a, c, d, g, i, m, p |
| 200 | a, b, c, f, l, m, o |
| 300 | b, f, h, j, o |
| 400 | b, c, k, s, p |
| 500 | a, f, c, e, l, p, m, n |

- Min support = 3
- Frequent 1-items in frequency descending order: f, c, a, b, m, p
- Header table (item: node count): f: 1, c: 2, a: 1, b: 3, m: 2, p: 2
- Tree:
  - {}
    - f: 4
      - c: 3
        - a: 3
          - m: 2
            - p: 2
          - b: 1
            - m: 1
      - b: 1
    - c: 1
      - b: 1
        - p: 1

FP-Tree Construction

- Scans the database only twice
- Subsequent mining: based on the FP-tree
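A minimal sketch of the insertion pass, assuming the transactions have already been filtered and ordered by the first scan. The `Node` class and `build_fp_tree` function are illustrative names, and a plain list per item stands in for the header table's node-links.

```python
class Node:
    """One FP-tree node: an item, its count, and links to parent and children."""
    def __init__(self, item, parent=None):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}

def build_fp_tree(ordered_transactions):
    root = Node(None)
    header = {}  # item -> list of nodes carrying that item
    for items in ordered_transactions:
        node = root
        for item in items:
            child = node.children.get(item)
            if child is None:
                # No shared prefix beyond this point: start a new branch.
                child = Node(item, parent=node)
                node.children[item] = child
                header.setdefault(item, []).append(child)
            child.count += 1
            node = child
    return root, header

# Ordered frequent items of TIDs 100 to 500 from the running example.
ORDERED = [
    ["f", "c", "a", "m", "p"],
    ["f", "c", "a", "b", "m"],
    ["f", "b"],
    ["c", "b", "p"],
    ["f", "c", "a", "m", "p"],
]
root, header = build_fp_tree(ORDERED)
nodes_per_item = {item: len(nodes) for item, nodes in header.items()}
print(nodes_per_item)
```

The node counts per item agree with the header table of the final FP-tree slide.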

How to Mine an FP-tree?

- Step 1: form the conditional pattern base
- Step 2: construct the conditional FP-tree
- Step 3: recursively mine the conditional FP-trees

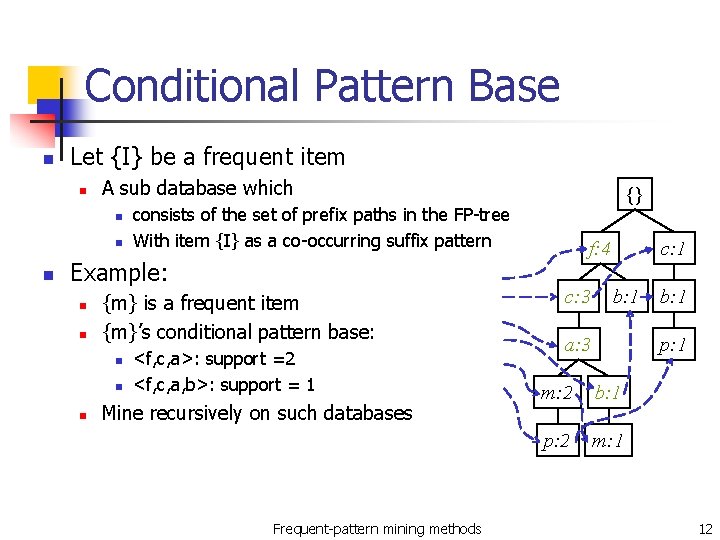

Conditional Pattern Base

- Let {I} be a frequent item.
- Its conditional pattern base is a sub-database which
  - consists of the set of prefix paths in the FP-tree
  - with item {I} as a co-occurring suffix pattern.
- Example:
  - {m} is a frequent item
  - {m}'s conditional pattern base:
    - <f, c, a>: support = 2
    - <f, c, a, b>: support = 1
- Mine recursively on such databases.
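The example can be reproduced with a short sketch. As a simplification, it reads the prefix paths directly from the ordered transactions instead of following node-links in the tree. Both give the same paths and counts, because each ordered transaction corresponds to one root-to-node path. The function name is hypothetical.

```python
from collections import Counter

# Ordered frequent items of TIDs 100 to 500 from the running example.
ORDERED = [
    ["f", "c", "a", "m", "p"],
    ["f", "c", "a", "b", "m"],
    ["f", "b"],
    ["c", "b", "p"],
    ["f", "c", "a", "m", "p"],
]

def conditional_pattern_base(ordered_transactions, item):
    """Map each non-empty prefix path that precedes `item` to its count."""
    base = Counter()
    for items in ordered_transactions:
        if item in items:
            prefix = tuple(items[: items.index(item)])
            if prefix:
                base[prefix] += 1
    return dict(base)

m_base = conditional_pattern_base(ORDERED, "m")
p_base = conditional_pattern_base(ORDERED, "p")
print(m_base)
print(p_base)
```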

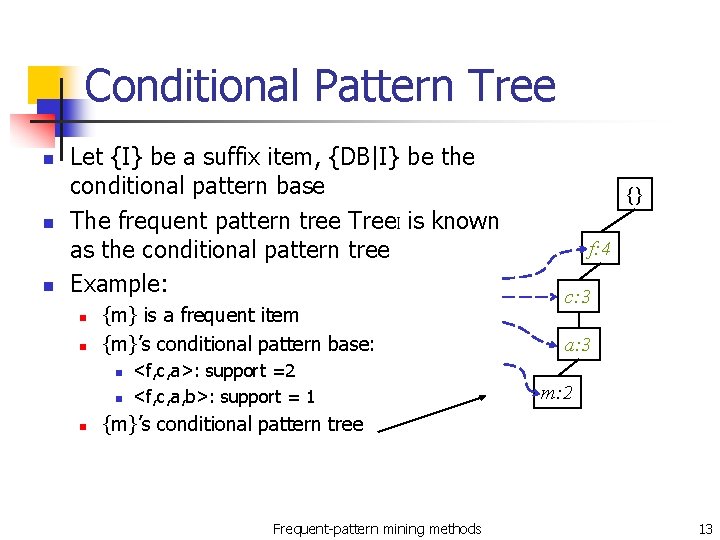

Conditional Pattern Tree

- Let {I} be a suffix item and {DB|I} be its conditional pattern base.
- The frequent-pattern tree Tree_I built from {DB|I} is known as the conditional pattern tree.
- Example:
  - {m} is a frequent item
  - {m}'s conditional pattern base:
    - <f, c, a>: support = 2
    - <f, c, a, b>: support = 1
  - Figure: {m}'s conditional pattern tree
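One way to see what the conditional pattern tree for {m} contains is to count items inside the conditional pattern base and drop those below the threshold. The sketch below does this with plain dictionaries rather than tree nodes; the helper names are illustrative, and min support 3 is taken from the running example.

```python
from collections import Counter

M_BASE = {("f", "c", "a"): 2, ("f", "c", "a", "b"): 1}
MIN_SUPPORT = 3

def conditional_item_counts(pattern_base, min_support):
    """Weighted item counts inside the pattern base, frequent items only."""
    counts = Counter()
    for path, n in pattern_base.items():
        for item in path:
            counts[item] += n
    return {item: n for item, n in counts.items() if n >= min_support}

def prune_paths(pattern_base, kept):
    """Remove infrequent items from each path and merge identical paths."""
    pruned = Counter()
    for path, n in pattern_base.items():
        reduced = tuple(i for i in path if i in kept)
        if reduced:
            pruned[reduced] += n
    return dict(pruned)

kept = conditional_item_counts(M_BASE, MIN_SUPPORT)
paths = prune_paths(M_BASE, kept)
print(kept)
print(paths)
```

Under these assumptions b is dropped (count 1) and the two paths merge into a single path f, c, a with count 3.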

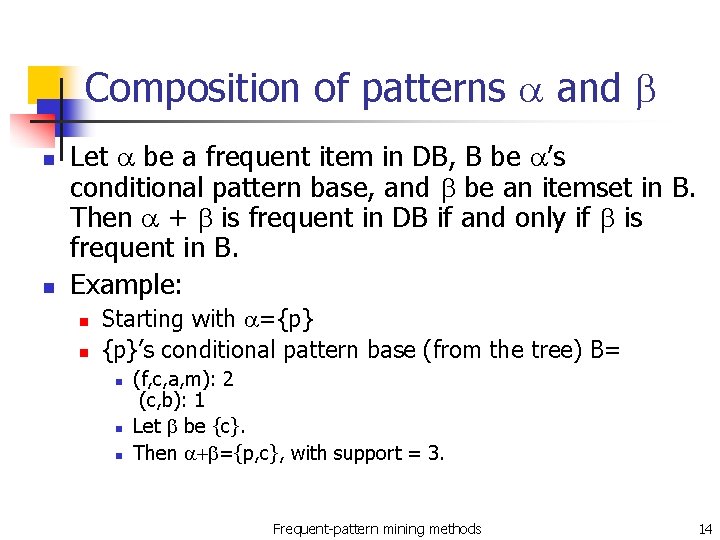

Composition of patterns α and β

- Let α be a frequent item in DB, B be α's conditional pattern base, and β be an itemset in B. Then α ∪ β is frequent in DB if and only if β is frequent in B.
- Example:
  - Starting with α = {p}
  - {p}'s conditional pattern base (from the tree) B =
    - (f, c, a, m): 2
    - (c, b): 1
  - Let β be {c}. Then α ∪ β = {p, c}, with support = 3.

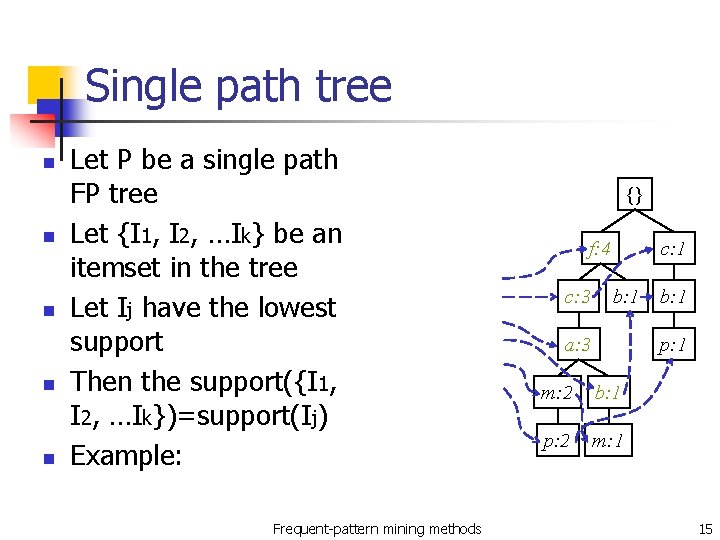

Single path tree

- Let P be a single-path FP-tree.
- Let {I1, I2, …, Ik} be an itemset in the tree.
- Let Ij have the lowest support.
- Then support({I1, I2, …, Ik}) = support(Ij).
- Example: see the accompanying figure.
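The single-path property can be illustrated by enumerating every combination of nodes on a path and taking the minimum count. The path below is hypothetical and chosen only so that the counts differ; `single_path_patterns` is an illustrative name.

```python
from itertools import combinations

def single_path_patterns(path):
    """path: list of (item, count) from root to leaf of a single-path tree.
    Returns every non-empty item combination with support = lowest count."""
    patterns = {}
    for k in range(1, len(path) + 1):
        for combo in combinations(path, k):
            items = tuple(item for item, _ in combo)
            patterns[items] = min(count for _, count in combo)
    return patterns

# Hypothetical single path {} -> f:4 -> c:3 -> a:2
patterns = single_path_patterns([("f", 4), ("c", 3), ("a", 2)])
for items, support in patterns.items():
    print(items, support)
```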

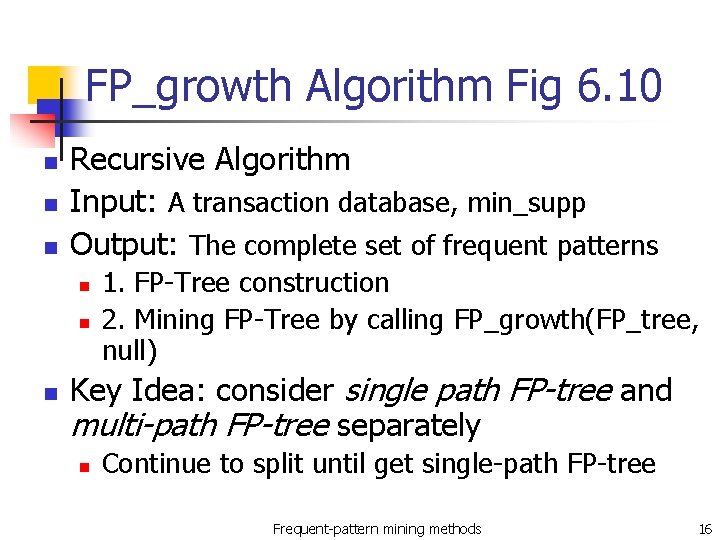

FP_growth Algorithm (Fig. 6.10)

- Recursive algorithm
- Input: a transaction database, min_supp
- Output: the complete set of frequent patterns
  1. FP-tree construction
  2. Mining the FP-tree by calling FP_growth(FP_tree, null)
- Key idea: consider single-path and multi-path FP-trees separately
  - Continue to split until a single-path FP-tree is obtained

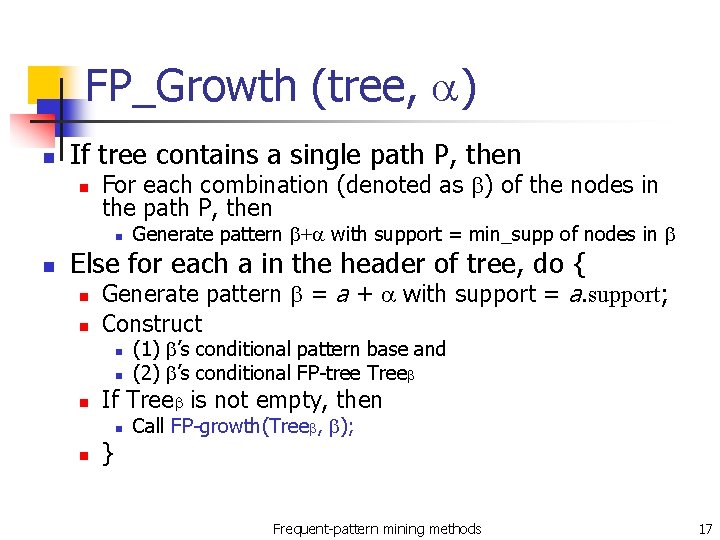

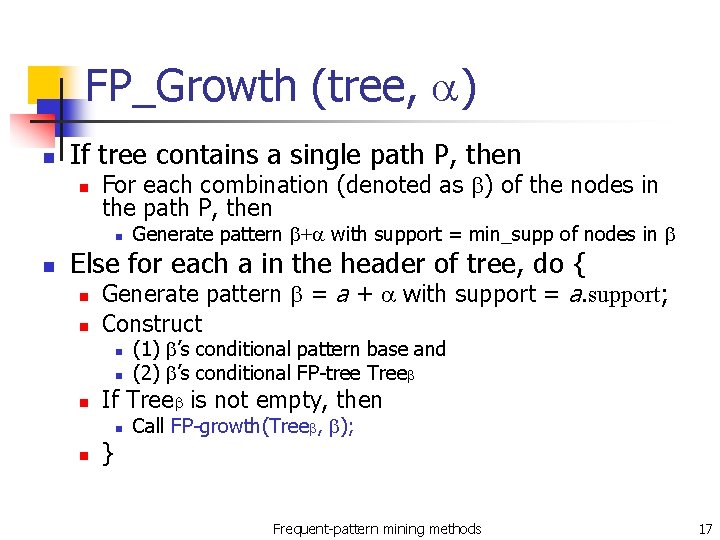

FP_Growth(tree, α)

- If tree contains a single path P, then
  - for each combination (denoted β) of the nodes in the path P:
    - generate pattern β ∪ α with support = the minimum support of the nodes in β
- Else, for each a_i in the header of tree, do {
  - generate pattern β = a_i ∪ α with support = a_i.support;
  - construct (1) β's conditional pattern base and (2) β's conditional FP-tree Tree_β;
  - if Tree_β is not empty, then call FP_growth(Tree_β, β);
- }
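The recursion can be sketched compactly. This is a simplified pattern-growth version, not the textbook's Fig. 6.10: it keeps each conditional pattern base as a weighted list of item sequences instead of building explicit FP-tree nodes, and it omits the single-path shortcut. The function name and the alphabetical tie-break are assumptions made for illustration.

```python
from collections import Counter

def pattern_growth(weighted_db, min_support, suffix=()):
    """weighted_db: list of (items, count). Returns {frozenset: support}."""
    counts = Counter()
    for items, n in weighted_db:
        for item in set(items):
            counts[item] += n
    frequent = {i: c for i, c in counts.items() if c >= min_support}
    # Descending support; alphabetical tie-break is an assumption.
    order = sorted(frequent, key=lambda i: (-frequent[i], i))
    rank = {item: r for r, item in enumerate(order)}
    # Keep only frequent items, each transaction in the fixed item order.
    projected = [
        (sorted((i for i in set(items) if i in rank), key=rank.__getitem__), n)
        for items, n in weighted_db
    ]
    patterns = {}
    for item in reversed(order):
        beta = frozenset(suffix) | {item}
        patterns[beta] = frequent[item]
        # Conditional pattern base of beta: the prefixes that precede `item`.
        base = [(items[: items.index(item)], n)
                for items, n in projected if item in items]
        base = [(prefix, n) for prefix, n in base if prefix]
        if base:
            patterns.update(pattern_growth(base, min_support, tuple(beta)))
    return patterns

TRANSACTIONS = [
    ["f", "a", "c", "d", "g", "i", "m", "p"],
    ["a", "b", "c", "f", "l", "m", "o"],
    ["b", "f", "h", "j", "o"],
    ["b", "c", "k", "s", "p"],
    ["a", "f", "c", "e", "l", "p", "m", "n"],
]
patterns = pattern_growth([(t, 1) for t in TRANSACTIONS], min_support=3)
for itemset, support in sorted(patterns.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), support)
```

Each pattern is produced exactly once, from the item that comes last in the ordering at that recursion level, so no candidate set is generated or tested.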

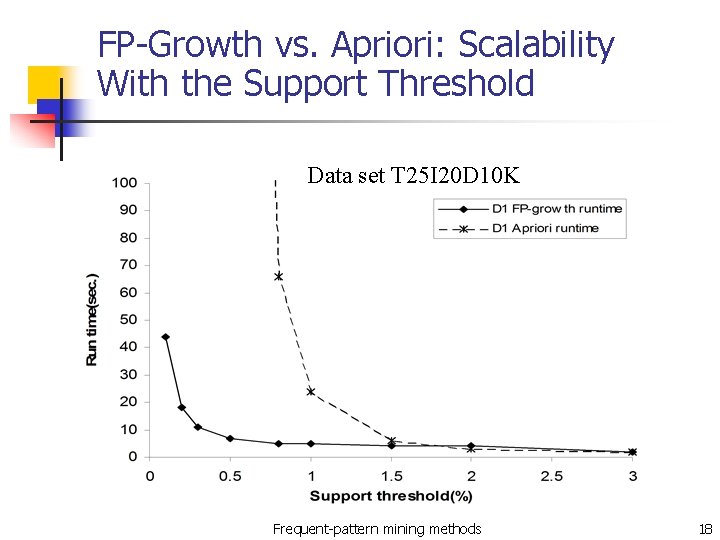

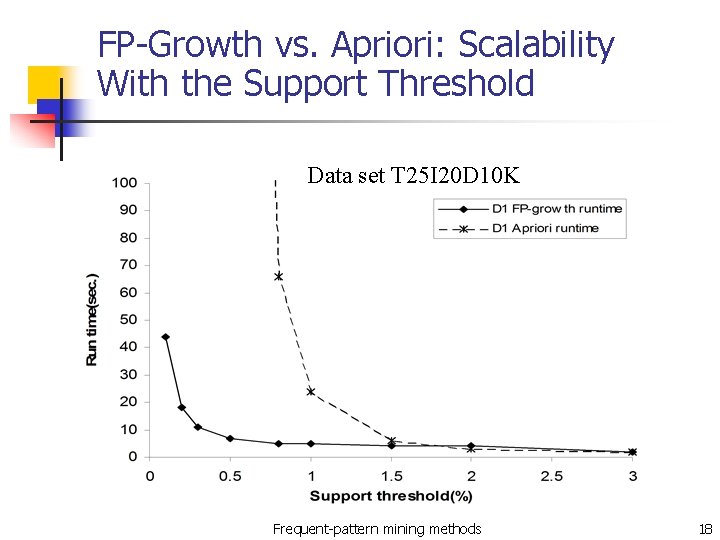

FP-Growth vs. Apriori: Scalability With the Support Threshold

- Data set: T25I20D10K

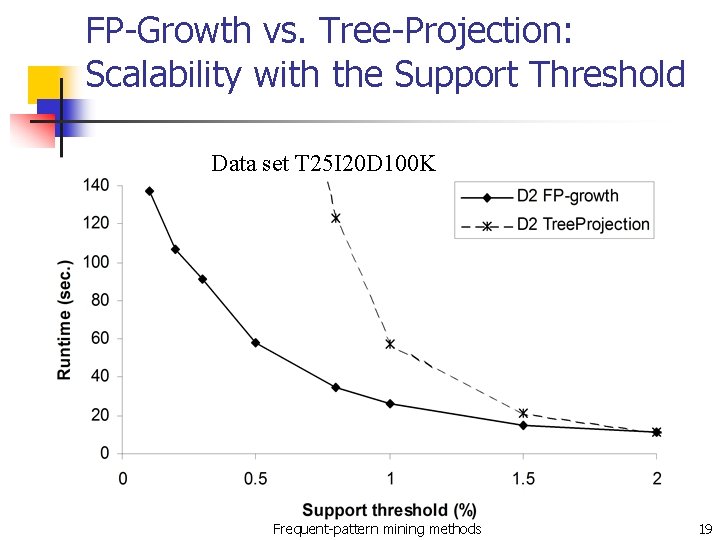

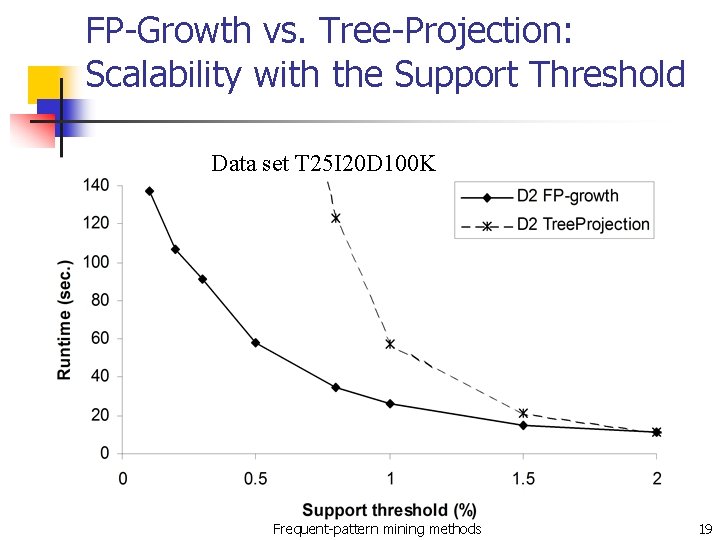

FP-Growth vs. Tree-Projection: Scalability with the Support Threshold

- Data set: T25I20D100K

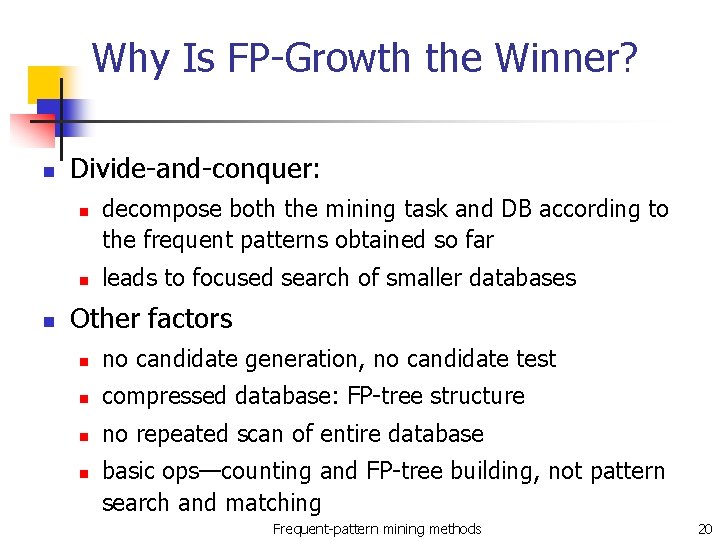

Why Is FP-Growth the Winner?

- Divide-and-conquer:
  - decomposes both the mining task and the DB according to the frequent patterns obtained so far
  - leads to a focused search of smaller databases
- Other factors:
  - no candidate generation, no candidate test
  - compressed database: FP-tree structure
  - no repeated scan of the entire database
  - basic operations are counting and FP-tree building, not pattern search and matching

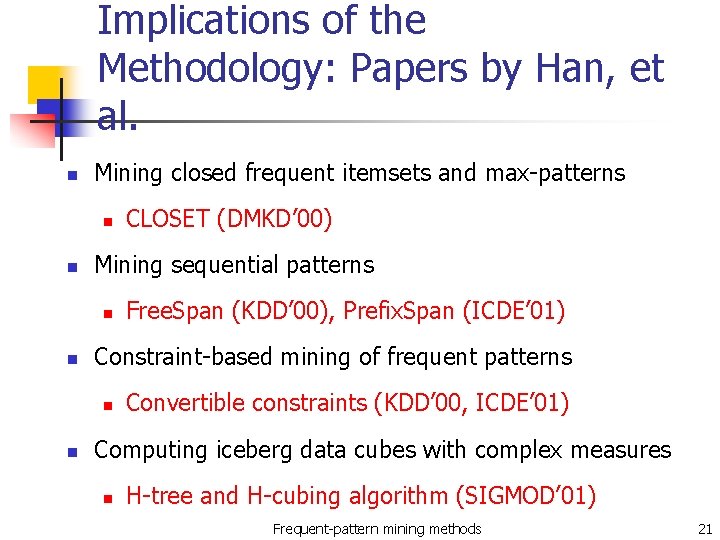

Implications of the Methodology: Papers by Han, et al.

- Mining closed frequent itemsets and max-patterns
  - CLOSET (DMKD'00)
- Mining sequential patterns
  - FreeSpan (KDD'00), PrefixSpan (ICDE'01)
- Constraint-based mining of frequent patterns
  - Convertible constraints (KDD'00, ICDE'01)
- Computing iceberg data cubes with complex measures
  - H-tree and H-cubing algorithm (SIGMOD'01)

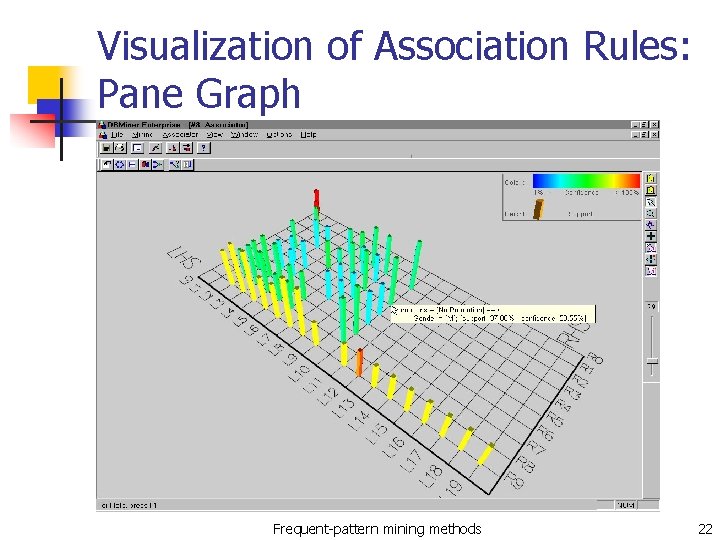

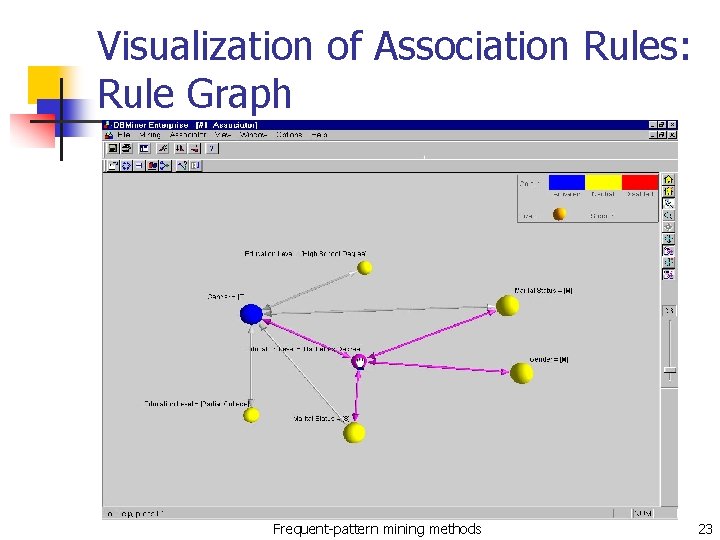

Visualization of Association Rules: Pane Graph

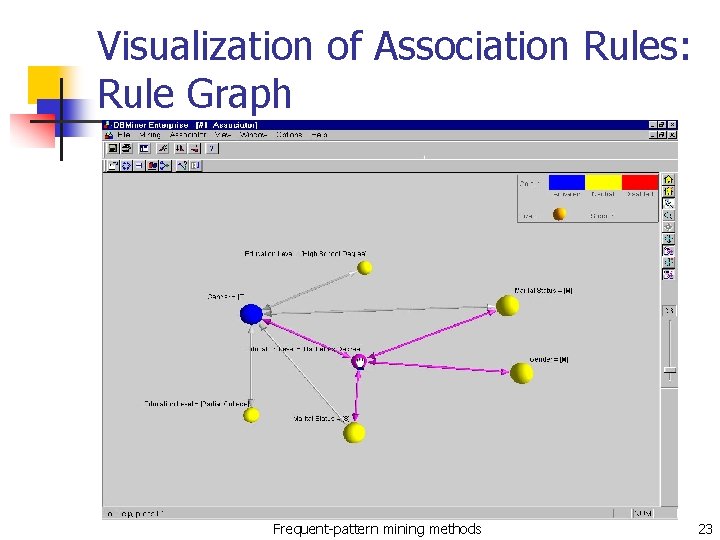

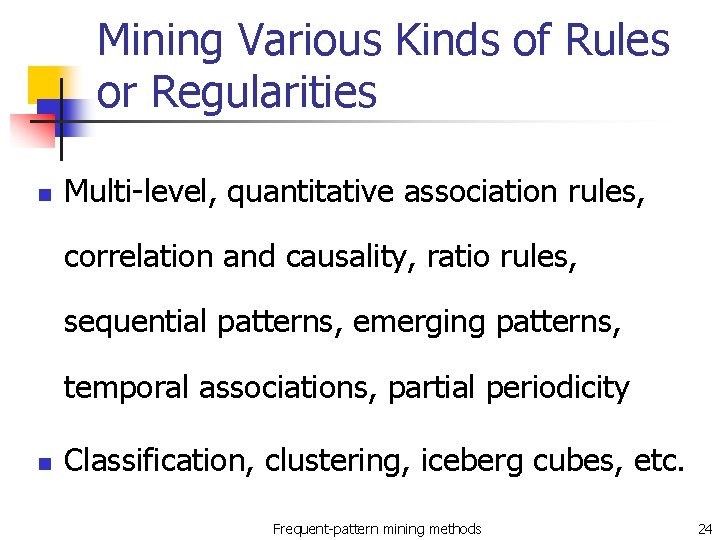

Visualization of Association Rules: Rule Graph

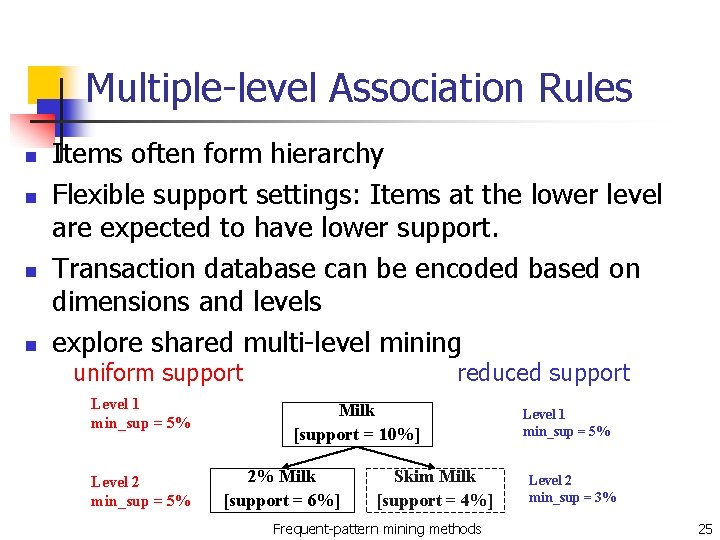

Mining Various Kinds of Rules or Regularities

- Multi-level and quantitative association rules, correlation and causality, ratio rules, sequential patterns, emerging patterns, temporal associations, partial periodicity
- Classification, clustering, iceberg cubes, etc.

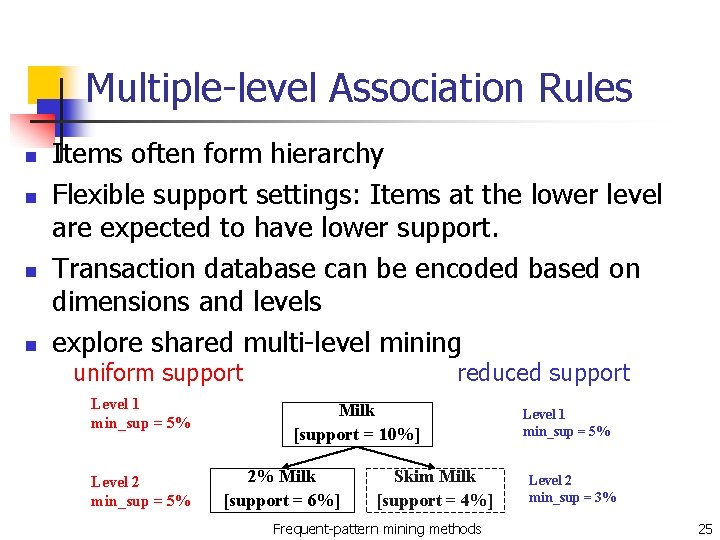

Multiple-level Association Rules

- Items often form a hierarchy.
- Flexible support settings: items at the lower level are expected to have lower support.
- The transaction database can be encoded based on dimensions and levels.
- Explore shared multi-level mining.

| Level | Item | Support | min_sup (uniform support) | min_sup (reduced support) |
|---|---|---|---|---|
| 1 | Milk | 10% | 5% | 5% |
| 2 | 2% Milk | 6% | 5% | 3% |
| 2 | Skim Milk | 4% | 5% | 3% |
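The effect of the two support settings on the milk example can be sketched as below. The dictionaries and the function name `frequent_at_levels` are introduced here for illustration.

```python
SUPPORT = {"Milk": 0.10, "2% Milk": 0.06, "Skim Milk": 0.04}
LEVEL = {"Milk": 1, "2% Milk": 2, "Skim Milk": 2}

def frequent_at_levels(min_sup_by_level):
    """Items whose support reaches the threshold of their own level."""
    return [item for item, s in SUPPORT.items()
            if s >= min_sup_by_level[LEVEL[item]]]

uniform = frequent_at_levels({1: 0.05, 2: 0.05})  # same threshold everywhere
reduced = frequent_at_levels({1: 0.05, 2: 0.03})  # lower threshold at level 2
print(uniform)
print(reduced)
```

With uniform support, Skim Milk (4%) is missed. With reduced support at level 2 it becomes frequent.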

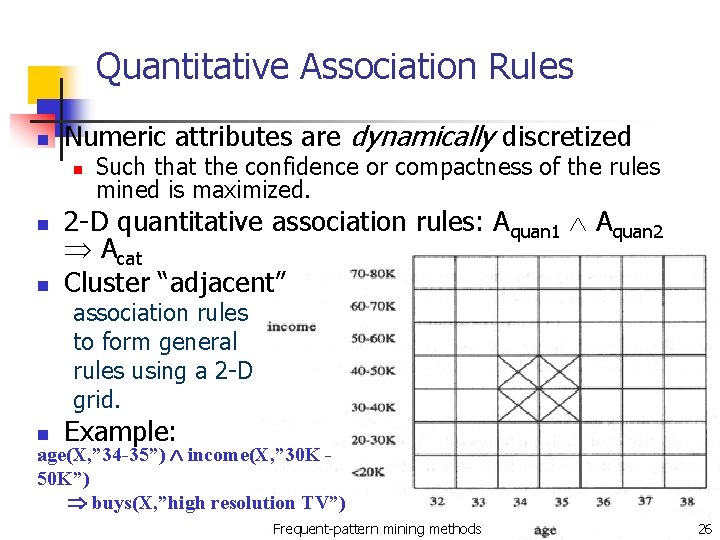

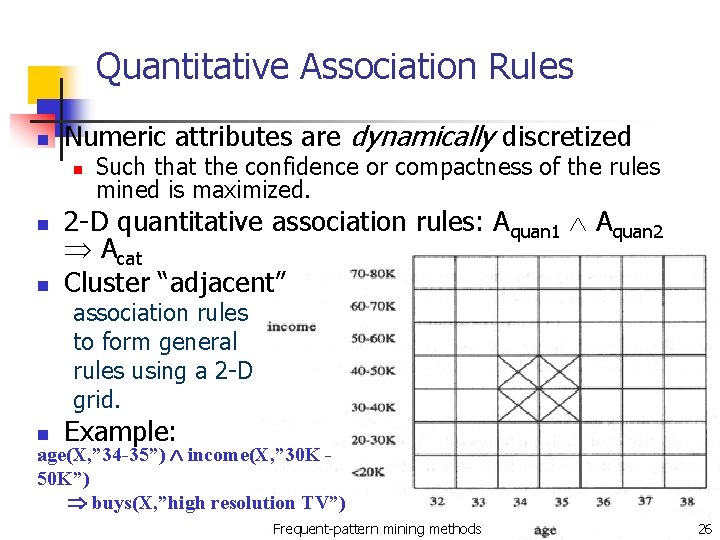

Quantitative Association Rules

- Numeric attributes are dynamically discretized
  - such that the confidence or compactness of the rules mined is maximized.
- 2-D quantitative association rules: A_quan1 ∧ A_quan2 ⇒ A_cat
- Cluster "adjacent" association rules to form general rules using a 2-D grid.
- Example: age(X, "34-35") ∧ income(X, "30K-50K") ⇒ buys(X, "high resolution TV")

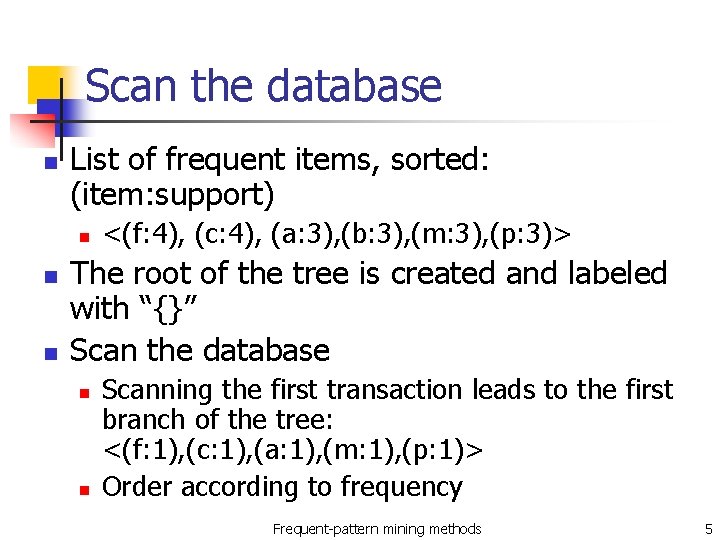

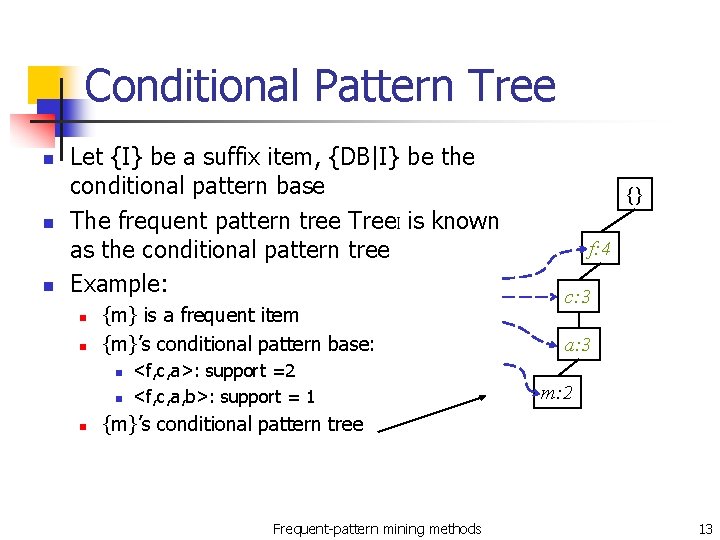

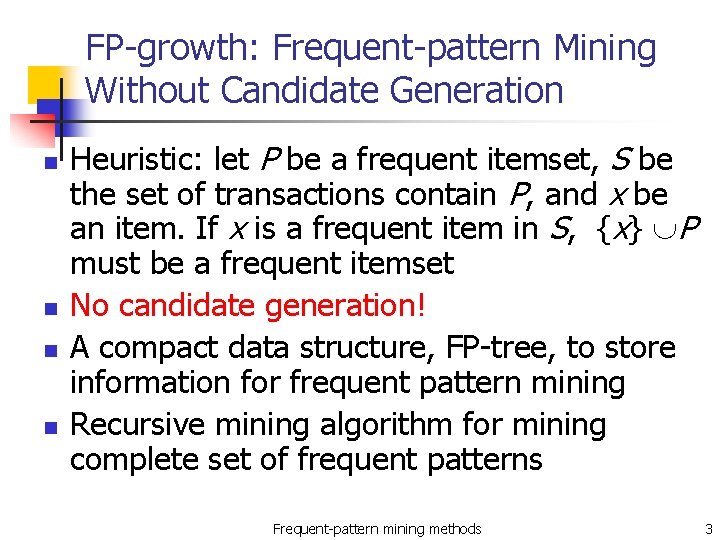

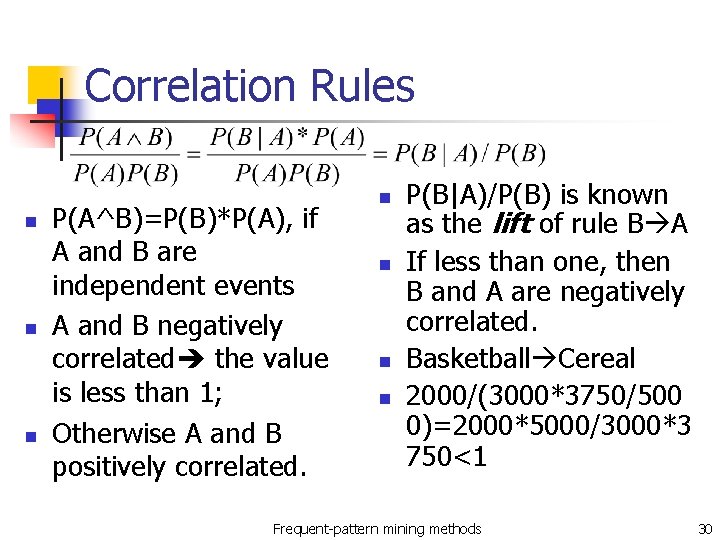

Redundant Rules [SA 95]

- Which rule is redundant?
  - milk ⇒ wheat bread [support = 8%, confidence = 70%]
  - "skim milk" ⇒ wheat bread [support = 2%, confidence = 72%]
- The first rule is more general than the second rule.
- A rule is redundant if its support is close to the "expected" value, based on a general rule, and its confidence is close to that of the general rule.
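The redundancy test can be sketched as a comparison against an expected support. The slide does not say what share of milk sales is skim milk or how close "close" is, so the share of 0.25 and both tolerances below are assumptions chosen only to make the example concrete.

```python
def is_redundant(general, specific, share, sup_tol=0.005, conf_tol=0.05):
    """True if the specific rule adds little beyond the general rule.
    share: assumed fraction of the general item made up by the specific item."""
    expected_support = general["support"] * share
    close_support = abs(specific["support"] - expected_support) <= sup_tol
    close_confidence = abs(specific["confidence"] - general["confidence"]) <= conf_tol
    return close_support and close_confidence

milk_rule = {"support": 0.08, "confidence": 0.70}
skim_rule = {"support": 0.02, "confidence": 0.72}
# Assumption: about one quarter of the milk sold is skim milk.
redundant = is_redundant(milk_rule, skim_rule, share=0.25)
print(redundant)
```

Under that assumed share, the expected support of the skim-milk rule is 2%, which matches the observed value, so the second rule would count as redundant.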

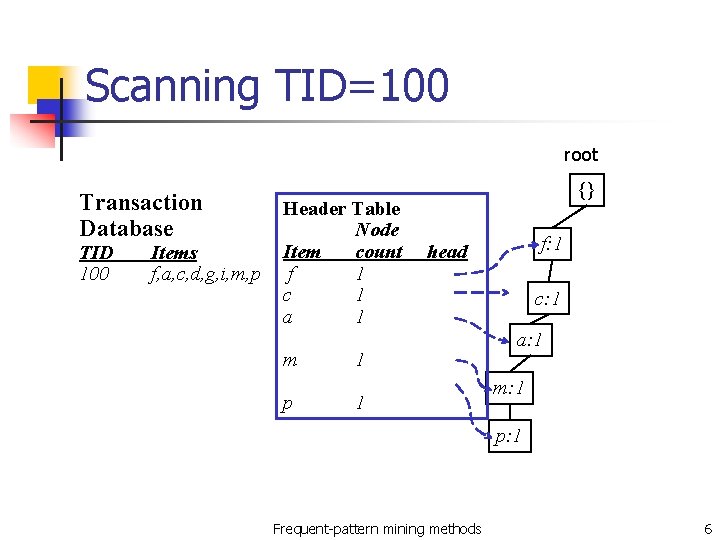

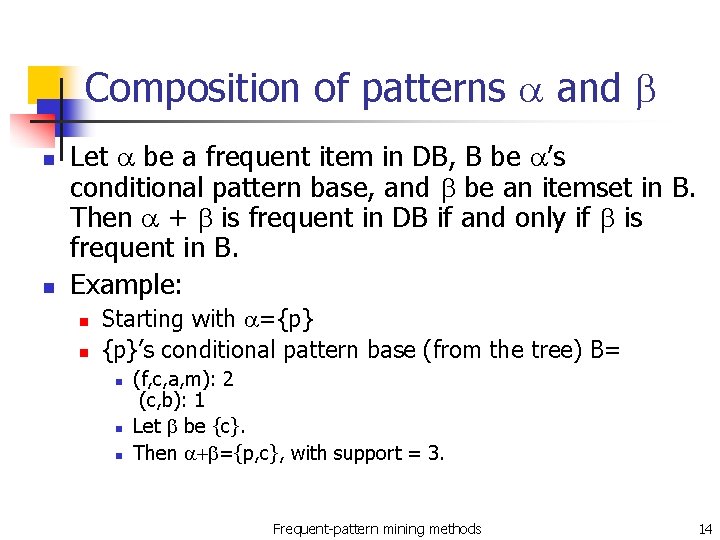

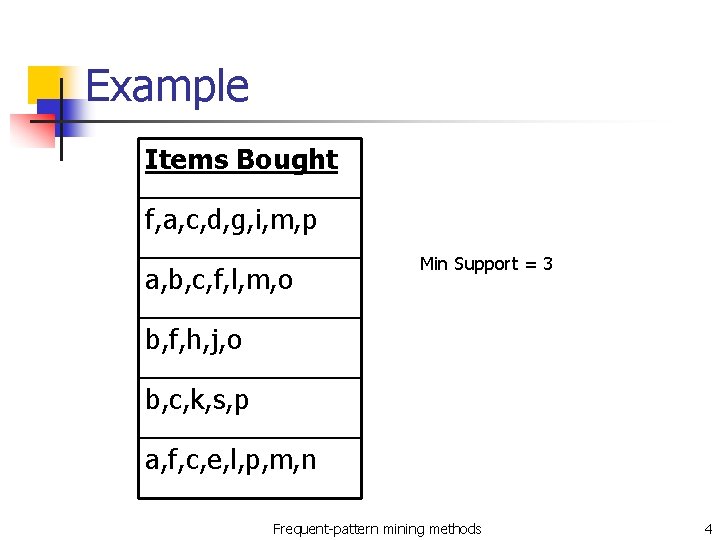

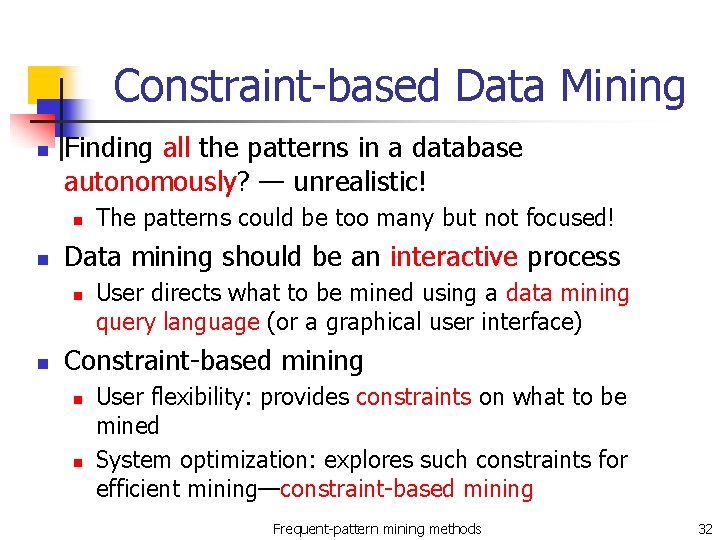

INCREMENTAL MINING [CHNW 96]

- Rules in DB were found, and a set of new tuples db is added to DB.
- Task: find new rules in DB + db.
  - Usually, DB is much larger than db.
- Properties of itemsets:
  - frequent in DB + db if frequent in both DB and db.
  - infrequent in DB + db if infrequent in both DB and db.
  - frequent only in DB: merge with the counts in db.
    - No DB scan is needed!
  - frequent only in db: scan DB once to update their itemset counts.
- The same principle is applicable to distributed/parallel mining.
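The four cases can be sketched as follows, assuming a relative minimum support (a ratio) so that "frequent in both" implies "frequent in the union". The data, function names, and candidate list are hypothetical. For brevity the sketch rescans DB per itemset, whereas the slide's point is that one pass over DB can serve all such itemsets.

```python
def count_in(transactions, itemset):
    """Number of transactions that contain every item of itemset."""
    return sum(1 for t in transactions if itemset <= t)

def incremental_update(old_frequent, DB, db, candidates, min_ratio):
    total = len(DB) + len(db)
    updated, rescanned = {}, []
    for itemset in candidates:
        inc = count_in(db, itemset)              # scanning the small db is cheap
        if itemset in old_frequent:
            count = old_frequent[itemset] + inc  # stored DB count, no DB scan
        elif inc >= min_ratio * len(db):
            count = count_in(DB, itemset) + inc  # frequent only in db: needs DB
            rescanned.append(itemset)
        else:
            continue                             # infrequent in both DB and db
        if count >= min_ratio * total:
            updated[itemset] = count
    return updated, rescanned

# Hypothetical data: four old transactions and two new ones.
DB = [frozenset("ab"), frozenset("ab"), frozenset("ac"), frozenset("bc")]
db = [frozenset("ac"), frozenset("ac")]
candidates = [frozenset(s) for s in ("a", "b", "c", "ab", "ac", "bc")]
min_ratio = 0.5
# Stands in for the result of the earlier mining run on DB.
old_frequent = {s: count_in(DB, s) for s in candidates
                if count_in(DB, s) >= min_ratio * len(DB)}
updated, rescanned = incremental_update(old_frequent, DB, db, candidates, min_ratio)
print({"".join(sorted(s)): n for s, n in updated.items()})
print(["".join(sorted(s)) for s in rescanned])
```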

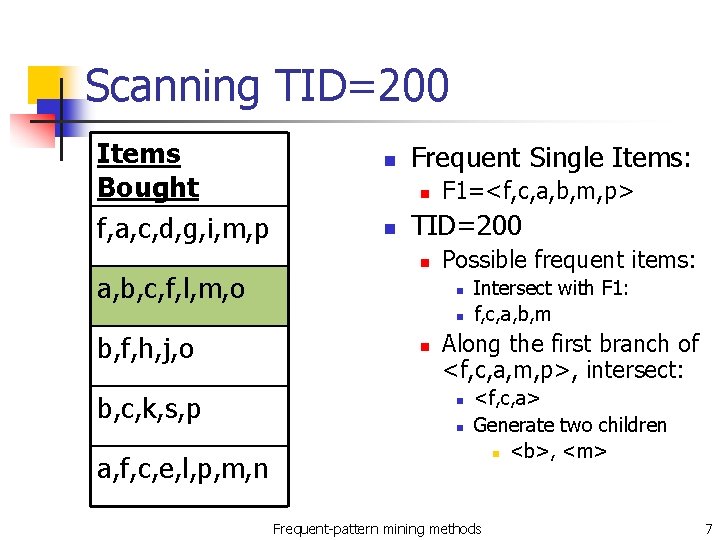

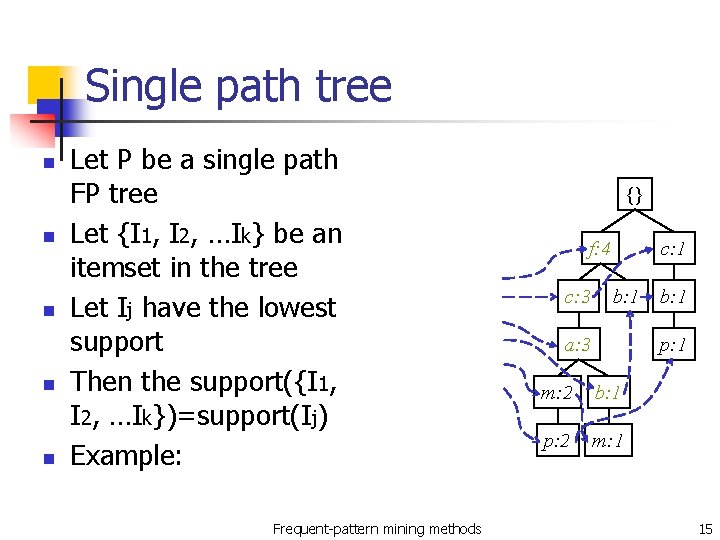

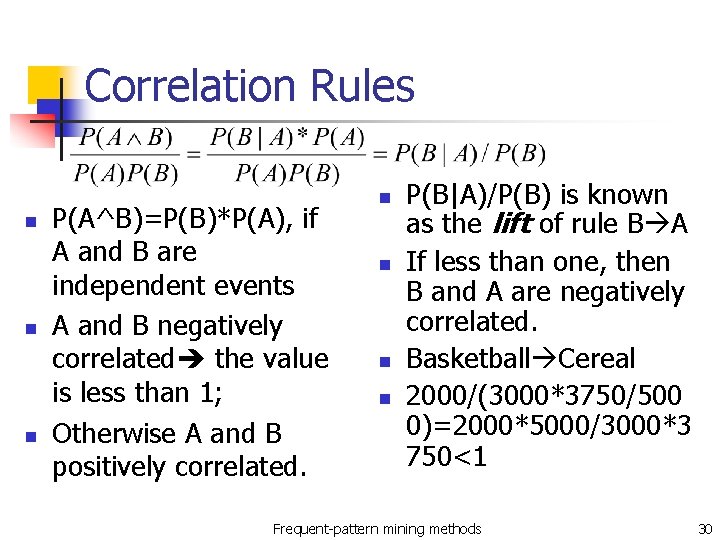

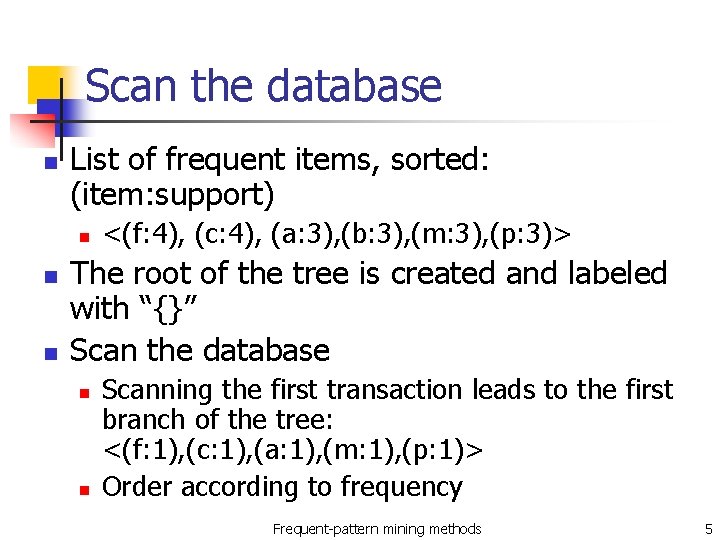

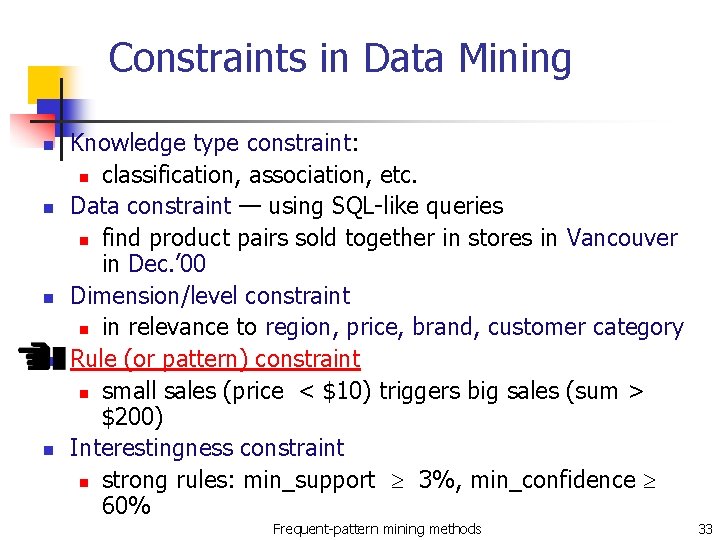

CORRELATION RULES

- Association does not measure correlation [BMS 97, AY 98].
- Among 5000 students:
  - 3000 play basketball, 3750 eat cereal, 2000 do both
  - play basketball ⇒ eat cereal [40%, 66.7%]
- The conclusion "basketball and cereal are correlated" is misleading
  - because the overall percentage of students eating cereal is 75%, higher than 66.7%.
- Confidence does not always give the correct picture!

Correlation Rules

- P(A ∧ B) = P(A) · P(B) if A and B are independent events.
- Consider the ratio P(A ∧ B) / (P(A) · P(B)): A and B are negatively correlated if the value is less than 1, and positively correlated if it is greater than 1.
- P(B|A) / P(B) is known as the lift of the rule A ⇒ B.
  - If it is less than one, then B and A are negatively correlated.
- Basketball ⇒ Cereal: $\frac{2000}{3000 \times 3750 / 5000} = \frac{2000 \times 5000}{3000 \times 3750} \approx 0.89 < 1$
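The basketball and cereal numbers can be checked with a few lines. The function name `rule_measures` is introduced here for illustration.

```python
def rule_measures(n_total, n_a, n_b, n_ab):
    """Support, confidence and lift of the rule A => B from raw counts."""
    support = n_ab / n_total
    confidence = n_ab / n_a                 # P(B|A)
    lift = confidence / (n_b / n_total)     # P(B|A) / P(B)
    return support, confidence, lift

support, confidence, lift = rule_measures(n_total=5000, n_a=3000, n_b=3750, n_ab=2000)
print(f"support={support:.3f} confidence={confidence:.3f} lift={lift:.3f}")
```

The confidence of 66.7% looks strong on its own, but the lift is below 1, which signals negative correlation.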

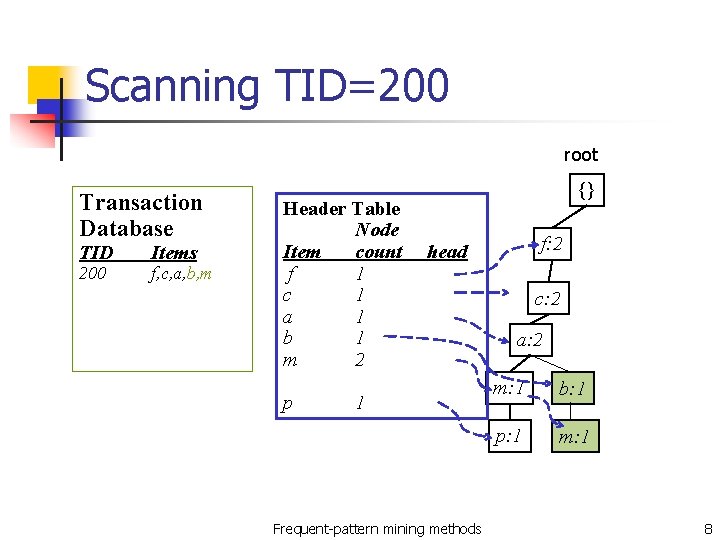

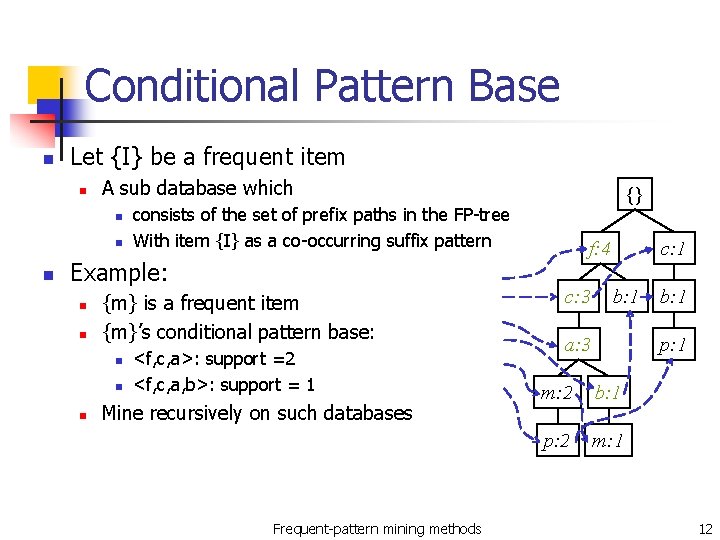

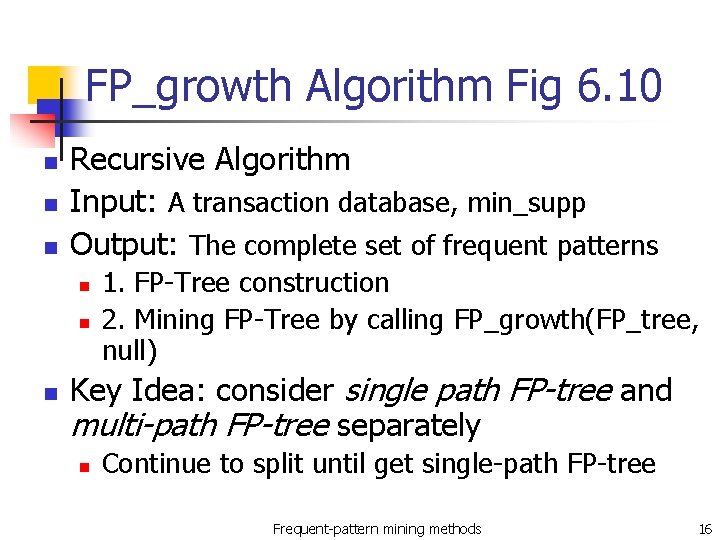

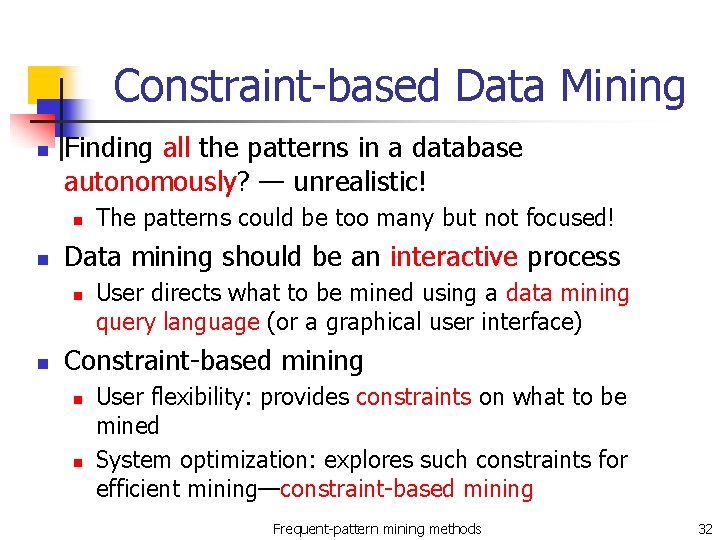

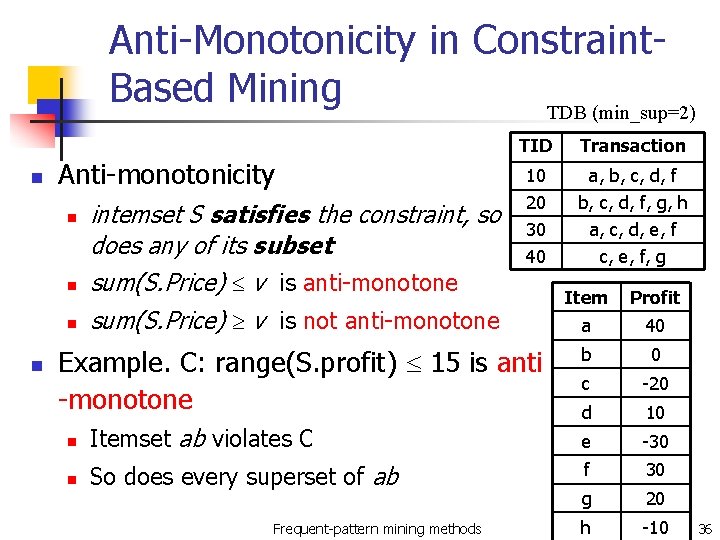

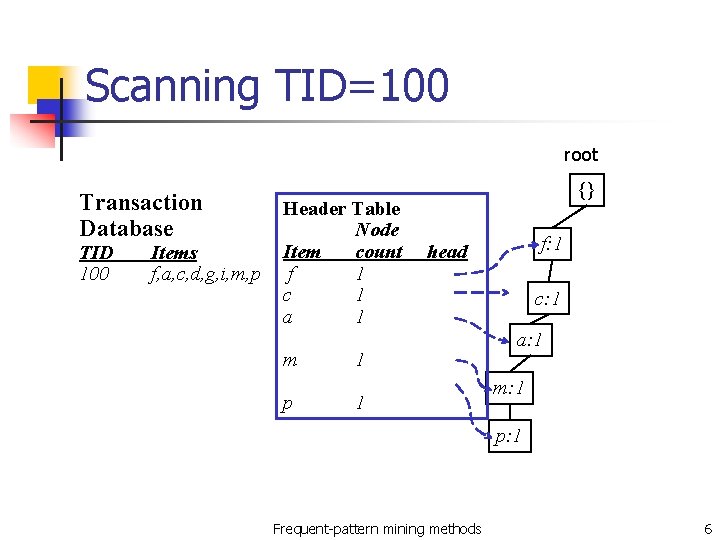

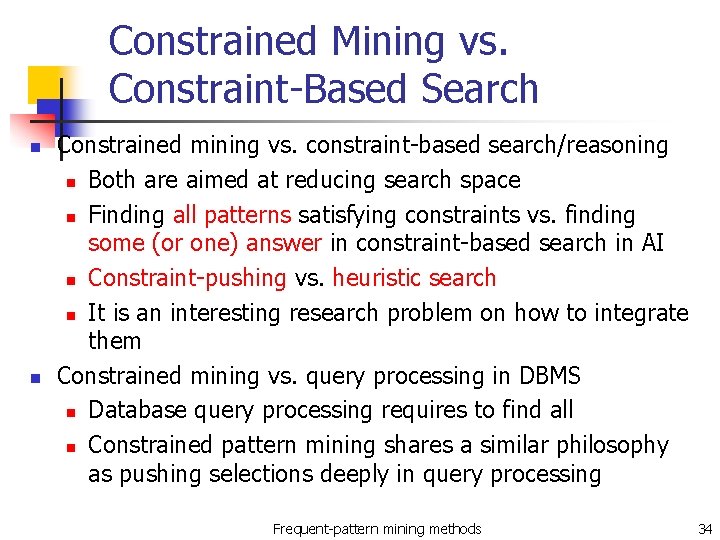

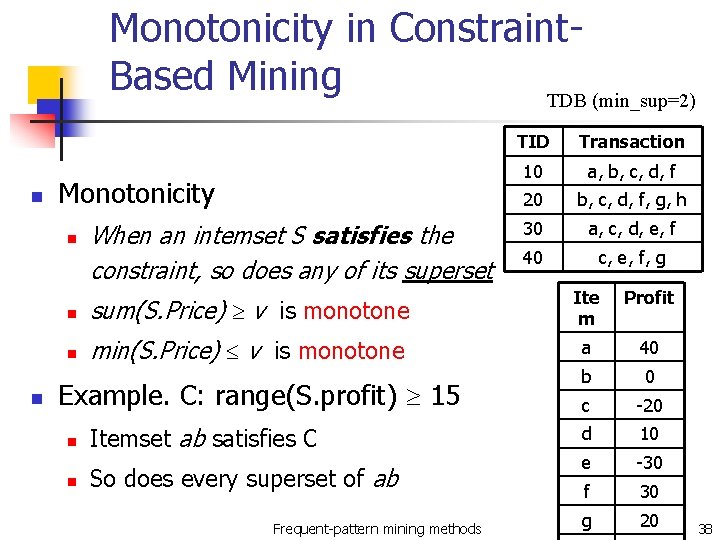

Chi-square Correlation [BMS 97]

- The cutoff value at the 95% significance level is 3.84, which is greater than the computed chi-square value of 0.9.
- Thus, we do not reject the independence assumption.

Constraint-based Data Mining

- Finding all the patterns in a database autonomously? Unrealistic!
  - The patterns could be too many but not focused!
- Data mining should be an interactive process.
  - The user directs what is to be mined using a data mining query language (or a graphical user interface).
- Constraint-based mining
  - User flexibility: the user provides constraints on what is to be mined.
  - System optimization: the system exploits such constraints for efficient mining.

Constraints in Data Mining

- Knowledge type constraint:
  - classification, association, etc.
- Data constraint, using SQL-like queries:
  - find product pairs sold together in stores in Vancouver in Dec. '00
- Dimension/level constraint:
  - in relevance to region, price, brand, customer category
- Rule (or pattern) constraint:
  - small sales (price < $10) triggers big sales (sum > $200)
- Interestingness constraint:
  - strong rules: min_support ≥ 3%, min_confidence ≥ 60%

Constrained Mining vs. Constraint-Based Search

- Constrained mining vs. constraint-based search/reasoning
  - Both aim at reducing the search space.
  - Finding all patterns satisfying the constraints vs. finding some (or one) answer in constraint-based search in AI
  - Constraint-pushing vs. heuristic search
  - How to integrate them is an interesting research problem.
- Constrained mining vs. query processing in DBMS
  - Database query processing requires finding all answers.
  - Constrained pattern mining shares a similar philosophy with pushing selections deeply into query processing.

Constrained Frequent Pattern Mining: A Mining Query Optimization Problem

- Given a frequent pattern mining query with a set of constraints C, the algorithm should be
  - sound: it only finds frequent sets that satisfy the given constraints C
  - complete: all frequent sets satisfying the given constraints C are found
- A naïve solution
  - First find all frequent sets, and then test them for constraint satisfaction
- More efficient approaches:
  - Analyze the properties of constraints comprehensively
  - Push them as deeply as possible inside the frequent pattern computation.

Anti-Monotonicity in Constraint-Based Mining

- Anti-monotonicity
  - When an itemset S satisfies the constraint, so does any of its subsets.
  - sum(S.Price) ≤ v is anti-monotone
  - sum(S.Price) ≥ v is not anti-monotone
- Example. C: range(S.profit) ≤ 15 is anti-monotone
  - Itemset ab violates C
  - So does every superset of ab

TDB (min_sup=2)

| TID | Transaction |
|---|---|
| 10 | a, b, c, d, f |
| 20 | b, c, d, f, g, h |
| 30 | a, c, d, e, f |
| 40 | c, e, f, g |

| Item | Profit |
|---|---|
| a | 40 |
| b | 0 |
| c | -20 |
| d | 10 |
| e | -30 |
| f | 30 |
| g | 20 |
| h | -10 |
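The example constraint can be checked by brute force over the eight items. The sketch below reads C as range(S.profit) ≤ 15, where range is the maximum minus the minimum profit in S. The helper names are illustrative.

```python
from itertools import combinations

PROFIT = {"a": 40, "b": 0, "c": -20, "d": 10, "e": -30, "f": 30, "g": 20, "h": -10}

def profit_range(itemset):
    values = [PROFIT[i] for i in itemset]
    return max(values) - min(values)

def satisfies(itemset, v=15):
    """Constraint C: range(S.profit) <= v."""
    return profit_range(itemset) <= v

def supersets(base, universe):
    """Every superset of `base` drawn from `universe`, including base itself."""
    rest = sorted(set(universe) - set(base))
    for k in range(len(rest) + 1):
        for extra in combinations(rest, k):
            yield set(base) | set(extra)

ab_ok = satisfies({"a", "b"})  # range is 40 - 0 = 40, so C is violated
any_superset_ok = any(satisfies(s) for s in supersets({"a", "b"}, PROFIT))
print(ab_ok, any_superset_ok)
```

Because adding items can only widen the range, a miner can stop extending ab as soon as it violates C.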

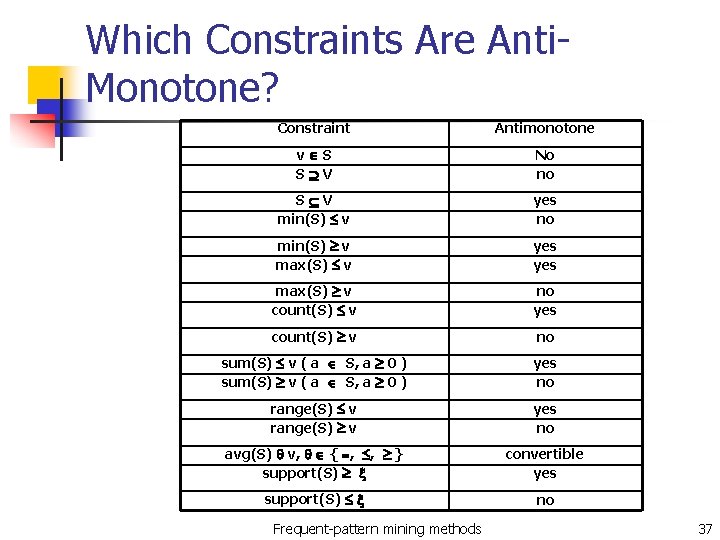

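Which Constraints Are Anti-Monotone?

| Constraint | Anti-monotone |
|---|---|
| v ∈ S | no |
| S ⊇ V | no |
| S ⊆ V | yes |
| min(S) ≤ v | no |
| min(S) ≥ v | yes |
| max(S) ≤ v | yes |
| max(S) ≥ v | no |
| count(S) ≤ v | yes |
| count(S) ≥ v | no |
| sum(S) ≤ v (∀a ∈ S, a ≥ 0) | yes |
| sum(S) ≥ v (∀a ∈ S, a ≥ 0) | no |
| range(S) ≤ v | yes |
| range(S) ≥ v | no |
| avg(S) θ v, θ ∈ {=, ≤, ≥} | convertible |
| support(S) ≥ ξ | yes |
| support(S) ≤ ξ | no |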

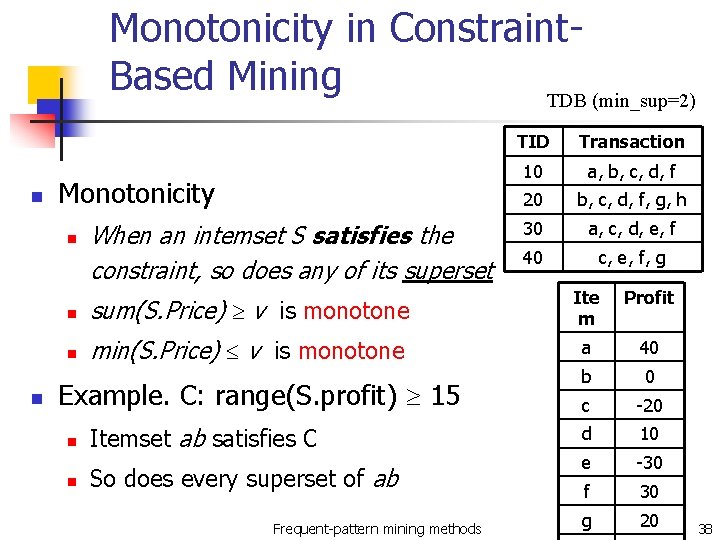

Monotonicity in Constraint-Based Mining

- Monotonicity
  - When an itemset S satisfies the constraint, so does any of its supersets.
  - sum(S.Price) ≥ v is monotone
  - min(S.Price) ≤ v is monotone
- Example. C: range(S.profit) ≥ 15
  - Itemset ab satisfies C
  - So does every superset of ab

TDB (min_sup=2)

| TID | Transaction |
|---|---|
| 10 | a, b, c, d, f |
| 20 | b, c, d, f, g, h |
| 30 | a, c, d, e, f |
| 40 | c, e, f, g |

| Item | Profit |
|---|---|
| a | 40 |
| b | 0 |
| c | -20 |
| d | 10 |
| e | -30 |
| f | 30 |
| g | 20 |
| h | -10 |
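A brute-force check of the monotone example, reading C as range(S.profit) ≥ 15. The names are illustrative, and the profit of h is taken from the anti-monotonicity slide, which uses the same TDB.

```python
from itertools import combinations

PROFIT = {"a": 40, "b": 0, "c": -20, "d": 10, "e": -30, "f": 30, "g": 20, "h": -10}

def range_at_least(itemset, v=15):
    """Constraint C: range(S.profit) >= v."""
    values = [PROFIT[i] for i in itemset]
    return max(values) - min(values) >= v

others = sorted(set(PROFIT) - {"a", "b"})
supersets_of_ab = [
    {"a", "b"} | set(extra)
    for k in range(len(others) + 1)
    for extra in combinations(others, k)
]
all_satisfy = all(range_at_least(s) for s in supersets_of_ab)
print(len(supersets_of_ab), all_satisfy)
```

Once ab satisfies a monotone constraint, the constraint no longer needs to be re-tested on any itemset grown from ab.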

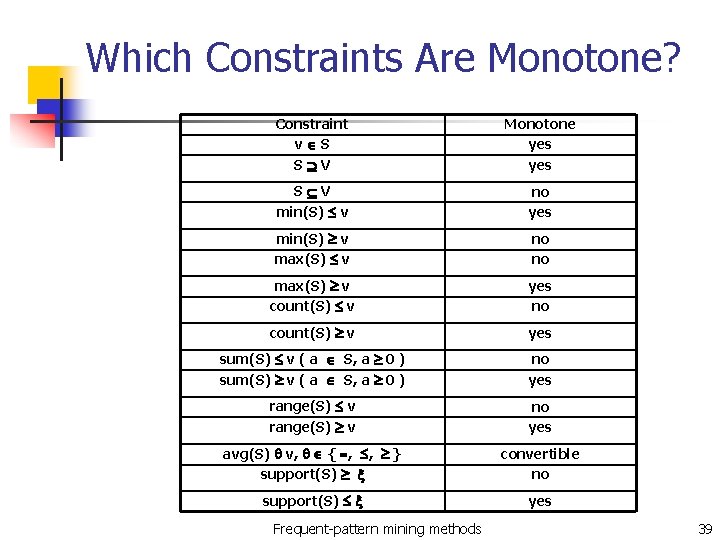

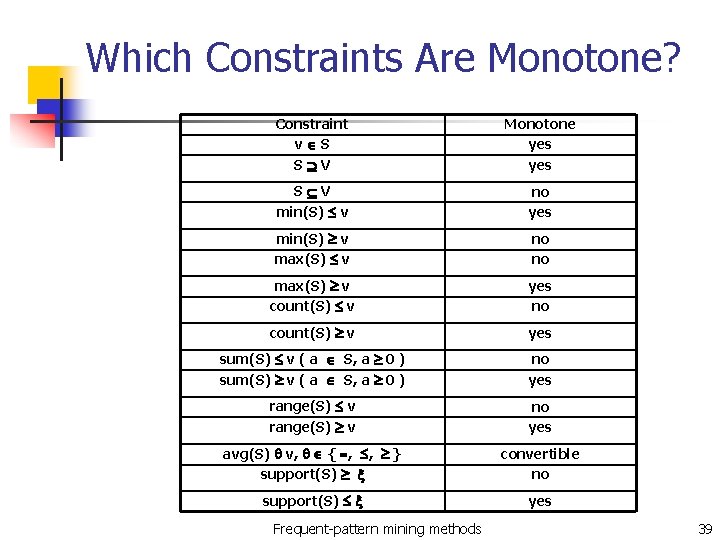

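Which Constraints Are Monotone?

| Constraint | Monotone |
|---|---|
| v ∈ S | yes |
| S ⊇ V | yes |
| S ⊆ V | no |
| min(S) ≤ v | yes |
| min(S) ≥ v | no |
| max(S) ≤ v | no |
| max(S) ≥ v | yes |
| count(S) ≤ v | no |
| count(S) ≥ v | yes |
| sum(S) ≤ v (∀a ∈ S, a ≥ 0) | no |
| sum(S) ≥ v (∀a ∈ S, a ≥ 0) | yes |
| range(S) ≤ v | no |
| range(S) ≥ v | yes |
| avg(S) θ v, θ ∈ {=, ≤, ≥} | convertible |
| support(S) ≥ ξ | no |
| support(S) ≤ ξ | yes |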

Succinctness, Convertible, Inconvertible Constraints in Book

- We will not consider these in this course.

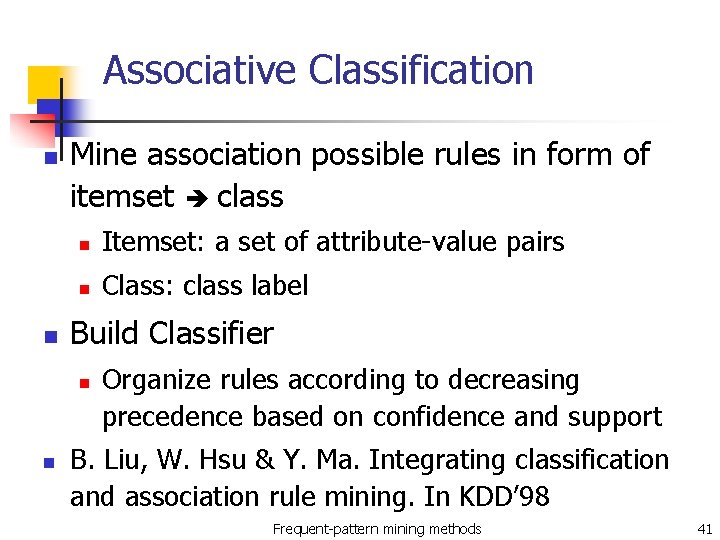

Associative Classification

- Mine possible association rules in the form itemset ⇒ class
  - Itemset: a set of attribute-value pairs
  - Class: class label
- Build classifier
  - Organize rules according to decreasing precedence based on confidence and support
- B. Liu, W. Hsu & Y. Ma. Integrating classification and association rule mining. In KDD'98
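The rule-ordering idea can be sketched as a sort followed by a first-match lookup. This is a hedged illustration, not the procedure of the cited paper: the `Rule` class, the three rules, their numbers, and the default label are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    itemset: frozenset   # attribute-value pairs, e.g. ("age", "<=30")
    label: str           # class label predicted by the rule
    confidence: float
    support: float

def rank_rules(rules):
    """Higher confidence first, then higher support; ties keep input order."""
    return sorted(rules, key=lambda r: (-r.confidence, -r.support))

def classify(ranked, record, default):
    """Return the label of the first rule whose itemset the record contains."""
    for rule in ranked:
        if rule.itemset <= record:
            return rule.label
    return default

# Hypothetical rules, for illustration only.
r1 = Rule(frozenset({("age", "<=30")}), "yes", 0.80, 0.30)
r2 = Rule(frozenset({("student", "no")}), "no", 0.90, 0.20)
r3 = Rule(frozenset({("age", "<=30"), ("student", "no")}), "no", 0.90, 0.10)
ranked = rank_rules([r1, r2, r3])

young_student = frozenset({("age", "<=30"), ("student", "yes")})
young_non_student = frozenset({("age", "<=30"), ("student", "no")})
print(classify(ranked, young_student, default="no"))
print(classify(ranked, young_non_student, default="no"))
```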

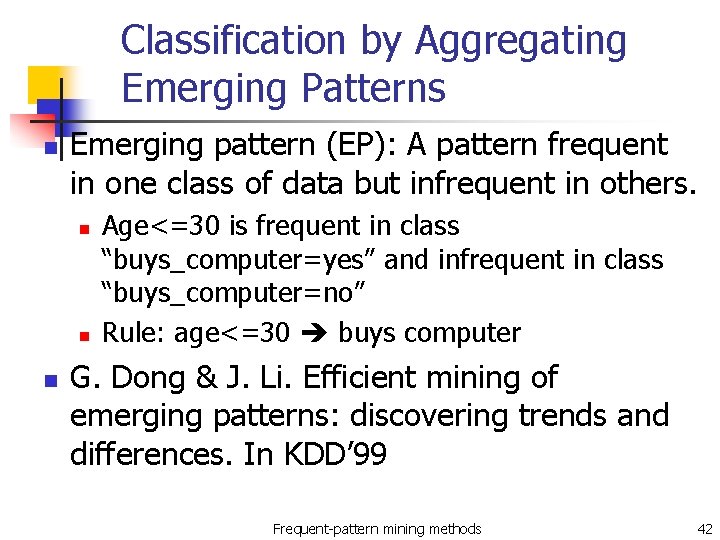

Classification by Aggregating Emerging Patterns

- Emerging pattern (EP): a pattern frequent in one class of data but infrequent in others.
  - age<=30 is frequent in class "buys_computer=yes" and infrequent in class "buys_computer=no"
  - Rule: age<=30 ⇒ buys computer
- G. Dong & J. Li. Efficient mining of emerging patterns: discovering trends and differences. In KDD'99