Association Analysis / Association Rule Mining
Last updated 11/25/19




What Is Association Rule Mining?
Association rule mining finds frequent patterns or associations among sets of items or objects, usually in transactional data. Applications include market basket analysis, cross-marketing, and catalog design.


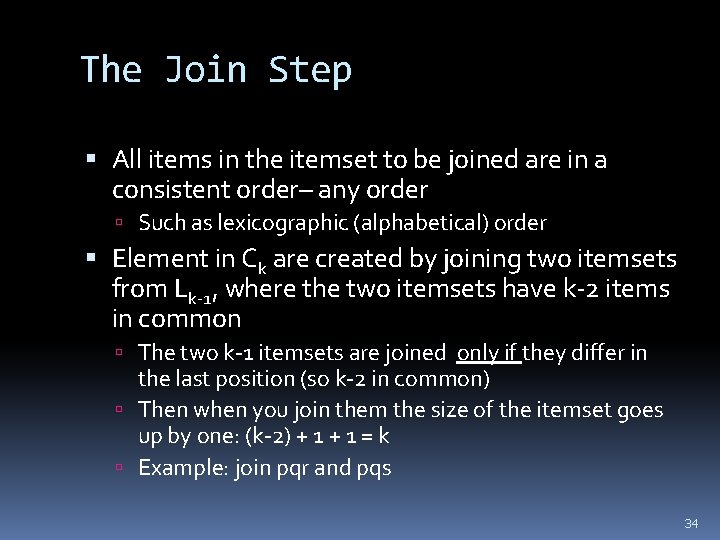

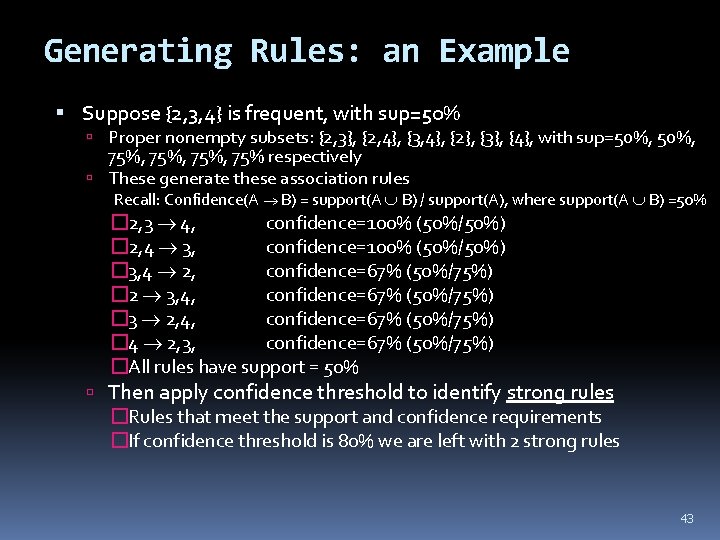

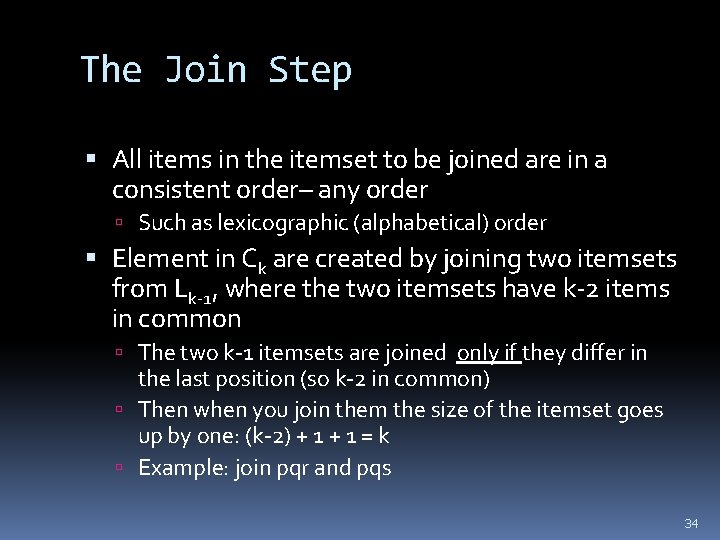

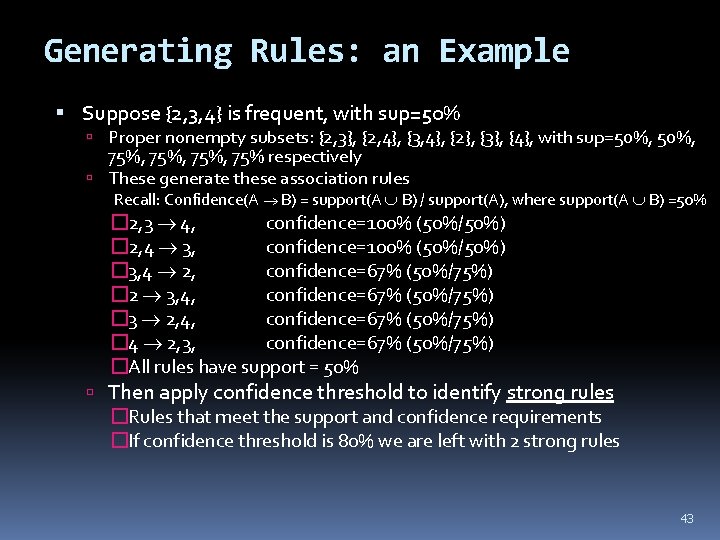

Association Rules Examples
Rule form: "Body → Head [support, confidence]".
- buys(x, "diapers") → buys(x, "beer") [0.5%, 60%]
- buys(x, "bread") → buys(x, "milk") [0.6%, 65%]
- major(x, "CS") ∧ takes(x, "DB") → grade(x, "A") [1%, 75%]
- age = "30-45", income = "50K-75K" → car = "SUV"

There is usually only one relation, so a rule is represented more simply: Diapers → Beer [0.5%, 60%].
We have seen rules before. In what context? Rule learning (e.g., Ripper). What is the left side of a rule called? The right side? LHS: antecedent; RHS: consequent.

Market-Basket Analysis & Finding Associations
Question: do items occur together? Proposed by Agrawal et al. in 1993, this is an important data mining task, studied extensively by the database and data mining communities.
- It assumes all data are categorical, usually binary: we care whether someone purchased "Diet Coke", not how many bottles of Diet Coke they purchased.
- It was initially used for market basket analysis, to find how items purchased by customers are related.

Example: Bread → Milk [sup = 5%, conf = 100%]

Association Rule Mining: The Goal
Given: a database of transactions, where each transaction is a list of items (an itemset), for example the items purchased by a customer.
Goal: find all rules that correlate one set of items with another set.
Example: 98% of people who purchase tires and auto accessories also get automotive services done.

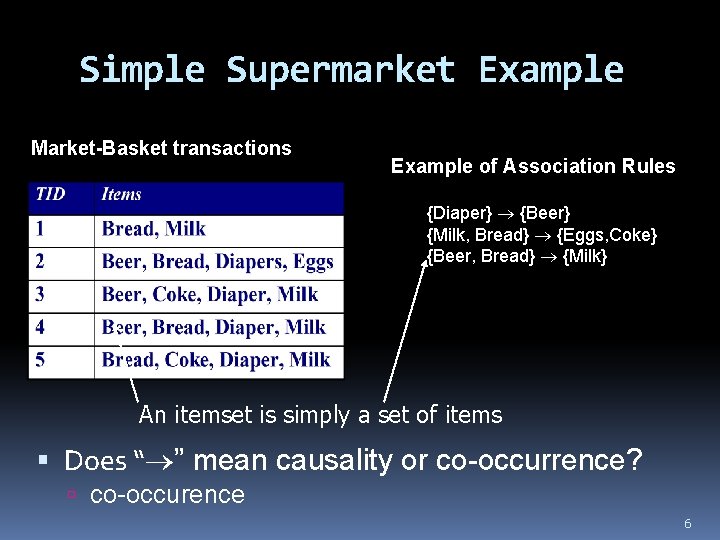

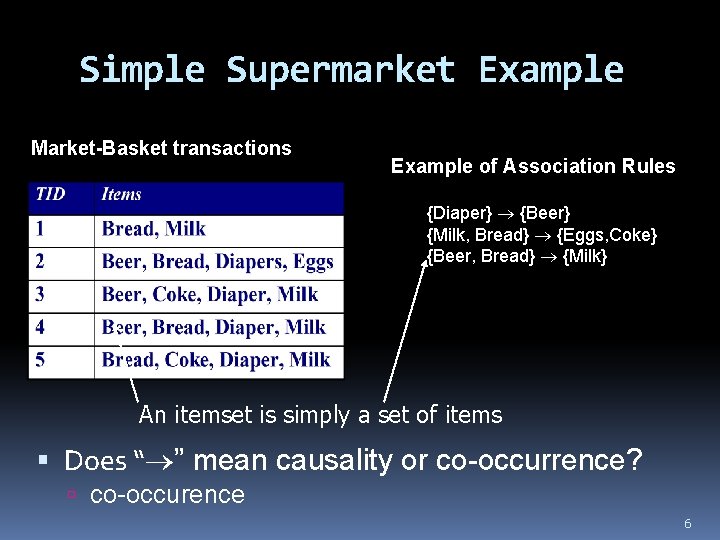

Simple Supermarket Example
Market-basket transactions, with examples of association rules:
- {Diaper} → {Beer}
- {Milk, Bread} → {Eggs, Coke}
- {Beer, Bread} → {Milk}

An itemset is simply a set of items. Does "→" mean causality or co-occurrence? Co-occurrence.

Transaction Data Representation
A simplistic view of "shopping baskets". Some important information is not considered:
- the quantity of each item purchased
- the price paid
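As a minimal sketch of this simplistic view (the baskets and quantities below are hypothetical), the Python snippet drops the quantities, turns each basket into an itemset, and then encodes each itemset as one row of a binary item matrix:

```python
# Hypothetical baskets of (item, quantity) pairs, for illustration only.
raw_baskets = [
    [("Milk", 2), ("Bread", 1)],
    [("Diaper", 1), ("Beer", 6)],
    [("Milk", 1), ("Diaper", 3), ("Beer", 1)],
]

# Keep only which items were bought: each basket becomes a set (an itemset).
# Quantity is discarded, and price was never recorded.
itemsets = [{item for item, _qty in basket} for basket in raw_baskets]

# Binary (0/1) matrix: one row per transaction, one column per distinct item.
items = sorted(set().union(*itemsets))
matrix = [[1 if item in basket else 0 for item in items] for basket in itemsets]

print(items)   # ['Beer', 'Bread', 'Diaper', 'Milk']
for row in matrix:
    print(row)
```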

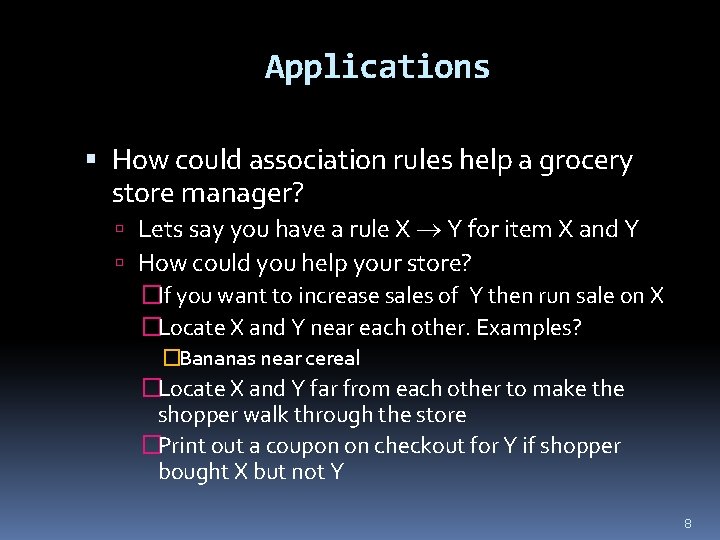

Applications
How could association rules help a grocery store manager? Let's say you have a rule X → Y for items X and Y. How could you help your store?
- If you want to increase sales of Y, run a sale on X.
- Locate X and Y near each other. Examples? Bananas near cereal.
- Locate X and Y far from each other, to make the shopper walk through the store.
- Print out a coupon at checkout for Y if the shopper bought X but not Y.

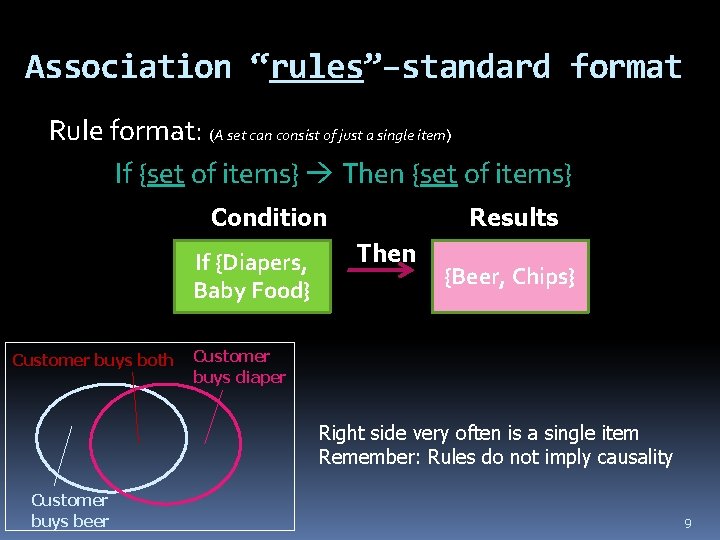

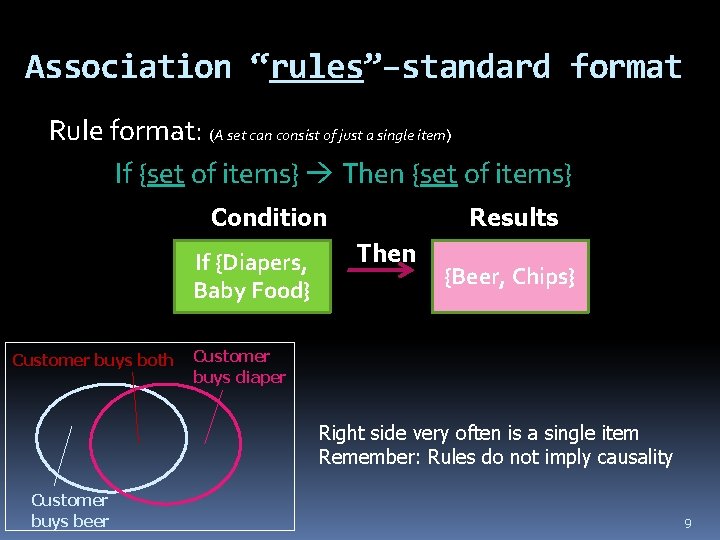

Association "Rules": Standard Format
Rule format: If {set of items} Then {set of items} (a set can consist of just a single item).
- Condition: If {Diapers, Baby Food}
- Result: Then {Beer, Chips}

The right side is very often a single item. Remember: rules do not imply causality.
[Figure: Venn diagram of customers who buy diapers, customers who buy beer, and customers who buy both]

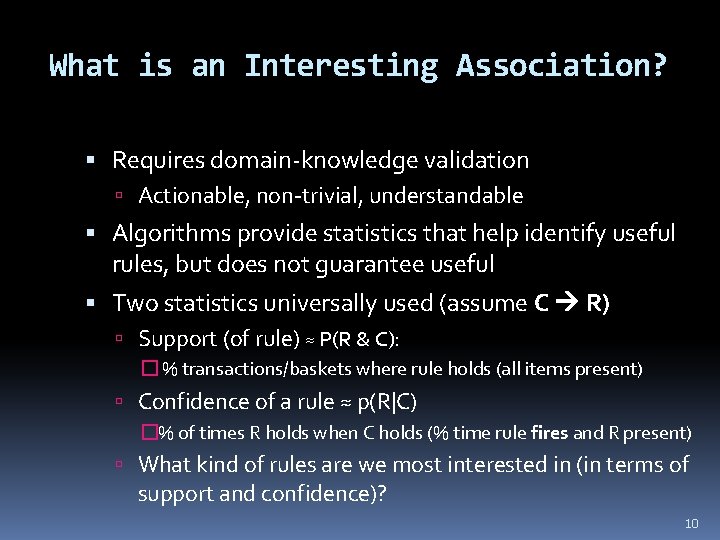

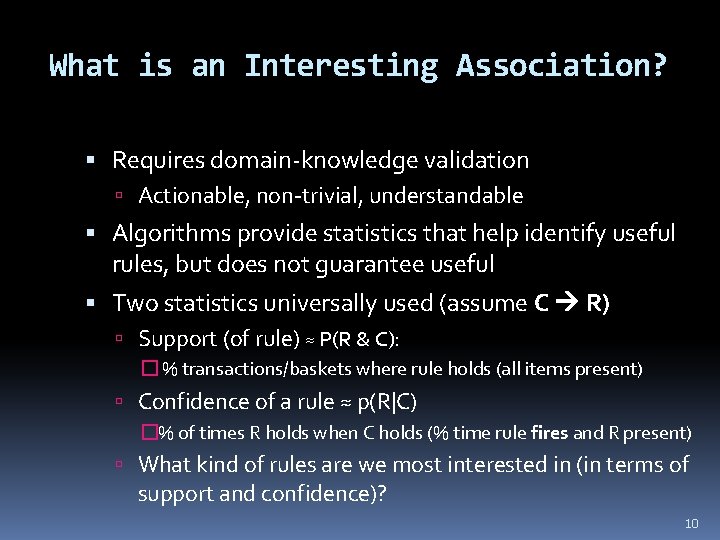

What Is an Interesting Association?
- It requires domain-knowledge validation: actionable, non-trivial, understandable.
- Algorithms provide statistics that help identify useful rules, but they do not guarantee that a rule is useful.

Two statistics are universally used (assume the rule C → R):
- Support (of a rule) ≈ P(R & C): % of transactions/baskets where the rule holds (all items present).
- Confidence (of a rule) ≈ P(R | C): % of times R holds when C holds (% of times the rule fires and R is present).

What kind of rules are we most interested in (in terms of support and confidence)?

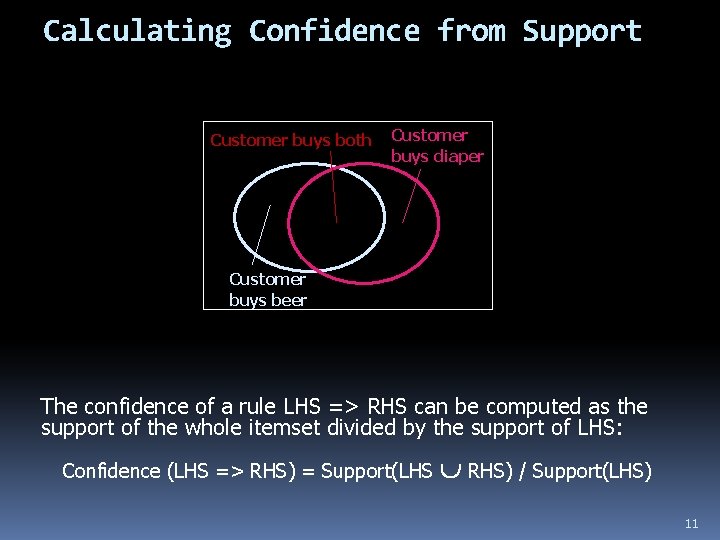

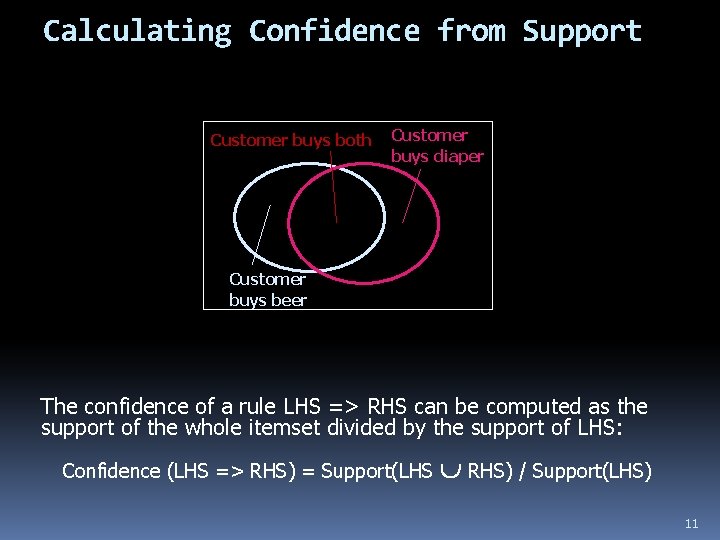

Calculating Confidence from Support
[Figure: Venn diagram of customers who buy diapers, customers who buy beer, and customers who buy both]
The confidence of a rule LHS → RHS can be computed as the support of the whole itemset divided by the support of the LHS:
Confidence(LHS → RHS) = Support(LHS ∪ RHS) / Support(LHS)

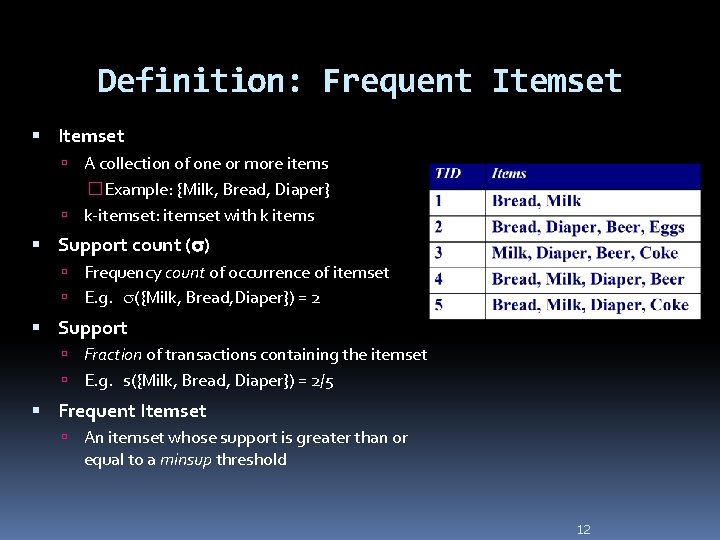

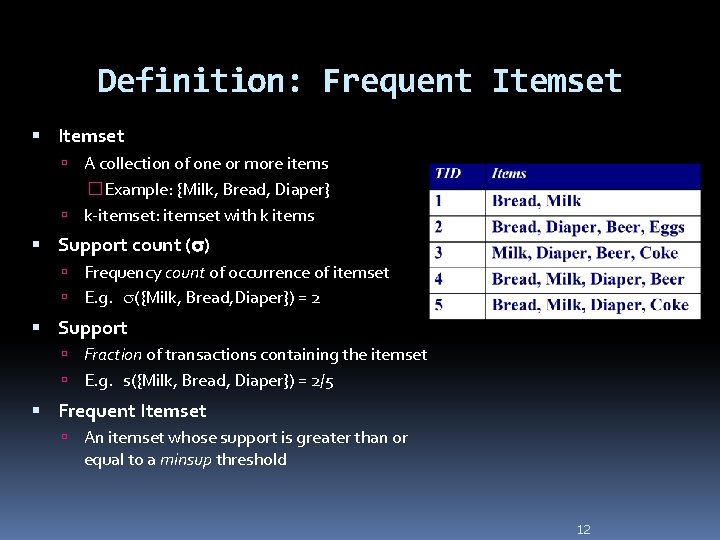

Definition: Frequent Itemset
- Itemset: a collection of one or more items, e.g., {Milk, Bread, Diaper}. A k-itemset is an itemset with k items.
- Support count (σ): frequency of occurrence of an itemset, e.g., σ({Milk, Bread, Diaper}) = 2.
- Support (s): fraction of transactions containing the itemset, e.g., s({Milk, Bread, Diaper}) = 2/5.
- Frequent itemset: an itemset whose support is greater than or equal to a minsup threshold.
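A minimal Python sketch of these definitions. It assumes the five transactions of the standard textbook market-basket table (Tan, Steinbach & Kumar), since that table appears only as an image here; these transactions reproduce σ({Milk, Bread, Diaper}) = 2 and s = 2/5. The function names are illustrative, not taken from any library.

```python
from typing import Iterable, List, Set

# Assumption: the standard five-transaction textbook market-basket table.
TRANSACTIONS: List[Set[str]] = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]


def support_count(itemset: Iterable[str], transactions: List[Set[str]]) -> int:
    """sigma(X): number of transactions that contain every item of X."""
    target = set(itemset)
    return sum(1 for t in transactions if target <= t)


def support(itemset: Iterable[str], transactions: List[Set[str]]) -> float:
    """s(X) = sigma(X) / |T|."""
    return support_count(itemset, transactions) / len(transactions)


def is_frequent(itemset: Iterable[str], transactions: List[Set[str]], minsup: float) -> bool:
    """Frequent means support >= minsup."""
    return support(itemset, transactions) >= minsup


x = {"Milk", "Bread", "Diaper"}
print(support_count(x, TRANSACTIONS))            # 2
print(support(x, TRANSACTIONS))                  # 0.4
print(is_frequent(x, TRANSACTIONS, minsup=0.6))  # False
```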

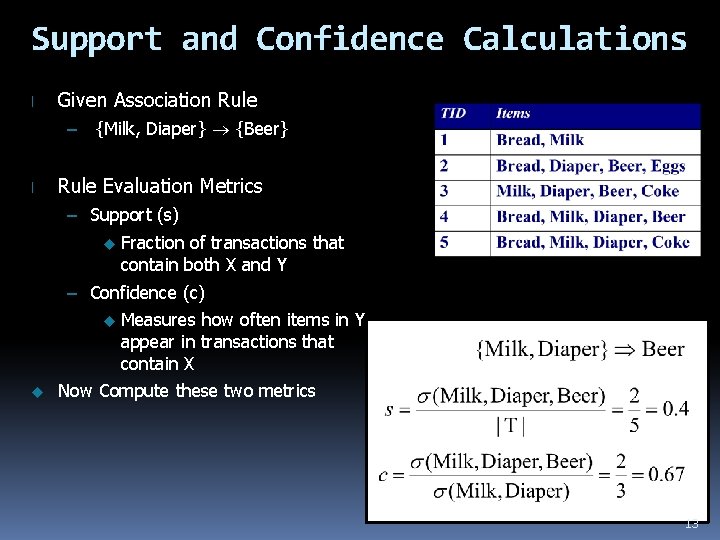

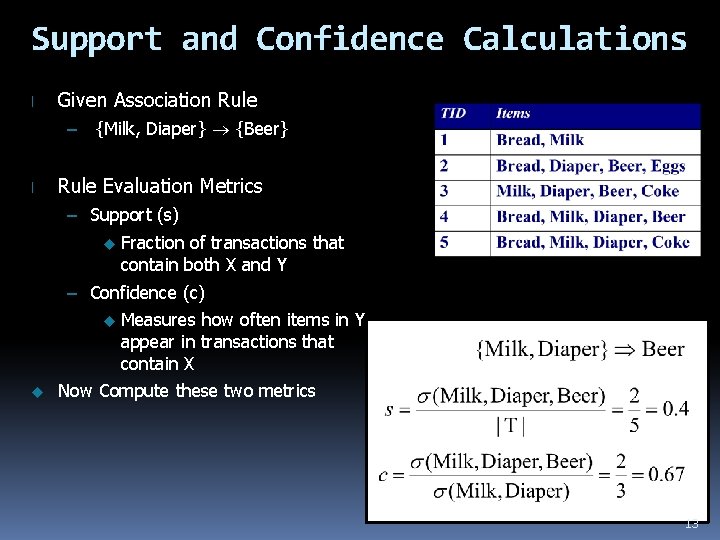

Support and Confidence Calculations
Given the association rule X → Y, here {Milk, Diaper} → {Beer}, the rule evaluation metrics are:
- Support (s): fraction of transactions that contain both X and Y, s = σ(X ∪ Y) / |T|.
- Confidence (c): how often items in Y appear in transactions that contain X, c = σ(X ∪ Y) / σ(X).

Now compute these two metrics.
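One way to check the arithmetic, again assuming the five textbook transactions (an assumption, since the slide's table is an image). The helper names are hypothetical; confidence is computed as σ(X ∪ Y) / σ(X), which equals support(X ∪ Y) / support(X):

```python
# Assumption: the standard five-transaction textbook market-basket table.
TRANSACTIONS = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]


def sigma(itemset, transactions):
    """Support count: transactions containing all items of `itemset`."""
    return sum(1 for t in transactions if set(itemset) <= t)


def rule_metrics(lhs, rhs, transactions):
    """Return (support, confidence) of the rule lhs -> rhs."""
    both = sigma(set(lhs) | set(rhs), transactions)
    s = both / len(transactions)         # fraction containing X and Y
    c = both / sigma(lhs, transactions)  # how often Y appears when X does
    return s, c


s, c = rule_metrics({"Milk", "Diaper"}, {"Beer"}, TRANSACTIONS)
print(f"s = {s:.2f}, c = {c:.2f}")  # s = 0.40, c = 0.67
```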

Association Rule Mining Task
Given a set of transactions T, the goal of association rule mining is to find all rules having:
- support ≥ minsup threshold
- confidence ≥ minconf threshold

Brute-force approach:
- List all possible association rules.
- Compute the support and confidence for each rule.
- Prune rules that fail the minsup and minconf thresholds.

⇒ Computationally prohibitive! So what do we do? What have we done in such cases in this course? We have used heuristic methods that do not achieve an optimal solution. But not in this case: here we will act smarter and find the optimal (correct) solution.
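To make "computationally prohibitive" concrete, here is a sketch of the brute-force approach in Python on the same assumed five-transaction textbook table. It scores every possible rule before pruning; `brute_force_rules` is a hypothetical helper, not a library function.

```python
from itertools import combinations

# Assumption: the standard five-transaction textbook market-basket table.
TRANSACTIONS = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]


def sigma(itemset, transactions):
    """Support count: number of transactions containing every item."""
    return sum(1 for t in transactions if itemset <= t)


def brute_force_rules(transactions, minsup, minconf):
    """Enumerate every rule X -> Y, score it, keep those passing both thresholds.

    Returns (kept_rules, number_of_rules_examined).
    """
    items = sorted(set().union(*transactions))
    n = len(transactions)
    kept, examined = [], 0
    for size in range(2, len(items) + 1):
        for combo in combinations(items, size):
            full = frozenset(combo)
            both = sigma(full, transactions)
            # Every non-empty proper subset of the itemset can serve as the LHS.
            for k in range(1, size):
                for lhs_items in combinations(combo, k):
                    lhs = frozenset(lhs_items)
                    examined += 1
                    s = both / n
                    lhs_count = sigma(lhs, transactions)
                    c = both / lhs_count if lhs_count else 0.0
                    if s >= minsup and c >= minconf:
                        kept.append((set(lhs), set(full - lhs), s, c))
    return kept, examined


rules, examined = brute_force_rules(TRANSACTIONS, minsup=0.4, minconf=0.6)
print(examined)  # 602 rules scored for only 6 distinct items
```

Even with 6 distinct items, 602 rules have to be scored, each needing its own support counts; the Apriori approach avoids most of this work.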

Number of Itemsets
Given d distinct items, how many distinct itemsets can be formed? You all learned this in CISC 1100/1400; it has to do with set theory.
Answer:
- It is the number of all possible subsets, i.e., the cardinality of the power set of the d items.
- Every item is either present or not present (2 choices).
- So the answer is 2^d.
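A quick check of the 2^d count with a hypothetical set of d = 4 items. Note that this count includes the empty set (it is 2^d - 1 if the empty set is excluded):

```python
from itertools import combinations

items = ["A", "B", "C", "D"]  # hypothetical d = 4 items

# Enumerate every subset (from the empty set up to all d items).
power_set = [set(c) for k in range(len(items) + 1) for c in combinations(items, k)]

print(len(power_set), 2 ** len(items))  # 16 16
```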

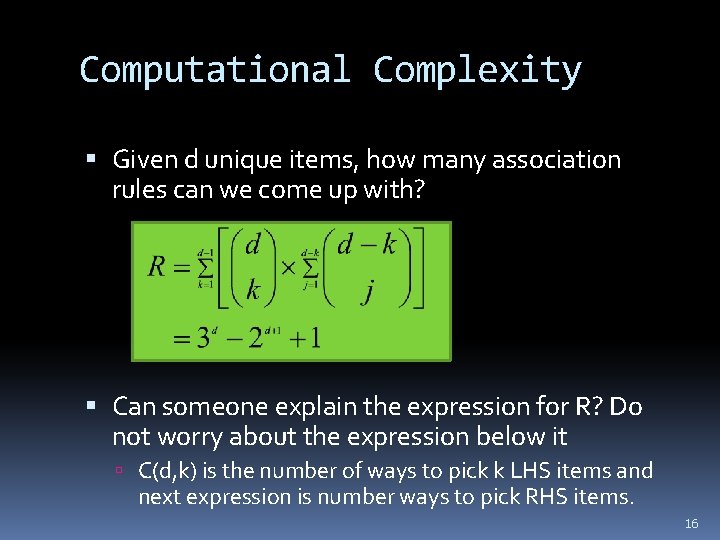

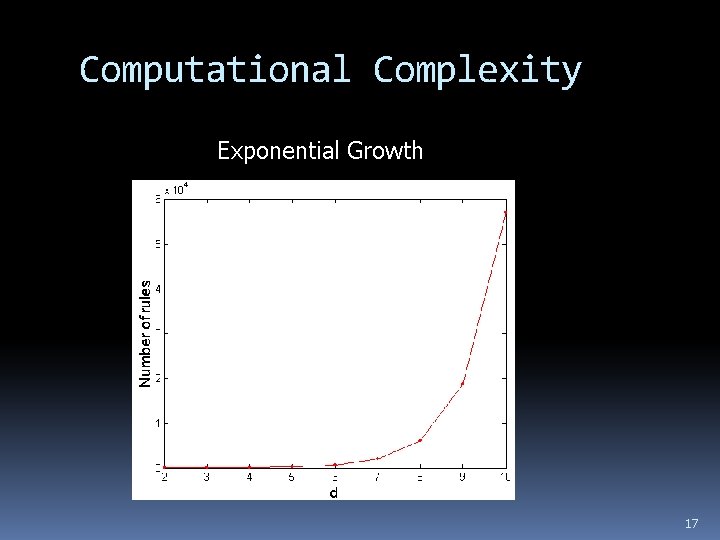

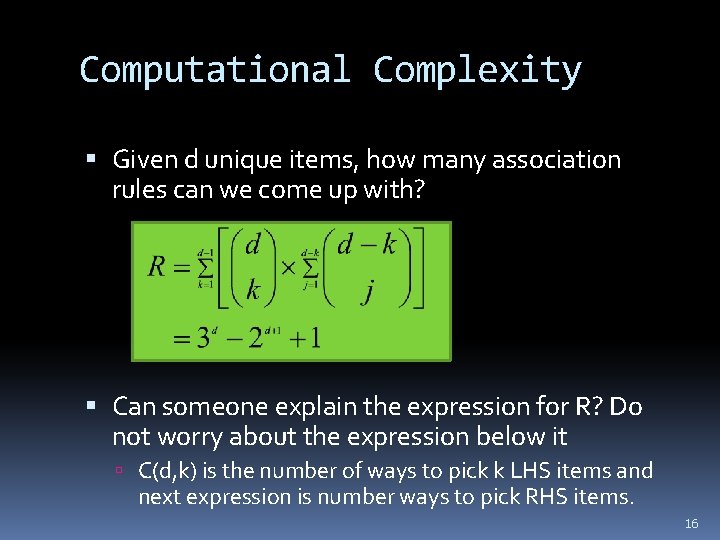

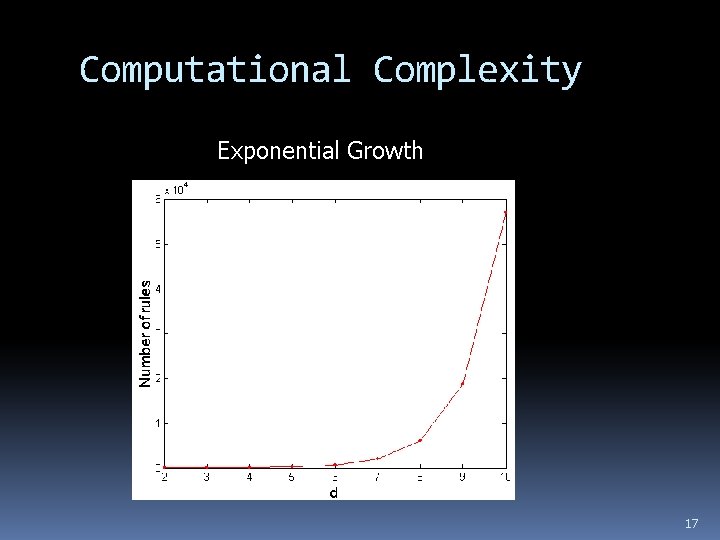

Computational Complexity
Given d unique items, how many association rules can we come up with? Can someone explain the expression for R? (Do not worry about the expression below it.) C(d, k) is the number of ways to pick the k LHS items, and the next expression is the number of ways to pick the RHS items from the remaining d - k items.
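The rule-count expression itself appears only as an image here. The standard form is R = Σ_{k=1}^{d-1} [ C(d, k) × Σ_{j=1}^{d-k} C(d-k, j) ] = 3^d - 2^(d+1) + 1, where C(d, k) picks the k LHS items and the inner sum picks a non-empty RHS from the remaining d - k items. The Python sketch assumes this form and checks it by direct counting:

```python
from math import comb


def rules_by_counting(d: int) -> int:
    """Choose k LHS items, then a non-empty RHS from the remaining d - k items."""
    return sum(
        comb(d, k) * sum(comb(d - k, j) for j in range(1, d - k + 1))
        for k in range(1, d)
    )


def rules_closed_form(d: int) -> int:
    """Closed form of the same count."""
    return 3 ** d - 2 ** (d + 1) + 1


for d in range(1, 11):
    assert rules_by_counting(d) == rules_closed_form(d)

print(rules_closed_form(6))  # 602
```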

Computational Complexity: Exponential Growth

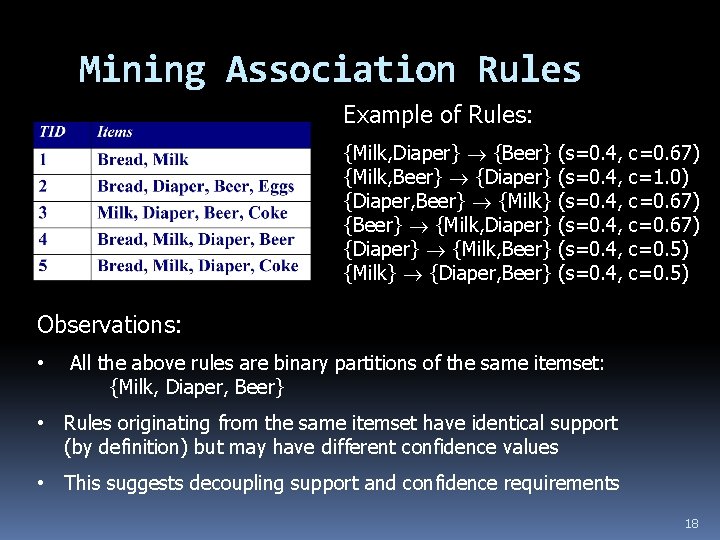

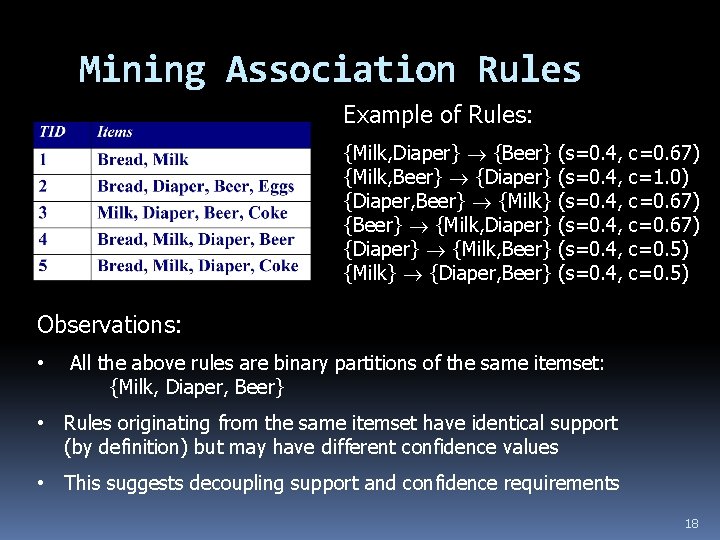

Mining Association Rules
Example of rules:
- {Milk, Diaper} → {Beer} (s = 0.4, c = 0.67)
- {Milk, Beer} → {Diaper} (s = 0.4, c = 1.0)
- {Diaper, Beer} → {Milk} (s = 0.4, c = 0.67)
- {Beer} → {Milk, Diaper} (s = 0.4, c = 0.67)
- {Diaper} → {Milk, Beer} (s = 0.4, c = 0.5)
- {Milk} → {Diaper, Beer} (s = 0.4, c = 0.5)

Observations:
- All the above rules are binary partitions of the same itemset: {Milk, Diaper, Beer}.
- Rules originating from the same itemset have identical support (by definition) but may have different confidence values.
- This suggests decoupling the support and confidence requirements.

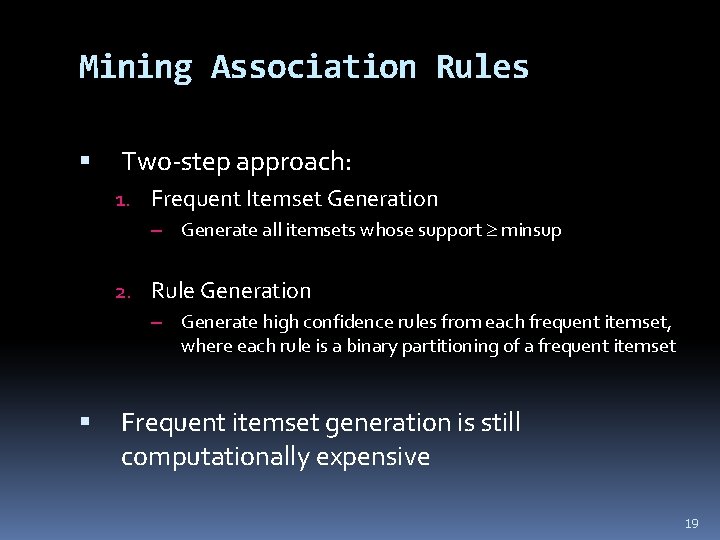

Mining Association Rules
Two-step approach:
1. Frequent itemset generation: generate all itemsets whose support ≥ minsup.
2. Rule generation: generate high-confidence rules from each frequent itemset, where each rule is a binary partitioning of a frequent itemset.

Frequent itemset generation is still computationally expensive.

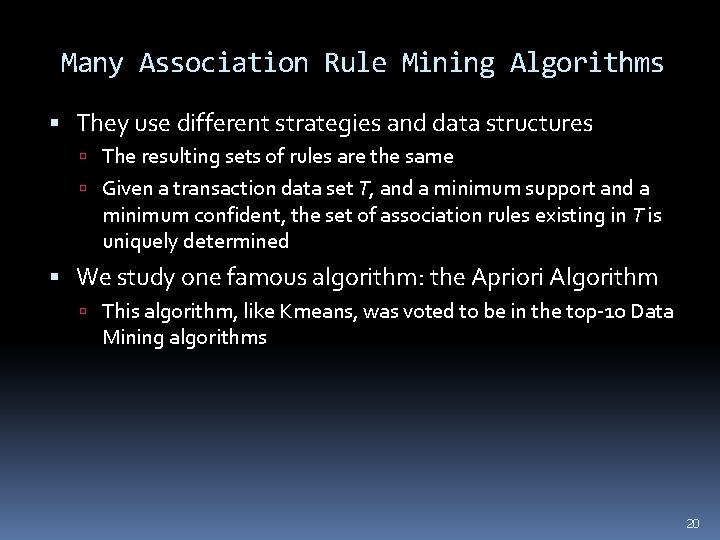

Many Association Rule Mining Algorithms
- They use different strategies and data structures, but the resulting sets of rules are the same: given a transaction data set T, a minimum support, and a minimum confidence, the set of association rules existing in T is uniquely determined.
- We study one famous algorithm: the Apriori algorithm. This algorithm, like k-means, was voted into the top-10 data mining algorithms.

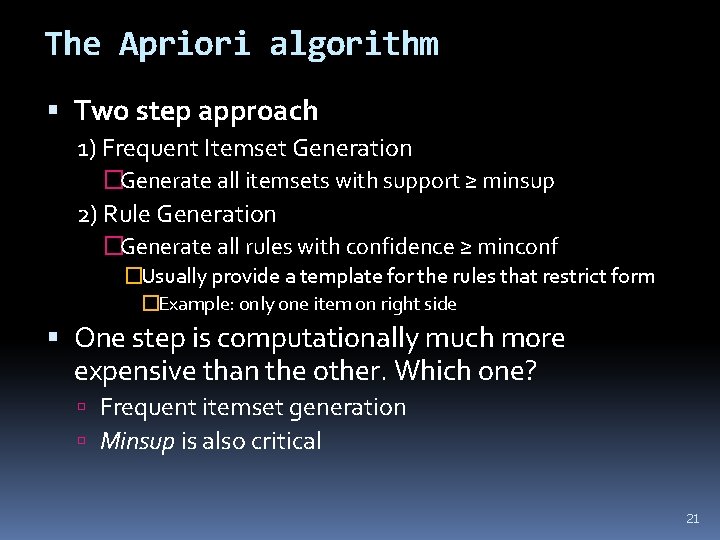

The Apriori Algorithm
Two-step approach:
1. Frequent itemset generation: generate all itemsets with support ≥ minsup.
2. Rule generation: generate all rules with confidence ≥ minconf.
   - Usually a template is provided that restricts the form of the rules, for example only one item on the right side.

One step is computationally much more expensive than the other. Which one? Frequent itemset generation. The choice of minsup is also critical.

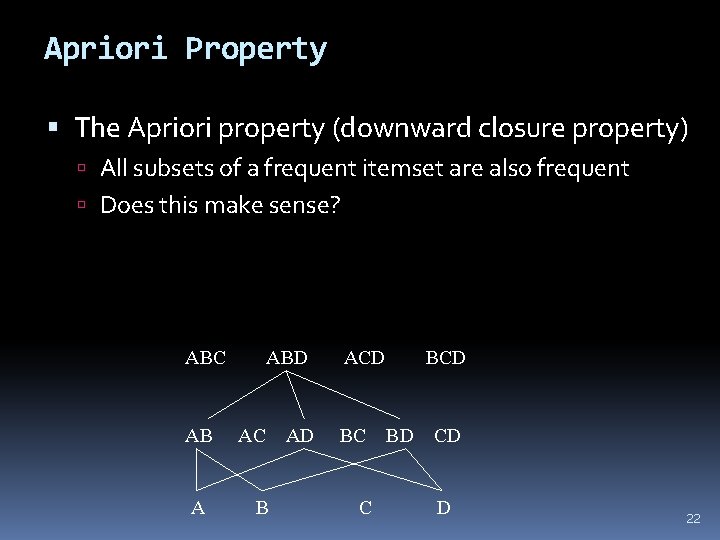

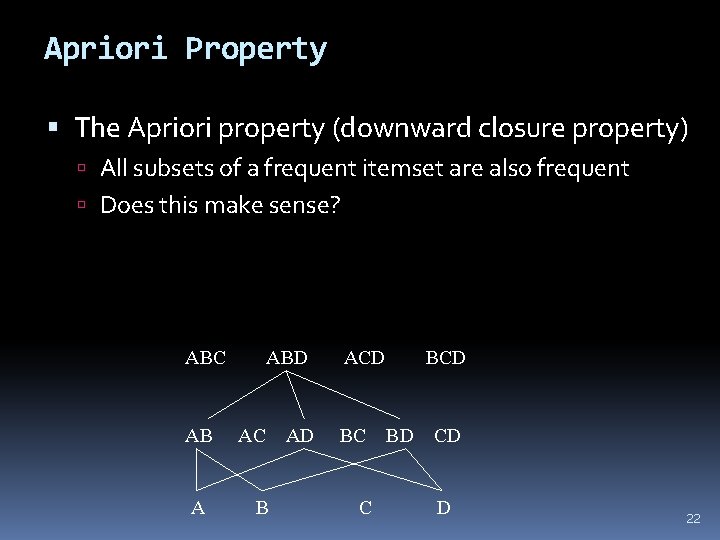

Apriori Property
The Apriori property (downward closure property): all subsets of a frequent itemset are also frequent. Does this make sense?
[Figure: itemset lattice over {A, B, C, D}: A, B, C, D; AB, AC, AD, BC, BD, CD; ABC, ABD, ACD, BCD]
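The downward closure property holds because every transaction that contains an itemset also contains each of its subsets, so support can only stay the same or drop as items are added. A small empirical check in Python on the assumed five-transaction textbook table (an illustration, not a proof):

```python
from itertools import combinations

# Assumption: the standard five-transaction textbook market-basket table.
TRANSACTIONS = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]


def sigma(itemset, transactions):
    """Support count: transactions containing all items of `itemset`."""
    return sum(1 for t in transactions if set(itemset) <= t)


items = sorted(set().union(*TRANSACTIONS))

# Look for any itemset X with a proper subset Y where sigma(Y) < sigma(X).
violations = [
    (x, y)
    for k in range(1, len(items) + 1)
    for x in combinations(items, k)
    for j in range(1, k)
    for y in combinations(x, j)
    if sigma(y, TRANSACTIONS) < sigma(x, TRANSACTIONS)
]

print(len(violations))  # 0: no subset is ever less frequent than its superset
```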

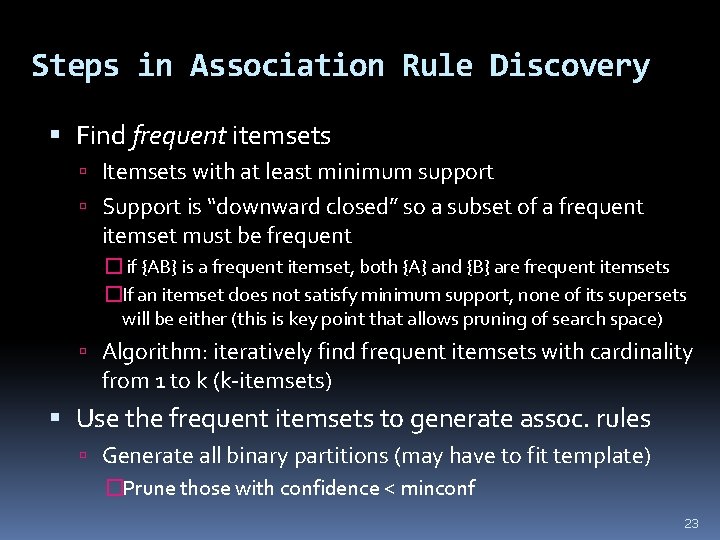

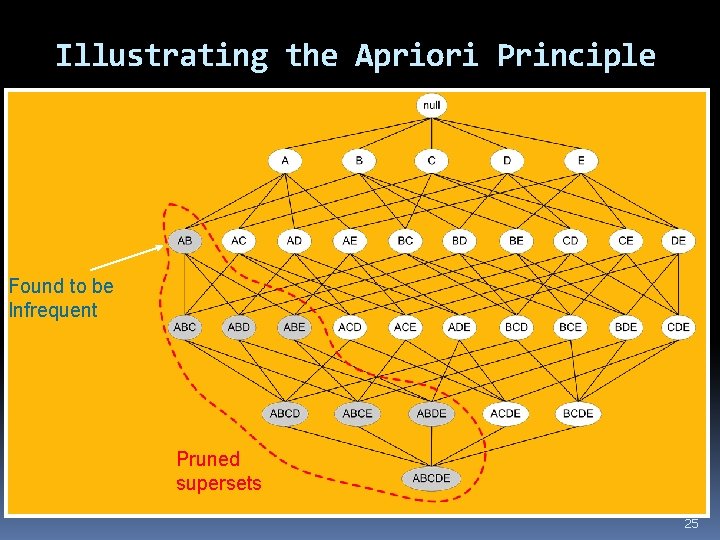

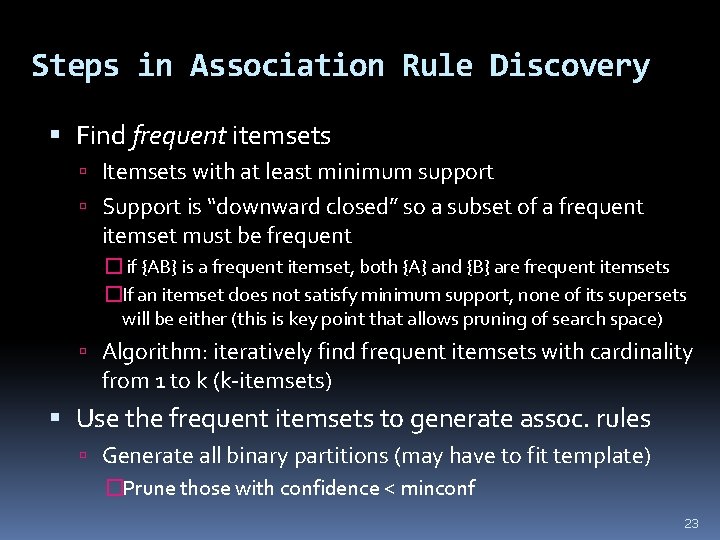

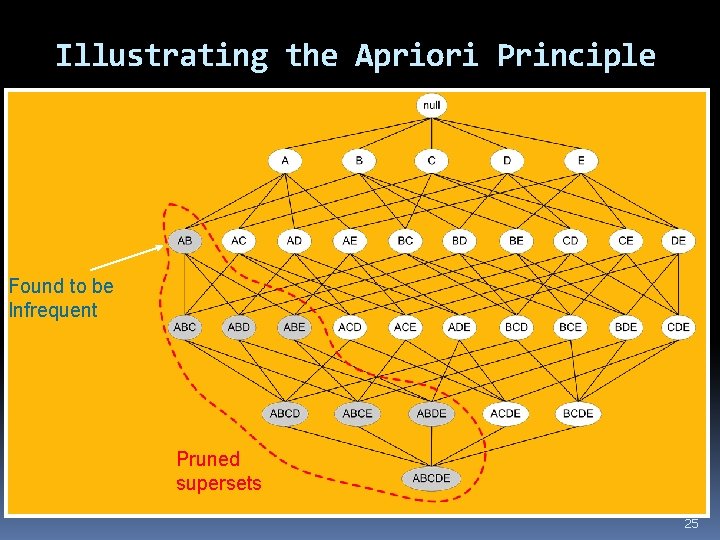

Steps in Association Rule Discovery
1. Find the frequent itemsets: itemsets with at least minimum support.
   - Support is "downward closed", so a subset of a frequent itemset must be frequent: if {AB} is a frequent itemset, both {A} and {B} are frequent itemsets.
   - If an itemset does not satisfy minimum support, none of its supersets will either (this is the key point that allows pruning of the search space).
   - Algorithm: iteratively find frequent itemsets with cardinality from 1 to k (k-itemsets).
2. Use the frequent itemsets to generate association rules.
   - Generate all binary partitions (which may have to fit a template).
   - Prune those with confidence < minconf.

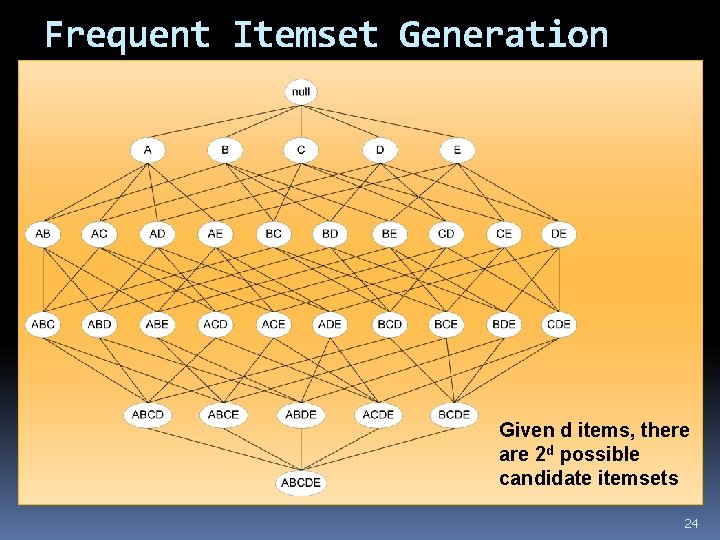

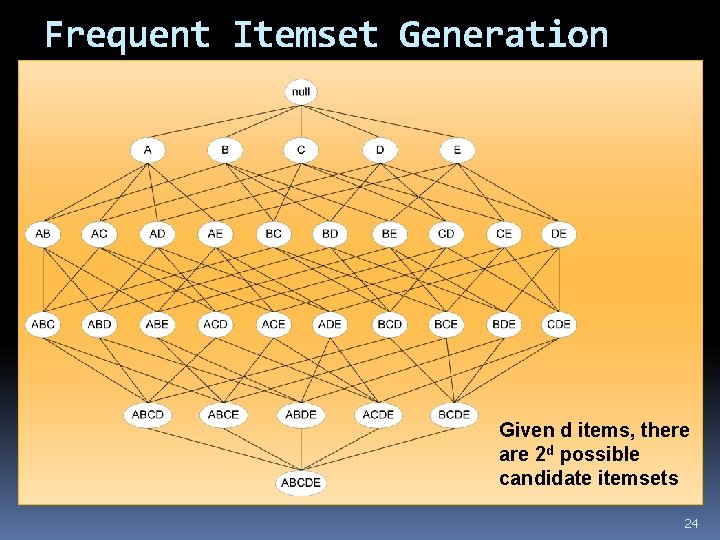

Frequent Itemset Generation
Given d items, there are 2^d possible candidate itemsets.

Illustrating the Apriori Principle
[Figure: itemset lattice in which one itemset is found to be infrequent and all of its supersets are pruned]

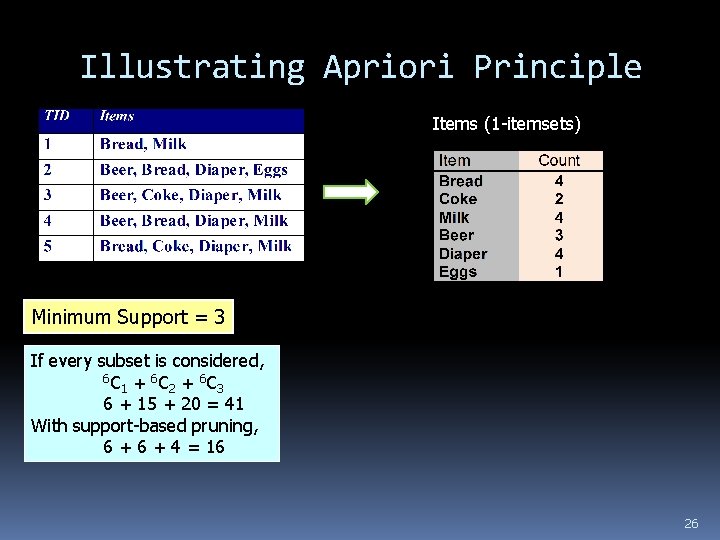

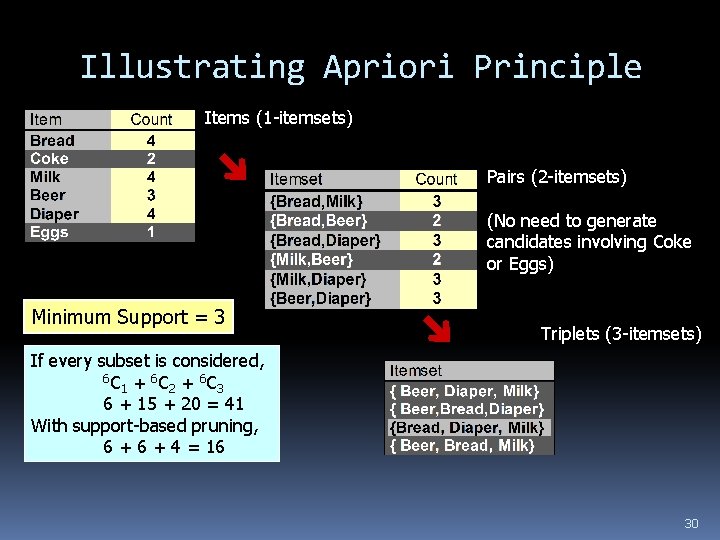

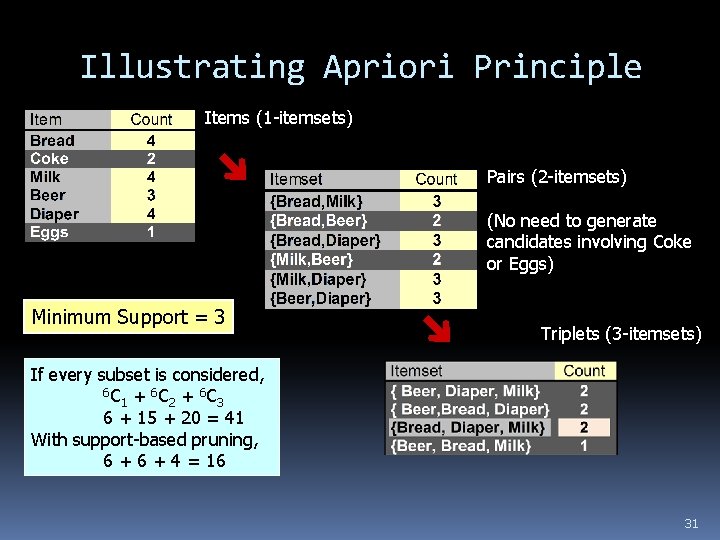

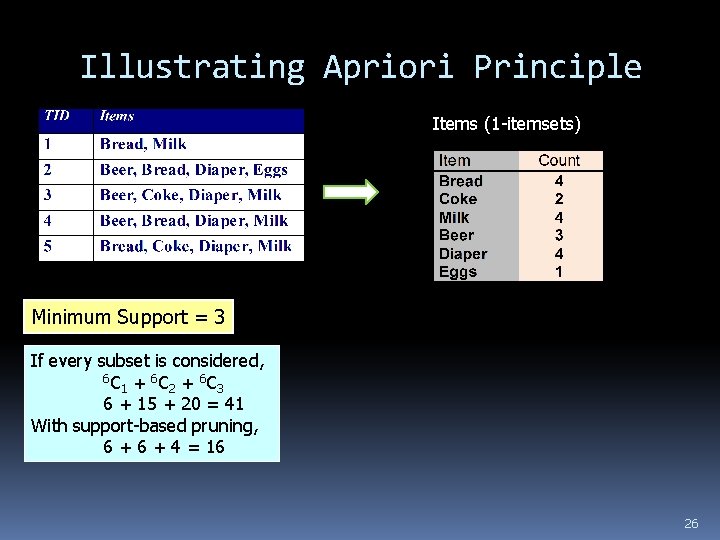

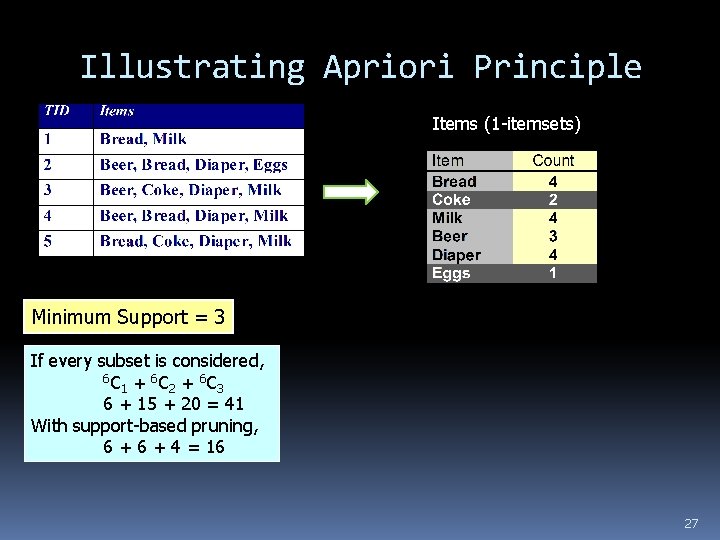

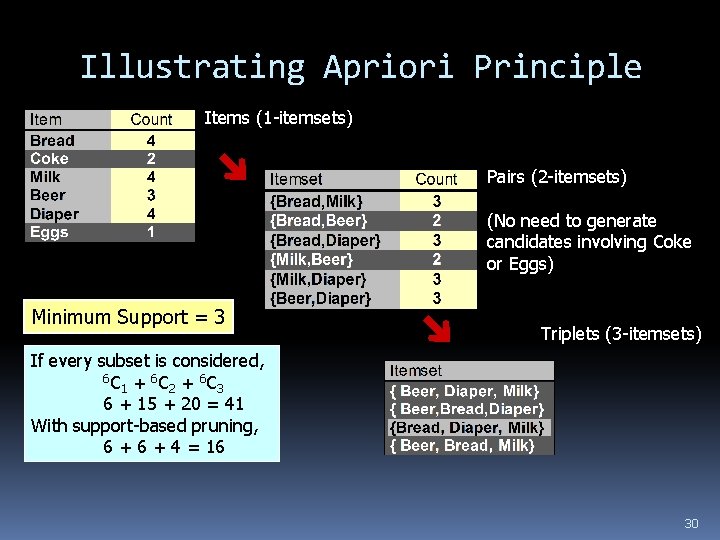

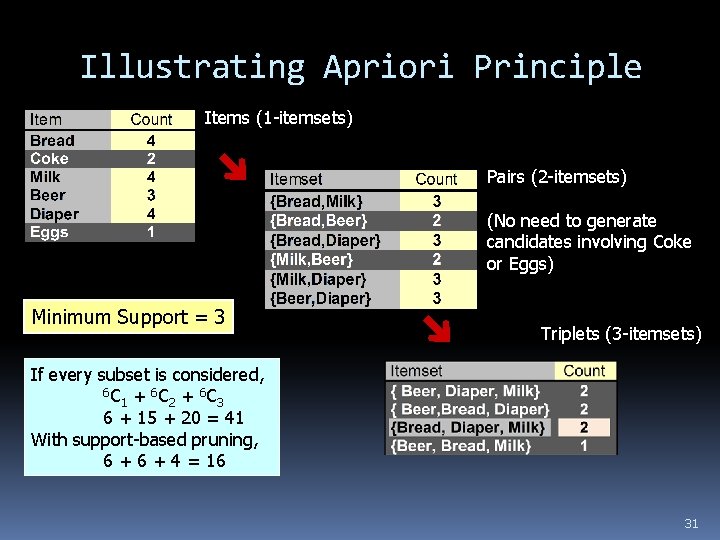


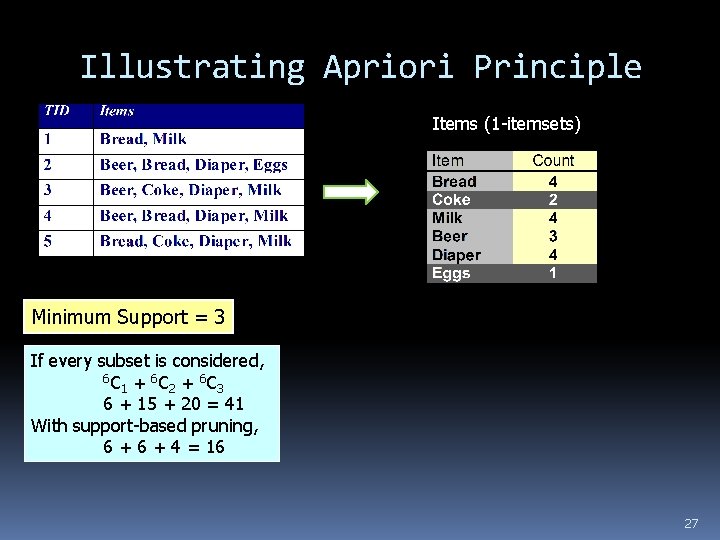


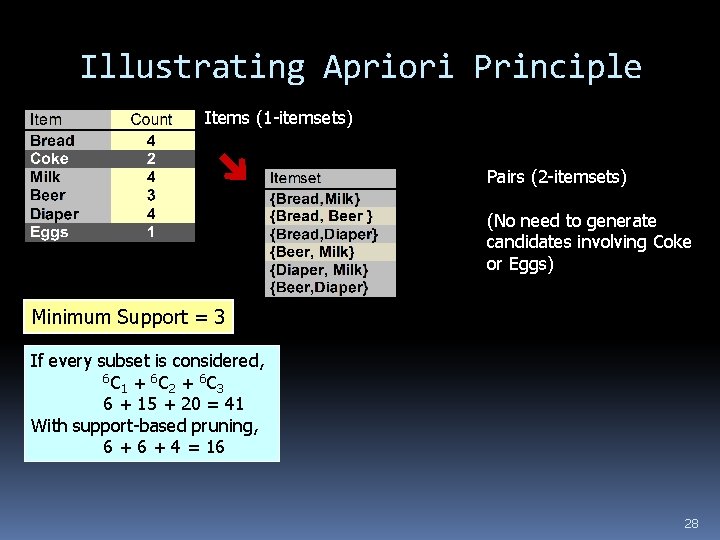

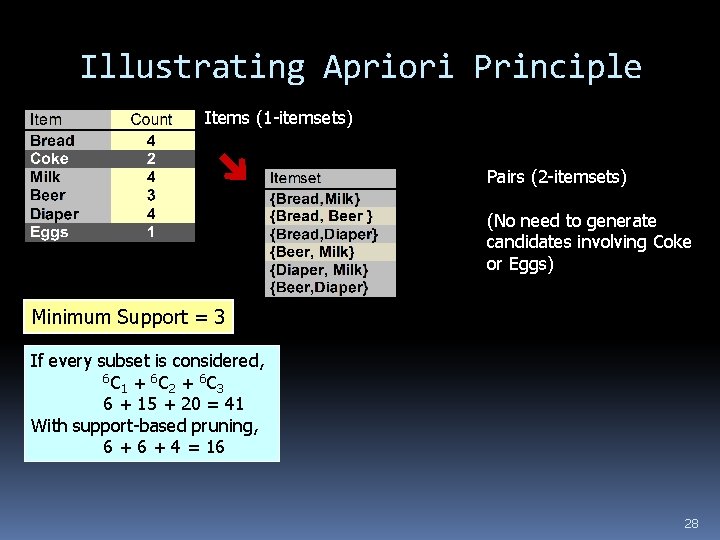

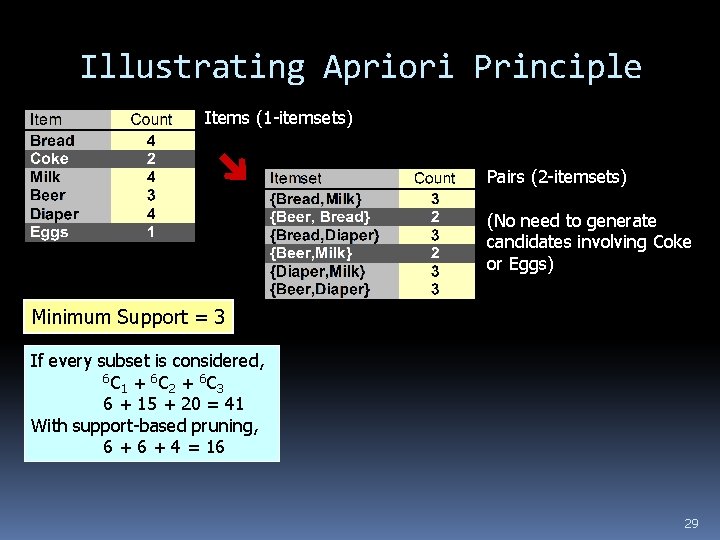


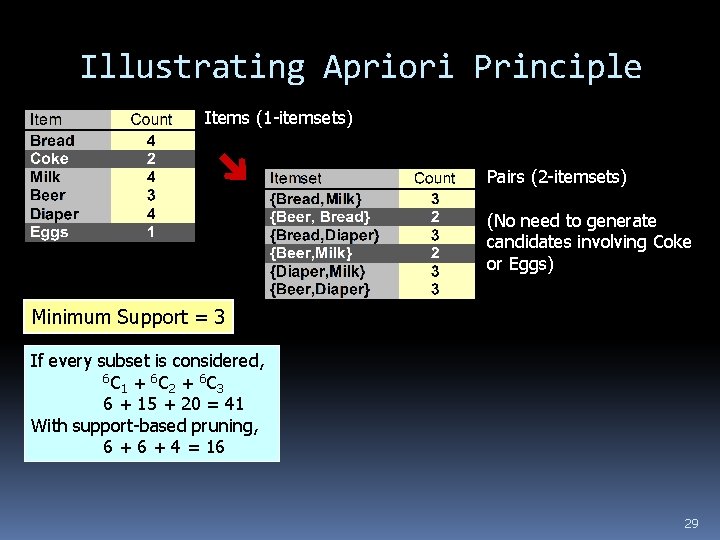



Illustrating the Apriori Principle
Items (1-itemsets), pairs (2-itemsets), and triplets (3-itemsets), with minimum support = 3. There is no need to generate candidates involving Coke or Eggs.
- If every subset is considered: C(6,1) + C(6,2) + C(6,3) = 6 + 15 + 20 = 41 candidates.
- With support-based pruning: 6 + 6 + 4 = 16 candidates.
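A sketch that reproduces these counts in Python, assuming the five textbook transactions over Bread, Milk, Diaper, Beer, Coke, and Eggs (the table itself is an image here) with a minimum support count of 3. The triplet count of 4 comes from taking every 3-subset of the frequent items; the last step additionally applies the subset check from the Apriori property, which leaves a single triplet candidate:

```python
from itertools import combinations
from math import comb

# Assumption: the standard five-transaction textbook market-basket table.
TRANSACTIONS = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]
MINSUP = 3  # minimum support count, as on the slide


def sigma(itemset):
    """Support count of an itemset in TRANSACTIONS."""
    return sum(1 for t in TRANSACTIONS if set(itemset) <= t)


items = sorted(set().union(*TRANSACTIONS))
frequent_items = [i for i in items if sigma([i]) >= MINSUP]  # Coke, Eggs drop out

# Candidate pairs are built only from frequent items.
pair_candidates = list(combinations(frequent_items, 2))
frequent_pairs = [p for p in pair_candidates if sigma(p) >= MINSUP]

# Triplet candidates: every 3-subset of the frequent items.
triplet_candidates = list(combinations(frequent_items, 3))

without_pruning = comb(6, 1) + comb(6, 2) + comb(6, 3)
with_pruning = len(items) + len(pair_candidates) + len(triplet_candidates)

# Keeping only triplets whose 2-subsets are all frequent leaves one candidate.
pruned_triplets = [t for t in triplet_candidates
                   if all(p in frequent_pairs for p in combinations(t, 2))]

print(frequent_items)                 # ['Beer', 'Bread', 'Diaper', 'Milk']
print(without_pruning, with_pruning)  # 41 16
print(pruned_triplets)                # [('Bread', 'Diaper', 'Milk')]
```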

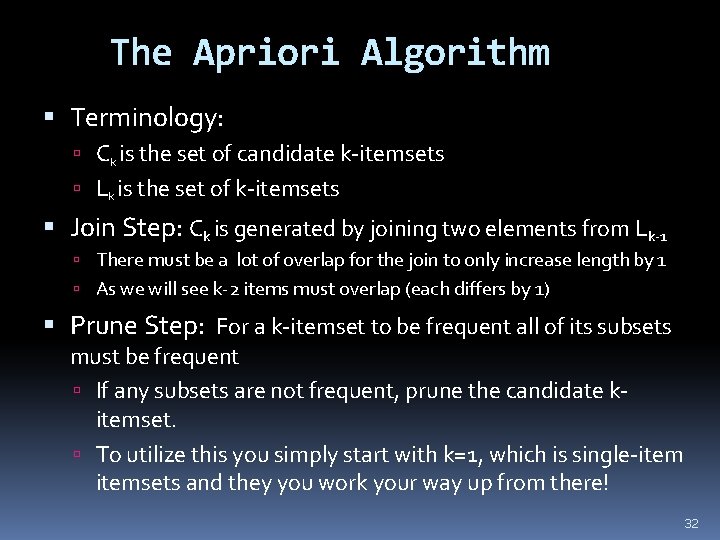

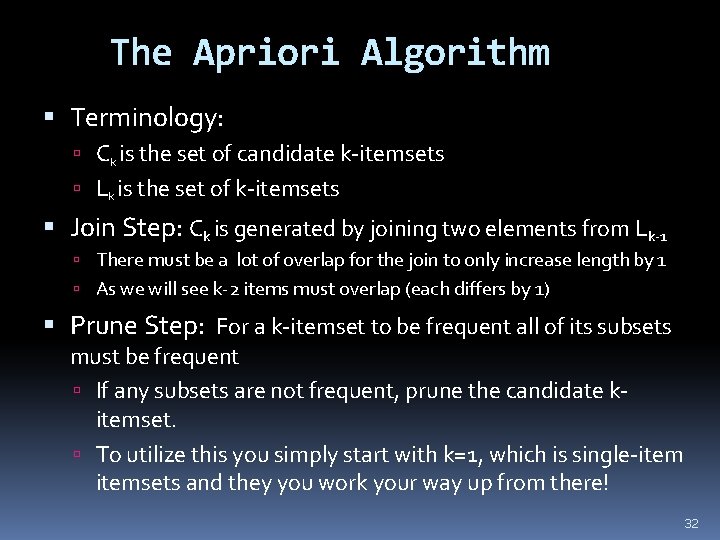

The Apriori Algorithm
Terminology: Ck is the set of candidate k-itemsets; Lk is the set of frequent k-itemsets.
- Join step: Ck is generated by joining two elements of Lk-1. There must be a lot of overlap for the join to increase the length by only 1; as we will see, k-2 items must overlap (each differs by 1).
- Prune step: for a k-itemset to be frequent, all of its subsets must be frequent. If any subset is not frequent, prune the candidate k-itemset.

To use this, you simply start with k = 1 (single-item itemsets) and then work your way up from there!

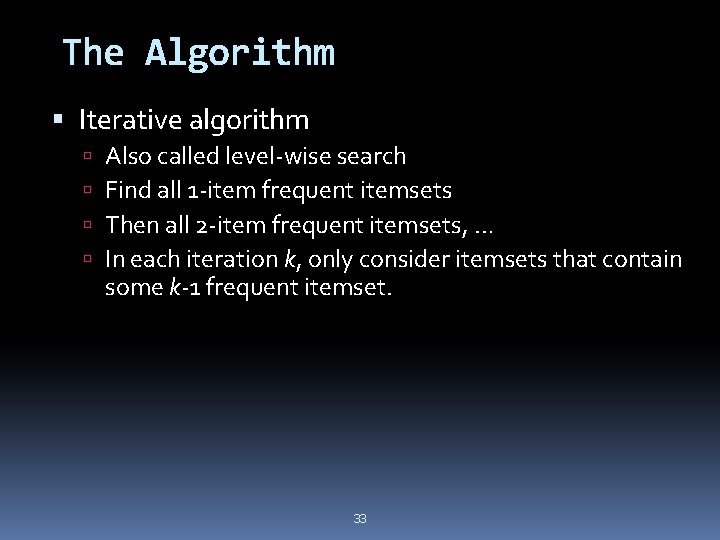

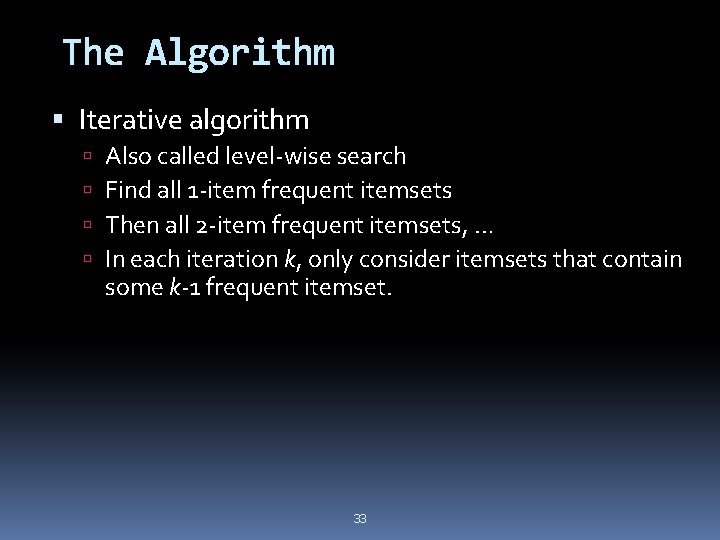

The Algorithm
An iterative algorithm, also called level-wise search:
- Find all frequent 1-itemsets.
- Then all frequent 2-itemsets, and so on.
- In each iteration k, only consider itemsets that contain some frequent (k-1)-itemset.
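A compact sketch of the level-wise search in Python. It is an illustration, not a reference implementation: `apriori` is a hypothetical name, support is a raw count, the data are the assumed five textbook transactions, and the join is a simplified union of any two frequent (k-1)-itemsets whose union has k items, rather than the ordered prefix join of the join step. After the subset-based prune step, both joins yield the same candidate set.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set


def apriori(transactions: List[Set[str]], minsup_count: int) -> Dict[FrozenSet[str], int]:
    """Level-wise search: return every frequent itemset with its support count."""

    def count(candidates):
        # One pass over the data per level to count all candidates.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1
        return {c: n for c, n in counts.items() if n >= minsup_count}

    # Level 1: frequent single items.
    items = set().union(*transactions)
    current = count({frozenset([i]) for i in items})
    frequent = dict(current)
    k = 2
    while current:
        prev = list(current)
        # Join: union pairs of frequent (k-1)-itemsets that form a k-itemset.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Prune: drop any candidate with an infrequent (k-1)-subset.
        candidates = {c for c in candidates
                      if all(frozenset(s) in current for s in combinations(c, k - 1))}
        current = count(candidates)
        frequent.update(current)
        k += 1
    return frequent


# Assumption: the standard five-transaction textbook market-basket table.
TRANSACTIONS = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]

result = apriori(TRANSACTIONS, minsup_count=3)
for itemset, n in sorted(result.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), n)
```

With a minimum support count of 3, this finds the four frequent items and four frequent pairs; the only triplet candidate that survives pruning, {Bread, Diaper, Milk}, occurs in just 2 transactions and is dropped at the counting pass.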

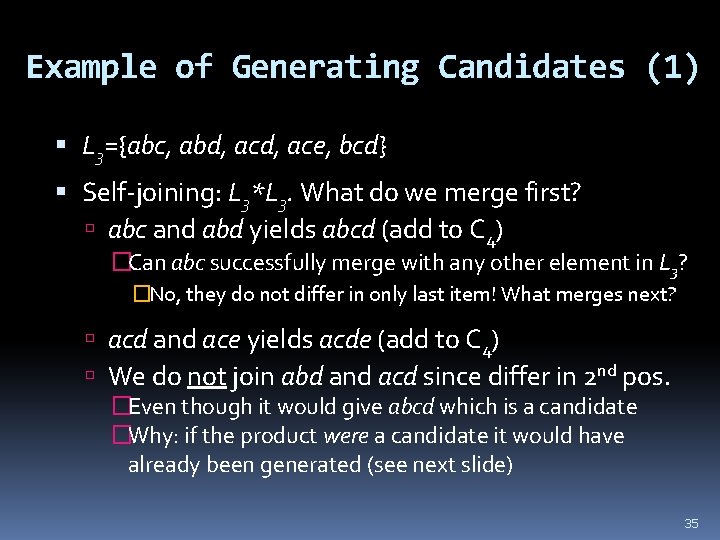

The Join Step
- All items in the itemsets to be joined are kept in a consistent order (any order works), such as lexicographic (alphabetical) order.
- Elements of Ck are created by joining two itemsets from Lk-1 that have k-2 items in common.
- The two (k-1)-itemsets are joined only if they differ in the last position (so k-2 items are in common). Joining them increases the size of the itemset by one: (k-1) + 1 = k, i.e., the k-2 shared items plus the two different last items.

Example: join pqr and pqs to get pqrs.
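A sketch of the ordered join in Python, assuming itemsets are stored as lexicographically sorted tuples (a representation chosen for illustration; `join_step` is a hypothetical name). Two (k-1)-itemsets are joined only when they agree on their first k-2 items:

```python
from typing import List, Tuple


def join_step(prev_level: List[Tuple[str, ...]]) -> List[Tuple[str, ...]]:
    """Join sorted (k-1)-itemsets that share their first k-2 items."""
    prev = sorted(prev_level)
    out = []
    for i in range(len(prev)):
        for j in range(i + 1, len(prev)):
            a, b = prev[i], prev[j]
            # Same first k-2 items; they differ only in the last position.
            if a[:-1] == b[:-1] and a[-1] < b[-1]:
                out.append(a + (b[-1],))
    return out


print(join_step([("p", "q", "r"), ("p", "q", "s")]))  # [('p', 'q', 'r', 's')]
```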

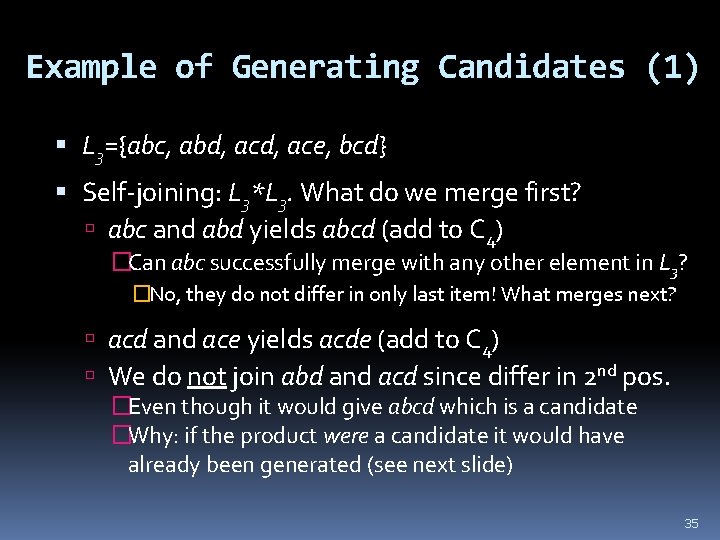

Example of Generating Candidates (1)
L3 = {abc, abd, acd, ace, bcd}. Self-joining: L3 * L3.
- What do we merge first? abc and abd yield abcd (add to C4).
  - Can abc successfully merge with any other element of L3? No: they do not differ in only the last item!
- What merges next? acd and ace yield acde (add to C4).
- We do not join abd and acd, since they differ in the 2nd position, even though the join would give abcd, which is a candidate. Why? If the product were a candidate, it would already have been generated (see next slide).

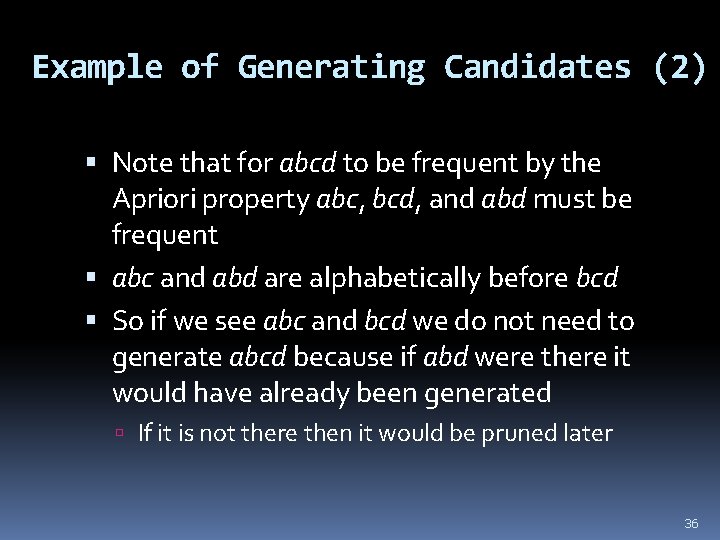

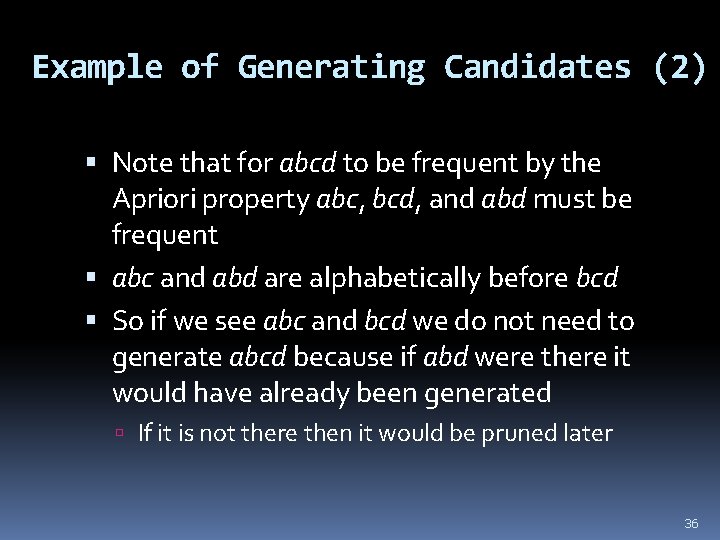

Example of Generating Candidates (2)
Note that for abcd to be frequent, by the Apriori property abc, abd, acd, and bcd must all be frequent. abc and abd come alphabetically before bcd, so if we see abc and bcd we do not need to generate abcd from them: if abd is present, abcd has already been generated from abc and abd; if abd is not present, abcd would be pruned later anyway.

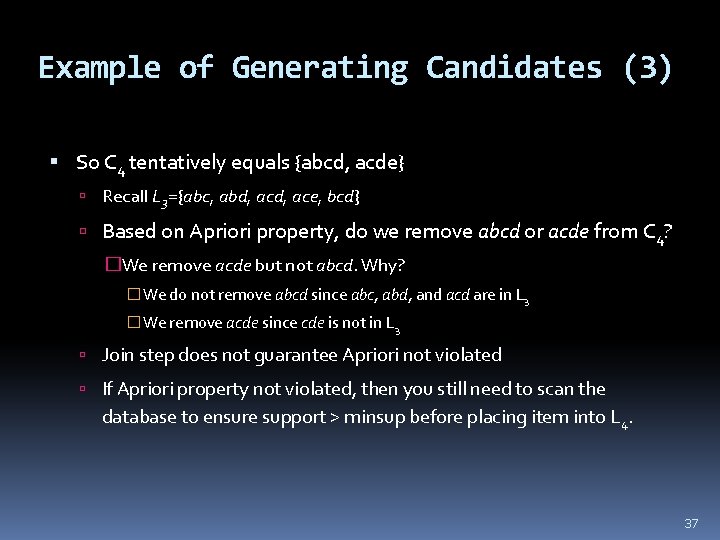

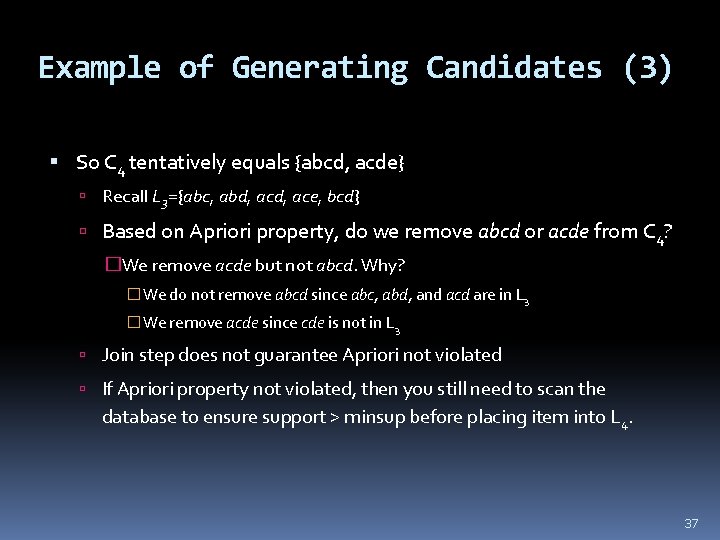

Example of Generating Candidates (3)
So C4 tentatively equals {abcd, acde}. Recall L3 = {abc, abd, acd, ace, bcd}. Based on the Apriori property, do we remove abcd or acde from C4?
- We remove acde but not abcd. Why?
- We do not remove abcd, since abc, abd, acd, and bcd are all in L3.
- We remove acde, since cde (and also ade) is not in L3.

The join step does not guarantee that the Apriori property holds. If the Apriori property is not violated, you still need to scan the database to ensure support ≥ minsup before placing the itemset into L4.
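The prune step can be sketched the same way, with itemsets as sorted tuples and a hypothetical helper name. It keeps a candidate only if every (k-1)-subset is in L(k-1); survivors still need a database scan to confirm their support. Note that acd must be in L3 for acde to come out of the join, so it is included here:

```python
from itertools import combinations


def prune_step(candidates, prev_level):
    """Drop candidates that have any (k-1)-subset missing from L(k-1)."""
    prev = set(prev_level)
    return [c for c in candidates
            if all(sub in prev for sub in combinations(c, len(c) - 1))]


L3 = [tuple(s) for s in ["abc", "abd", "acd", "ace", "bcd"]]
C4 = [tuple("abcd"), tuple("acde")]  # output of the join step

print(prune_step(C4, L3))  # [('a', 'b', 'c', 'd')]
```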

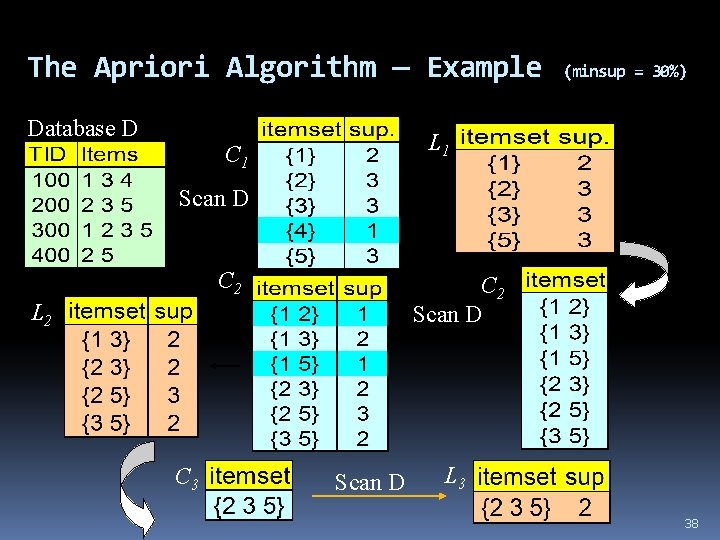

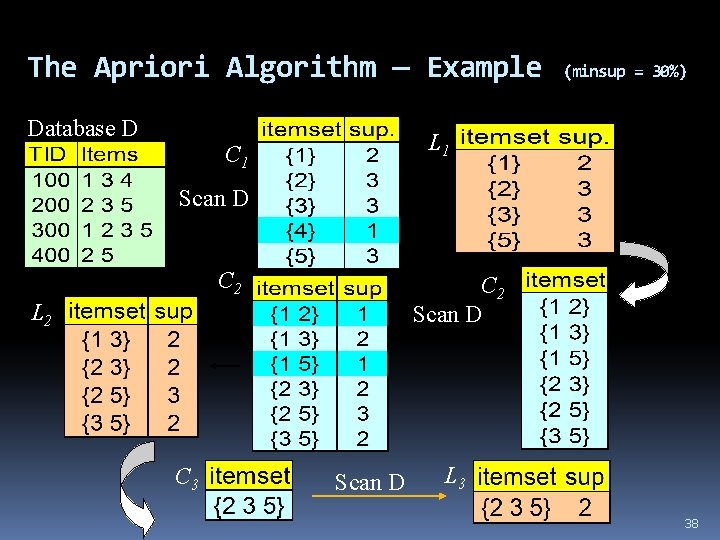

The Apriori Algorithm: Example
Database D (minsup = 30%).
[Figure: C1 → scan D → L1 → C2 → scan D → L2 → C3 → scan D → L3]

Warning: Do Not Forget Pruning
Candidate itemsets get pruned in two ways:
1. The Apriori property is violated.
2. If the Apriori property is not violated, you still must scan the database, and if minsup is not met, prune. The Apriori property is necessary but not sufficient to keep an itemset.

If you forget to prune via the Apriori property, you will get the same results, since the database scan will catch it. But I will take off points on an exam. Make it clear when you prune using the Apriori property (do not fill in a count when crossing an itemset off).
The Apriori property cannot be violated until k = 3. It begins to get trickier at k = 4, since there are more subsets to check.

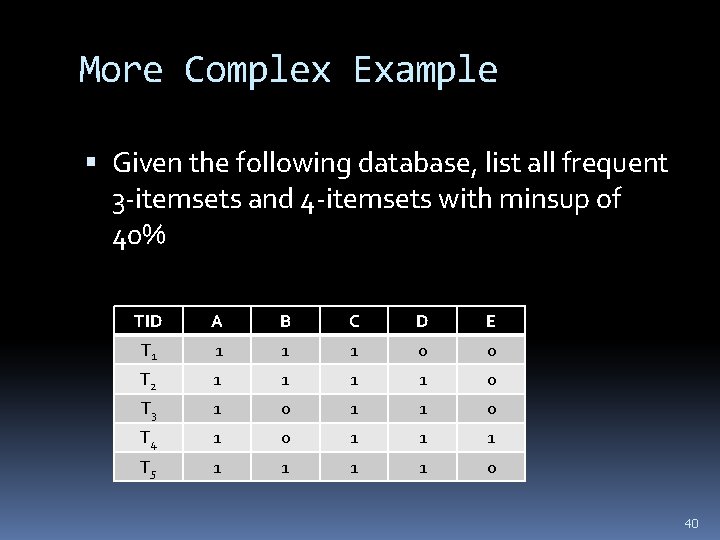

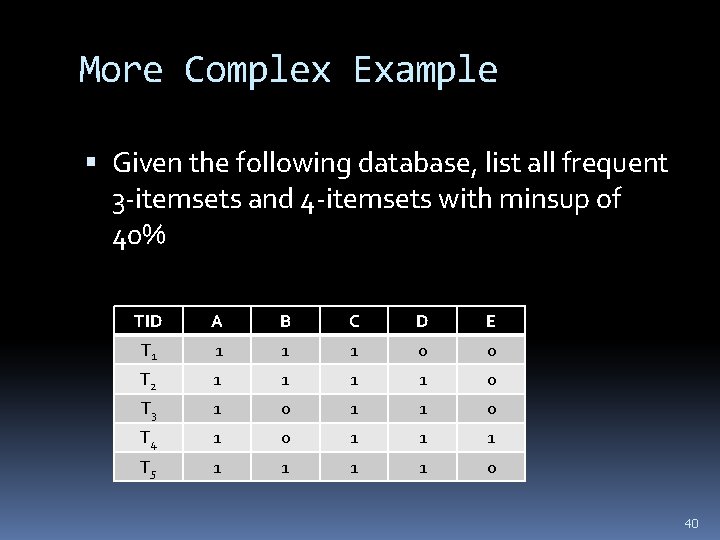

More Complex Example
Given the following database, list all frequent 3-itemsets and 4-itemsets with a minsup of 40%:
TID A B C D E T 1 1 0 0 T 2 1 1 0 T 3 1 0 1 1 0 T 4 1 0 1 1 1 T 5 1 1 0

Solution
The details are provided on this webpage: http://www2.cs.uregina.ca/~dbd/cs831/notes/itemset_apriori.html
- Frequent 3-itemsets: ABC, ABD, ACD, ACE, ADE, BCD, CDE
- Frequent 4-itemsets: ABCD, ACDE

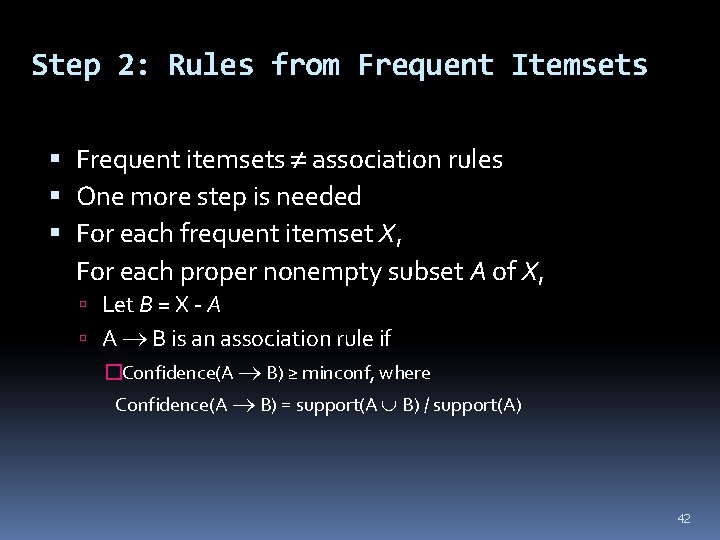

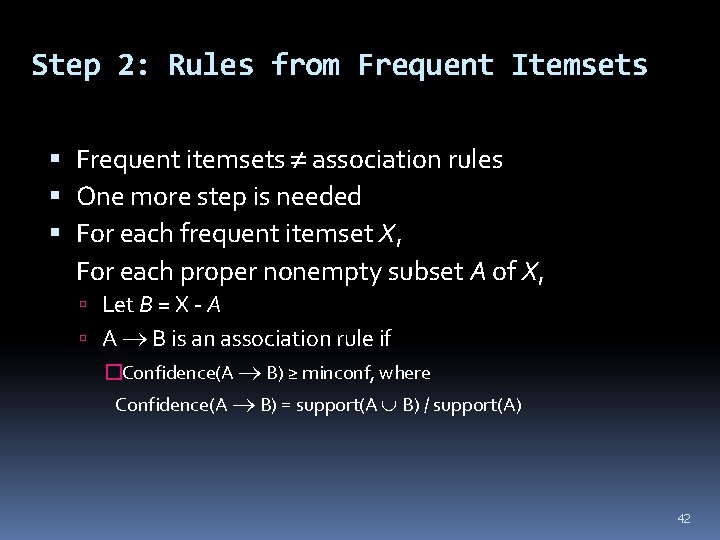

Step 2: Rules from Frequent Itemsets
Frequent itemsets are not yet association rules; one more step is needed. For each frequent itemset X, and for each proper nonempty subset A of X, let B = X - A. Then A → B is an association rule if Confidence(A → B) ≥ minconf, where Confidence(A → B) = support(A ∪ B) / support(A).

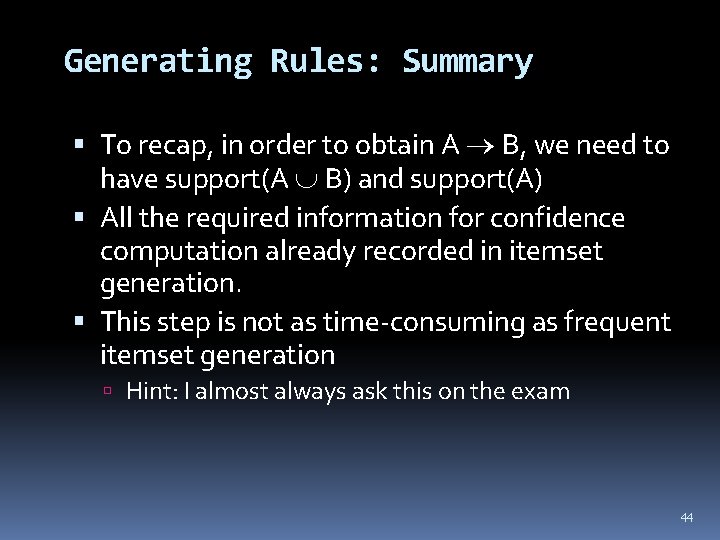

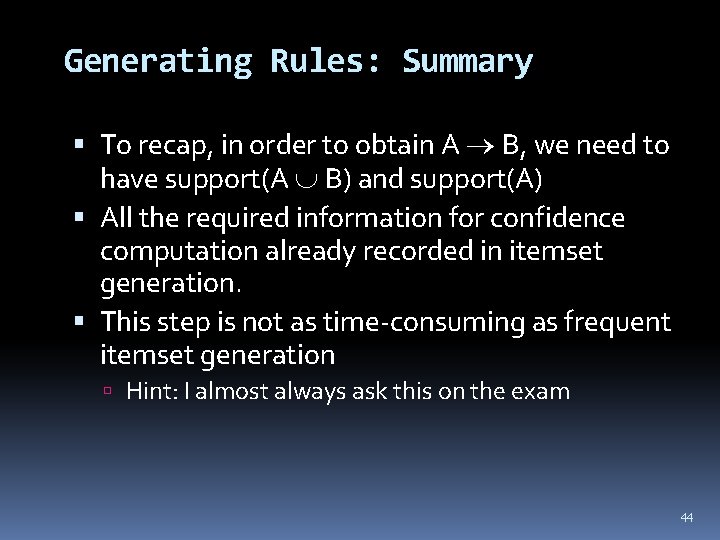

Generating Rules: An Example
Suppose {2, 3, 4} is frequent, with sup = 50%. Its proper nonempty subsets {2, 3}, {2, 4}, {3, 4}, {2}, {3}, {4} have sup = 50%, 50%, 75%, 75%, 75%, 75%, respectively. These generate the following association rules.
Recall: Confidence(A → B) = support(A ∪ B) / support(A), where here support(A ∪ B) = 50%.
- {2, 3} → {4}, confidence = 100% (50%/50%)
- {2, 4} → {3}, confidence = 100% (50%/50%)
- {3, 4} → {2}, confidence = 67% (50%/75%)
- {2} → {3, 4}, confidence = 67% (50%/75%)
- {3} → {2, 4}, confidence = 67% (50%/75%)
- {4} → {2, 3}, confidence = 67% (50%/75%)

All rules have support = 50%. Then apply the confidence threshold to identify strong rules, i.e., rules that meet both the support and confidence requirements. If the confidence threshold is 80%, we are left with 2 strong rules.
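A sketch of rule generation in Python. The support table holds the values implied by the confidences listed above ({2, 3} and {2, 4} at 50%, {3, 4} and each single item at 75%); `rules_from_itemset` is a hypothetical helper. Only stored supports are used, so no database scan is needed in this step:

```python
from itertools import combinations

# Supports (as fractions) implied by the example's confidence values.
SUPPORT = {
    frozenset({2, 3, 4}): 0.50,
    frozenset({2, 3}): 0.50,
    frozenset({2, 4}): 0.50,
    frozenset({3, 4}): 0.75,
    frozenset({2}): 0.75,
    frozenset({3}): 0.75,
    frozenset({4}): 0.75,
}


def rules_from_itemset(itemset, support, minconf):
    """Yield (A, B, confidence) for each proper non-empty A, with B = X - A."""
    x = frozenset(itemset)
    for k in range(1, len(x)):
        for a_items in combinations(sorted(x), k):
            a = frozenset(a_items)
            conf = support[x] / support[a]  # support(A ∪ B) / support(A)
            if conf >= minconf:
                yield sorted(a), sorted(x - a), conf


strong = list(rules_from_itemset({2, 3, 4}, SUPPORT, minconf=0.8))
print(strong)  # [([2, 3], [4], 1.0), ([2, 4], [3], 1.0)]
```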

Generating Rules: Summary
To recap, to obtain A → B we need support(A ∪ B) and support(A). All the information required for the confidence computation was already recorded during itemset generation, so this step is not as time-consuming as frequent itemset generation.
Hint: I almost always ask this on the exam.

On the Apriori Algorithm
It seems to be very expensive, but:
- It is a level-wise search; with K = the size of the largest itemset, it makes at most K passes over the data.
- In practice, K is bounded (around 10).
- The algorithm is very fast; under some conditions, all rules can be found in linear time.
- It scales up to large data sets.

Granularity of Items
One exception to the "ease" of applying association rules is selecting the granularity of the items. Should you choose diet coke? Coke product? Soft drink? Beverage? Should you include more than one level of granularity? Some association-finding techniques allow you to represent hierarchies explicitly.

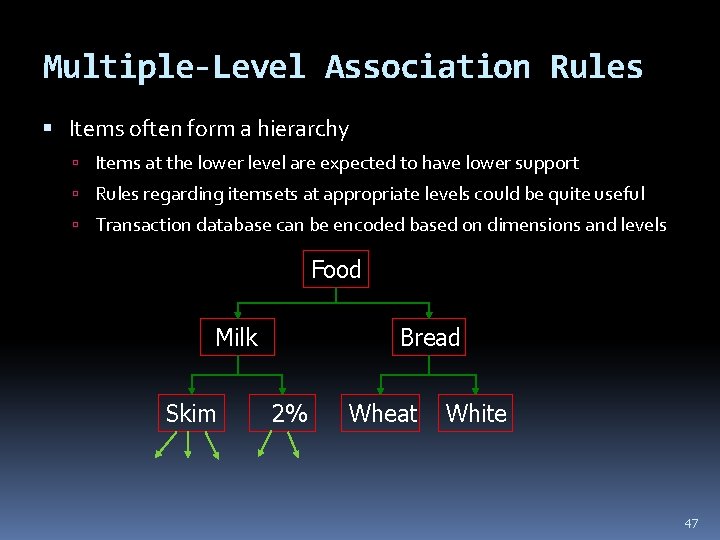

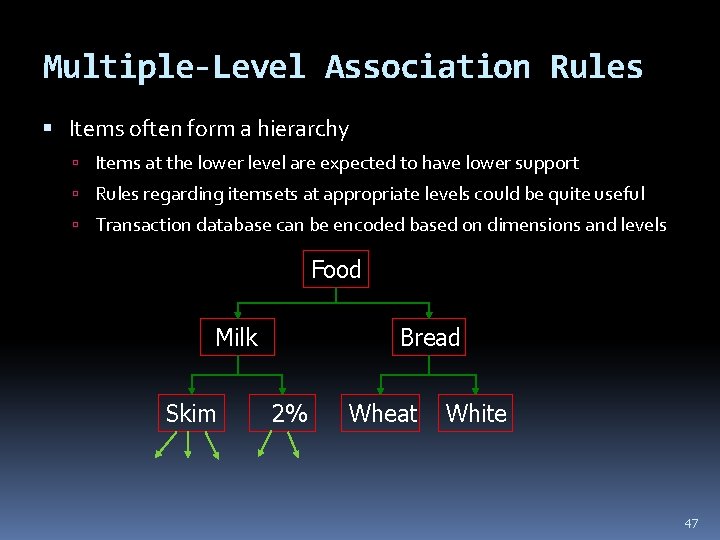

Multiple-Level Association Rules
- Items often form a hierarchy.
- Items at the lower level are expected to have lower support.
- Rules regarding itemsets at appropriate levels could be quite useful.
- The transaction database can be encoded based on dimensions and levels.

[Figure: item hierarchy: Food → Milk (Skim, 2%) and Bread (Wheat, White)]

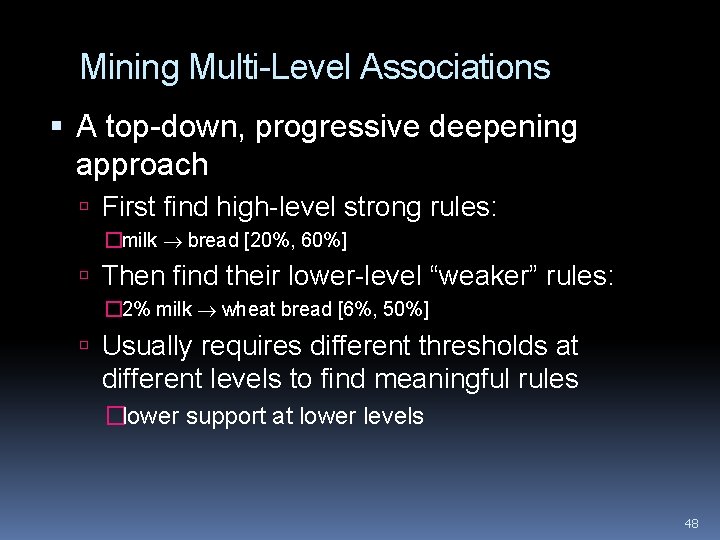

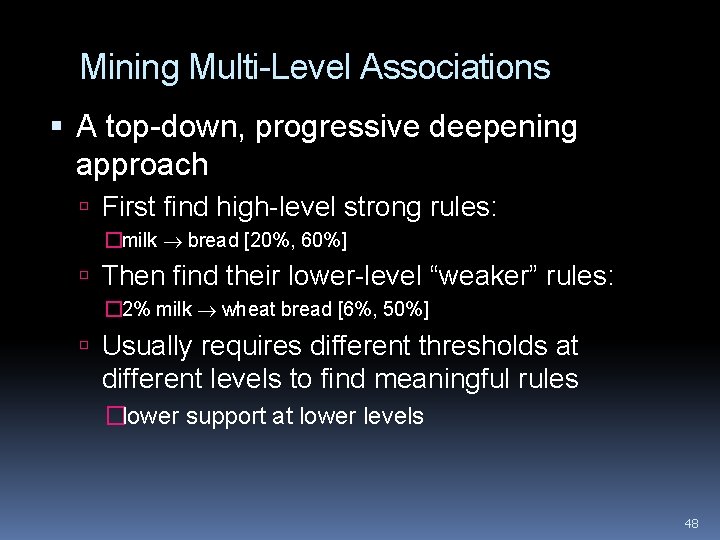

Mining Multi-Level Associations
A top-down, progressive deepening approach:
- First find high-level strong rules: milk → bread [20%, 60%].
- Then find their lower-level "weaker" rules: 2% milk → wheat bread [6%, 50%].
- This usually requires different thresholds at different levels to find meaningful rules (lower support at lower levels).
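To illustrate why lower levels need lower thresholds, here is a Python sketch using the Milk/Bread hierarchy from the multiple-level slide and four hypothetical transactions (the item names and data are invented for illustration). Rolling items up to their parent level cannot lower the support of the corresponding itemset:

```python
# Item hierarchy: low-level item -> higher-level item (names are illustrative).
PARENT = {"Skim Milk": "Milk", "2% Milk": "Milk",
          "Wheat Bread": "Bread", "White Bread": "Bread"}

# Hypothetical low-level transactions.
transactions = [
    {"2% Milk", "Wheat Bread"},
    {"Skim Milk", "White Bread"},
    {"2% Milk", "White Bread"},
    {"Skim Milk"},
]


def support(itemset, txns):
    """Fraction of transactions containing every item of `itemset`."""
    return sum(1 for t in txns if itemset <= t) / len(txns)


# Roll every item up one level; duplicates collapse because baskets are sets.
high_level = [{PARENT.get(i, i) for i in t} for t in transactions]

print(support({"Milk", "Bread"}, high_level))             # 0.75
print(support({"2% Milk", "Wheat Bread"}, transactions))  # 0.25
```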

Interestingness Measurements
- Objective measures: two popular measurements are support and confidence.
- Subjective measures (Silberschatz & Tuzhilin, KDD 95): a rule (pattern) is interesting if it is unexpected (surprising to the user) and/or actionable (the user can do something with it).

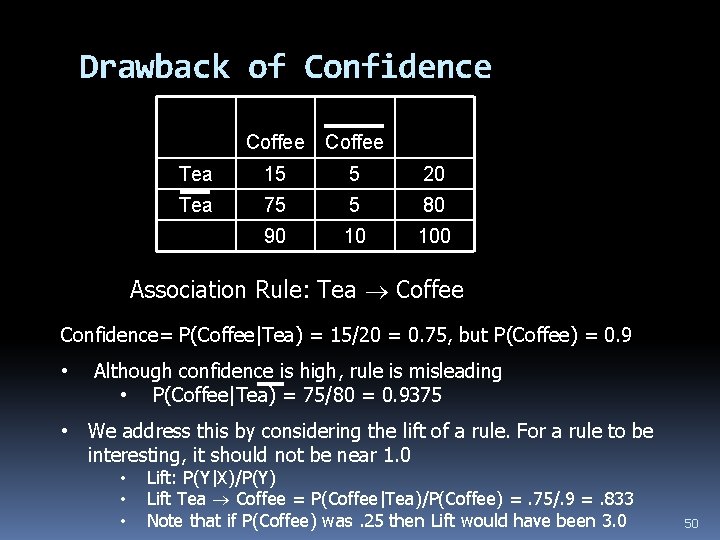

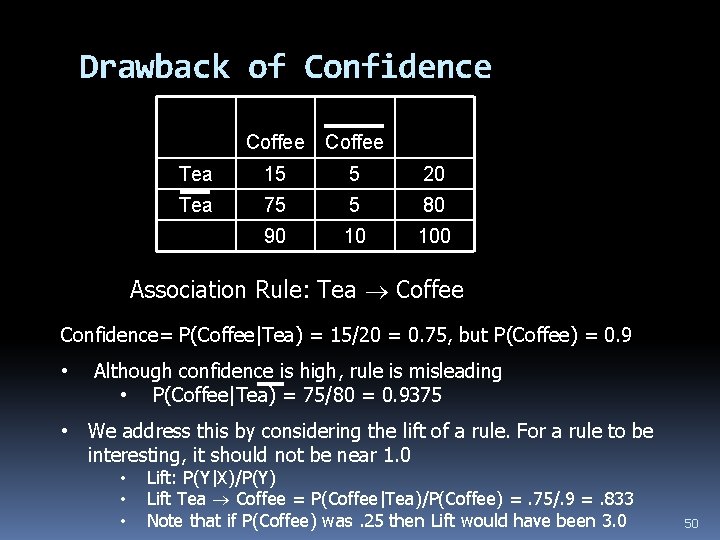

Drawback of Confidence

|  | Coffee | No Coffee | Total |
|---|---|---|---|
| Tea | 15 | 5 | 20 |
| No Tea | 75 | 5 | 80 |
| Total | 90 | 10 | 100 |

Association rule: Tea → Coffee. Confidence = P(Coffee | Tea) = 15/20 = 0.75, but P(Coffee) = 0.9.
- Although confidence is high, the rule is misleading: P(Coffee | No Tea) = 75/80 = 0.9375, so tea buyers are actually less likely to buy coffee.
- We address this by considering the lift of a rule. For a rule to be interesting, its lift should not be near 1.0.
- Lift = P(Y | X) / P(Y)
- Lift(Tea → Coffee) = P(Coffee | Tea) / P(Coffee) = 0.75 / 0.9 = 0.833
- Note that if P(Coffee) were 0.25, the lift would have been 3.0.
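A short Python check of these numbers from the 2×2 Tea/Coffee table (the variable names are illustrative):

```python
# 2x2 contingency counts from the Tea/Coffee table (100 customers).
tea_coffee, tea_no_coffee = 15, 5
no_tea_coffee, no_tea_no_coffee = 75, 5
n = tea_coffee + tea_no_coffee + no_tea_coffee + no_tea_no_coffee

p_coffee = (tea_coffee + no_tea_coffee) / n                            # P(Coffee)
confidence = tea_coffee / (tea_coffee + tea_no_coffee)                 # P(Coffee | Tea)
p_coffee_no_tea = no_tea_coffee / (no_tea_coffee + no_tea_no_coffee)  # P(Coffee | No Tea)
lift = confidence / p_coffee  # below 1: Tea and Coffee are negatively associated

print(round(confidence, 4), round(p_coffee, 4), round(lift, 3))  # 0.75 0.9 0.833
```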

Customer Number vs. Transaction ID
In the homework you may have a problem where there is a customer ID for each transaction. You may be asked to do association analysis based on the customer ID:
- If so, you need to aggregate the transactions to the customer level.
- If a customer has 3 transactions, you just create an itemset containing all of the items in the union of the 3 transactions.
- Note that we will ignore the frequency of purchase.
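A sketch of the customer-level aggregation in Python, with hypothetical customer IDs and items; `collections.defaultdict(set)` collects the union of each customer's transactions, so purchase frequency is ignored automatically:

```python
from collections import defaultdict

# Hypothetical (customer_id, items in one transaction) records.
records = [
    ("c1", ["Milk", "Bread"]),
    ("c2", ["Beer"]),
    ("c1", ["Milk", "Eggs"]),
    ("c1", ["Diaper"]),
    ("c2", ["Diaper", "Beer"]),
]

# One itemset per customer: the union of that customer's transactions.
# Milk is bought twice by c1 but appears only once in the set.
by_customer = defaultdict(set)
for customer_id, items in records:
    by_customer[customer_id].update(items)

for customer_id, itemset in sorted(by_customer.items()):
    print(customer_id, sorted(itemset))
```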

Virtual Items
If you're interested in including other possible variables, you can create "virtual items": gift-wrap, used-coupon, new-store, winter-holidays, bought-nothing, …

Associations: Pros and Cons
Pros:
- can quickly mine patterns describing business/customers/etc. without major effort in problem formulation
- virtual items allow much flexibility
- unparalleled tool for hypothesis generation

Cons:
- unfocused: not clear exactly how to apply the mined "knowledge"; only hypothesis generation
- can produce many, many rules, and there may be only a few nuggets among them (or none)

Association Rules
Association rule types:
- Actionable rules: contain high-quality, actionable information.
- Trivial rules: information already well known by those familiar with the business.
- Inexplicable rules: have no explanation and do not suggest action.

Trivial and inexplicable rules occur most often.