ASSESSMENT OF STUDENT LEARNING

- Slides: 73


Why do we need assessment methods? Assessment literacy involves understanding how assessments are made, what types of assessments answer what questions, and how the data from assessments can be used to help teachers, students, parents, and other stakeholders make decisions about teaching and learning.

Chapter II Characteristics of Assessment Methods

The five characteristics of assessment methods:
- Validity
- Reliability
- Practicability
- Justness
- Morality in Assessment

I. VALIDITY: The degree to which a test measures what it intends to measure, or the truthfulness of the response. Validity concerns “what the test measures and how well it does so.”

Types of Validity:
- Content Validity
- Concurrent Validity
- Predictive Validity
- Construct Validity

Content Validity: The content or topics of the test truly represent the course. It involves the systematic examination of the test content to determine whether it covers a representative sample of the behavior domain to be measured.

Content Validity: Content validity is described by the relevance of a test to different types of criteria, namely thorough judgment and systematic examination of relevant course syllabi and textbooks.

The three domains of behavior: cognitive, affective, and psychomotor.

Concurrent Validity: The degree to which the test agrees or correlates with a criterion set up as an acceptable measure. The criterion is always available at the time of testing.

Predictive Validity: Determined by showing how well predictions made from the test are confirmed by evidence collected at some later time. The criterion measure against which this type of validity is checked is important.

Construct Validity The extent to which the test measures a theoretical trait.

What are some ways to improve validity? >Make sure your goals and objectives are clearly defined and operationalized. Expectations of students should be written down. >Match your assessment measure to your goals and objectives. Additionally, have the test reviewed by faculty at other schools to obtain feedback from an outside party who is less invested in the instrument.

What are some ways to improve validity? >Get students involved; have the students look over the assessment for troublesome wording, or other difficulties. >If possible, compare your measure with other measures, or data that may be available.

So why is validity important? If the results of a study are not deemed to be valid then they are meaningless to our study. If it does not measure what we want it to measure then the results cannot be used to answer the research question, which is the main aim of the study.

So why is validity important? These results cannot then be used to generalize any findings and become a waste of time and effort. It is important to remember that just because a study is valid in one instance it does not mean that it is valid for measuring something else.

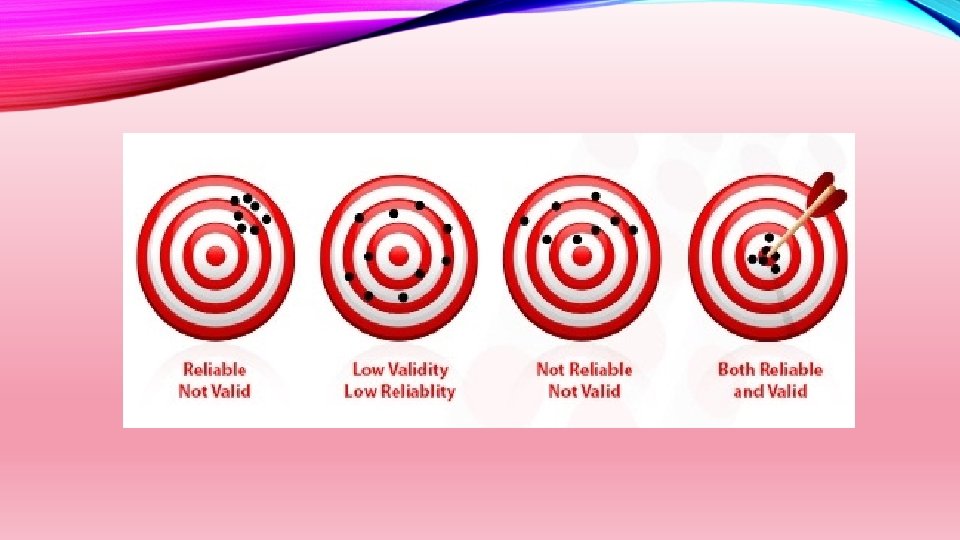

II. RELIABILITY
- A test is consistent and dependable: the test agrees with itself.
- Reliability is concerned with the consistency of responses from moment to moment.
- However, a reliable test may not always be valid.

2 Reasons for Reliability: 1. Reliability provides a measure of the extent to which an examinee’s score reflects random measurement error.

Measurement errors are caused by one of four factors:
(a) examinee-specific factors, such as motivation, concentration, fatigue, boredom, momentary lapses of memory, carelessness in marking answers, and luck in guessing;
(b) test-specific factors, such as the specific set of questions selected for a test, ambiguous or tricky items, and poor directions;
(c) scoring-specific factors, such as non-uniform scoring guidelines, carelessness, and counting or computational errors;
(d) environmental factors: differences in the testing environment, such as room temperature, lighting, noise, or even the test administrator, can influence an individual's test performance.

2 Reasons for Reliability: 2. Reliability is a precursor to test validity. That is, if test scores cannot be assigned consistently, it is impossible to conclude that the scores accurately measure the domain of interest.

2 Types of Reliability: 1. Internal reliability assesses the consistency of results across items within a test (split-half method). 2. External reliability refers to the extent to which a measure varies from one use to another (test-retest method).

TECHNIQUES IN TESTING THE RELIABILITY OF ASSESSMENT METHOD

1. Test-Retest Method: The same test is administered twice to the same group of students, and the correlation coefficient between the two sets of scores is determined. The correlation coefficient is a measure of the degree to which the movements of two variables are associated. Its values range from -1.0 to 1.0.

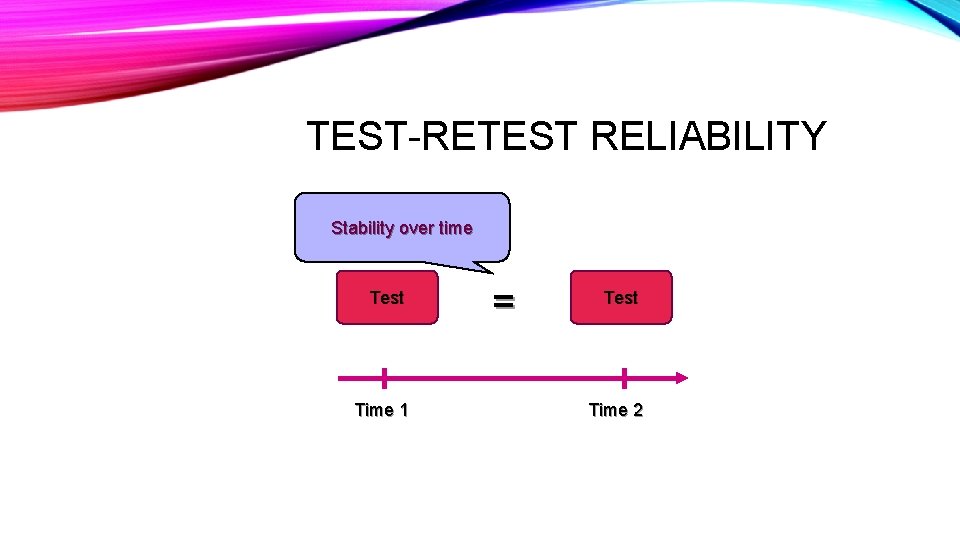

TEST-RETEST RELIABILITY: stability over time (Test at Time 1 = Test at Time 2)

1. Test-Retest Method, disadvantages:
- When the time interval is short, respondents may recall their previous responses, which tends to make the correlation coefficient high.
- When the interval is long, factors such as unlearning and forgetting may occur and may result in a low correlation.
- Regardless of the time interval separating the two administrations, varying environmental conditions such as noise, temperature, and lighting may affect the correlation coefficient of the test.

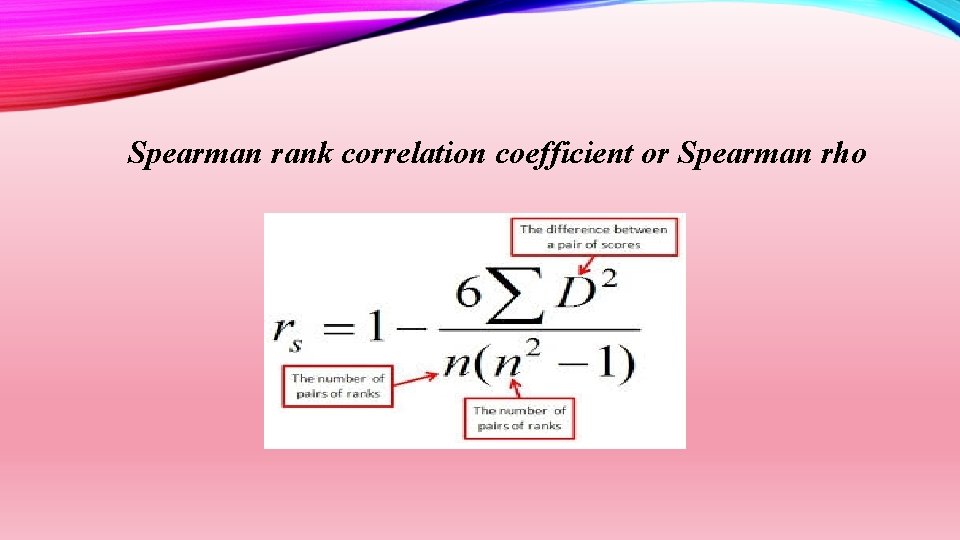

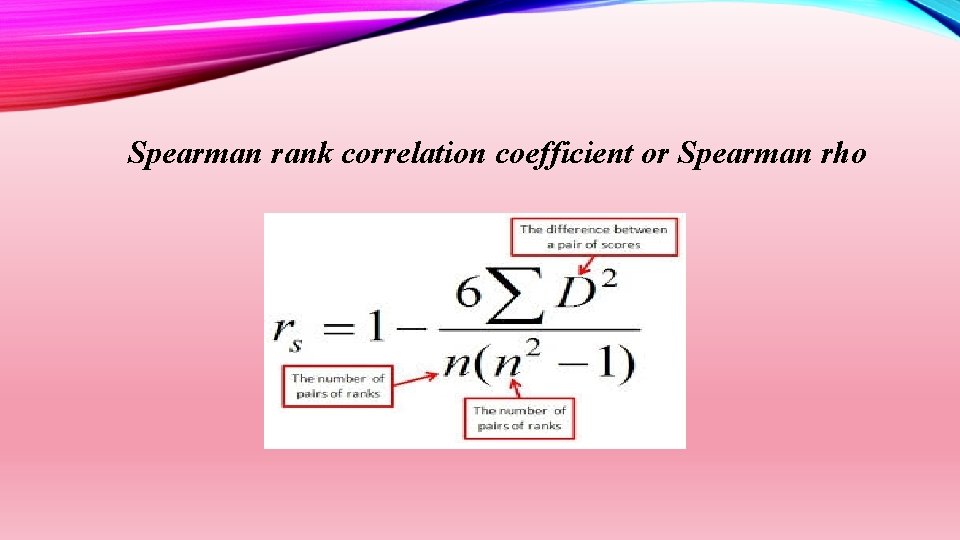

The Spearman rank correlation coefficient, or Spearman rho, is the statistical tool used to measure the relationship between paired ranks assigned to individual scores on two variables: the first administration (X) and the second administration (Y). To obtain the value of Spearman rho (r), consider this formula:

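Spearman rank correlation coefficient or Spearman rho:

$$r = 1 - \frac{6\sum D^2}{N(N^2 - 1)}$$

where $D$ is the difference between the paired ranks of each student on X and Y, and $N$ is the number of students.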
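A minimal Python sketch of the test-retest computation with Spearman rho, assuming each student's two scores are listed in the same order in both lists. It ranks each administration from highest to lowest score (tied scores share the average of their ranks, a common textbook convention) and then applies r = 1 - 6ΣD² / (N(N² - 1)). When there are many ties this formula only approximates rho. The function names and sample scores are hypothetical.

```python
def average_ranks(scores):
    """Rank scores from highest (rank 1) to lowest; tied scores share the mean of their ranks."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # extend j across a run of tied scores
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """r = 1 - 6 * sum(D^2) / (N * (N^2 - 1)), where D is the difference between paired ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length score lists with at least two students")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    sum_d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * sum_d2 / (n * (n * n - 1))


# Hypothetical scores of five students on the first (X) and second (Y) administration
first = [90, 85, 80, 75, 70]
second = [88, 86, 78, 80, 65]
print(spearman_rho(first, second))  # prints 0.9
```

Only two students swap ranks between administrations (3rd and 4th), so the sum of D² is 2 and r = 1 - 12/120.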

Interpretation of Correlation Value
- An r of 0.00 indicates zero correlation.
- An r from ±0.01 to ±0.20 denotes negligible correlation.
- An r from ±0.21 to ±0.40 means low or slight correlation.
- An r from ±0.41 to ±0.70 signifies a marked or moderate relationship.
- An r from ±0.71 to ±0.90 denotes a high relationship.
- An r from ±0.91 to ±0.99 denotes a very high relationship.
- An r of ±1.00 means perfect correlation.
- If the correlation is greater than 1.00 in absolute value, there is an error in the computation.
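The interpretation scale can be expressed as a small lookup. This sketch assumes |r| is rounded to two decimals first so that every value falls into one of the listed bands; that rounding rule and the function name `interpret_r` are assumptions, not part of the scale itself.

```python
def interpret_r(r):
    """Label a correlation coefficient with the interpretation scale (applied to |r| rounded to 2 decimals)."""
    size = round(abs(r), 2)
    if size > 1.0:
        return "error in computation"
    if size == 1.0:
        return "perfect correlation"
    if size >= 0.91:
        return "very high relationship"
    if size >= 0.71:
        return "high relationship"
    if size >= 0.41:
        return "marked or moderate relationship"
    if size >= 0.21:
        return "low or slight correlation"
    if size >= 0.01:
        return "negligible correlation"
    return "zero correlation"


print(interpret_r(0.9))    # prints high relationship
print(interpret_r(-0.95))  # prints very high relationship
```

Using the absolute value means a negative r is labeled by its strength; the sign still tells you the direction of the relationship.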

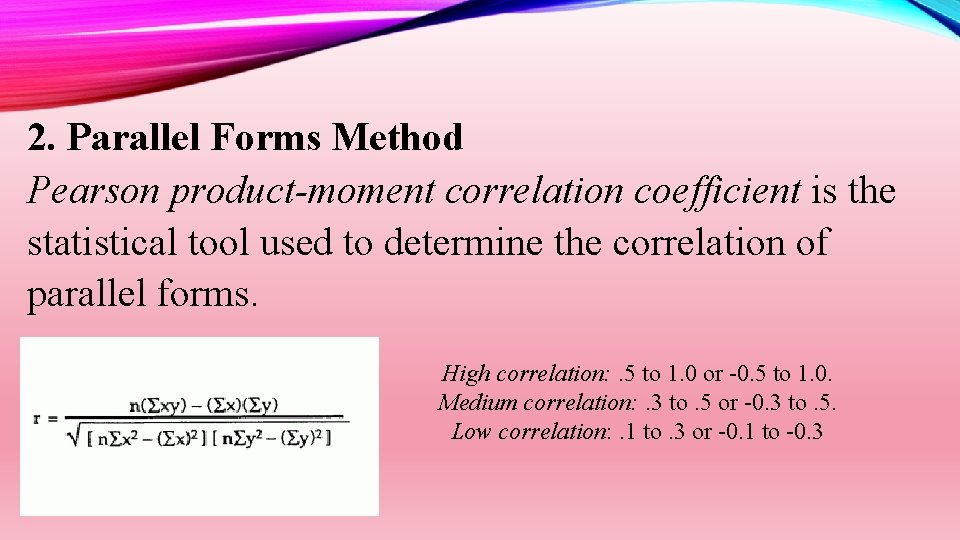

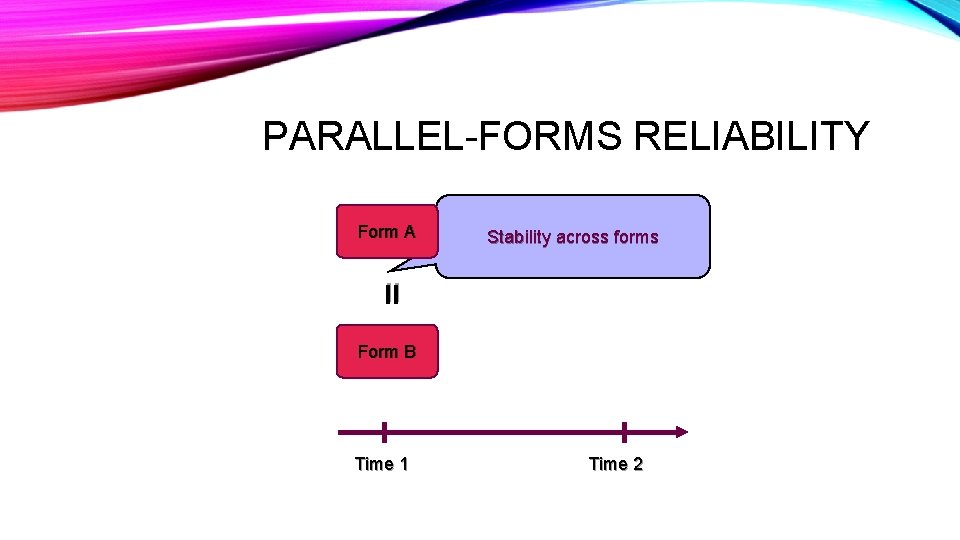

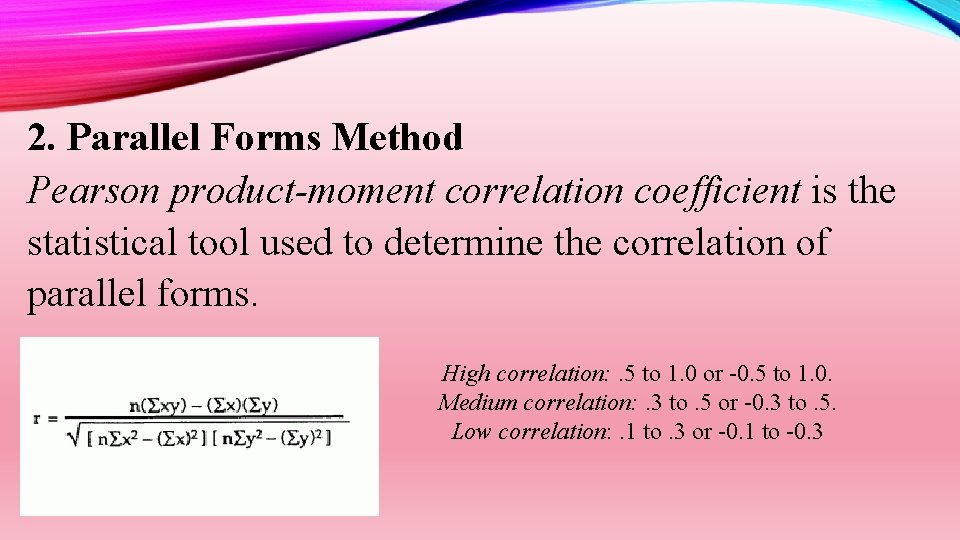

2. Parallel-Forms Method: Two equivalent forms of a test are administered to the same group of students, and the paired scores are correlated. The two forms must be constructed so that the content, type of test items, difficulty, and instructions for administration are similar but not identical.

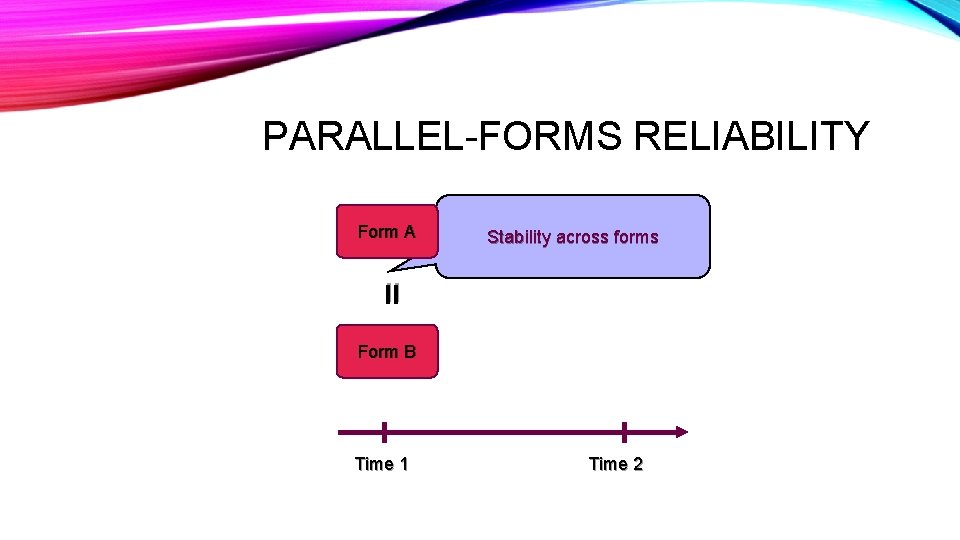

PARALLEL-FORMS RELIABILITY: stability across forms (Form A at Time 1 = Form B at Time 2)

2. Parallel-Forms Method: The Pearson product-moment correlation coefficient is the statistical tool used to determine the correlation of parallel forms. High correlation: 0.5 to 1.0 or -0.5 to -1.0. Medium correlation: 0.3 to 0.5 or -0.3 to -0.5. Low correlation: 0.1 to 0.3 or -0.1 to -0.3.
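A minimal sketch of the Pearson product-moment correlation between students' scores on Form A and Form B, followed by the high/medium/low bands just listed. The bands share their endpoints (0.5 and 0.3 appear in two bands), so this sketch assumes a boundary value belongs to the higher band. The function names and scores are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between paired scores on Form A (x) and Form B (y)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length score lists with at least two students")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # sum of cross-products and sums of squared deviations from each mean
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one form shows no variation in scores")
    return sxy / math.sqrt(sxx * syy)


def strength(r):
    """Label |r| with the high/medium/low bands; a shared boundary goes to the higher band."""
    size = abs(r)
    if size >= 0.5:
        return "high"
    if size >= 0.3:
        return "medium"
    if size >= 0.1:
        return "low"
    return "below the low band"


# Hypothetical scores of five students on two parallel forms
form_a = [10, 20, 30, 40, 50]
form_b = [20, 10, 40, 30, 50]
r = pearson_r(form_a, form_b)
print(round(r, 2), strength(r))  # prints 0.8 high
```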

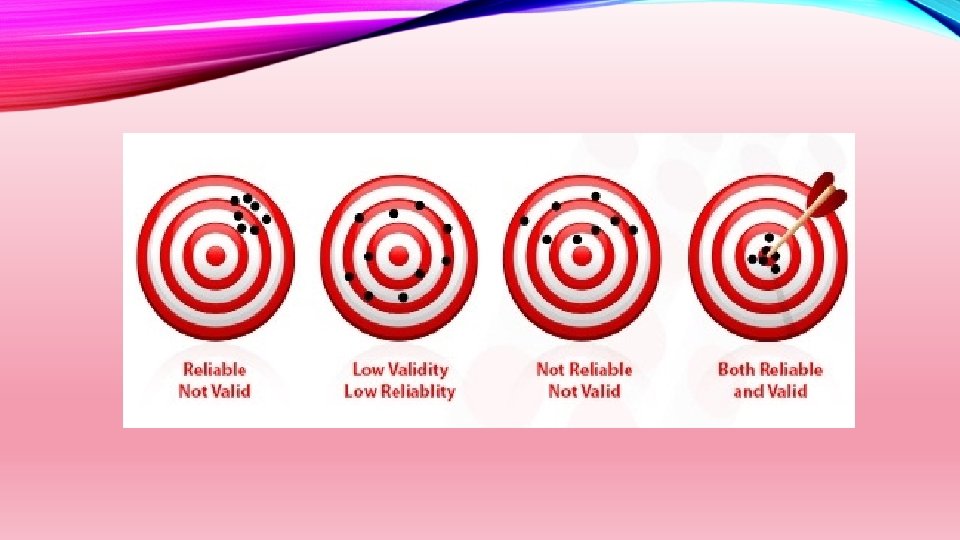

Reliability is defined as the consistency of results from a test. For example, consider a researcher's new IQ test given to a candidate whose real IQ is 120:
• If the new test delivers scores for the candidate of 87, 65, 143, and 102, then the test is neither reliable nor valid, and it is fatally flawed.
• If the test consistently delivers a score of 100 when checked, then the test is reliable but not valid.
• If the researcher's test delivers a consistent score of 118, then that is pretty close, and the test can be considered both valid and reliable.
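The IQ example can be checked with two numbers: the spread of the repeated scores (reliability) and the distance of their mean from the real score (validity). This is a sketch only; the cut-offs `max_spread` and `max_bias` (5 points each) are hypothetical values chosen for illustration, and "consistently 100" and "consistently 118" are represented as three identical repeated scores.

```python
import statistics


def describe_test(scores, true_score, max_spread=5.0, max_bias=5.0):
    """Return (reliable, valid) for repeated scores of one candidate.

    Reliable: the repeated scores agree with each other (small standard deviation).
    Valid: the scores are reliable AND their mean is close to the true score.
    """
    spread = statistics.pstdev(scores)
    bias = abs(statistics.mean(scores) - true_score)
    reliable = spread <= max_spread
    valid = reliable and bias <= max_bias  # a test cannot be valid yet unreliable
    return reliable, valid


print(describe_test([87, 65, 143, 102], 120))  # prints (False, False)
print(describe_test([100, 100, 100], 120))     # prints (True, False)
print(describe_test([118, 118, 118], 120))     # prints (True, True)
```

Making `valid` depend on `reliable` encodes the rule that reliability is necessary but not sufficient for validity.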

Reliability is an essential component of validity but, on its own, is not a sufficient measure of validity. A test can be reliable but not valid, whereas a test cannot be valid yet unreliable.

The Definition of Reliability - An Example Imagine that a researcher discovers a new drug that she believes helps people to become more intelligent, a process measured by a series of mental exercises. After analyzing the results, she finds that the group given the drug performed the mental tests much better than the control group.

For her results to be reliable, another researcher must be able to perform exactly the same experiment on another group of people and generate results with the same statistical significance. If repeat experiments fail, then there may be something wrong with the original research.

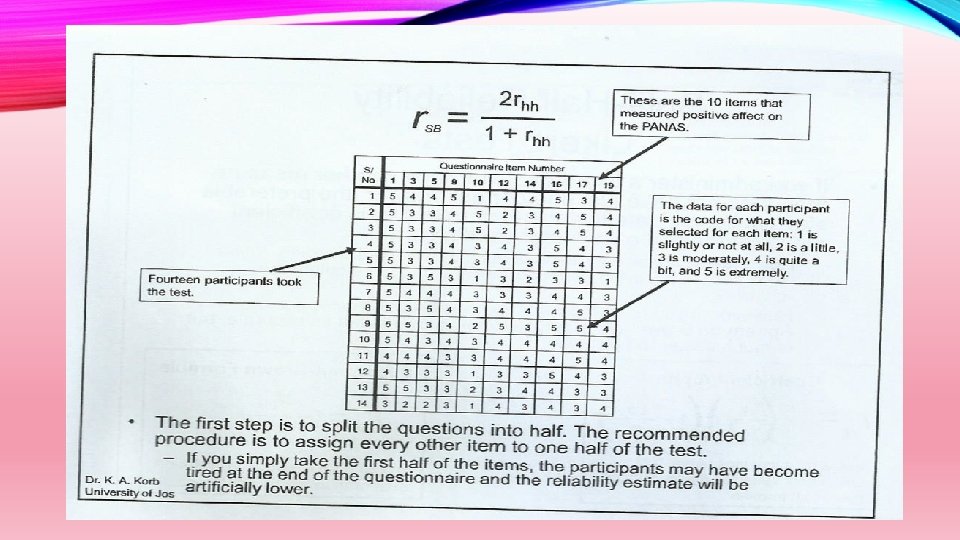

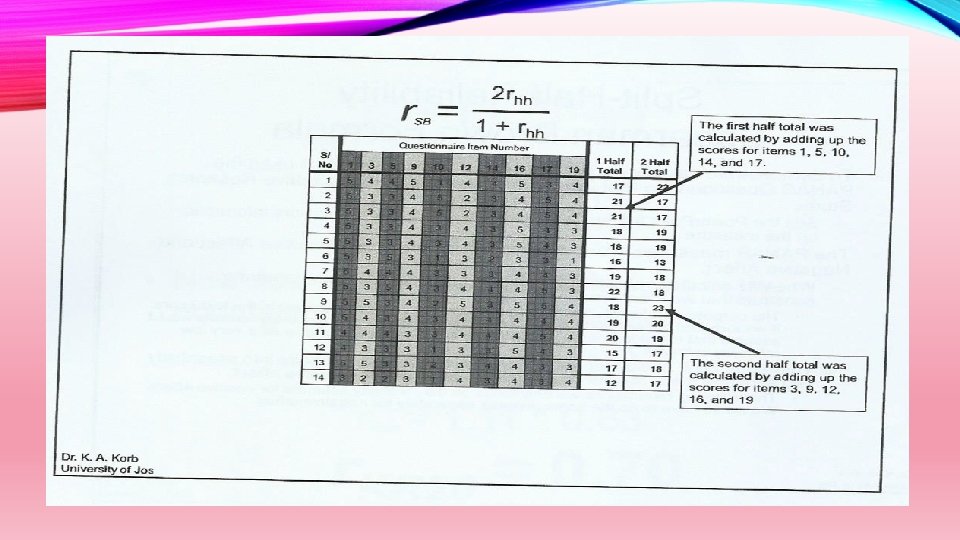

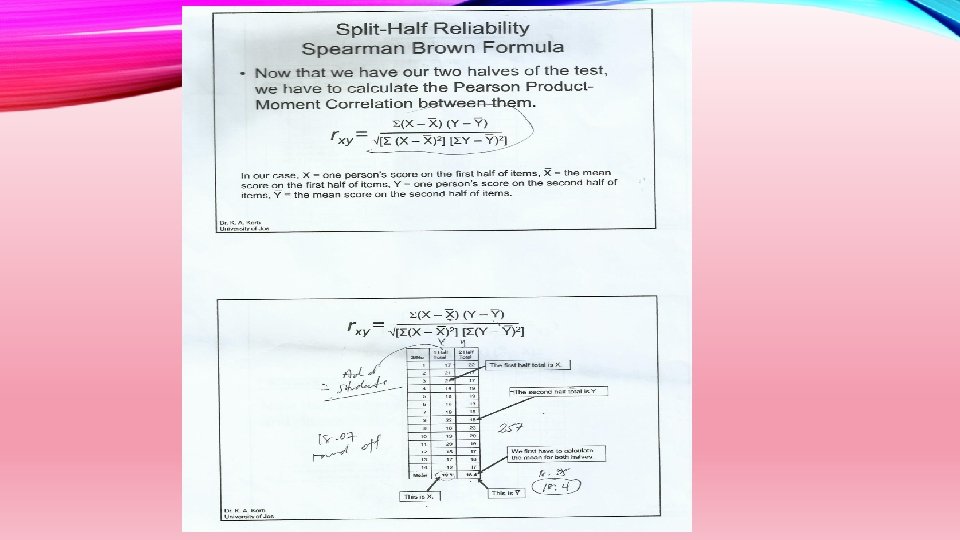

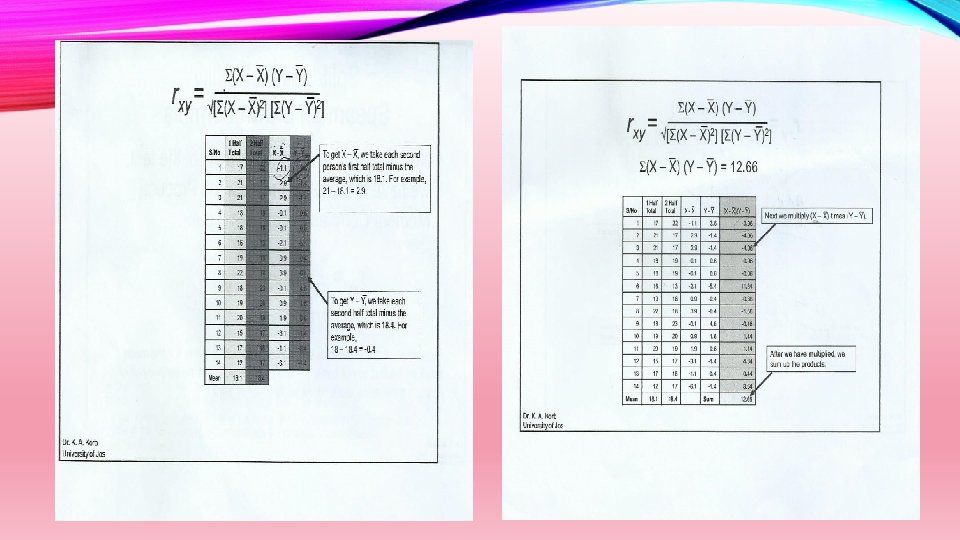

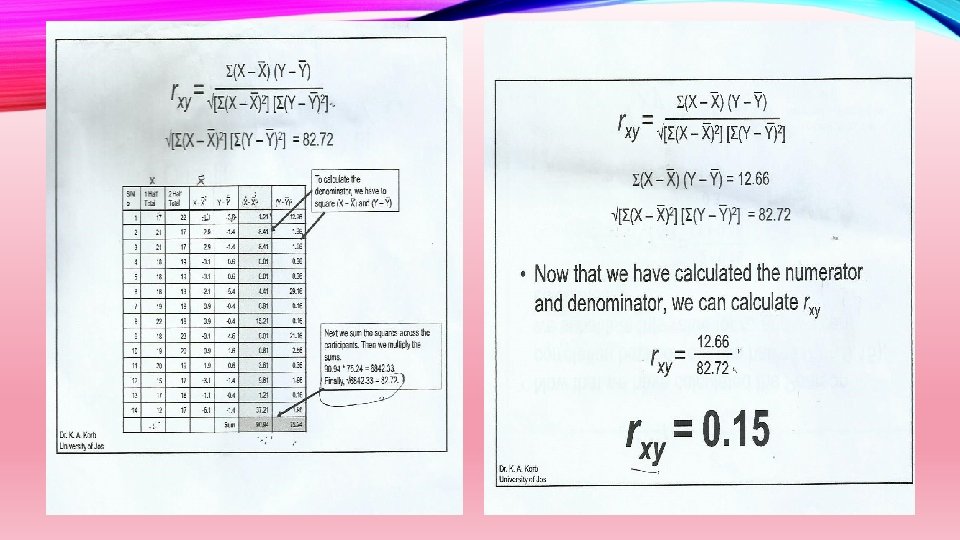

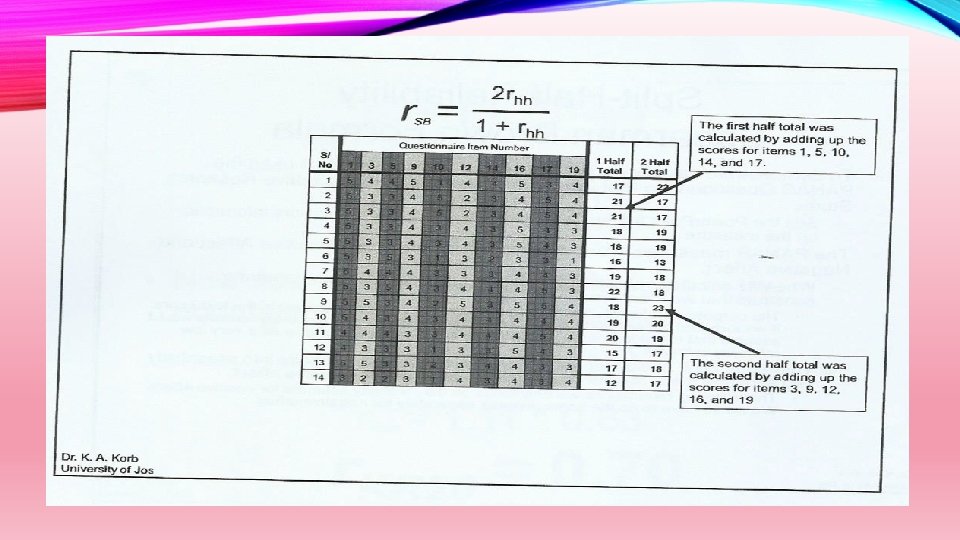

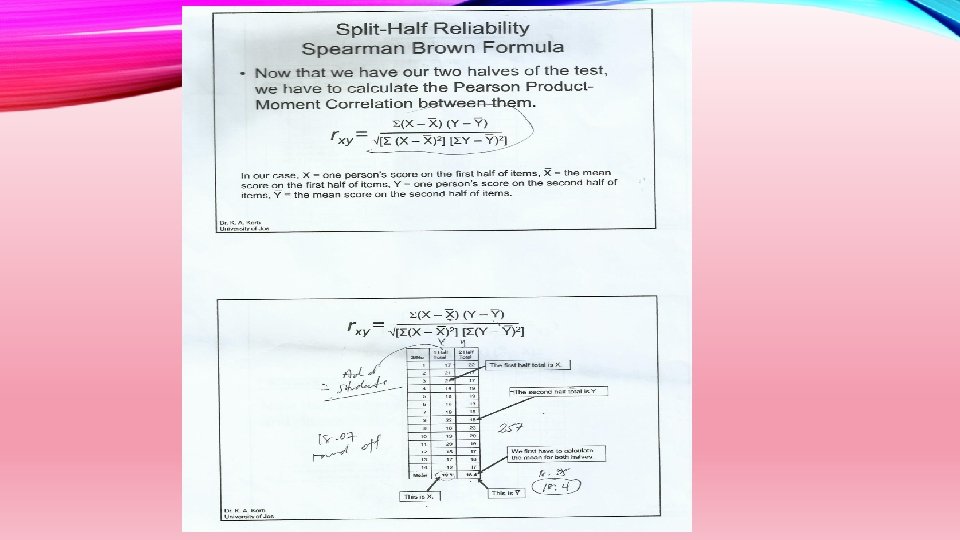

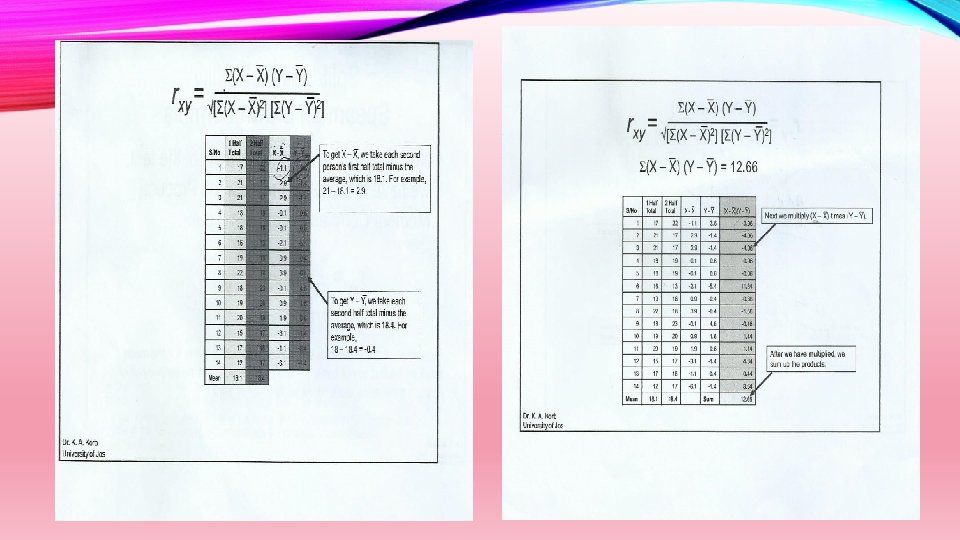

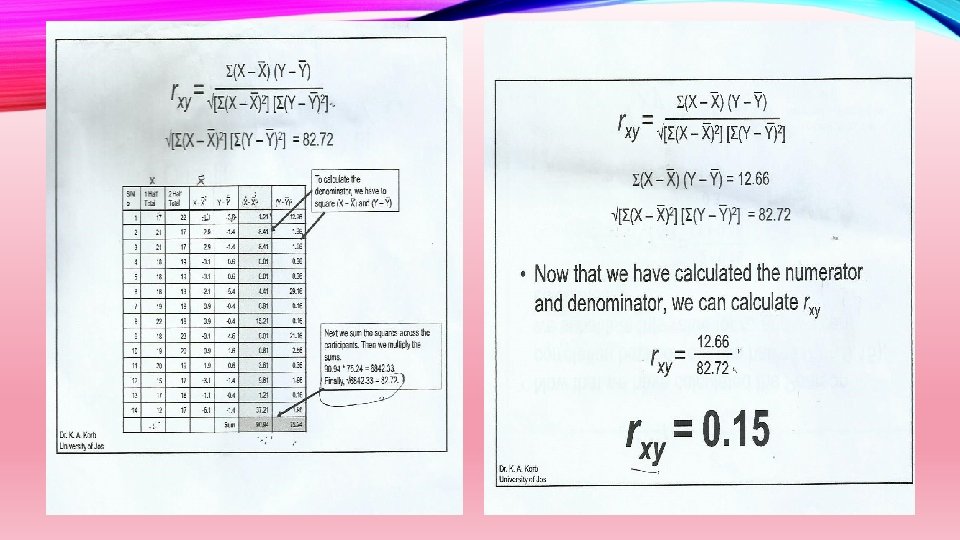

3. Split-Half Method - Measures the extent to which all parts of the test contribute equally to what is being measured

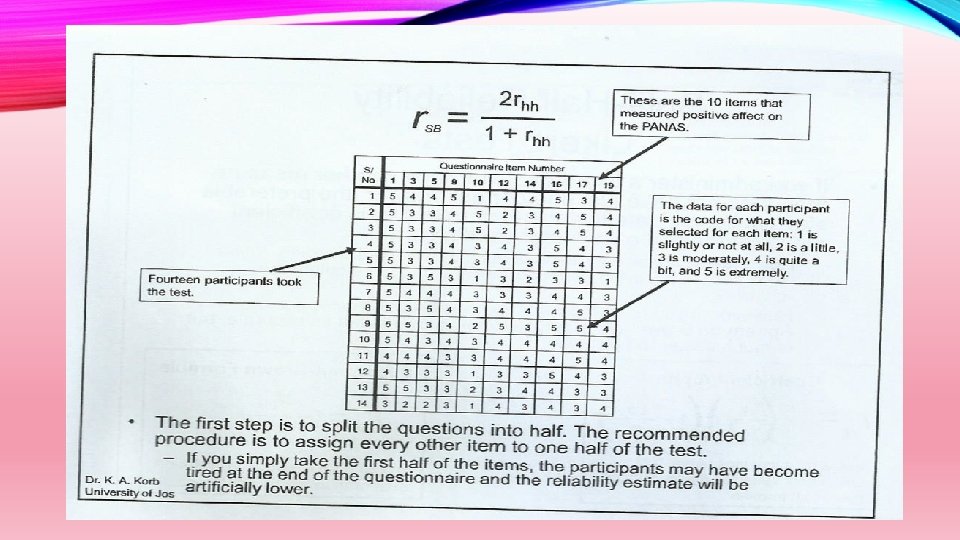

3. Split-Half Method: The test is administered once, but the test items are divided into two halves. In split-half reliability, we randomly divide all items that purport to measure the same construct into two sets. We administer the entire instrument to a sample of people and calculate the total score for each randomly divided half. The split-half reliability estimate is the correlation between these two total scores.

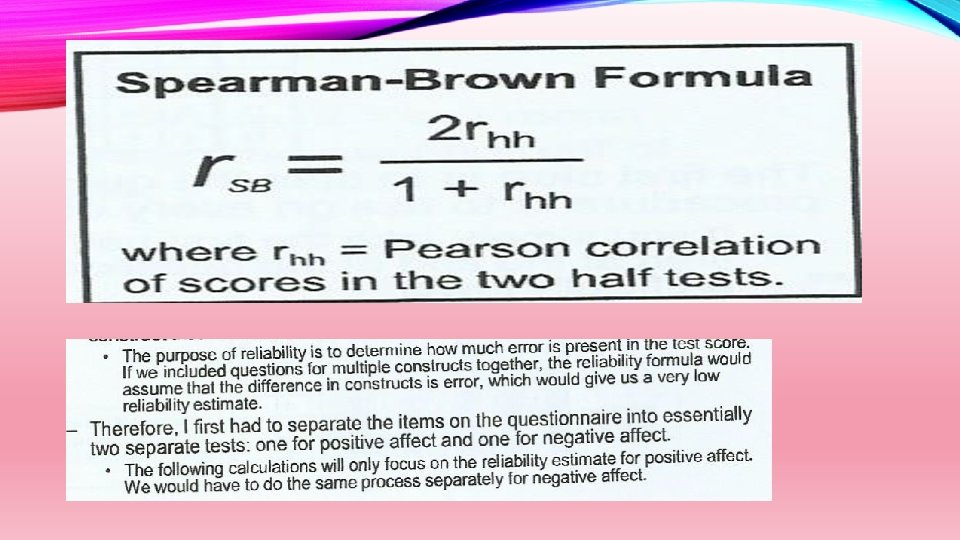

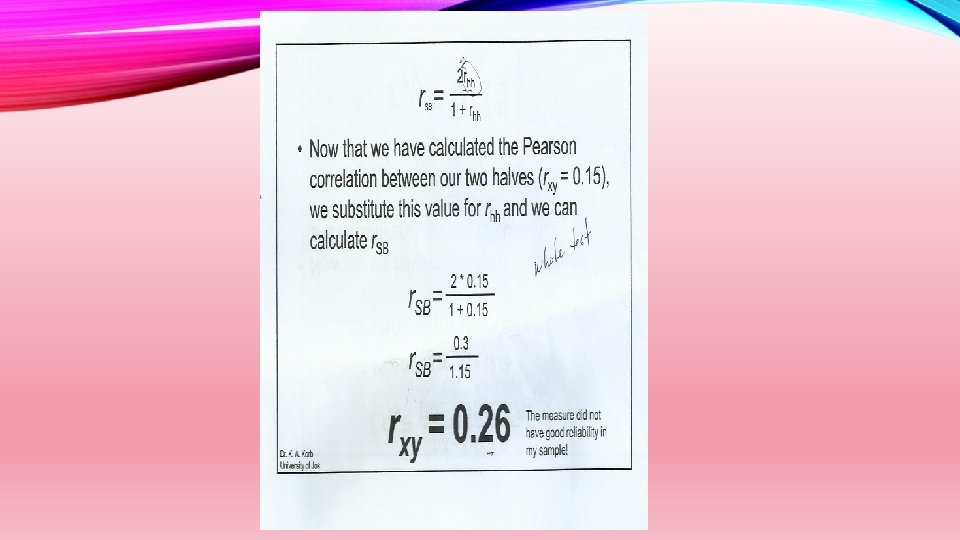

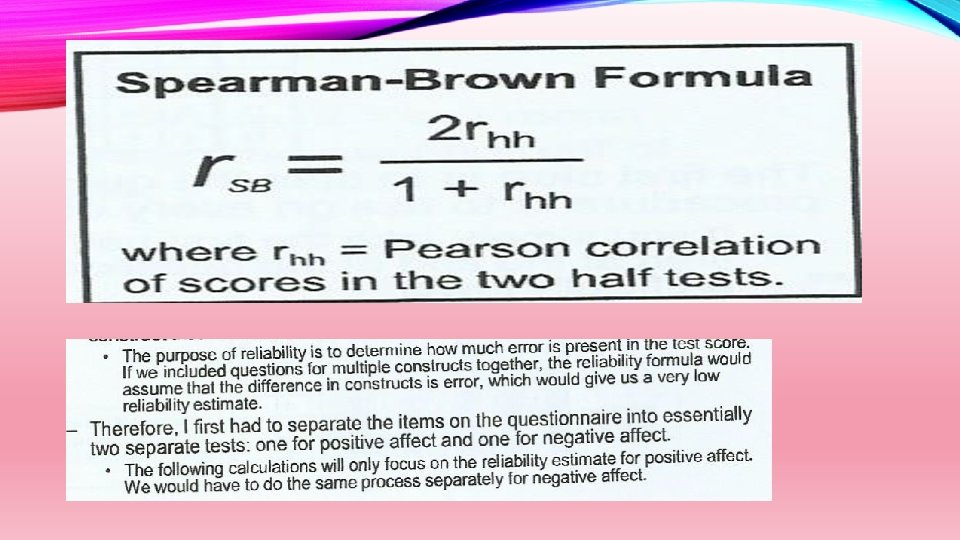

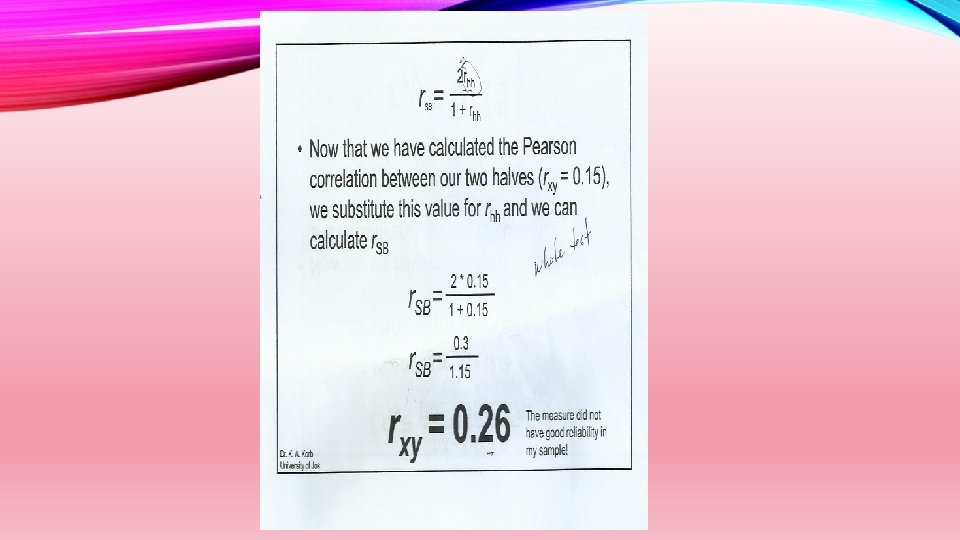

3. Split-Half Method: However, if you must calculate the reliability by hand, use the Spearman-Brown formula. Spearman-Brown is not as accurate, but it is much easier to calculate.
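A minimal sketch of a split-half estimate with the Spearman-Brown correction. The correlation between the two half-test totals describes a test only half as long, so it is stepped up to full length with r_full = 2 × r_half / (1 + r_half). The text describes a random split; this sketch instead assumes an odd-even split (items 1, 3, 5, ... versus 2, 4, 6, ...), a common deterministic alternative. The item data and function names are hypothetical, and six items are far too few for a real estimate.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of totals."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def split_half_reliability(item_scores):
    """item_scores: one list of item scores per student (same items, same order).

    Returns (r_half, r_full): the correlation of the two half-test totals and the
    Spearman-Brown estimate for the full-length test.
    """
    odd_totals = [sum(row[0::2]) for row in item_scores]   # items 1, 3, 5, ...
    even_totals = [sum(row[1::2]) for row in item_scores]  # items 2, 4, 6, ...
    r_half = pearson_r(odd_totals, even_totals)
    r_full = 2 * r_half / (1 + r_half)  # Spearman-Brown step-up to full length
    return r_half, r_full


# Hypothetical right/wrong (1/0) answers of five students on six items
answers = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0, 1],
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
r_half, r_full = split_half_reliability(answers)
print(round(r_half, 2), round(r_full, 2))  # prints 0.85 0.92
```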

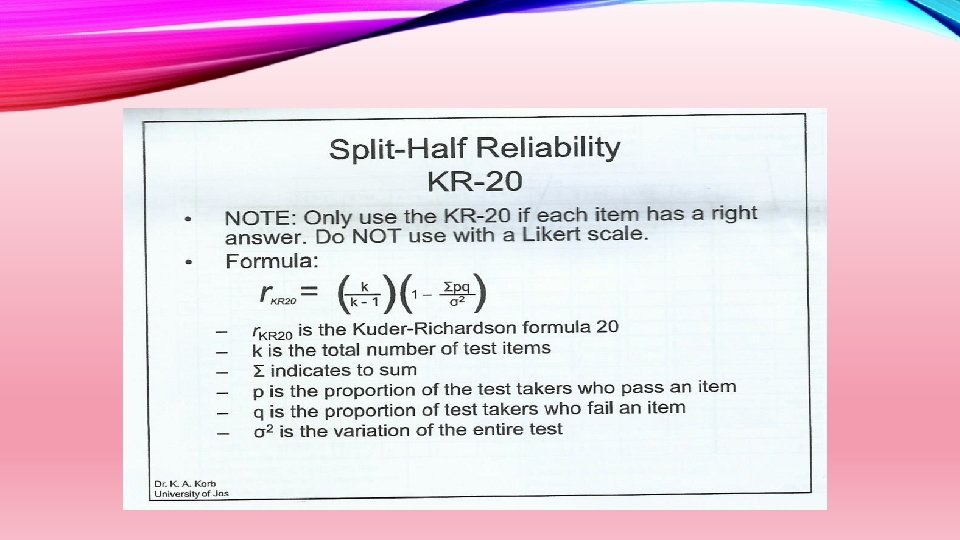

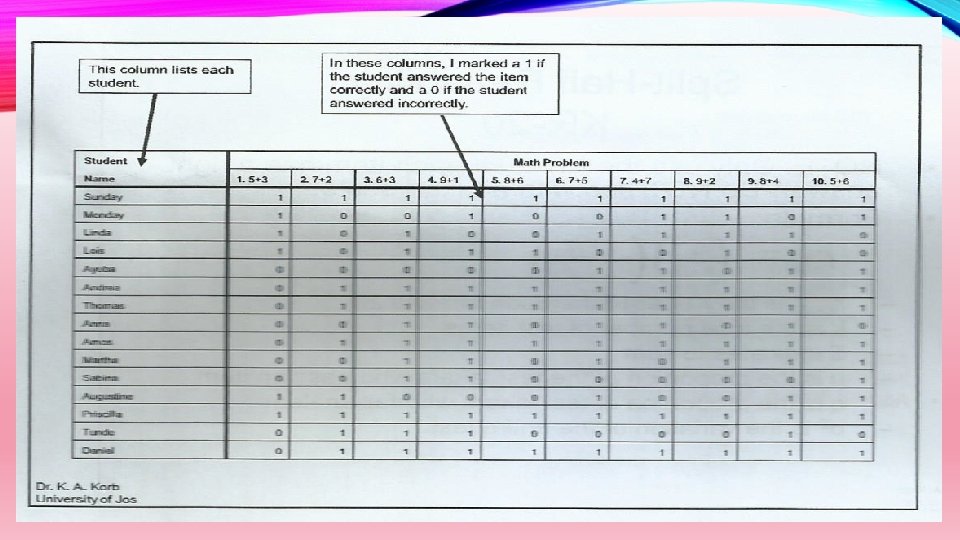

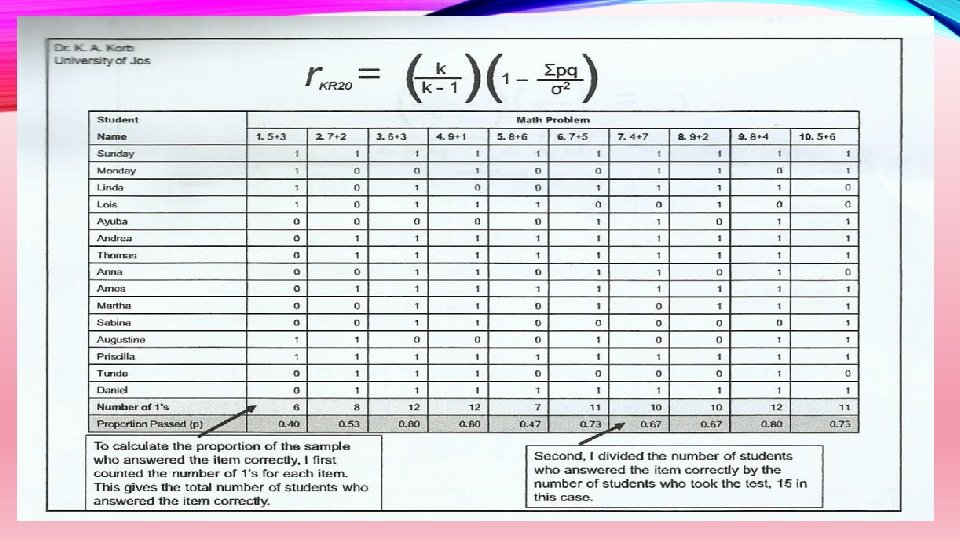

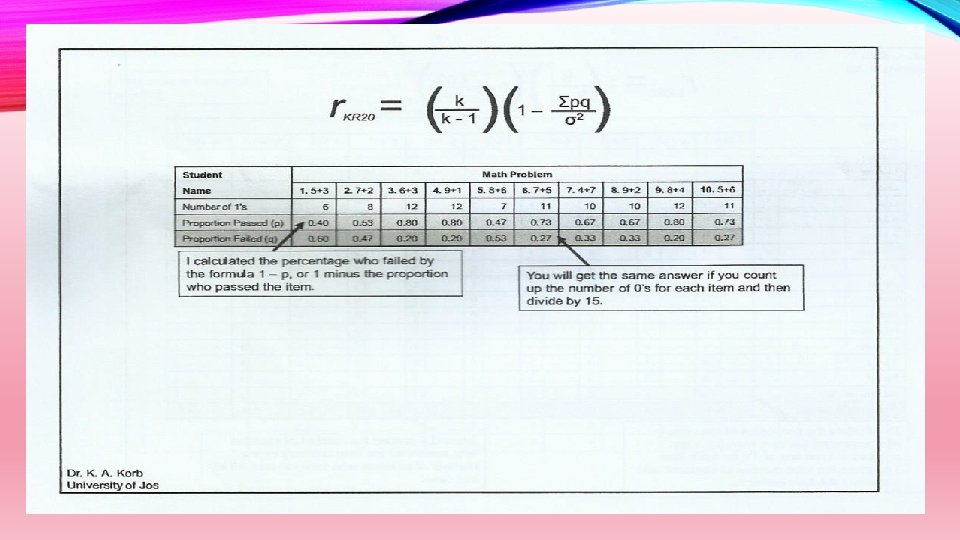

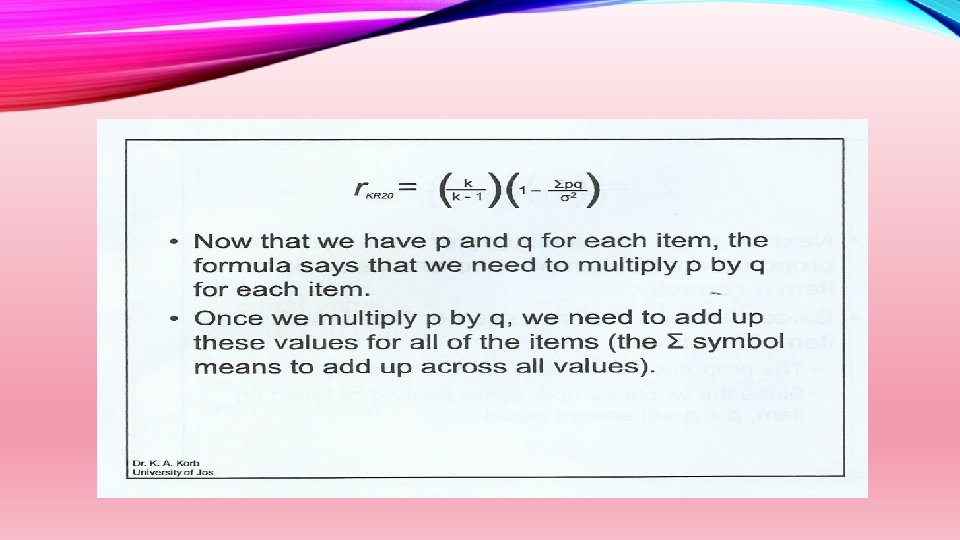

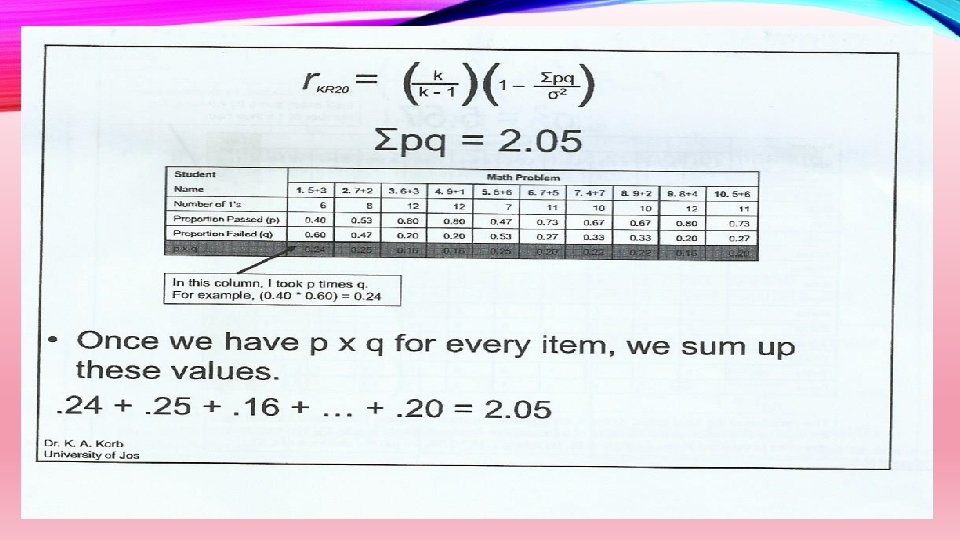

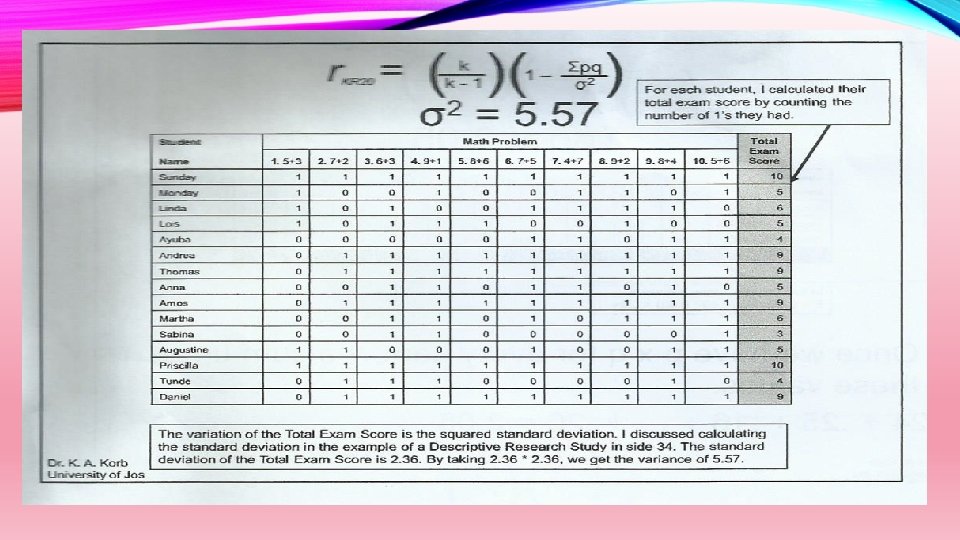

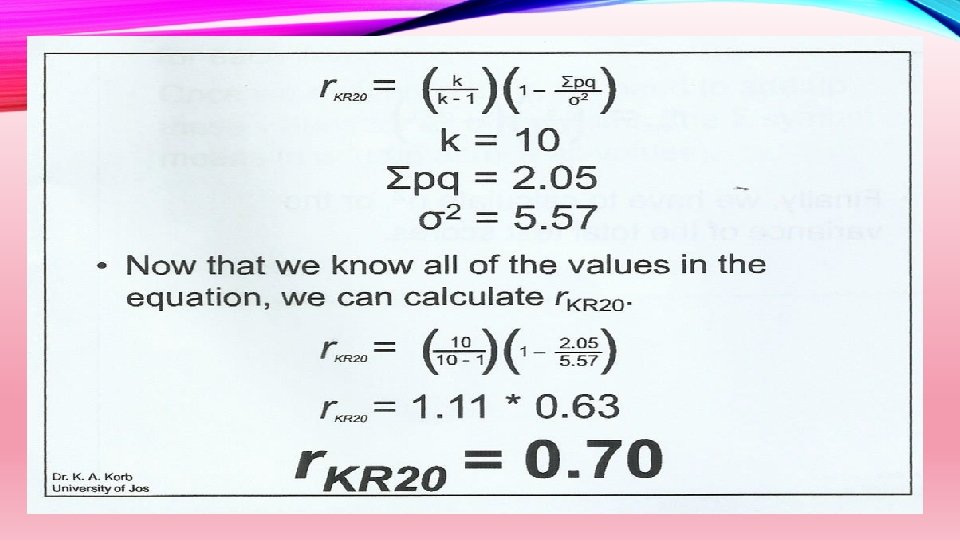

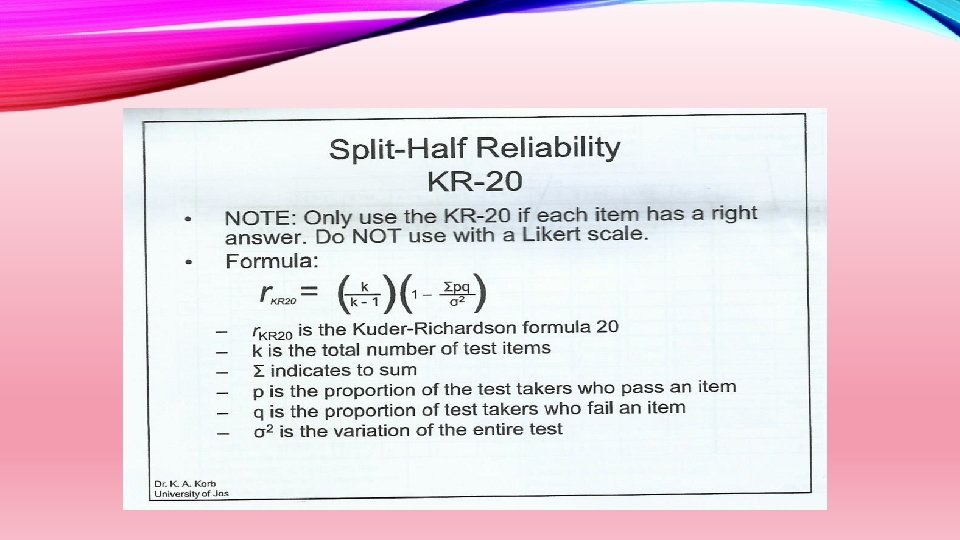

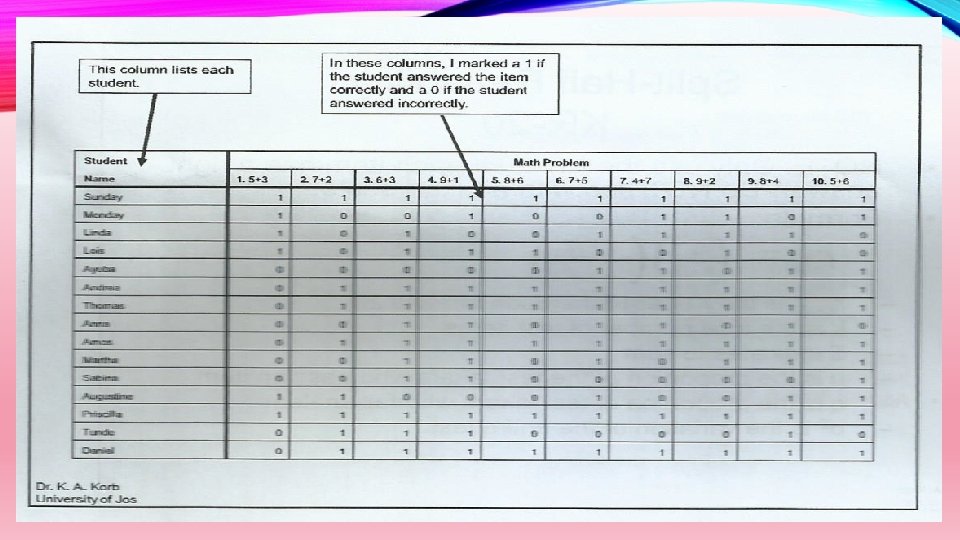

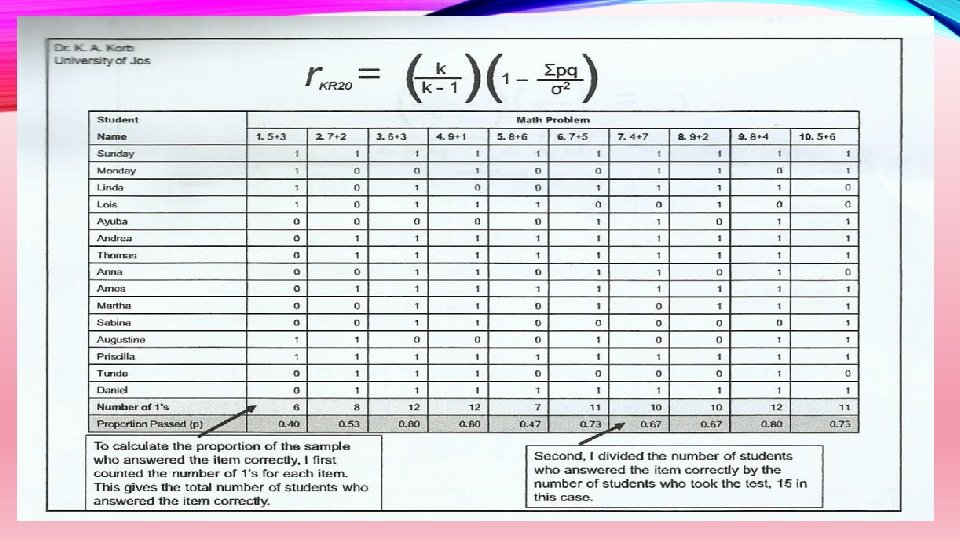

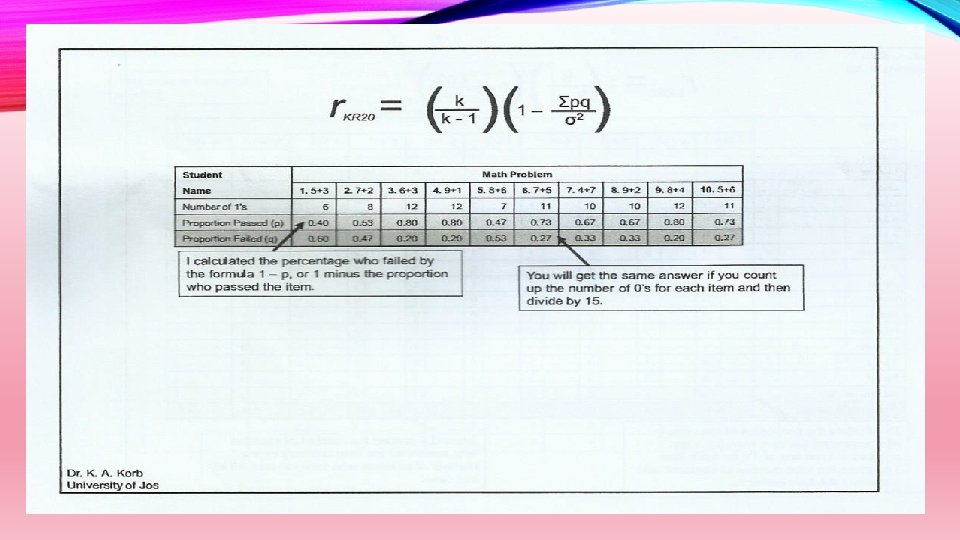

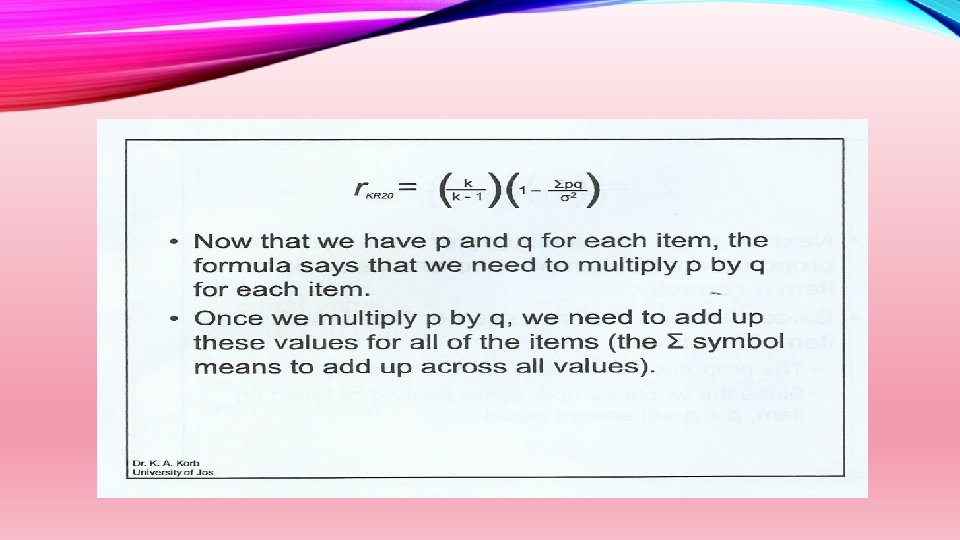

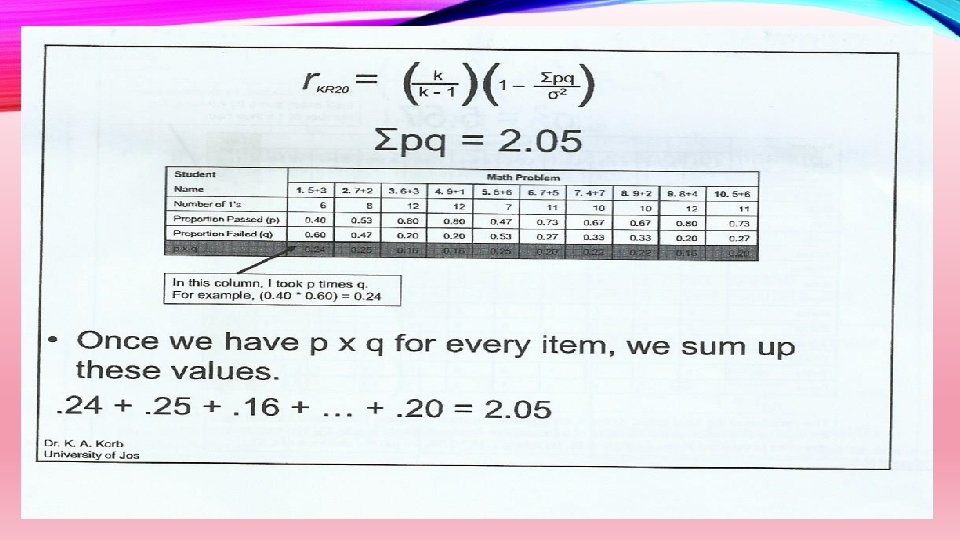

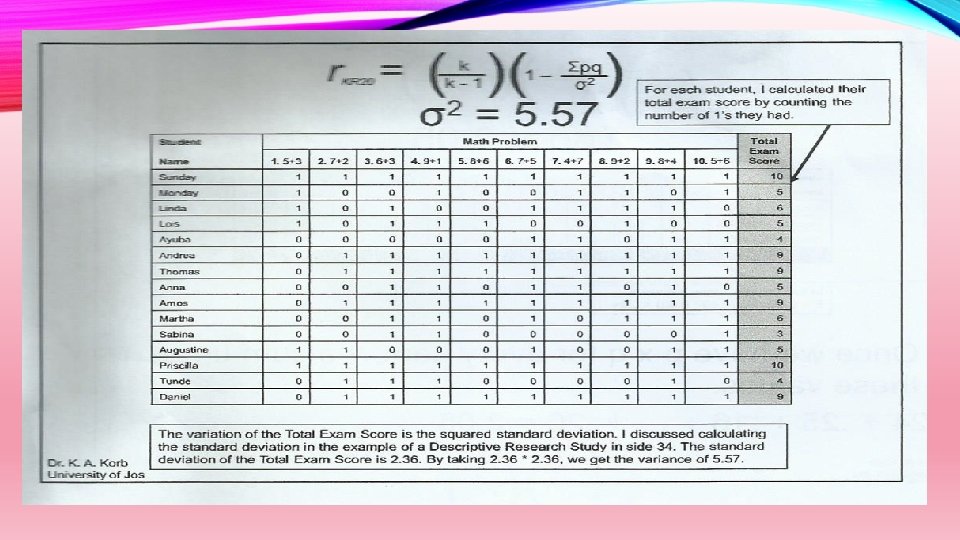

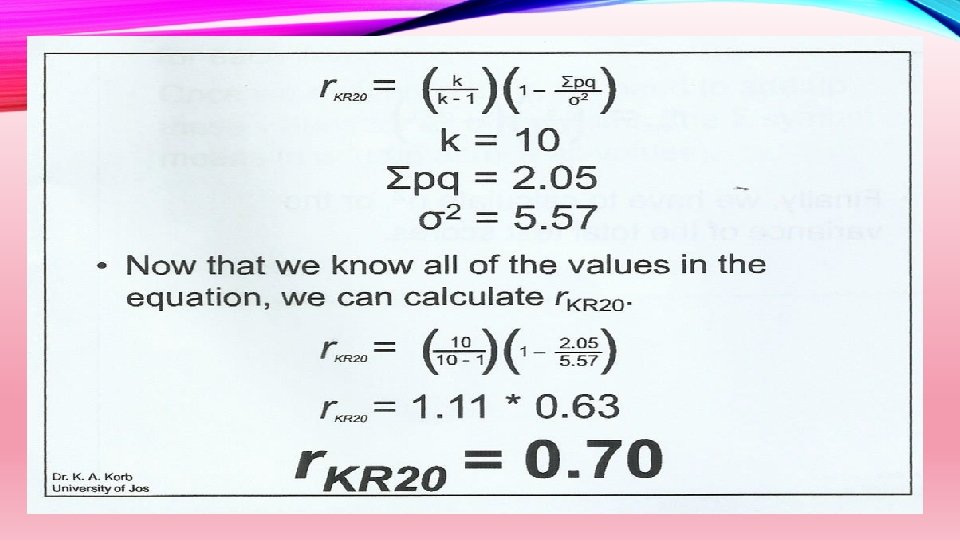

4. Internal Consistency Method
> It is used in psychological tests that consist of dichotomously scored items.
> The examinee either passes or fails an item: a rating of 1 (one) is assigned for a correct answer and 0 (zero) for an incorrect response. This reliability estimate is obtained using the Kuder-Richardson Formula 20 (KR-20).

4. Internal Consistency Method • Used to assess the consistency of results across items within a test. • Different questions, same construct.
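A minimal sketch of the Kuder-Richardson Formula 20 for items scored 1 (correct) or 0 (incorrect): KR-20 = (k / (k - 1)) × (1 - Σpq / σ²), where k is the number of items, p is the proportion of examinees who pass an item, q = 1 - p, and σ² is the variance of the total scores. Textbooks differ on whether σ² is the population or the sample variance; this sketch assumes the population variance. The data and function name are hypothetical.

```python
import statistics


def kr20(item_scores):
    """KR-20 for dichotomously scored items; item_scores holds one 0/1 list per student."""
    n_students = len(item_scores)
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("KR-20 needs at least two items")
    totals = [sum(row) for row in item_scores]
    variance = statistics.pvariance(totals)  # population variance of total scores (assumption)
    if variance == 0:
        raise ValueError("KR-20 is undefined when every student has the same total")
    sum_pq = 0.0
    for i in range(k):
        p = sum(row[i] for row in item_scores) / n_students  # proportion passing item i
        sum_pq += p * (1 - p)                                 # q = 1 - p fails item i
    return (k / (k - 1)) * (1 - sum_pq / variance)


# Hypothetical 1/0 answers of five students on six items
answers = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0, 1],
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
print(round(kr20(answers), 3))  # prints 0.884
```

Here Σpq = 1.2 and σ² = 4.56, so KR-20 = (6/5)(1 - 1.2/4.56) = 84/95.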

III. PRACTICABILITY
> means “feasible” as well as “usable”
> capable of being done, effected, or put into practice

FACTORS THAT DETERMINE PRACTICABILITY

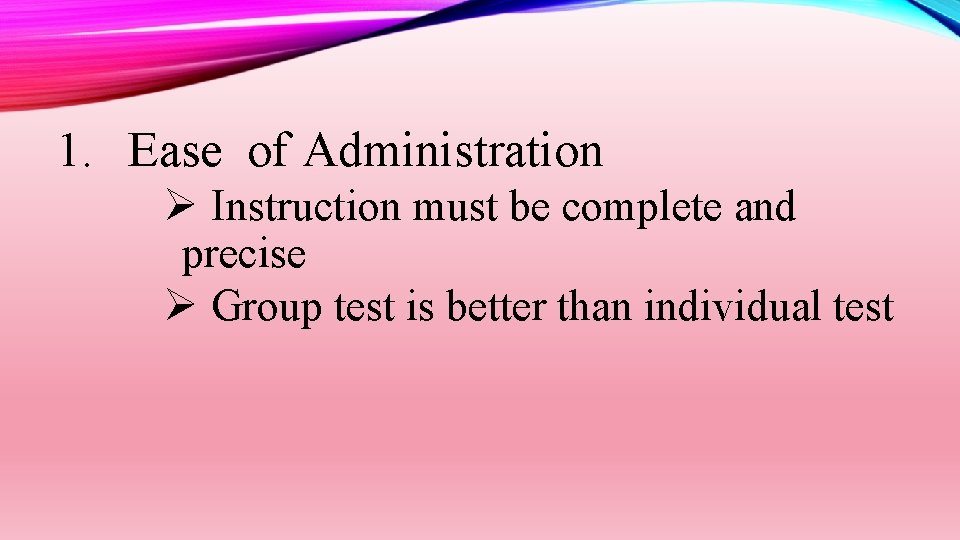

1. Ease of Administration
> Instructions must be complete and precise.
> A group test is better than an individual test.

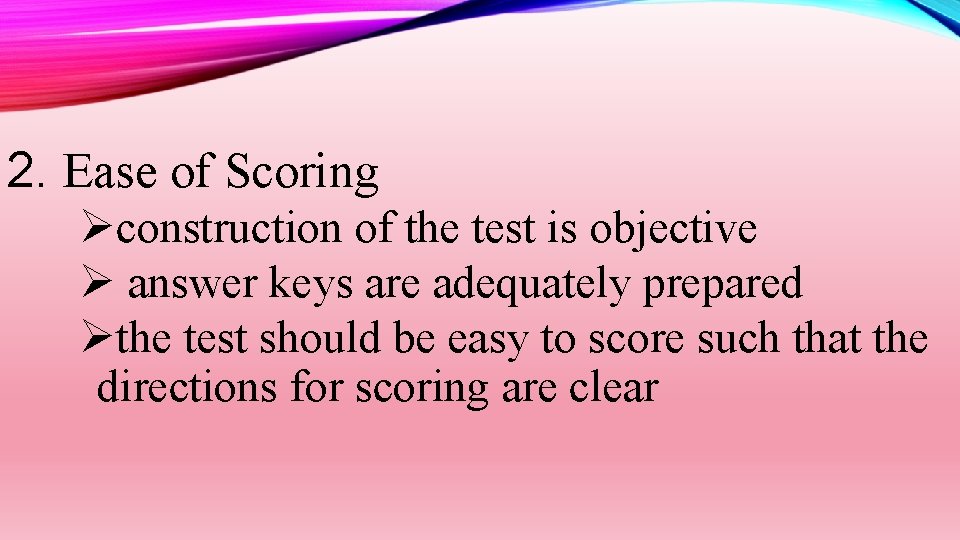

2. Ease of Scoring
> The construction of the test is objective.
> Answer keys are adequately prepared.
> The test should be easy to score, with clear directions for scoring.

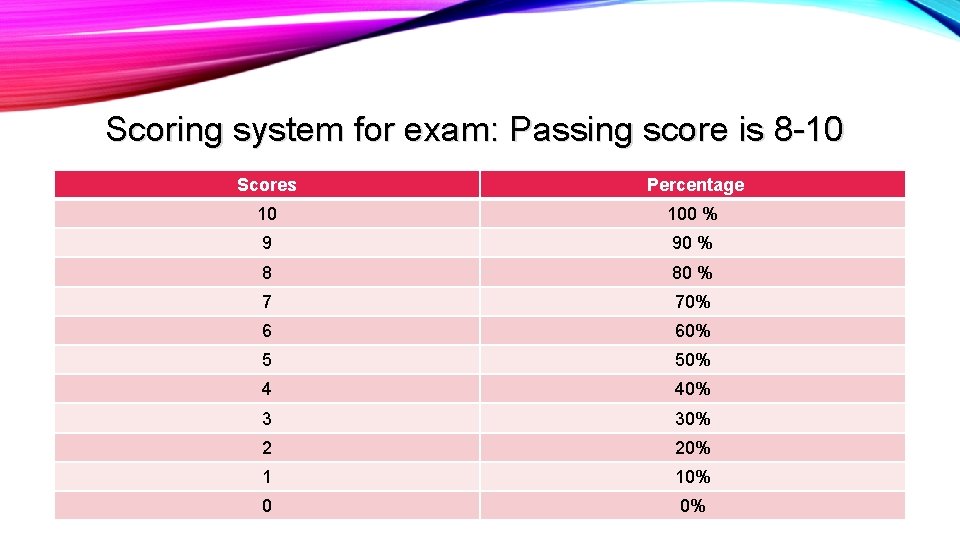

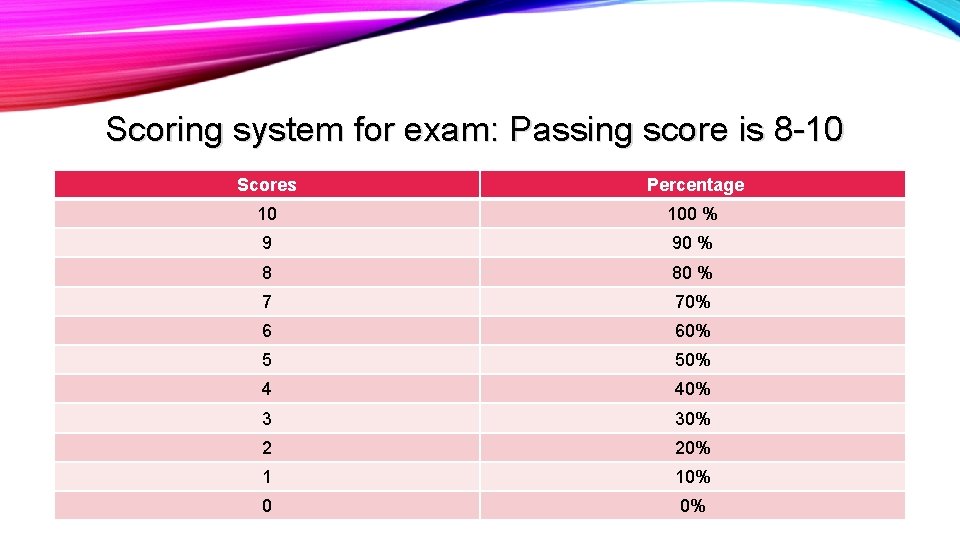

Scoring system for exam (passing score: 8 to 10)

| Score | Percentage |
|---|---|
| 10 | 100% |
| 9 | 90% |
| 8 | 80% |
| 7 | 70% |
| 6 | 60% |
| 5 | 50% |
| 4 | 40% |
| 3 | 30% |
| 2 | 20% |
| 1 | 10% |
| 0 | 0% |

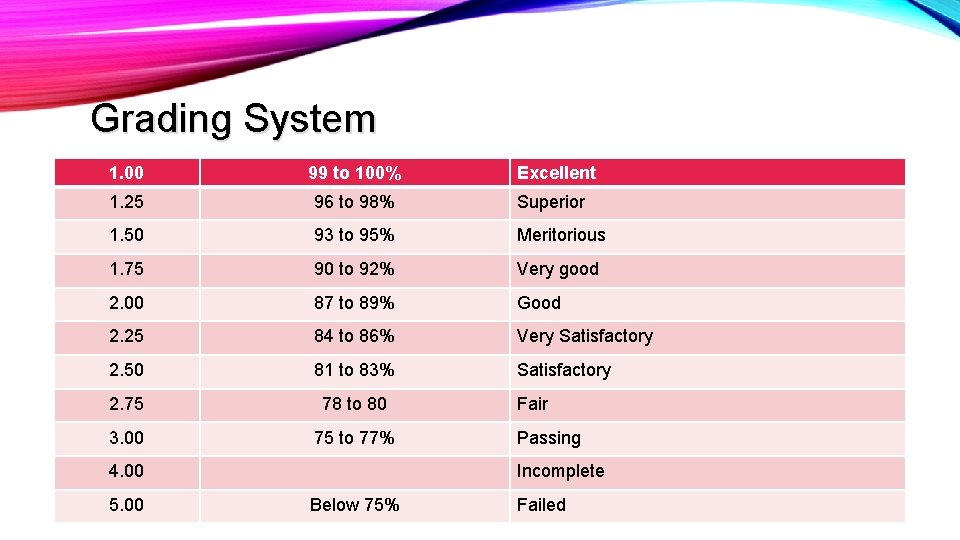

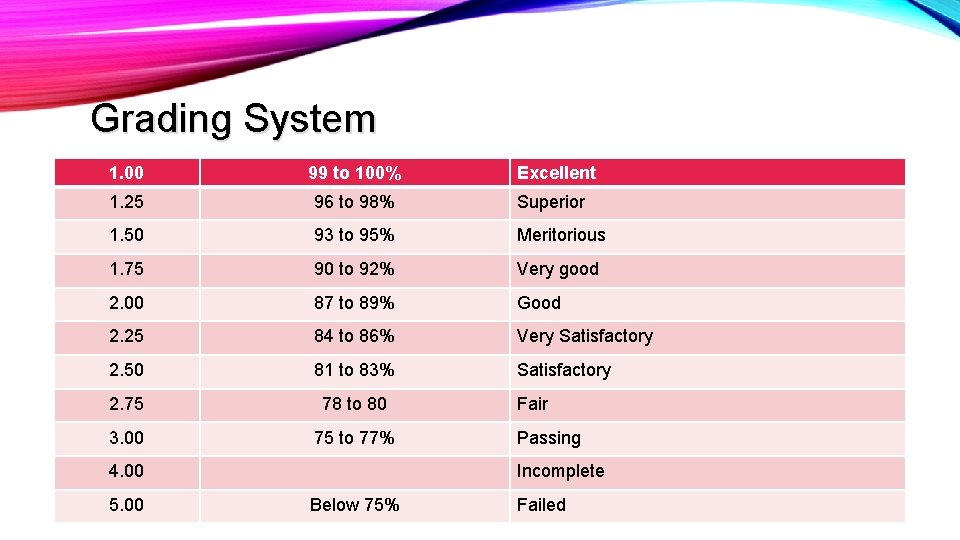

Grading System

| Grade | Percentage | Description |
|---|---|---|
| 1.00 | 99 to 100% | Excellent |
| 1.25 | 96 to 98% | Superior |
| 1.50 | 93 to 95% | Meritorious |
| 1.75 | 90 to 92% | Very Good |
| 2.00 | 87 to 89% | Good |
| 2.25 | 84 to 86% | Very Satisfactory |
| 2.50 | 81 to 83% | Satisfactory |
| 2.75 | 78 to 80% | Fair |
| 3.00 | 75 to 77% | Passing |
| 4.00 | | Incomplete |
| 5.00 | Below 75% | Failed |
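The scoring system and the grading system can be chained: a raw score on the 10-item exam becomes a percentage, and the percentage is looked up in the grading table. This sketch assumes each band's lower bound is inclusive (so a fractional value such as 98.5% falls in the 96 to 98% band) and leaves out 4.00 (Incomplete), which is not determined by a score. The function names are hypothetical.

```python
def percentage(score, items=10):
    """Convert a raw score on an exam with `items` items into a percentage."""
    return score * 100 / items


def passed_exam(score, passing_score=8):
    """Passing score on the 10-item exam is 8 to 10."""
    return score >= passing_score


# (lower bound of the percentage band, grade, description), from the grading system
GRADE_BANDS = [
    (99, 1.00, "Excellent"),
    (96, 1.25, "Superior"),
    (93, 1.50, "Meritorious"),
    (90, 1.75, "Very Good"),
    (87, 2.00, "Good"),
    (84, 2.25, "Very Satisfactory"),
    (81, 2.50, "Satisfactory"),
    (78, 2.75, "Fair"),
    (75, 3.00, "Passing"),
]


def grade_equivalent(percent):
    """Return (grade, description) for a percentage; anything below 75% is 5.00, Failed."""
    for lower, grade, description in GRADE_BANDS:
        if percent >= lower:
            return grade, description
    return 5.00, "Failed"


for score in (10, 8, 7):
    pct = percentage(score)
    print(score, pct, passed_exam(score), grade_equivalent(pct))
```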

3. Ease of Interpretation and Application
> All scores must be given equivalents from tables, without the need for computation.
> Score equivalents must be based on age and grade/year level.

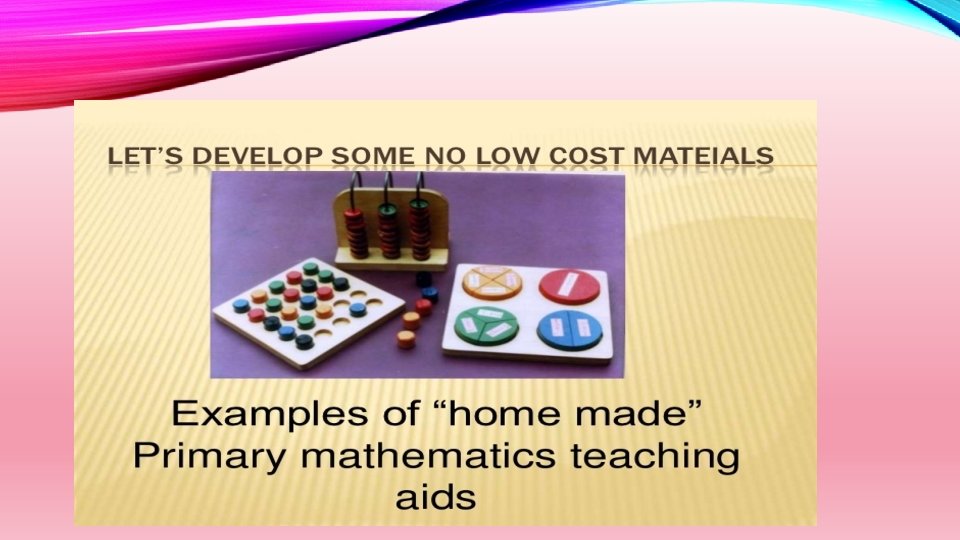

4. Low Cost
> Low-cost/no-cost materials are developed from waste materials and help teachers make teaching interesting and concrete.
> Low-cost/no-cost materials are teaching aids that cost nothing or are available cheaply, and they are developed from locally available resources.

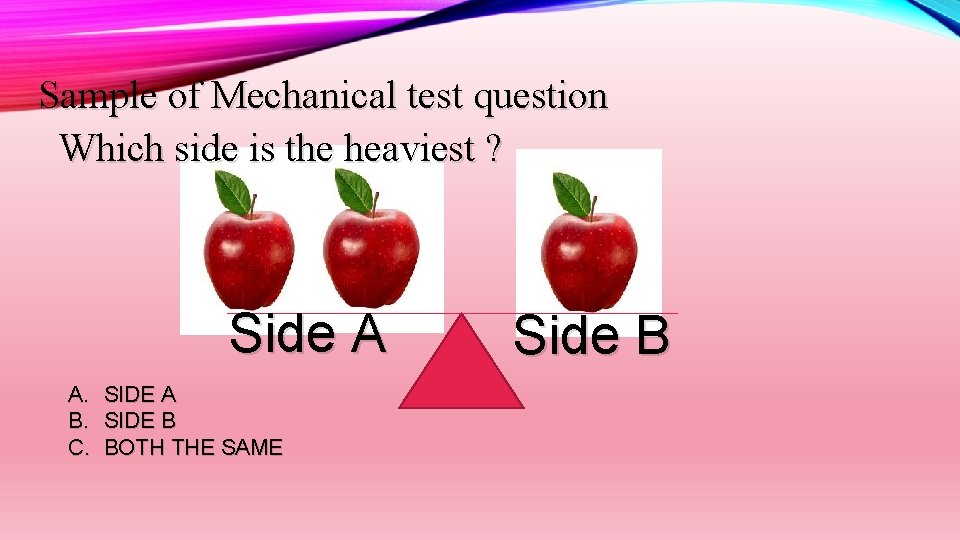

5. Proper Mechanical Make-up
> A good test must be printed clearly in a font size appropriate for the grade or year level.
> Pictures and illustrations must be provided for lower grades.

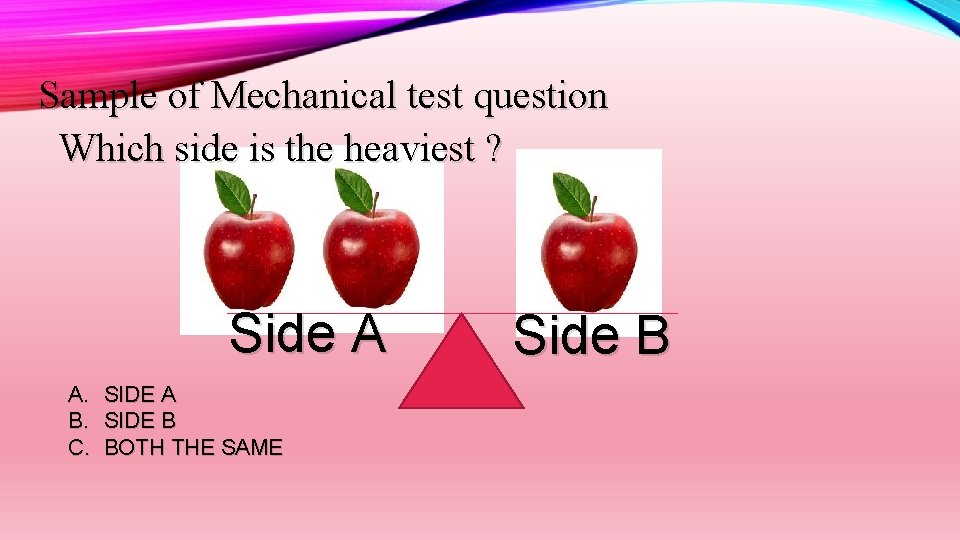

Sample mechanical test question: Which side is heavier? (illustration labeled Side A and Side B)
A. Side A
B. Side B
C. Both the same

IV. JUSTNESS
- It is the fourth characteristic of assessment methods.
- It is the degree to which the teacher is fair in assessing the grades of the students.

IV. JUSTNESS
- Learners must be informed of the criteria on which they are being assessed.
- If learners' achievements are assessed fairly and justly by teachers, they are inspired and their interest in studying hard is aroused.

V. MORALITY IN ASSESSMENT
- It is the fifth characteristic of assessment methods.
- It is the degree of secrecy of the learners' grades.
- Morality or ethics in assessment requires that test results or grades be kept confidential to avoid embarrassing slow learners.

V. MORALITY IN ASSESSMENT
- The names of learners who have high grades, honors, or places in the top ten must be published as a reward.
- The scores or grades of learners with low results must be kept confidential, and the parents of low achievers must be informed.

Thank You God Bless