

ASSESSMENT LITERACY Language Testing, Assessment and Evaluation JENNIFER RAMOS MAY 2015

Presentation Overview
• Terminology
• Assessment categories
• Assessment principles
• Skill-specific assessment
• Grading and rubrics
• Analyzing scores
• Assessment literacy

Workshop Goal: Improve Assessment Literacy
Workshop Outcomes:
• Develop a framework for designing language assessments
• Increase awareness of how to capture a student’s best performance
• Understand basic rules for traditional testing formats
• Expand methods used to assess language skills
• Gain a basic understanding of test analysis
Time for a pre-test!

Basic Terminology
• Test
• Assessment
• Evaluation

Test (in the context of language learning): a procedure or device designed to measure a person’s language knowledge or abilities. A test elicits a sample of language knowledge and needs to be a good representation of it. Keep in mind: a test is one form of assessment.

Assessment: a broad term for the way we document the knowledge and skills of our students. Assessments can be direct (evidence based) or indirect (inferred).
Direct:
• tests
• writing assignments
• projects
• portfolios
• presentations
• role-plays
Indirect:
• student’s perception of their progress
• questions like, “Do you understand?”
• attendance
• level of participation
• effort

Evaluation: a judgment on how well a student progresses and achieves the desired outcomes.
Example evaluations:
• “Student is weak in the area of oral communication. His knowledge of grammar is adequate but pronunciation and vocabulary are very poor.”
• “Student is not making sufficient progress to remain in the program.”
• “This student has made significant improvement.”
Assessments are tools which allow teachers to evaluate their students.

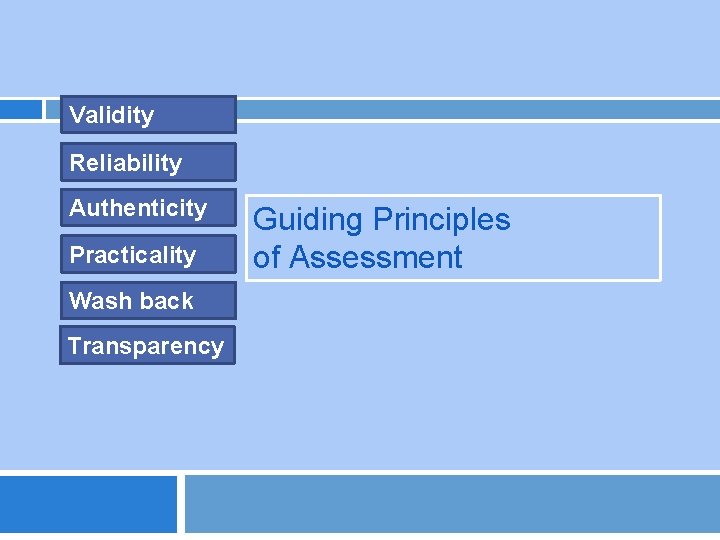

Guiding Principles of Assessment
• Validity
• Reliability
• Authenticity
• Practicality
• Washback
• Transparency

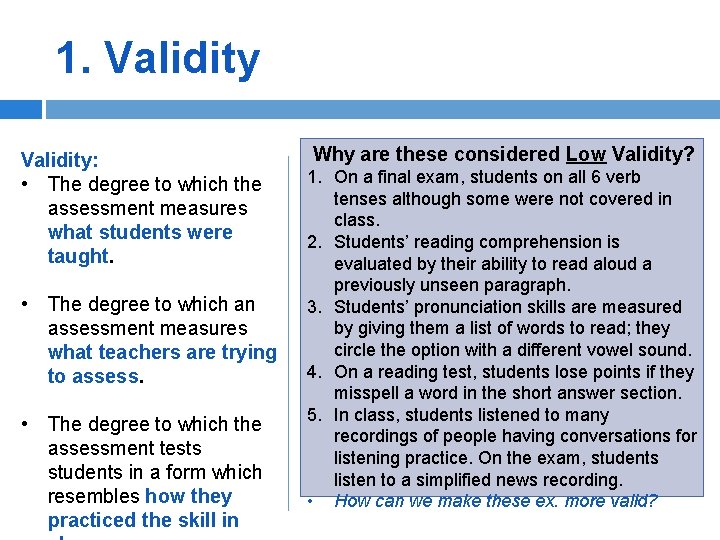

1. Validity:
• The degree to which the assessment measures what students were taught.
• The degree to which an assessment measures what teachers are trying to assess.
• The degree to which the assessment tests students in a form which resembles how they practiced the skill in class.
Why are these considered low validity?
1. On a final exam, students are tested on all 6 verb tenses although some were not covered in class.
2. Students’ reading comprehension is evaluated by their ability to read aloud a previously unseen paragraph.
3. Students’ pronunciation skills are measured by giving them a list of words to read; they circle the option with a different vowel sound.
4. On a reading test, students lose points if they misspell a word in the short answer section.
5. In class, students listened to many recordings of people having conversations for listening practice. On the exam, students listen to a simplified news recording.
• How can we make these examples more valid?

2. Reliability:
• The degree to which a test is administered and scored consistently
• The degree to which the data collected on the student’s abilities/knowledge is reliable
Factors that affect reliability:
• Test length: longer tests give more reliable data than shorter tests
• Scoring mechanisms (level of consistency/specificity)
• Instructions for the students
• Level of student stress
• Lighting, room temperature, noise, etc.

3. Transparency: the degree to which information about the test or other assessment is readily available to students, including:
• The dates of the test
• The approximate test length
• The material covered on the test
• The form of the test
• Point values for each question/rubrics for communicative tasks
• The weight of the test relative to the final grade
• Other grading criteria (for example, oral evaluation or written composition)
The element of surprise is not conducive to good testing conditions!

4. Washback: the degree to which a test or other assessment tool has a positive influence on teaching and learning
Low or negative washback: The teacher gives students their grades on the chapter test but does not show them which items they answered incorrectly. Students don’t know what area they are weak in. The teacher doesn’t review tests to see the overall performance of the class.
Positive washback: A teacher outlines exactly how he will evaluate students’ compositions (for example: grammar, spelling, punctuation, content, transition signals). Students carefully check all those areas, revise, and proudly submit their compositions.

5. Practicality: the degree to which a test or other assessment meets the following criteria:
• is not excessively expensive
• stays within appropriate time constraints
• is relatively easy to administer
• has a scoring procedure that is specific and time-efficient
How practical are these assessments?
A. A teacher records students’ 5-7 minute presentations. The teacher watches the recordings at home. The teacher has 25 students. Additionally, each student has to meet with the teacher to watch the video and give their own self-assessment.
B. On Monday morning, a teacher uses the language lab software to record his 25 students responding to a prompt. The teacher listens to the recordings (1-2 min each) at home and gives students written feedback on Wednesday.

6. Authenticity: the degree to which test situations reflect language use in authentic, real-world language situations.
Authentic language assessments:
• Focus on communication and comprehension
• Are closely related to what people actually do with language in the real world
• Are highly contextualized; authentic communication happens in a context, not in isolated sentences
• Present meaningful, relevant, interesting topics
• Provide some thematic organization to items, such as through a story line or episode

Authenticity
How can these assessments be made more authentic?
1. Oral evaluation: the tester states, “I’m from Vancouver.” The student has to formulate the question: “Where are you from?”
2. A teacher reads a newspaper article out loud to her students. Students then take a listening comprehension quiz.
3. On a grammar test on verb tenses, students are given the base form of 10 verbs and have to write the past participle for each.
4. To test pronunciation, the teacher reads a sentence. The student repeats it back. Example: “I have a pain in my ear.”
5. To test vocabulary, the teacher makes a multiple choice test. The vocabulary word is given as the item and 4 choices are given as options (A-D). The student has to identify the option that is most similar to the vocab item.

6 principles
High degree of:
• Validity
• Reliability
• Authenticity
• Transparency
• Practicality
• Washback
Bias for Best: set up optimal conditions to capture student ability

TYPES OF ASSESSMENTS
• Formative vs. Summative
• Achievement vs. Proficiency
• Performance-based

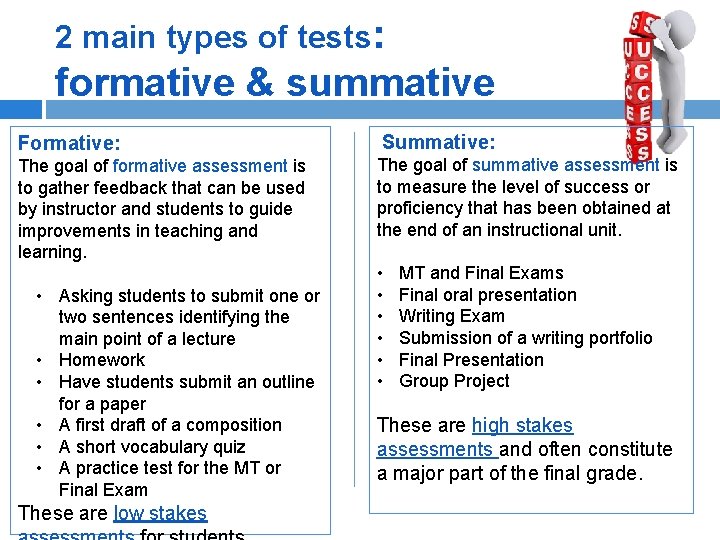

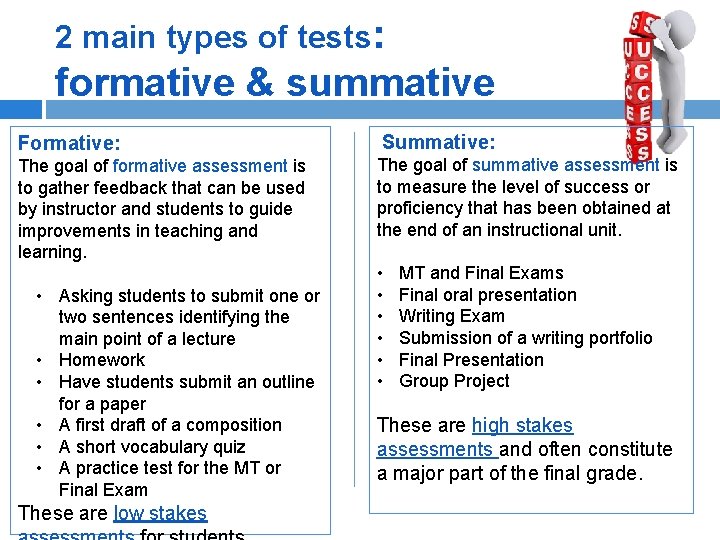

2 main types of tests: formative & summative
Formative: The goal of formative assessment is to gather feedback that can be used by instructor and students to guide improvements in teaching and learning.
• Asking students to submit one or two sentences identifying the main point of a lecture
• Homework
• Having students submit an outline for a paper
• A first draft of a composition
• A short vocabulary quiz
• A practice test for the midterm (MT) or final exam
These are low-stakes assessments.
Summative: The goal of summative assessment is to measure the level of success or proficiency that has been obtained at the end of an instructional unit.
• Midterm and final exams
• Final oral presentation
• Writing exam
• Submission of a writing portfolio
• Final presentation
• Group project
These are high-stakes assessments and often constitute a major part of the final grade.

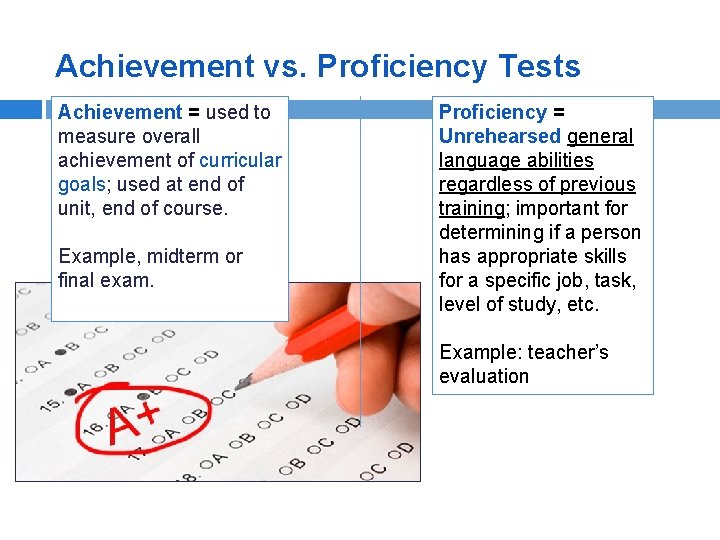

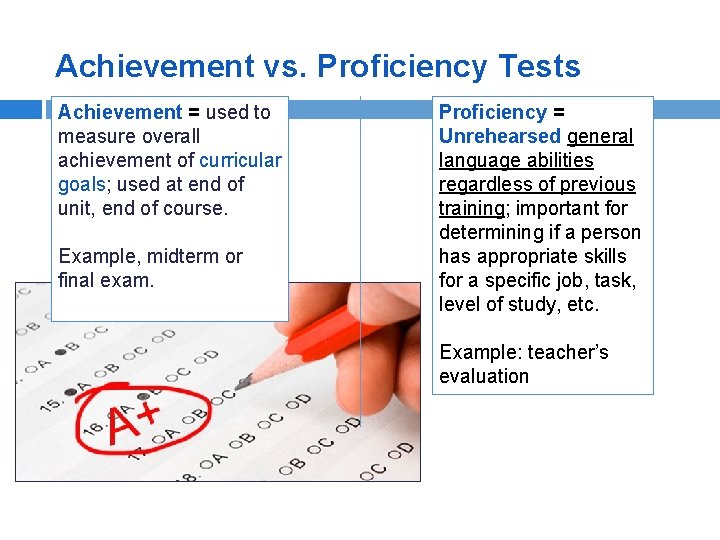

Achievement vs. Proficiency Tests
Achievement = used to measure overall achievement of curricular goals; used at the end of a unit or course. Example: midterm or final exam.
Proficiency = unrehearsed general language abilities regardless of previous training; important for determining whether a person has appropriate skills for a specific job, task, level of study, etc. Example: teacher’s evaluation.

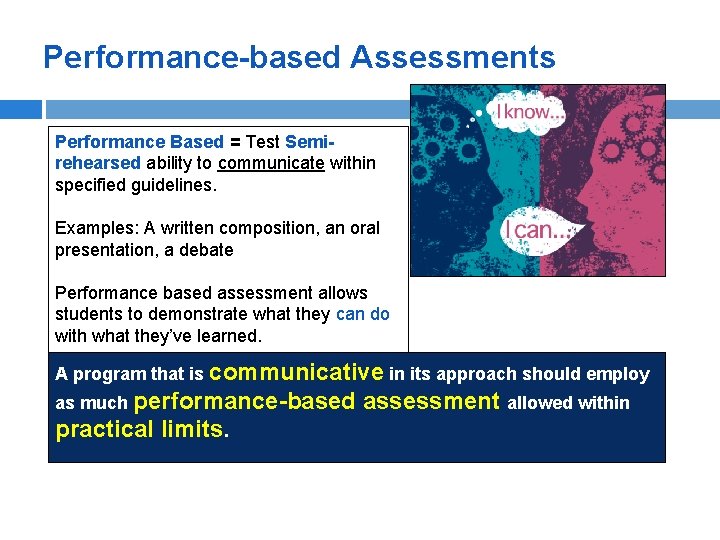

Performance-based Assessments
Performance-based test = semi-rehearsed ability to communicate within specified guidelines. Examples: a written composition, an oral presentation, a debate.
Performance-based assessment allows students to demonstrate what they can do with what they’ve learned. A program that is communicative in its approach should employ as much performance-based assessment as allowed within practical limits.

Design Considerations Handouts

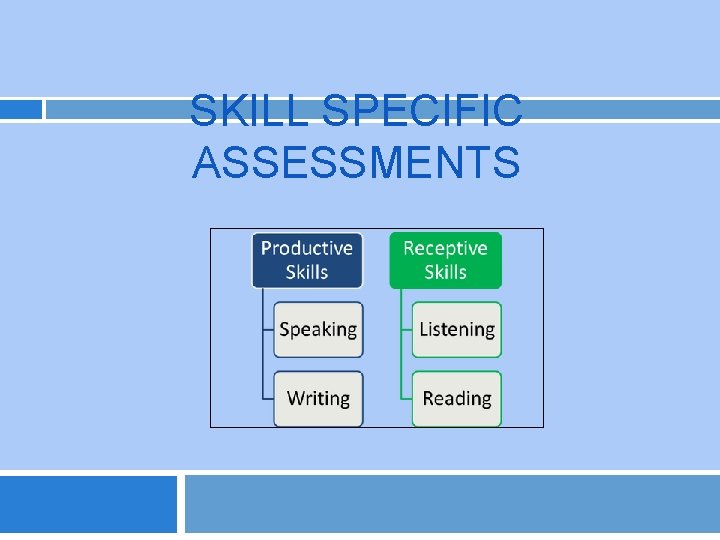

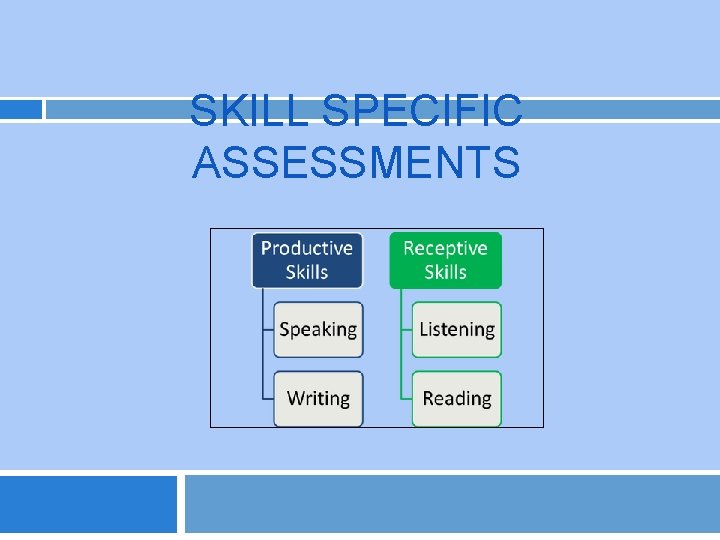

SKILL SPECIFIC ASSESSMENTS

Receptive Skills: listening, reading
• Evidence of listening and reading comprehension is ‘indirect’
• Comprehension is internal, not directly observable!
• Assessment happens through other skills (reading questions, writing responses, discussing content, etc.)
• To measure comprehension, a good sample of subskills must be tested!

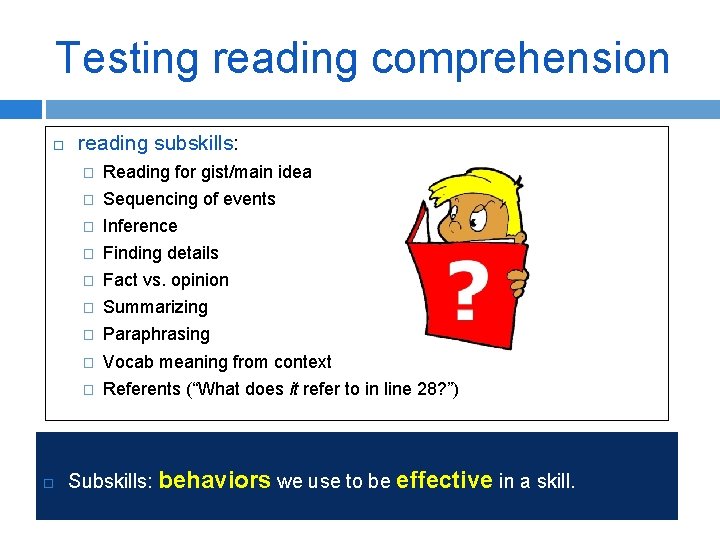

Testing reading comprehension
Subskills: behaviors we use to be effective in a skill.
Reading subskills:
• Reading for gist/main idea
• Sequencing of events
• Inference
• Finding details
• Fact vs. opinion
• Summarizing
• Paraphrasing
• Vocab meaning from context
• Referents (“What does it refer to in line 28?”)

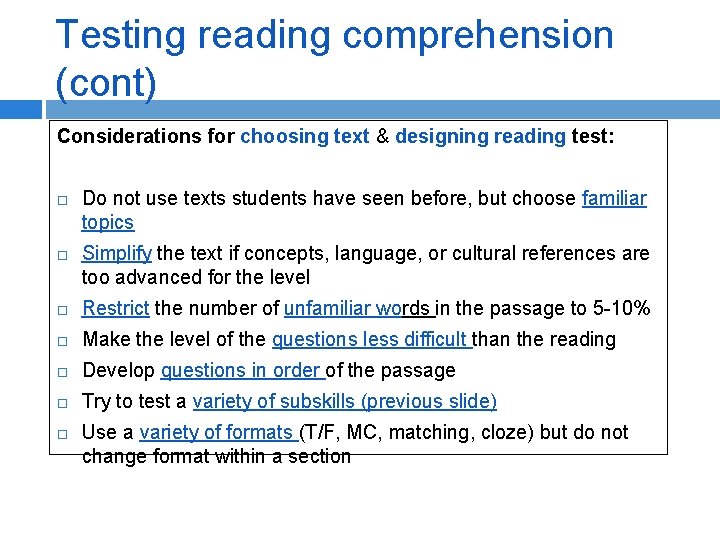

Testing reading comprehension (cont.)
Considerations for choosing a text & designing a reading test:
• Do not use texts students have seen before, but choose familiar topics
• Simplify the text if concepts, language, or cultural references are too advanced for the level
• Restrict the number of unfamiliar words in the passage to 5-10%
• Make the level of the questions less difficult than the reading
• Develop questions in the order of the passage
• Try to test a variety of subskills (previous slide)
• Use a variety of formats (T/F, MC, matching, cloze) but do not change format within a section
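The 5-10% guideline for unfamiliar words can be checked roughly before a text goes on a test. The following is a minimal sketch, assuming the teacher keeps a list of words the class already knows. The function name `unfamiliar_share`, the `known_words` set, and the sample sentence are all hypothetical, and counting every running word (rather than each distinct word once) is an assumption the slides do not specify.

```python
import re


def unfamiliar_share(text, known_words):
    """Return the fraction of running words in `text` not found in `known_words`."""
    # Lowercase the text and keep only alphabetic tokens (apostrophes allowed).
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0
    unknown = [token for token in tokens if token not in known_words]
    return len(unknown) / len(tokens)


# Hypothetical sample passage and class word list, for illustration only.
sample_text = "The cat sat on the mat near the ancient lighthouse."
known_words = {"the", "cat", "sat", "on", "mat", "near", "ancient"}

share = unfamiliar_share(sample_text, known_words)
print(f"Unfamiliar words: {share:.0%}")  # 1 of 10 running words is unknown
```

A share above roughly 0.10 would suggest simplifying the passage or choosing another text; the exact cutoff remains the teacher's call.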

Listening comprehension
Common listening assumptions:
• Develops automatically
• Can’t be taught
• Tested exactly like reading comp.
What the research says about listening:
• Active cognitive process
• We receive & construct meaning from spoken messages
• Essential for developing other language skills
• Don’t expect complete comprehension
• Can be tested through microskills

Testing listening comprehension
• Should be set in context, not one-word utterances
• Task should reflect real-world situations (when do you listen for gist, when do you listen for details, when do you write down info word for word, etc.?)
• Play recording 1x for gist
• Play recording 2x for detailed info
• Use a variety of task types
• Audio should be around 2-3 minutes (if longer, give in chunks) to avoid testing memory
• Do not test content from the first 15-20 seconds of the recording
• Place emphasis on meaning over trivial details
• Students should know 90-95% of words to understand a script
• Try to frame the context of the listening for the students: “You are going to listen to Mr. and Mrs. Rollins, a couple from New York, talk about their summer plans. Listen to their conversation and then answer the questions.”
• Lexical overlap (same words in script/questions) makes the test easier

Listening subskills
Listening comprehension subskills & structures to test them:
• Phonemic discrimination: Students hear, “Jose plans to buy a new ship this week.” Students circle the correct word: Jose plans to buy a new ship/sheep this week. SHOULD BE IN THE CONTEXT OF A SENTENCE, NOT AN ISOLATED WORD
• Paraphrase recognition: Students hear, “Unless James has purchased tickets in advance, he won’t be able to go to the concert.” We can infer that:
A. Tickets to the concert are no longer available
B. James has purchased his tickets already
C. James is not going to the concert

Listening subskills (cont.)
Listening comprehension subskills & methods to test them (cont.):
• MC, TF: general content (gist and meaningful info)
• Cloze (fill in the blank): specific lexical items (meaning, not function)
• Dictation (3x): (try to keep it authentic)
• Information transfer (example: students hear a job interview in progress and fill out the candidate’s job application): testing for details; biographical
• Take notes on a graphic organizer: note-taking
• Circle the correct photo (or other visual; great for beginners) *low skill contamination

Productive Skills: Speaking/writing
When assessing productive skills, keep in mind:
• Productive skills can be directly observed
• Choose tasks appropriate to the student’s level (ex: simple paragraph for beginners but an extended essay for advanced students)
• Set consistent grading criteria (grading rubrics)
• Be sure assessments reflect curricular outcomes
• Set consistent test conditions (ex: no dictionaries; in-class essay, 60 minutes)

Assessing Speaking
1. Grammatical Competence:
• grammar
• vocabulary
• pronunciation (basic sounds of letters and syllables, intonation and stress)
2. Discourse Competence: meaningful, logical communication
3. Sociolinguistic Competence: knowledge of what is socially and culturally expected in the L2
4. Strategic Competence: speakers know
• how to take turns
• how to keep a conversation going
• how to end a conversation, etc.
• how to resolve communication breakdowns

Assessing Speaking (cont.)
Speaking assessment techniques:
• Picture (or other visual) cue: student answers questions
• Prepared oral presentation/monologue (*assesses rehearsed speech, not automatized language)
• Role play
• Conversation prompts
• Information gap activity (partners have different pieces of information and have to ask each other to complete a form)
• Debate
• Retelling a story
*SCORING RUBRICS ARE EXTREMELY IMPORTANT IN SPEAKING ASSESSMENT

Assessing Writing: indirect vs. direct
Indirect:
• MC, cloze formats
• Sentence-level constructions
• Tests spelling, vocab, grammar, and mechanics knowledge
Direct:
• Production of written texts
• Students produce content, organize, and use vocab, spelling, and syntax
• In line with curricular goals
• In line with the communicative approach

Assessing Writing
Types of writing assessments: Snapshot vs. Portfolio
Prompts:
• Written
• Visual
Good prompt characteristics:
• Generates the type of writing you want to assess
• Doesn’t require background knowledge (e.g., about global warming)
• Meaningful and relevant topic
• Unambiguous
• Unbiased language
• Doesn’t tap into sensitive topics (religion, morals, etc.)

Assessing Grammar
Why do tests focus heavily on grammar?
• It’s practical for test-makers and lends itself well to MC questions
• It’s ‘traditional’: the old grammar-translation approach
• It’s based on rules; objective
• Grammatical competence is important in all 4 skills: RWLS

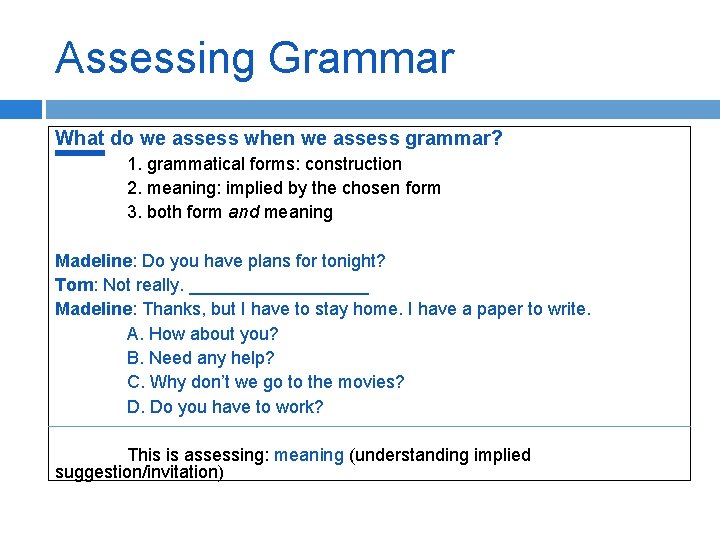

Assessing Grammar
What do we assess when we assess grammar?
1. grammatical forms: construction
2. meaning: implied by the chosen form
3. both form and meaning
Madeline: Do you have plans for tonight?
Tom: Not really. _________
Madeline: Thanks, but I have to stay home. I have a paper to write.
A. How about you?
B. Need any help?
C. Why don’t we go to the movies?
D. Do you have to work?
This is assessing: meaning (understanding implied suggestion/invitation)

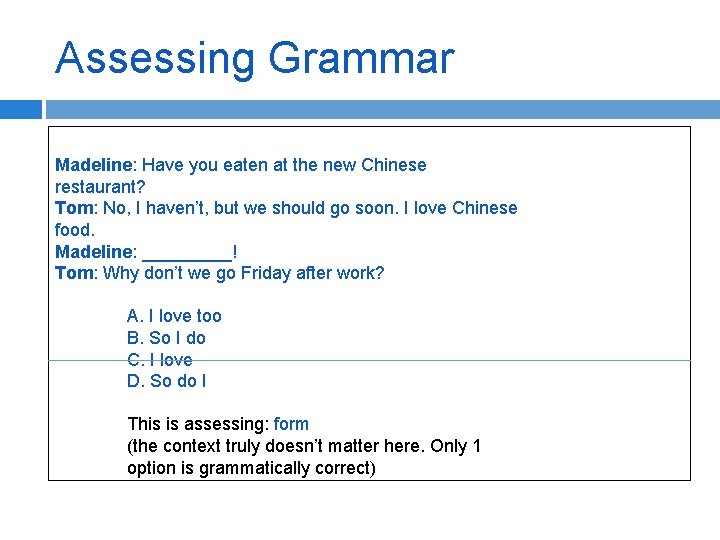

Assessing Grammar
Madeline: Have you eaten at the new Chinese restaurant?
Tom: No, I haven’t, but we should go soon. I love Chinese food.
Madeline: _____!
Tom: Why don’t we go Friday after work?
A. I love too
B. So I do
C. I love
D. So do I
This is assessing: form (the context truly doesn’t matter here; only 1 option is grammatically correct)

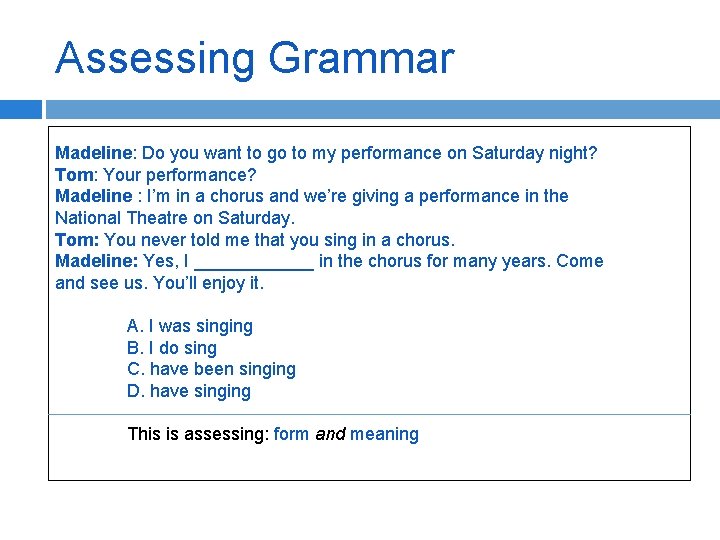

Assessing Grammar
Madeline: Do you want to go to my performance on Saturday night?
Tom: Your performance?
Madeline: I’m in a chorus and we’re giving a performance in the National Theatre on Saturday.
Tom: You never told me that you sing in a chorus.
Madeline: Yes, I ______ in the chorus for many years. Come and see us. You’ll enjoy it.
A. was singing
B. do sing
C. have been singing
D. have singing
This is assessing: form and meaning

Grammar test items
• MC
• Cloze (gap fill): controlled vs. open
• Visual: Circle the picture that expresses the following sentence: She was interviewed.

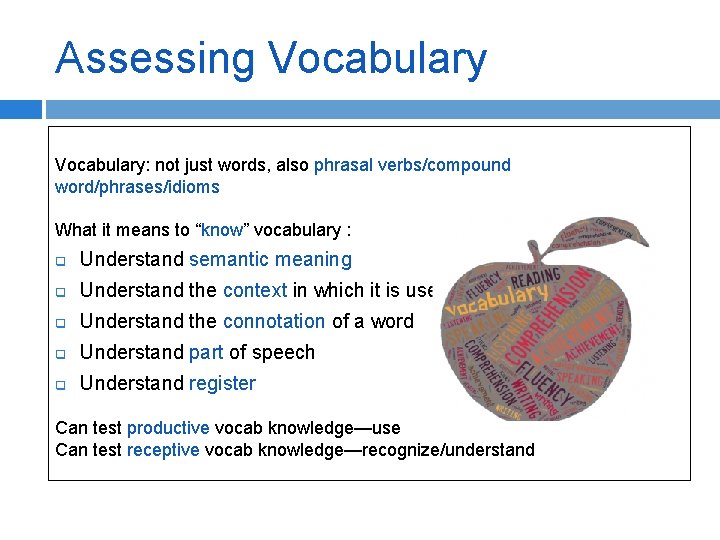

Assessing Vocabulary: not just words, but also phrasal verbs, compound words, phrases, and idioms
What it means to “know” vocabulary:
• Understand semantic meaning
• Understand the context in which it is used
• Understand the connotation of a word
• Understand part of speech
• Understand register
Can test productive vocab knowledge: use
Can test receptive vocab knowledge: recognize/understand

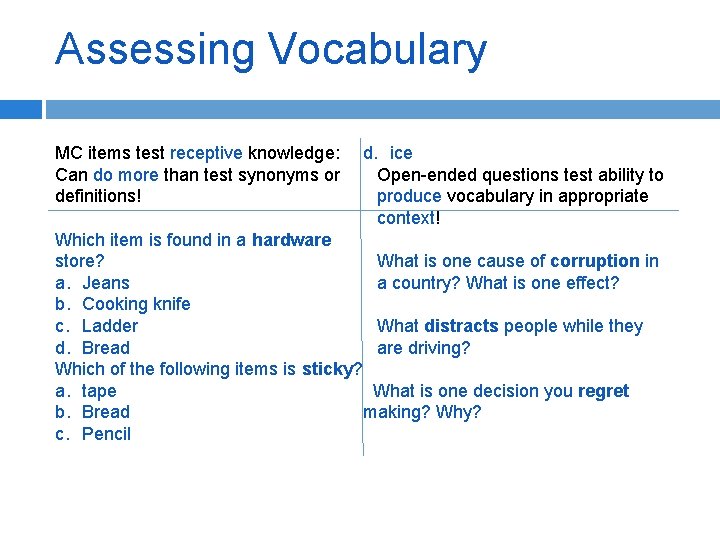

Assessing Vocabulary
MC items test receptive knowledge. They can do more than test synonyms or definitions!
Which item is found in a hardware store?
a. Jeans
b. Cooking knife
c. Ladder
d. Bread
Which of the following items is sticky?
a. Tape
b. Bread
c. Pencil
d. Ice
Open-ended questions test the ability to produce vocabulary in an appropriate context!
• What is one cause of corruption in a country? What is one effect?
• What distracts people while they are driving?
• What is one decision you regret making? Why?

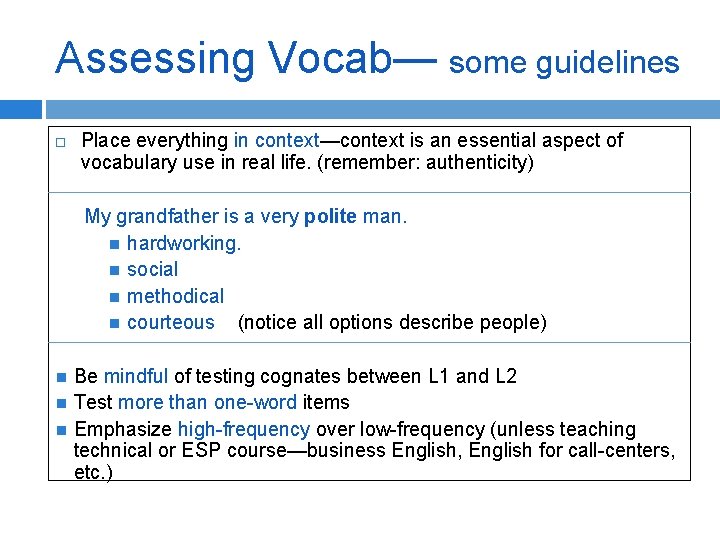

Assessing Vocab: some guidelines
• Place everything in context; context is an essential aspect of vocabulary use in real life (remember: authenticity). Example: My grandfather is a very polite man. Options: hardworking, social, methodical, courteous (notice all options describe people)
• Be mindful of testing cognates between L1 and L2
• Test more than one-word items
• Emphasize high-frequency over low-frequency vocabulary (unless teaching a technical or ESP course: business English, English for call centers, etc.)

Grading & Analysis
• Rubrics
• Means
• Standard Deviation
• Item Analysis

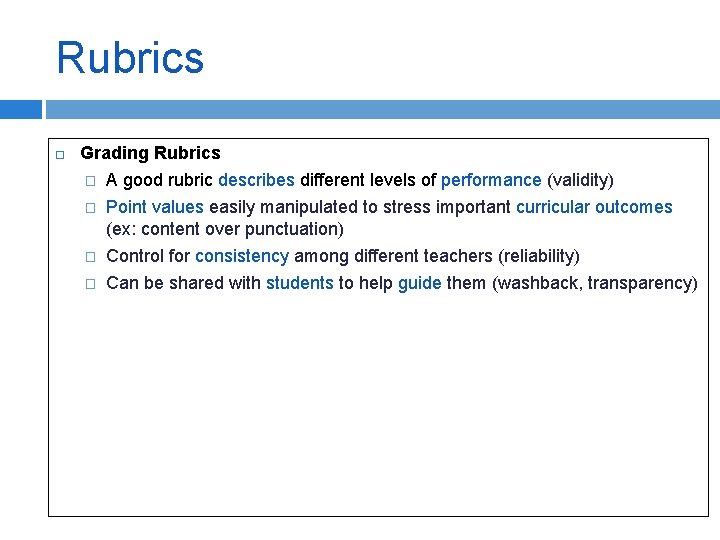

Grading Rubrics
• A good rubric describes different levels of performance (validity)
• Point values can be easily manipulated to stress important curricular outcomes (ex: content over punctuation)
• Controls for consistency among different teachers (reliability)
• Can be shared with students to help guide them (washback, transparency)

Analyzing the results
Calculating the mean: the mathematical average of all scores
1. Sum of all students’ scores / number of students = mean
2. A mean score of 70-79 is a good indicator of test validity
Standard deviation (SD): how closely scores are distributed around the mean
How to calculate SD:
1. Find the mean
2. Take each score, subtract the mean from it, and square the difference
3. Add up all the numbers from step 2, divide by the number of students, and take the square root
4. Don’t worry, Exam View Pro does this for you! There are also online SD calculators!
5. For our program, we want a narrow SD; theoretically, all students should be mastering the curriculum
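The mean and SD steps on this slide can be sketched in a few lines of Python. This is a minimal illustration, not the method used by any particular grading tool. The scores and the function names `mean_score` and `standard_deviation` are hypothetical. Because the slide divides by the number of students, the sketch computes the population SD; a sample SD would divide by the number of students minus one.

```python
import math
import statistics


def mean_score(scores):
    """Add the scores for all students and divide by the number of students."""
    return sum(scores) / len(scores)


def standard_deviation(scores):
    """Population SD, following the steps on the slide."""
    mean = mean_score(scores)                                 # step 1: find the mean
    squared_diffs = [(score - mean) ** 2 for score in scores]  # step 2: square each difference
    return math.sqrt(sum(squared_diffs) / len(scores))         # step 3: average, then square root


# Hypothetical test scores for a very small class.
scores = [70, 80, 70, 80]

print(mean_score(scores))          # 75.0
print(standard_deviation(scores))  # 5.0

# Python's standard library gives the same population SD.
print(statistics.pstdev(scores))
```

With these scores the mean falls in the 70-79 range the slide mentions, and an SD of 5 points indicates that scores sit close to the mean.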

Analyzing the results
Item analysis: gives you the level of difficulty of a question
Number of students who answered correctly / number of students
For example: 23 students answered correctly / 25 students in class = 0.92 (low level of difficulty)
A test should be composed of the following:
• 30% low level of difficulty
• 40% medium level of difficulty
• 30% high level of difficulty
Goldilocks Theory!
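The item-difficulty ratio and the 30/40/30 target can be checked with a short script. This is a rough sketch under stated assumptions: the slides do not say where "low", "medium", and "high" difficulty begin, so the cutoffs `EASY_CUTOFF` and `HARD_CUTOFF` below are hypothetical, as are the function names and the sample counts.

```python
from collections import Counter

# Hypothetical cutoffs; the slides do not define the difficulty bands.
EASY_CUTOFF = 0.80  # at least 80% correct counts as low difficulty
HARD_CUTOFF = 0.40  # at most 40% correct counts as high difficulty


def item_difficulty(num_correct, num_students):
    """Proportion of students who answered the item correctly."""
    if num_students <= 0:
        raise ValueError("num_students must be positive")
    return num_correct / num_students


def difficulty_band(proportion_correct):
    """Label an item as low, medium, or high difficulty."""
    if proportion_correct >= EASY_CUTOFF:
        return "low"
    if proportion_correct <= HARD_CUTOFF:
        return "high"
    return "medium"


def band_shares(correct_counts, num_students):
    """Share of test items that fall into each difficulty band."""
    bands = Counter(
        difficulty_band(item_difficulty(count, num_students))
        for count in correct_counts
    )
    total = len(correct_counts)
    return {band: bands[band] / total for band in ("low", "medium", "high")}


# The slide's example: 23 of 25 students answered correctly.
print(item_difficulty(23, 25))  # 0.92

# Hypothetical correct-answer counts for a 10-item test in a class of 25.
correct_counts = [23, 22, 21, 15, 14, 13, 12, 9, 8, 5]
print(band_shares(correct_counts, 25))
```

Comparing the resulting shares with the 30% / 40% / 30% target shows at a glance whether a test leans too easy or too hard.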

Final Thought
“Used with skill, assessment can motivate the reluctant, revive the discouraged, and thereby increase, not simply measure, achievement.”

Sources:
• Chappuis, Jan. Classroom Assessment for Student Learning: Doing It Right, Using It Well. Upper Saddle River, NJ: Pearson Education, 2012. Print.
• Coombe, Christine A., Keith S. Folse, and Nancy J. Hubley. A Practical Guide to Assessing English Language Learners. Ann Arbor, MI: U of Michigan, 2007. Print.
• Brown & Abeywickrama. Language Assessment: Principles and Classroom Practices, 2nd Edition. Pearson Longman, 2010, pp. 25-52.
• "Oral Language Assessment." Reading Assessment Linking Language, Literacy, and Cognition (2012): 13961. Web.

Thank you for your attention! Any questions? Now let’s practice!