Assessing Science Comprehension Two Extremes Danielle S Mc

- Slides: 21

Assessing Science Comprehension: Two Extremes
Danielle S. McNamara
Promoting Active Reading Strategies to Improve Students’ Understanding of Science

A little about our project:
• Examined SERT in high schools
  – Self-Explanation Reading Training
• Created iSTART
  – Web-delivered automated version of SERT with training delivered by automated agents
• Examining effects of iSTART in high schools and preparing for scale-up

What I could have shared (re: instrumentation, that is):
• Prior knowledge assessment
  – Use general measures of science knowledge using multiple-choice questions
  – Text-specific or topic-specific measures using open-ended questions
• Reading strategy knowledge assessment
  – MSI, MARSI
• Comprehension assessment
  – Multiple-choice, open-ended, protocols
  – Sharing methodologies, not instruments

Generation and Evaluation of Multiple-Choice Questions
Yasuhiro Ozuru, Mike Rowe, Kyle Dempsey, Jayme Sayroo
University of Memphis
This work is part of a study with 3rd and 4th grade children, funded by IES. But it’s relevant to all of our work assessing text comprehension using MCQs.

INTRODUCTION
Multiple-choice questions (MCQs) are common and useful for measuring reading comprehension because:
• They are easy to score
• They are less influenced by the subjects’ production skill
However, constructing good MCQs is often challenging because it requires careful selection of distracter options appropriate for the type of comprehension processes to be measured.

History
• PIs created the first round of MCQs for a study with 3rd and 4th grade children.
• Ceiling and floor effects.
• Postdocs and graduate students revised the questions and derived rules for the creation of questions and stems, and the assessment of their characteristics.

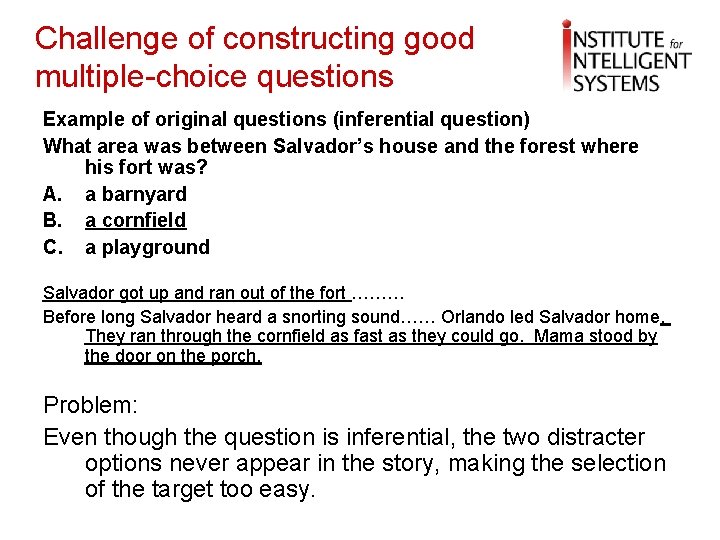

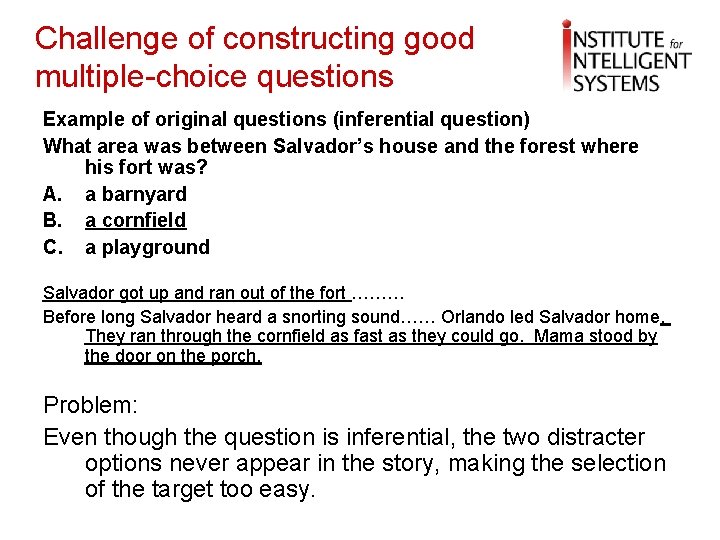

Challenge of constructing good multiple-choice questions
Example of original questions (inferential question):
What area was between Salvador’s house and the forest where his fort was?
A. a barnyard B. a cornfield C. a playground
Salvador got up and ran out of the fort ……… Before long Salvador heard a snorting sound…… Orlando led Salvador home. They ran through the cornfield as fast as they could go. Mama stood by the door on the porch.
Problem: Even though the question is inferential, the two distracter options never appear in the story, making the selection of the target too easy.

Data collection based on original items
• Ceiling effects
  – Four of the 12 questions were answered correctly by more than 85% of the 3rd graders.
  – At least 1 item was answered correctly by 100%.
• Range restriction and skewed distributions
• Correlations between measures of knowledge, reading decoding, and reading comprehension were unexpectedly weak.
• Experimental effects of cohesion and reader aptitudes were limited.

Guidelines for constructing reading comprehension multiple-choice questions
To address the problems with the original questions, we constructed the following two sets of guidelines:
1) A guideline for classifying question types in terms of the depth of comprehension
2) Guidelines for selecting appropriate distracter options

Classifying question types
Two types of local questions:
• Local text-based questions
• Local inference-based questions
Two types of global questions:
• Global text-based questions
• Global inference-based questions

Standardizing the distracter options
The criteria for selecting distracter options:
• Near-miss distracter: The main idea or the word itself appears in the text, but in a context other than the section that the question taps. The distracter does not contradict the idea of the overall story or the target area of the text.
• Thematic distracter: The main idea or word of this answer option does not directly appear in the text but is consistent with themes of the text and highly probable given the situation or topic described by the stem.
• Unrelated distracter: This distracter must have a chance of being selected by a person who did not read the text, but it should be the first one eliminated by someone who has actually read the text.
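One way to keep the two guidelines attached to each item is to record the question type and the role of every answer option alongside the item text. The sketch below is only an illustration, not the authors' tooling: the class names (`Scope`, `Basis`, `OptionType`, `Option`, `Item`) and the `problems` check are hypothetical, and the rule "exactly one option of each type" is an assumption drawn from the four-option examples in this deck. The option roles for the Salvador item are taken from the revised example shown later in the deck; its local/global label is a guess.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import List


class Scope(Enum):
    LOCAL = "local"
    GLOBAL = "global"


class Basis(Enum):
    TEXT_BASED = "text-based"
    INFERENCE_BASED = "inference-based"


class OptionType(Enum):
    TARGET = "target"
    NEAR_MISS = "near-miss"
    THEMATIC = "thematic"
    UNRELATED = "unrelated"


@dataclass
class Option:
    label: str
    text: str
    kind: OptionType


@dataclass
class Item:
    stem: str
    scope: Scope
    basis: Basis
    options: List[Option]

    def problems(self) -> List[str]:
        """Return a list of problems; an empty list means the item passes."""
        counts = Counter(option.kind for option in self.options)
        issues = []
        # Assumed rule: one target plus one distracter of each type.
        for kind in OptionType:
            if counts[kind] != 1:
                issues.append(
                    f"expected exactly one {kind.value} option, found {counts[kind]}"
                )
        return issues


revised = Item(
    stem="What was between Salvador's house and his fort?",
    scope=Scope.LOCAL,            # assumption: the slides do not say local or global
    basis=Basis.INFERENCE_BASED,  # the original version is described as inferential
    options=[
        Option("A", "the fence", OptionType.THEMATIC),
        Option("B", "the cornfield", OptionType.TARGET),
        Option("C", "the barn", OptionType.UNRELATED),
        Option("D", "the forest", OptionType.NEAR_MISS),
    ],
)
print(revised.problems())
```

Keeping the option roles in the data makes the later distracter analysis straightforward, since responses can be tallied by role rather than by letter.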

Additional guideline for text-based questions
Text-base versus surface-code questions: Whereas text-base items tap the reader’s understanding of information explicitly stated in the text, surface-code questions tap verbatim memory of the text.
Avoid surface-code questions by:
- avoiding questions about a specific name, location, or item in a subordinate category
- using paraphrase as opposed to verbatim content
- avoiding conceptually similar distracters (e.g., a river and a stream)

Piloting the MCQ items
Each item was evaluated against the following three criteria:
• Mean: evaluation of ceiling/floor effects (ceiling: > 85%; floor: < 20%).
• Item-total correlation: evaluation of whether performance on a particular item predicts performance measured with all items. A small or negative correlation indicates failure to predict overall performance, or inconsistency.
• Distracter performance as a function of overall scores: classifying subjects into high, medium, and low performance groups, and analyzing what percentage of each group chose each type of answer option.
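The three criteria can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the function names and the toy responses are made up, the item-total correlation here uses the total over all items (including the item itself, as the slide describes; excluding the item is a common variant), and the three performance groups are formed by splitting subjects into thirds by total score, which the slides do not specify.

```python
import numpy as np

CEILING, FLOOR = 0.85, 0.20  # thresholds quoted on the slide


def score(responses, key):
    """Return a subjects x items 0/1 matrix (1 = chosen option matches the key)."""
    return (np.asarray(responses) == np.asarray(key)).astype(int)


def item_means(scores):
    """Proportion correct per item, with ceiling and floor flags."""
    means = scores.mean(axis=0)
    return means, means > CEILING, means < FLOOR


def item_total_correlations(scores):
    """Pearson correlation of each item with the total score over all items."""
    total = scores.sum(axis=1)
    out = []
    for j in range(scores.shape[1]):
        item = scores[:, j]
        if item.std() == 0 or total.std() == 0:
            out.append(float("nan"))  # undefined when there is no variance
        else:
            out.append(float(np.corrcoef(item, total)[0, 1]))
    return np.array(out)


def distracter_table(item_responses, total, options="ABCD"):
    """Percentage of the lowest, middle, and top groups choosing each option."""
    item_responses = np.asarray(item_responses)
    order = np.argsort(total, kind="stable")  # lowest scorers first
    groups = zip(("lowest", "middle", "top"), np.array_split(order, 3))
    return {
        name: {opt: 100.0 * float(np.mean(item_responses[idx] == opt)) for opt in options}
        for name, idx in groups
    }


# Toy data (hypothetical): 6 subjects x 3 items, options A-D.
responses = [
    ["B", "A", "C"],
    ["B", "A", "C"],
    ["B", "A", "D"],
    ["B", "C", "D"],
    ["D", "C", "C"],
    ["D", "C", "D"],
]
key = ["B", "A", "C"]

scores = score(responses, key)
means, at_ceiling, at_floor = item_means(scores)
print("proportion correct:", means)
print("item-total r:", item_total_correlations(scores))
print(distracter_table([row[0] for row in responses], scores.sum(axis=1)))
```

With real data, each performance group needs enough subjects for the percentages to be meaningful; with fewer than three subjects a group would be empty.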

Example of an item with satisfactory performance
What was between Salvador’s house and his fort?
A. the fence B. the cornfield C. the barn D. the forest
Option types: Target = B, Near-miss = D, Thematic = A, Unrelated = C
Percentage of each performance group choosing each option:
Top and Middle (paired per option): 100% and 60%, 0% and 20%, 0% and 0%
Lowest (Target, Near-miss, Thematic, Unrelated): 38%, 50%, 12%, 0%
Proportion of correct responses: 0.71
Standard deviation: 0.46
Item-total correlation: 0.54

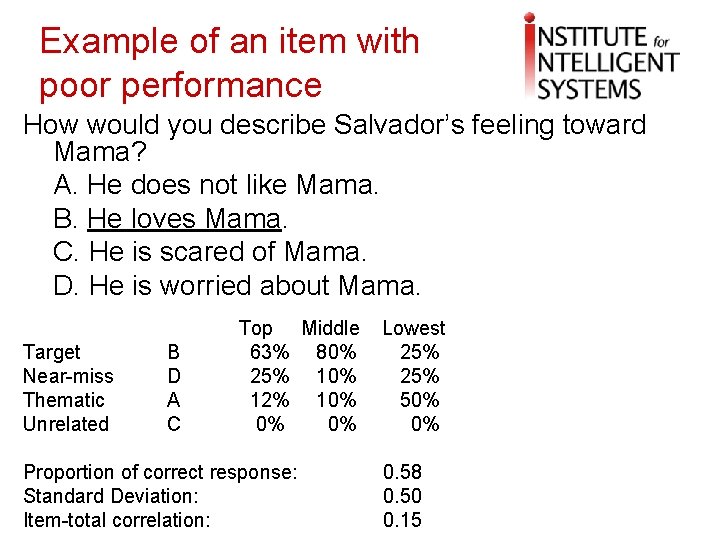

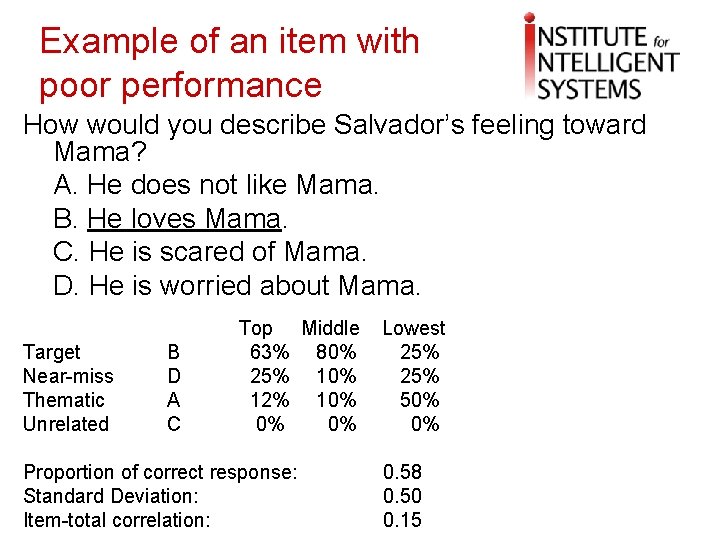

Example of an item with poor performance
How would you describe Salvador’s feeling toward Mama?
A. He does not like Mama. B. He loves Mama. C. He is scared of Mama. D. He is worried about Mama.
Option types: Target = B, Near-miss = D, Thematic = A, Unrelated = C
Percentage of each performance group choosing each option:
Top (Target, Near-miss, Thematic, Unrelated): 63%, 25%, 12%, 0%
Middle (Target, Near-miss, Thematic, Unrelated): 80%, 10%, 10%, 0%
Lowest: 25%, 50%, 0%
Proportion of correct responses: 0.58
Standard deviation: 0.50
Item-total correlation: 0.15
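A quick arithmetic check, offered as an aside rather than something stated on the slides: for an item scored 0 or 1, the standard deviation follows directly from the proportion correct $p$. Assuming the three statistics on each example slide are listed in the order proportion correct, standard deviation, item-total correlation, the two example items (proportions 0.71 and 0.58) give:

$$
\mathrm{SD} = \sqrt{p(1-p)}, \qquad
\sqrt{0.71 \times 0.29} \approx 0.45, \qquad
\sqrt{0.58 \times 0.42} \approx 0.49
$$

Both are close to the reported 0.46 and 0.50. The small gaps would be consistent with rounding of $p$ or with a sample ($n-1$) denominator, though the slides do not give the sample size. The check also shows that the SD carries no information beyond $p$ for a right/wrong item; the item-total correlation (0.54 versus 0.15) is what separates the satisfactory item from the poor one.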

Summary
• Overall, revising and generating MCQ items following systematic guidelines (for question types and distracters) appears to improve the quality of items.
• Item analysis based on psychometric techniques helped us evaluate item performance at a more precise level.
  - Subsequent results of the study, based on items selected with this procedure, showed a large improvement (reduced ceiling/floor effects and higher item-total correlations).

Self-Explanation Coding Scheme
Rachel Best and Yasuhiro Ozuru
University of Memphis

Self-Explanations
• Self-explanation refers to the process of explaining a text to oneself while reading (e.g., Chi, Bassok, Lewis, Reimann, & Glaser, 1989).
• Analysis of self-explanations is useful for gaining insight into what students do to process information in a text.
  – Students adopt a range of comprehension strategies (e.g., paraphrasing, elaborative inferencing).
• Training students how to self-explain using reading strategies improves reading comprehension (e.g., McNamara, 2004).
Please take a folder from the back.

Coding Self-Explanation Protocols
• Ultimate goal is to develop automated assessments of self-explanations
• Need to conduct systematic analyses to identify the strategies being used (to assess comprehension and to refine our automated algorithms)
• Coding scheme must address specific theoretical questions
• Need to code using objectively observable criteria

Long Story Short • Rachel and Yasuhiro developed a very effective, useful and reliable coding scheme – the details are available in the handout.

Conclusion • I hope you can use something we’ve done.