
Assessing Innovation for Scalability: A New Focus for External Validity

Presented at the American Evaluation Association Annual Conference, Washington, DC, October 2013

Kate Fehlenberg, Management Systems International

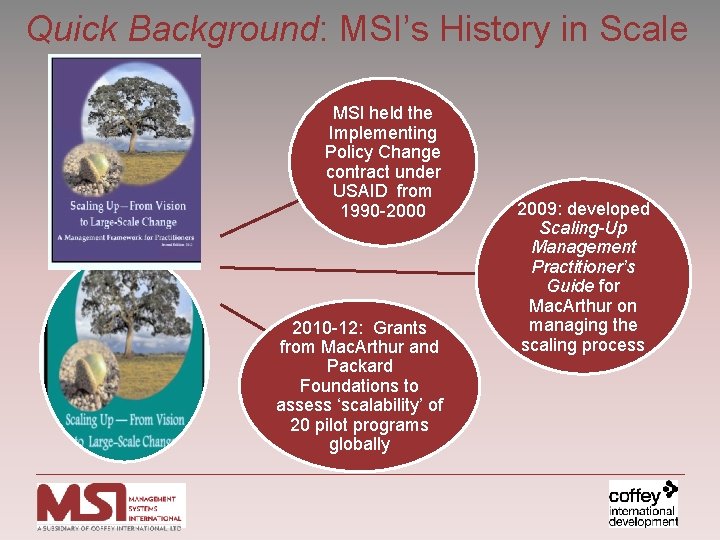

Quick Background: MSI’s History in Scale

- 1990–2000: MSI held the Implementing Policy Change contract under USAID.
- 2009: Developed the Scaling-Up Management Practitioner’s Guide for MacArthur on managing the scaling process.
- 2010–12: Grants from the MacArthur and Packard Foundations to assess the ‘scalability’ of 20 pilot programs globally.

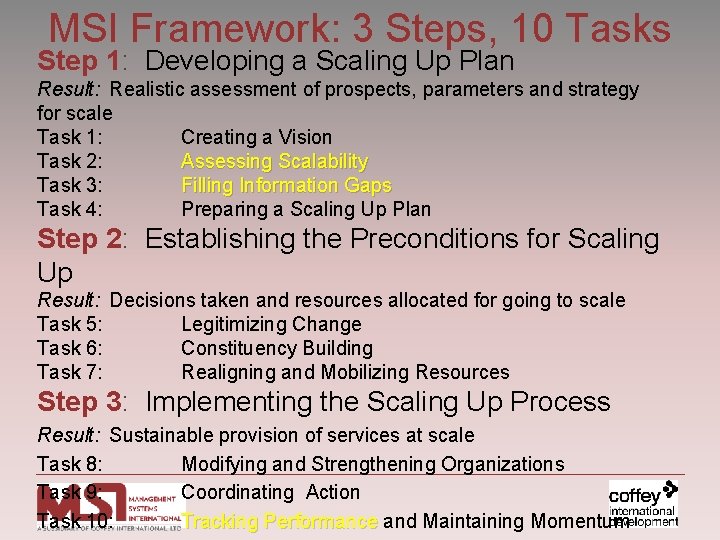

MSI Framework: 3 Steps, 10 Tasks

- Step 1: Developing a Scaling-Up Plan
  - Result: Realistic assessment of prospects, parameters and strategy for scale
  - Task 1: Creating a Vision
  - Task 2: Assessing Scalability
  - Task 3: Filling Information Gaps
  - Task 4: Preparing a Scaling-Up Plan
- Step 2: Establishing the Preconditions for Scaling Up
  - Result: Decisions taken and resources allocated for going to scale
  - Task 5: Legitimizing Change
  - Task 6: Constituency Building
  - Task 7: Realigning and Mobilizing Resources
- Step 3: Implementing the Scaling-Up Process
  - Result: Sustainable provision of services at scale
  - Task 8: Modifying and Strengthening Organizations
  - Task 9: Coordinating Action
  - Task 10: Tracking Performance and Maintaining Momentum

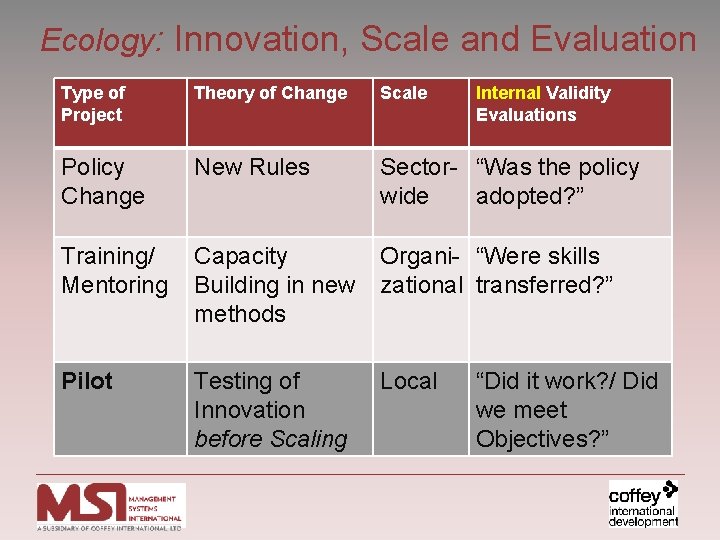

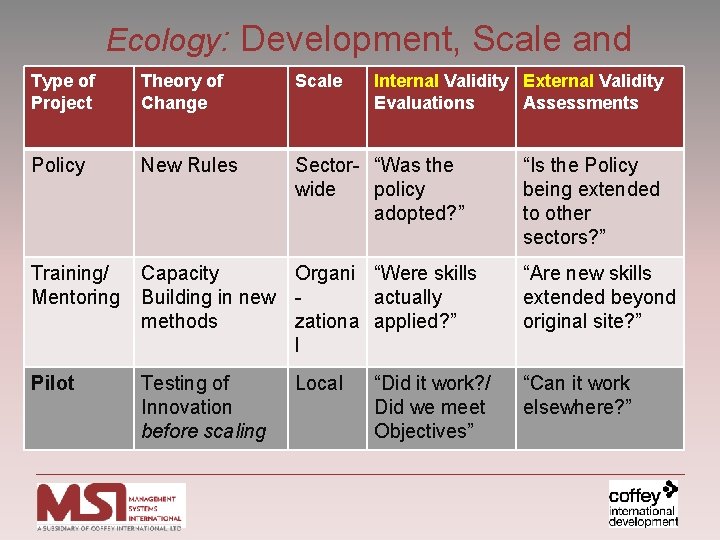

Ecology: Innovation, Scale and Evaluation

| Type of Project | Theory of Change | Scale | Internal Validity Evaluations |
|---|---|---|---|
| Policy Change | New Rules | Sector-wide | “Was the policy adopted?” |
| Training/Mentoring | Capacity Building in new methods | Organizational | “Were skills transferred?” |
| Pilot | Testing of Innovation before Scaling | Local | “Did it work? / Did we meet objectives?” |

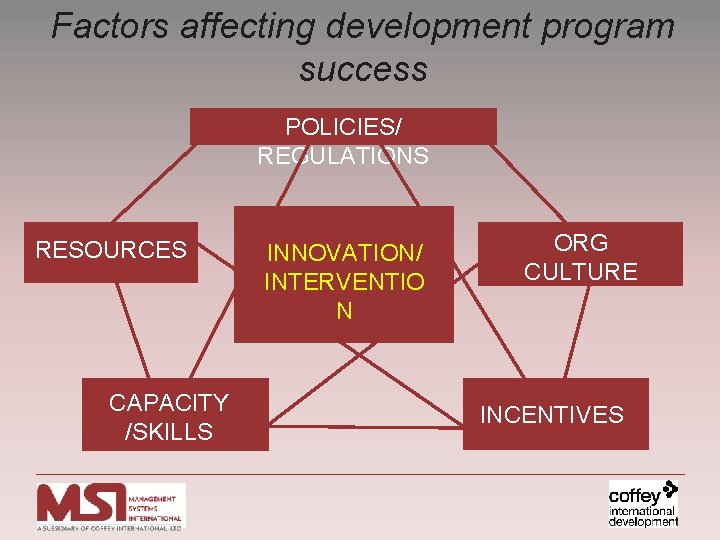

Factors affecting development program success:

- Policies/Regulations
- Resources
- Capacity/Skills
- Innovation/Intervention
- Organizational Culture
- Incentives

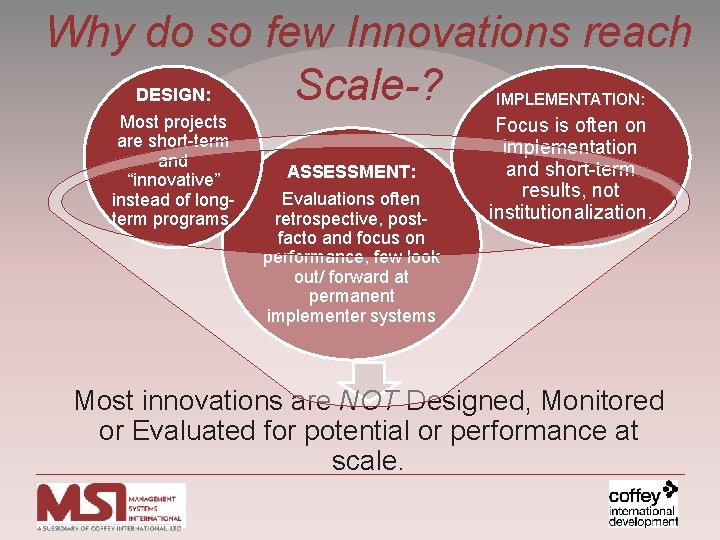

Why do so few innovations reach scale?

- Design: Most projects are short-term and “innovative” rather than long-term programs.
- Implementation: The focus is often on implementation and short-term results, not on institutionalization.
- Assessment: Evaluations are often retrospective and post facto and focus on performance; few look outward or forward at the systems of permanent implementers.

Most innovations are NOT designed, monitored or evaluated for their potential or performance at scale.

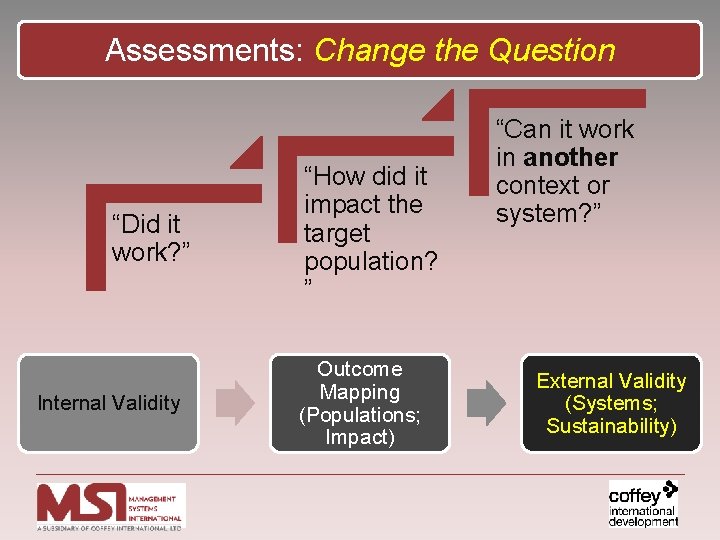

Assessments: Change the Question

- “Did it work?” → Internal Validity
- “How did it impact the target population?” → Outcome Mapping (Populations; Impact)
- “Can it work in another context or system?” → External Validity (Systems; Sustainability)

Ecology: Development, Scale and Evaluation

| Type of Project | Theory of Change | Scale | Internal Validity Evaluations | External Validity Assessments |
|---|---|---|---|---|
| Policy | New Rules | Sector-wide | “Was the policy adopted?” | “Is the policy being extended to other sectors?” |
| Training/Mentoring | Capacity Building in new methods | Organizational | “Were skills actually applied?” | “Are new skills extended beyond the original site?” |
| Pilot | Testing of Innovation before scaling | Local | “Did it work? / Did we meet objectives?” | “Can it work elsewhere?” |

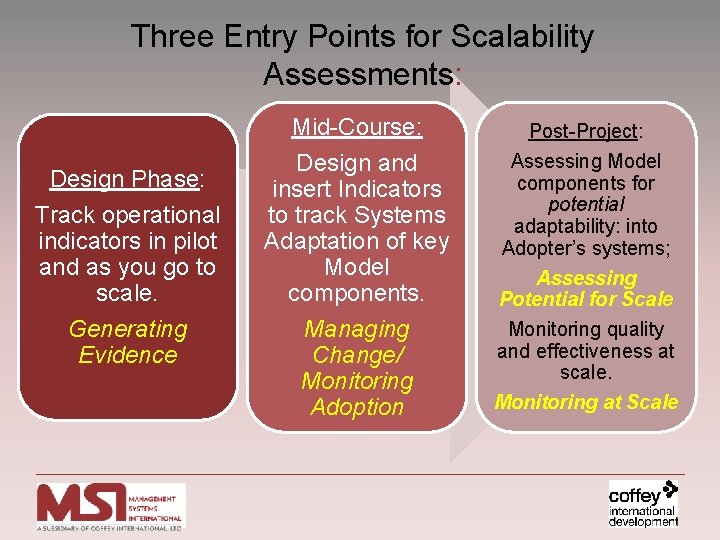

Three Entry Points for Scalability Assessments: Design Phase, Mid-Course and Post-Project

- Generating Evidence: Track operational indicators in the pilot and as you go to scale.
- Managing Change/Monitoring Adoption: Design and insert indicators to track systems adaptation of key model components.
- Assessing Potential for Scale: Assess model components for their potential adaptability into the adopter’s systems.
- Monitoring at Scale: Monitor quality and effectiveness at scale.

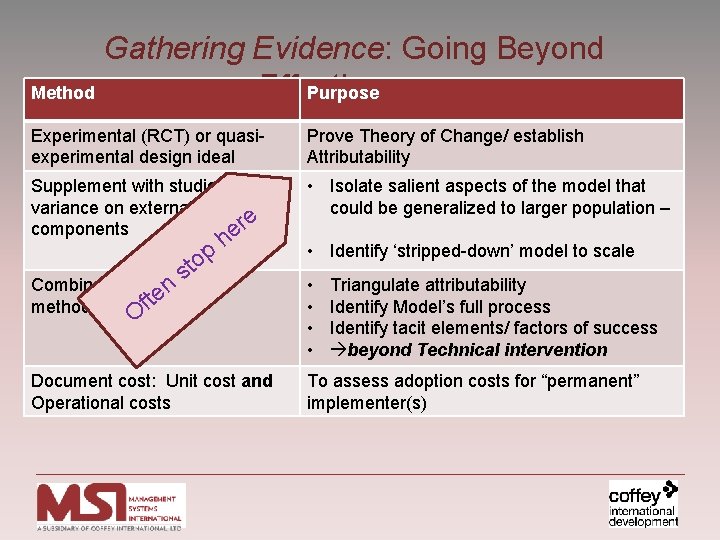

Gathering Evidence: Going Beyond Effectiveness

| Method | Purpose |
|---|---|
| Experimental (RCT) or quasi-experimental design (ideal) | Prove the theory of change; establish attributability |
| Supplement with studies of variance on external factors and components | Isolate salient aspects of the model that could be generalized to a larger population; identify a ‘stripped-down’ model to scale |
| Combine with qualitative methods | Triangulate attributability; identify the model’s full process; identify tacit elements/factors of success beyond the technical intervention |
| Document cost: unit cost and operational costs | Assess adoption costs for the “permanent” implementer(s) |

Evaluations often stop after the first method.

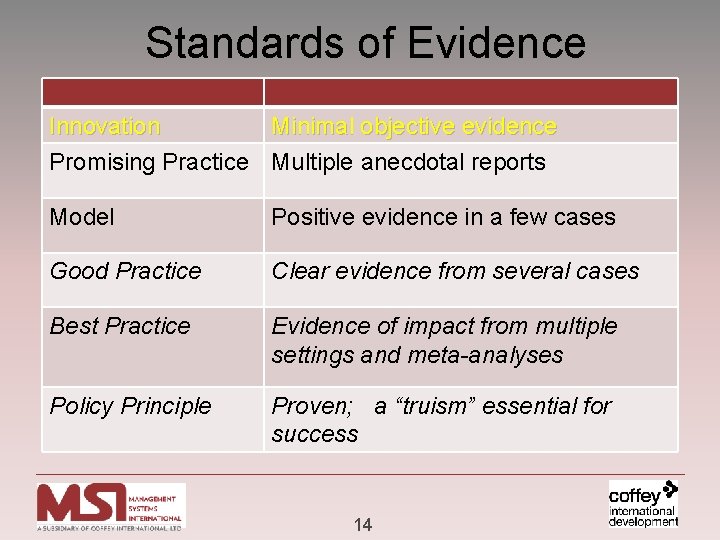

Standards of Evidence

| Level | Evidence |
|---|---|
| Innovation | Minimal objective evidence |
| Promising Practice | Multiple anecdotal reports |
| Model | Positive evidence in a few cases |
| Good Practice | Clear evidence from several cases |
| Best Practice | Evidence of impact from multiple settings and meta-analyses |
| Policy Principle | Proven; a “truism” essential for success |

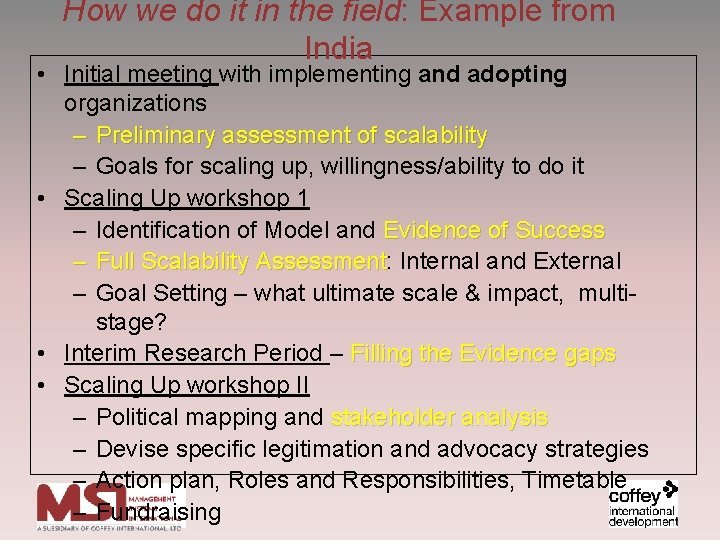

How we do it in the field: Example from India

- Initial meeting with implementing and adopting organizations
  - Preliminary assessment of scalability
  - Goals for scaling up; willingness and ability to do it
- Scaling-Up Workshop I
  - Identification of the model and evidence of success
  - Full scalability assessment (internal and external)
  - Goal setting: What ultimate scale and impact? Multistage?
- Interim research period
  - Filling the evidence gaps
- Scaling-Up Workshop II
  - Political mapping and stakeholder analysis
  - Devising specific legitimation and advocacy strategies
  - Action plan, roles and responsibilities, timetable
  - Fundraising
