Artificial Neural Network: Hopfield Neural Network (HNN), Associative Memory (AM)

- Slides: 16

- Artificial Neural Network: Hopfield Neural Network (HNN). Chaoyang University of Technology, Department of Information Management, Prof. Li-Hua Li (李麗華)

Associative Memory (AM) - 1

- Def: Associative memory (AM) is any device that associates a set of predefined output patterns with specific input patterns.
- Two types of AM:
  - Auto-associative memory: converts a corrupted input pattern into the stored pattern it most closely resembles.
  - Hetero-associative memory: produces the output pattern that was stored for the most similar input pattern.

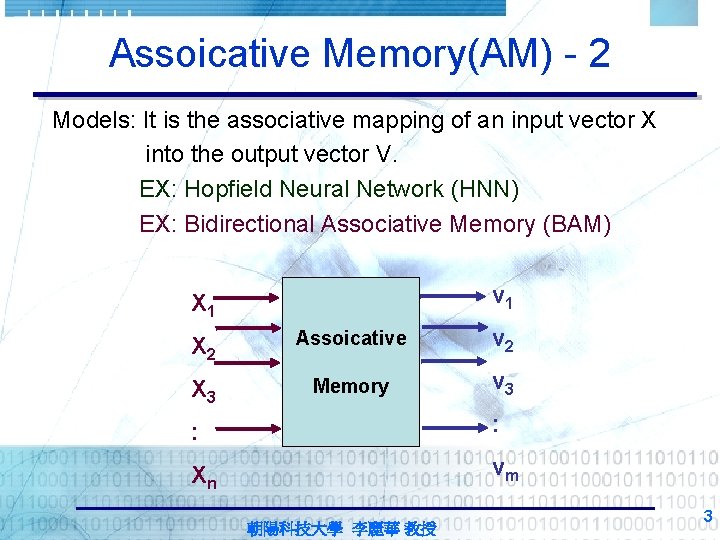

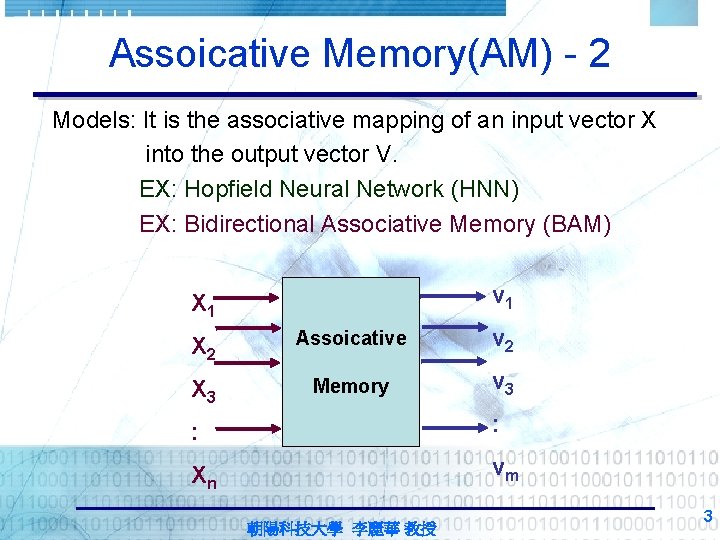

Associative Memory (AM) - 2

- Models: an associative memory is the associative mapping of an input vector X = (X1, X2, ..., Xn) into the output vector V = (v1, v2, ..., vm).
- EX: Hopfield Neural Network (HNN)
- EX: Bidirectional Associative Memory (BAM)

[Diagram: inputs X1, X2, X3, ..., Xn → Associative Memory → outputs v1, v2, v3, ..., vm]

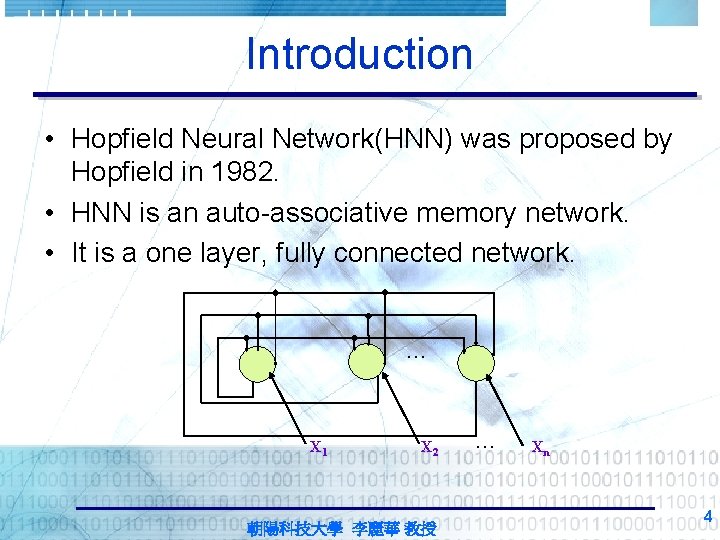

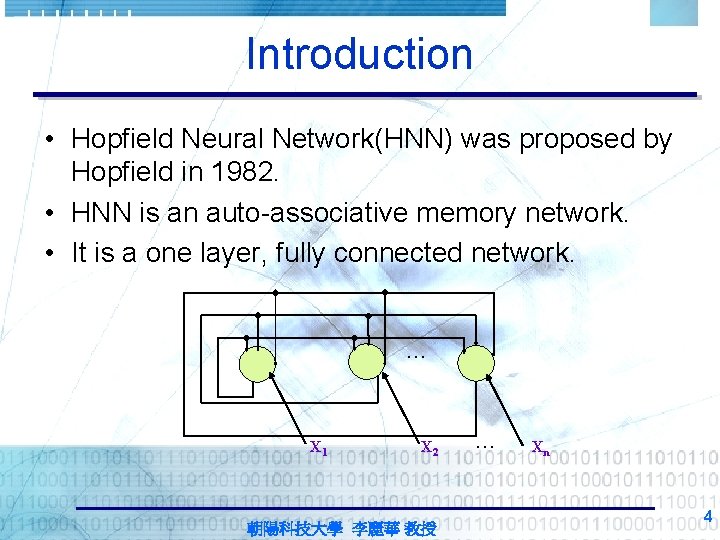

Introduction

- The Hopfield Neural Network (HNN) was proposed by Hopfield in 1982.
- HNN is an auto-associative memory network.
- It is a one-layer, fully connected network.

[Diagram: nodes X1, X2, ..., Xn]

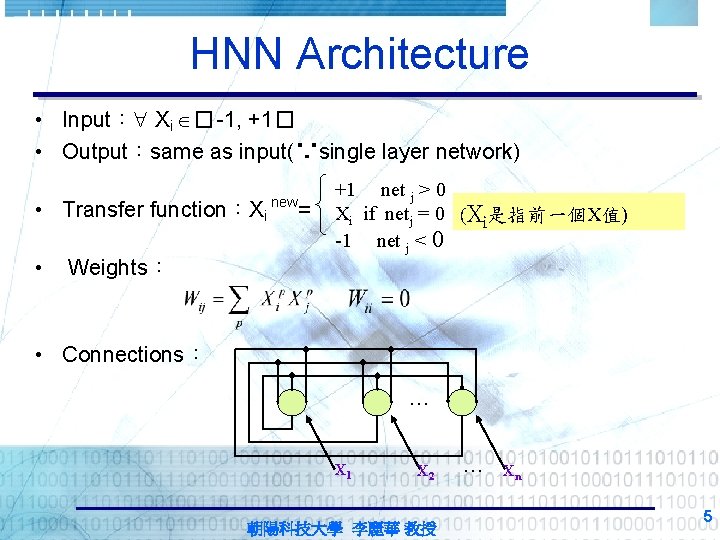

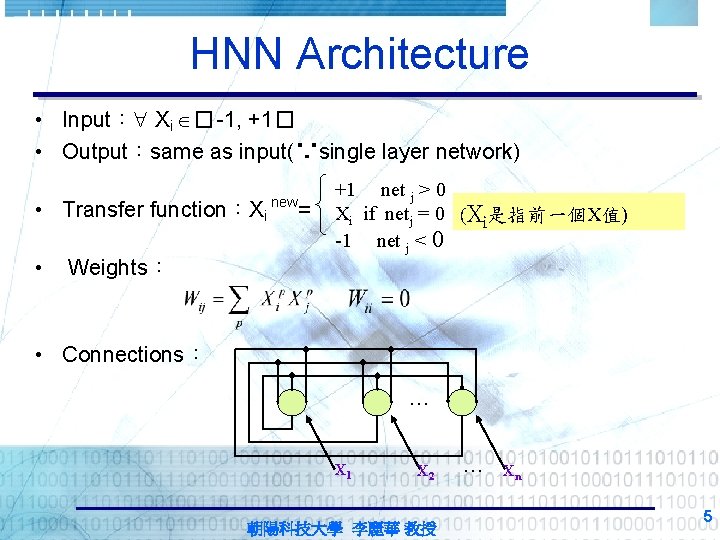

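HNN Architecture

- Input: $X_i \in \{-1, +1\}$
- Output: same as input (∵ single-layer network)
- Transfer function, where $X_j^{old}$ is the previous value of $X_j$:

$$X_j^{new} = \begin{cases} +1 & net_j > 0 \\ X_j^{old} & net_j = 0 \\ -1 & net_j < 0 \end{cases}$$

- Weights: [formula in slide image]
- Connections: [diagram of nodes X1, X2, ..., Xn in slide image]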

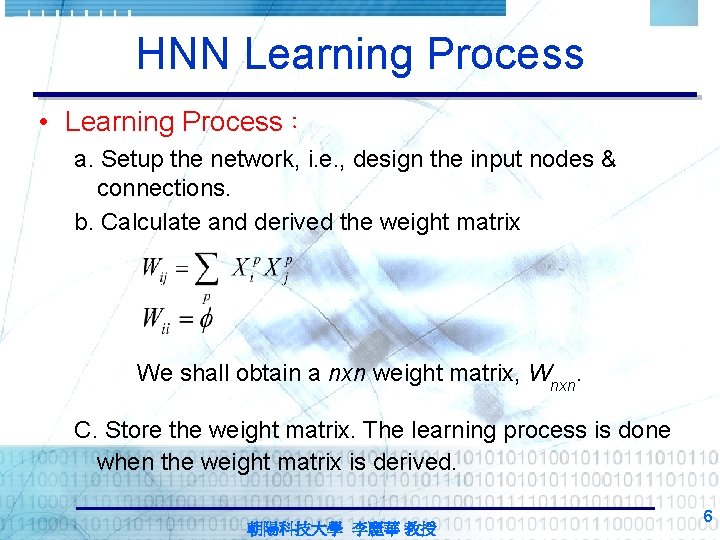

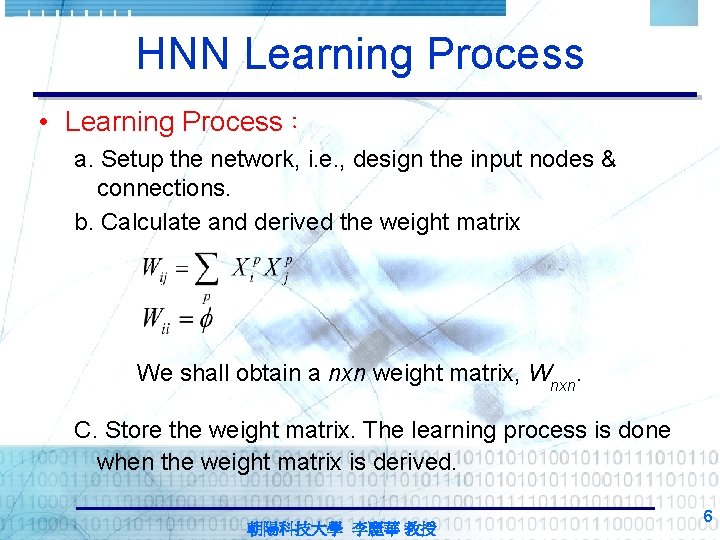
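The transfer function above can be sketched in a few lines of NumPy. This is a minimal illustration, not the author's code; the function name `transfer` and the sample values are hypothetical.

```python
import numpy as np

def transfer(net, x_old):
    """Bipolar threshold: +1 if net > 0, -1 if net < 0, keep the old value if net == 0."""
    net = np.asarray(net)
    x_old = np.asarray(x_old)
    return np.where(net > 0, 1, np.where(net < 0, -1, x_old))

# Hypothetical net values and previous states for three nodes.
result = transfer([2, 0, -3], [-1, -1, 1])
print(result.tolist())  # [1, -1, -1]
```

The middle node keeps its previous value (-1) because its net input is exactly zero.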

HNN Learning Process

- Learning process:
  - a. Set up the network, i.e., design the input nodes and connections.
  - b. Calculate and derive the weight matrix. This yields an n×n weight matrix, $W_{n \times n}$.
  - c. Store the weight matrix.
- The learning process is done once the weight matrix is derived.

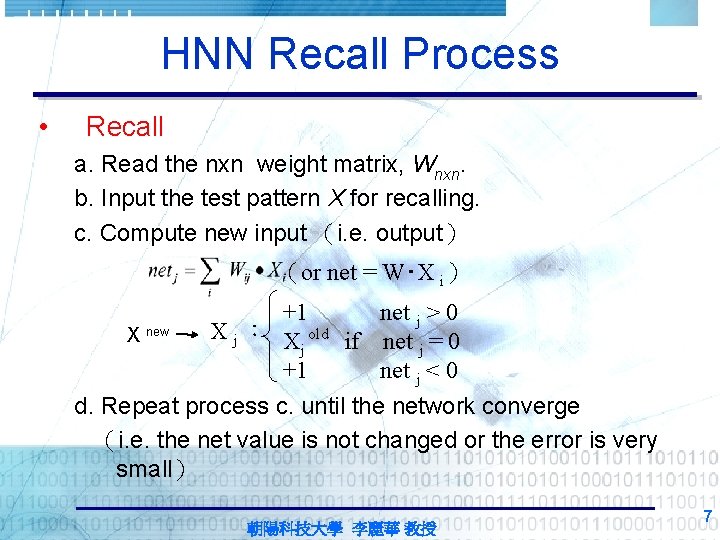

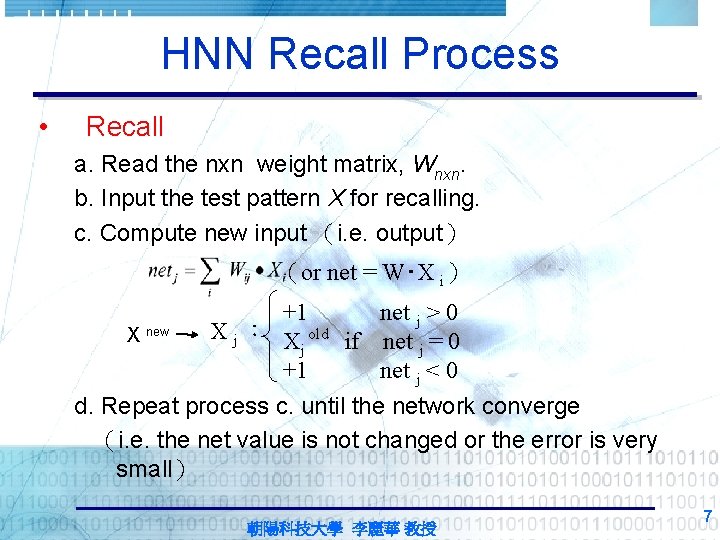
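As a rough sketch of step b: the slide's weight formula is not recoverable from the text, so the code below assumes the commonly used outer-product (Hebbian) rule, W = Σ_p x_p x_pᵀ with the diagonal set to zero. The function name `train_hopfield` and the two 4-element patterns are hypothetical.

```python
import numpy as np

def train_hopfield(patterns):
    """Build an n x n weight matrix from bipolar patterns (assumed outer-product rule)."""
    P = np.asarray(patterns, dtype=int)  # shape: (num_patterns, n)
    W = P.T @ P                          # sum of outer products x_p x_p^T
    np.fill_diagonal(W, 0)               # no self-connections: W_ii = 0
    return W

# Hypothetical patterns, not the ones from the slides.
patterns = [[1, -1, 1, -1],
            [1, 1, -1, -1]]
W = train_hopfield(patterns)
print(W)
```

Under this rule the matrix is symmetric, which is the property the convergence argument for Hopfield networks usually relies on.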

HNN Recall Process

- Recall:
  - a. Read the n×n weight matrix, $W_{n \times n}$.
  - b. Input the test pattern X for recalling.
  - c. Compute the new input (i.e., the output) from $net = W \cdot X$:

$$X_j^{new} = \begin{cases} +1 & net_j > 0 \\ X_j^{old} & net_j = 0 \\ -1 & net_j < 0 \end{cases}$$

  - d. Repeat step c until the network converges (i.e., the net value no longer changes or the error is very small).

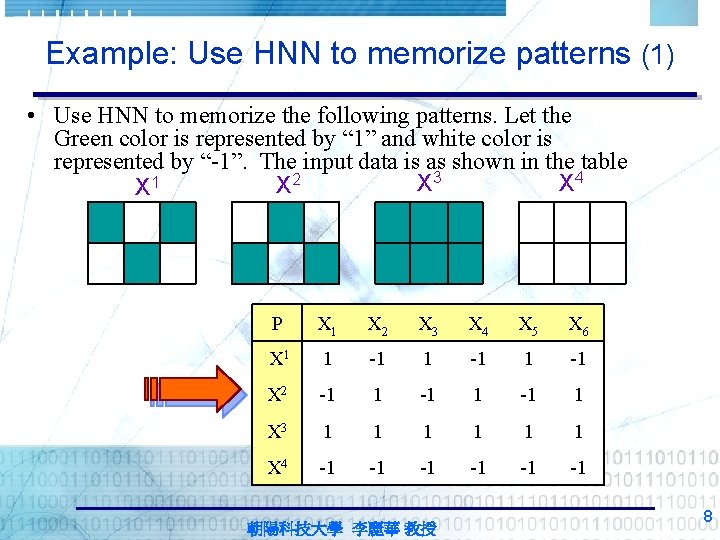

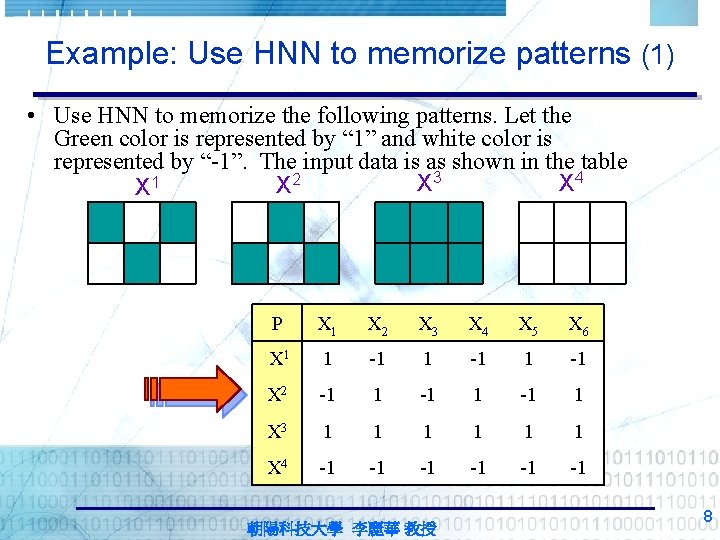
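The recall steps can be sketched as a short loop. The slides do not say whether nodes are updated all at once or one at a time; this sketch assumes one-at-a-time (asynchronous) updates. The function name `recall`, the `max_sweeps` cap, and the single stored pattern are hypothetical.

```python
import numpy as np

def recall(W, x, max_sweeps=100):
    """Update nodes one at a time until a full sweep changes nothing."""
    x = np.array(x, dtype=int)
    for _ in range(max_sweeps):
        changed = False
        for j in range(len(x)):
            net = W[j] @ x  # net_j = sum_i W_ji * x_i
            new = 1 if net > 0 else (-1 if net < 0 else x[j])
            if new != x[j]:
                x[j] = new
                changed = True
        if not changed:  # converged: no node changed in this sweep
            break
    return x

# Hypothetical stored pattern and weights (outer product, zero diagonal).
p = np.array([1, -1, 1, -1, 1, -1])
W = np.outer(p, p)
np.fill_diagonal(W, 0)

noisy = p.copy()
noisy[5] = 1  # corrupt the last element
print(recall(W, noisy).tolist())  # [1, -1, 1, -1, 1, -1]
```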

Example: Use HNN to memorize patterns (1)

- Use HNN to memorize the following patterns. Green is represented by "1" and white by "-1". The input data are shown in the table.

[Table: patterns X1 to X4 (rows) by inputs X1 to X6 (columns), each entry 1 or -1; see the slide image. Entries present in the text: X1: 1, -1; X2: -1, 1; X3: 1, 1, 1; X4: -1, -1, -1.]

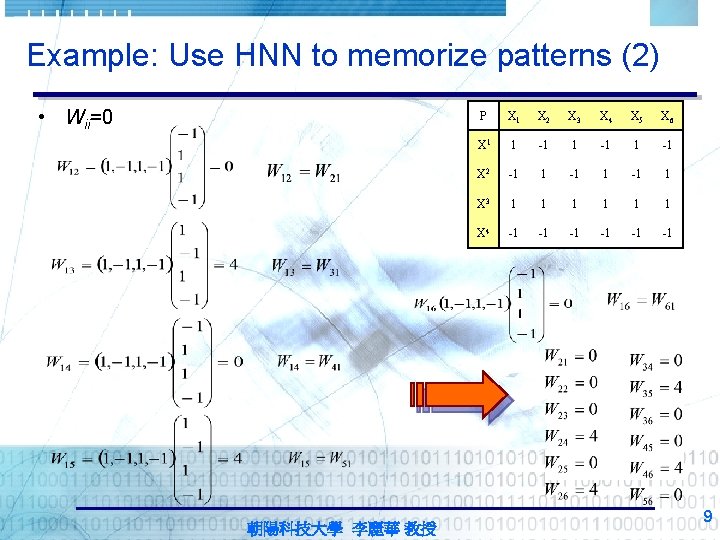

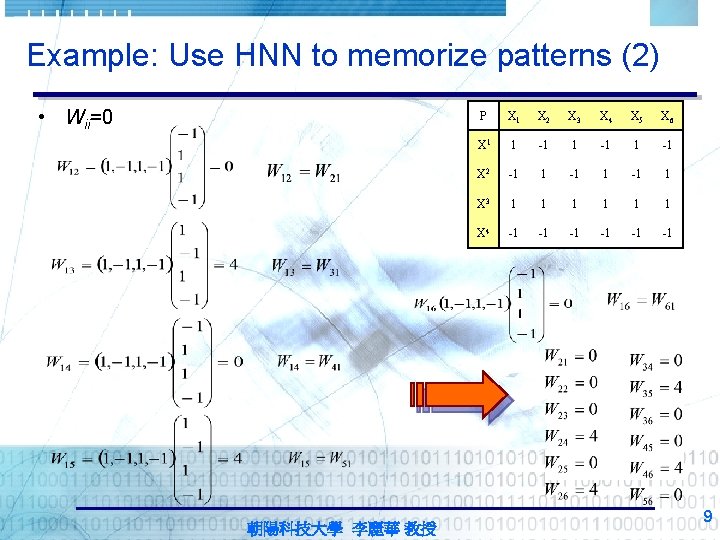

Example: Use HNN to memorize patterns (2)

- $W_{ii} = 0$

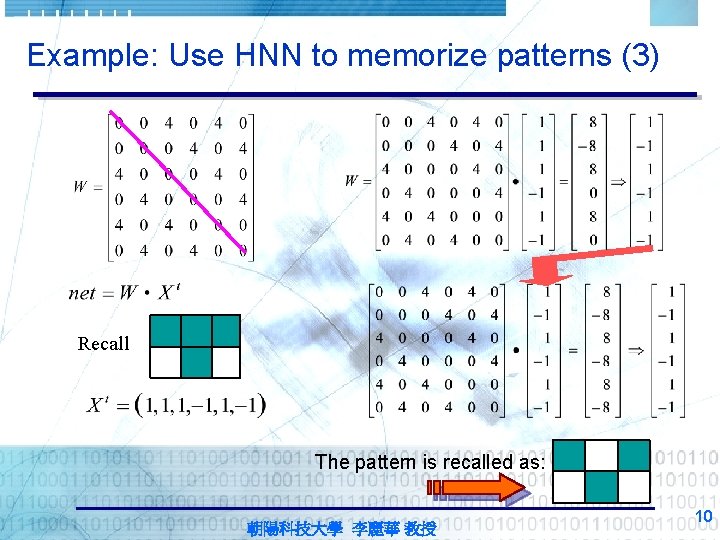

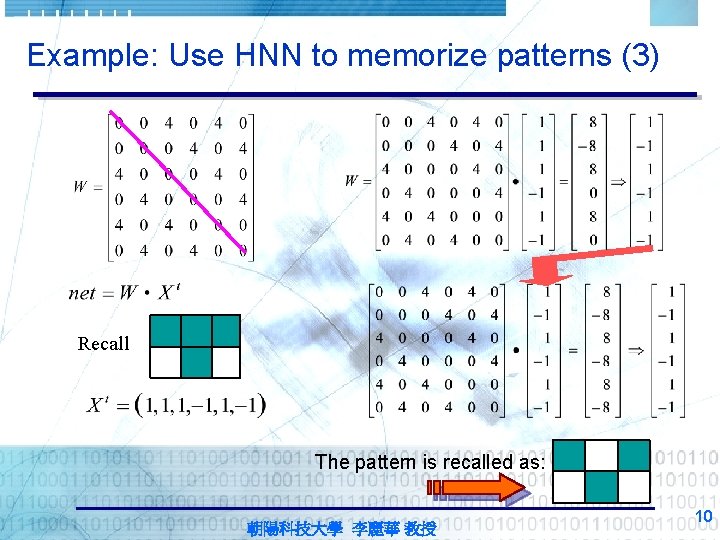

Example: Use HNN to memorize patterns (3)

Recall

The pattern is recalled as:

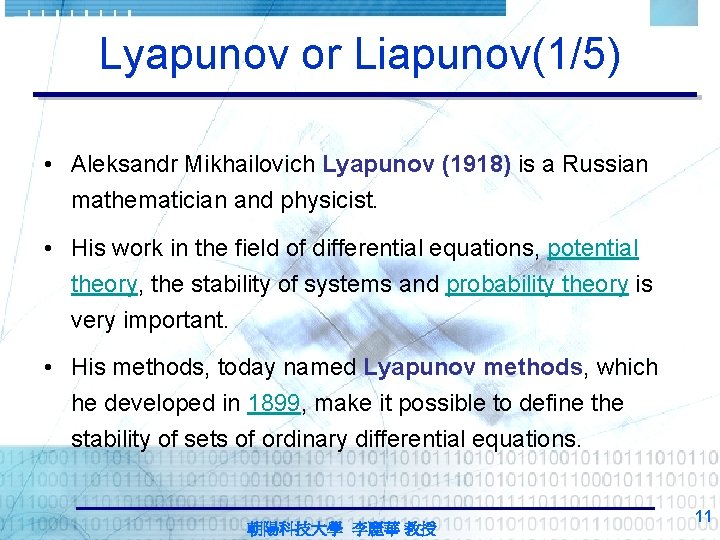

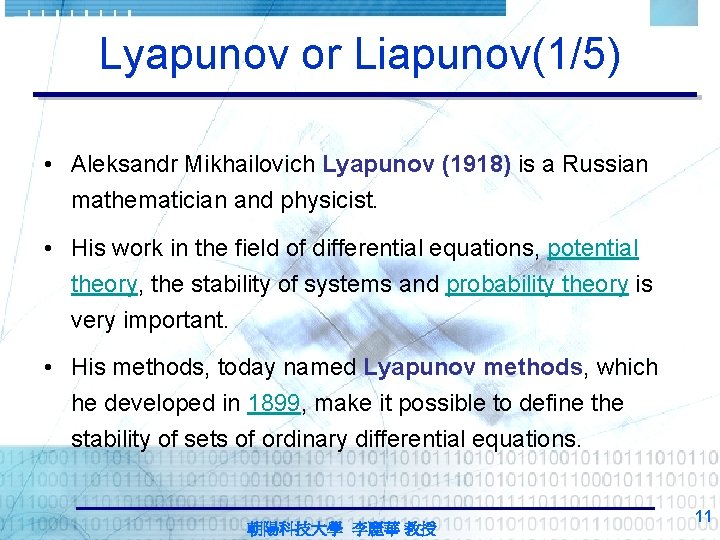
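To make the memorize-then-recall example concrete, here is a small end-to-end sketch using the green/white encoding from the example. The table values from the slides are not recoverable from the text, so the two 6-cell patterns below are hypothetical, as are the helper names; the weights assume the common outer-product rule with $W_{ii} = 0$.

```python
import numpy as np

def encode(cells):
    """Map a string of 'G' (green) / 'W' (white) cells to +1 / -1."""
    return np.array([1 if c == "G" else -1 for c in cells])

def decode(x):
    """Map +1 / -1 back to 'G' / 'W'."""
    return "".join("G" if v == 1 else "W" for v in x)

def train(patterns):
    P = np.array(patterns)
    W = P.T @ P             # assumed outer-product rule
    np.fill_diagonal(W, 0)  # W_ii = 0
    return W

def recall(W, x, max_sweeps=100):
    x = np.array(x)
    for _ in range(max_sweeps):
        changed = False
        for j in range(len(x)):
            net = W[j] @ x
            new = 1 if net > 0 else (-1 if net < 0 else x[j])
            if new != x[j]:
                x[j], changed = new, True
        if not changed:
            break
    return x

stored = [encode("GWGWGW"), encode("GGGWWW")]  # hypothetical patterns
W = train(stored)
noisy = encode("GWGWGG")                       # first pattern, last cell flipped
print(decode(recall(W, noisy)))                # GWGWGW
```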

Lyapunov or Liapunov (1/5)

- Aleksandr Mikhailovich Lyapunov (1857-1918) was a Russian mathematician and physicist.
- His work on differential equations, potential theory, the stability of systems, and probability theory is very important.
- His methods, today named Lyapunov methods, which he developed in 1899, make it possible to determine the stability of sets of ordinary differential equations.

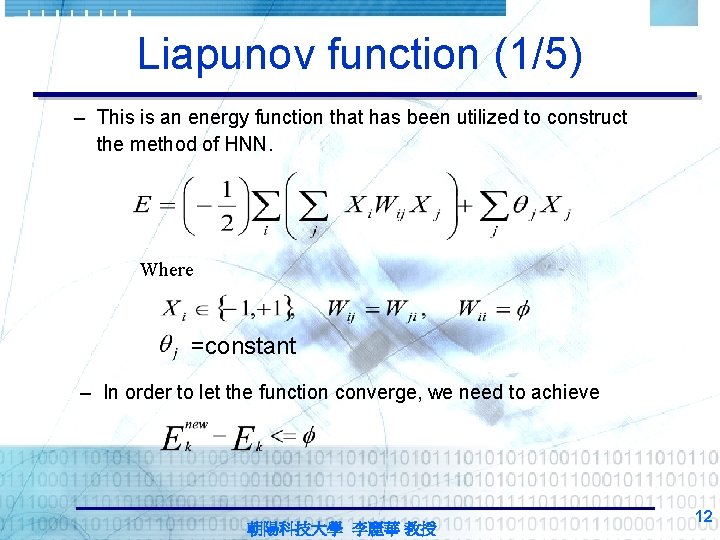

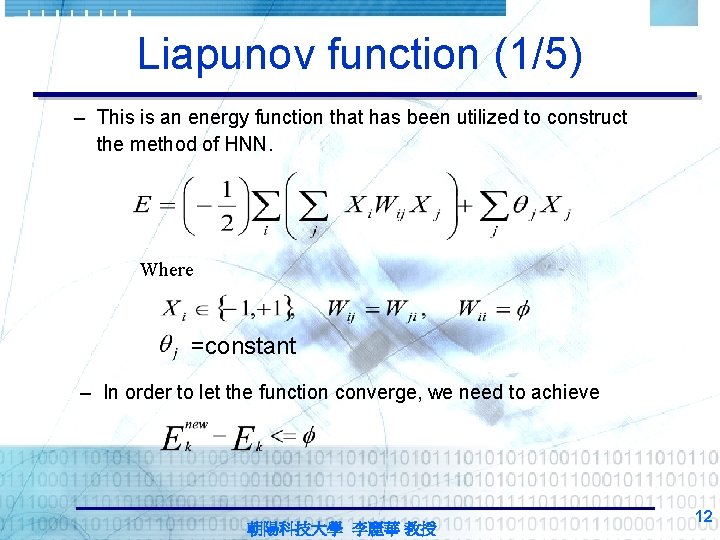

Liapunov function (1/5)

- This is an energy function that has been used to construct the HNN method. Its formula, together with the quantity that is held constant, is given in the slide image.
- For the function to converge, the condition given in the slide image must be achieved.

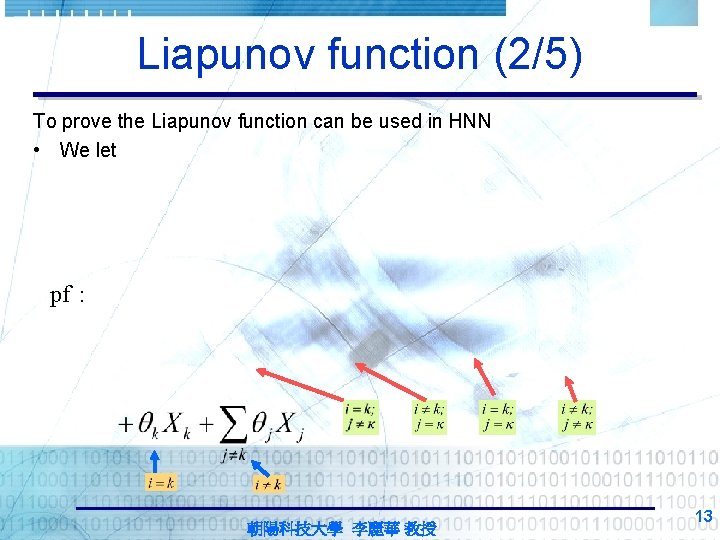

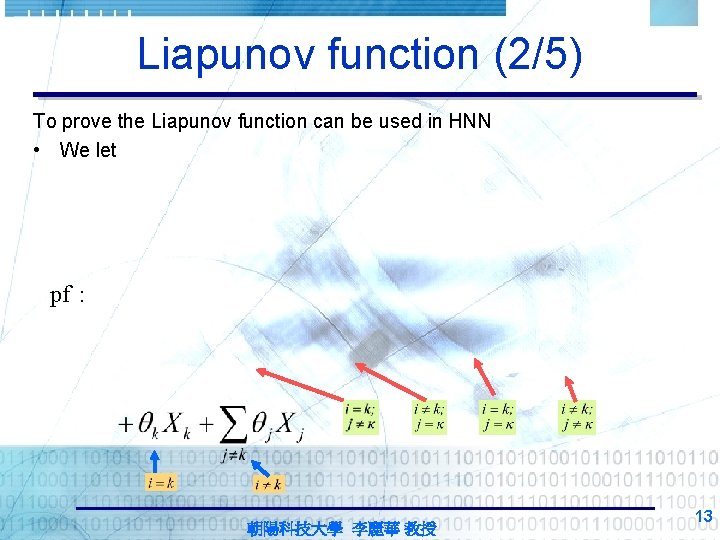
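For reference, the energy function most commonly written for a Hopfield network is shown below. The slide's own formula is not recoverable from the text, so treat this as an assumed standard form rather than the author's exact notation; $w_{ij}$ are the weights, $x_i$ the node states, and $\theta_j$ constant thresholds (the recall rule in these slides corresponds to $\theta_j = 0$).

```latex
E = -\frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}\,x_i\,x_j + \sum_{j=1}^{n}\theta_j\,x_j,
\qquad \theta_j = \text{constant}
```

In this form, convergence follows if every update satisfies $\Delta E \le 0$, since $E$ is bounded below on the finite set of bipolar states.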

Liapunov function (2/5)

To prove that the Liapunov function can be used in HNN:

- We let

pf:

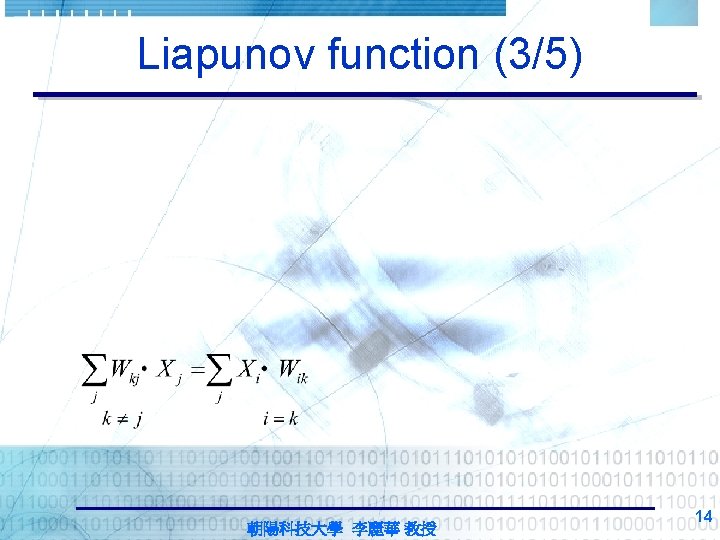

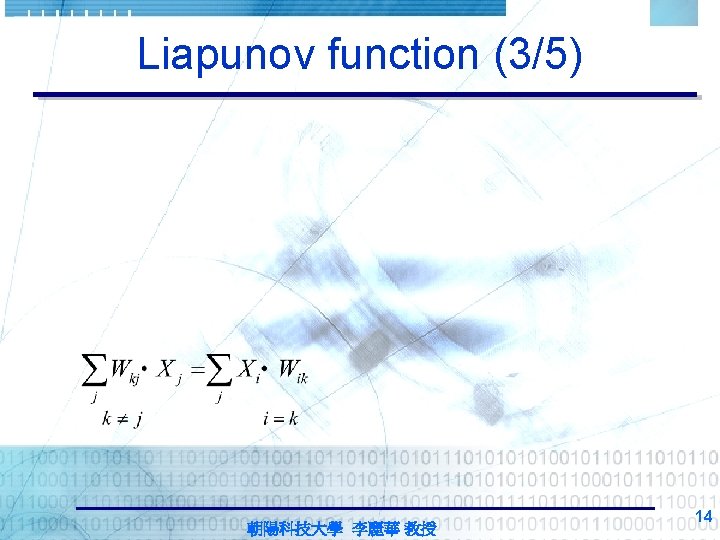

Liapunov function (3/5)

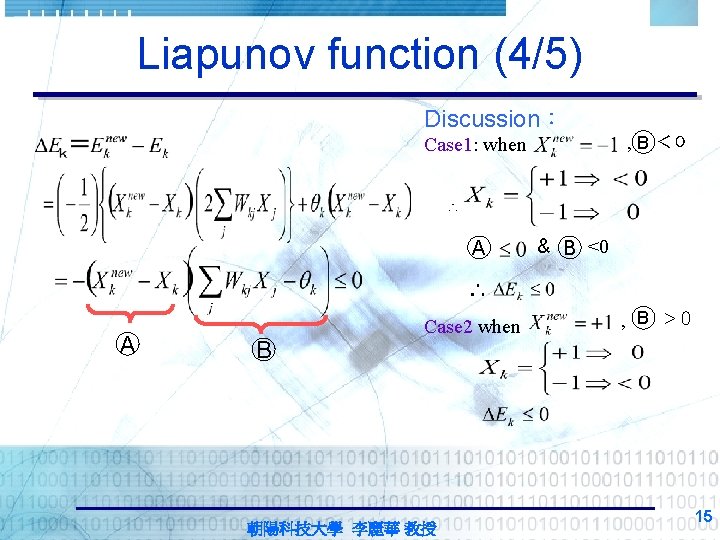

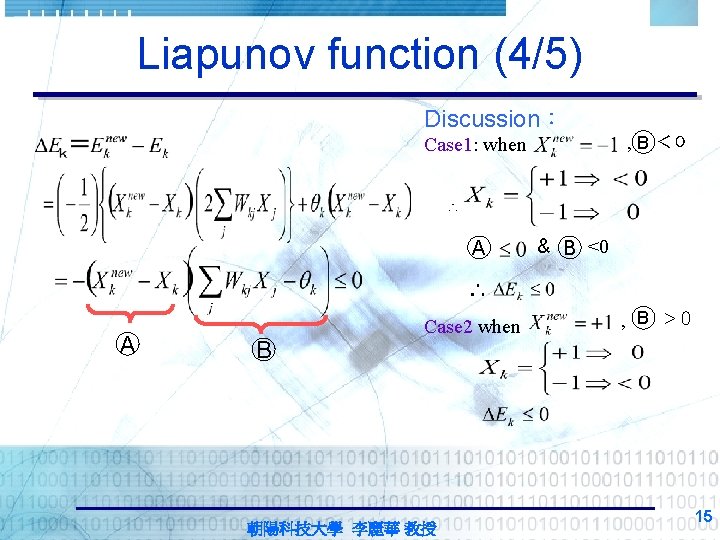

Liapunov function (4/5)

Discussion:

- Case 1: when [condition in slide image], B < 0 ∴ A & B < 0 ∴ A B
- Case 2: when [condition in slide image], B > 0

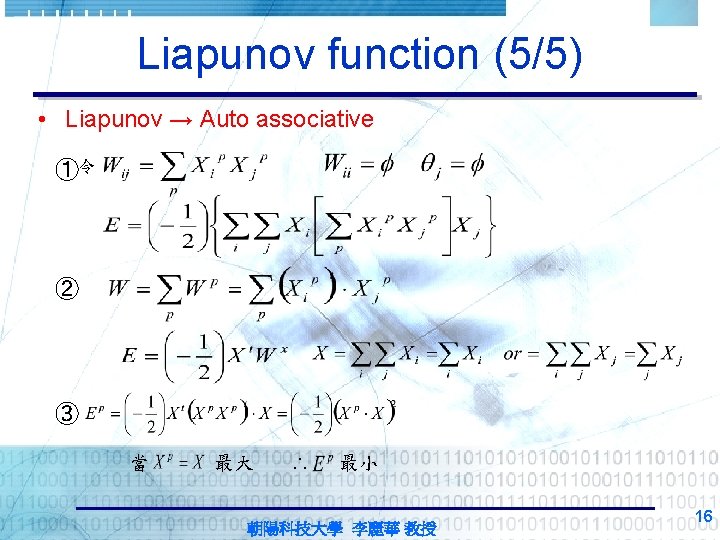

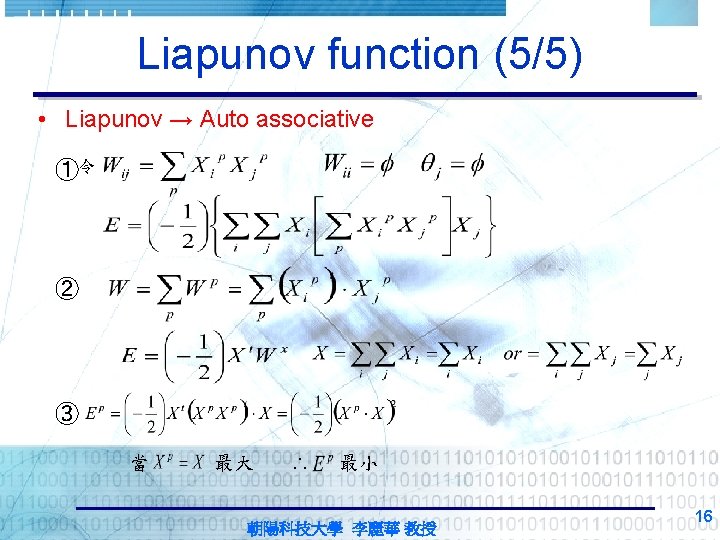
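The case analysis can be written out as follows. This is a sketch under stated assumptions, not a reconstruction of the slide: it assumes symmetric weights, $w_{jj} = 0$, zero thresholds, the energy $E = -\frac{1}{2}\sum_i\sum_k w_{ik} x_i x_k$, and that only node $j$ changes in one update. The slide's symbols A and B are not recoverable from the text, so the derivation uses $net_j$ and $\Delta x_j$ instead.

```latex
\begin{aligned}
\Delta E &= -\Delta x_j \sum_{i} w_{ji}\,x_i = -\Delta x_j \, net_j,
  \qquad \Delta x_j = x_j^{new} - x_j^{old} \\
net_j > 0 &\;\Rightarrow\; x_j^{new} = +1 \;\Rightarrow\; \Delta x_j \in \{0, +2\}
  \;\Rightarrow\; \Delta E \le 0 \\
net_j < 0 &\;\Rightarrow\; x_j^{new} = -1 \;\Rightarrow\; \Delta x_j \in \{0, -2\}
  \;\Rightarrow\; \Delta E \le 0 \\
net_j = 0 &\;\Rightarrow\; x_j^{new} = x_j^{old} \;\Rightarrow\; \Delta x_j = 0
  \;\Rightarrow\; \Delta E = 0
\end{aligned}
```

In every case $\Delta x_j$ and $net_j$ never have opposite signs, so their product is non-negative and the energy cannot increase.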

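Liapunov function (5/5)

- Liapunov → Auto-associative
  - ① Let [definition in slide image]
  - ② [formula in slide image]
  - ③ When [quantity in slide image] is at its maximum, ∴ [quantity in slide image] is at its minimum.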
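The link between the energy function and auto-association can be checked numerically on a tiny case. The sketch below assumes the standard energy $E = -\frac{1}{2} x^{T} W x$ (zero thresholds) and outer-product weights with a zero diagonal; the stored pattern and the function name `energy` are hypothetical.

```python
import numpy as np
from itertools import product

def energy(W, x):
    """Assumed Hopfield energy with zero thresholds: E = -1/2 * x^T W x."""
    x = np.asarray(x)
    return float(-0.5 * (x @ W @ x))

# Hypothetical single stored pattern.
p = np.array([1, -1, 1, -1, 1, -1])
W = np.outer(p, p)
np.fill_diagonal(W, 0)

flipped = p.copy()
flipped[0] = -flipped[0]  # corrupt one element

e_stored = energy(W, p)
e_flipped = energy(W, flipped)
# Lowest energy over all 2^6 bipolar states.
e_min = min(energy(W, s) for s in product([-1, 1], repeat=6))

print(e_stored, e_flipped, e_min)  # -15.0 -5.0 -15.0
```

With a single stored pattern, the pattern (and its negation) sits at the lowest energy, so a recall process that only ever lowers the energy moves a corrupted input back toward it.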