
Artificial Moral Agents (AMAs): Prospects and Approaches for Building Computer Systems and Robots Capable of Making Moral Decisions
Wendell Wallach
wendell.wallach@yale.edu
Yale University, Institution for Social and Policy Studies, Interdisciplinary Center for Bioethics
December 10, 2006

A New Field of Inquiry
• Machine Morality
• Machine Ethics
• Computational Ethics
• Artificial Morality
• Friendly AI

• Implementation of moral decision-making facilities in artificial agents
• Necessitated by autonomous systems making choices

Mapping the Field

Questions
• Do we need artificial moral agents (AMAs)?
  - When? For what?
• Do we want computers making ethical decisions?
• Whose morality or what morality?
• How can we make ethics computable?

• What role should ethical theory play in defining the control architecture for systems sensitive to moral considerations in their choices and actions?
  - Top-down imposition of ethical theory
  - Bottom-up building of systems that aim at goals or standards which may or may not be specified in explicit theoretical terms

Top-Down Theories
• Two main contenders
  - Utilitarian -- Greatest good of the greatest number -- “Only compute!”
  - Duties (Deontology) -- Respect for rational agents -- “Consistent Deontic Logic”
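The contrast between the two top-down contenders can be made concrete with a toy sketch. Everything in it is an illustrative assumption rather than anything from the talk: the `Action` record, the welfare numbers, and the single boolean flag standing in for a violated duty are all hypothetical. The utilitarian chooser simply sums predicted welfare; the deontological chooser first discards any action that violates the duty.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate action; all fields are illustrative."""
    name: str
    welfare_changes: tuple        # predicted welfare change per affected party
    treats_person_as_means: bool  # toy stand-in for a violated duty


def utilitarian_choice(actions):
    """'Only compute': pick the action with the largest summed welfare."""
    return max(actions, key=lambda a: sum(a.welfare_changes))


def deontological_choice(actions):
    """Discard actions that violate the duty, then choose among the rest."""
    permitted = [a for a in actions if not a.treats_person_as_means]
    if not permitted:
        return None  # this sketch does not try to resolve genuine dilemmas
    return max(permitted, key=lambda a: sum(a.welfare_changes))


# Made-up scenario with made-up numbers.
candidates = [
    Action("deceive_patient", (5, 4, -1), True),
    Action("inform_patient", (3, 2, 0), False),
    Action("do_nothing", (0, 0, 0), False),
]

print(utilitarian_choice(candidates).name)    # deceive_patient
print(deontological_choice(candidates).name)  # inform_patient
```

The hard part in practice is not the `max` call but producing the welfare estimates and the duty flags in the first place, which is where the knowledge requirements of both theories come in.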

Frame Problem
Both have a version of the frame problem: computational load due to the requirements of:
• Psychological knowledge
• Knowledge of the effects of actions in the world
• Estimating the sufficiency of initial information

Bottom-Up Approaches
• Evolution
  - Game Theorists and Evolutionary Psychologists
    “Emergent morality” – The emergence of values in evolutionary terms
  - AI Engineering -- exploit “self-organizing” feature of evolved systems
    Alife, Genetic Algorithms, Evolutionary Robotics
• Development and Learning
  - Associative Learning Platforms
  - Behavior-Based Robotics
  - Simulating Moral Development – Piaget, Kohlberg, Gilligan, etc.
  - Fine-Tuning a System
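One familiar illustration of the game-theoretic strand is the iterated prisoner's dilemma, in which a reciprocating strategy can do well without any explicit ethical theory. The sketch below is an assumption-laden toy and not something presented in the talk: the payoff table uses the conventional textbook values, and the strategy functions and `play` helper are hypothetical names chosen for illustration.

```python
# Conventional prisoner's dilemma payoffs, keyed by (my move, their move).
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def tit_for_tat(opponent_history):
    """Cooperate first, then copy the opponent's previous move."""
    return opponent_history[-1] if opponent_history else "C"


def always_defect(opponent_history):
    """Defect regardless of what the opponent has done."""
    return "D"


def play(strategy_a, strategy_b, rounds=10):
    """Return the total payoffs (a, b) over an iterated game."""
    moves_a, moves_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(moves_b)  # each side sees only the other's past moves
        move_b = strategy_b(moves_a)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        moves_a.append(move_a)
        moves_b.append(move_b)
    return score_a, score_b


print(play(tit_for_tat, tit_for_tat))      # (30, 30)
print(play(tit_for_tat, always_defect))    # (9, 14)
print(play(always_defect, always_defect))  # (10, 10)
```

Two reciprocators earn more together than two defectors do, which is the kind of result that motivates talk of values emerging from selection pressures. A genetic algorithm would go one step further and breed the strategies themselves instead of hand-coding them.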

Distinction
• Humans -- Biochemical, Instinctual, Emotion Platform
  - Higher Order Faculties Emerged
• Computers -- Logical Platform

Possible Advantages
• Calculated Morality
  - Does the ability of computers to process large quantities of information, and analyze the potential results from many courses of action, suggest that computers will be superior to humans in making judgments? (Allen, 2002)
• Absence of emotions or base motivations (dubious)
  - Is the absence of a nervous system subject to emotional “hijackings” a moral advantage? (sexual jealousy)
  - Base motivations (greed)

Supra-rational Faculties and Social Mechanisms
• Complex Faculty
• Emotions
• Stoicism vs. Moral Intelligence
• Embodiment
• Learning from Experience
• Sociability
• Understanding and Consciousness
• Theory of Mind

Role of Emotions in Moral Decision-Making Machines
• Both beneficial and dysfunctional
  - AMAs can be reasonable without being subject to dysfunctional emotional hijackings
  - Will require some affective intelligence
    Ability to recognize emotional states of others
• Will humans feel comfortable with machines sensitive to their emotional states?

Sociability
• Appreciation for both the verbal and non-verbal aspects of human communication.
• May be necessary for the acceptance of AMAs by humans.

Embodied Intelligence v Moral Reasoning
• A robotic system learning from interaction with its environment and humans, not the mere application of reasoning.

Consciousness, Theory of Mind
• Breaking down complex mental activities or social mechanisms into faculties which are themselves composites of lower-level skills
  - Brian Scassellati – Theory of Mind
  - Igor Aleksander – Five Axioms – Consciousness/Machine Consciousness
• Reassembly
  - Do we have working theories?
  - Will advances in evolutionary robotics facilitate integration?

Cooperation
• Between AMAs and humans.
• Training agents to work together in pursuit of a shared goal, e.g. RoboSoccer
• The development of interface standards that will allow agents to interact and cooperate with other classes of artificial entities.

Facilitating Trust in Machines
• Will artificial agents need to emulate the full array of human faculties to function as adequate moral agents and to instill trust in their actions?

WHAT HAS BEEN ENGINEERED SO FAR
• Not Much
• The Gap Between Possibility or Hype and Reality

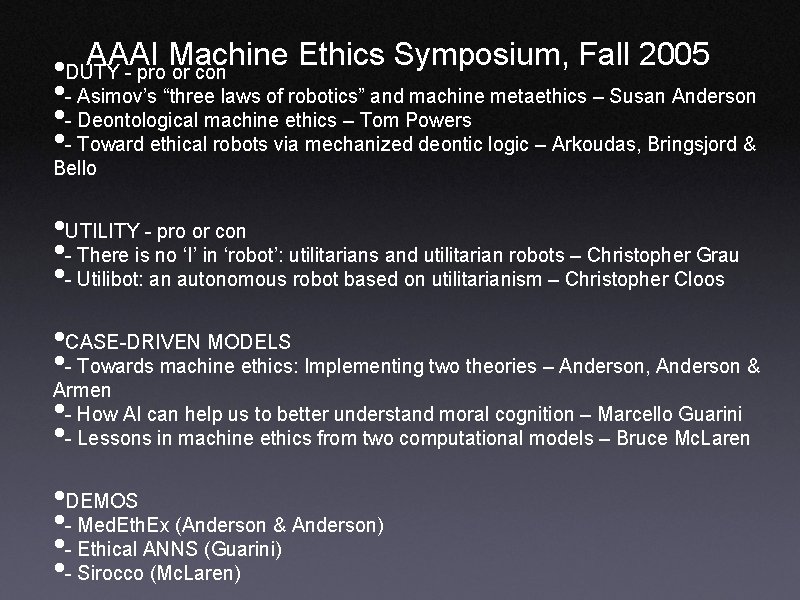

AAAI Machine Ethics Symposium, Fall 2005
• DUTY - pro or con
  - Asimov’s “three laws of robotics” and machine metaethics – Susan Anderson
  - Deontological machine ethics – Tom Powers
  - Toward ethical robots via mechanized deontic logic – Arkoudas, Bringsjord & Bello
• UTILITY - pro or con
  - There is no ‘I’ in ‘robot’: utilitarians and utilitarian robots – Christopher Grau
  - Utilibot: an autonomous robot based on utilitarianism – Christopher Cloos
• CASE-DRIVEN MODELS
  - Towards machine ethics: Implementing two theories – Anderson, Anderson & Armen
  - How AI can help us to better understand moral cognition – Marcello Guarini
  - Lessons in machine ethics from two computational models – Bruce McLaren
• DEMOS
  - MedEthEx (Anderson & Anderson)
  - Ethical ANNS (Guarini)
  - Sirocco (McLaren)

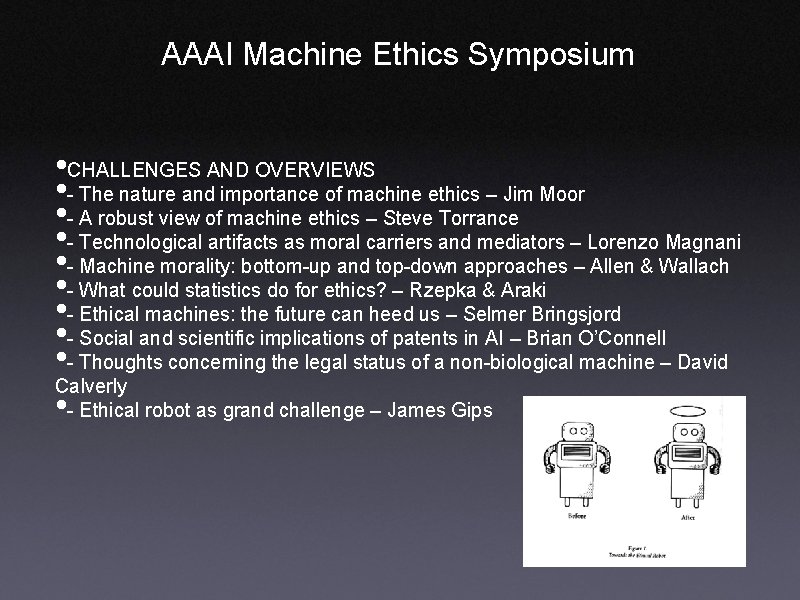

AAAI Machine Ethics Symposium
• CHALLENGES AND OVERVIEWS
  - The nature and importance of machine ethics – Jim Moor
  - A robust view of machine ethics – Steve Torrance
  - Technological artifacts as moral carriers and mediators – Lorenzo Magnani
  - Machine morality: bottom-up and top-down approaches – Allen & Wallach
  - What could statistics do for ethics? – Rzepka & Araki
  - Ethical machines: the future can heed us – Selmer Bringsjord
  - Social and scientific implications of patents in AI – Brian O’Connell
  - Thoughts concerning the legal status of a non-biological machine – David Calverley
  - Ethical robot as grand challenge – James Gips

Other Key Players
• “Beyond AI: Creating the Conscience of the Machine” -- Josh Storrs Hall
• “Artificial Morality” -- Peter Danielson
• Friendly AI -- Eliezer Yudkowsky
• Moral Agency for Mindless Machines -- Luciano Floridi and J. W. Sanders
• Agents for Ethical Assistance -- Catriona Kennedy
• Thousands of additional researchers

LIDA Cognitive Cycle
Stan Franklin & Bernie Baars (GWT)

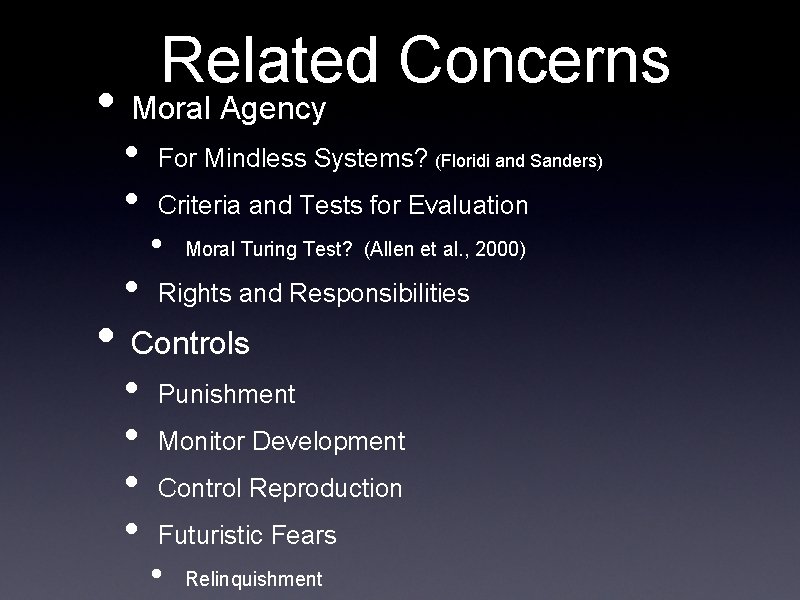

Related Concerns
• Moral Agency
  - For Mindless Systems? (Floridi and Sanders)
  - Criteria and Tests for Evaluation
    Moral Turing Test? (Allen et al., 2000)
  - Rights and Responsibilities
• Controls
  - Punishment
  - Monitor Development
  - Control Reproduction
• Futuristic Fears
  - Relinquishment

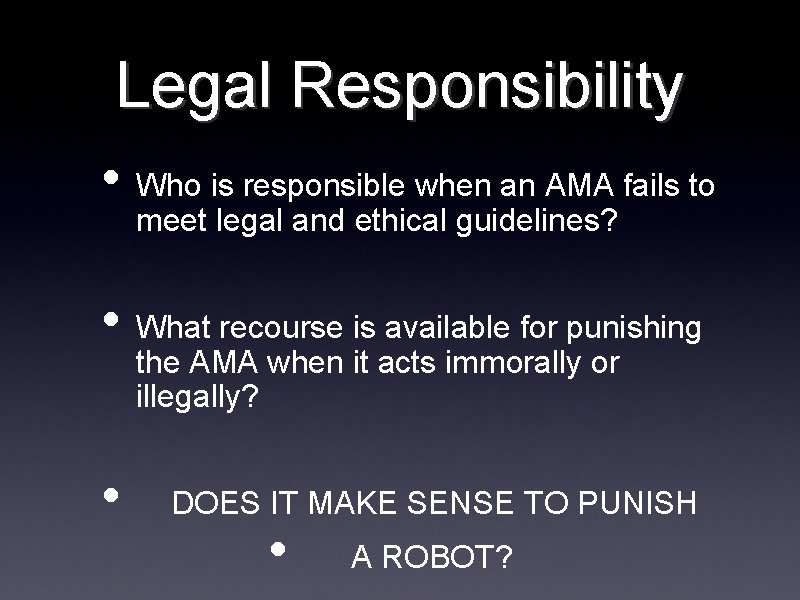

Legal Responsibility
• Who is responsible when an AMA fails to meet legal and ethical guidelines?
• What recourse is available for punishing the AMA when it acts immorally or illegally?
• DOES IT MAKE SENSE TO PUNISH A ROBOT?

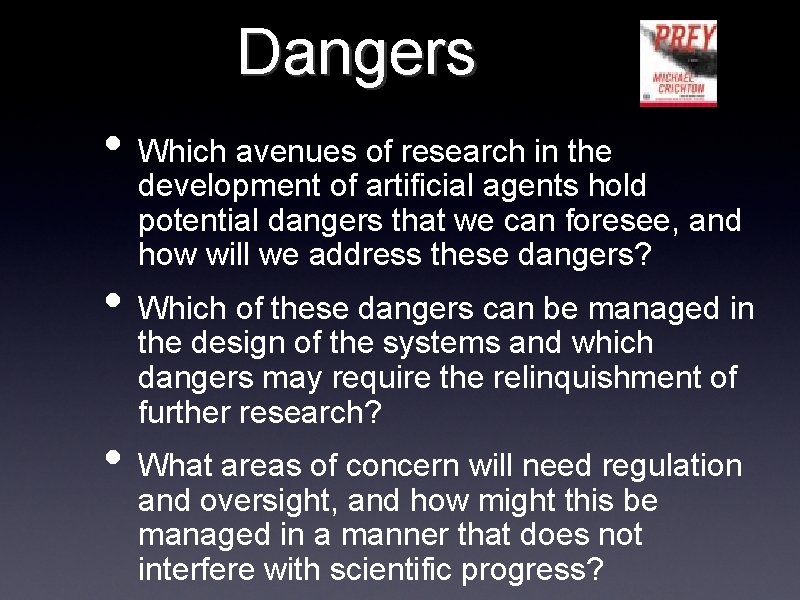

Dangers
• Which avenues of research in the development of artificial agents hold potential dangers that we can foresee, and how will we address these dangers?
• Which of these dangers can be managed in the design of the systems and which dangers may require the relinquishment of further research?
• What areas of concern will need regulation and oversight, and how might this be managed in a manner that does not interfere with scientific progress?

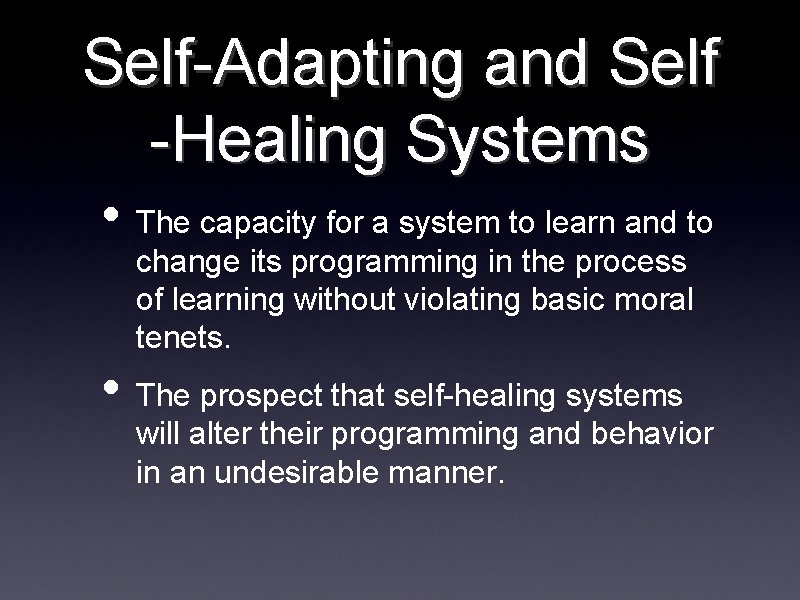

Self-Adapting and Self-Healing Systems
• The capacity for a system to learn and to change its programming in the process of learning without violating basic moral tenets.
• The prospect that self-healing systems will alter their programming and behavior in an undesirable manner.

Design Strategies for Restraining AMAs
• Design strategies for building-in restraints on the behavior of artificial agents.
• Limitations on the reliability and safety of existing design strategies.

• If there are clear limits in our ability to develop or manage AMAs, then it will be incumbent upon us to recognize those limits so that we can turn our attention away from a false reliance on autonomous systems and toward more human intervention in the decision-making process of computers and robots.

Hybrids
• Eventually we will need AMAs which maintain the dynamic and flexible morality of bottom-up systems that accommodate diverse inputs, while subjecting the evaluation of choices and actions to top-down principles that represent ideals we strive to meet.
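A minimal sketch of what such a hybrid might look like, under heavy assumptions: the bottom-up part is reduced to a running average of feedback per action, and the top-down part to a list of predicates that every chosen action must satisfy. The `HybridAgent` class, the `no_deception` principle, and the action names are hypothetical, introduced only for illustration.

```python
from collections import defaultdict


class HybridAgent:
    """Toy hybrid: learned action values, vetted by explicit principles."""

    def __init__(self, principles):
        self.principles = principles      # top-down: predicates over actions
        self.totals = defaultdict(float)  # bottom-up: summed feedback per action
        self.counts = defaultdict(int)

    def learn(self, action, feedback):
        """Record one piece of feedback for an action (bottom-up learning)."""
        self.totals[action] += feedback
        self.counts[action] += 1

    def learned_value(self, action):
        """Average feedback seen so far; 0.0 for actions never tried."""
        n = self.counts[action]
        return self.totals[action] / n if n else 0.0

    def choose(self, actions):
        """Pick the best-valued action among those every principle permits."""
        permitted = [a for a in actions if all(p(a) for p in self.principles)]
        if not permitted:
            return None  # the sketch has no fallback when nothing is permitted
        return max(permitted, key=self.learned_value)


def no_deception(action):
    """Hypothetical top-down principle: never pick the deceptive action."""
    return action != "mislead_user"


agent = HybridAgent([no_deception])
agent.learn("mislead_user", 1.0)  # experience rewards deception most...
agent.learn("explain_risk", 0.6)
agent.learn("stay_silent", 0.2)

# ...but the principle vetoes it, so the next-best learned action is chosen.
print(agent.choose(["mislead_user", "explain_risk", "stay_silent"]))  # explain_risk
```

The design choice here is that principles act as hard filters over learned preferences. Other arrangements, such as weighting principles against learned values, are equally consistent with the slide.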

Thanks To My Colleagues
• Professor Colin Allen, Philosophy Dept., University of Indiana
• Dr. Iva Smit, E&E Consultants, Netterden, Netherlands