
Artificial Intelligence: Representation and Problem Solving
Sequential Decision Making (3): Passive Reinforcement Learning
15-381 / 681
Instructors: Fei Fang (This Lecture) and Dave Touretzky
feifang@cmu.edu
Wean Hall 4126

Recap
- You know exactly how the world works!

Fei Fang 6/18/2021

Outline
- What is Reinforcement Learning
- Passive RL
  - Model-based Passive RL
  - Model-free Passive RL
    - Direct Utility Estimation
    - Temporal Difference Learning

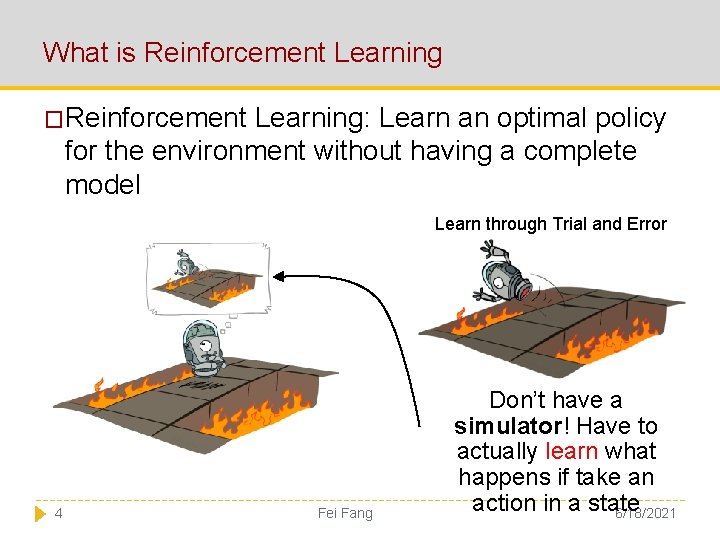

What is Reinforcement Learning
- Reinforcement Learning: learn an optimal policy for the environment without having a complete model
- Learn through trial and error
- Don't have a simulator! The agent has to actually learn what happens if it takes an action in a state


What is Reinforcement Learning
- The agent can "sense" the environment (it knows the state) and has goals
- Learning from interaction with the environment
- Trial-and-error search
- (Delayed) rewards (advisory signals ≠ error signals)
- What actions to take
- Exploration-exploitation dilemma

RL Applications / Examples
- Bipedal robot learning to walk
- Helicopter manoeuvres
- Learning to play Atari games


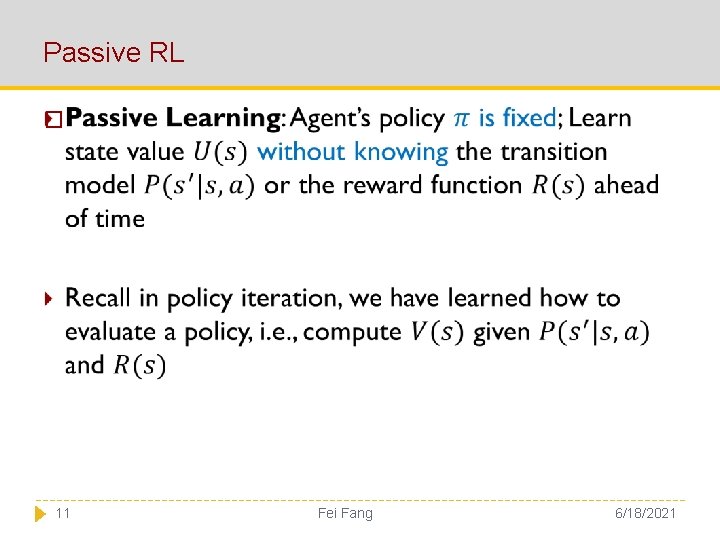

Passive RL

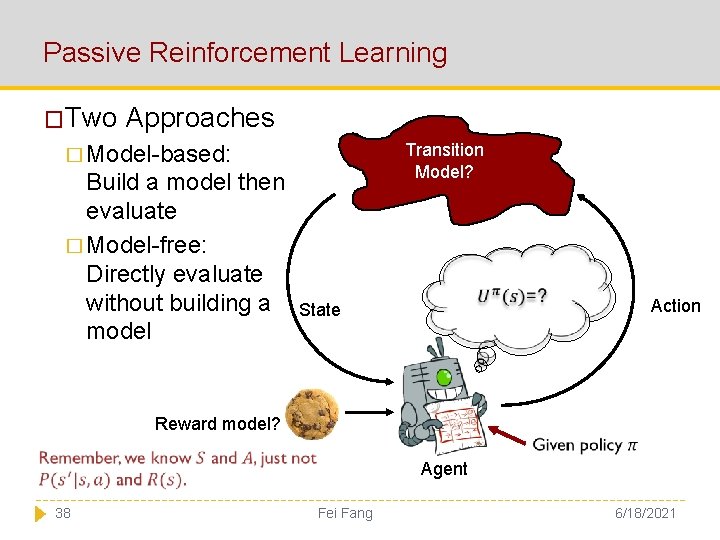

Passive Reinforcement Learning
- Two approaches
  - Model-based: build a model, then evaluate
  - Model-free: directly evaluate without building a model

(Diagram labels: Agent, State, Action; Transition model? Reward model? P(s’|a, s) = ?, R(s) = ?, …)


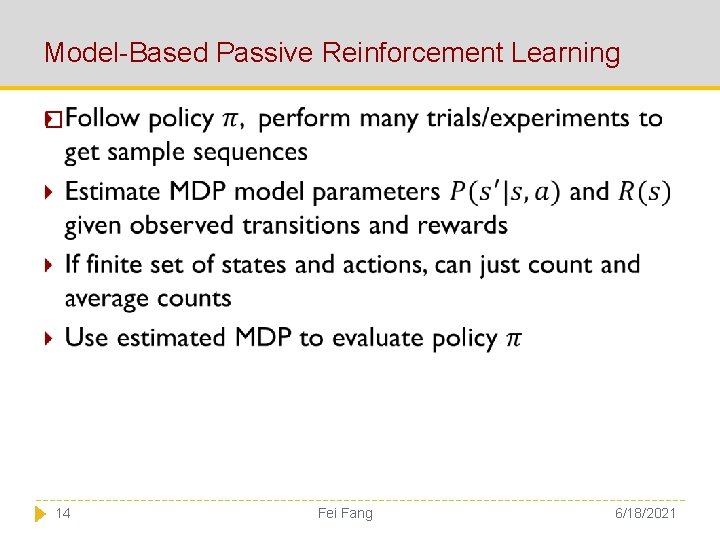

Model-Based Passive Reinforcement Learning

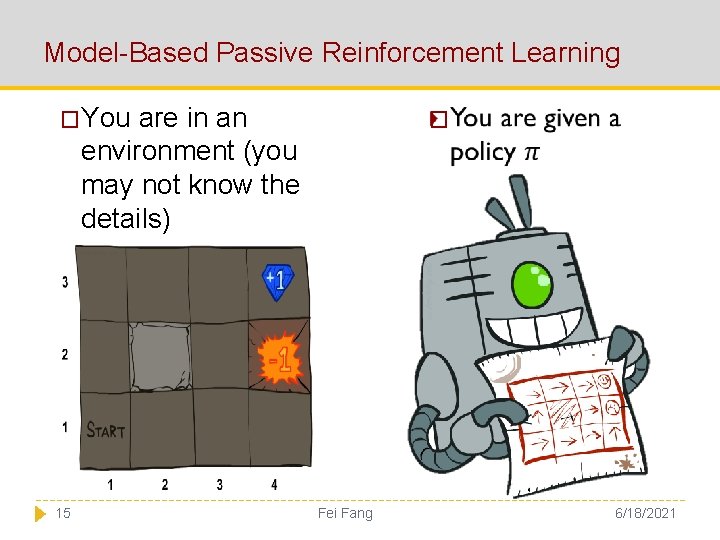

Model-Based Passive Reinforcement Learning
- You are in an environment (you may not know the details)
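
To make the setting concrete, the following is a minimal sketch of how trials might be collected in passive RL: the agent follows a fixed policy and records what it observes, without access to the transition model. The corridor environment, its slip probability, the rewards, and the names `step`, `run_trial`, and `POLICY` are all hypothetical and not taken from the lecture.

```python
import random

# Hypothetical 1-D corridor: states 0..3, state 3 is terminal.
# The agent does NOT see SLIP_PROB; it only observes sampled outcomes.
TERMINAL = 3
SLIP_PROB = 0.2        # assumed chance that a move fails and the agent stays put
STEP_REWARD = -0.01    # assumed reward in every non-terminal state
GOAL_REWARD = 1.0      # assumed reward in the terminal state

POLICY = {0: "right", 1: "right", 2: "right"}  # fixed policy to be evaluated


def reward(state):
    return GOAL_REWARD if state == TERMINAL else STEP_REWARD


def step(state, action, rng):
    """Environment dynamics, hidden from the agent."""
    if rng.random() < SLIP_PROB:
        return state  # tried but didn't move
    return state + 1 if action == "right" else max(state - 1, 0)


def run_trial(rng, start=0):
    """Follow POLICY from `start` until the terminal state is reached.

    Returns a list of (state, reward, action) tuples; the action is None
    in the terminal state.
    """
    trial, state = [], start
    while state != TERMINAL:
        action = POLICY[state]
        trial.append((state, reward(state), action))
        state = step(state, action, rng)
    trial.append((state, reward(state), None))
    return trial


rng = random.Random(0)
trials = [run_trial(rng) for _ in range(3)]
for t in trials:
    print([s for s, _, _ in t])  # sequence of visited states
```

A repeated state in a printed sequence corresponds to an action that was tried but did not move the agent.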

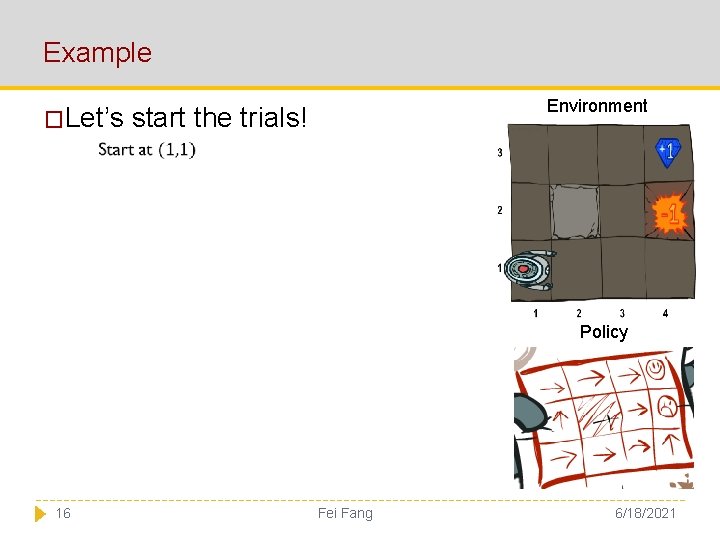

Example
- Let's start the trials!

(Figure panels: Environment, Policy)

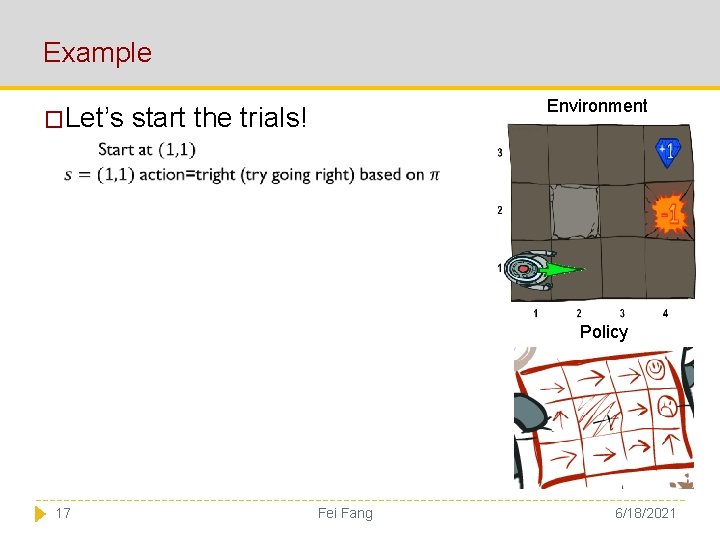

Example �Let’s Environment start the trials! Policy 17 Fei Fang 6/18/2021

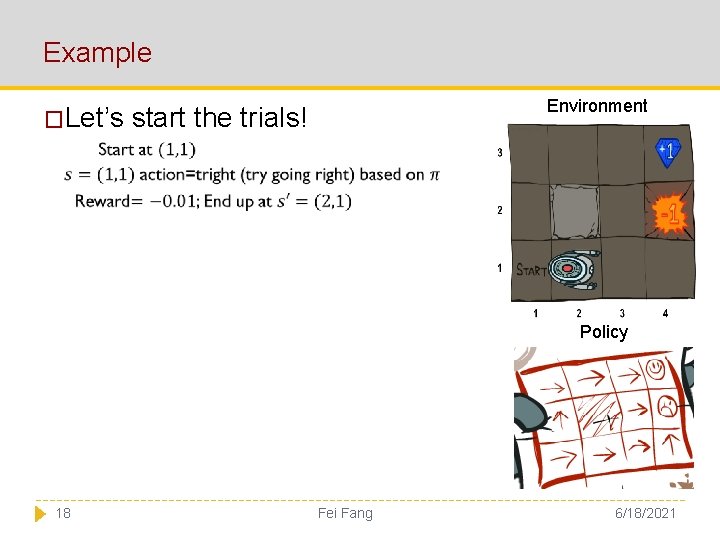

Example �Let’s Environment start the trials! Policy 18 Fei Fang 6/18/2021

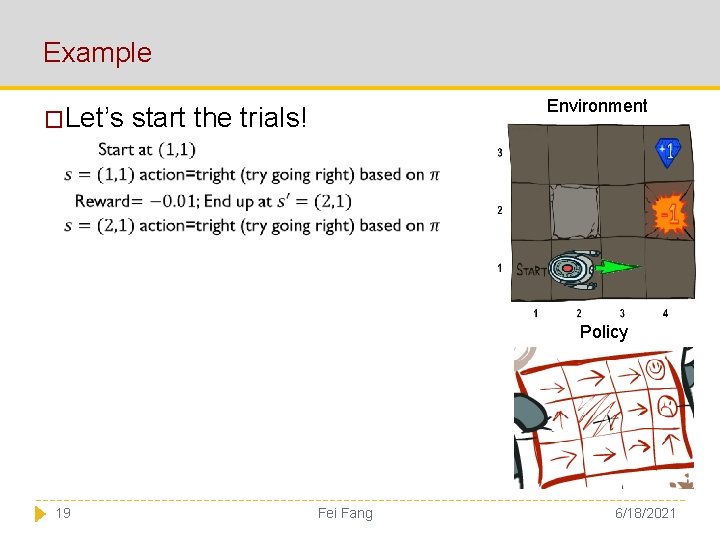

Example �Let’s Environment start the trials! Policy 19 Fei Fang 6/18/2021

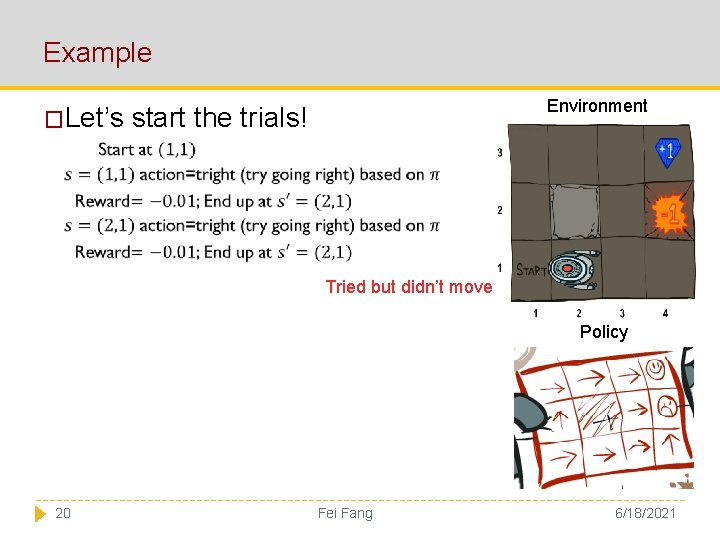

Example
- Tried but didn't move

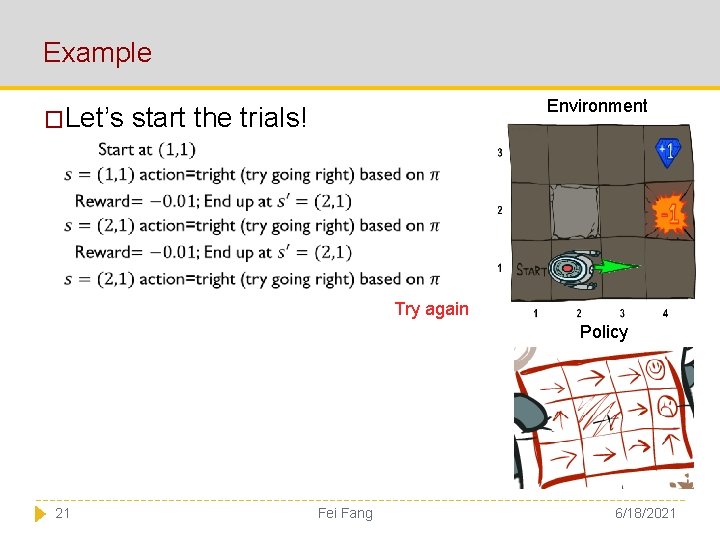

Example
- Try again

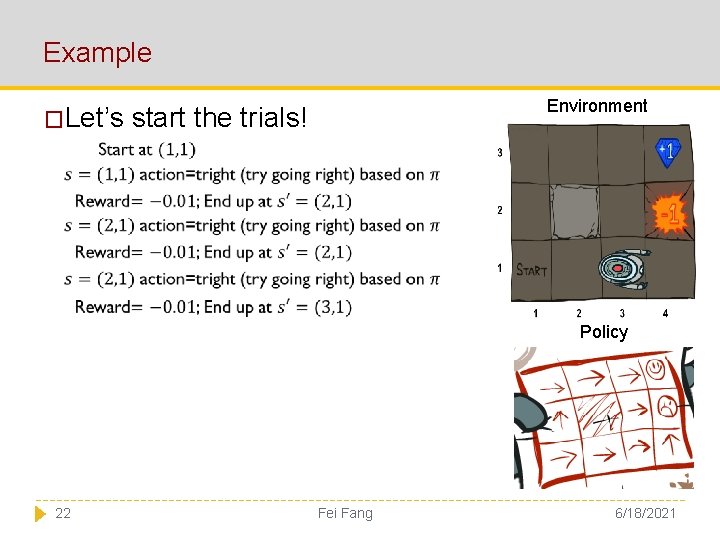

Example �Let’s Environment start the trials! Policy 22 Fei Fang 6/18/2021

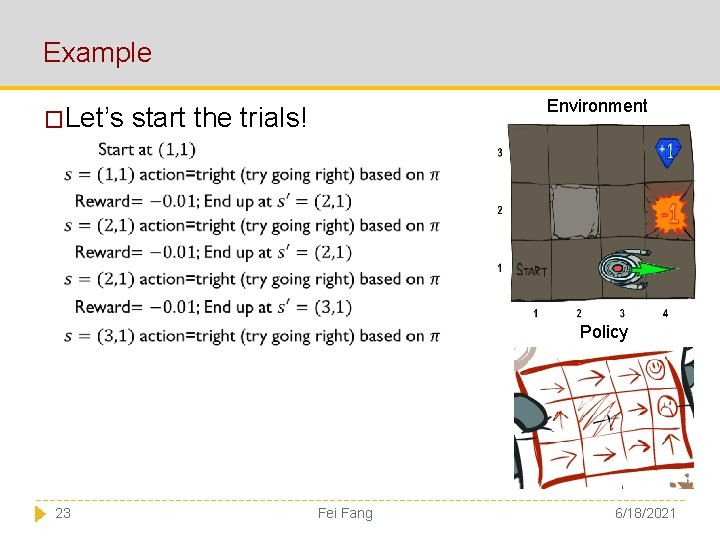

Example �Let’s Environment start the trials! Policy 23 Fei Fang 6/18/2021

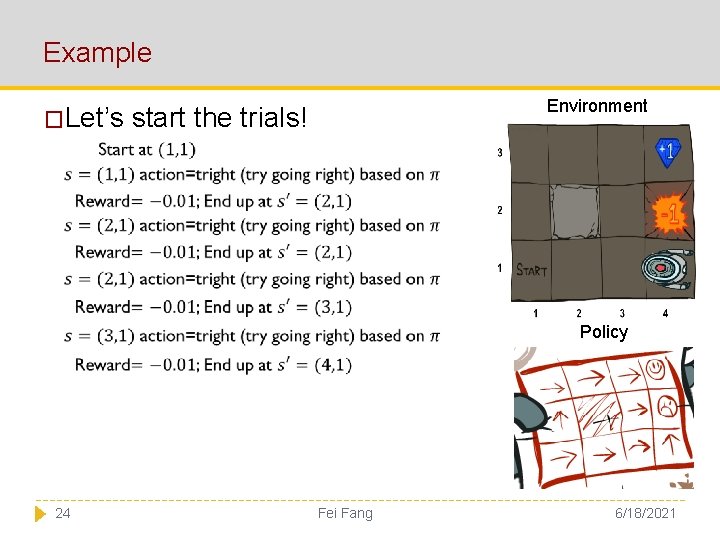

Example �Let’s Environment start the trials! Policy 24 Fei Fang 6/18/2021

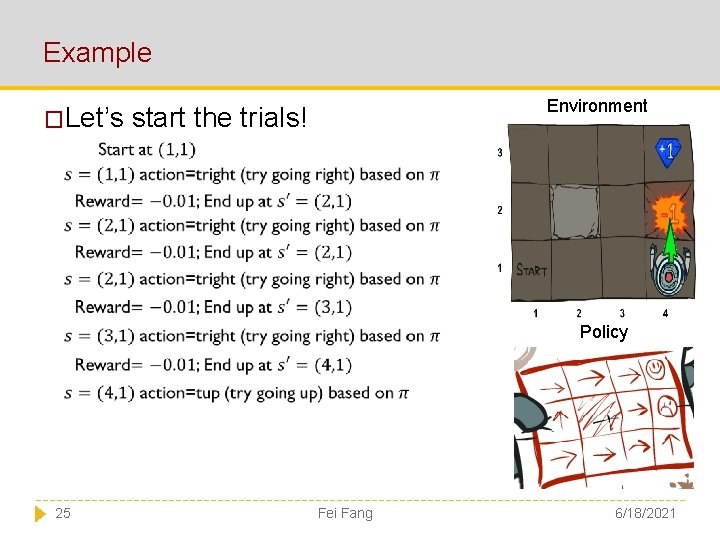

Example �Let’s Environment start the trials! Policy 25 Fei Fang 6/18/2021

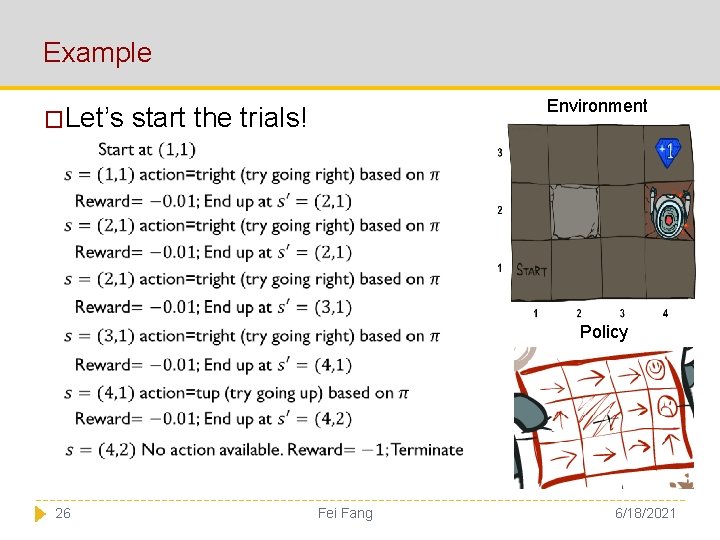

Example �Let’s Environment start the trials! Policy 26 Fei Fang 6/18/2021

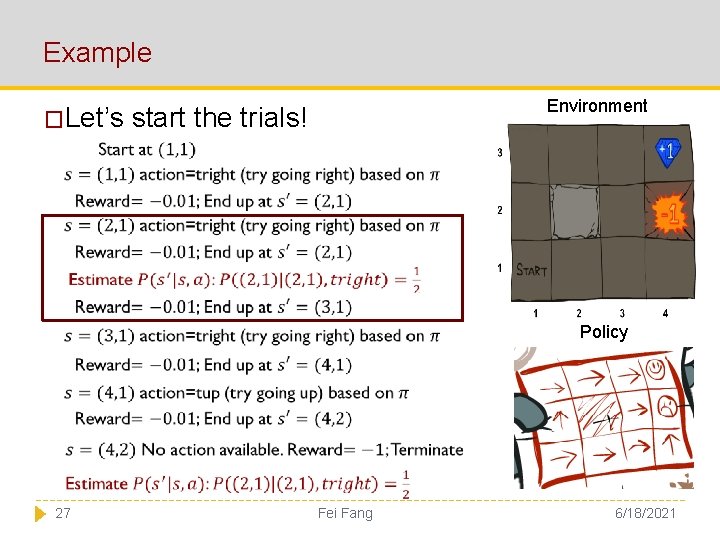

Example �Let’s Environment start the trials! Policy 27 Fei Fang 6/18/2021

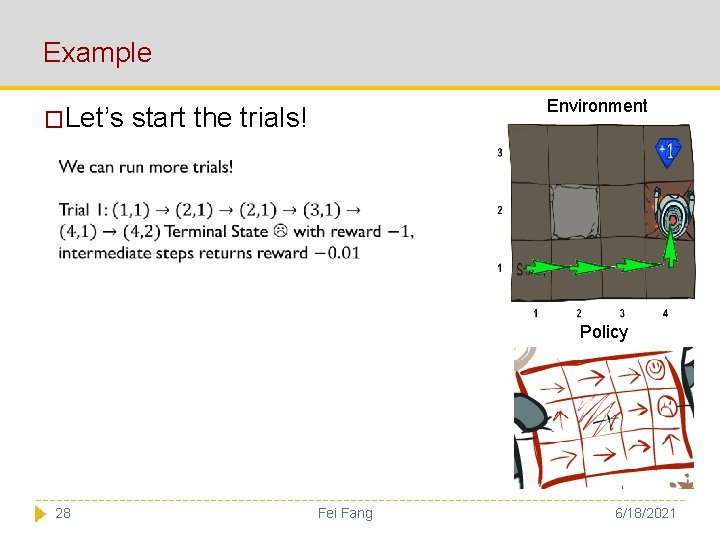

Example �Let’s Environment start the trials! Policy 28 Fei Fang 6/18/2021

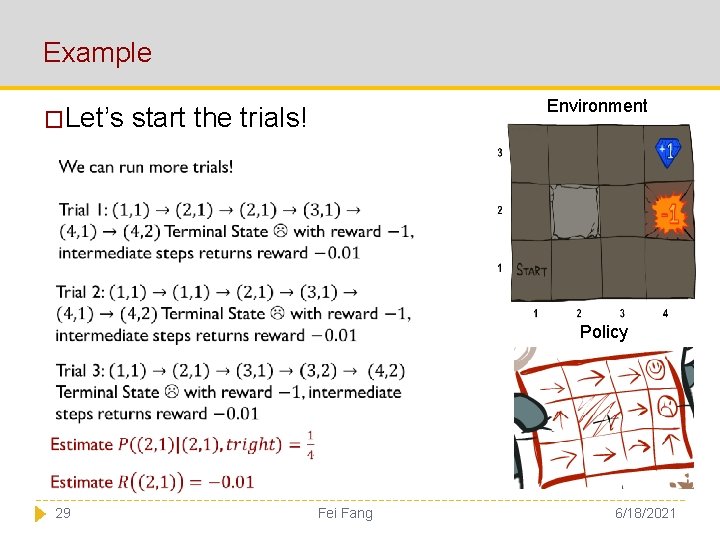

Example �Let’s Environment start the trials! Policy 29 Fei Fang 6/18/2021

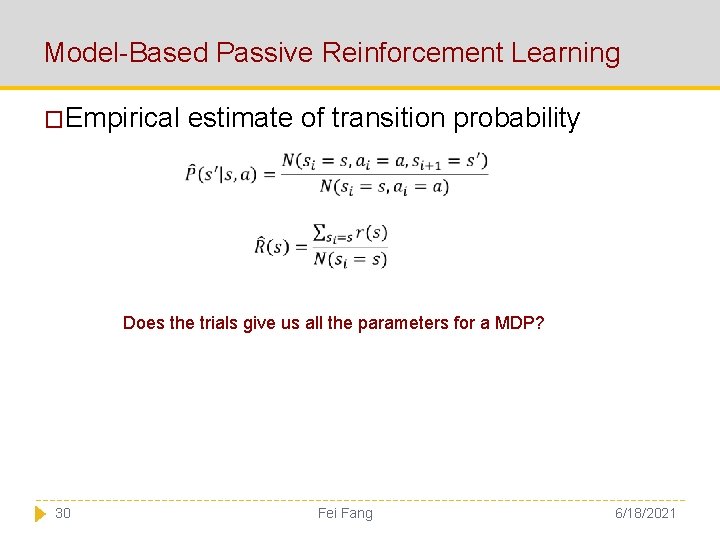

Model-Based Passive Reinforcement Learning
- Empirical estimate of transition probability
- Do the trials give us all the parameters of an MDP?
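
One way to read "empirical estimate of transition probability" is as a count-based estimate, P̂(s' | s, a) = N(s, a, s') / N(s, a). Below is a minimal sketch, assuming each trial is stored as a list of (state, action, next_state) transitions. The data layout, the function name `estimate_transitions`, and the toy trials are illustrative assumptions, not the lecture's.

```python
from collections import Counter, defaultdict


def estimate_transitions(trials):
    """Count-based estimate: P_hat[(s, a)][s_next] = N(s, a, s_next) / N(s, a)."""
    pair_counts = Counter()               # N(s, a)
    triple_counts = defaultdict(Counter)  # N(s, a, s_next)
    for trial in trials:
        for s, a, s_next in trial:
            pair_counts[(s, a)] += 1
            triple_counts[(s, a)][s_next] += 1
    return {
        sa: {s_next: n / pair_counts[sa] for s_next, n in nexts.items()}
        for sa, nexts in triple_counts.items()
    }


# Toy data (made up): grid cells written as (column, row) tuples.
trials = [
    [((1, 1), "up", (1, 2)), ((1, 2), "up", (1, 3)), ((1, 3), "right", (2, 3))],
    [((1, 1), "up", (1, 1)), ((1, 1), "up", (1, 2)), ((1, 2), "up", (1, 3))],
]
p_hat = estimate_transitions(trials)
print(p_hat[((1, 1), "up")])
```

State-action pairs that never occur in the trials get no entry at all, which is one way to see why the trials may not pin down every parameter of the MDP.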

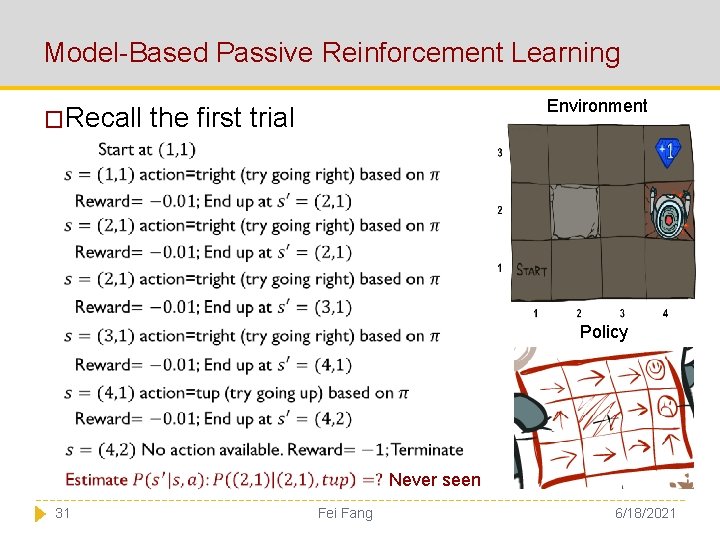

Model-Based Passive Reinforcement Learning
- Recall the first trial

(Figure panels: Environment, Policy; annotation: Never seen)

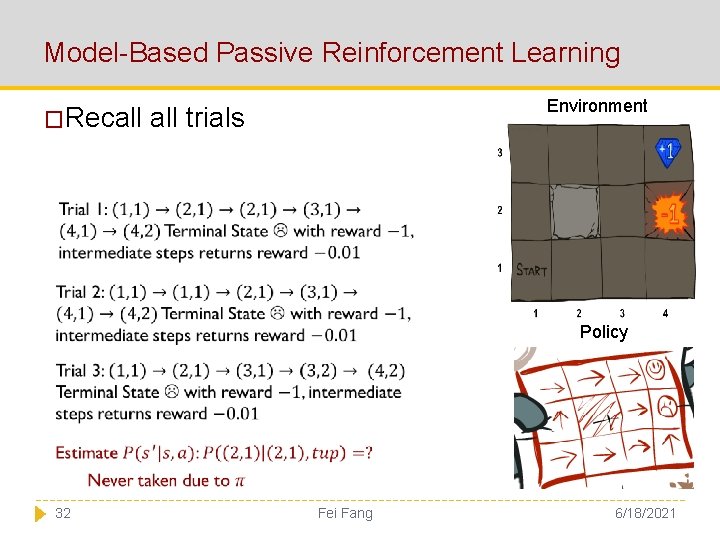

Model-Based Passive Reinforcement Learning
- Recall all trials

(Figure panels: Environment, Policy)

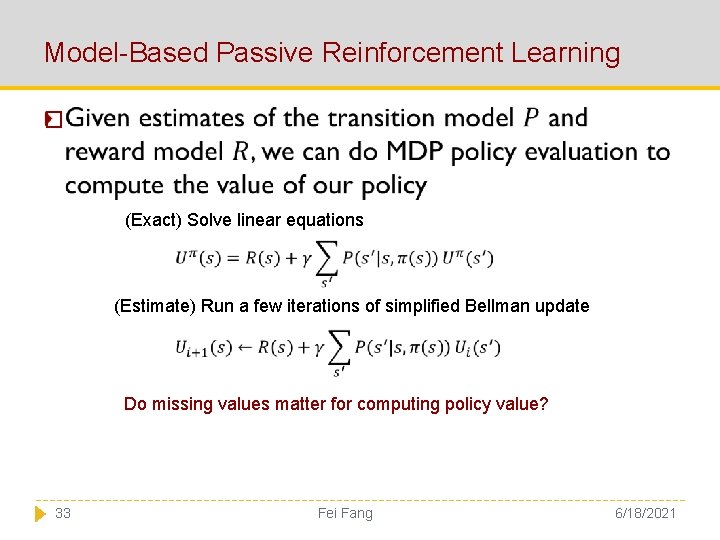

Model-Based Passive Reinforcement Learning
- (Exact) Solve linear equations
- (Estimate) Run a few iterations of the simplified Bellman update
- Do missing values matter for computing the policy value?
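
Once a model has been estimated, the policy can be evaluated on it. The sketch below illustrates the "(Estimate)" option, assuming the simplified Bellman update has the usual fixed-policy form U(s) ← R(s) + γ Σ_{s'} P(s' | s, π(s)) U(s'). The extracted slide does not show the equation, so this form, the discount γ, and the toy model are assumptions.

```python
def evaluate_policy(p_pi, rewards, gamma=1.0, iterations=50):
    """Iterate U(s) <- R(s) + gamma * sum_s' P(s' | s, pi(s)) * U(s').

    p_pi[s] maps each successor s' to its (estimated) probability under the
    policy's action in s; terminal states map to an empty dict.
    """
    u = {s: 0.0 for s in rewards}
    for _ in range(iterations):
        # Synchronous update: the new table is built entirely from the old one.
        u = {
            s: rewards[s] + gamma * sum(p * u[s2] for s2, p in p_pi[s].items())
            for s in rewards
        }
    return u


# Toy estimated model (made up): A -> B -> GOAL, with a 50% chance of staying in A.
p_pi = {"A": {"A": 0.5, "B": 0.5}, "B": {"GOAL": 1.0}, "GOAL": {}}
rewards = {"A": -0.01, "B": -0.01, "GOAL": 1.0}
u = evaluate_policy(p_pi, rewards)
print(u)
```

The "(Exact)" option would instead treat the same equations as a linear system in the unknowns U(s) and solve it directly. This sketch assumes every successor state appears in `rewards`; states missing from the estimated model would need separate handling.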

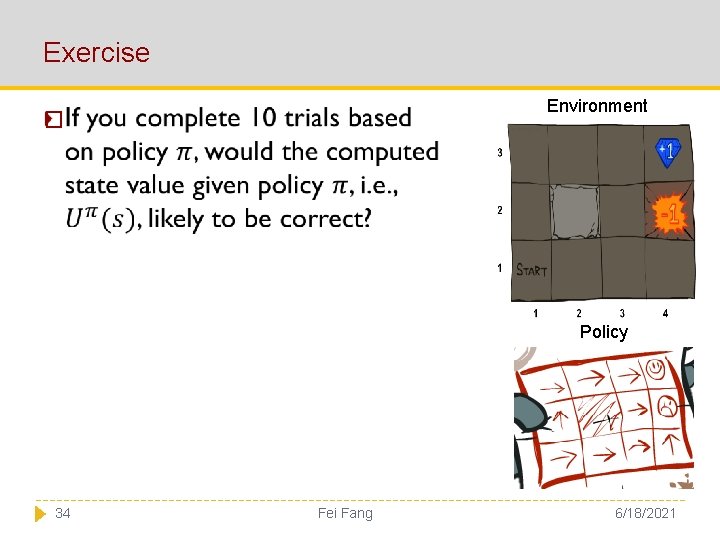

Exercise

(Figure panels: Environment, Policy)

Model-Based Passive Reinforcement Learning
- Advantage: makes good use of data
- Disadvantage: requires building the actual MDP model, which is intractable if the state space is too large


Passive Reinforcement Learning
- Two approaches
  - Model-based: build a model, then evaluate
  - Model-free: directly evaluate without building a model

(Diagram labels: Agent, State, Action; Transition model? Reward model?)

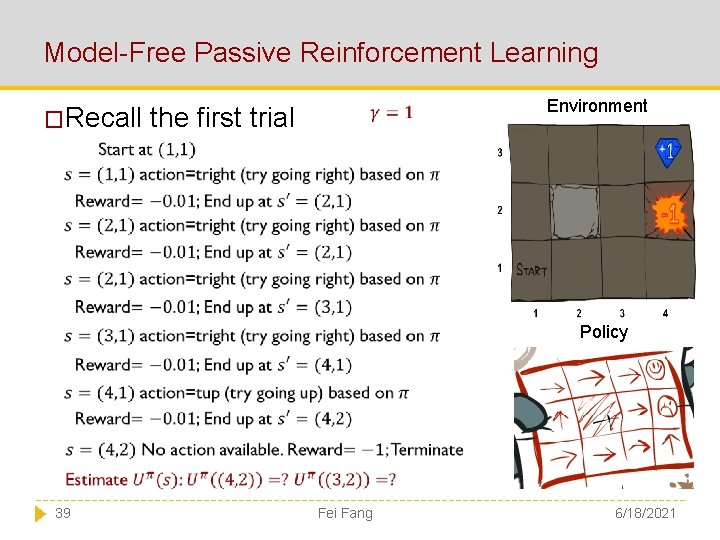

Model-Free Passive Reinforcement Learning
- Recall the first trial

(Figure panels: Environment, Policy)


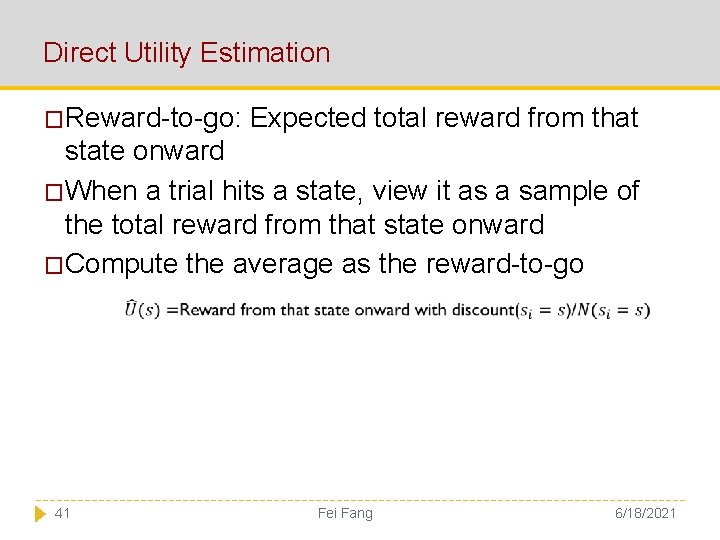

Direct Utility Estimation
- Reward-to-go: expected total reward from that state onward
- When a trial hits a state, view it as a sample of the total reward from that state onward
- Average the samples to estimate the reward-to-go
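
The procedure described above can be sketched as follows, assuming each trial is a list of (state, reward) pairs, an undiscounted sum (γ = 1 by default), and that every visit to a state contributes one sample. The slide text does not say whether repeated visits within a trial are all counted, so that choice is an assumption, as are the function name and the toy data.

```python
from collections import defaultdict


def direct_utility_estimation(trials, gamma=1.0):
    """Average the observed reward-to-go over every visit to each state."""
    samples = defaultdict(list)
    for trial in trials:
        reward_to_go = 0.0
        # Walk backwards so each reward-to-go is built from the one after it.
        for state, reward in reversed(trial):
            reward_to_go = reward + gamma * reward_to_go
            samples[state].append(reward_to_go)
    return {s: sum(v) / len(v) for s, v in samples.items()}


# Toy trials (made up): (state, reward) pairs ending in a terminal state.
trials = [
    [("A", -1.0), ("B", -1.0), ("GOAL", 10.0)],
    [("A", -1.0), ("A", -1.0), ("B", -1.0), ("GOAL", 10.0)],
]
u = direct_utility_estimation(trials)
print(u)
```

Here state A is visited three times with reward-to-go samples 8, 7, and 8, so its estimate is their mean.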

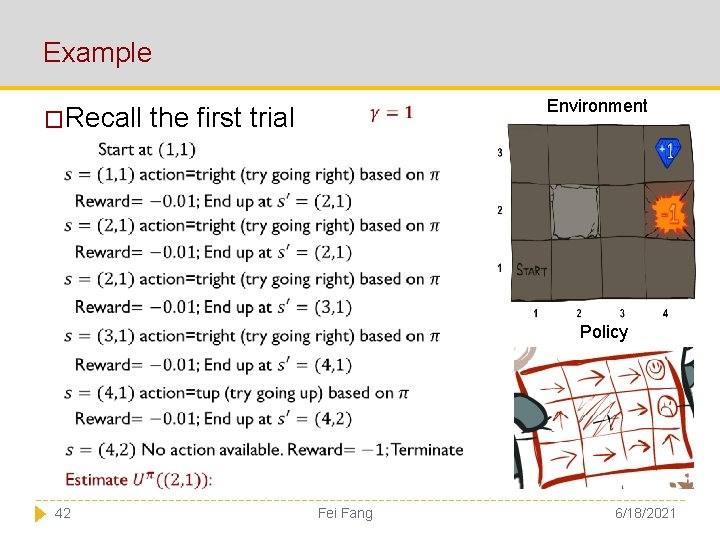

Example
- Recall the first trial

(Figure panels: Environment, Policy)

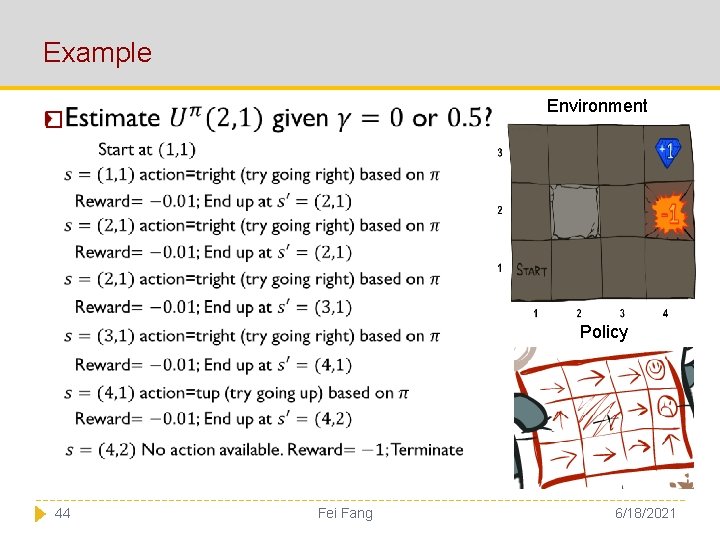

Example

(Figure panels: Environment, Policy)

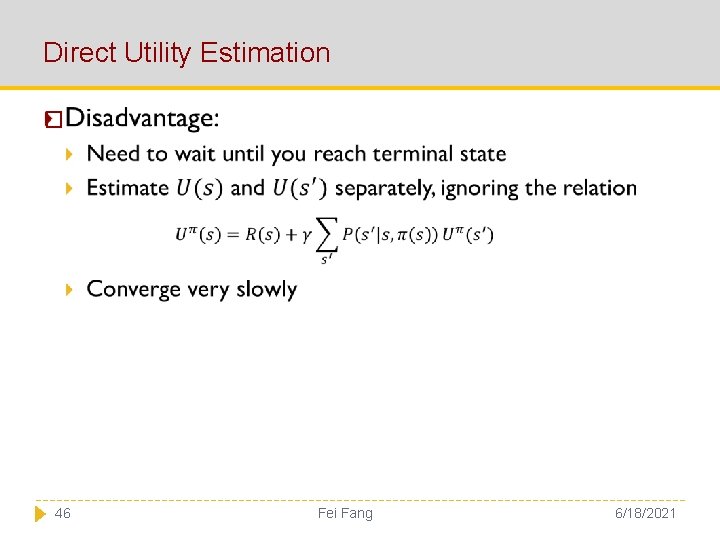

Direct Utility Estimation


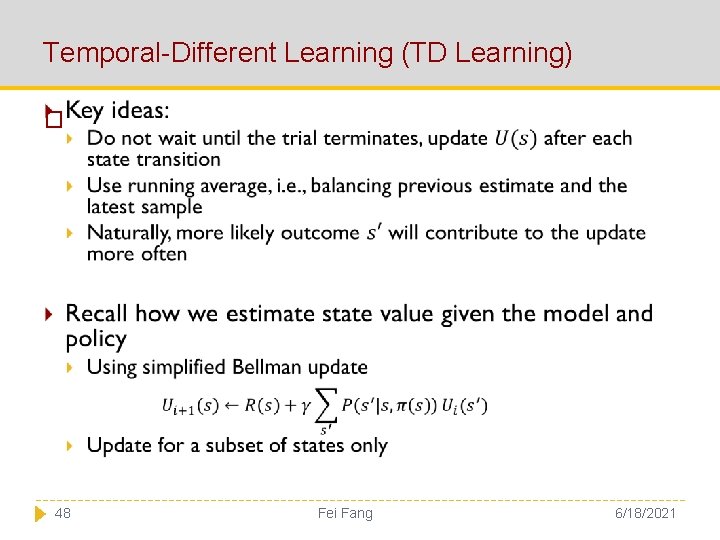

Temporal-Difference Learning (TD Learning)

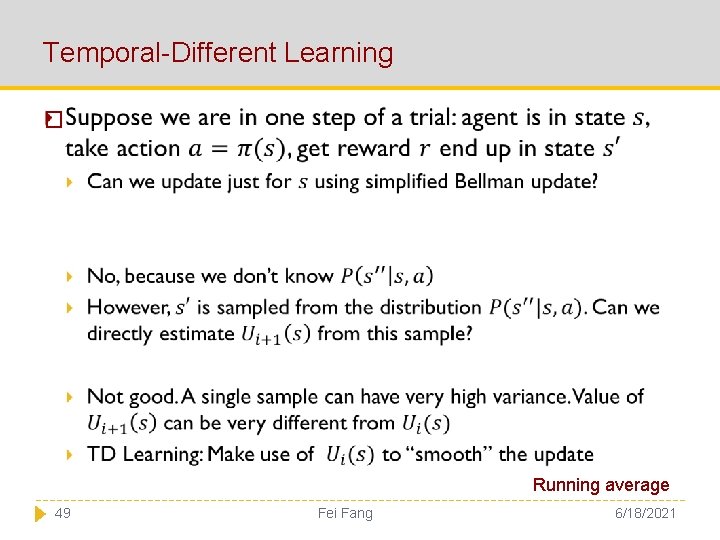

Temporal-Difference Learning
- Running average

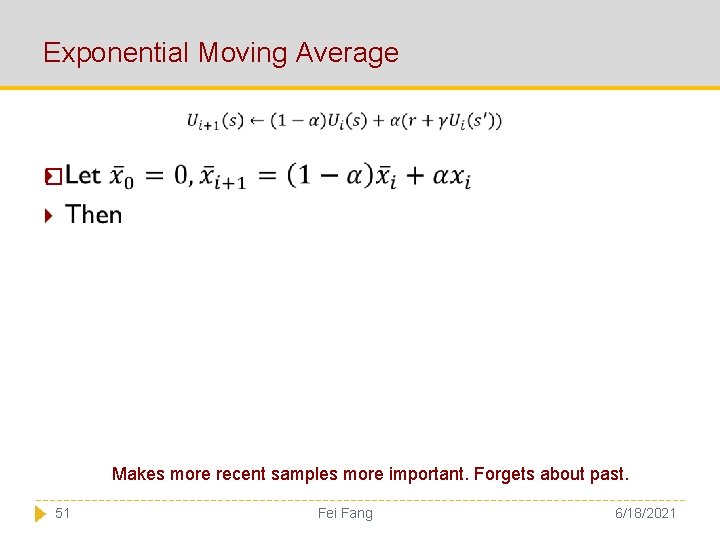

Exponential Moving Average
- Makes more recent samples more important; forgets about the past.
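
A small sketch contrasting the two averages, assuming the incremental forms x̄_n = x̄_{n-1} + (1/n)(x_n − x̄_{n-1}) for the running average and x̄_n = (1 − α) x̄_{n-1} + α x_n for the exponential moving average. The slide's own formulas were not preserved in the text, and α = 0.5 and the sample values are arbitrary choices for illustration.

```python
def running_average(samples):
    """Equal-weight mean, updated one sample at a time."""
    mean = 0.0
    for n, x in enumerate(samples, start=1):
        mean += (x - mean) / n
    return mean


def exponential_moving_average(samples, alpha, initial=0.0):
    """Fixed step size: a sample seen k steps ago has weight alpha * (1 - alpha)**k."""
    mean = initial
    for x in samples:
        mean = (1 - alpha) * mean + alpha * x
    return mean


samples = [0.0, 0.0, 0.0, 8.0]  # the most recent sample differs from the rest
print(running_average(samples))                        # 2.0
print(exponential_moving_average(samples, alpha=0.5))  # 4.0
```

The exponential moving average ends closer to the latest sample because older samples are discounted geometrically rather than weighted equally.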

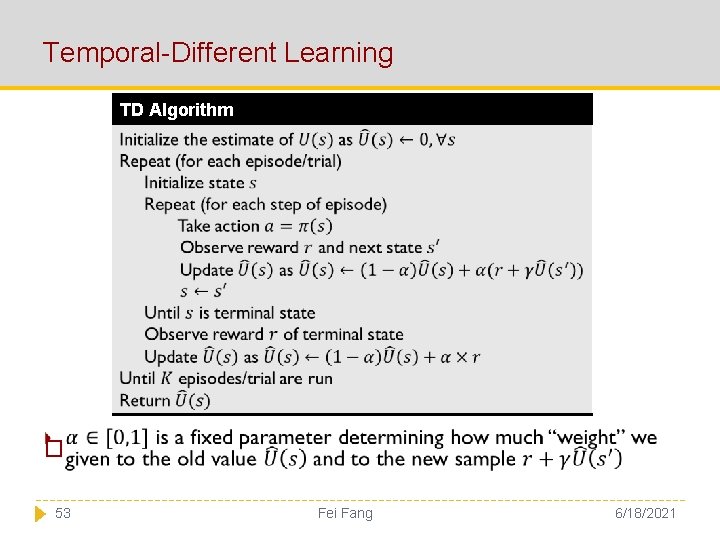

Temporal-Difference Learning
- TD Algorithm
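
A minimal sketch of passive TD learning, assuming the standard TD(0) update U(s) ← U(s) + α (R(s) + γ U(s') − U(s)) applied after each observed transition. The algorithm on the slide is not present in the extracted text, so the update form, the fixed α, the terminal-state handling, and the toy trial are assumptions.

```python
from collections import defaultdict


def td_policy_evaluation(trials, alpha=0.1, gamma=1.0):
    """Passive TD(0): nudge U(s) toward R(s) + gamma * U(s') after each transition."""
    u = defaultdict(float)  # unseen states start at 0
    for trial in trials:
        for (s, r), (s_next, _) in zip(trial, trial[1:]):
            u[s] += alpha * (r + gamma * u[s_next] - u[s])
        # Assumed convention: a terminal state's utility is its own reward.
        last_state, last_reward = trial[-1]
        u[last_state] = last_reward
    return dict(u)


# Toy trial (made up): (state, reward) pairs, repeated to show gradual convergence.
trial = [("A", -0.01), ("B", -0.01), ("GOAL", 1.0)]
u = td_policy_evaluation([trial] * 200)
print(u)
```

Unlike the model-based approach, no transition table is built: each update uses only the observed state, its reward, and the observed successor.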

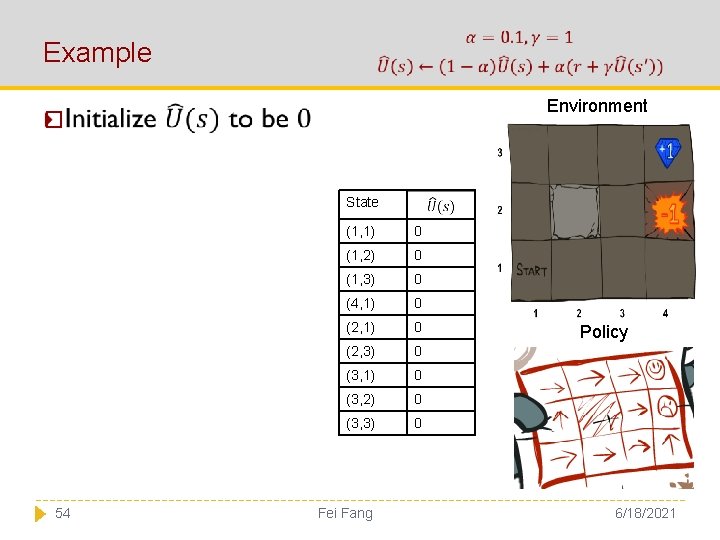

Example

(Figure panels: Environment, Policy)

| State | Value |
|---|---|
| (1, 1) | 0 |
| (1, 2) | 0 |
| (1, 3) | 0 |
| (4, 1) | 0 |
| (2, 3) | 0 |
| (3, 1) | 0 |
| (3, 2) | 0 |
| (3, 3) | 0 |

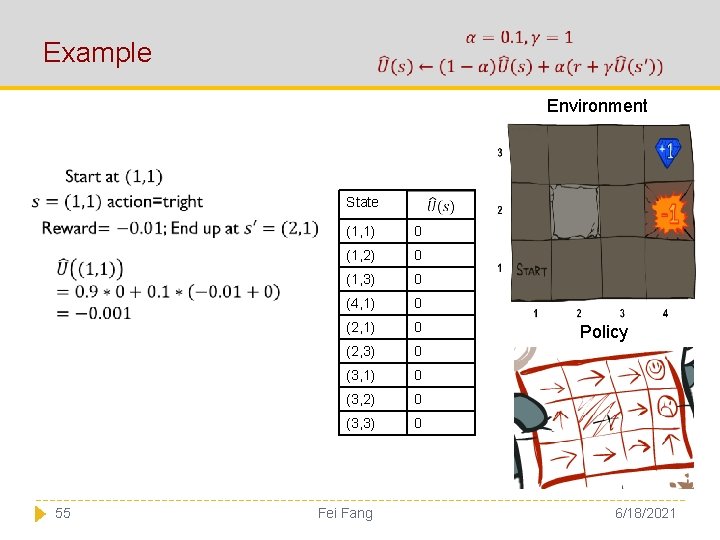


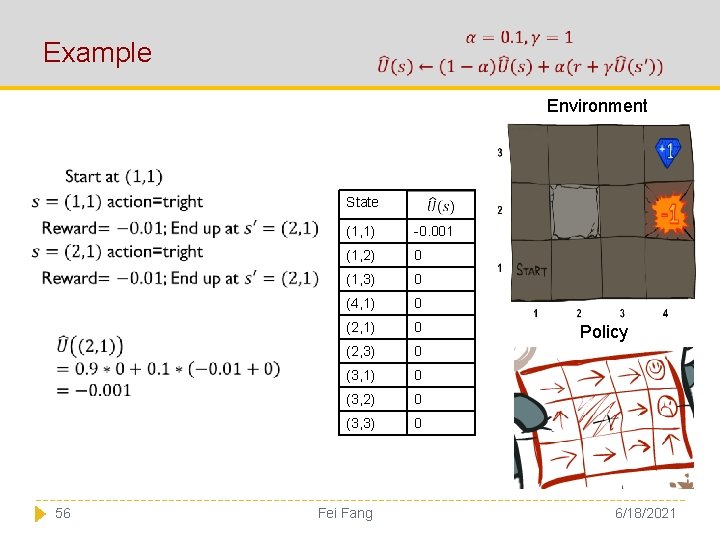

Example

| State | Value |
|---|---|
| (1, 1) | -0.001 |
| (1, 2) | 0 |
| (1, 3) | 0 |
| (4, 1) | 0 |
| (2, 3) | 0 |
| (3, 1) | 0 |
| (3, 2) | 0 |
| (3, 3) | 0 |

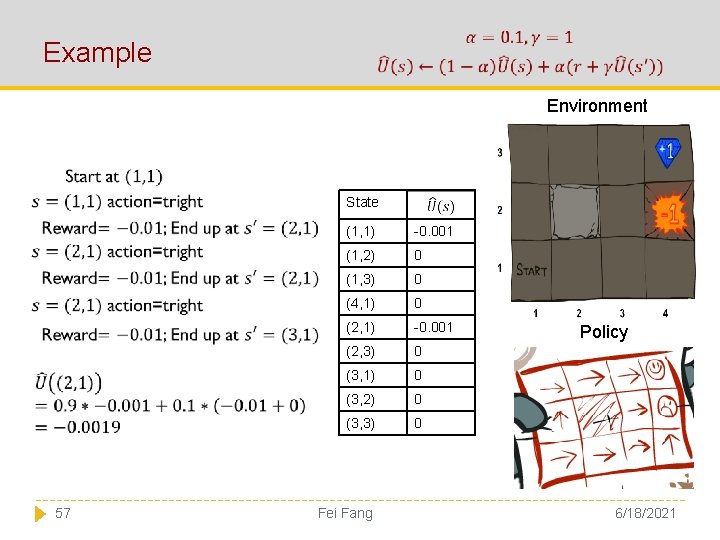

Example

| State | Value |
|---|---|
| (1, 1) | -0.001 |
| (1, 2) | 0 |
| (1, 3) | 0 |
| (4, 1) | 0 |
| (2, 1) | -0.001 |
| (2, 3) | 0 |
| (3, 1) | 0 |
| (3, 2) | 0 |
| (3, 3) | 0 |

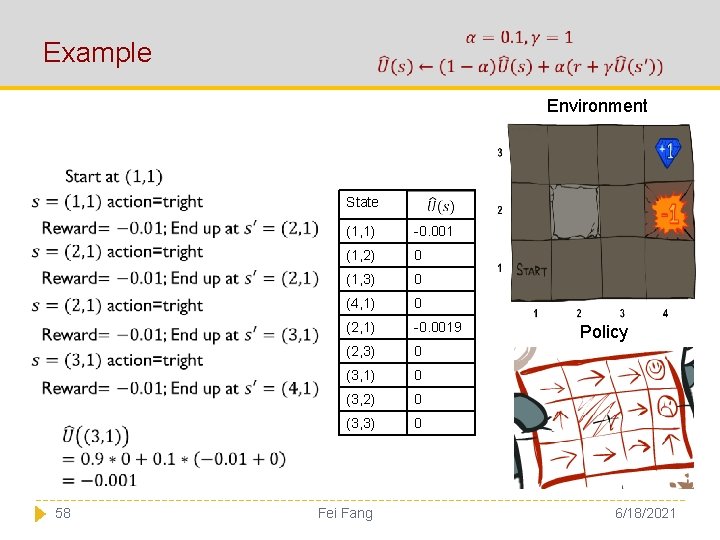

Example

| State | Value |
|---|---|
| (1, 1) | -0.001 |
| (1, 2) | 0 |
| (1, 3) | 0 |
| (4, 1) | 0 |
| (2, 1) | -0.0019 |
| (2, 3) | 0 |
| (3, 1) | 0 |
| (3, 2) | 0 |
| (3, 3) | 0 |
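
The values shown for (1, 1) and (2, 1) can be reproduced by hand under one set of assumptions: the TD update U(s) ← U(s) + α (R(s) + γ U(s') − U(s)) with α = 0.1, γ = 1, a reward of −0.01 in each non-terminal state, and a successor estimate that is still 0 at the time of each update. None of these values are stated in the extracted text; they are inferred only because they reproduce the numbers shown.

```latex
\begin{aligned}
U(1,1) &\leftarrow 0 + 0.1\,\bigl(-0.01 + 1 \cdot 0 - 0\bigr) = -0.001 \\
U(2,1) &\leftarrow 0 + 0.1\,\bigl(-0.01 + 1 \cdot 0 - 0\bigr) = -0.001 \\
U(2,1) &\leftarrow -0.001 + 0.1\,\bigl(-0.01 + 1 \cdot 0 - (-0.001)\bigr) = -0.0019
\end{aligned}
```

The second update of (2, 1) moves the estimate by only −0.0009 rather than −0.001, because the existing estimate of −0.001 already accounts for part of the target.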

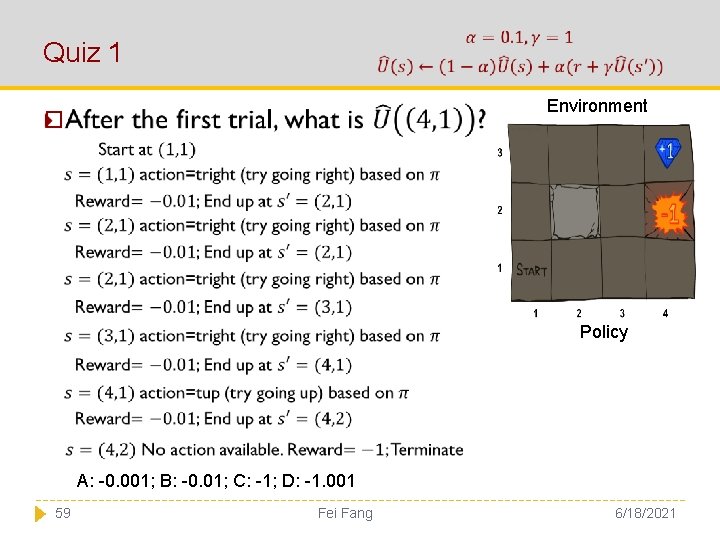

Quiz 1

(Figure panels: Environment, Policy)

- A: -0.001
- B: -0.01
- C: -1
- D: -1.001

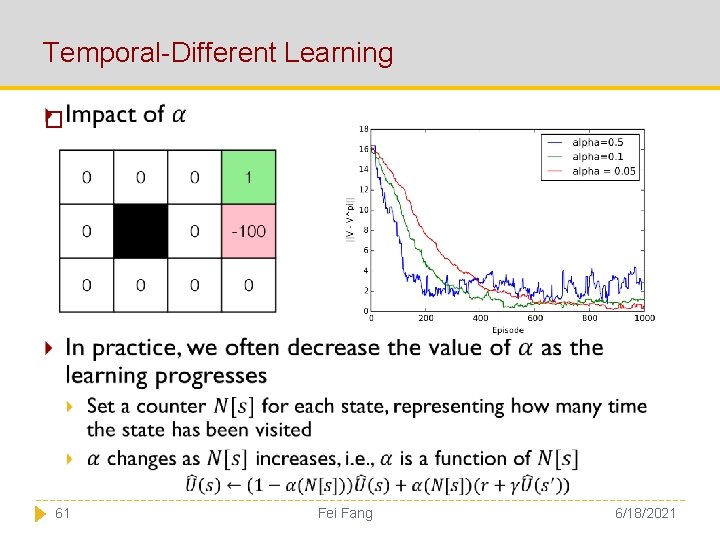

Temporal-Difference Learning

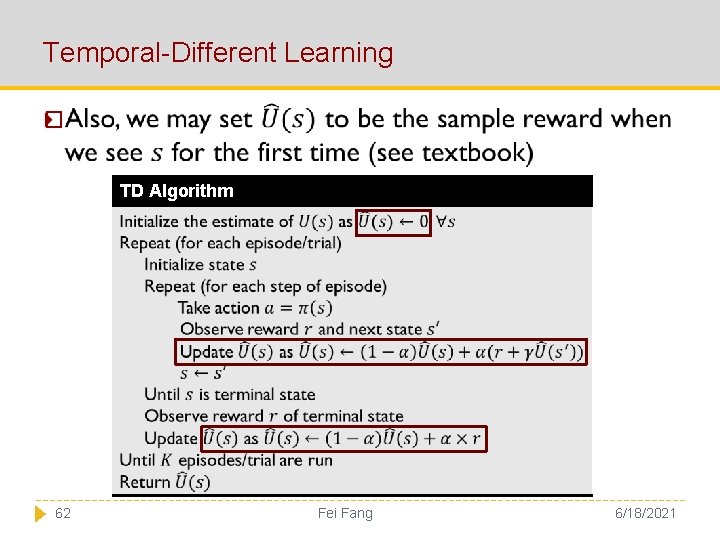

Temporal-Difference Learning
- TD Algorithm

Summary
- Reinforcement Learning
- Passive RL
  - Model-based Passive RL
  - Model-free Passive RL
    - Direct Utility Estimation
    - Temporal Difference Learning

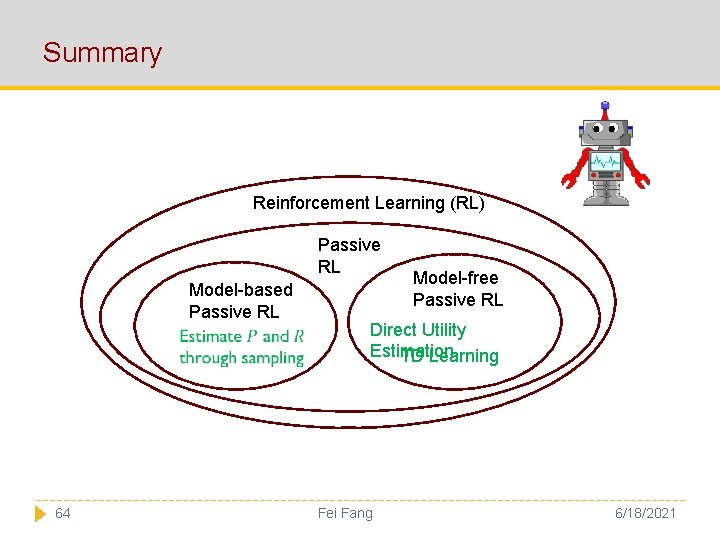

Summary
- Reinforcement Learning (RL)
  - Passive RL
    - Model-based Passive RL
    - Model-free Passive RL
      - Direct Utility Estimation
      - TD Learning

Passive RL

Acknowledgment
- Some slides are borrowed from previous slides made by Tai Sing Lee and Zico Kolter

Backup Slides
Recap of Value Iteration and Policy Iteration

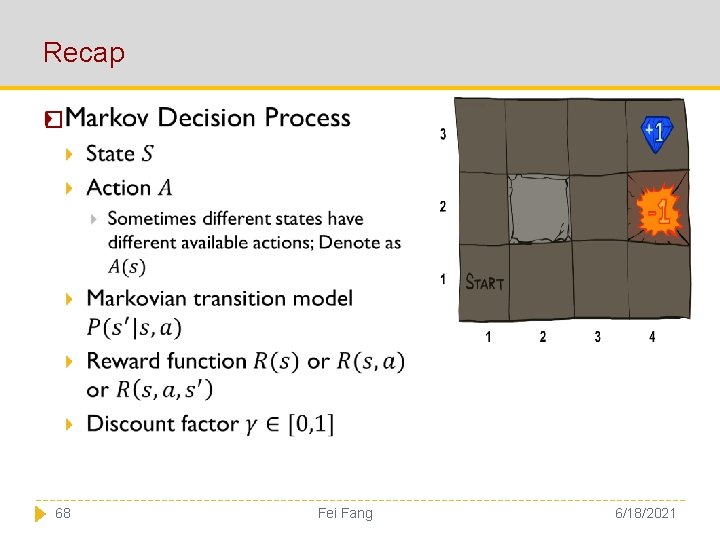

Recap

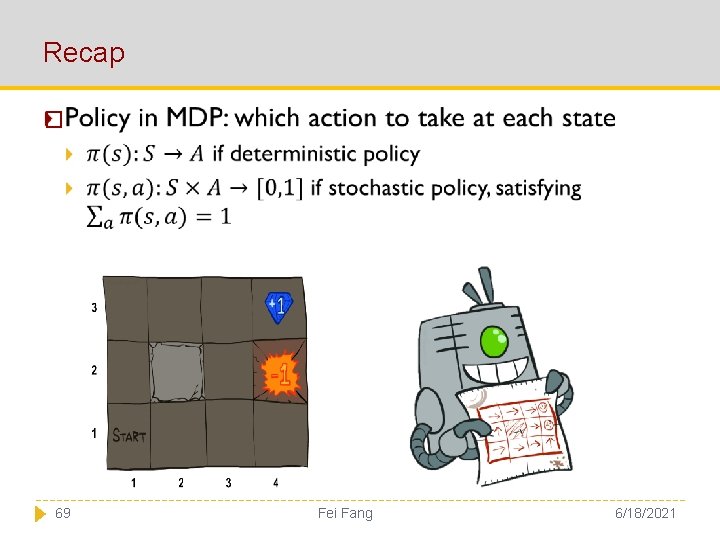

Recap

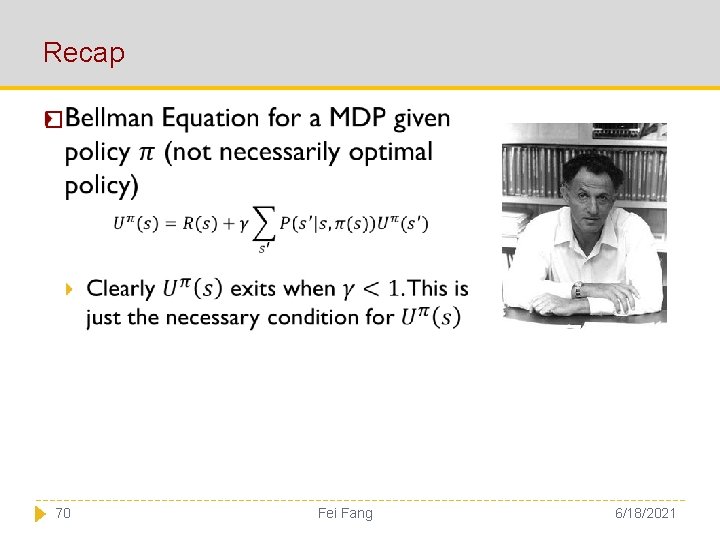

Recap

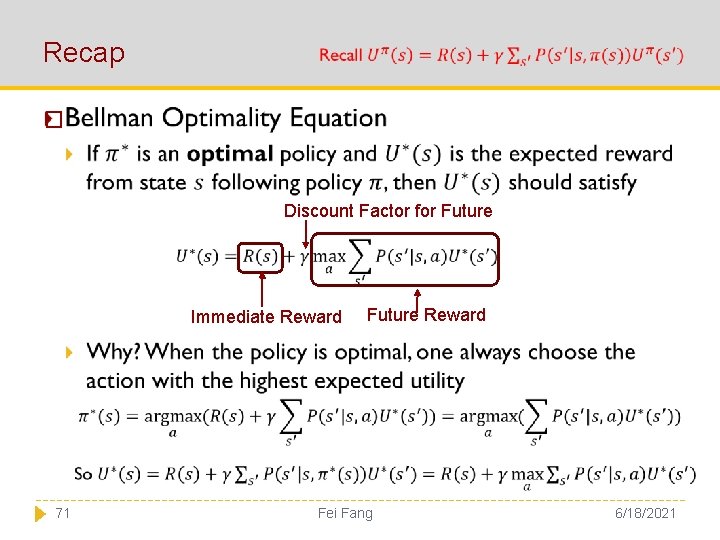

Recap

(Equation annotations: Immediate Reward, Discount Factor for Future, Future Reward)
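
The three labels on this slide match the terms of the standard Bellman equation. The equation itself did not survive extraction, so the form below, written with the state-based reward R(s) and transition model P(s' | a, s) that appear earlier in the lecture, is an assumed reconstruction rather than a copy of the slide.

```latex
U(s) \;=\; \underbrace{R(s)}_{\text{immediate reward}}
\;+\; \underbrace{\gamma}_{\text{discount factor}}
\;\underbrace{\max_{a} \sum_{s'} P(s' \mid a, s)\, U(s')}_{\text{future reward}}
```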

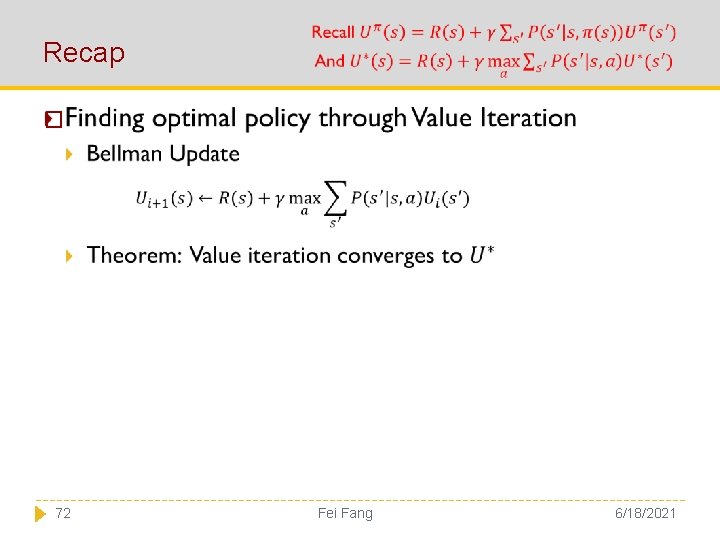

Recap

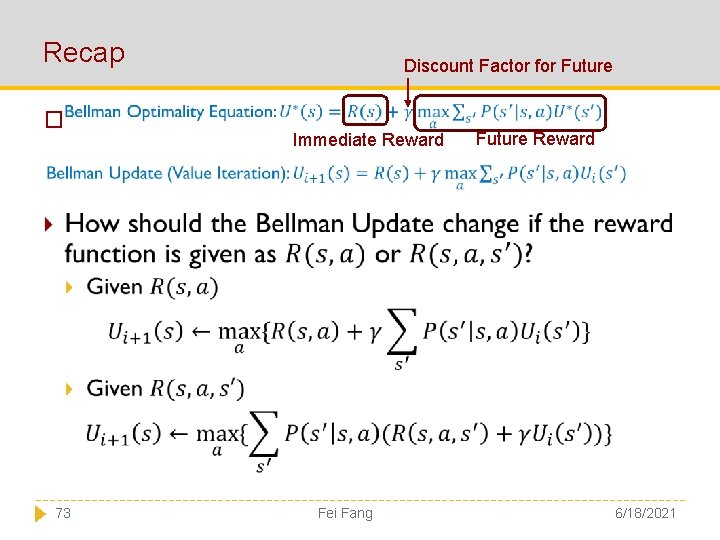

Recap

(Equation annotations: Immediate Reward, Discount Factor for Future, Future Reward)

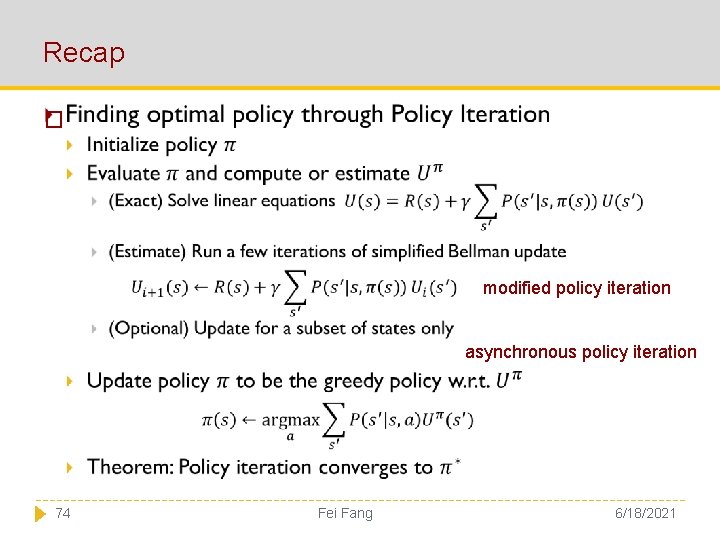

Recap
- Modified policy iteration
- Asynchronous policy iteration

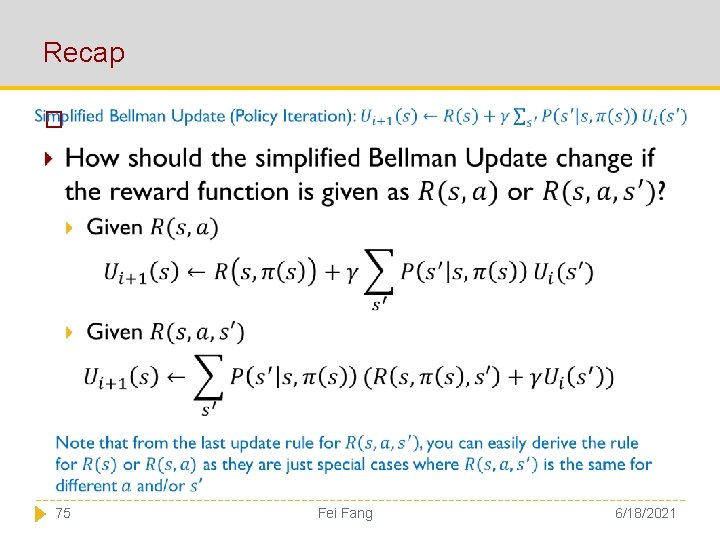

Recap

- Slides: 69