Artificial Intelligence Overview John Paxton Montana State University


August 14, 2003


A Brief Bio • 1985 The Ohio State University, B.S. Computer Science • 1987 The University of Michigan, M.S. Computer Science • 1990 The University of Michigan, Ph.D. Artificial Intelligence • 2003 Montana State University – Bozeman, Professor of Computer Science

Talk Outline • What is AI? • Foundations • History • Areas • Search • Knowledge Representation • Agents

What is AI? • Science approach: 1. Systems that think like humans 2. Systems that act like humans • Engineering approach: 1. Systems that think rationally 2. Systems that act rationally

Acting Humanly • Turing Test (1950)

Thinking Humanly • Cognitive Modelling Approach • General Problem Solver (Newell and Simon, 1961)

Thinking Rationally • The laws-of-thought approach • Syllogisms (Aristotle) • It is difficult to code the knowledge and to reason with it efficiently.

Acting Rationally • Rational Agent Approach. The agent acts to achieve the best (or near best) expected outcome.

Foundations • Philosophy (e.g. Where does knowledge come from?) • Mathematics (e.g. What are the formal rules to draw valid conclusions?) • Economics (e.g. How should we make decisions to maximize payoff?) • Neuroscience (e.g. How do brains process information?)

Foundations • Psychology (e.g. How do humans and animals think and act?) • Computer Engineering (e.g. How can we build an efficient computer?) • Control Theory (e.g. How can artifacts operate under their own control?) • Linguistics (e.g. How does language relate to thought?)

History • 1943-1955 Gestation. McCulloch-Pitts, Hebb, Turing Test • 1956 Dartmouth Conference • 1952-1969 Great Expectations. Logic Theorist, GPS, Checkers, Lisp, Microworlds (calculus) • 1966-1973 Reality. Machine translation (spirit == vodka), chess, intractability, fundamental limitations (Perceptrons)

History • 1969-1979 Knowledge-Based Systems. Dendral (infer molecular structure) • 1980-present Commercial Products • 1986-present Return of neural networks • 1987-present Science. Hidden Markov Models, Neural Networks, Bayesian Networks • 1995-present Intelligent Agents

Areas • Agents • Artificial Life • Machine Discovery and Data Mining • Expert Systems • Fuzzy Logic • Game Playing • Genetic Algorithms

Areas • Knowledge Representation • Learning • Neural Networks • Natural Language Processing • Planning • Reasoning • Robotics

Areas • Search • Speech Recognition and Synthesis • Virtual Reality • Computer Vision

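Search • Missionaries and Cannibals Problem: three missionaries (MMM) and three cannibals (CCC) must cross a river in a boat that carries one or two people; missionaries on either bank may never be outnumbered by the cannibals there.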

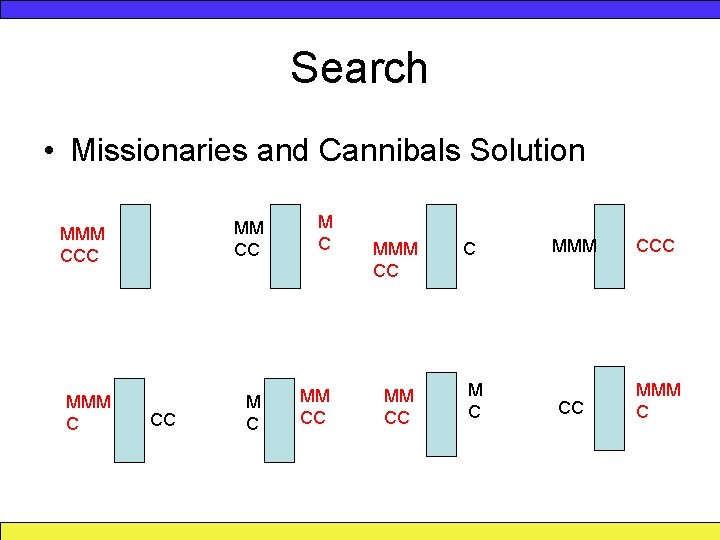

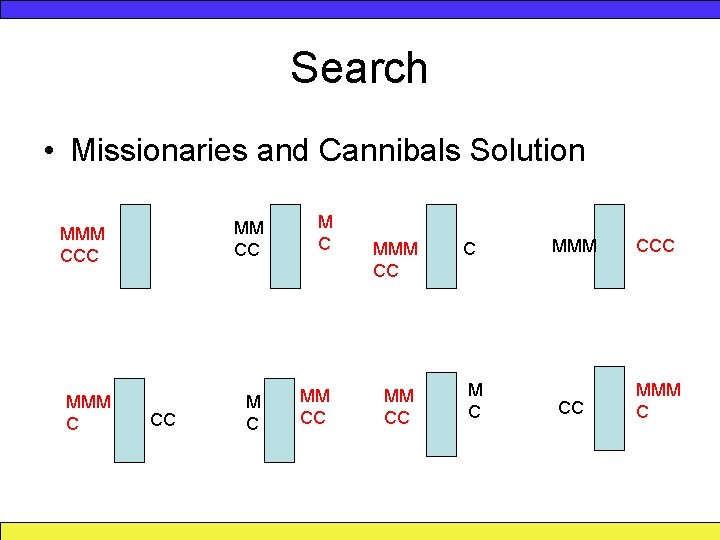

Search • Missionaries and Cannibals Solution [diagram: the sequence of bank states, from MMM CCC on the starting bank to everyone across]

Types of Search • Blind Search – Breadth-First Search – Depth-First Search • Informed Search – Best-First Search – A* Search
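To make the informed-search entry concrete, here is a minimal A* sketch. The graph, step costs, and heuristic values are hypothetical, chosen only for illustration. A* orders the frontier by f = g + h (cost so far plus estimated cost to go) and returns an optimal path when the heuristic never overestimates the remaining cost.

```python
import heapq

def a_star(graph, h, start, goal):
    """Return (cost, path) of a cheapest path from start to goal, or None.

    graph: dict mapping node -> list of (neighbor, step_cost)
    h: dict mapping node -> estimated remaining cost (assumed admissible)
    """
    # Frontier entries are (f, g, node, path); heapq pops the smallest f first.
    frontier = [(h[start], 0, start, [start])]
    best_g = {start: 0}
    while frontier:
        f, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return g, path
        if g > best_g.get(node, float("inf")):
            continue  # stale entry: a cheaper route to node was found later
        for neighbor, cost in graph.get(node, []):
            new_g = g + cost
            if new_g < best_g.get(neighbor, float("inf")):
                best_g[neighbor] = new_g
                heapq.heappush(frontier, (new_g + h[neighbor], new_g, neighbor, path + [neighbor]))
    return None

# Hypothetical example graph and heuristic.
graph = {"S": [("A", 1), ("B", 4)], "A": [("B", 2), ("G", 6)], "B": [("G", 1)], "G": []}
h = {"S": 4, "A": 3, "B": 1, "G": 0}
print(a_star(graph, h, "S", "G"))  # (4, ['S', 'A', 'B', 'G'])
```

Replacing f with h alone gives greedy best-first search; replacing it with g alone gives uniform-cost search.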

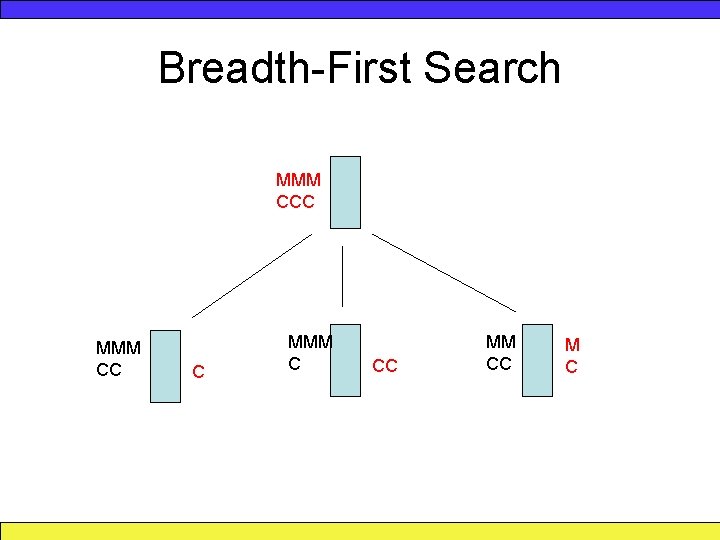

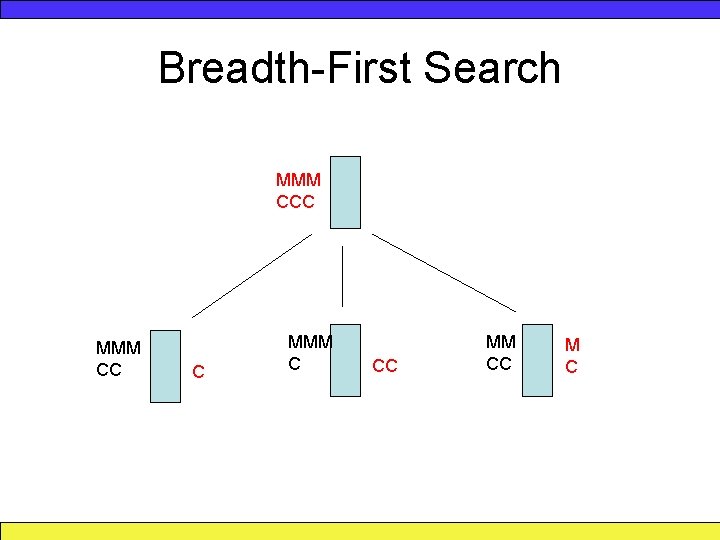

Breadth-First Search [diagram: the first levels of the breadth-first search tree, rooted at the start state MMM CCC]
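Breadth-first search on Missionaries and Cannibals can be sketched as follows. The state encoding (missionaries and cannibals still on the starting bank, plus whether the boat is there) is one common choice, assumed here rather than taken from the slides. Because BFS expands the shallowest paths first, the first path that reaches the goal uses the fewest crossings.

```python
from collections import deque

# State: (missionaries on start bank, cannibals on start bank, boat on start bank?)
START, GOAL = (3, 3, True), (0, 0, False)
MOVES = [(1, 0), (2, 0), (0, 1), (0, 2), (1, 1)]  # (missionaries, cannibals) in the boat

def is_safe(m, c):
    """Missionaries are never outnumbered on a bank where any are present."""
    return (m == 0 or m >= c) and (3 - m == 0 or 3 - m >= 3 - c)

def successors(state):
    m, c, boat_here = state
    sign = -1 if boat_here else 1  # a boat leaving the start bank removes people from it
    for dm, dc in MOVES:
        nm, nc = m + sign * dm, c + sign * dc
        if 0 <= nm <= 3 and 0 <= nc <= 3 and is_safe(nm, nc):
            yield (nm, nc, not boat_here)

def bfs(start, goal):
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()  # FIFO queue: shallowest path first
        if path[-1] == goal:
            return path
        for nxt in successors(path[-1]):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None

solution = bfs(START, GOAL)
print(len(solution) - 1, "crossings")  # 11 crossings
for state in solution:
    print(state)
```

Swapping `popleft()` for `pop()` turns the queue into a stack and gives depth-first search, which may return a longer solution.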

Minimax Search • Commonly used to choose a move in a two-player strategy game. • Deep Junior (Ban, Bushinsky, Alterman), the reigning computer chess champion, uses minimax. • Minimax requires an evaluation function to score positions where the search is cut off.
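One simple kind of evaluation function for chess is a material count. The sketch below assumes the conventional piece values 1/3/3/5/9 (an assumption; real engines such as the one named above use far richer terms) and reads only the piece-placement field of a FEN string, where uppercase letters are White pieces and lowercase letters are Black.

```python
# Conventional material values (assumed); the king is not counted.
PIECE_VALUES = {"p": 1, "n": 3, "b": 3, "r": 5, "q": 9, "k": 0}

def material_eval(fen):
    """Score a position from White's point of view by counting material.

    Uses only the first space-separated field of the FEN string.
    Digits (runs of empty squares) and '/' separators are skipped.
    """
    placement = fen.split()[0]
    score = 0
    for ch in placement:
        if ch.isalpha():
            value = PIECE_VALUES[ch.lower()]
            score += value if ch.isupper() else -value
    return score

start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
print(material_eval(start))  # 0: material is level
```

Minimax would call a function like this on the positions at its depth cutoff instead of searching to the end of the game.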

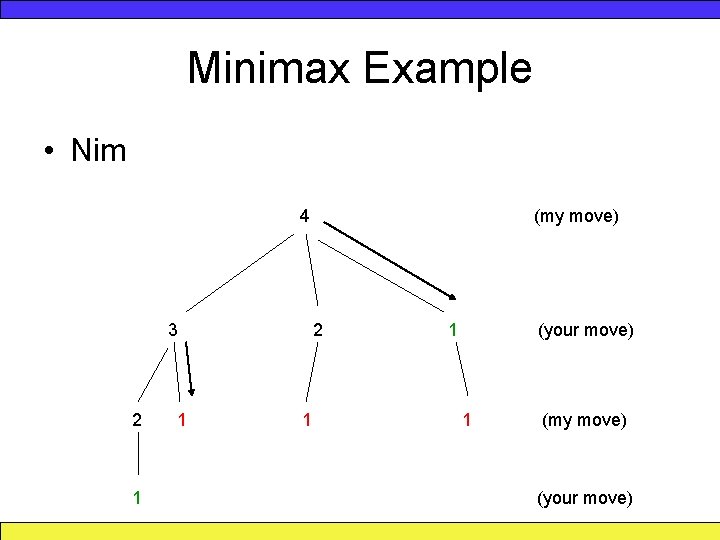

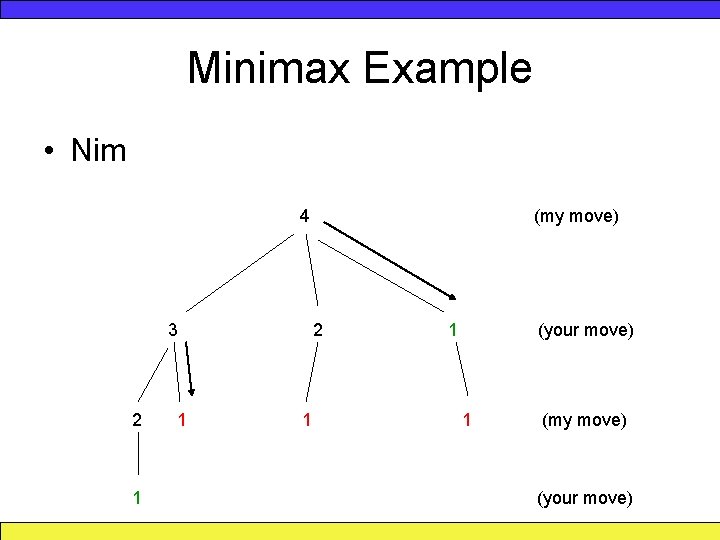

Minimax Example • Nim [diagram: game tree starting from a pile of 4, with levels alternating between my move and your move]
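Full-depth minimax on a small Nim game can be sketched as below. The exact rules of the slide's game are not recoverable, so this assumes a single pile of 4 stones, a move removes 1, 2, or 3 stones, and whoever takes the last stone wins. Since the tree is searched to the end, no evaluation function is needed: terminal positions are simply a win (+1) or a loss (-1).

```python
from functools import lru_cache

MAX_TAKE = 3  # assumption: a move removes 1, 2, or 3 stones

@lru_cache(maxsize=None)
def minimax(pile, my_move):
    """Game value from MY point of view: +1 if I win with best play, -1 if I lose.

    Assumed rule: the player who takes the last stone wins, so a player
    facing an empty pile has already lost.
    """
    if pile == 0:
        # The previous player took the last stone.
        return -1 if my_move else 1
    values = [minimax(pile - k, not my_move) for k in range(1, min(MAX_TAKE, pile) + 1)]
    return max(values) if my_move else min(values)  # I maximize, you minimize

def best_move(pile):
    """Number of stones I should take from the pile."""
    return max(range(1, min(MAX_TAKE, pile) + 1), key=lambda k: minimax(pile - k, False))

print(minimax(4, True))  # -1: under these rules, the player to move from 4 loses
```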

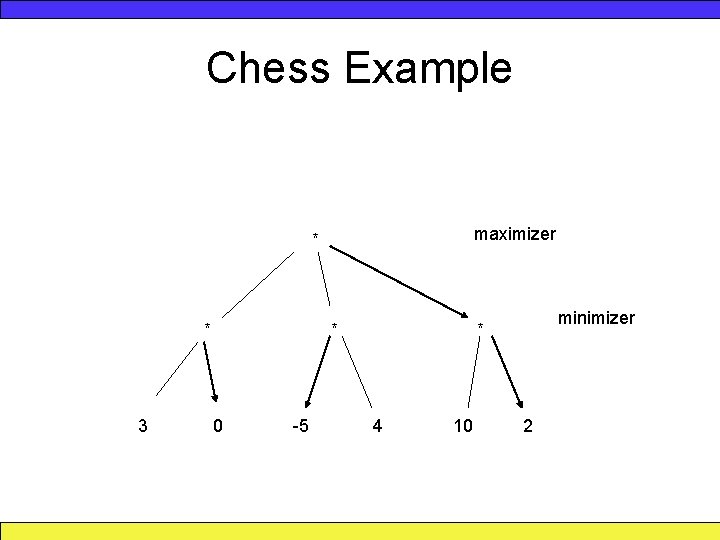

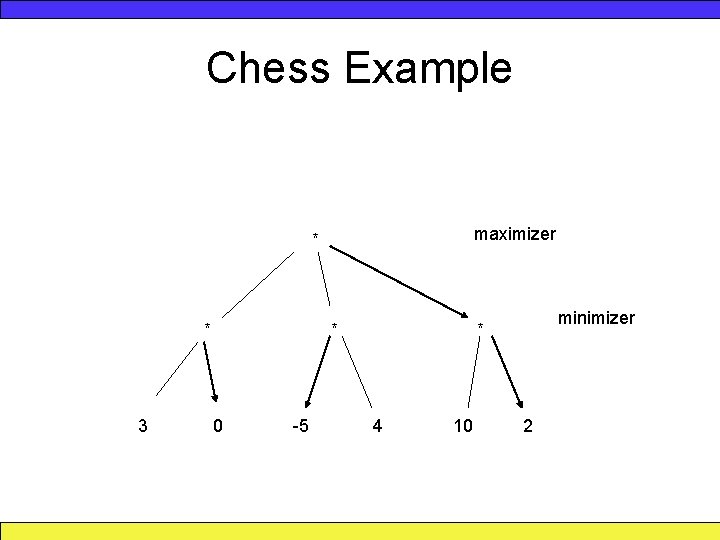

Chess Example [diagram: minimax tree with a maximizer level above a minimizer level; node values shown include 3, 0, -5, 4, 10, and 2]

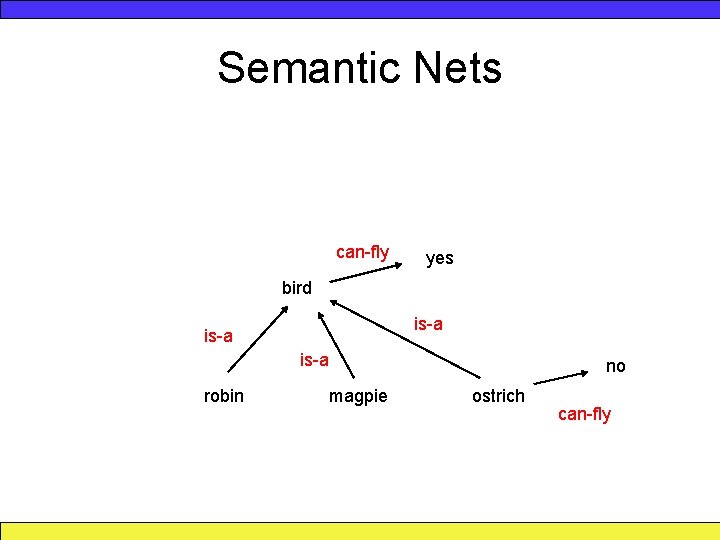

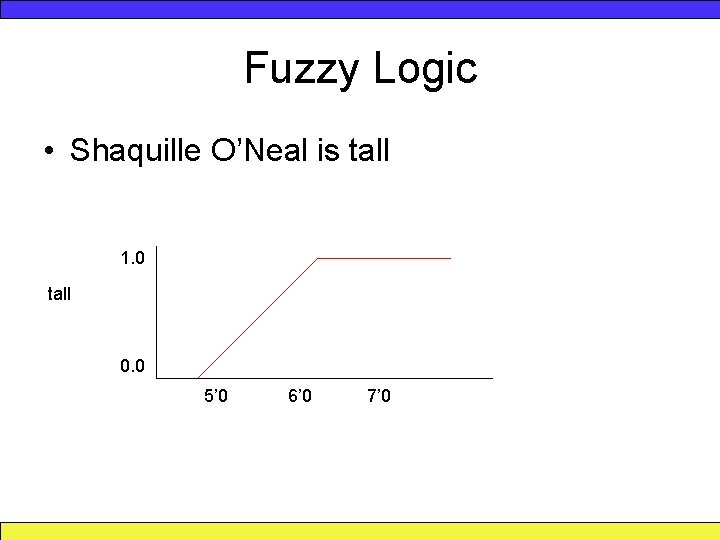

Knowledge Representation • Semantic Nets • Fuzzy Logic • First Order Predicate Calculus

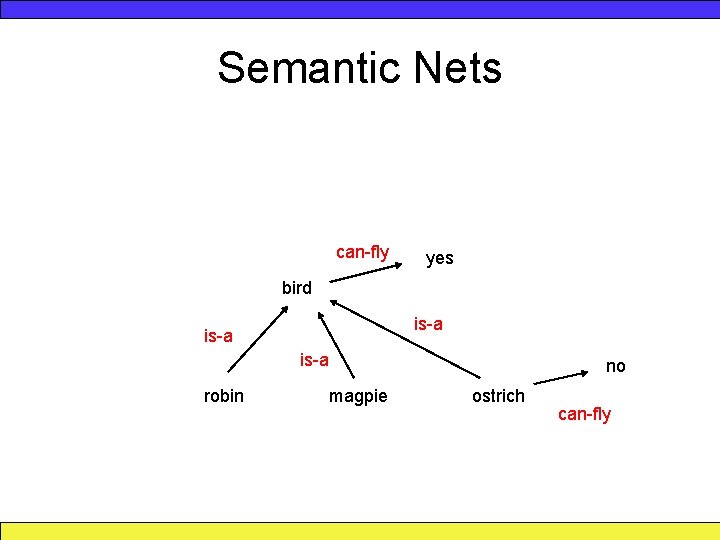

Semantic Nets [diagram: robin, magpie, and ostrich are each linked to bird by is-a; bird has can-fly: yes, while ostrich has can-fly: no]
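Property inheritance in a semantic net like this one can be sketched as a walk up the is-a links that stops at the most specific node defining the property. The dictionaries below are a hypothetical encoding of the net; the key point is that the ostrich's local can-fly value overrides the value it would inherit from bird.

```python
# Hypothetical encoding of the net: is-a links and locally stored properties.
IS_A = {"robin": "bird", "magpie": "bird", "ostrich": "bird"}
PROPERTIES = {"bird": {"can-fly": "yes"}, "ostrich": {"can-fly": "no"}}

def lookup(node, prop):
    """Follow is-a links upward; the most specific value found wins."""
    while node is not None:
        if prop in PROPERTIES.get(node, {}):
            return PROPERTIES[node][prop]
        node = IS_A.get(node)  # move to the parent class, or None at the top
    return None

print(lookup("robin", "can-fly"))    # yes (inherited from bird)
print(lookup("ostrich", "can-fly"))  # no (local exception)
```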

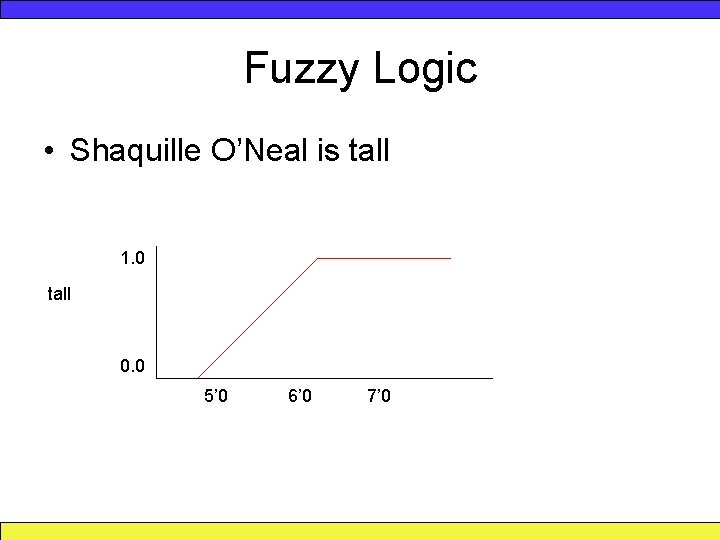

Fuzzy Logic • Shaquille O’Neal is tall [plot: degree of membership in "tall" (0.0 to 1.0) against height (5’0” to 7’0”)]
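A membership function maps a crisp value to a degree of membership between 0.0 and 1.0. The plot's exact curve is not recoverable, so this sketch assumes a straight-line ramp from 0.0 at 5’0” (60 inches) to 1.0 at 7’0” (84 inches); the breakpoints are parameters for that reason.

```python
def tall(height_inches, low=60.0, high=84.0):
    """Degree of membership in 'tall', in [0.0, 1.0].

    Assumed shape: 0.0 at or below `low`, 1.0 at or above `high`,
    and linear in between.
    """
    if height_inches <= low:
        return 0.0
    if height_inches >= high:
        return 1.0
    return (height_inches - low) / (high - low)

print(tall(85))  # 1.0 (Shaquille O'Neal is listed at 7'1")
print(tall(72))  # 0.5 (6'0" is halfway up the assumed ramp)
```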

Fuzzy Logic • Karim is tall (0.6) and a good teacher (0.9) = min(0.6, 0.9) = 0.6 • Karim is tall or a good teacher = max(0.6, 0.9) = 0.9 • Karim is not tall = 1.0 - 0.6 = 0.4
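The numbers above follow the standard (Zadeh) fuzzy operators: AND is the minimum, OR is the maximum, and NOT is the complement. A minimal sketch:

```python
def f_and(a, b):
    return min(a, b)  # fuzzy AND: the weaker of the two degrees

def f_or(a, b):
    return max(a, b)  # fuzzy OR: the stronger of the two degrees

def f_not(a):
    return 1.0 - a  # fuzzy NOT: complement

tall, good_teacher = 0.6, 0.9
print(f_and(tall, good_teacher))  # 0.6
print(f_or(tall, good_teacher))   # 0.9
print(f_not(tall))                # 0.4
```

With degrees restricted to 0.0 and 1.0, these operators reduce to ordinary Boolean AND, OR, and NOT.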

First Order Predicate Calculus • Every Saturday is a weekend day: $\forall x\; \mathit{Saturday}(x) \Rightarrow \mathit{weekend}(x)$ • Some day is a weekday: $\exists x\; \mathit{day}(x) \land \mathit{weekday}(x)$
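Over a small finite domain, quantified sentences like these can be checked directly: the universal quantifier becomes `all`, the existential becomes `any`, and an implication p ⇒ q is evaluated as (not p) or q. The domain and predicate definitions below are hypothetical; this is model checking over one interpretation, not a theorem prover.

```python
# Hypothetical finite domain: the seven days of the week.
DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]

def day(x):
    return x in DAYS

def saturday(x):
    return x == "Saturday"

def weekend(x):
    return x in ("Saturday", "Sunday")

def weekday(x):
    return day(x) and not weekend(x)

# For all x: Saturday(x) implies weekend(x); the implication is (not p) or q.
every_saturday_is_weekend = all((not saturday(x)) or weekend(x) for x in DAYS)

# There exists x: day(x) and weekday(x).
some_day_is_weekday = any(day(x) and weekday(x) for x in DAYS)

print(every_saturday_is_weekend, some_day_is_weekday)  # True True
```

Using ∧ (not ⇒) under ∃ matters: "∃x day(x) ⇒ weekday(x)" would be made true by any object that is not a day at all.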

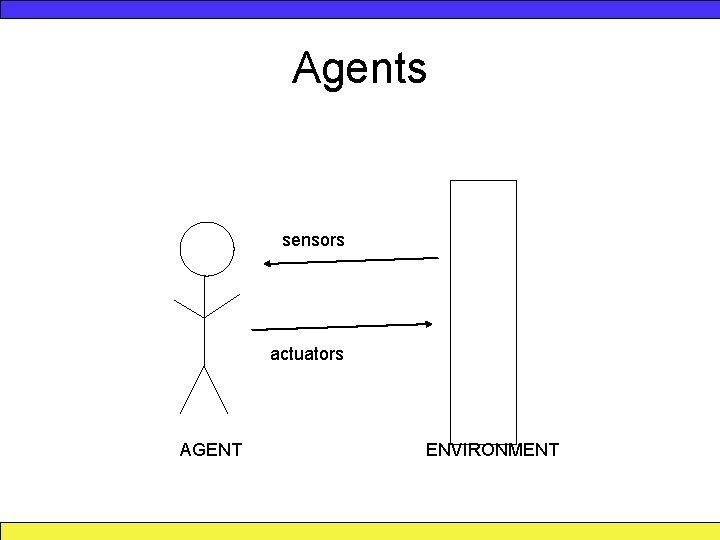

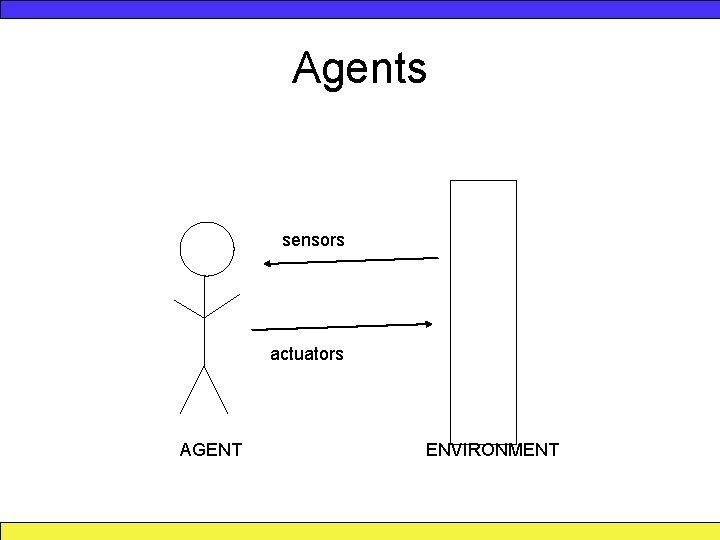

Agents [diagram: the AGENT perceives the ENVIRONMENT through sensors and acts on it through actuators]
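The sense-act cycle in this diagram can be sketched with a toy two-square vacuum world (squares "A" and "B"), a hypothetical environment chosen only for illustration. The environment hands the agent a percept through its sensors, the agent returns an action, and the action changes the environment through its actuators.

```python
def reflex_vacuum_agent(percept):
    """Map the current percept (location, status) directly to an action."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"

def run(agent, dirt, location, steps):
    """Sense-act loop over a hypothetical two-square world."""
    history = []
    for _ in range(steps):
        percept = (location, "Dirty" if dirt[location] else "Clean")  # sensors
        action = agent(percept)
        history.append((percept, action))
        if action == "Suck":  # actuators change the environment
            dirt[location] = False
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return history, dirt

history, dirt = run(reflex_vacuum_agent, {"A": True, "B": True}, "A", 4)
for percept, action in history:
    print(percept, "->", action)
```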

Rationality Factors • Performance Measure • Prior Knowledge • Performable Actions • Agent’s Prior Percepts

Rational Agent • For each possible sensor sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the sensor sequence and whatever built-in knowledge the agent has.
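The definition can be sketched as "pick the action with the highest expected performance". In this simplification the agent's percept sequence and built-in knowledge are assumed to be already summarized as outcome probabilities for each action; the actions, probabilities, and scores are hypothetical.

```python
def expected_value(outcomes):
    """outcomes: list of (probability, performance score) pairs."""
    return sum(p * score for p, score in outcomes)

def rational_choice(action_models):
    """Select the action whose expected performance is highest."""
    return max(action_models, key=lambda a: expected_value(action_models[a]))

# Hypothetical beliefs, given what the agent has sensed so far.
action_models = {
    "take_highway": [(0.8, 10), (0.2, -20)],  # usually fast, sometimes jammed
    "take_side_streets": [(1.0, 5)],          # reliably moderate
}
print(rational_choice(action_models))  # take_side_streets (expected 5 vs. 4)
```

Rationality is judged by expected outcome: if the highway happens to be clear, the choice was still rational, because the agent is not omniscient.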

Agent Terminology • Omniscience: the agent knows the actual outcome of its actions. Impossible! • Learning: the agent uses what it perceives to perform better over time (e.g. robot vacuum cleaner) • Autonomy: the agent relies on its own percepts rather than on built-in knowledge

Environments • Fully observable vs. partially observable • Deterministic vs. stochastic • Episodic (classification) vs. sequential (conversation) • Static vs. dynamic • Discrete (chess) vs. continuous (taxi driving) • Single agent vs. multi-agent

Types of Agents • Reflex • Model-Based • Goal-Based • Utility-Based • Learning • Combinations of the above!
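The step from a reflex agent to a model-based agent can be sketched in the same hypothetical two-square vacuum world: the agent keeps internal state recording which squares it believes are clean, so it can stop once both are done, which a pure reflex agent cannot know. It assumes dirt does not reappear after cleaning.

```python
class ModelBasedVacuumAgent:
    """Reflex agent plus an internal model of which squares are clean."""

    def __init__(self):
        # Internal state: assumed two squares, both initially of unknown status.
        self.believed_clean = {"A": False, "B": False}

    def __call__(self, percept):
        location, status = percept
        self.believed_clean[location] = True  # clean now, or will be after Suck
        if status == "Dirty":
            return "Suck"
        if all(self.believed_clean.values()):
            return "NoOp"  # everything believed clean: stop moving
        return "Right" if location == "A" else "Left"

agent = ModelBasedVacuumAgent()
percepts = [("A", "Dirty"), ("A", "Clean"), ("B", "Dirty"), ("B", "Clean")]
print([agent(p) for p in percepts])  # ['Suck', 'Right', 'Suck', 'NoOp']
```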

Questions?