“Artificial Intelligence” in my research

![The Context: query select * from houses top-3 houses order by [ranking function F]](https://slidetodoc.com/presentation_image/285eb22e89335fbe9e36e1b066dc723c/image-3.jpg)

- Slides: 27

“Artificial Intelligence” in my research, by Seung-won Hwang (Department of CSE, POSTECH)

Recap
- Bridging the gap posed by under- or over-specified user queries
- We went through various techniques that support intelligent querying implicitly or automatically, drawing on the data, prior users, the specific user, and domain knowledge
- My research shares the same goal, applying some AI techniques (e.g., search, machine learning)

![The Context query select from houses top3 houses order by ranking function F The Context: query select * from houses top-3 houses order by [ranking function F]](https://slidetodoc.com/presentation_image/285eb22e89335fbe9e36e1b066dc723c/image-3.jpg)

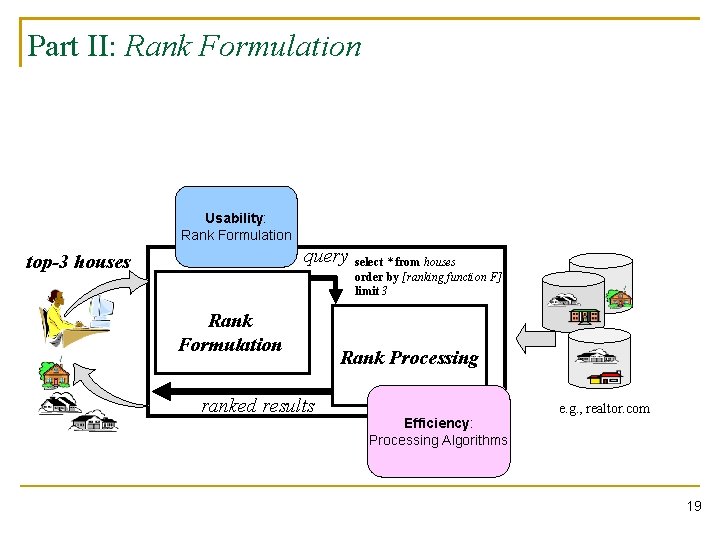

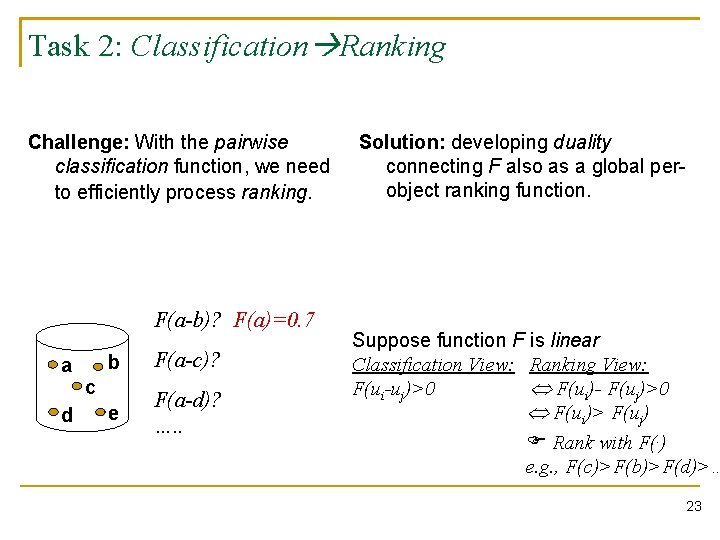

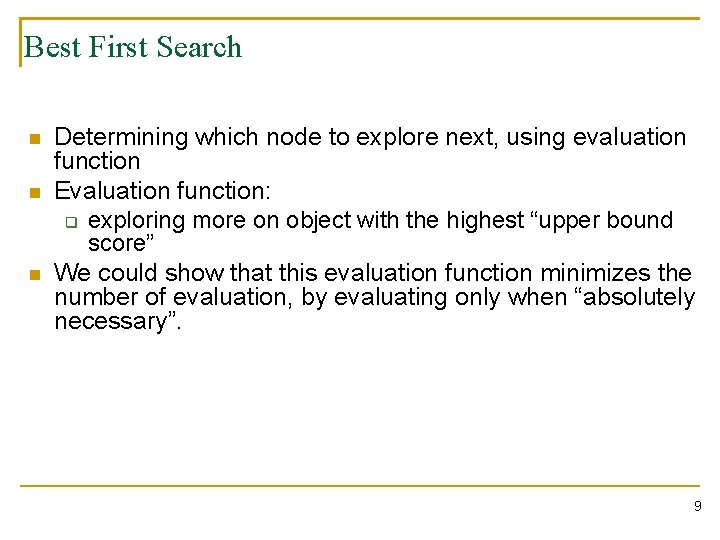

The Context
- Query: `select * from houses order by [ranking function F] limit 3`, which asks for the top-3 houses
- Figure: the query passes through Rank Formulation (specifying F) and Rank Processing, which returns the ranked results (e.g., realtor.com)
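
As a minimal sketch of what such a query asks for, the snippet below evaluates `order by F limit k` over an in-memory list. The house records, the attribute names, and the example ranking function `score` are hypothetical; the slides leave F unspecified.

```python
import heapq

# Hypothetical house records; attribute values are normalized to [0, 1].
HOUSES = [
    {"id": "h1", "newness": 0.9, "cheapness": 0.4},
    {"id": "h2", "newness": 0.5, "cheapness": 0.7},
    {"id": "h3", "newness": 0.7, "cheapness": 0.7},
    {"id": "h4", "newness": 0.2, "cheapness": 0.9},
]


def score(house):
    """Hypothetical ranking function F: the average of two attributes."""
    return (house["newness"] + house["cheapness"]) / 2


def top_k(rows, f, k):
    """Equivalent of `select * from rows order by f desc limit k`."""
    return heapq.nlargest(k, rows, key=f)


print([h["id"] for h in top_k(HOUSES, score, 3)])  # prints ['h3', 'h1', 'h2']
```

This naive version scores every row; the rest of Part I is about avoiding exactly that when some scores are expensive to obtain.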

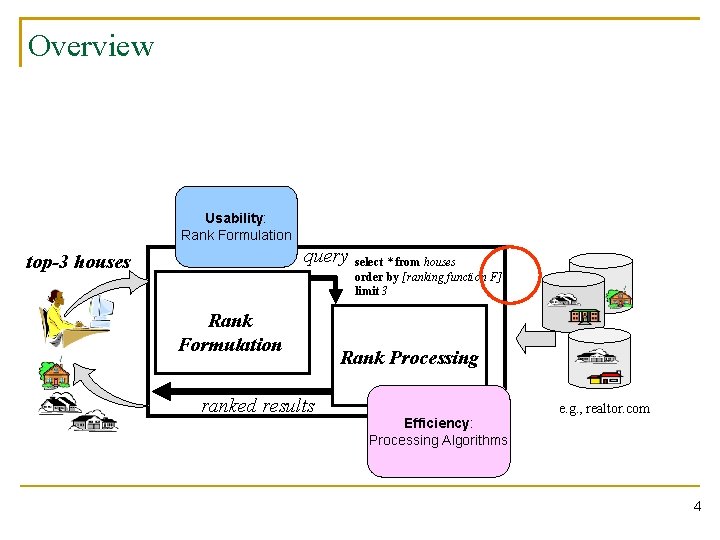

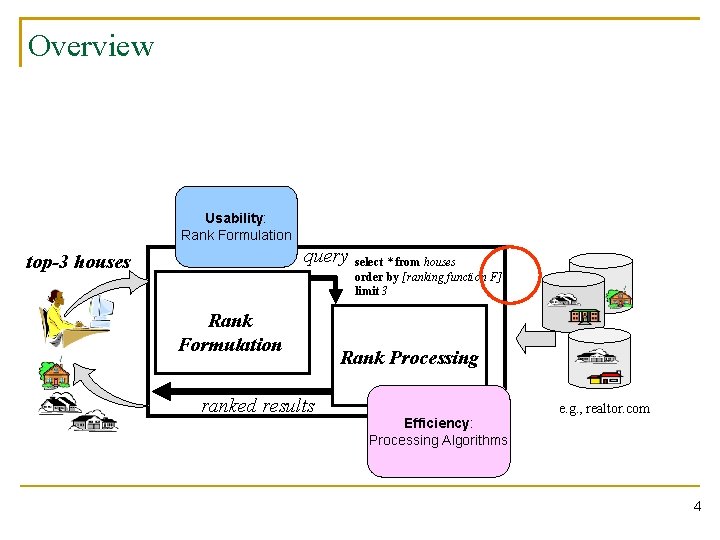

Overview
- Usability: Rank Formulation
- Efficiency: Rank Processing (processing algorithms)
- Figure: the query `select * from houses order by [ranking function F] limit 3` (top-3 houses) flows through Rank Formulation and Rank Processing to the ranked results (e.g., realtor.com)

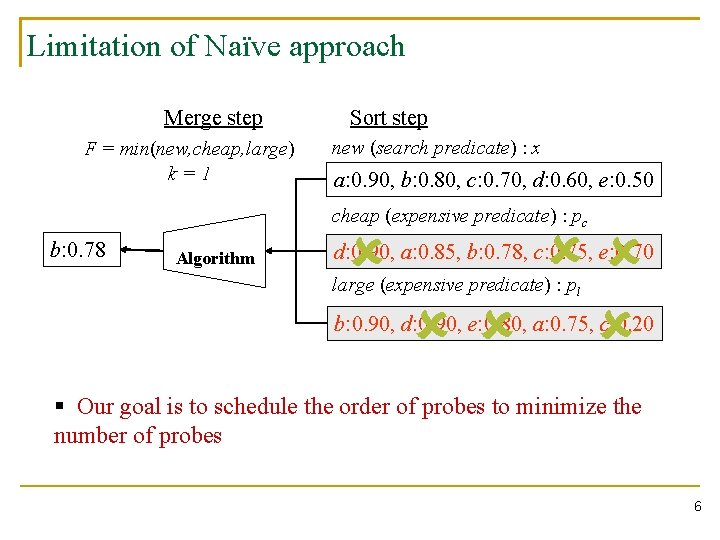

Part I: Rank Processing
- Essentially a search problem (which you studied in AI)

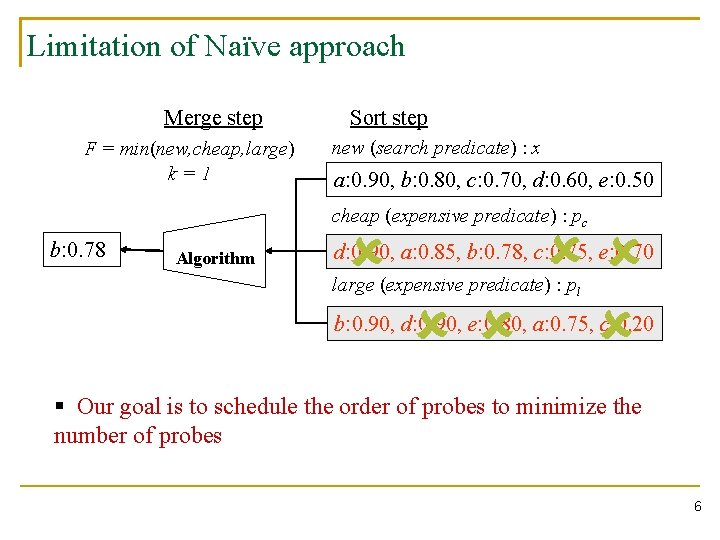

Limitation of the Naïve Approach
- Query: F = min(new, cheap, large), k = 1
- Sort step: objects are retrieved in order of the search predicate new (x): a: 0.90, b: 0.80, c: 0.70, d: 0.60, e: 0.50
- Scores of the expensive predicates, each obtained by a probe:
  - cheap (pc): d: 0.90, a: 0.85, b: 0.78, c: 0.75, e: 0.70
  - large (pl): b: 0.90, d: 0.90, e: 0.80, a: 0.75, c: 0.20
- Merge step: the naïve algorithm probes every object on every expensive predicate before merging, yielding b: 0.78 as the top-1
- Our goal is to schedule the order of probes so as to minimize the number of probes
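
The naïve strategy can be sketched with the slide's own scores: fully evaluate every object by probing both expensive predicates, then merge with F = min and keep the top-k. The dictionary layout and the probe counter are illustrative choices, not part of the original algorithm description.

```python
# Scores from the slide: x ("new") comes from sorted access;
# pc ("cheap") and pl ("large") are expensive predicates that must be probed.
X = {"a": 0.90, "b": 0.80, "c": 0.70, "d": 0.60, "e": 0.50}
PC = {"a": 0.85, "b": 0.78, "c": 0.75, "d": 0.90, "e": 0.70}
PL = {"a": 0.75, "b": 0.90, "c": 0.20, "d": 0.90, "e": 0.80}


def naive_top_k(k):
    """Probe every expensive predicate for every object, then merge with min."""
    probes = 0
    scores = {}
    for oid in X:  # sort step: objects arrive in x order
        pc = PC[oid]  # probe pr(oid, pc)
        probes += 1
        pl = PL[oid]  # probe pr(oid, pl)
        probes += 1
        scores[oid] = min(X[oid], pc, pl)
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:k], probes


top, probes = naive_top_k(1)
print(top, probes)  # prints [('b', 0.78)] 10
```

All 10 probes are spent, whereas the slides below show that only the four probes on a and b are necessary.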

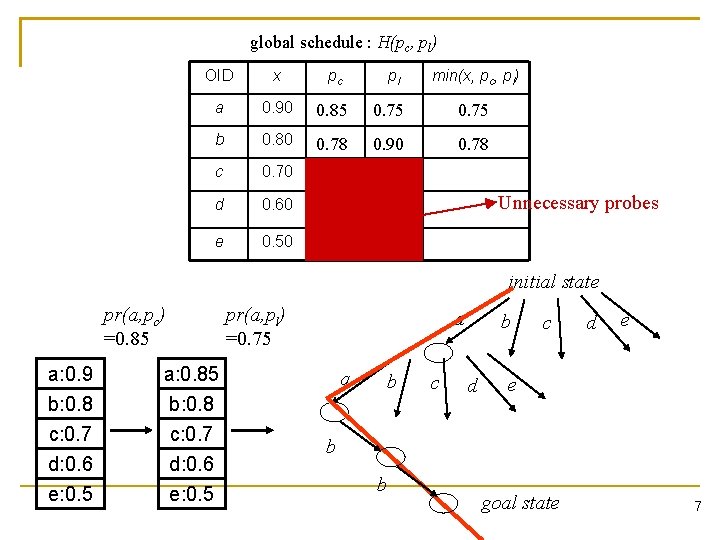

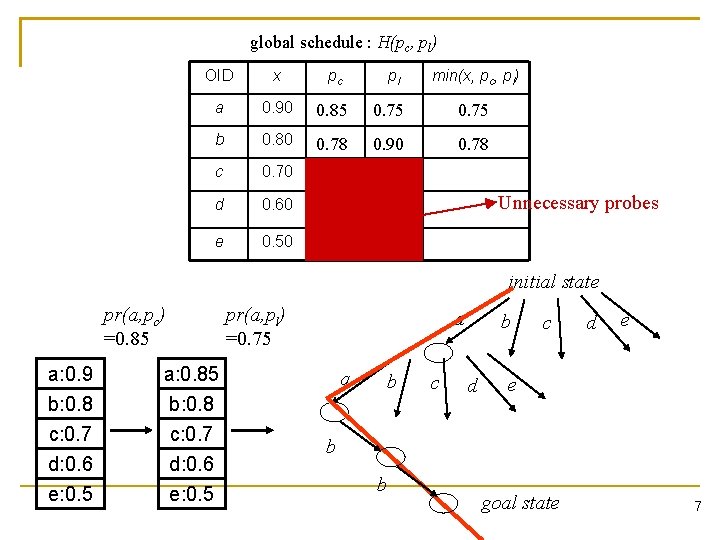

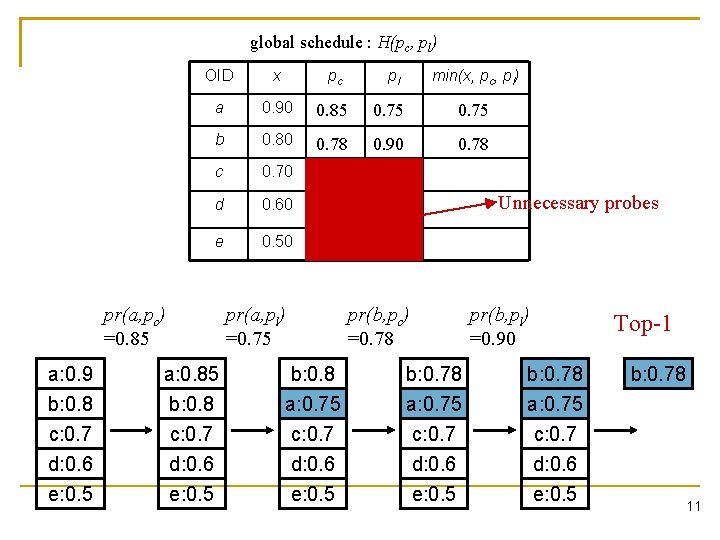

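Global schedule: H(pc, pl)

| OID | x | pc | pl | min(x, pc, pl) |
|-----|------|------|------|------|
| a | 0.90 | 0.85 | 0.75 | 0.75 |
| b | 0.80 | 0.78 | 0.90 | 0.78 |
| c | 0.70 | | | |
| d | 0.60 | | | |
| e | 0.50 | | | |

The empty cells for c, d, and e are unnecessary probes.
Figure: search from the initial state (a: 0.9, b: 0.8, c: 0.7, d: 0.6, e: 0.5) to the goal state; each probe updates one object's upper bound, e.g., pr(a, pc) = 0.85 lowers a to 0.85, and pr(a, pl) = 0.75 lowers it to 0.75.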

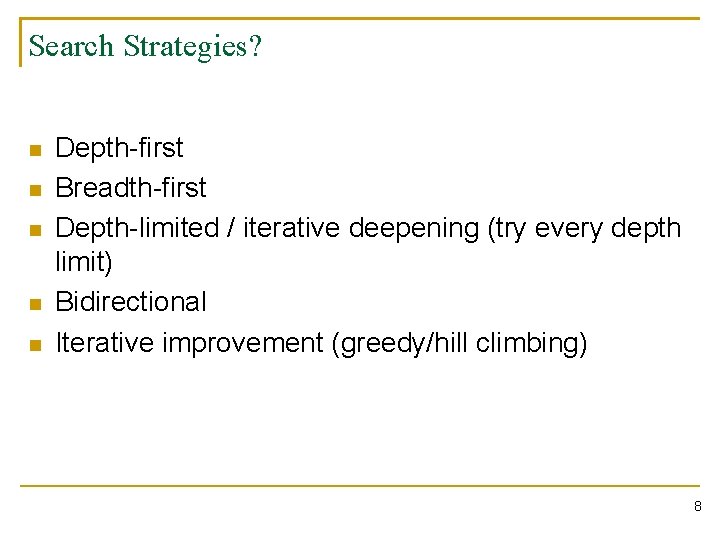

Search Strategies?
- Depth-first
- Breadth-first
- Depth-limited / iterative deepening (try every depth limit)
- Bidirectional
- Iterative improvement (greedy/hill climbing)

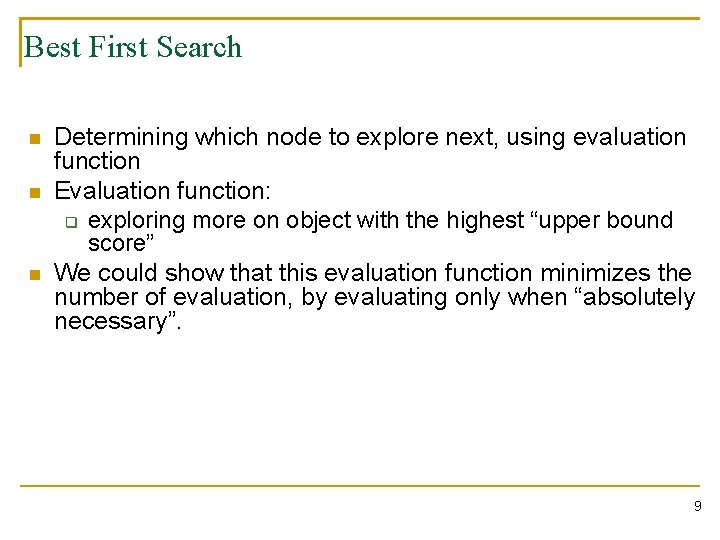

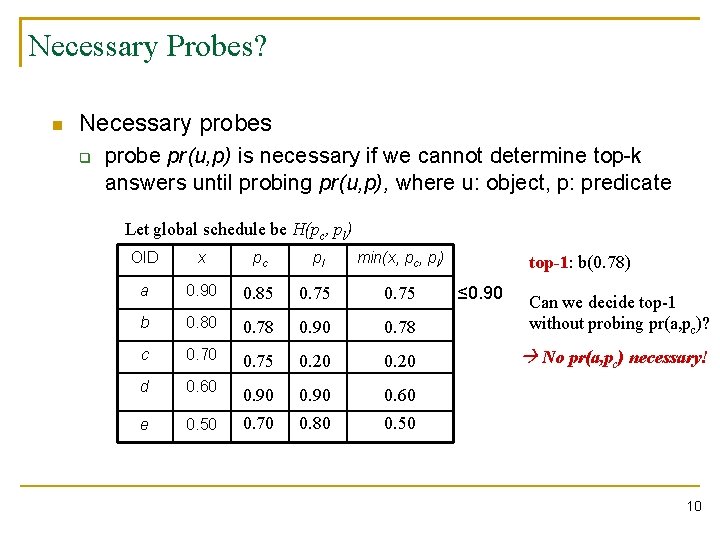

Best-First Search
- Determine which node to explore next using an evaluation function
- Evaluation function:
  - explore further the object with the highest “upper bound score”
- We could show that this evaluation function minimizes the number of evaluations, by evaluating only when “absolutely necessary”
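
A minimal sketch of best-first probing under the global schedule H(pc, pl), reusing the scores from the earlier slides. It keeps a priority queue keyed by each object's upper bound (the minimum of the scores known so far, since F = min and every score is at most 1) and probes only the object at the head of the queue. This follows the idea on the slide; the tie-breaking rule and data structures are assumptions, not the exact MPro implementation.

```python
import heapq

X = {"a": 0.90, "b": 0.80, "c": 0.70, "d": 0.60, "e": 0.50}
PROBES = {  # expensive predicates, probed in schedule order H(pc, pl)
    "pc": {"a": 0.85, "b": 0.78, "c": 0.75, "d": 0.90, "e": 0.70},
    "pl": {"a": 0.75, "b": 0.90, "c": 0.20, "d": 0.90, "e": 0.80},
}
SCHEDULE = ["pc", "pl"]


def best_first_top_k(k):
    # Heap entries: (-upper_bound, -num_probed, oid). On equal bounds,
    # objects with more probes done (fully evaluated ones first) pop first.
    heap = [(-score, 0, oid) for oid, score in X.items()]
    heapq.heapify(heap)
    answers, log = [], []
    while heap and len(answers) < k:
        neg_bound, neg_done, oid = heapq.heappop(heap)
        done = -neg_done
        if done == len(SCHEDULE):
            # Complete score >= every remaining upper bound: a top-k answer.
            answers.append((oid, -neg_bound))
            continue
        pred = SCHEDULE[done]
        value = PROBES[pred][oid]  # the probe pr(oid, pred)
        log.append((oid, pred, value))
        bound = min(-neg_bound, value)  # F = min, so the bound can only drop
        heapq.heappush(heap, (-bound, -(done + 1), oid))
    return answers, log


answers, log = best_first_top_k(1)
print(answers)  # prints [('b', 0.78)]
print(log)
```

Only four probes, pr(a, pc), pr(a, pl), pr(b, pc), and pr(b, pl), are performed, matching the trace on the slides; c, d, and e are never probed.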

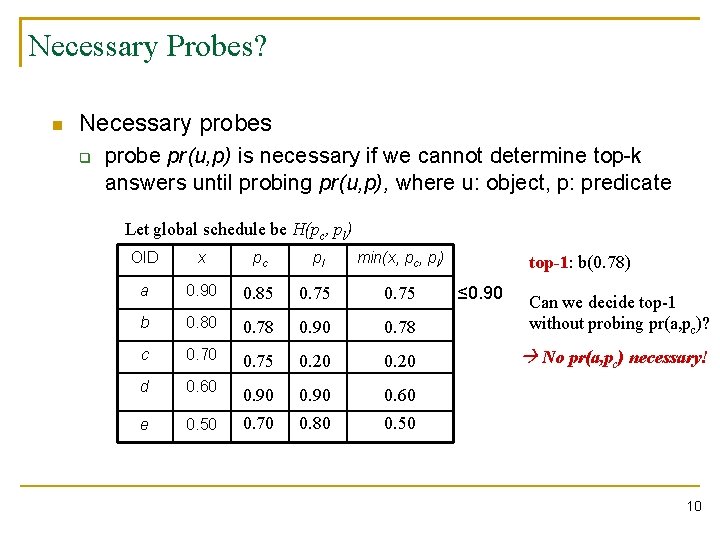

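Necessary Probes?
- Necessary probes
  - A probe pr(u, p) is necessary if we cannot determine the top-k answers until probing pr(u, p), where u is an object and p is a predicate
- Let the global schedule be H(pc, pl)

| OID | x | pc | pl | min(x, pc, pl) |
|-----|------|------|------|------|
| a | 0.90 | 0.85 | 0.75 | 0.75 |
| b | 0.80 | 0.78 | 0.90 | 0.78 |
| c | 0.70 | 0.75 | 0.20 | 0.20 |
| d | 0.60 | 0.90 | 0.90 | 0.60 |
| e | 0.50 | 0.70 | 0.80 | 0.50 |

Can we decide the top-1 without probing pr(a, pc)? No: pr(a, pc) is necessary! Under H(pc, pl), skipping pr(a, pc) leaves only x = 0.90 known for a, so a's upper bound is 0.90; the top-1 candidate b (0.78) ≤ 0.90, so a may still outrank b.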
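
The reasoning on this slide can be checked mechanically. Under F = min, an object's final score can never exceed the minimum of the scores already known, so a probe on object u is necessary whenever u's upper bound could still beat the best fully evaluated object. The helpers below are hypothetical illustrations of that check (ties are assumed to go to the complete object), not code from the original work.

```python
def upper_bound(known_scores):
    """Upper bound of min(...) given the scores known so far (all scores <= 1)."""
    return min(known_scores, default=1.0)


def probe_is_necessary(u_known, best_complete):
    """A probe on u is needed if u could still outrank the best complete object.

    Assumption: ties are broken in favor of the already complete object.
    """
    return upper_bound(u_known) > best_complete


# Under H(pc, pl), skipping pr(a, pc) also means pl is never probed for a,
# so only x = 0.90 is known for a, while b is complete at 0.78.
print(probe_is_necessary([0.90], 0.78))  # prints True
```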

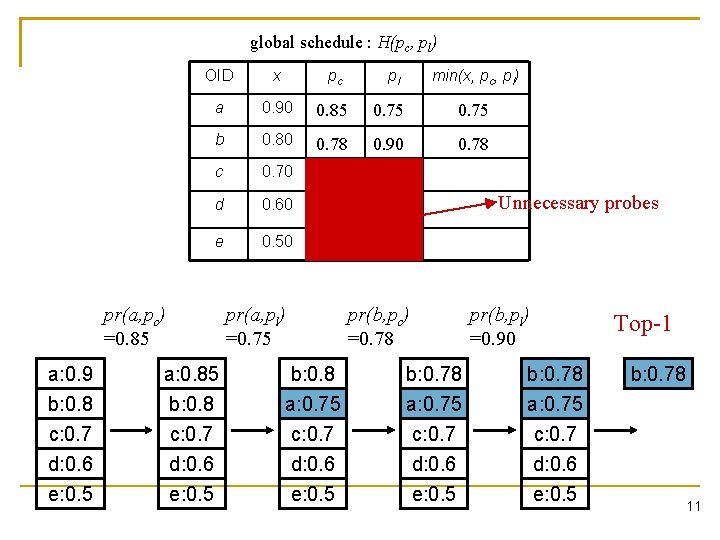

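Global schedule: H(pc, pl). Best-first trace (objects ordered by upper bound):
- Initial state: a: 0.9, b: 0.8, c: 0.7, d: 0.6, e: 0.5
- pr(a, pc) = 0.85: a: 0.85, b: 0.8, c: 0.7, d: 0.6, e: 0.5
- pr(a, pl) = 0.75: b: 0.8, a: 0.75, c: 0.7, d: 0.6, e: 0.5
- pr(b, pc) = 0.78: b: 0.78, a: 0.75, c: 0.7, d: 0.6, e: 0.5
- pr(b, pl) = 0.90: b is complete at min(0.80, 0.78, 0.90) = 0.78, which no other upper bound exceeds
- Top-1: b (0.78); probes on c, d, and e are unnecessary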

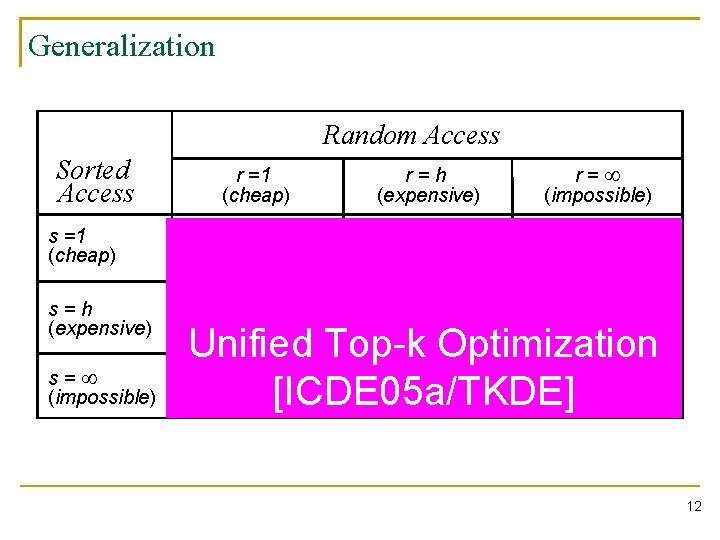

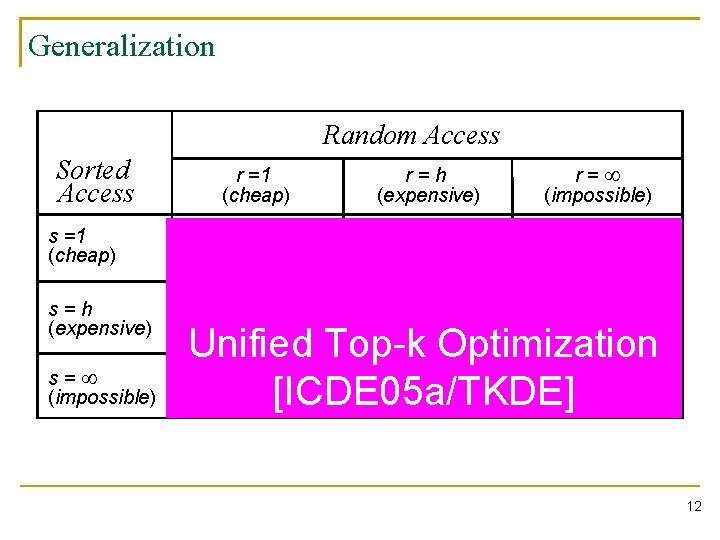

Generalization
- Access scenarios vary along two costs: sorted access s and random access r, each of which may be cheap (1), expensive (h), or impossible (∞)
- Existing algorithms each target particular scenarios: FA, TA, and Quick-Combine; CA and SR-Combine; NRA and Stream-Combine; MPro [SIGMOD 02/TODS]
- Unified Top-k Optimization [ICDE 05a/TKDE] covers the whole space of scenarios

Just for a Laugh (adapted from Hyountaek Yong’s presentation)
- Figure: the strong nuclear, electromagnetic, weak nuclear, and gravitational forces, brought together by a unified field theory

By analogy: FA, TA, NRA, CA, and MPro, brought together by a unified cost-based approach

Generality
- Across a wide range of scenarios
  - One algorithm for all

Adaptivity
- Optimal for the specific runtime scenario

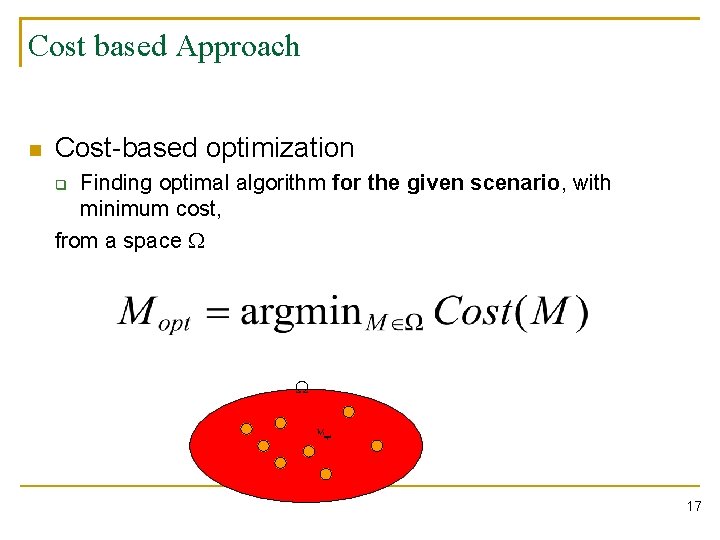

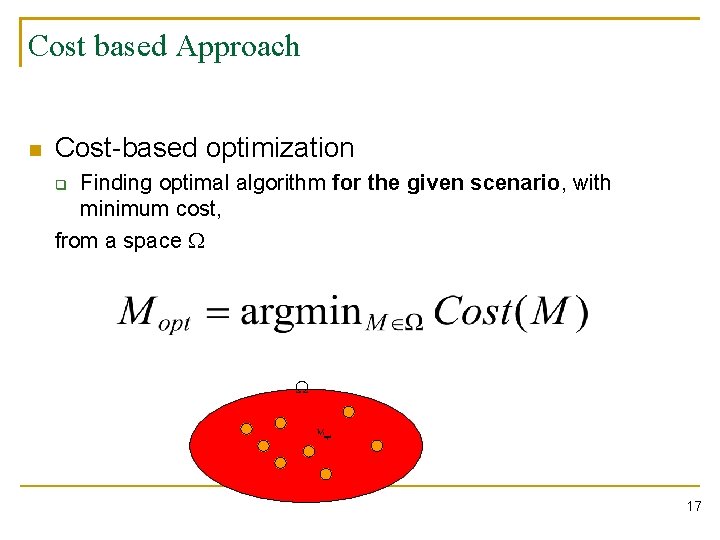

Cost-Based Approach
- Cost-based optimization: finding, for the given scenario, the optimal (minimum-cost) algorithm from a space of algorithms
  - Mopt
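
A toy illustration of cost-based selection, assuming the cost of a plan is (number of sorted accesses) × s + (number of random accesses) × r, with s and r set to 1 (cheap), h (expensive), or ∞ (impossible) as on the Generalization slide. The candidate plans and their access counts are hypothetical placeholders; the actual framework searches a much richer space of algorithms.

```python
import math


def plan_cost(n_sorted, n_random, s, r):
    """Access cost of a plan; an unused access type costs nothing even if s or r is inf."""
    # Guard needed because 0 * math.inf is nan for Python floats.
    sorted_cost = n_sorted * s if n_sorted else 0.0
    random_cost = n_random * r if n_random else 0.0
    return sorted_cost + random_cost


def cheapest_plan(plans, s, r):
    """Pick the plan (name -> (n_sorted, n_random)) with minimum cost."""
    return min(plans, key=lambda name: plan_cost(*plans[name], s, r))


# Hypothetical access counts for three candidate plans.
PLANS = {
    "sorted-heavy": (40, 0),
    "balanced": (10, 10),
    "probe-heavy": (0, 15),
}

print(cheapest_plan(PLANS, s=1, r=10))        # expensive random access
print(cheapest_plan(PLANS, s=math.inf, r=1))  # sorted access impossible
```

The same plan space yields different winners as (s, r) changes, which is the point of choosing the algorithm per scenario rather than fixing one in advance.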

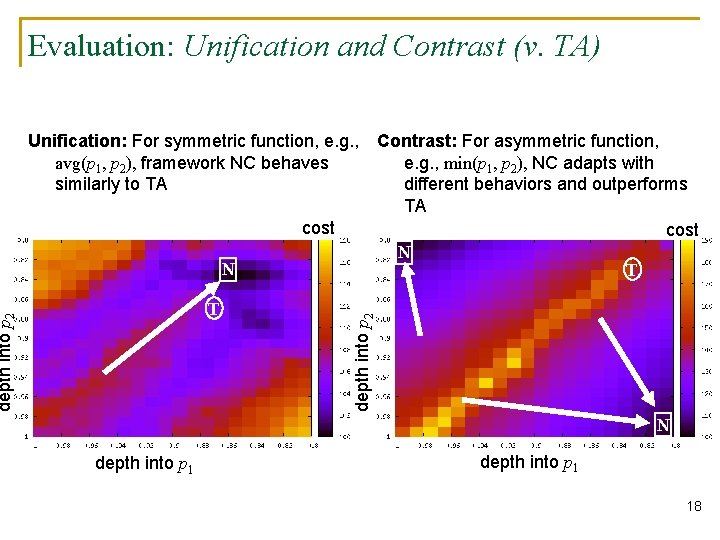

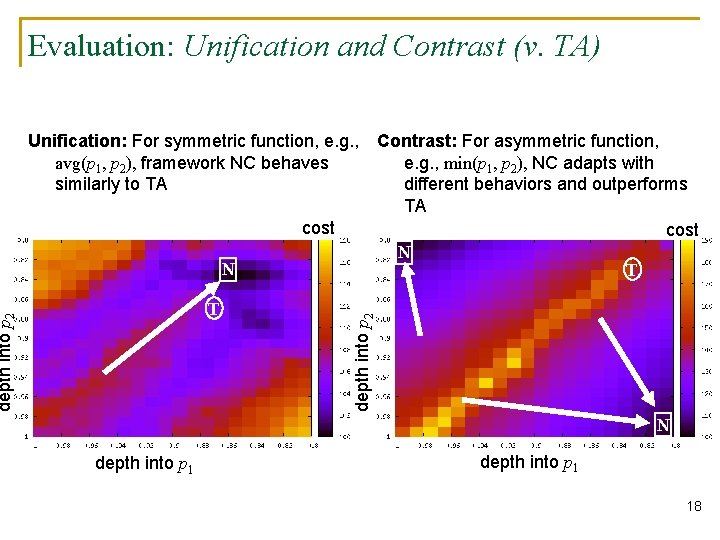

Evaluation: Unification and Contrast (vs. TA)
- Unification: for a symmetric function, e.g., avg(p1, p2), the framework NC behaves similarly to TA
  - Figure: cost vs. depth into p2 for TA (T) and NC (N)
- Contrast: for an asymmetric function, e.g., min(p1, p2), NC adapts with different behaviors and outperforms TA
  - Figure: cost vs. depth into p1 for TA (T) and NC (N)

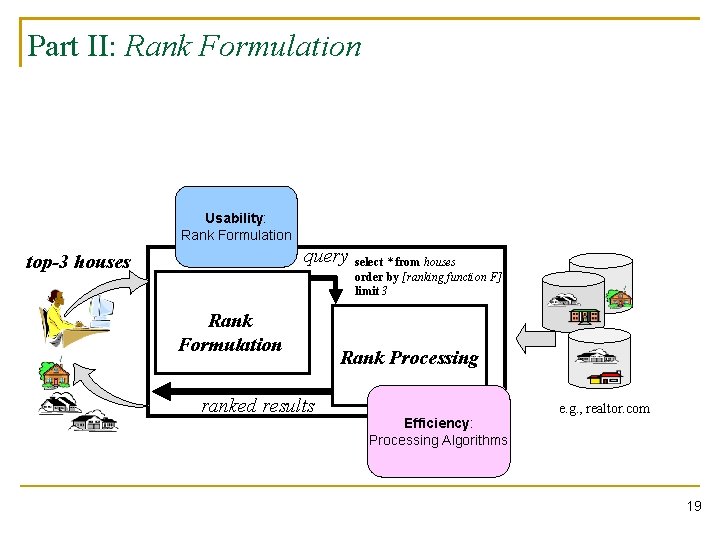

Part II: Rank Formulation
- Usability: Rank Formulation
- Efficiency: Rank Processing (processing algorithms)
- Figure: the query `select * from houses order by [ranking function F] limit 3` (top-3 houses) flows through Rank Formulation and Rank Processing to the ranked results (e.g., realtor.com)

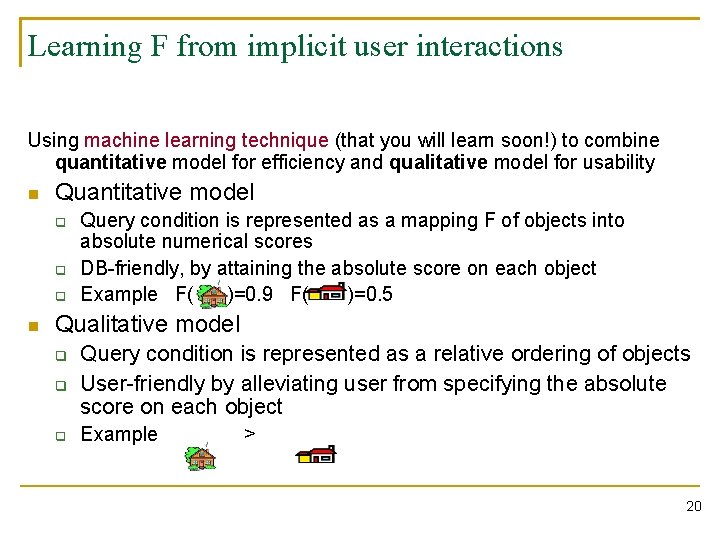

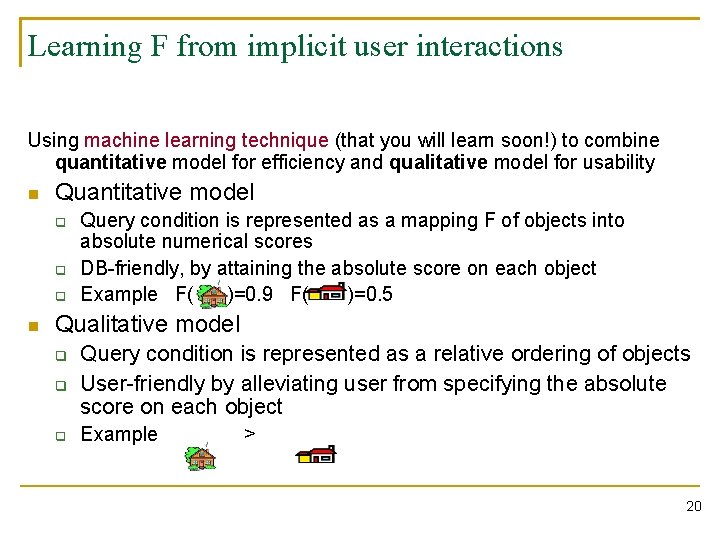

Learning F from Implicit User Interactions
- Using a machine learning technique (which you will learn soon!) to combine a quantitative model for efficiency with a qualitative model for usability
- Quantitative model
  - The query condition is represented as a mapping F of objects into absolute numerical scores
  - DB-friendly, since every object attains an absolute score
  - Example: one object scores F = 0.9, another F = 0.5
- Qualitative model
  - The query condition is represented as a relative ordering of objects
  - User-friendly, since it spares the user from specifying an absolute score for each object
  - Example: one object is preferred over (>) another

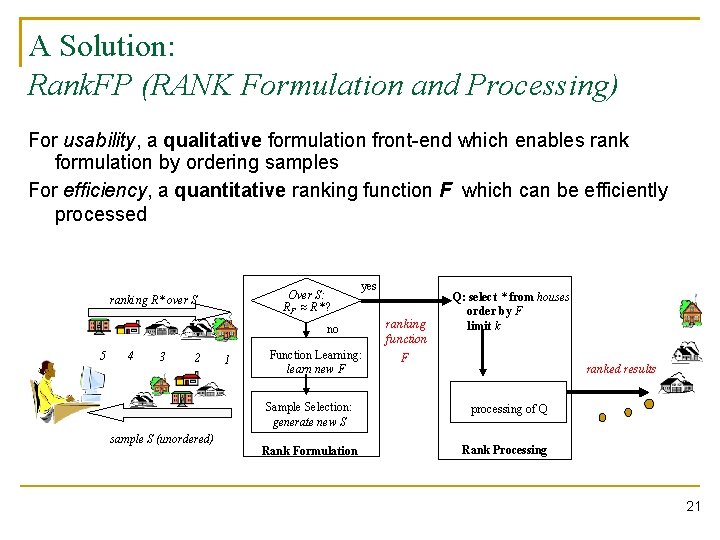

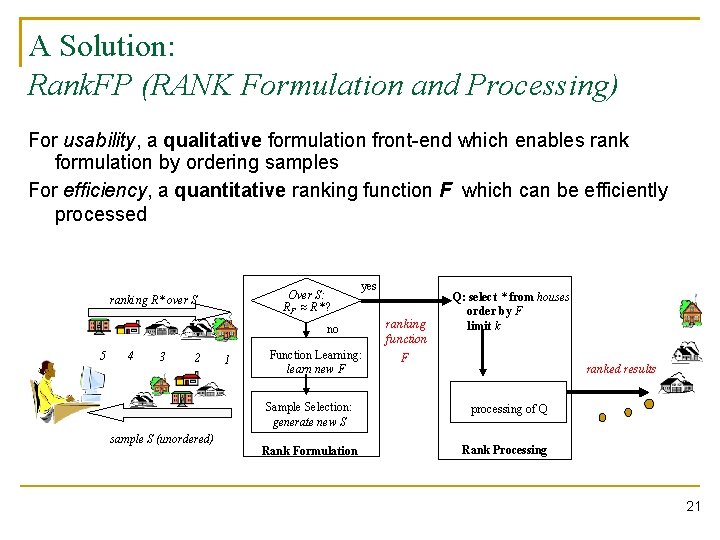

A Solution: RankFP (RANK Formulation and Processing)
- For usability, a qualitative formulation front-end that enables rank formulation by ordering samples
- For efficiency, a quantitative ranking function F that can be processed efficiently
- Figure (the Rank Formulation loop feeding Rank Processing):
  - Sample Selection: generate a new, unordered sample S
  - The user orders S, giving a ranking R* over S (e.g., 5 4 3 2 1)
  - Function Learning: learn a new F
  - Check over S: does R_F ≈ R*? If no, select a new sample and repeat; if yes, pass the ranking function F on
  - Rank Processing: process Q: `select * from houses order by F limit k` and return the ranked results

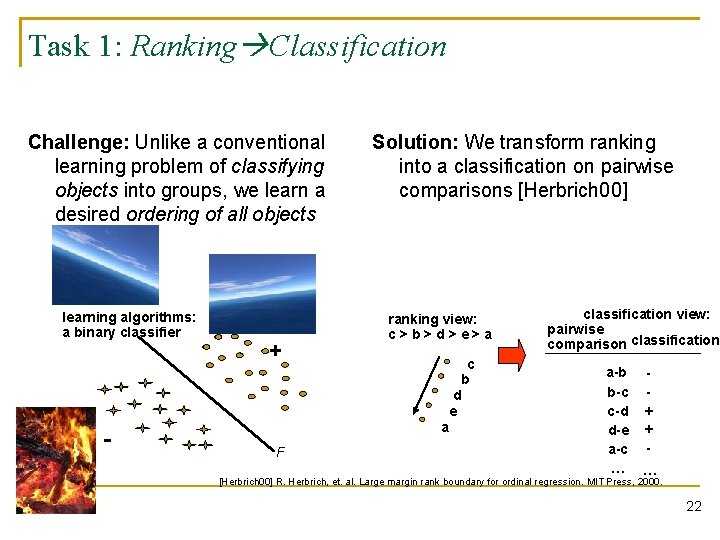

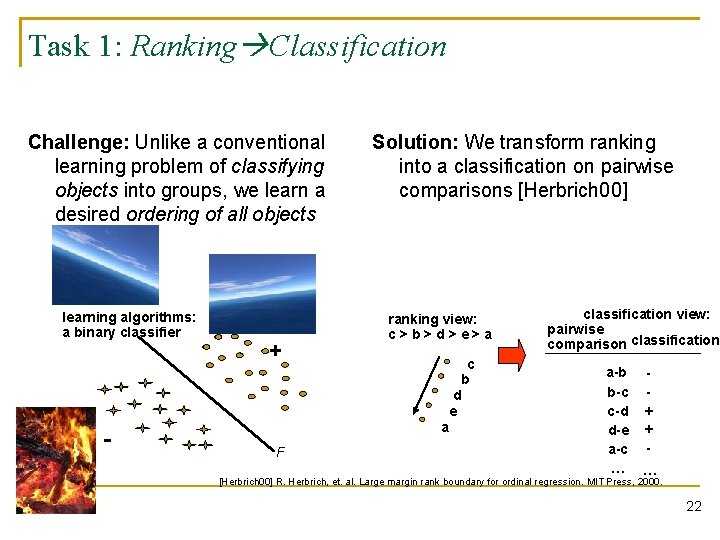

Task 1: Ranking → Classification
- Challenge: unlike a conventional learning problem that classifies objects into groups, we learn a desired ordering of all objects
- Learning algorithm: a binary classifier F that separates + from − examples
- Solution: we transform ranking into classification on pairwise comparisons [Herbrich 00]
  - Ranking view: c > b > d > e > a
  - Classification view: pairwise comparisons a-b, b-c, c-d, d-e, a-c, …, each labeled + or − according to which object ranks higher

[Herbrich 00] R. Herbrich et al. Large margin rank boundaries for ordinal regression. MIT Press, 2000.
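
A minimal sketch of the pairwise transformation, assuming each object is a small feature vector (the features below are hypothetical, chosen so that the order c > b > d > e > a is linearly realizable). Each pair (u, v) becomes the difference vector u − v, labeled +1 if u ranks above v and −1 otherwise. For brevity the binary classifier is a plain perceptron, swapped in for the large-margin learner of [Herbrich 00]; the last lines rank objects by the learned linear function, anticipating Task 2.

```python
from itertools import combinations

# Hypothetical 2-D features for the five objects on the slide.
FEATURES = {"a": [1, 1], "b": [4, 3], "c": [5, 1], "d": [3, 0], "e": [2, 2]}
RANKING = ["c", "b", "d", "e", "a"]  # desired order: c > b > d > e > a


def pairwise_examples(features, ranking):
    """Ranking -> classification: labeled difference vectors for every pair."""
    position = {oid: i for i, oid in enumerate(ranking)}
    examples = []
    for u, v in combinations(ranking, 2):
        diff = [fu - fv for fu, fv in zip(features[u], features[v])]
        label = 1 if position[u] < position[v] else -1
        examples.append((diff, label))
        examples.append(([-d for d in diff], -label))  # mirrored pair v - u
    return examples


def train_perceptron(examples, dim, max_epochs=1000):
    """Bias-free perceptron: seek w with label * (w . x) > 0 for every example."""
    w = [0.0] * dim
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in examples:
            if y * sum(wi * xi for wi, xi in zip(w, x)) <= 0:
                w = [wi + y * xi for wi, xi in zip(w, x)]
                mistakes += 1
        if mistakes == 0:  # every pairwise comparison is classified correctly
            break
    return w


w = train_perceptron(pairwise_examples(FEATURES, RANKING), dim=2)


def score(oid):
    """Per-object score w . u, usable directly for ranking."""
    return sum(wi * fi for wi, fi in zip(w, FEATURES[oid]))


print(sorted(FEATURES, key=score, reverse=True))  # prints ['c', 'b', 'd', 'e', 'a']
```

Because the pairs are linearly separable here, the perceptron is guaranteed to stop with every pair classified correctly, and sorting by the learned score then reproduces the full order.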

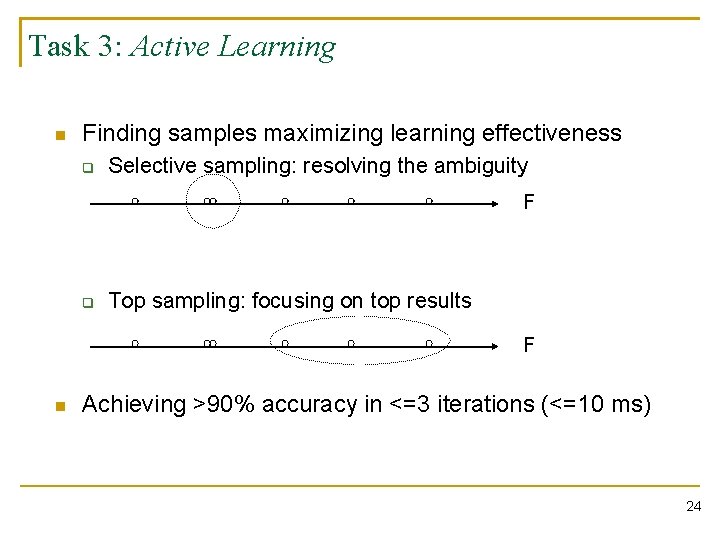

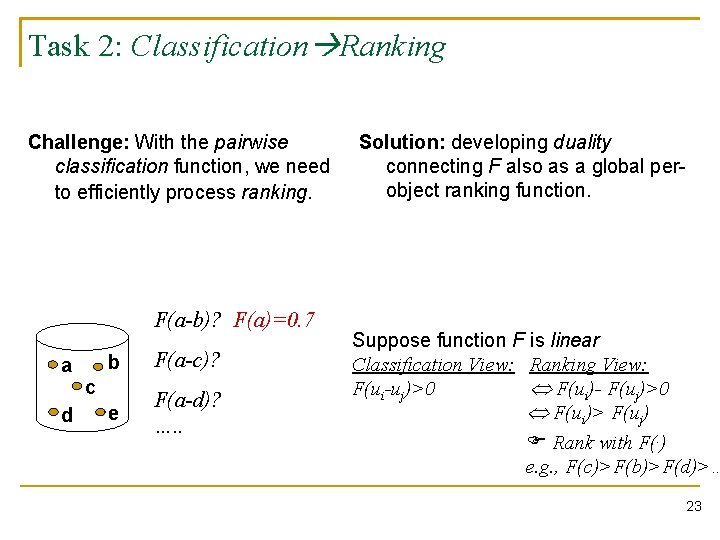

Task 2: Classification → Ranking
- Challenge: with the pairwise classification function, we need to process ranking efficiently; rather than evaluating F on every pair (F(a−b)?, F(a−c)?, F(a−d)?, …), we want a per-object score such as F(a) = 0.7
- Solution: develop a duality under which F also serves as a global per-object ranking function. Suppose F is linear:
  - Classification view: F(ui − uj) > 0
  - Ranking view: F(ui) − F(uj) > 0, i.e., F(ui) > F(uj)
  - Rank with F(·), e.g., F(c) > F(b) > F(d) > …
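
The duality follows in one line once F is written out, assuming the linear form F(u) = wᵀu for some weight vector w (the symbol w is introduced here for illustration):

```latex
\begin{aligned}
F(u_i - u_j) &= \mathbf{w}^\top (u_i - u_j)
             = \mathbf{w}^\top u_i - \mathbf{w}^\top u_j
             = F(u_i) - F(u_j), \\
F(u_i - u_j) > 0 &\iff F(u_i) - F(u_j) > 0 \iff F(u_i) > F(u_j).
\end{aligned}
```

So one evaluation of F per object, followed by sorting, reproduces every pairwise decision of the classifier, and the ranking can be handed to an ordinary top-k processor.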

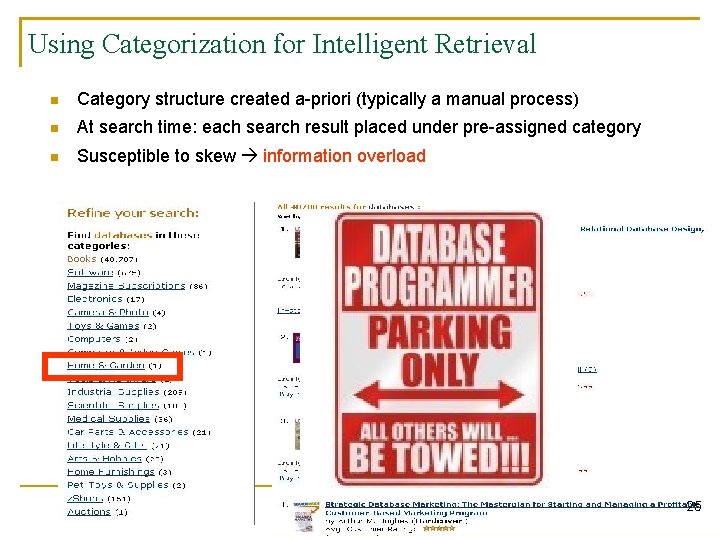

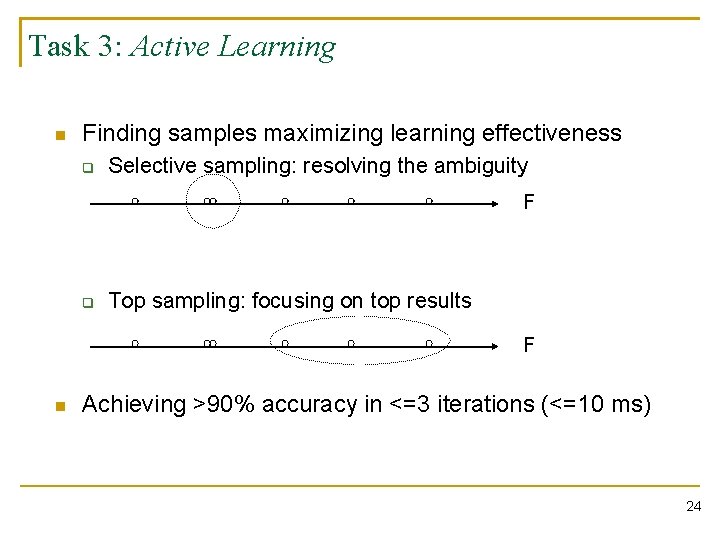

Task 3: Active Learning
- Finding samples that maximize learning effectiveness
  - Selective sampling: resolving ambiguity
  - Top sampling: focusing on top results
- Achieving > 90% accuracy in ≤ 3 iterations (≤ 10 ms)
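
One plausible reading of the two sampling strategies, sketched for a linear F(u) = w · u: selective sampling asks the user about the pairs whose order the current F is least sure of (smallest |F(u) − F(v)|), while top sampling asks about the objects F currently ranks highest. The weight vector, the candidate objects, and both selection rules are illustrative assumptions, not the exact RankFP procedure.

```python
from itertools import combinations


def linear_score(w, x):
    """Current F(x) = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def selective_sample(w, objects, n_pairs):
    """Pairs with the smallest score gap |F(u) - F(v)|, i.e. the most ambiguous."""

    def gap(pair):
        u, v = pair
        return abs(linear_score(w, objects[u]) - linear_score(w, objects[v]))

    return sorted(combinations(sorted(objects), 2), key=gap)[:n_pairs]


def top_sample(w, objects, n):
    """The n objects the current F ranks highest."""
    return sorted(objects, key=lambda o: linear_score(w, objects[o]), reverse=True)[:n]


W = [0.6, 0.4]  # hypothetical current weights
OBJECTS = {"p": [0.9, 0.1], "q": [0.5, 0.6], "r": [0.2, 0.9], "s": [0.8, 0.8]}
print(selective_sample(W, OBJECTS, 1))  # prints [('p', 'q')]
print(top_sample(W, OBJECTS, 2))        # prints ['s', 'p']
```

The user's answers on these samples become new pairwise training examples, so each iteration spends feedback where it most changes the learned ranking.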

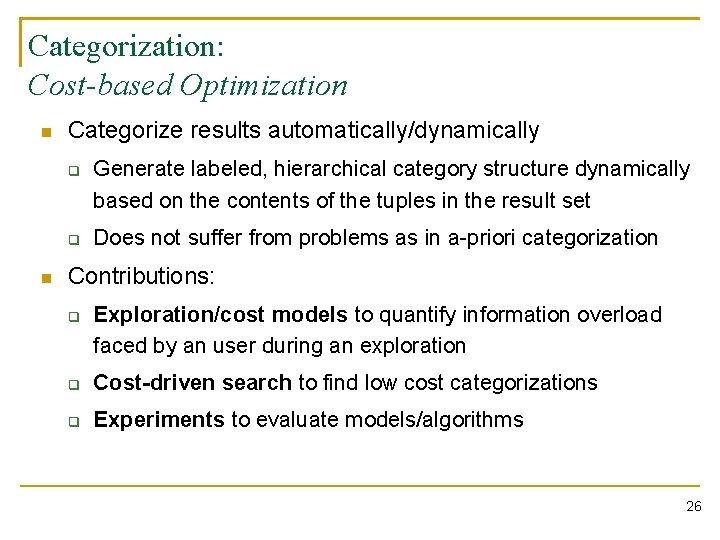

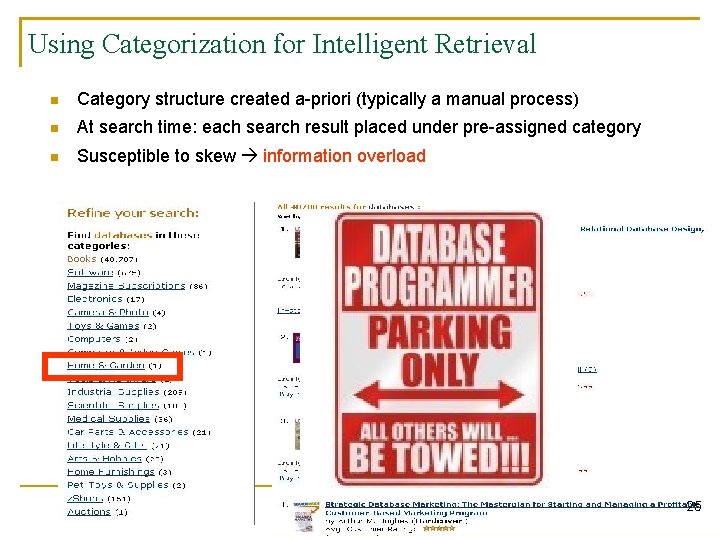

Using Categorization for Intelligent Retrieval
- The category structure is created a priori (typically a manual process)
- At search time, each search result is placed under its pre-assigned category
- Susceptible to skew, leading to information overload

Categorization: Cost-Based Optimization
- Categorize results automatically and dynamically
  - Generate a labeled, hierarchical category structure dynamically, based on the contents of the tuples in the result set
  - Does not suffer from the problems of a-priori categorization
- Contributions:
  - Exploration/cost models to quantify the information overload faced by a user during an exploration
  - Cost-driven search to find low-cost categorizations
  - Experiments to evaluate the models/algorithms

Thank You!