
Artificial Intelligence Games 1: Game Tree Search
Ian Gent, ipg@cs.st-and.ac.uk

Artificial Intelligence: Game Tree Search
- Part I: Game Trees
- Part II: Minimax
- Part III: A bit of Alpha-Beta

Perfect Information Games
- Unlike Bridge, we consider 2-player perfect information games
- Perfect information: both players know everything there is to know about the game position
  - no hidden information (e.g. opponents’ hands in bridge)
  - no random events (e.g. draws in poker)
  - the two players need not have the same set of moves available
  - examples are Chess, Go, Checkers, O’s and X’s
- Ginsberg made Bridge 2-player perfect information
  - by assuming specific random locations of cards
  - the two players were North-South and East-West

Game Trees
- A game tree is like a search tree
  - nodes are search states, with full details about a position
    - e.g. chessboard + castling/en passant information
  - edges between nodes correspond to moves
  - leaf nodes correspond to determined positions
    - e.g. Win/Lose/Draw
    - number of points for or against a player
  - at each node it is one or other player’s turn to move
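
The description above maps naturally onto a small data structure. The following is only a sketch: the class name `GameNode`, its fields, and the toy positions are hypothetical and chosen for illustration, not taken from the lecture.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class GameNode:
    """One node of a game tree (hypothetical structure for illustration)."""
    state: str                       # full details about the position
    to_move: str                     # whose turn it is: "Max" or "Min"
    score: Optional[int] = None      # set only on determined (leaf) positions
    children: Dict[str, "GameNode"] = field(default_factory=dict)  # move -> child

    def is_leaf(self) -> bool:
        # A leaf has no outgoing edges, i.e. no moves left to make.
        return not self.children


# A tiny hand-built tree: edges are labelled by moves "a".."d".
root = GameNode("start", "Max", children={
    "a": GameNode("after a", "Min", children={
        "c": GameNode("after a, c", "Max", score=+1),   # win for Max
        "d": GameNode("after a, d", "Max", score=0),    # draw
    }),
    "b": GameNode("after b", "Min", score=-1),          # loss for Max
})
```

Storing the player to move on each node keeps the node self-describing, which matters because the two players may have different moves available.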

Game Trees vs. Search Trees
- Strong similarities with 8s puzzle search trees
  - there may be loops/infinite branches
  - typically no equivalent of a variable ordering heuristic
    - the “variable” is always what move to make next
- One major difference with the 8s puzzle
  - The key difference is that you have an opponent!
- Call the two players Max and Min
  - Max wants a leaf node with the max possible score
    - e.g. Win = +∞
  - Min wants a leaf node with the min score
    - e.g. Lose = -∞

The problem with Game trees
- Game trees are huge
  - O’s and X’s not bad, just 9! = 362,880
  - Checkers/Draughts about 10^40
  - Chess about 10^120
  - Go utterly ludicrous, e.g. 361! ≈ 10^768
- Recall from the Search 1 lecture,
  - It is not good enough to find a route to a win
  - Have to find a winning strategy
  - Unlike 8s/SAT/TSP, can’t just look for one leaf node
    - typically need lots of different winning leaf nodes
  - Much more of the tree needs to be explored
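
The factorial figures can be checked directly. This is a quick sketch using Python's standard `math.factorial`; the variable name `go_bound` is just for illustration, and the factorials are crude upper bounds on move sequences rather than exact tree sizes.

```python
import math

# O's and X's: 9 choices for the first move, then 8, then 7, ... = 9!
print(math.factorial(9))            # 362880

# Same crude bound for Go on a 19 x 19 board: 361!
go_bound = math.factorial(19 * 19)

# Number of digits minus one gives the power of ten.
print(len(str(go_bound)) - 1)       # 768
```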

Coping with impossibility
- It is usually impossible to solve games completely
  - Connect 4 has been solved
  - Checkers has not been
    - we’ll see a brave attempt later
- This means we cannot search the entire game tree
  - we have to cut off search at a certain depth
    - like depth-bounded depth-first search, we lose completeness
- Instead we have to estimate the cost of internal nodes
- Do so using a static evaluation function

Static evaluation
- A static evaluation function should estimate the true value of a node
  - true value = value of the node if we performed exhaustive search
  - need not just be +∞/0/-∞ even if those are the only final scores
  - can indicate the degree of advantage in a position
    - e.g. nodes might evaluate to +1, 0, -10
- Children learn a simple evaluation function for chess
  - P = 1, N = B = 3, R = 5, Q = 9, K = 1000
  - Static evaluation is the difference in the sum of scores
  - chess programs have much more complicated functions
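
The children's chess rule above amounts to a material count. A minimal sketch follows, assuming each side's pieces are given as a string of piece letters; that encoding and the function names `material` and `static_eval` are hypothetical.

```python
# Piece values from the slide: P = 1, N = B = 3, R = 5, Q = 9, K = 1000.
PIECE_VALUES = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9, "K": 1000}


def material(pieces: str) -> int:
    """Sum of piece values for one side, e.g. "KQPP"."""
    return sum(PIECE_VALUES[p] for p in pieces)


def static_eval(max_pieces: str, min_pieces: str) -> int:
    """Difference in material: positive favours Max, negative favours Min."""
    return material(max_pieces) - material(min_pieces)


# Max: king, queen, two pawns (1011). Min: king, rook, three pawns (1008).
print(static_eval("KQPP", "KRPPP"))   # 3
```

The function looks only at the pieces on the board and does no search at all, which is what makes it static.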

O’s and X’s
- A simple evaluation function for O’s and X’s is:
  - Count lines still open for Max,
  - Subtract the number of lines still open for Min
  - evaluation at start of game is 0
  - after X moves in center, score is +4 (8 lines open for Max, 4 for Min)
- Evaluation functions are only heuristics
  - e.g. might have score -2 but Max can win at next move

        O - X
        - O X
        - - -

- Use a combination of evaluation function and search
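
The open-lines heuristic is easy to compute. In this sketch the board is assumed to be a 9-character string read row by row, with `-` for an empty square and X playing as Max; the encoding and the names `open_lines` and `evaluate` are illustrative assumptions.

```python
# The 8 winning lines, as indices into a row-by-row 9-character board.
LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),   # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),   # columns
    (0, 4, 8), (2, 4, 6),              # diagonals
]


def open_lines(board: str, opponent: str) -> int:
    """Count lines that contain no piece belonging to `opponent`."""
    return sum(all(board[i] != opponent for i in line) for line in LINES)


def evaluate(board: str, max_piece: str = "X", min_piece: str = "O") -> int:
    """Lines still open for Max minus lines still open for Min."""
    return open_lines(board, min_piece) - open_lines(board, max_piece)


print(evaluate("---------"))   # 0 at the start of the game
print(evaluate("O-X-OX---"))   # -2 for the board shown above
```

On the second board X can complete the right-hand column on the next move, so the negative score shows why the heuristic has to be combined with search.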

Minimax
- Assume that both players play perfectly
  - Therefore we cannot optimistically assume the opponent will miss a winning response to our moves
- E.g. consider Min’s strategy
  - wants the lowest possible score, ideally -∞
  - but must account for Max aiming for +∞
  - Min’s best strategy is:
    - choose the move that minimises the score that will result when Max chooses the maximising move
  - hence the name Minimax
- Max does the opposite

Minimax procedure
- Statically evaluate positions at depth d
- From then on work upwards
- Score of max nodes is the max of child nodes
- Score of min nodes is the min of child nodes
- Doing this from the bottom up eventually gives the score of possible moves from the root node
  - hence the best move to make
- Can still do this depth first, so space efficient
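
The procedure above can be sketched as a short depth-first recursion. The toy encoding here is an assumption made for illustration: a position is either an integer (a leaf score) or a list of child positions, and `evaluate` returns a placeholder estimate of 0 when the search is cut off at an internal node.

```python
def children(state):
    """Successor positions; leaves (plain ints) have none."""
    return state if isinstance(state, list) else []


def evaluate(state):
    """Static evaluation: leaves carry their score; 0 is a placeholder
    estimate for internal nodes reached when the depth bound runs out."""
    return state if isinstance(state, int) else 0


def minimax(state, depth, is_max):
    """Depth-bounded, depth-first minimax value of `state`."""
    kids = children(state)
    if depth == 0 or not kids:
        return evaluate(state)
    scores = [minimax(k, depth - 1, not is_max) for k in kids]
    return max(scores) if is_max else min(scores)


# Max to move at the root; each inner list is a Min node.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
print([minimax(k, 1, False) for k in tree])   # [3, 2, 2]
print(minimax(tree, 2, True))                 # 3
```

Max's best move is the first one, since it is the only child whose backed-up score is 3. Only the current path is held on the call stack, which is the space efficiency mentioned above.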

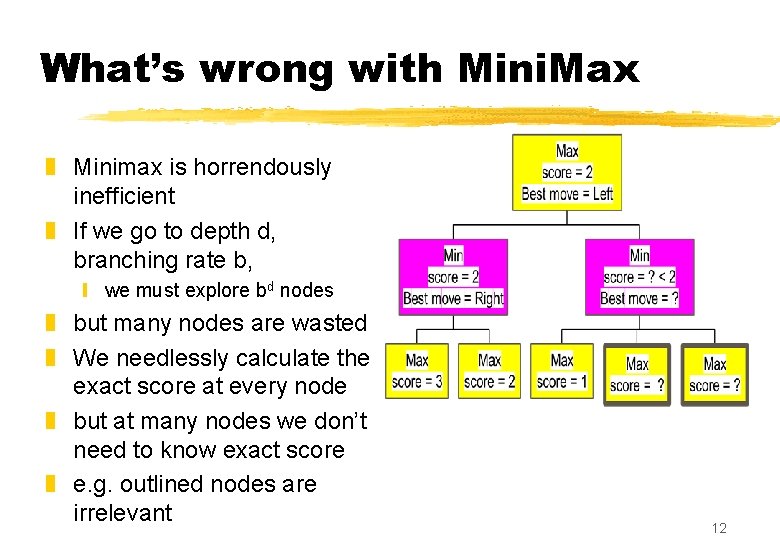

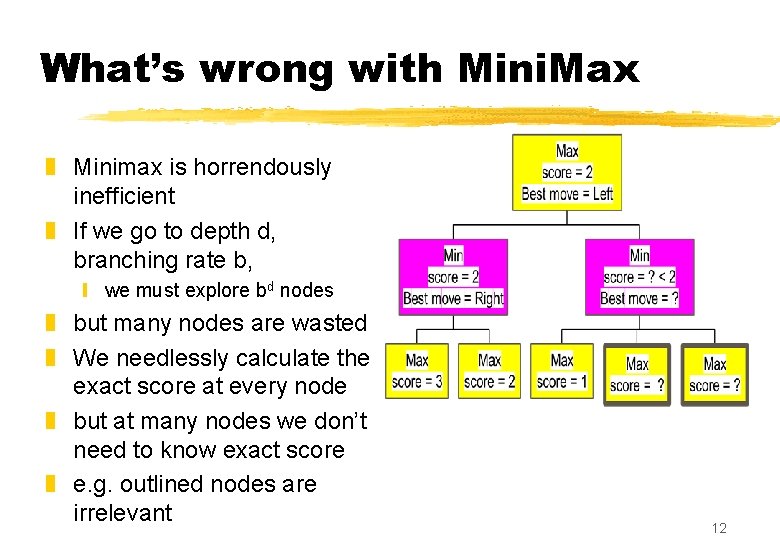

What’s wrong with Minimax
- Minimax is horrendously inefficient
- If we go to depth d, with branching rate b,
  - we must explore b^d nodes
- but many nodes are wasted
- We needlessly calculate the exact score at every node
- but at many nodes we don’t need to know the exact score
- e.g. outlined nodes are irrelevant
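
To get a feel for how fast b^d grows, here is a small calculation. The branching rate of 30 is an assumed round number for illustration only, and `leaf_count` is a hypothetical helper name.

```python
def leaf_count(b: int, d: int) -> int:
    """Positions at depth d of a uniform tree with branching rate b."""
    return b ** d


for d in (2, 4, 6, 8):
    print(d, leaf_count(30, d))
# 2 900
# 4 810000
# 6 729000000
# 8 656100000000
```

Each extra pair of moves multiplies the work by 900 under this assumption, so avoiding even a fraction of the nodes matters a great deal.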

Alpha-Beta search
- Alpha-Beta uses the same insight as branch and bound
- When we cannot do better than the best so far
  - we can cut off search in this part of the tree
- More complicated because of opposite score functions
- To implement this we will manipulate alpha and beta values, and store them on internal nodes in the search tree

Alpha and Beta values
- At a Max node we will store an alpha value
  - the alpha value is a lower bound on the exact minimax score
  - the true value might be ≥ alpha
  - if we know Min can choose moves with score < alpha
    - then Min will never choose to let Max go to a node where the score will be alpha or more
- At a Min node, we will store a beta value
  - the beta value is an upper bound on the exact minimax score
  - the true value might be ≤ beta
- Alpha-Beta search uses these values to cut search

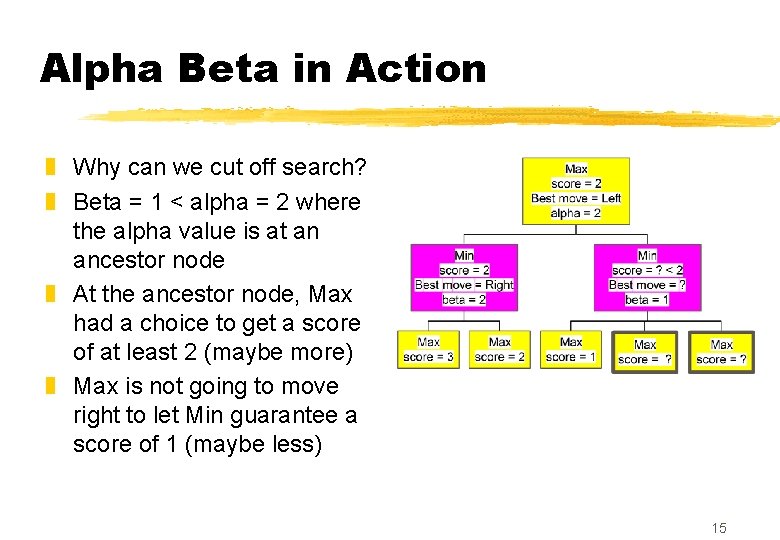

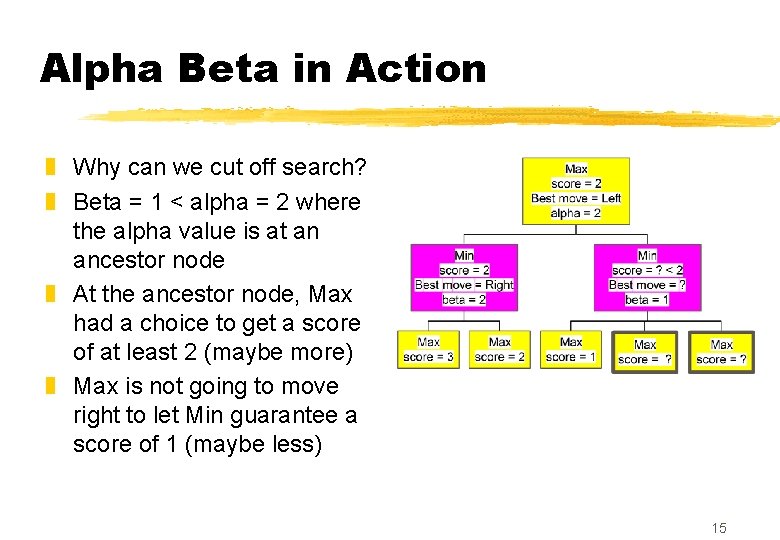

Alpha Beta in Action
- Why can we cut off search?
- Beta = 1 < alpha = 2, where the alpha value is at an ancestor node
- At the ancestor node, Max had a choice to get a score of at least 2 (maybe more)
- Max is not going to move right to let Min guarantee a score of 1 (maybe less)
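
The cut-off argument above can be reproduced on a toy tree. This is a sketch of a common textbook formulation of alpha-beta, not necessarily the exact bookkeeping used in the lecture: alpha and beta are passed down as arguments rather than stored on nodes, a position is an integer leaf or a list of children (an assumed encoding), and the `visited` list exists only to show which leaves were evaluated. It cuts off when beta <= alpha, which includes the strict case beta < alpha discussed above.

```python
import math


def alphabeta(node, is_max, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax value of `node`, skipping subtrees that cannot matter."""
    if isinstance(node, int):            # leaf: return its score
        if visited is not None:
            visited.append(node)
        return node
    if is_max:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, value)    # Max can guarantee at least alpha
            if alpha >= beta:
                break                    # Min above will never allow this
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, value)          # Min can guarantee at most beta
        if beta <= alpha:
            break                        # Max above already has better
    return value


# Max at the root. Left Min node is worth 2, so alpha = 2 at the root.
# In the right Min node the first leaf gives beta = 1 < alpha = 2: cut off.
tree = [[2, 7], [1, 8]]
seen = []
print(alphabeta(tree, True, visited=seen))   # 2
print(seen)                                  # [2, 7, 1]
```

The leaf with score 8 is never evaluated, yet the root value is the same as plain minimax would give.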

Summary and Next Lecture
- Game trees are similar to search trees
  - but have opposing players
- Minimax characterises the value of nodes in the tree
  - but is horribly inefficient
- Use static evaluation when the tree is too big
- Alpha-beta can cut off nodes that need not be searched
- Next Time: More details on Alpha-Beta